Summary

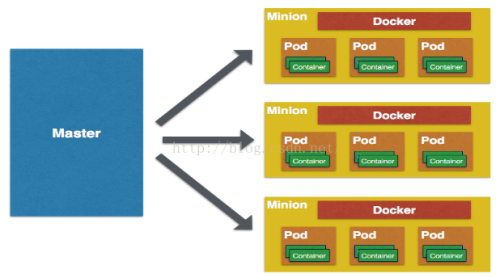

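After building a Docker cluster with Swarm, we ran into a number of problems. Swarm is good, but it is still maturing and lacks features, so we turned to Kubernetes to solve them.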

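Kubernetes vs. Swarm

Advantages

Replication and health maintenance

Service discovery and load balancing

Gradual (rolling) upgrades

Garbage collection: unused images and containers are reclaimed automatically

Decoupled from the container engine: supports more than just Docker containers

User authentication and resource isolation

Disadvantages

Being large and comprehensive means higher complexity: Kubernetes is much more complicated than Swarm to deploy and to use. Swarm, by comparison, is lightweight and integrates better with the Docker engine. At heart I am more of a Swarm supporter, but its feature set is still too thin. SwarmKit, released a few days ago, adds quite a few cluster-management features; once it matures we may well return to Swarm.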

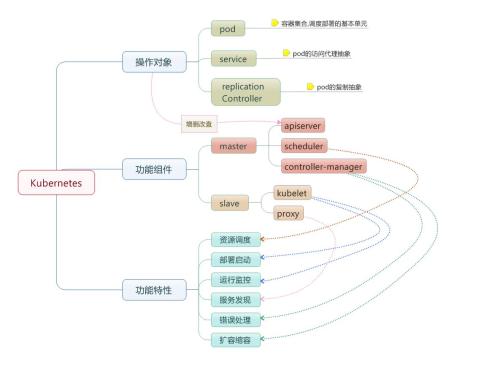

Introduction to core K8s concepts

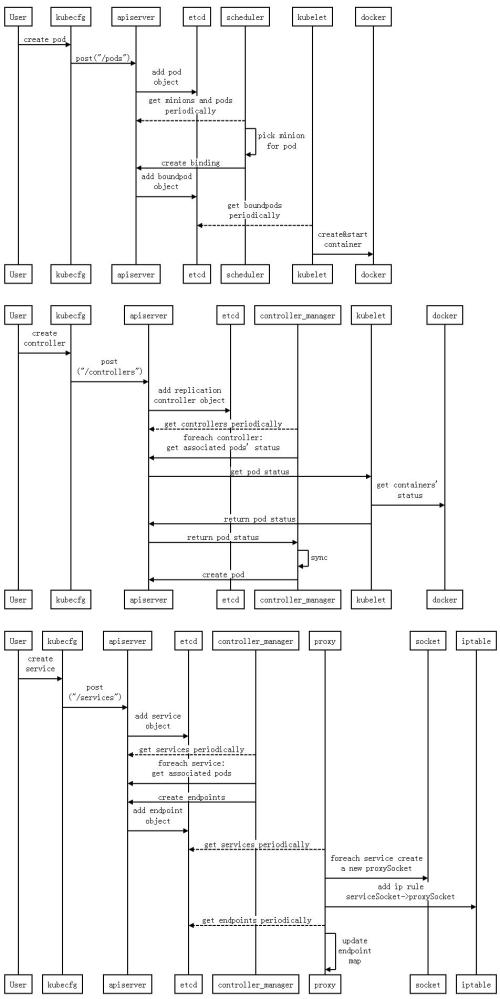

pod

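In k8s the smallest deployable unit is the pod; containers run inside pods. A pod can run several containers, and the containers in one pod share network and storage, so they can reach each other (for example over localhost).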

replication controller

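Replication controller: runs identical replicas of a pod, which can be placed on different nodes. Pods are usually not created on their own but through an RC; the RC controls and manages the pods' lifecycle and keeps them healthy.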
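To make "keeps them healthy" concrete, here is a minimal Python sketch of a reconcile loop: compare the desired replica count with the pods that are actually alive, then create or delete pods until the two match. The class, fields and function are hypothetical and the health check is passed in as a plain function; the real replication manager works against the apiserver and is considerably more involved.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ReplicationController:
    """Hypothetical in-memory stand-in for an RC: a name, a desired count and its pods."""
    name: str
    replicas: int
    pods: List[str] = field(default_factory=list)
    next_id: int = 0


def reconcile(rc: ReplicationController,
              healthy: Callable[[str], bool]) -> Tuple[List[str], List[str]]:
    """Run one pass of a simplified control loop and return (created, deleted)."""
    # Pods that fail the health check are removed; they get replaced below.
    deleted = [p for p in rc.pods if not healthy(p)]
    rc.pods = [p for p in rc.pods if healthy(p)]
    created = []
    # Too few pods: create new ones until the desired count is reached.
    while len(rc.pods) < rc.replicas:
        rc.next_id += 1
        pod = f"{rc.name}-{rc.next_id}"
        rc.pods.append(pod)
        created.append(pod)
    # Too many pods (e.g. after scaling down): delete the surplus.
    while len(rc.pods) > rc.replicas:
        deleted.append(rc.pods.pop())
    return created, deleted


if __name__ == "__main__":
    rc = ReplicationController("zk-amq-rds-mgd", replicas=2)
    print(reconcile(rc, lambda p: True))         # two pods are created
    broken = rc.pods[0]
    print(reconcile(rc, lambda p: p != broken))  # the broken pod is replaced
```

Running the loop repeatedly (the controller manager does this periodically) is what makes the replica count self-healing.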

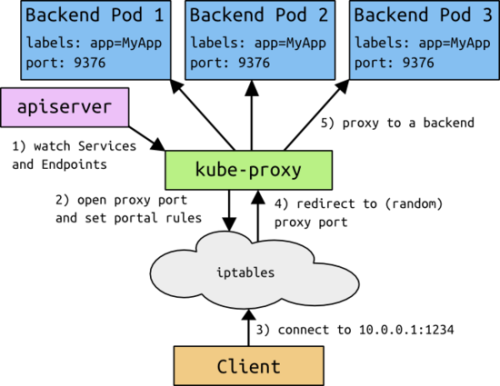

service

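A container's IP address is not fixed; it changes every time the container is re-created. Something therefore has to handle service discovery and load balancing, and a Service does exactly that: once created, it exposes a stable IP address and port and is bound to the matching pods (selected by their labels).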
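A minimal Python sketch of how a service finds "the matching pods": keep the pods whose labels contain every key/value pair of the service's selector and that are ready, and turn them into ip:port endpoints. The pod dictionaries and IP addresses are made up for illustration; in a real cluster the endpoints controller in controller-manager does this bookkeeping.

```python
from typing import Dict, List


def selects(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every key/value pair must appear in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: Dict[str, str], pods: List[dict], target_port: int) -> List[str]:
    """Return "ip:port" for every ready pod that the service selects."""
    return [f"{pod['ip']}:{target_port}"
            for pod in pods
            if pod["ready"] and selects(selector, pod["labels"])]


if __name__ == "__main__":
    pods = [
        {"ip": "172.20.1.2", "ready": True, "labels": {"k8s-app": "kubernetes-dashboard"}},
        {"ip": "172.20.2.3", "ready": False, "labels": {"k8s-app": "kubernetes-dashboard"}},
        {"ip": "172.20.3.4", "ready": True, "labels": {"k8s-app": "kube-dns"}},
    ]
    print(endpoints({"k8s-app": "kubernetes-dashboard"}, pods, 9090))  # ['172.20.1.2:9090']
```

Because pods are matched by labels rather than by IP, a re-created pod with a new IP is picked up automatically.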

Introduction to core K8s components

apiserver

Provides the external REST API and runs on the master node. It validates incoming requests and then updates the data stored in etcd.

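scheduler

The scheduler runs on the master node and watches for changes through the apiserver. When a pod needs to run, it uses its scheduling algorithm to choose a node to run it on.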
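The node choice can be pictured as "filter, then score". The sketch below first drops nodes that cannot fit the pod's CPU/memory request, then prefers the node that would keep the largest share of its capacity free, which is similar in spirit to the scheduler's least-requested priority. The data layout and function names are assumptions; the real scheduler combines many more predicates and priorities.

```python
from typing import Dict, Optional

Resources = Dict[str, int]  # e.g. {"cpu": millicores, "memory": MiB}; capacities must be > 0


def fits(node: dict, request: Resources) -> bool:
    """Filter step: the node must have room for every requested resource."""
    return all(node["used"][r] + request[r] <= node["capacity"][r] for r in request)


def score(node: dict, request: Resources) -> float:
    """Score step: average fraction of capacity still free after placing the pod."""
    return sum((node["capacity"][r] - node["used"][r] - request[r]) / node["capacity"][r]
               for r in request) / len(request)


def schedule(request: Resources, nodes: Dict[str, dict]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node can fit the pod."""
    feasible = [name for name, node in nodes.items() if fits(node, request)]
    if not feasible:
        return None
    return max(feasible, key=lambda name: score(nodes[name], request))


if __name__ == "__main__":
    nodes = {
        "192.168.20.60": {"capacity": {"cpu": 2000, "memory": 4096}, "used": {"cpu": 1500, "memory": 1024}},
        "192.168.20.61": {"capacity": {"cpu": 2000, "memory": 4096}, "used": {"cpu": 500, "memory": 2048}},
        "192.168.20.62": {"capacity": {"cpu": 2000, "memory": 4096}, "used": {"cpu": 1950, "memory": 512}},
    }
    print(schedule({"cpu": 100, "memory": 50}, nodes))  # 192.168.20.61
```

Here .62 is filtered out (not enough CPU) and .61 wins because it keeps more capacity free on average than .60.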

controller-manager

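The controller manager runs on the master node and consists of several managers that run periodically:

1) Replication controller manager: manages and stores the state of all RCs

2) Service endpoint manager: keeps the set of pods bound to each service up to date and unbinds pods that have failed

3) Node controller: periodically health-checks and monitors the cluster's nodes

4) Resource quota manager: tracks cluster resource usage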

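kubelet (worker node)

Manages and maintains all containers on its own worker node, e.g. creating new containers to match the desired state and garbage-collecting unused images.

kube-proxy (worker node)

Load-balances client requests across the pods behind a service. It is what actually implements a service, so clients are unaffected when pod IPs change. kube-proxy forwards traffic by modifying iptables rules.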

Workflow

K8s installation process:

I. Host plan:

| IP address | Role | Packages | Services to start (in order) |
|---|---|---|---|
| 192.168.20.60 | k8s-master (also a minion) | kubernetes-v1.2.4, etcd-v2.3.2, flannel-v0.5.5, docker-v1.11.2 | etcd flannel docker kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy |
| 192.168.20.61 | k8s-minion1 | kubernetes-v1.2.4, etcd-v2.3.2, flannel-v0.5.5, docker-v1.11.2 | etcd flannel docker kubelet kube-proxy |
| 192.168.20.62 | k8s-minion2 | kubernetes-v1.2.4, etcd-v2.3.2, flannel-v0.5.5, docker-v1.11.2 | etcd flannel docker kubelet kube-proxy |

II. Environment preparation:

OS: CentOS 7.2

#yum update

#Disable firewalld and install iptables

systemctl stop firewalld.service

systemctl disable firewalld.service

yum -y install iptables-services

systemctl restart iptables.service

systemctl enable iptables.service

#Disable SELinux

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

setenforce 0

#Add the Docker yum repository

#tee /etc/yum.repos.d/docker.repo <<-'EOF'

[dockerrepo]

name=Docker Repository

baseurl=https://yum.dockerproject.org/repo/main/centos/7/

enabled=1

gpgcheck=1

gpgkey=https://yum.dockerproject.org/gpg

EOF

#yum install docker-engine

#Use the internal private registry

#vi /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/docker daemon --insecure-registry=192.168.4.231:5000 -H fd://

#Reload systemd so it picks up the edited unit file, then start Docker

systemctl daemon-reload

systemctl start docker

III. Install the etcd cluster (provides storage and strong consistency guarantees for k8s)

tar zxf etcd-v2.3.2-linux-amd64.tar.gz

cd etcd-v2.3.2-linux-amd64

cp etcd* /usr/local/bin/

#Register the systemd service

#vi /usr/lib/systemd/system/etcd.service

[Unit]

Description=etcd

[Service]

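# systemd only treats lines that start with # as comments, so the notes go on their own lines.

# Node name, must be unique. On the minion nodes change it to the node's own host name.

Environment=ETCD_NAME=k8s-master

# Data directory. If the cluster gets into a bad state, you can delete this directory and reconfigure.

Environment=ETCD_DATA_DIR=/var/lib/etcd

# Peer URL advertised to the other members; on the other machines use their own IP.

Environment=ETCD_INITIAL_ADVERTISE_PEER_URLS=http://192.168.20.60:7001

# Address to listen on for peer traffic; on the other machines use their own IP.

Environment=ETCD_LISTEN_PEER_URLS=http://192.168.20.60:7001

# Addresses to listen on for client traffic; on the other machines use their own IP.

Environment=ETCD_LISTEN_CLIENT_URLS=http://192.168.20.60:4001,http://127.0.0.1:4001

# Client URL advertised to the cluster; on the other machines use their own IP.

Environment=ETCD_ADVERTISE_CLIENT_URLS=http://192.168.20.60:4001

# Cluster token, identical on all three nodes.

Environment=ETCD_INITIAL_CLUSTER_TOKEN=etcd-k8s-1

# Initial cluster members (name=peer URL), identical on all three nodes.

Environment=ETCD_INITIAL_CLUSTER=k8s-master=http://192.168.20.60:7001,k8s-minion1=http://192.168.20.61:7001,k8s-minion2=http://192.168.20.62:7001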

Environment=ETCD_INITIAL_CLUSTER_STATE=new

ExecStart=/usr/local/bin/etcd

[Install]

WantedBy=multi-user.target
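Only a few lines of this unit differ between the three hosts. A small Python helper can print the per-host Environment= lines from the host plan table, so the three copies cannot drift apart. The HOSTS mapping mirrors that table; the helper itself is a convenience sketch, not part of etcd.

```python
from typing import Dict

# Host names and IPs from the host plan table.
HOSTS: Dict[str, str] = {
    "k8s-master": "192.168.20.60",
    "k8s-minion1": "192.168.20.61",
    "k8s-minion2": "192.168.20.62",
}
PEER_PORT, CLIENT_PORT = 7001, 4001


def etcd_env(name: str, hosts: Dict[str, str] = HOSTS, token: str = "etcd-k8s-1") -> str:
    """Return the Environment= lines of etcd.service for one host."""
    ip = hosts[name]
    cluster = ",".join(f"{n}=http://{h}:{PEER_PORT}" for n, h in hosts.items())
    env = {
        "ETCD_NAME": name,
        "ETCD_DATA_DIR": "/var/lib/etcd",
        "ETCD_INITIAL_ADVERTISE_PEER_URLS": f"http://{ip}:{PEER_PORT}",
        "ETCD_LISTEN_PEER_URLS": f"http://{ip}:{PEER_PORT}",
        "ETCD_LISTEN_CLIENT_URLS": f"http://{ip}:{CLIENT_PORT},http://127.0.0.1:{CLIENT_PORT}",
        "ETCD_ADVERTISE_CLIENT_URLS": f"http://{ip}:{CLIENT_PORT}",
        "ETCD_INITIAL_CLUSTER_TOKEN": token,
        "ETCD_INITIAL_CLUSTER": cluster,
        "ETCD_INITIAL_CLUSTER_STATE": "new",
    }
    return "\n".join(f"Environment={key}={value}" for key, value in env.items())


if __name__ == "__main__":
    for host in HOSTS:
        print(f"# {host}\n{etcd_env(host)}\n")
```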

#Start the service

systemctl start etcd

#Check that the etcd cluster is working

[root@k8s-minion2 etcd]# etcdctl cluster-health

member 2d3a022000105975 is healthy: got healthy result from http://192.168.20.61:4001

member 34a68a46747ee684 is healthy: got healthy result from http://192.168.20.62:4001

member fe9e66405caec791 is healthy: got healthy result from http://192.168.20.60:4001

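cluster is healthy #this line means the cluster started correctly.

#Then set the address range of the container overlay network (flannel reads it from this key)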
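When this check is scripted (for example in a monitoring job), the text output can be parsed. A minimal Python sketch, assuming the etcd v2 `etcdctl cluster-health` output format shown above; other wordings (other etcd versions, error messages) are assumptions and may need adjusting:

```python
import re
from typing import Dict, Tuple

# Matches lines such as "member fe9e66405caec791 is healthy: got healthy result from ..."
MEMBER_RE = re.compile(r"^member (\S+) is (\w+)")


def parse_cluster_health(output: str) -> Tuple[Dict[str, bool], bool]:
    """Return ({member_id: is_healthy}, cluster_is_healthy) from `etcdctl cluster-health`."""
    members: Dict[str, bool] = {}
    cluster_ok = False
    for line in output.splitlines():
        line = line.strip()
        match = MEMBER_RE.match(line)
        if match:
            members[match.group(1)] = match.group(2) == "healthy"
        elif line.startswith("cluster is healthy"):
            cluster_ok = True
    return members, cluster_ok


if __name__ == "__main__":
    sample = """member 2d3a022000105975 is healthy: got healthy result from http://192.168.20.61:4001
member 34a68a46747ee684 is healthy: got healthy result from http://192.168.20.62:4001
member fe9e66405caec791 is healthy: got healthy result from http://192.168.20.60:4001
cluster is healthy"""
    print(parse_cluster_health(sample))
```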

etcdctl set /coreos.com/network/config '{ "Network": "172.20.0.0/16" }'

IV. Install and start Flannel (connects the container networks so that containers on different hosts can reach each other)

tar zxf flannel-0.5.5-linux-amd64.tar.gz

mv flannel-0.5.5 /usr/local/flannel

cd /usr/local/flannel

#Register the systemd service

#vi /usr/lib/systemd/system/flanneld.service

[Unit]

Description=flannel

After=etcd.service

After=docker.service

[Service]

EnvironmentFile=/etc/sysconfig/flanneld

ExecStart=/usr/local/flannel/flanneld \

-etcd-endpoints=${FLANNEL_ETCD} $FLANNEL_OPTIONS

[Install]

WantedBy=multi-user.target

#Create the configuration file

#vi /etc/sysconfig/flanneld

FLANNEL_ETCD="http://192.168.20.60:4001,http://192.168.20.61:4001,http://192.168.20.62:4001"

#Start the service

systemctl start flanneld

./mk-docker-opts.sh -i

source /run/flannel/subnet.env

ifconfig docker0 ${FLANNEL_SUBNET}

systemctl restart docker

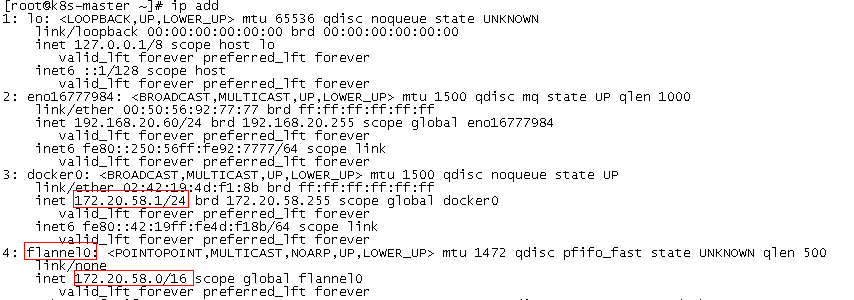

#Verify that it worked
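One way to check the result is to compare /run/flannel/subnet.env with the network written to etcd earlier: this host's FLANNEL_SUBNET must lie inside FLANNEL_NETWORK (172.20.0.0/16 here), and docker0 should carry that subnet's address. A minimal Python sketch of the first check, assuming the file contains both variables; the sample contents, including the 172.20.61.1/24 subnet, are hypothetical. `subnet_of` needs Python 3.7 or later.

```python
import ipaddress
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, as found in /run/flannel/subnet.env."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, _, value = line.partition("=")
            env[key.strip()] = value.strip().strip('"')
    return env


def subnet_in_network(env: Dict[str, str]) -> bool:
    """True if this host's FLANNEL_SUBNET lies inside the cluster-wide FLANNEL_NETWORK."""
    network = ipaddress.ip_network(env["FLANNEL_NETWORK"])
    subnet = ipaddress.ip_interface(env["FLANNEL_SUBNET"]).network
    return subnet.subnet_of(network)


if __name__ == "__main__":
    # Hypothetical file contents; on a real host read /run/flannel/subnet.env instead.
    sample = "FLANNEL_NETWORK=172.20.0.0/16\nFLANNEL_SUBNET=172.20.61.1/24\nFLANNEL_MTU=1472\n"
    print(subnet_in_network(parse_env(sample)))  # True
```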

V. Install Kubernetes

1. Download the source package

cd /usr/local/

git clone https://github.com/kubernetes/kubernetes.git

cd kubernetes/server/

tar zxf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

cp kube-apiserver kubectl kube-scheduler kube-controller-manager kube-proxy kubelet /usr/local/bin/

2. Register the systemd services

#vi /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/config

EnvironmentFile=/etc/kubernetes/kubelet

User=root

ExecStart=/usr/local/bin/kubelet \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_ALLOW_PRIV \

$KUBELET_ADDRESS \

$KUBELET_PORT \

$KUBELET_HOSTNAME \

$KUBELET_API_SERVER \

$KUBELET_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

#vi /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-proxy Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/config

EnvironmentFile=/etc/kubernetes/kube-proxy

User=root

ExecStart=/usr/local/bin/kube-proxy \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_PROXY_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

3. Create the configuration files

mkdir /etc/kubernetes

vi /etc/kubernetes/config

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.20.60:4001"

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

#KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_ALLOW_PRIV="--allow-privileged=true"

vi /etc/kubernetes/kubelet

# The address for the info server to serve on

KUBELET_ADDRESS="--address=0.0.0.0"

# The port for the info server to serve on

KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

# On the master and on each minion, set this to the node's own IP

KUBELET_HOSTNAME="--hostname-override=192.168.20.60"

# Location of the api-server

KUBELET_API_SERVER="--api-servers=http://192.168.20.60:8080"

# Add your own!

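# Used later by the DNS add-on: --cluster-dns must be the clusterIP of the kube-dns service (skydns-svc.yaml), and --cluster-domain must match the domain configured there

KUBELET_ARGS="--cluster-dns=192.168.20.100 --cluster-domain=cluster.local"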

vi /etc/kubernetes/kube-proxy

# How the replication controller and scheduler find the kube-apiserver

KUBE_MASTER="--master=http://192.168.20.60:8080"

# Add your own!

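# Proxy mode. userspace is used here; iptables mode is more efficient, but make sure your kernel and iptables versions meet its requirements, otherwise it will fail.

KUBE_PROXY_ARGS="--proxy-mode=userspace"

For background on choosing the proxy mode, see this explanation from a developer abroad: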

4. The services above must be started on every node. The master node additionally needs the following services: kube-apiserver, kube-controller-manager, kube-scheduler

4.1 Configure the services

#vi /usr/lib/systemd/system/kube-apiserver.service

[Unit]

After=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/apiserver

User=root

ExecStart=/usr/local/bin/kube-apiserver \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_ETCD_SERVERS \

$KUBE_API_ADDRESS \

$KUBE_API_PORT \

$KUBELET_PORT \

$KUBE_ALLOW_PRIV \

$KUBE_SERVICE_ADDRESSES \

$KUBE_ADMISSION_CONTROL \

$KUBE_API_ARGS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

#vi /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/controller-manager

User=root

ExecStart=/usr/local/bin/kube-controller-manager \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

#vi /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler Plugin

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/scheduler

User=root

ExecStart=/usr/local/bin/kube-scheduler \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_SCHEDULER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

4.2 Configuration files

vi /etc/kubernetes/apiserver

# The address on the local server to listen to.

KUBE_API_ADDRESS="--address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080"

# How the replication controller and scheduler find the kube-apiserver

KUBE_MASTER="--master=http://192.168.20.60:8080"

# Port kubelets listen on

KUBELET_PORT="--kubelet-port=10250"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=192.168.20.0/24"

#KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# Add your own!

KUBE_API_ARGS=""
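With the values above, the service range 192.168.20.0/24 contains the hosts' own IPs. The kube-apiserver flag help says this range must not overlap the IP ranges assigned to nodes for pods, and keeping it clear of the host subnet is generally safer too, since a service IP equal to a real host address could be captured by kube-proxy rules. The commented-out 10.254.0.0/16 line is one non-overlapping choice. A minimal Python check, with a hypothetical function name:

```python
import ipaddress
from typing import List


def check_service_range(service_cidr: str, node_ips: List[str], pod_network: str) -> List[str]:
    """Return a list of problems with --service-cluster-ip-range (empty if none are found)."""
    services = ipaddress.ip_network(service_cidr)
    problems = []
    # Service IPs are virtual; a real host address inside the range could be shadowed.
    for ip in node_ips:
        if ipaddress.ip_address(ip) in services:
            problems.append(f"node IP {ip} is inside the service range {services}")
    # The range must also stay clear of the pod (flannel) network.
    if services.overlaps(ipaddress.ip_network(pod_network)):
        problems.append(f"service range {services} overlaps the pod network {pod_network}")
    return problems


if __name__ == "__main__":
    nodes = ["192.168.20.60", "192.168.20.61", "192.168.20.62"]
    for cidr in ("192.168.20.0/24", "10.254.0.0/16"):
        print(cidr, check_service_range(cidr, nodes, "172.20.0.0/16"))
```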

vi /etc/kubernetes/controller-manager

# How the replication controller and scheduler find the kube-apiserver

KUBE_MASTER="--master=http://192.168.20.60:8080"

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS=""

vi /etc/kubernetes/scheduler

# How the replication controller and scheduler find the kube-apiserver

KUBE_MASTER="--master=http://192.168.20.60:8080"

# Add your own!

KUBE_SCHEDULER_ARGS=""

For more configuration options, see the official documentation:

http://kubernetes.io/docs/admin/kube-proxy/

#Start the services on the master

systemctl start kubelet

systemctl start kube-proxy

systemctl start kube-apiserver

systemctl start kube-controller-manager

systemctl start kube-scheduler

#Start the services on the minions

systemctl start kubelet

systemctl start kube-proxy

#Check that the services started correctly

[root@k8s-master bin]# kubectl get no

NAME STATUS AGE

192.168.20.60 Ready 24s

192.168.20.61 Ready 46s

192.168.20.62 Ready 35s

#Restart commands

systemctl restart kubelet

systemctl restart kube-proxy

systemctl restart kube-apiserver

systemctl restart kube-controller-manager

systemctl restart kube-scheduler

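#Pitfalls

The pause image is hosted on gcr.io, which is blocked by the Great Firewall. Without this image k8s cannot run any application and reports the following error:

image pull failed for gcr.io/google_containers/pause:2.0

Use the image from Docker Hub instead, or put it into a local registry, and then re-tag it. Every node needs this image.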

docker pull kubernetes/pause

docker tag kubernetes/pause gcr.io/google_containers/pause:2.0

[root@k8s-master addons]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.4.231:5000/pause 2.0 2b58359142b0 9 months ago 350.2 kB

gcr.io/google_containers/pause 2.0 2b58359142b0 9 months ago 350.2 kB
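The listing above shows the naming convention: the private-registry copy keeps only the last part of the upstream name (192.168.4.231:5000/pause:2.0) and each node re-tags it to the gcr.io name that kubelet asks for. A small Python sketch that prints the per-node commands for any upstream image, assuming the image was already pushed to the registry under that shortened name; the function is hypothetical, while `docker pull` and `docker tag` are the standard CLI commands:

```python
from typing import List

REGISTRY = "192.168.4.231:5000"  # the private registry used throughout this article


def node_commands(upstream: str, registry: str = REGISTRY) -> List[str]:
    """Commands that give a node the upstream image name via the private registry.

    Assumes the image was pushed to the registry as <registry>/<repo>:<tag>,
    i.e. only the last path component of the upstream name is kept.
    """
    repo_tag = upstream.rsplit("/", 1)[-1]
    if ":" not in repo_tag:
        repo_tag += ":latest"  # docker's default tag
    mirrored = f"{registry}/{repo_tag}"
    return [f"docker pull {mirrored}", f"docker tag {mirrored} {upstream}"]


if __name__ == "__main__":
    for cmd in node_commands("gcr.io/google_containers/pause:2.0"):
        print(cmd)
```

The printed commands would then be run on every node, e.g. over ssh.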

5. The official source package ships several add-ons, such as the dashboard and DNS

cd /usr/local/kubernetes/cluster/addons/

5.1 Dashboard add-on

cd /usr/local/kubernetes/cluster/addons/dashboard

This directory contains two files:

=============================================================

dashboard-controller.yaml # defines the deployment: replica count, image, resource limits, etc.

apiVersion: v1

kind: ReplicationController

metadata:

# Keep the name in sync with image version and

# gce/coreos/kube-manifests/addons/dashboard counterparts

name: kubernetes-dashboard-v1.0.1

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

version: v1.0.1

kubernetes.io/cluster-service: "true"

spec:

replicas: 1 # number of replicas

selector:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

version: v1.0.1

kubernetes.io/cluster-service: "true"

spec:

containers:

- name: kubernetes-dashboard

image: 192.168.4.231:5000/kubernetes-dashboard:v1.0.1

resources:

# keep request = limit to keep this container in guaranteed class

limits:

cpu: 100m

memory: 50Mi

requests:

cpu: 100m

memory: 50Mi

ports:

- containerPort: 9090

args:

- --apiserver-host=http://192.168.20.60:8080 # Note: without this argument the dashboard looks for the apiserver on localhost instead of the master. Also watch the indentation (spaces) in this file.

livenessProbe:

httpGet:

path: /

port: 9090

initialDelaySeconds: 30

timeoutSeconds: 30

========================================================

dashboard-service.yaml # exposes the dashboard for external access

apiVersion: v1

kind: Service

metadata:

name: kubernetes-dashboard

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

spec:

selector:

k8s-app: kubernetes-dashboard

ports:

- port: 80

targetPort: 9090

=========================================================

kubectl create -f ./ #create the resources

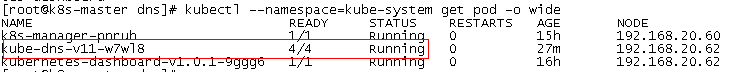

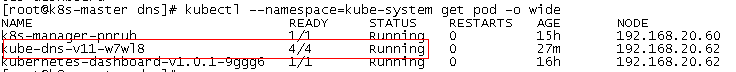

kubectl --namespace=kube-system get po #check the status of the system pods

kubectl --namespace=kube-system get po -o wide #check which node each system pod runs on

#To delete them, run:

kubectl delete -f ./

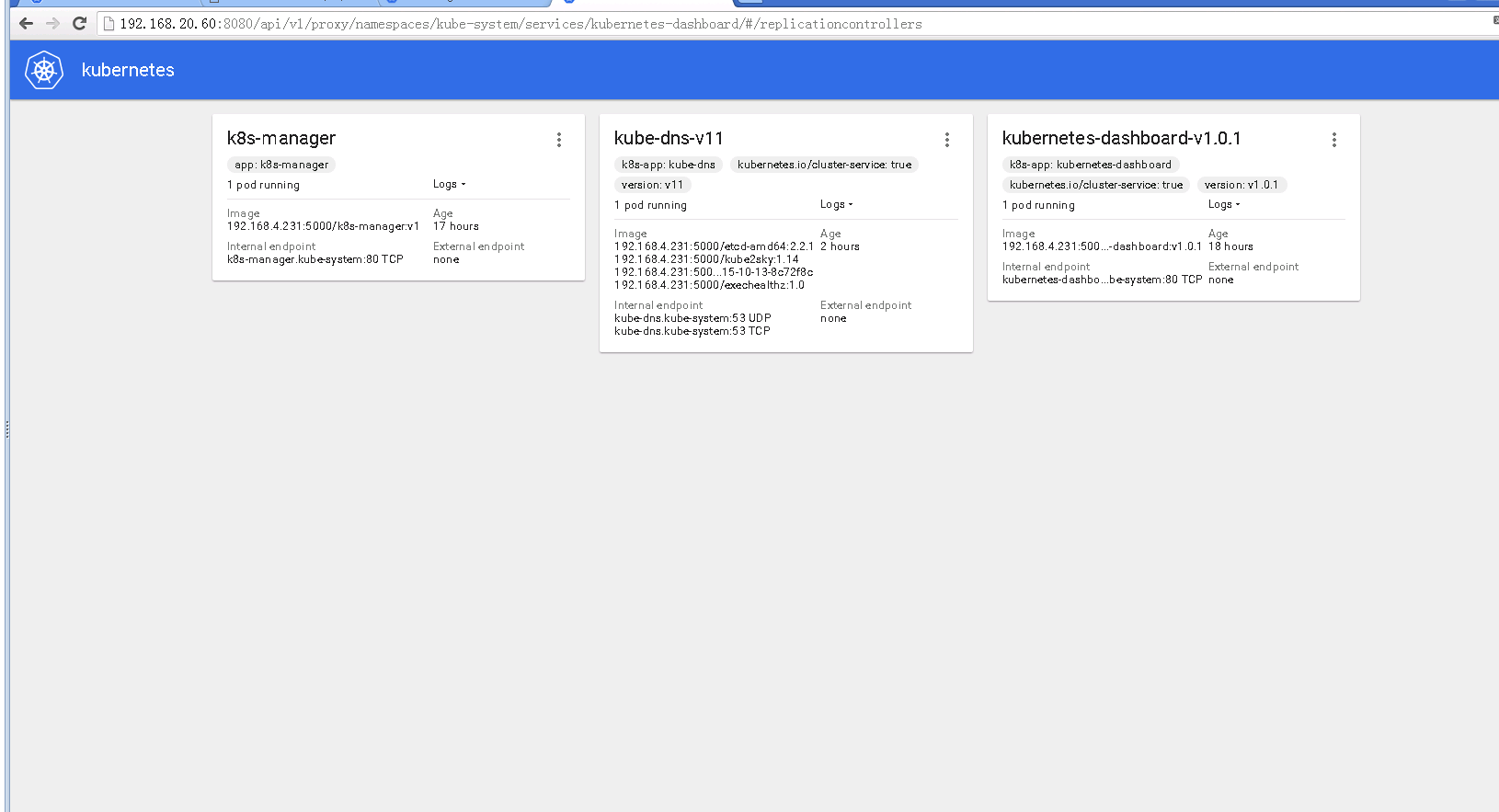

Open in a browser: http://192.168.20.60:8080/ui/

5.2 DNS add-on

#IP addresses are hard to remember; inside the cluster, DNS maps names to IPs and keeps the mapping updated automatically.

cd /usr/local/kubernetes/cluster/addons/dns

mkdir -p /opt/dns

cp skydns-rc.yaml.in /opt/dns/skydns-rc.yaml

cp skydns-svc.yaml.in /opt/dns/skydns-svc.yaml

#/opt/dns/skydns-rc.yaml:

apiVersion: v1

kind: ReplicationController

metadata:

name: kube-dns-v11

namespace: kube-system

labels:

k8s-app: kube-dns

version: v11

kubernetes.io/cluster-service: "true"

spec:

replicas: 1

selector:

k8s-app: kube-dns

version: v11

template:

metadata:

labels:

k8s-app: kube-dns

version: v11

kubernetes.io/cluster-service: "true"

spec:

containers:

- name: etcd

image: 192.168.4.231:5000/etcd-amd64:2.2.1 # I pull all the official images into the local registry first

resources:

# TODO: Set memory limits when we've profiled the container for large

# clusters, then set request = limit to keep this container in

# guaranteed class. Currently, this container falls into the

# "burstable" category so the kubelet doesn't backoff from restarting it.

limits:

cpu: 100m

memory: 500Mi

requests:

cpu: 100m

memory: 50Mi

command:

- /usr/local/bin/etcd

- -data-dir

- /var/etcd/data

- -listen-client-urls

- http://127.0.0.1:2379,http://127.0.0.1:4001

- -advertise-client-urls

- http://127.0.0.1:2379,http://127.0.0.1:4001

- -initial-cluster-token

- skydns-etcd

volumeMounts:

- name: etcd-storage

mountPath: /var/etcd/data

- name: kube2sky

image: 192.168.4.231:5000/kube2sky:1.14 # from the local registry

resources:

# TODO: Set memory limits when we've profiled the container for large

# clusters, then set request = limit to keep this container in

# guaranteed class. Currently, this container falls into the

# "burstable" category so the kubelet doesn't backoff from restarting it.

limits:

cpu: 100m

# Kube2sky watches all pods.

memory: 200Mi

requests:

cpu: 100m

memory: 50Mi

livenessProbe:

httpGet:

path: /healthz

port: 8080

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

readinessProbe:

httpGet:

path: /readiness

port: 8081

scheme: HTTP

# we poll on pod startup for the Kubernetes master service and

# only setup the /readiness HTTP server once that's available.

initialDelaySeconds: 30

timeoutSeconds: 5

args:

# command = "/kube2sky"

- --domain=cluster.local # pitfall: must match --cluster-domain in /etc/kubernetes/kubelet

- --kube_master_url=http://192.168.20.60:8080 # the master node

- name: skydns

image: 192.168.4.231:5000/skydns:2015-10-13-8c72f8c

resources:

# TODO: Set memory limits when we've profiled the container for large

# clusters, then set request = limit to keep this container in

# guaranteed class. Currently, this container falls into the

# "burstable" category so the kubelet doesn't backoff from restarting it.

limits:

cpu: 100m

memory: 200Mi

requests:

cpu: 100m

memory: 50Mi

args:

# command = "/skydns"

- -machines=http://127.0.0.1:4001

- -addr=0.0.0.0:53

- -ns-rotate=false

- -domain=cluster.local. # another pitfall!! it must end with "."

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- name: healthz

image: 192.168.4.231:5000/exechealthz:1.0 # image from the local registry

resources:

# keep request = limit to keep this container in guaranteed class

limits:

cpu: 10m

memory: 20Mi

requests:

cpu: 10m

memory: 20Mi

args:

- -cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1 >/dev/null # the same pitfall again: the domain must match

- -port=8080

ports:

- containerPort: 8080

protocol: TCP

volumes:

- name: etcd-storage

emptyDir: {}

dnsPolicy: Default # Don't use cluster DNS.

========================================================================

#/opt/dns/skydns-svc.yaml:

apiVersion: v1

kind: Service

metadata:

name: kube-dns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

kubernetes.io/name: "KubeDNS"

spec:

selector:

k8s-app: kube-dns

clusterIP: 192.168.20.100

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP
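The comments in these two files flag several settings that must agree with each other and with the kubelet configuration: kubelet's --cluster-dns has to be the clusterIP of the kube-dns service, that IP has to fall inside --service-cluster-ip-range, kube2sky's --domain has to equal kubelet's --cluster-domain, and skydns's -domain has to be the same domain with a trailing dot. A minimal Python sketch that cross-checks them, assuming the values are copied in by hand (the function and its argument layout are hypothetical):

```python
import ipaddress
from typing import List


def check_dns(kubelet_args: str, service_cidr: str, dns_cluster_ip: str,
              kube2sky_domain: str, skydns_domain: str) -> List[str]:
    """Cross-check the DNS add-on settings; returns a list of problems (empty if consistent).

    kubelet_args is the KUBELET_ARGS string, with every flag in --name=value form.
    """
    opts = dict(arg.lstrip("-").split("=", 1) for arg in kubelet_args.split())
    domain = opts.get("cluster-domain", "")
    problems = []
    if opts.get("cluster-dns") != dns_cluster_ip:
        problems.append("kubelet --cluster-dns differs from the kube-dns clusterIP")
    if ipaddress.ip_address(dns_cluster_ip) not in ipaddress.ip_network(service_cidr):
        problems.append("kube-dns clusterIP is outside --service-cluster-ip-range")
    if kube2sky_domain != domain:
        problems.append("kube2sky --domain differs from kubelet --cluster-domain")
    if skydns_domain != domain + ".":
        problems.append('skydns -domain must be the cluster domain plus a trailing "."')
    return problems


if __name__ == "__main__":
    # Example values; replace them with the ones from your own files.
    print(check_dns("--cluster-dns=192.168.20.100 --cluster-domain=cluster.local",
                    "192.168.20.0/24", "192.168.20.100", "cluster.local", "cluster.local."))
```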

==================================================================

#Start

cd /opt/dns/

kubectl create -f ./

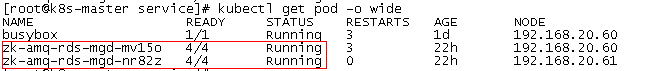

#Check the pod status. Once all 4/4 containers are running, move on to the verification step.

kubectl --namespace=kube-system get pod -o wide

Verification steps, reposted from the official documentation:

URL: https://github.com/kubernetes/kubernetes/blob/release-1.2/cluster/addons/dns/README.md

How do I test if it is working?

First deploy DNS as described above.

1 Create a simple Pod to use as a test environment.

Create a file named busybox.yaml with the following contents:

apiVersion: v1

kind: Pod

metadata:

name: busybox

namespace: default

spec:

containers:

- image: busybox

command:

- sleep

- "3600"

imagePullPolicy: IfNotPresent

name: busybox

restartPolicy: Always

Then create a pod using this file:

kubectl create -f busybox.yaml

2 Wait for this pod to go into the running state.

You can get its status with:

kubectl get pods busybox

You should see:

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 0 <some-time>

3 Validate DNS works

Once that pod is running, you can exec nslookup in that environment:

kubectl exec busybox -- nslookup kubernetes.default

You should see something like:

Server: 10.0.0.10

Address 1: 10.0.0.10

Name: kubernetes.default

Address 1: 10.0.0.1

If you see that, DNS is working correctly.

5.3 Manager add-on

Reference: http://my.oschina.net/fufangchun/blog/703985

mkdir /opt/k8s-manage

cd /opt/k8s-manage

================================================

# cat k8s-manager-rc.yaml

apiVersion: v1

kind: ReplicationController

metadata:

name: k8s-manager

namespace: kube-system

labels:

app: k8s-manager

spec:

replicas: 1

selector:

app: k8s-manager

template:

metadata:

labels:

app: k8s-manager

spec:

containers:

- image: mlamina/k8s-manager:latest

name: k8s-manager

resources:

limits:

cpu: 100m

memory: 50Mi

ports:

- containerPort: 80

name: http

=================================================

# cat k8s-manager-svr.yaml

apiVersion: v1

kind: Service

metadata:

name: k8s-manager

namespace: kube-system

labels:

app: k8s-manager

spec:

ports:

- port: 80

targetPort: http

selector:

app: k8s-manager

=================================================

#Start

kubectl create -f ./

Access it in a browser:

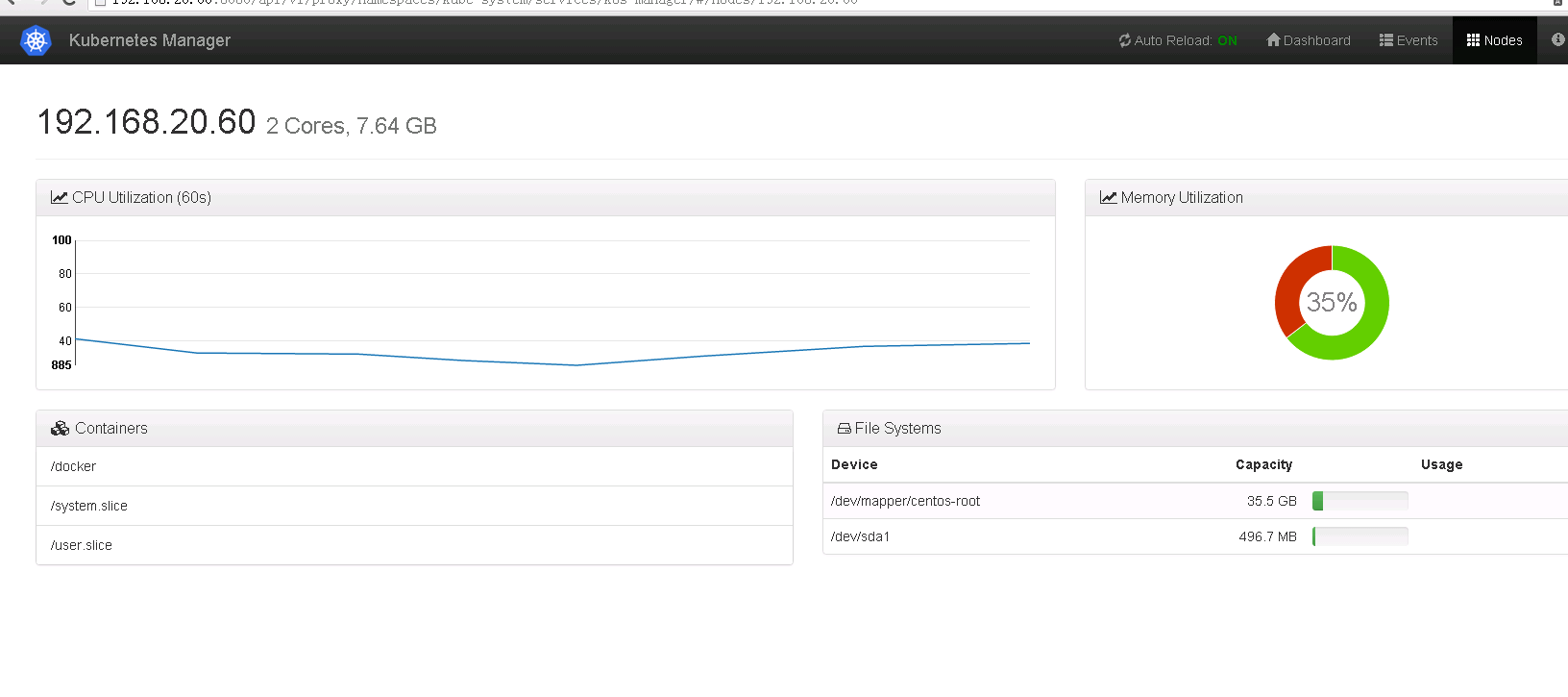

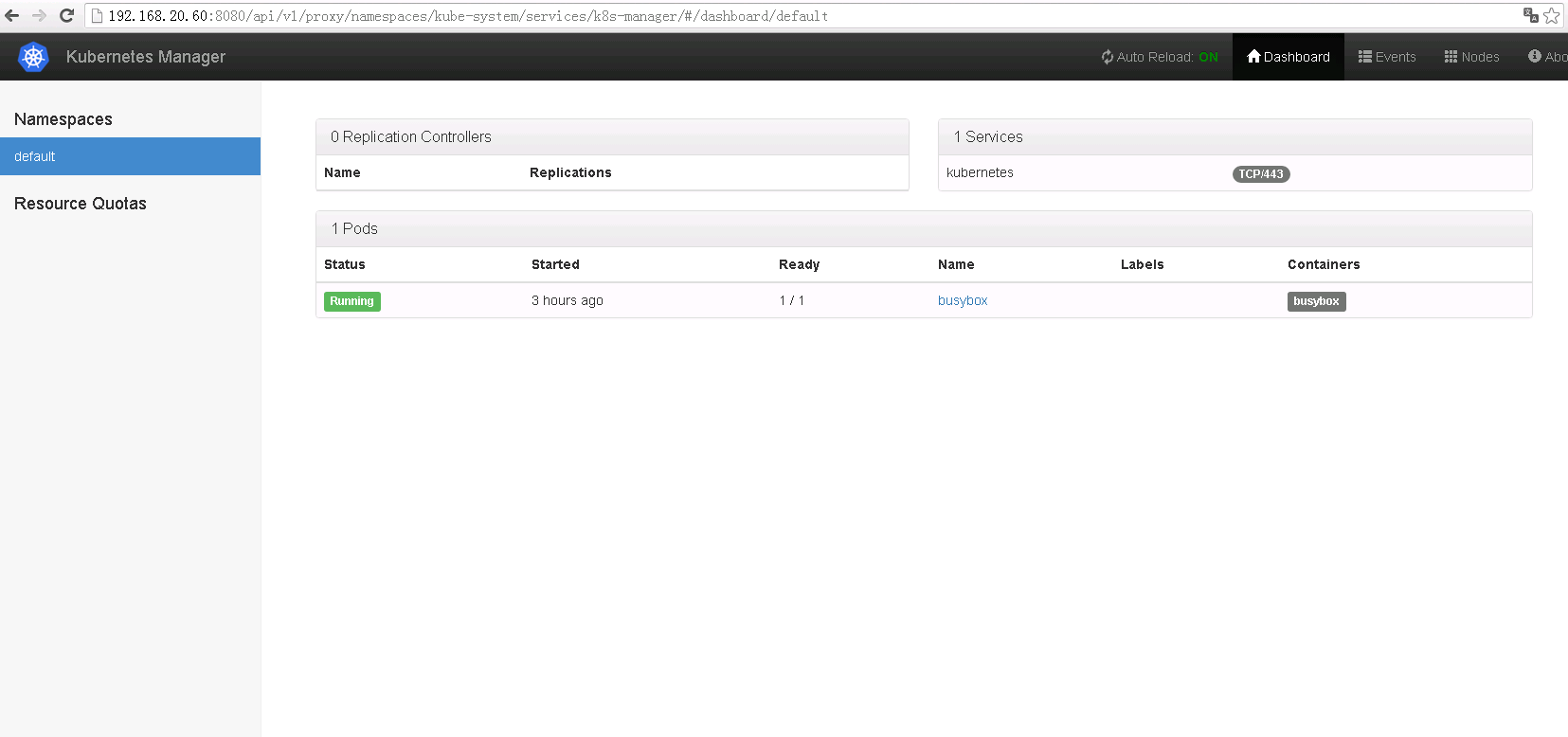

http://192.168.20.60:8080/api/v1/proxy/namespaces/kube-system/services/k8s-manager
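The URL above follows the apiserver's service-proxy path layout, /api/v1/proxy/namespaces/<namespace>/services/<service>, so the same pattern reaches any service, e.g. kubernetes-dashboard in kube-system. A small Python helper that builds such URLs; the function name is hypothetical. Later Kubernetes releases moved this endpoint to /api/v1/namespaces/<namespace>/services/<service>/proxy, so check the path against the version you run.

```python
from urllib.parse import quote


def service_proxy_url(master: str, namespace: str, service: str, path: str = "") -> str:
    """Build an apiserver proxy URL for a service (the v1 layout used in this article)."""
    url = (f"{master.rstrip('/')}/api/v1/proxy/namespaces/{quote(namespace)}"
           f"/services/{quote(service)}")
    if path:
        url += "/" + path.lstrip("/")
    return url


if __name__ == "__main__":
    print(service_proxy_url("http://192.168.20.60:8080", "kube-system", "kubernetes-dashboard"))
```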

Example deployment

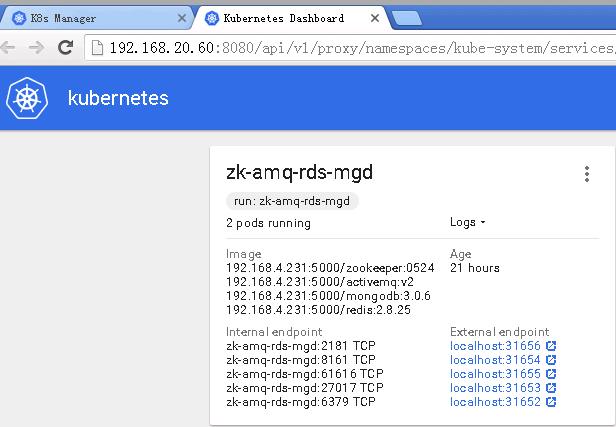

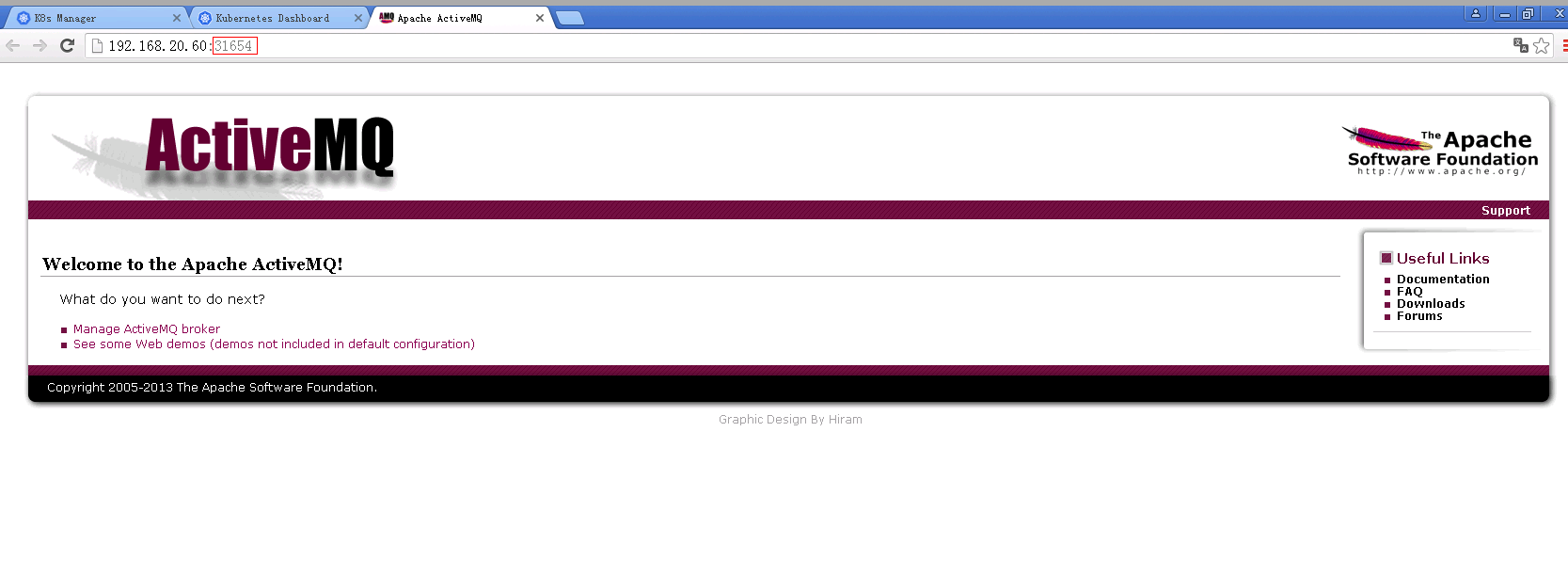

1. Deploy ZooKeeper, ActiveMQ, Redis and MongoDB

mkdir /opt/service/

cd /opt/service

==========================================================

#cat service.yaml

apiVersion: v1

kind: Service

metadata:

name: zk-amq-rds-mgd # service name

labels:

run: zk-amq-rds-mgd

spec:

type: NodePort

ports:

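- port: 2181 # port of the service on its cluster IP

nodePort: 31656 # port opened on every node (not only the master) for external access

targetPort: 2181 # port inside the container

protocol: TCP # protocol

name: zk-app # port name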

- port: 8161

nodePort: 31654

targetPort: 8161

protocol: TCP

name: amq-http

- port: 61616

nodePort: 31655

targetPort: 61616

protocol: TCP

name: amq-app

- port: 27017

nodePort: 31653

targetPort: 27017

protocol: TCP

name: mgd-app

- port: 6379

nodePort: 31652

targetPort: 6379

protocol: TCP

name: rds-app

selector:

run: zk-amq-rds-mgd

---

#apiVersion: extensions/v1beta1

apiVersion: v1

kind: ReplicationController

metadata:

name: zk-amq-rds-mgd

spec:

replicas: 2 # two replicas

template:

metadata:

labels:

run: zk-amq-rds-mgd

spec:

containers:

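- name: zookeeper # container name

image: 192.168.4.231:5000/zookeeper:0524 # use the image from the local registry

imagePullPolicy: IfNotPresent # the pod is scheduled to a suitable node, which pulls the image only if it is not already present and then starts the container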

ports:

- containerPort: 2181 # service port inside the container

env:

- name: LANG

value: en_US.UTF-8

volumeMounts:

- mountPath: /tmp/zookeeper # mount point inside the container

name: zookeeper-d # volume name; must match a volume defined under volumes below

- name: activemq

image: 192.168.4.231:5000/activemq:v2

imagePullPolicy: IfNotPresent

ports:

- containerPort: 8161

- containerPort: 61616

volumeMounts:

- mountPath: /opt/apache-activemq-5.10.2/data

name: activemq-d

- name: mongodb

image: 192.168.4.231:5000/mongodb:3.0.6

imagePullPolicy: IfNotPresent

ports:

- containerPort: 27017

volumeMounts:

- mountPath: /var/lib/mongo

name: mongodb-d

- name: redis

image: 192.168.4.231:5000/redis:2.8.25

imagePullPolicy: IfNotPresent

ports:

- containerPort: 6379

volumeMounts:

- mountPath: /opt/redis/var

name: redis-d

volumes:

- hostPath:

path: /mnt/mfs/service/zookeeper/data # mount point on the host. I use distributed shared storage (MooseFS) here so that the replicas see consistent data.

name: zookeeper-d

- hostPath:

path: /mnt/mfs/service/activemq/data

name: activemq-d

- hostPath:

path: /mnt/mfs/service/mongodb/data

name: mongodb-d

- hostPath:

path: /mnt/mfs/service/redis/data

name: redis-d

===========================================================================================
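Each nodePort in service.yaml must fall inside the apiserver's NodePort range (30000-32767 by default, adjustable with --service-node-port-range) and must not be used twice. A minimal Python check over the port entries above, with a hypothetical function name and the ports written out by hand:

```python
from typing import Dict, List


def check_node_ports(ports: List[Dict], low: int = 30000, high: int = 32767) -> List[str]:
    """Validate the nodePort entries of a NodePort service; returns a list of problems."""
    problems, seen = [], set()
    for port in ports:
        node_port = port["nodePort"]
        if not low <= node_port <= high:
            problems.append(f"{port['name']}: nodePort {node_port} outside {low}-{high}")
        if node_port in seen:
            problems.append(f"{port['name']}: nodePort {node_port} used twice")
        seen.add(node_port)
    return problems


if __name__ == "__main__":
    ports = [
        {"name": "zk-app", "nodePort": 31656},
        {"name": "amq-http", "nodePort": 31654},
        {"name": "amq-app", "nodePort": 31655},
        {"name": "mgd-app", "nodePort": 31653},
        {"name": "rds-app", "nodePort": 31652},
    ]
    print(check_node_ports(ports))  # []
```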

#Create the resources

kubectl create -f ./

References: http://my.oschina.net/jayqqaa12/blog/693919

Reposted from: https://blog.51cto.com/chenguomin/1828905
