介绍

Tez目标是用来构建复杂的有向无环图数据处理程序。Tez项目是构建在YARN之上的。Tez的设计上有两点优势:

1 用户体验

使用API来自定义数据流

灵活的Input-Processor-Output运行模式

与计算的数据类型无关

简单的部署流程

2 计算性能

性能高于MapReduce

资源管理更加优化

运行时配置预加载

物理数据流动态运行

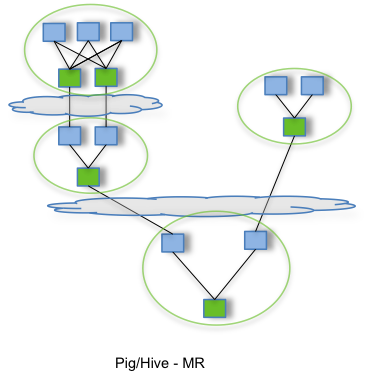

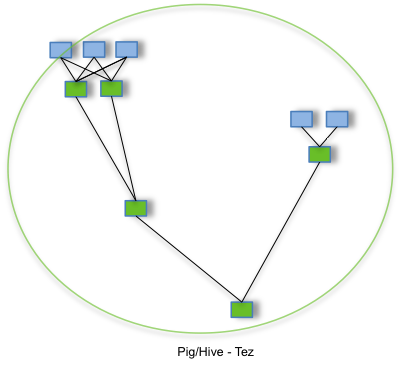

MR区别

基于MR的Job:

在YARN中,每个作业的AM会先向RM申请资源(Container),申请到资源之后开始运行作业,作业处理完成后释放资源,期间没有资源重新利用的环节。这样会使作业大大的延迟。基于MR的Hive/Pig的DAG数据流处理过程”,可以看出图中的每一节点都是把结果写到一个中间存储(HDFS/S3)中,下个节点从中间存储读取数据,再来继续接下来的计算。可见中间存储的读写性能对整个DAG的性能影响是很大的。

基于Tez的Job:

使用Tez后,yarn的作业不是先提交给RM了,而是提交给AMPS。AMPS在启动后,会预先创建若干个AM,作为AM资源池,当作业被提交到AMPS的时候,AMPS会把该作业直接提交到AM上,这样就避免每个作业都创建独立的AM,大大的提高了效率。AM缓冲池中的每个AM在启动时都会预先创建若干个container,以此来减少因创建container所话费的时间。每个任务运行完之后,AM不会立马释放Container,而是将它分配给其它未执行的任务。

编译

基础环境:

操作系统:CentOS release 6.7

Hadoop:2.6.0+cdh5.10.0 (Tez是Hortonworks贡献的,CDH要使用Tez,你懂得)

软件依赖:

java:jdk1.7.0_67

mvn:maven-3.3.9

node:node-v6.10.3

tez:tez-0.7.1

protobuf:protobuf-2.5.0

其他编译依赖

yum -y install gcc-c++ openssl-devel glibc

yum -y install autoconf automake libtool

yum -y install git unzip下载相关软件

#编译主目录

cd /opt/programs/

#下载pb

wget 'https://github-production-release-asset-2e65be.s3.amazonaws.com/23357588/09f5cfca-d24e-11e4-9840-20d894b9ee09?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20170606%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20170606T001340Z&X-Amz-Expires=300&X-Amz-Signature=fd9ea5018702a129ef6fa9bc0830b72370a0ad11adc46f38d81328f95fd9145b&X-Amz-SignedHeaders=host&actor_id=12913767&response-content-disposition=attachment%3B%20filename%3Dprotobuf-2.5.0.tar.gz&response-content-type=application%2Foctet-stream'

#下载node

wget https://nodejs.org/dist/v6.10.3/node-v6.10.3-linux-x64.tar.xz

#下载tez

wget 'http://mirrors.hust.edu.cn/apache/tez/0.7.1/apache-tez-0.7.1-src.tar.gz'

修改bash_profile

export JAVA_HOME=/opt/programs/jdk1.7.0_67

PATH=$PATH:/opt/programs/jdk1.7.0_67/bin:/opt/programs/apache-maven-3.3.9/bin:/opt/programs/node-v6.10.3-linux-x64/bin

export PATH修改tez主pom.xml

tez编译主目录:/opt/programs/apache-tez-0.7.1-src,修改pom.xml,在<profiles>...</profiles>中添加CDH的mvn依赖。

<profile>

<id>cdh5.10.0</id>

<activation>

<activeByDefault>true</activeByDefault>

</activation>

<properties>

<hadoop.version>2.6.0-cdh5.10.0</hadoop.version>

</properties>

<pluginRepositories>

<pluginRepository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</pluginRepository>

<pluginRepository>

<id>nexus public</id>

<url>http://central.maven.org/maven2/</url>

</pluginRepository>

</pluginRepositories>

<repositories>

<repository>

<id>nexus public</id>

<url>http://central.maven.org/maven2/</url>

</repository>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

</profile>

开始编译

mvn -Pcdh5.10.0 clean package -Dtar -DskipTests=true -Dmaven.javadoc.skip=truetez-mapreduce编译错误

[ERROR] COMPILATION ERROR :

[INFO] -------------------------------------------------------------

[ERROR] /opt/programs/apache-tez-0.7.1-src/tez-mapreduce/src/main/java/org/apache/tez/mapreduce/hadoop/mapreduce/JobContextImpl.java:[57,8] org.apache.tez.mapreduce.hadoop.mapreduce.JobContextImpl is not abstract and does not override abstract method userClassesTakesPrecedence() in org.apache.hadoop.mapreduce.JobContext

[INFO] 1 error

[INFO] -------------------------------------------------------------

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] tez ................................................ SUCCESS [ 0.779 s]

[INFO] tez-api ............................................ SUCCESS [ 7.778 s]

[INFO] tez-common ......................................... SUCCESS [ 0.394 s]

[INFO] tez-runtime-internals .............................. SUCCESS [ 0.629 s]

[INFO] tez-runtime-library ................................ SUCCESS [ 1.644 s]

[INFO] tez-mapreduce ...................................... FAILURE [ 0.484 s]

[INFO] tez-examples ....................................... SKIPPED

改正:修改apache-tez-0.7.1-src/tez-mapreduce/src/main/java/org/apache/tez/mapreduce/hadoop/mapreduce/JobContextImpl.java文件:在471行追加下面代码

465 @Override

466 public Progressable getProgressible() {

467 return progress;

468 }

469

470 @Override

471 public boolean userClassesTakesPrecedence() {

472 return getJobConf().getBoolean(MRJobConfig.MAPREDUCE_JOB_USER_CLASSPATH_FIRST, false);

473 } tez-ui编译错误

[INFO] --- exec-maven-plugin:1.3.2:exec (Bower install) @ tez-ui ---

bower ESUDO Cannot be run with sudo

Additional error details:

Since bower is a user command, there is no need to execute it with superuser permissions.

If you're having permission errors when using bower without sudo, please spend a few minutes learning more about how your system should work and make any necessary repairs.

http://www.joyent.com/blog/installing-node-and-npm

https://gist.github.com/isaacs/579814

You can however run a command with sudo using --allow-root option

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] tez ................................................ SUCCESS [ 0.750 s]

[INFO] tez-api ............................................ SUCCESS [ 7.433 s]

[INFO] tez-common ......................................... SUCCESS [ 0.377 s]

[INFO] tez-runtime-internals .............................. SUCCESS [ 0.625 s]

[INFO] tez-runtime-library ................................ SUCCESS [ 1.575 s]

[INFO] tez-mapreduce ...................................... SUCCESS [ 0.764 s]

[INFO] tez-examples ....................................... SUCCESS [ 0.238 s]

[INFO] tez-dag ............................................ SUCCESS [ 2.887 s]

[INFO] tez-tests .......................................... SUCCESS [ 0.642 s]

[INFO] tez-ui ............................................. FAILURE [01:07 min]

[INFO] tez-plugins ........................................ SKIPPED

[INFO] tez-yarn-timeline-history .......................... SKIPPED

[INFO] tez-yarn-timeline-history-with-acls ................ SKIPPED

[INFO] tez-history-parser ................................. SKIPPED

[INFO] tez-tools .......................................... SKIPPED

[INFO] tez-perf-analyzer .................................. SKIPPED

[INFO] tez-job-analyzer ................................... SKIPPED

[INFO] tez-dist ........................................... SKIPPED

[INFO] Tez ................................................ SKIPPED

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 01:23 min

[INFO] Finished at: 2017-06-06T15:30:48+08:00

[INFO] Final Memory: 64M/368M

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal org.codehaus.mojo:exec-maven-plugin:1.3.2:exec (Bower install) on project tez-ui: Command execution failed. Process exited with an error: 1 (Exit value: 1) -> [Help 1]

[ERROR]

改正:修改apache-tez-0.7.1-src/tez-ui/pom.xml文件,在<artifactId>exec-maven-plugin</artifactId>下面的arguments tag追加<argument>--allow-root</argument>,如下部分:

<plugin>

<artifactId>exec-maven-plugin</artifactId>

<groupId>org.codehaus.mojo</groupId>

<executions>

<execution>

<id>Bower install</id>

<phase>generate-sources</phase>

<goals>

<goal>exec</goal>

</goals>

<configuration>

<workingDirectory>${webappDir}</workingDirectory>

<executable>${node.executable}</executable>

<arguments>

<argument>node_modules/bower/bin/bower</argument>

<argument>install</argument>

<argument>--remove-unnecessary-resolutions=false</argument>

<argument>--allow-root</argument>

</arguments>

</configuration>

</execution>

编译成功

[INFO] --- build-helper-maven-plugin:1.8:maven-version (maven-version) @ tez-docs ---

[INFO]

[INFO] --- maven-jar-plugin:2.4:test-jar (default) @ tez-docs ---

[WARNING] JAR will be empty - no content was marked for inclusion!

[INFO] Building jar: /opt/programs/apache-tez-0.7.1-src/docs/target/tez-docs-0.7.1-tests.jar

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] tez ................................................ SUCCESS [ 0.821 s]

[INFO] tez-api ............................................ SUCCESS [ 7.644 s]

[INFO] tez-common ......................................... SUCCESS [ 0.388 s]

[INFO] tez-runtime-internals .............................. SUCCESS [ 0.578 s]

[INFO] tez-runtime-library ................................ SUCCESS [ 1.443 s]

[INFO] tez-mapreduce ...................................... SUCCESS [ 0.766 s]

[INFO] tez-examples ....................................... SUCCESS [ 0.237 s]

[INFO] tez-dag ............................................ SUCCESS [ 2.694 s]

[INFO] tez-tests .......................................... SUCCESS [ 0.609 s]

[INFO] tez-ui ............................................. SUCCESS [18:01 min]

[INFO] tez-plugins ........................................ SUCCESS [ 0.032 s]

[INFO] tez-yarn-timeline-history .......................... SUCCESS [ 0.470 s]

[INFO] tez-yarn-timeline-history-with-acls ................ SUCCESS [ 0.307 s]

[INFO] tez-history-parser ................................. SUCCESS [01:02 min]

[INFO] tez-tools .......................................... SUCCESS [ 0.016 s]

[INFO] tez-perf-analyzer .................................. SUCCESS [ 0.015 s]

[INFO] tez-job-analyzer ................................... SUCCESS [ 1.461 s]

[INFO] tez-dist ........................................... SUCCESS [ 20.955 s]

[INFO] Tez ................................................ SUCCESS [ 0.026 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 19:42 min

[INFO] Finished at: 2017-06-06T09:21:29+08:00

[INFO] Final Memory: 56M/462M

[INFO] ------------------------------------------------------------------------

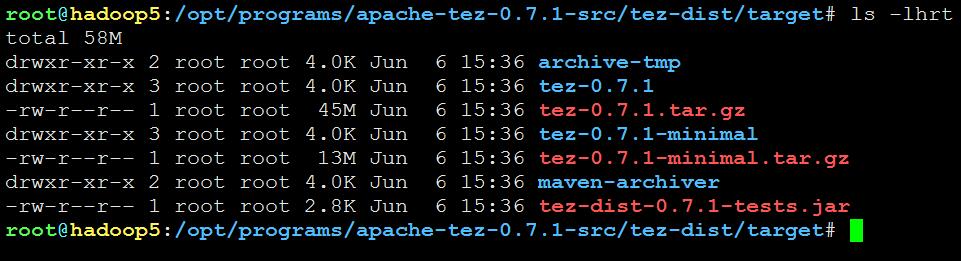

生成的文件

在apache-tez-0.7.1-src/tez-dist/target目录下

安装

创建tez部署目录

mkdir /opt/programs/tez_0.7.1/拷贝tez-dist/target/tez-0.7.1.tar.gz到部署目录并解压

cp /opt/programs/apache-tez-0.7.1-src/tez-dist/target/tez-0.7.1.tar.gz /opt/programs/tez_0.7.1/

cd /opt/programs/tez_0.7.1/

tar zxvf tez-0.7.1.tar.gz拷贝lzo包到解压后的lib目录

cp /usr/lib/hadoop/lib/hadoop-lzo.jar /opt/programs/tez_0.7.1/lib/

cp /usr/lib/hadoop/lib/hadoop-lzo-0.4.15-cdh5.5.1.jar /opt/programs/tez_0.7.1/lib/在/etc/hadoop/conf目录创建tez-site.xml配置文件(hadoop1-6)

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>tez.lib.uris</name>

<value>hdfs://dev-dalu:8020/user/tez/tez-0.7.1.tar.gz</value>

</property>

<property>

<name>tez.history.logging.service.class</name>

<value>org.apache.tez.dag.history.logging.ats.ATSHistoryLoggingService</value>

</property>

<property>

<name>tez.tez-ui.history-url.base</name>

<value>http://172.31.217.155:8080/tez-ui/</value>

</property>

<property>

<name>tez.use.cluster.hadoop-libs</name>

<value>false</value>

</property>

</configuration>

在/etc/hadoop/conf目录创建hadoop-env.sh文件(hadoop1-6)

export TEZ_HOME=/opt/programs/tez_0.7.1

export TEZ_CONF_DIR=/etc/hadoop/conf

for jar in `ls $TEZ_HOME |grep jar`; do

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$TEZ_HOME/$jar

done

for jar in `ls $TEZ_HOME/lib`; do

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$TEZ_HOME/lib/$jar

done

在hadoop5上启动timeline-service服务

yarn timelineserver调整yarn-site.xml配置文件(hadoop1-6)

<?xml version="1.0"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- RM Manager Configd -->

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>2000</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.embedded</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>dalu-rm-cluster</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>hadoop1,hadoop6</value>

</property>

<property>

<name>yarn.resourcemanager.ha.id</name>

<value>hadoop1</value>

</property>

<!--scheduler capacity -->

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>hadoop2:2181,hadoop3:2181,hadoop4:2181</value>

</property>

<property>

<name>yarn.resourcemanager.zk.state-store.address</name>

<value>hadoop2:2181,hadoop3:2181,hadoop4:2181</value>

</property>

<property>

<name>yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms</name>

<value>5000</value>

</property>

<!-- RM1 Configs-->

<property>

<name>yarn.resourcemanager.address.hadoop1</name>

<value>hadoop1:23140</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.hadoop1</name>

<value>hadoop1:23130</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.hadoop1</name>

<value>hadoop1:23189</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.hadoop1</name>

<value>hadoop1:23188</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.hadoop1</name>

<value>hadoop1:23125</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.hadoop1</name>

<value>hadoop1:23141</value>

</property>

<!-- RM2 Configs -->

<property>

<name>yarn.resourcemanager.address.hadoop6</name>

<value>hadoop6:23140</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.hadoop6</name>

<value>hadoop6:23130</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.hadoop6</name>

<value>hadoop6:23189</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.hadoop6</name>

<value>hadoop6:23188</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.hadoop6</name>

<value>hadoop6:23125</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.hadoop6</name>

<value>hadoop6:23141</value>

</property>

<!-- Node Manager Configs -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>2</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>86400</value>

</property>

<property>

<name>yarn.log-aggregation.retain-check-interval-seconds</name>

<value>8640</value>

</property>

<property>

<name>yarn.nodemanager.localizer.address</name>

<value>0.0.0.0:23344</value>

</property>

<property>

<name>yarn.nodemanager.webapp.address</name>

<value>0.0.0.0:23999</value>

</property>

<property>

<name>yarn.web-proxy.address</name>

<value>0.0.0.0:8080</value>

</property>

<property>

<name>mapreduce.shuffle.port</name>

<value>23080</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle,llama_nm_plugin</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.llama_nm_plugin.class</name>

<value>com.cloudera.llama.nm.LlamaNMAuxiliaryService</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.nodemanager.log-aggregation.roll-monitoring-interval-seconds</name>

<value>7200</value>

</property>

<property>

<name>yarn.log.server.url</name>

<value>http://hadoop5:19888/jobhistory/logs/</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>file:///opt/hadoop/yarn/dn</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>file:///opt/hadoop/yarn/logs</value>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>hdfs://dev-dalu:8020/var/log/hadoop-yarn/apps</value>

</property>

<property>

<name>yarn.web-proxy.address</name>

<value>hadoop5:41202</value>

</property>

<property>

<name>yarn.resourcemanager.delegation.key.update-interval</name>

<value>31536000000</value>

</property>

<property>

<name>yarn.resourcemanager.delegation.token.max-lifetime</name>

<value>31536000000</value>

</property>

<property>

<name>yarn.resourcemanager.delegation.token.renew-interval</name>

<value>31536000000</value>

</property>

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-vcores</name>

<value>1</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>4096</value>

</property>

<property>

<name>yarn.application.classpath</name>

<value>

$HADOOP_CONF_DIR,

$HADOOP_COMMON_HOME/*,$HADOOP_COMMON_HOME/lib/*,

$HADOOP_HDFS_HOME/*,$HADOOP_HDFS_HOME/lib/*,

$HADOOP_MAPRED_HOME/*,$HADOOP_MAPRED_HOME/lib/*,

$HADOOP_YARN_HOME/*,$HADOOP_YARN_HOME/lib/*

</value>

</property>

<!-- tez -->

<property>

<name>yarn.timeline-service.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.timeline-service.hostname</name>

<value>172.31.217.156</value>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.system-metrics-publisher.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.timeline-service.address</name>

<value>${yarn.timeline-service.hostname}:10200</value>

</property>

<property>

<name>yarn.timeline-service.webapp.address</name>

<value>${yarn.timeline-service.hostname}:8188</value>

</property>

<property>

<name>yarn.timeline-service.webapp.https.address</name>

<value>${yarn.timeline-service.hostname}:8190</value>

</property>

<property>

<name>yarn.timeline-service.handler-thread-count</name>

<value>10</value>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.allowed-origins</name>

<value>*</value>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.allowed-methods</name>

<value>GET,POST,HEAD,OPTIONS</value>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.allowed-headers</name>

<value>X-Requested-With,Content-Type,Accept,Origin,Access-Control-Allow-Origin</value>

</property>

<property>

<name>yarn.timeline-service.http-cross-origin.max-age</name>

<value>1800</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.cross-origin.enabled</name>

<value>true</value>

</property>

</configuration>

打包/opt/programs/tez_0.7.1/目录下文件为tez-0.7.1.tar.gz

cd /opt/programs/tez_0.7.1

tar czvf tez-0.7.1.tar.gz ./*hadoop上创建tez目录

hadoop fs -mkdir /user/tez上传/opt/programs/tez_0.7.1/tez-0.7.1.tar.gz到hadoop的/user/tez目录

hadoop fs -put /opt/programs/tez_0.7.1/tez-0.7.1.tar.gz /user/tez/拷贝tez-ui-0.7.1.war到tomcat的webapp目录,并解压

#拷贝

cp /opt/programs/apache-tez-0.7.1-src/tez-dist/target/tez-0.7.1/tez-ui-0.7.1.war /opt/programs/tomcat_7.0.68/webapps/

#解压tez-ui-0.7.1.war到tez-ui

cd /opt/programs/tomcat_7.0.68/webapps

jar -xvf tez-ui-0.7.1.war -C tez-ui修改tomcat_7.0.68/webapps/tez-ui/scripts/configs.js

timelineBaseUrl: 'http://172.31.217.156:8188',

RMWebUrl: 'http://172.31.217.151:21388',部署/opt/programs/tez_0.7.1/tez-0.7.1.tar.gz到整个集群(hadoop1-6)

重启RM/Job-history/NM

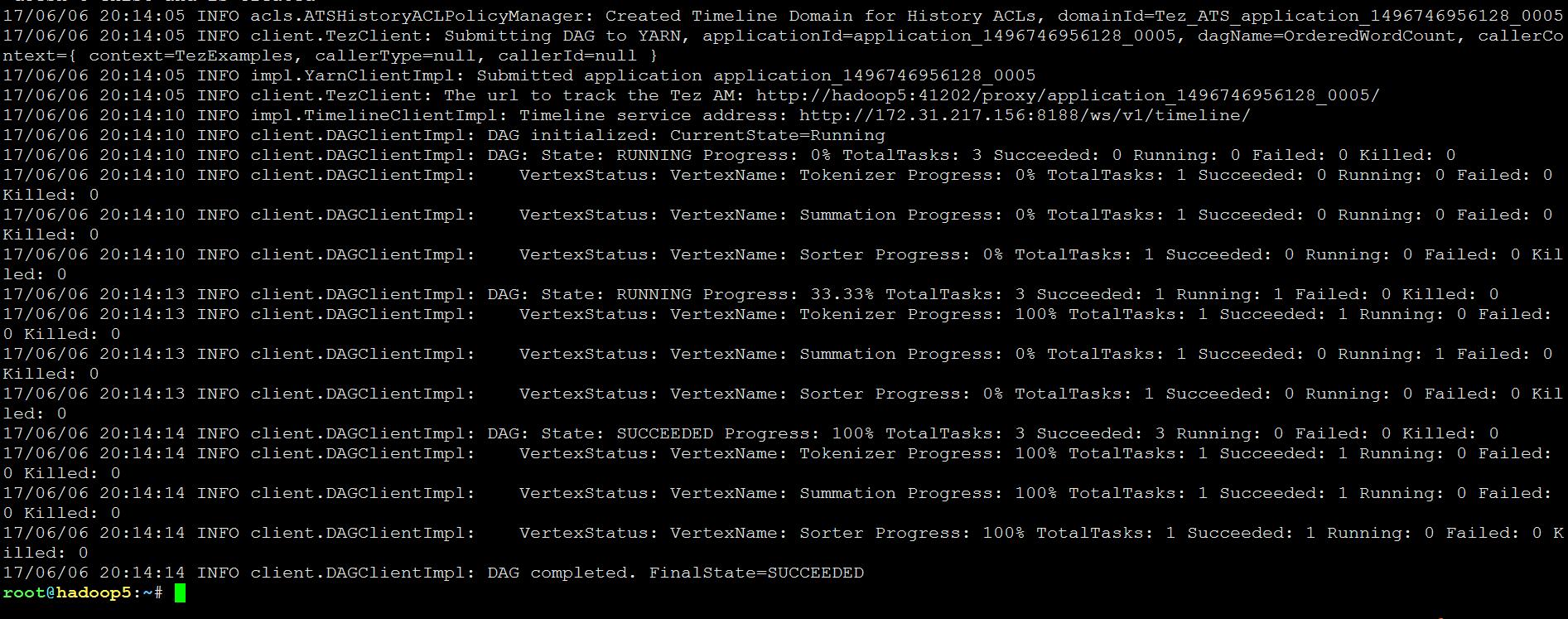

实验word count

在hadoop上创建相关输出目录

hadoop fs -mkdir /tmp/output上传计算文件

hadoop fs -put /opt/programs/apache-tez-0.7.1-src/LICENSE.txt /tmp/开始计算

hadoop jar /opt/programs/apache-tez-0.7.1-src/tez-dist/target/tez-0.7.1/tez-examples-0.7.1.jar orderedwordcount /tmp/LICENSE.txt /tmp/output计算过程

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/lib/zookeeper/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/programs/tez_0.7.1/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

17/06/06 20:14:03 INFO client.TezClient: Tez Client Version: [ component=tez-api, version=0.7.1, revision=${buildNumber}, SCM-URL=scm:git:https://git-wip-us.apache.org/repos/asf/tez.git, buildTime=2017-06-06T07:34:50Z ]

17/06/06 20:14:03 INFO impl.TimelineClientImpl: Timeline service address: http://172.31.217.156:8188/ws/v1/timeline/

17/06/06 20:14:04 INFO client.TezClient: Using org.apache.tez.dag.history.ats.acls.ATSHistoryACLPolicyManager to manage Timeline ACLs

17/06/06 20:14:04 INFO impl.TimelineClientImpl: Timeline service address: http://172.31.217.156:8188/ws/v1/timeline/

17/06/06 20:14:05 INFO examples.OrderedWordCount: Running OrderedWordCount

17/06/06 20:14:05 INFO client.TezClient: Submitting DAG application with id: application_1496746956128_0005

17/06/06 20:14:05 INFO client.TezClientUtils: Using tez.lib.uris value from configuration: hdfs://dev-dalu:8020/user/tez/tez-0.7.1.tar.gz

17/06/06 20:14:05 INFO client.TezClient: Tez system stage directory hdfs://dev-dalu:8020/tmp/root/tez/staging/.tez/application_1496746956128_0005 doesn't exist and is created

17/06/06 20:14:05 INFO acls.ATSHistoryACLPolicyManager: Created Timeline Domain for History ACLs, domainId=Tez_ATS_application_1496746956128_0005

17/06/06 20:14:05 INFO client.TezClient: Submitting DAG to YARN, applicationId=application_1496746956128_0005, dagName=OrderedWordCount, callerContext={ context=TezExamples, callerType=null, callerId=null }

17/06/06 20:14:05 INFO impl.YarnClientImpl: Submitted application application_1496746956128_0005

17/06/06 20:14:05 INFO client.TezClient: The url to track the Tez AM: http://hadoop5:41202/proxy/application_1496746956128_0005/

17/06/06 20:14:10 INFO impl.TimelineClientImpl: Timeline service address: http://172.31.217.156:8188/ws/v1/timeline/

17/06/06 20:14:10 INFO client.DAGClientImpl: DAG initialized: CurrentState=Running

17/06/06 20:14:10 INFO client.DAGClientImpl: DAG: State: RUNNING Progress: 0% TotalTasks: 3 Succeeded: 0 Running: 0 Failed: 0 Killed: 0

17/06/06 20:14:10 INFO client.DAGClientImpl: VertexStatus: VertexName: Tokenizer Progress: 0% TotalTasks: 1 Succeeded: 0 Running: 0 Failed: 0 Killed: 0

17/06/06 20:14:10 INFO client.DAGClientImpl: VertexStatus: VertexName: Summation Progress: 0% TotalTasks: 1 Succeeded: 0 Running: 0 Failed: 0 Killed: 0

17/06/06 20:14:10 INFO client.DAGClientImpl: VertexStatus: VertexName: Sorter Progress: 0% TotalTasks: 1 Succeeded: 0 Running: 0 Failed: 0 Killed: 0

17/06/06 20:14:13 INFO client.DAGClientImpl: DAG: State: RUNNING Progress: 33.33% TotalTasks: 3 Succeeded: 1 Running: 1 Failed: 0 Killed: 0

17/06/06 20:14:13 INFO client.DAGClientImpl: VertexStatus: VertexName: Tokenizer Progress: 100% TotalTasks: 1 Succeeded: 1 Running: 0 Failed: 0 Killed: 0

17/06/06 20:14:13 INFO client.DAGClientImpl: VertexStatus: VertexName: Summation Progress: 0% TotalTasks: 1 Succeeded: 0 Running: 1 Failed: 0 Killed: 0

17/06/06 20:14:13 INFO client.DAGClientImpl: VertexStatus: VertexName: Sorter Progress: 0% TotalTasks: 1 Succeeded: 0 Running: 0 Failed: 0 Killed: 0

17/06/06 20:14:14 INFO client.DAGClientImpl: DAG: State: SUCCEEDED Progress: 100% TotalTasks: 3 Succeeded: 3 Running: 0 Failed: 0 Killed: 0

17/06/06 20:14:14 INFO client.DAGClientImpl: VertexStatus: VertexName: Tokenizer Progress: 100% TotalTasks: 1 Succeeded: 1 Running: 0 Failed: 0 Killed: 0

17/06/06 20:14:14 INFO client.DAGClientImpl: VertexStatus: VertexName: Summation Progress: 100% TotalTasks: 1 Succeeded: 1 Running: 0 Failed: 0 Killed: 0

17/06/06 20:14:14 INFO client.DAGClientImpl: VertexStatus: VertexName: Sorter Progress: 100% TotalTasks: 1 Succeeded: 1 Running: 0 Failed: 0 Killed: 0

17/06/06 20:14:14 INFO client.DAGClientImpl: DAG completed. FinalState=SUCCEEDED

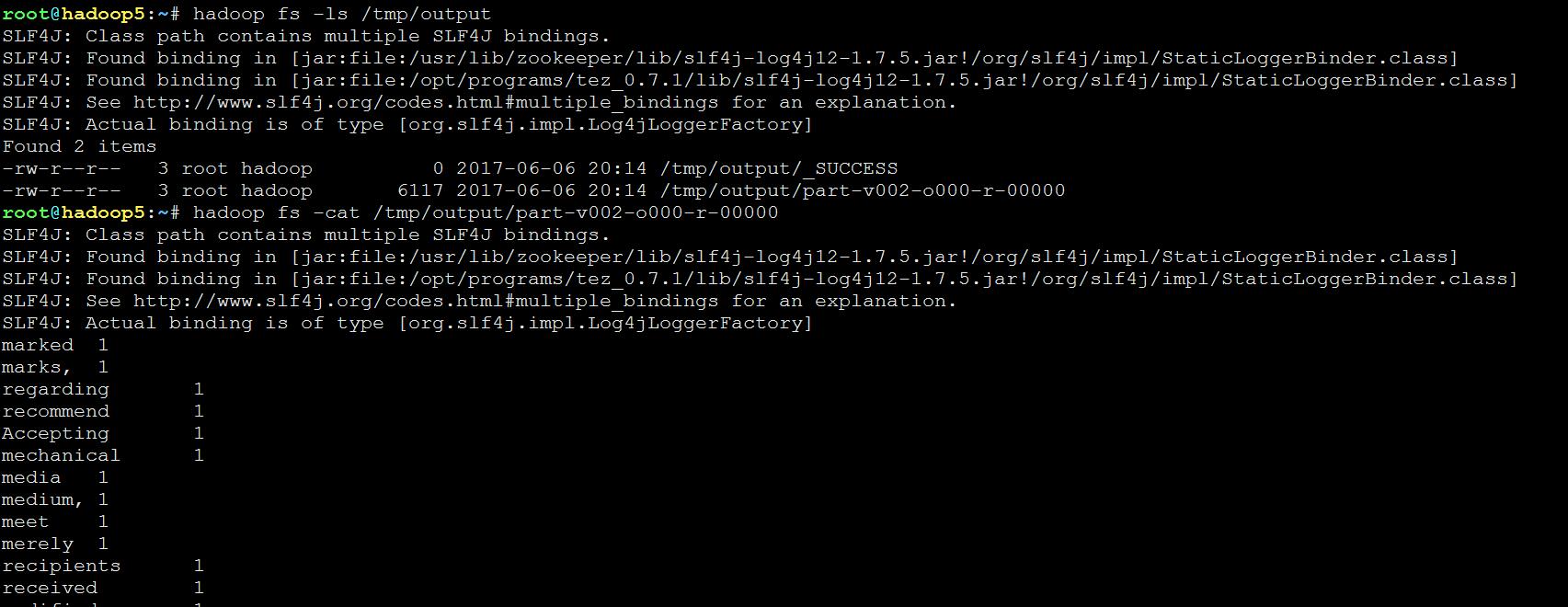

查看结果

hadoop fs -ls /tmp/output

hadoop fs -cat /tmp/output/part-v002-o000-r-00000

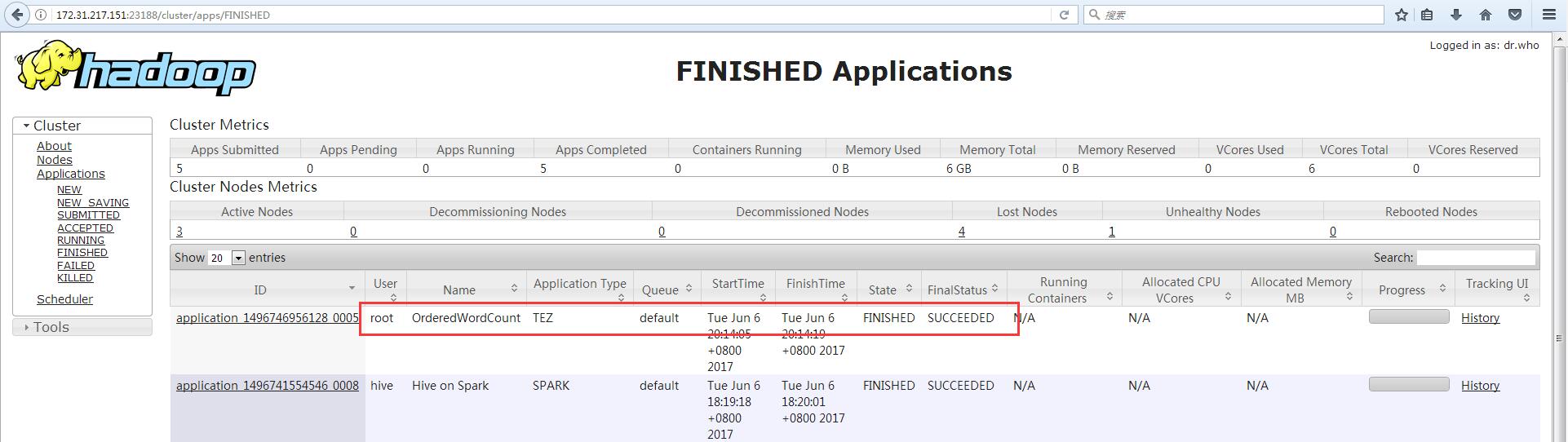

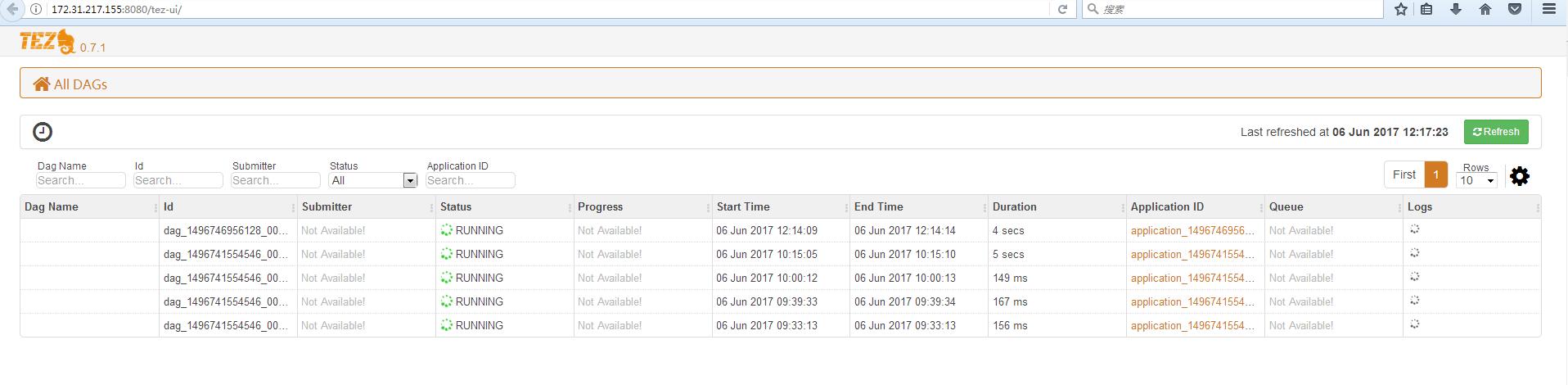

WEB显示

Tez-ui

438

438

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?