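1. Environment: Test1: 10.253.1.231, Test2: 10.253.1.232, Test3: 10.253.1.233. The three machines host an ES cluster, a Kafka cluster and a zk cluster; fluentd (td-agent) is installed to collect logs, and Kibana is installed to visualize the data.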

Set up the ES cluster for persistent storage

**1. Install the ES package (same steps on all three machines)**

[root@test3 ~]# yum -y install java-1.7.0

[root@test3 ~]# yum -y localinstall elasticsearch-1.7.3.noarch.rpm

2. Recommended: install the following cluster-management plugins

# head

/usr/share/elasticsearch/bin/plugin install mobz/elasticsearch-head

# bigdesk

/usr/share/elasticsearch/bin/plugin install hlstudio/bigdesk

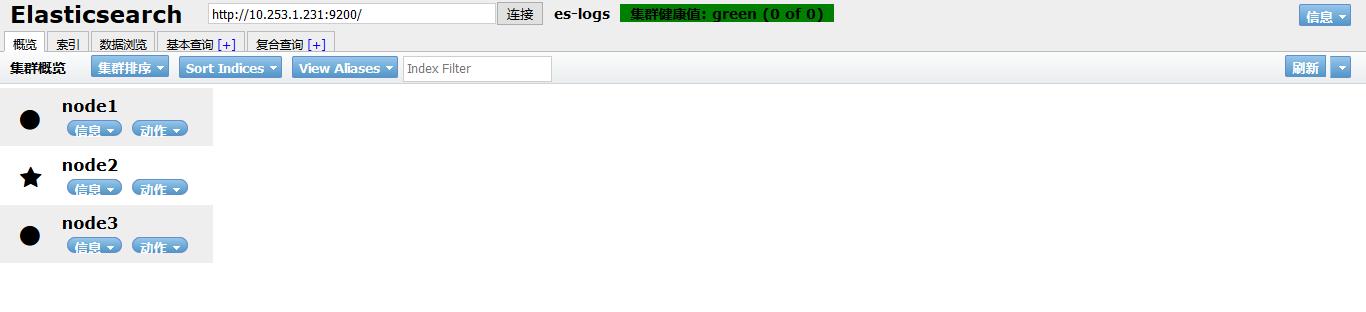

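Install the plugins on one machine; for the other two, you can copy the corresponding directories from that machine's ES plugin directory into their ES plugin directories.

3. Check plugin status

Visit http://ip:9200/_plugin/<plugin name> directly to view a plugin. In the head cluster management view, a star marks the node that is currently master.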

Set up the ZooKeeper cluster

1. Install

[root@test1 ~]# tar -xzf zookeeper-3.4.8.tar.gz

[root@test1 ~]# mv zookeeper-3.4.8 /usr/local/zookeeper

[root@test1 ~]# cd /usr/local/zookeeper/

2. Configure zk

[root@test1 conf]# cp zoo_sample.cfg zoo.cfg

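Modify the configuration as follows. First create a directory to hold the zk server's data (on all three machines):

[root@test2 zookeeper]# mkdir -p /var/zookeeper/data

The zk configuration file is as follows: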

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/var/zookeeper/data

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

server.1=10.253.1.231:2888:3888

server.2=10.253.1.232:2888:3888

server.3=10.253.1.233:2888:3888

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

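Create a myid file in the data directory and write the server id into it:

# 10.253.1.231

echo 1 >/var/zookeeper/data/myid

# 10.253.1.232

echo 2 >/var/zookeeper/data/myid

# 10.253.1.233

echo 3 >/var/zookeeper/data/myid

Note: the id must match the N of that host's server.N entry in the configuration file.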
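Since the id has to agree with the server.N lines, one way to avoid typing it by hand is to look it up from zoo.cfg. The following is a minimal sketch, not part of ZooKeeper itself; `myid_for_ip` is a hypothetical helper name, and it assumes the `server.N=host:port:port` layout shown in the configuration above.

```shell
#!/usr/bin/env bash
# Sketch: derive a host's myid from the server.N lines of zoo.cfg.
# myid_for_ip is a hypothetical helper, not a ZooKeeper tool.
myid_for_ip() {
  local cfg=$1 ip=$2
  # Lines look like: server.2=10.253.1.232:2888:3888
  awk -F= -v ip="$ip" '
    /^server\.[0-9]+=/ {
      split($2, a, ":")            # a[1] is the host part
      if (a[1] == ip) {
        sub(/^server\./, "", $1)   # keep only the numeric id
        print $1
      }
    }' "$cfg"
}

# Usage on each node (paths taken from the steps above):
#   myid_for_ip /usr/local/zookeeper/conf/zoo.cfg 10.253.1.232 > /var/zookeeper/data/myid
```

If the function prints nothing, the address is not listed in zoo.cfg, which is worth fixing before starting the server.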

3. Start the service and check the node status

# 10.253.1.231

bin/zkServer.sh start

bin/zkServer.sh status

# 10.253.1.232

bin/zkServer.sh start

bin/zkServer.sh status

# 10.253.1.233

bin/zkServer.sh start

bin/zkServer.sh status

The ZooKeeper cluster is now set up.

Configure ZooKeeper to start at boot

[root@test1 init.d]# cd /etc/rc.d/init.d/

[root@test1 init.d]# vi zookeeper

Contents of the zookeeper script:

#!/bin/bash

# chkconfig: 2345 20 90

# description: zookeeper

# processname: zookeeper

case "$1" in

start) su root /usr/local/zookeeper/bin/zkServer.sh start;;

stop) su root /usr/local/zookeeper/bin/zkServer.sh stop;;

status) su root /usr/local/zookeeper/bin/zkServer.sh status;;

restart) su root /usr/local/zookeeper/bin/zkServer.sh restart;;

*) echo "require start|stop|status|restart";;

esac

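Make the zookeeper init script executable

[root@test1 init.d]# chmod +x zookeeper

Enable start at boot

[root@test1 init.d]# chkconfig --add zookeeper

[root@test1 init.d]# chkconfig --list

From now on the service can be started, stopped, restarted and queried with the service command: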

[root@test1 ~]# service zookeeper status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Mode: follower
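A healthy three-node ensemble reports exactly one leader and two followers. The sketch below shows one way to check that from the status output; `zk_mode` and `check_quorum` are hypothetical helper names, and the ssh loop in the usage comment is an assumption about how the nodes are reached.

```shell
#!/usr/bin/env bash
# zk_mode and check_quorum are hypothetical helpers for illustration.

# Extract the value of the "Mode:" line from `zkServer.sh status` output on stdin.
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Given one mode per argument, succeed only if exactly one node is the leader
# and every other node is a follower. An empty or unknown mode fails the check.
check_quorum() {
  local leaders=0 mode
  for mode in "$@"; do
    case $mode in
      leader)   leaders=$((leaders + 1)) ;;
      follower) ;;
      *)        return 1 ;;
    esac
  done
  [ "$leaders" -eq 1 ]
}

# Usage (assumes root ssh access to all three nodes):
#   modes=()
#   for h in 10.253.1.231 10.253.1.232 10.253.1.233; do
#     modes+=("$(ssh "root@$h" service zookeeper status 2>&1 | zk_mode)")
#   done
#   check_quorum "${modes[@]}" && echo "quorum OK"
```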

Set up the Kafka broker cluster

**1. Install**

[root@test1 local]# tar -xf kafka_2.11-0.10.0.0.tgz

[root@test1 local]# mv kafka_2.11-0.10.0.0 /usr/local/kafka

**2. Edit the configuration file**

[root@test1 local]# vi /usr/local/kafka/config/server.properties

The configuration file is as follows:

############################ Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=1

# FORMAT:

# listeners = security_protocol://host_name:port

# EXAMPLE:

# listeners = PLAINTEXT://your.host.name:9092

#listeners=PLAINTEXT://:9092

# The number of threads handling network requests

num.network.threads=3

# The number of threads doing disk I/O

num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server

socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/tmp/kafka-logs

# The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

num.partitions=1

#log.flush.interval.ms=1000

# A size-based retention policy for logs. Segments are pruned from the log as long as the remaining

# segments don't drop below log.retention.bytes.

#log.retention.bytes=1073741824

# The maximum size of a log segment file. When this size is reached a new log segment will be created.

log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can be deleted according

# to the retention policies

log.retention.check.interval.ms=300000

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

# Note: consumers must use the same value (including the chroot) in consumer.properties.

zookeeper.connect=10.253.1.231:2181,10.253.1.232:2181,10.253.1.233:2181/chroot/var/zookeeper/data

zookeeper.connection.timeout.ms=6000
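Because the broker and its consumers must agree on zookeeper.connect, a quick comparison of the two files can catch a mismatch early. This is only a sketch: `prop_value` and `same_zk_connect` are hypothetical helpers, and the paths in the usage comment assume the /usr/local/kafka layout used in this article.

```shell
#!/usr/bin/env bash
# prop_value is a hypothetical helper: print the value of one key
# from a Java .properties file (first uncommented match wins).
prop_value() {
  local file=$1 key=$2
  awk -F= -v k="$key" '$1 == k { sub(/^[^=]*=/, ""); print; exit }' "$file"
}

# Succeed only if both files define zookeeper.connect with the same value.
same_zk_connect() {
  local a b
  a=$(prop_value "$1" zookeeper.connect)
  b=$(prop_value "$2" zookeeper.connect)
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Usage:
#   same_zk_connect /usr/local/kafka/config/server.properties \
#                   /usr/local/kafka/config/consumer.properties \
#     || echo "zookeeper.connect differs between broker and consumer"
```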

Create the directory /var/zookeeper/data on all machines.

Copy the kafka directory to 10.253.1.232 and 10.253.1.233:

[root@test1 local]# scp -r /usr/local/kafka root@test2:/usr/local/

[root@test1 local]# scp -r /usr/local/kafka root@test3:/usr/local/

Change broker.id in the Kafka configuration file on 232 and 233:

# 10.253.1.232

broker.id=2

# 10.253.1.233

broker.id=3
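After copying the directory, only the broker.id line differs between hosts, so the edit can be scripted instead of done in vi. A minimal sketch follows; `set_broker_id` is a hypothetical helper, and it assumes the file already contains an uncommented `broker.id=` line, as the configuration above does.

```shell
#!/usr/bin/env bash
# set_broker_id is a hypothetical helper: rewrite the broker.id line in place.
# It goes through a temporary file instead of `sed -i` so that it behaves the
# same with GNU and BSD sed, and writes back with cat to keep file permissions.
set_broker_id() {
  local file=$1 id=$2 tmp rc
  tmp=$(mktemp) || return 1
  sed "s/^broker\.id=.*/broker.id=${id}/" "$file" > "$tmp" && cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return $rc
}

# Usage on 10.253.1.232:
#   set_broker_id /usr/local/kafka/config/server.properties 2
# Usage on 10.253.1.233:
#   set_broker_id /usr/local/kafka/config/server.properties 3
```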

3. Start the Kafka service

[root@test1 kafka]# bin/kafka-server-start.sh config/server.properties &

4. Configure Kafka to start at boot

[root@test1 ~]# cd /etc/rc.d/init.d/

[root@test1 init.d]# vi kafka

#!/bin/bash

# chkconfig: 2345 30 80

# description: kafka

# processname: kafka

# -daemon starts the broker in the background so the init script returns.

case "$1" in

start) /usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties;;

stop) /usr/local/kafka/bin/kafka-server-stop.sh;;

restart) /usr/local/kafka/bin/kafka-server-stop.sh

/usr/local/kafka/bin/kafka-server-start.sh -daemon /usr/local/kafka/config/server.properties

;;

*) echo "require start|stop|restart" ;;

esac

Add execute permission

[root@test1 init.d]# chmod +x kafka

Enable start at boot

[root@test1 init.d]# chkconfig --add kafka

[root@test1 init.d]# chkconfig --list

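5. Install and configure the Kafka monitoring tool Kafka Manager

1. Requirements: Java 1.8.0 or later; an existing Kafka cluster and zk cluster; SBT as well if you build from source.

2. Install sbt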

# wget https://dl.bintray.com/sbt/native-packages/sbt/0.13.11/sbt-0.13.11.zip

# unzip sbt-0.13.11.zip

# cd sbt

sbt now lives in /usr/local/sbt; add it to PATH.

vi /etc/profile

...

...

export SBT_HOME=/usr/local/sbt/

export PATH=$SBT_HOME/bin:$PATH

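3. Build: download the source from GitHub

#git clone https://github.com/yahoo/kafka-manager.git

Enter the kafka-manager directory and run the following command. The build takes a long time and needs network access to download the required libraries.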

#sbt clean dist

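When the build finishes, a .zip package is produced under target/universal/ in the kafka-manager directory; this package is used to deploy kafka-manager.

4. Configure kafka-manager: unzip the .zip package and edit its .conf file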

#vim application.conf

kafka-manager.zkhosts="${IP}:2181" // zk address; use the same zk hosts as in the Kafka configuration.

5. Start kafka-manager

./bin/kafka-manager -Dconfig.file=conf/application.conf -Dhttp.port=8180 > /dev/null 2>&1 &

-Dconfig.file specifies the configuration file and -Dhttp.port specifies the port; the default port is 9000.

6. Web access: http://ip:port

Set up fluentd to collect logs

1. Install td-agent and its plugins

curl -L https://toolbelt.treasuredata.com/sh/install-redhat-td-agent2.sh | sh

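Install the kafka plugin. td-agent ships its own Ruby, so plugins are installed with td-agent-gem rather than the system gem:

td-agent-gem install fluent-plugin-kafka

This article installs online; you can also download the package or a zip archive and install from that.

2. Configure the td-agent service: in the configuration file (/etc/td-agent/td-agent.conf by default), define a source (this article collects /var/log/messages) and configure the output so that events are handed to Kafka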

<source>

@type tail

path /var/log/messages

pos_file /var/log/td-agent/messages.log.pos

tag messages.log

format /^(?<time>[^ ]*\s*[^ ]* [^ ]*) (?<host>[^ ]*) (?<ident>[a-zA-Z0-9_\/\.\-]*)(?:\[(?<pid>[0-9]+)\])?(?:[^\:]*\:)? *(?<message>.*)$/

time_format %b %d %H:%M:%S

</source>

<match messages.**>

@type kafka

brokers 10.253.1.233:9092,10.253.1.232:9092,10.253.1.231:9092

# see the note after this block about creating the topic

default_topic test

output_data_type json

output_include_tag false

output_include_time false

</match>
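The `default_topic` used in the match block can be created ahead of time with Kafka's own `kafka-topics.sh`. The sketch below only prints the command so it can be reviewed first; `topic_create_cmd` is a hypothetical helper, the partition and replica counts of 3 are assumptions for a three-broker cluster, and the ZooKeeper string is the zookeeper.connect value from server.properties.

```shell
#!/usr/bin/env bash
# Assumed values; adjust to your cluster.
KAFKA_HOME=/usr/local/kafka
ZK_CONNECT=10.253.1.231:2181,10.253.1.232:2181,10.253.1.233:2181/chroot/var/zookeeper/data

# topic_create_cmd is a hypothetical helper: print the kafka-topics.sh
# command line that would create the given topic.
topic_create_cmd() {
  local topic=$1 partitions=$2 replicas=$3
  printf '%s/bin/kafka-topics.sh --create --zookeeper %s --replication-factor %s --partitions %s --topic %s\n' \
    "$KAFKA_HOME" "$ZK_CONNECT" "$replicas" "$partitions" "$topic"
}

# Print the command for review; run the printed line on one broker to create the topic.
topic_create_cmd test 3 3
```

The replication factor cannot exceed the number of brokers, so 3 is the upper bound for this cluster.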

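Note: the topic named in the configuration above may be created in Kafka beforehand or not; creating it in advance, with its partitions and replicas planned, is recommended.

3. Start the service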

/etc/init.d/td-agent start

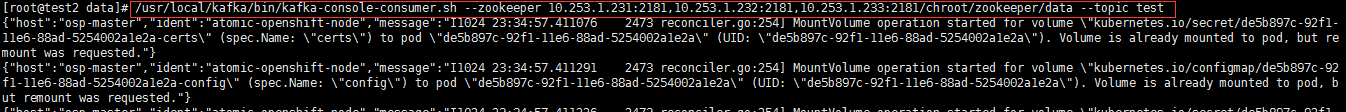

4. Verify on a Kafka broker

/usr/local/kafka/bin/kafka-console-consumer.sh --zookeeper 10.253.1.231:2181,10.253.1.232:2181,10.253.1.233:2181/chroot/var/zookeeper/data --topic test

The Kafka consumer should consume the messages normally.

Set up an intermediate fluentd to write the Kafka data into the Elasticsearch cluster

1. Install the service: the installation is the same as above.

2. Install the kafka plugin and the elasticsearch plugin

td-agent-gem install fluent-plugin-kafka

td-agent-gem install fluent-plugin-elasticsearch

3. Write the configuration file

<source>

@type kafka

brokers 10.253.1.231:9092,10.253.1.232:9092,10.253.1.233:9092

topics test

offset_zookeeper 10.253.1.231:2181,10.253.1.232:2181,10.253.1.233:2181

offset_zk_root_node /zookeeper/data

</source>

<match test.**>

@type elasticsearch

host 10.253.1.232

port 9200

scheme http

index_name kafka-es

</match>

4. Start the service

[root@test3 ~]# systemctl enable td-agent

[root@test3 ~]# systemctl start td-agent

5. Check in ES that the data has been written
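One simple check is to ask ES how many documents the index holds through its `_count` endpoint. The sketch below parses that response; `es_doc_count` is a hypothetical helper, and the host and index name in the usage comment are the `host` and `index_name` values from the match block above.

```shell
#!/usr/bin/env bash
# es_doc_count is a hypothetical helper: read an Elasticsearch _count
# response (JSON) on stdin and print just the document count.
es_doc_count() {
  sed -n 's/.*"count":\([0-9][0-9]*\).*/\1/p'
}

# Usage:
#   curl -s 'http://10.253.1.232:9200/kafka-es/_count' | es_doc_count
# A number that keeps growing as new lines reach /var/log/messages
# suggests that the whole pipeline is delivering events.
```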

6. Install Kibana to visualize your data.
