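Reinstall and deploy Hadoop in large-cluster mode for a production environment. Requirements:

1) Resolve hostnames with DNS rather than the hosts file

2) Share the key files over NFS instead of copying and adding keys by hand on each host

3) Copy Hadoop with a batch-copy script rather than host by host

4) Give the cluster HA and/or HDFS Federation features

I. Software environment

- Operating system: 64-bit CentOS 6.3 in a virtual machine

- Hadoop version: hadoop 2.3.0 (compiled locally; see my blog post: https://my.oschina.net/TaoPengFeiBlog/blog/795191)

- Auxiliary tool: Xshell 5

II. Installing the JDK

For the detailed installation steps, see my blog post: https://my.oschina.net/TaoPengFeiBlog/blog/794311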

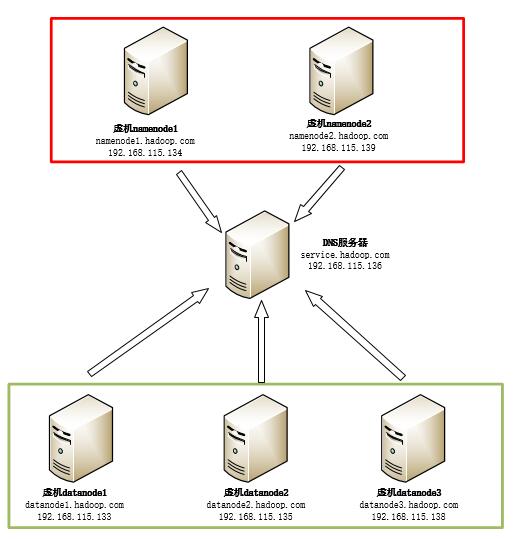

III. Setting up the DNS server

- Change the hostname of every host in the cluster (/etc/hosts):

192.168.115.136 service.hadoop.com

192.168.115.134 namenode1.hadoop.com

192.168.115.139 namenode2.hadoop.com

192.168.115.133 datanode1.hadoop.com

192.168.115.138 datanode2.hadoop.com

192.168.115.135 datanode3.hadoop.com

- Install the BIND packages and check that they are installed:

[root@localhost grid]# yum -y install bind bind-utils bind-chroot

................

[root@localhost grid]# rpm -qa |grep '^bind'

bind-utils-9.8.2-0.47.rc1.el6_8.3.x86_64

bind-libs-9.8.2-0.47.rc1.el6_8.3.x86_64

bind-9.8.2-0.47.rc1.el6_8.3.x86_64

bind-chroot-9.8.2-0.47.rc1.el6_8.3.x86_64

- Edit /etc/named.conf:

[root@service Desktop]# vi /etc/named.conf

options {

listen-on port 53 { any; };

listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

allow-query { any; };

recursion yes;

dnssec-enable yes;

dnssec-validation yes;

/* Path to ISC DLV key */

bindkeys-file "/etc/named.iscdlv.key";

managed-keys-directory "/var/named/dynamic";

};

- Edit /etc/named.rfc1912.zones and add the two zones below (they can also be written directly in named.conf):

[root@service Desktop]# vi /etc/named.rfc1912.zones

zone "hadoop.com" IN {

type master;

file "named.hadoop.com";

allow-update { none; };

};

zone "115.168.192.in-addr.arpa" IN {

type master;

file "named.192.168.115.zone";

allow-update { none; };

};

- Configure the forward lookup zone file: go to /var/named, then copy and edit a template:

[root@service Desktop]# cd /var/named/

[root@service named]# ls

chroot data dynamic named.ca named.empty named.localhost named.loopback slaves

[root@service named]# cp -p named.localhost named.hadoop.com

[root@service named]# ls

chroot data dynamic named.ca named.empty named.hadoop.com named.localhost named.loopback slaves

[root@service named]# vi named.hadoop.com

$TTL 1D

@ IN SOA service.hadoop.com. grid.service.hadoop.com. (

0 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

@ IN NS service.hadoop.com.

service.hadoop.com. IN A 192.168.115.136

namenode1.hadoop.com. IN A 192.168.115.134

namenode2.hadoop.com. IN A 192.168.115.139

datanode1.hadoop.com. IN A 192.168.115.133

datanode2.hadoop.com. IN A 192.168.115.138

datanode3.hadoop.com. IN A 192.168.115.135

- Configure the reverse lookup zone file: in /var/named, copy and edit a template:

[root@service named]# cp -p named.localhost named.192.168.115.zone

[root@service named]# vi named.192.168.115.zone

$TTL 1D

@ IN SOA service.hadoop.com. grid.service.hadoop.com. (

0 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

@ IN NS service.hadoop.com.

136 IN PTR service.hadoop.com.

134 IN PTR namenode1.hadoop.com.

139 IN PTR namenode2.hadoop.com.

133 IN PTR datanode1.hadoop.com.

138 IN PTR datanode2.hadoop.com.

135 IN PTR datanode3.hadoop.com.
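Typing the A and PTR records by hand is where a wrong address or a repeated host name tends to slip in. As a minimal sketch, assuming the same "IP hostname" table that is used for /etc/hosts in this post, the records for both zone files could be generated instead. The function names `hosts_table`, `forward_records` and `reverse_records` are hypothetical helpers, not part of BIND.

```shell
#!/bin/sh
# Hypothetical helper: turn an "IP hostname" table into zone-file records.
# The table mirrors the host list used in this post.
hosts_table() {
cat <<'EOF'
192.168.115.136 service.hadoop.com
192.168.115.134 namenode1.hadoop.com
192.168.115.139 namenode2.hadoop.com
192.168.115.133 datanode1.hadoop.com
192.168.115.138 datanode2.hadoop.com
192.168.115.135 datanode3.hadoop.com
EOF
}

# Forward (A) records: "name. IN A ip"
forward_records() {
    awk 'NF >= 2 { printf "%s. IN A %s\n", $2, $1 }'
}

# Reverse (PTR) records for a /24 zone: "last-octet IN PTR name."
reverse_records() {
    awk 'NF >= 2 { n = split($1, o, "[.]"); printf "%s IN PTR %s.\n", o[n], $2 }'
}

# Print both record sets; paste them below the NS line of each zone file.
hosts_table | forward_records
hosts_table | reverse_records
```

The SOA and NS lines still have to be written by hand; the sketch only covers the per-host records.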

- Add the DNS server IP: on every node, add the server's IP address to /etc/sysconfig/network-scripts/ifcfg-eth0, and edit /etc/resolv.conf to add the DNS server IP:

[root@namenode2 Desktop]# cat /etc/resolv.conf

# Generated by NetworkManager

domain localdomain

search localdomain

nameserver 192.168.115.136
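Since this edit has to be repeated on every node, it helps if it can be run more than once without piling up duplicate lines. A minimal sketch, assuming a hypothetical helper named `ensure_nameserver` that takes the file path and the DNS server IP as arguments:

```shell
#!/bin/sh
# ensure_nameserver FILE IP  (hypothetical helper)
# Appends "nameserver IP" to FILE unless that exact line is already present.
ensure_nameserver() {
    file=$1
    ip=$2
    # -F: fixed string (dots are literal), -x: match the whole line
    if grep -qxF "nameserver $ip" "$file" 2>/dev/null; then
        return 0
    fi
    printf 'nameserver %s\n' "$ip" >> "$file"
}

# Usage (as root, on each node):
#   ensure_nameserver /etc/resolv.conf 192.168.115.136
```

The resolv.conf shown above is marked as generated by NetworkManager, so a manual edit may be overwritten; that is presumably why the DNS address is also placed in ifcfg-eth0.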

- Before testing, turn off the firewall on every host in the cluster:

# Stop:

service iptables stop

# Check status:

service iptables status

# Do not start at boot:

chkconfig iptables off

# Start at boot:

chkconfig iptables on

- Start DNS and enable it at boot:

service named start

chkconfig named on

[root@service Desktop]# chkconfig --list named

named 0:off 1:off 2:on 3:on 4:on 5:on 6:off

- Verify and test:

[root@namenode1 Desktop]# nslookup namenode1.hadoop.com

Server: 192.168.115.128

Address: 192.168.115.128#53

Name: namenode1.hadoop.com

Address: 192.168.115.137

[root@namenode1 Desktop]# nslookup 192.168.115.137

Server: 192.168.115.128

Address: 192.168.115.128#53

137.115.168.192.in-addr.arpa name = namenode1.hadoop.com.

[root@namenode1 Desktop]# ping namenode2.hadoop.com

PING namenode2.hadoop.com (192.168.115.130) 56(84) bytes of data.

64 bytes from namenode2.hadoop.com (192.168.115.130): icmp_seq=1 ttl=64 time=2.21 ms

64 bytes from namenode2.hadoop.com (192.168.115.130): icmp_seq=2 ttl=64 time=0.220 ms

64 bytes from namenode2.hadoop.com (192.168.115.130): icmp_seq=3 ttl=64 time=0.489 ms

64 bytes from namenode2.hadoop.com (192.168.115.130): icmp_seq=4 ttl=64 time=0.484 ms

[root@service Desktop]# tail -n 30 /var/log/messages |grep named

Dec 17 22:18:46 service named[7303]: listening on IPv4 interface eth3, 192.168.115.128#53

Dec 17 22:18:46 service named[7303]: listening on IPv6 interface lo, ::1#53

Dec 17 22:18:46 service named[7303]: generating session key for dynamic DNS

Dec 17 22:18:46 service named[7303]: sizing zone task pool based on 8 zones

Dec 17 22:18:46 service named[7303]: set up managed keys zone for view _default, file '/var/named/dynamic/managed-keys.bind'

Dec 17 22:18:46 service named[7303]: Warning: 'empty-zones-enable/disable-empty-zone' not set: disabling RFC 1918 empty zones

Dec 17 22:18:46 service named[7303]: automatic empty zone: 127.IN-ADDR.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: 254.169.IN-ADDR.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: 2.0.192.IN-ADDR.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: 100.51.198.IN-ADDR.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: 113.0.203.IN-ADDR.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: 255.255.255.255.IN-ADDR.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: 0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.IP6.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: D.F.IP6.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: 8.E.F.IP6.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: 9.E.F.IP6.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: A.E.F.IP6.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: B.E.F.IP6.ARPA

Dec 17 22:18:46 service named[7303]: automatic empty zone: 8.B.D.0.1.0.0.2.IP6.ARPA

Dec 17 22:18:46 service named[7303]: command channel listening on 127.0.0.1#953

Dec 17 22:18:46 service named[7303]: command channel listening on ::1#953

Dec 17 22:18:46 service named[7303]: zone 0.in-addr.arpa/IN: loaded serial 0

Dec 17 22:18:46 service named[7303]: zone 1.0.0.127.in-addr.arpa/IN: loaded serial 0

Dec 17 22:18:46 service named[7303]: zone 115.168.192.in-addr.arpa/IN: loaded serial 0

Dec 17 22:18:46 service named[7303]: zone 1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.ip6.arpa/IN: loaded serial 0

Dec 17 22:18:46 service named[7303]: zone hadoop.com/IN: loaded serial 0

Dec 17 22:18:46 service named[7303]: zone localhost.localdomain/IN: loaded serial 0

Dec 17 22:18:46 service named[7303]: zone localhost/IN: loaded serial 0

Dec 17 22:18:46 service named[7303]: managed-keys-zone ./IN: loaded serial 2

Dec 17 22:18:46 service named[7303]: running
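Checking each name by hand with nslookup gets tedious as the cluster grows. The sketch below compares what DNS returns against an expected "IP hostname" table and flags any mismatch. It assumes `dig` from the bind-utils package installed earlier; `verify_dns` and the `LOOKUP` variable are hypothetical names introduced here for illustration.

```shell
#!/bin/sh
# LOOKUP is the command used to resolve a name to an address.
# Assumed default: dig +short (bind-utils). Override it to use another resolver.
LOOKUP=${LOOKUP:-"dig +short"}

# verify_dns reads "IP hostname" lines on stdin and reports each result.
# Returns non-zero if any name resolves to something other than the expected IP.
verify_dns() {
    status=0
    while read -r ip name; do
        [ -n "$name" ] || continue
        # $LOOKUP is left unquoted on purpose so "dig +short" splits into words
        actual=$($LOOKUP "$name" </dev/null | head -n 1)
        if [ "$actual" = "$ip" ]; then
            echo "OK   $name -> $ip"
        else
            echo "FAIL $name: expected $ip, got ${actual:-nothing}"
            status=1
        fi
    done
    return $status
}

# Usage: feed it the same table that was used to build the zone files, e.g.
#   verify_dns < hosts.txt
```

Only forward lookups are covered; a reverse check would follow the same pattern with `dig +short -x`.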

IV. Setting up the NFS server

- Install and verify NFS (on the same host as DNS):

[root@service grid]# yum -y install nfs-utils rpcbind

[root@service grid]# rpm -qa |grep nfs

- Enable the services at boot:

[root@service grid]# chkconfig rpcbind on

[root@service grid]# chkconfig nfs on

- Start the NFS services:

[root@service grid]# service rpcbind start

[root@service grid]# service nfs start

- Check the NFS server status:

[root@service grid]# service nfs status

rpc.svcgssd is stopped

rpc.mountd (pid 2016) is running...

nfsd (pid 2031 2030 2029 2028 2027 2026 2025 2024) is running...

rpc.rquotad (pid 2012) is running...

- Install and configure NFS on the clients. Repeat this on every client host in the cluster; one client is shown here as an example:

[root@namenode1 grid]# yum -y install nfs-utils rpcbind

[root@namenode1 grid]# service rpcbind start

Starting rpcbind: [ OK ]

[root@namenode1 grid]# chkconfig rpcbind on

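- On the server, set up the shared directory (here the shared directory is /home/grid/);

- Edit /etc/exports:

[root@service grid]# vi /etc/exports

/home/grid *(rw,sync,no_root_squash)

- On the server, restart the rpcbind and nfs services (restart rpcbind first, then nfs); every node in the cluster needs to be configured: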

[root@service grid]# service rpcbind restart

Stopping rpcbind: [ OK ]

Starting rpcbind: [ OK ]

[root@service grid]# service nfs restart

Shutting down NFS daemon: [ OK ]

Shutting down NFS mountd: [ OK ]

Shutting down NFS quotas: [ OK ]

Shutting down RPC idmapd: [ OK ]

Starting NFS services: [ OK ]

Starting NFS quotas: [ OK ]

Starting NFS mountd: [ OK ]

Starting NFS daemon: [ OK ]

Starting RPC idmapd: [ OK ]

- Verify from the namenode1 node:

[root@namenode1 grid]# showmount -e 192.168.115.136

Export list for 192.168.115.136:

/home/grid *

- On namenode1, mount the shared directory locally; this must be done on every node in the cluster:

[root@namenode1 grid]# cd /

[root@namenode1 /]# mkdir /nfs_share

[root@namenode1 /]# ls

bin dev home lib64 media nfs_share proc sbin srv tmp var

boot etc lib lost+found mnt opt root selinux sys usr

[root@namenode1 /]# mount -t nfs 192.168.115.136:/home/grid /nfs_share/

[root@namenode1 /]# su grid

[grid@namenode1 /]$ cd /nfs_share/

[grid@namenode1 nfs_share]$ ls

Desktop Documents Downloads Music Pictures Public Templates Videos

[grid@namenode1 nfs_share]$ ls

111 Desktop Documents Downloads Music Pictures Public Templates Videos

[grid@namenode1 nfs_share]$ ls

Desktop Documents Downloads Music Pictures Public Templates Videos

[grid@namenode1 nfs_share]$

- Check the mount:

[grid@namenode1 nfs_share]$ mount

/dev/sda2 on / type ext4 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

tmpfs on /dev/shm type tmpfs (rw,rootcontext="system_u:object_r:tmpfs_t:s0")

/dev/sda1 on /boot type ext4 (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

vmware-vmblock on /var/run/vmblock-fuse type fuse.vmware-vmblock (rw,nosuid,nodev,default_permissions,allow_other)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

192.168.115.136:/home/grid on /nfs_share type nfs (rw,vers=4,addr=192.168.115.136,clientaddr=192.168.115.134)
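Rather than reading through the whole mount table on each node, the check can be scripted. A minimal sketch, assuming the `mount` output format shown above ("SOURCE on MOUNTPOINT type FSTYPE (options)"); `nfs_mounted` is a hypothetical helper name:

```shell
#!/bin/sh
# nfs_mounted MOUNTPOINT  (hypothetical helper)
# Reads `mount`-style lines on stdin and succeeds only if one of them
# shows an NFS file system mounted at MOUNTPOINT.
nfs_mounted() {
    awk -v mp="$1" '
        $2 == "on" && $3 == mp && $4 == "type" && $5 ~ /^nfs/ { found = 1 }
        END { exit !found }
    '
}

# Usage on a client node:
#   mount | nfs_mounted /nfs_share && echo "share is mounted"
```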

- Mount automatically at boot: on the client, edit /etc/fstab; this must be done on every node in the cluster:

#

# /etc/fstab

# Created by anaconda on Mon Dec 12 06:26:34 2016

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

UUID=68861cb0-d93c-4ed5-94ff-197c6431b786 / ext4 defaults 1 1

UUID=3b5d32de-89a4-46df-bd3c-c8d8415ad55c /boot ext4 defaults 1 2

UUID=1a6b34da-e747-4cfe-8a6a-01fe4882a8dc swap swap defaults 0 0

tmpfs /dev/shm tmpfs defaults 0 0

devpts /dev/pts devpts gid=5,mode=620 0 0

sysfs /sys sysfs defaults 0 0

proc /proc proc defaults 0 0

# Add the following line

192.168.115.136:/home/grid /nfs_share nfs defaults 1 1

- Go into the .ssh directory:

[grid@service ~]$ cd .ssh/

[grid@service .ssh]$ ls

id_rsa id_rsa.pub

- Create the authorized_keys file:

[grid@service .ssh]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[grid@service .ssh]$ ls

authorized_keys id_rsa id_rsa.pub

[grid@service .ssh]$ cat ~/.ssh/authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAqDkb+jnKYwVuvuj5+R8WdvnGnWZxVH8FzWlZweqdz22Z/Bc2OThlwxpXSI/oMNwtbx19+05846fbikKjENALfkjXSJrn/uxh0iinBItplufww/AMtTKSxTiJu17cGi4RbSEqRnCzV19VVM174XrsqoCGQ/qRycrQ2thEz/7PECk9xbVXRnPqvjYVIPjH0iF9zjj7iF+ggT3aX1qa3QKliR4PnUgYRyjtBLmTOzrY2bzsxauPePzhRYzmc40mJm5CUOruArx1SALW4JeqOvd3gWotNvB9v1gW+f5PwV0Ht2G2onG8lU5cE0SGLyo3O2Uv0e7k6Zk05nhuzmfRLjEKJw== grid@service

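- Delete the key files that were distributed earlier and use the keys from the mounted share instead

- On the NFS server:

[grid@service .ssh]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

- Collect the public keys of all nodes on the server: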

[grid@service .ssh]$ ssh namenode1.hadoop.com cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[grid@service .ssh]$ ssh namenode2.hadoop.com cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[grid@service .ssh]$ ssh datanode1.hadoop.com cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[grid@service .ssh]$ ssh datanode2.hadoop.com cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[grid@service .ssh]$ ssh datanode3.hadoop.com cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

- Check the result:

[grid@service .ssh]$ cat ~/.ssh/authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAqDkb+jnKYwVuvuj5+R8WdvnGnWZxVH8FzWlZweqdz22Z/Bc2OThlwxpXSI/oMNwtbx19+05846fbikKjENALfkjXSJrn/uxh0iinBItplufww/AMtTKSxTiJu17cGi4RbSEqRnCzV19VVM174XrsqoCGQ/qRycrQ2thEz/7PECk9xbVXRnPqvjYVIPjH0iF9zjj7iF+ggT3aX1qa3QKliR4PnUgYRyjtBLmTOzrY2bzsxauPePzhRYzmc40mJm5CUOruArx1SALW4JeqOvd3gWotNvB9v1gW+f5PwV0Ht2G2onG8lU5cE0SGLyo3O2Uv0e7k6Zk05nhuzmfRLjEKJw== grid@service

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA+ClkPJGxLE6MjkwBhyYTEfAlFeZyV+CVNGOJ26WWpNU3KuNUjrkx5ec2Ti7CZNob1MdTPYrR65b4CKHLziuRBK4kaVOZsBtEWDPY2jsby+r2zz3hnyUyq10qtGXOUNGUe+2V0VVlpAQIpHvLMbS1GmQmstICLlPKBhu6RgSJunve5vbqF7wgjpmYdvhfjtxnilMzSLqqtE8isaD6TcIbHbLa13hr08cS8Nj5xR39L79cdsDHOfrBOC6ogCdQa0sFL6OXl7YnAoCKO0QfVnVqZ3PwqUysGAuFXpyGQ5ni6D2c4DUBR2OjH+AhJjmFifjYe3wdIIYDiMps0Pj34LXUbQ== grid@namenode1

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA3TEF8h6tbM3557dz/MYoguCWjhBXEjP0eFNP6SVc5cyr972Y2elyfkIPDTmnvZo+IgbIDpDe1o4uQrsKawqI04hLd6LmbASEDXo2VHue2k1JIHo1aiUX6gEh9L8Cc2SvmszGcj1gn3Qpv3coTEh50DJLfuQTgA4keTci5ONaGzaG18KEISE8WpU6vhGJ//vWk9VKK1QldDfDTjRT4Xb8wwQ/1sABL3q3AcAorMmkOAkNQ8jWpE3D7QHOfwKi20fuXwlhzkpQJ7Br71eHij9dXXwuv5M6jph9sLfa+ezjhrZmdIut7P3fL/Z4x6S6gD1qnQz5fIdCWopK/IzrWnYk4Q== grid@namenode2

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA0gsRz0ZvESH6673l08dlKztW6XPDg6JUwVYF38LeasCdDrEQMEGwzkgOG0R+A2qnLCarvMYAQ4R+/beb/eaXJGEpyXaTk0tUT7undNNaMG+mrSd9N2mehuqYdT6lLcesBmx+L4wMz7dMnFuJYASJsCUzk5RtI4GpS71G26jtBXF4jVS+Tr0baANYJnhGPxn5DW0kCmQCs4CEAeIiajWAlr+LjucQ0fCe0dPvnzfBKvDQkMCQGGDtml3zWU1w9AA7+agSEm60qi24q0tI0KwvYELuribcCXbq9l66jiIHd1M4JFu/qBSc1gfm6YEJapLvUyAKJFb191g8Sz3rSQq2oQ== grid@datanode1

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA3+NvgrMN5zQ+XM4V9iTng+ujpdvsOckk2m5E0/DFJe8QYFLbxHy/zQYEEl5MrdQD4us2vwjKpYTdsfOWZyJe3V3RMPnDnjsJCZicemz7eR2bw9s+eAMPhnoPlAEBGKtYNGgG7RXjmUK9eUNvqZJ+T2yCjC8kA6gxQRgjtxCoVjO7b5QJ6uHt7+Jm1H6KP6ykHVGUUJ0Xfrt5y3Ot3HBT149AjQyFwT1Rfg/aP5WhEtTqYe7v/1HndnpLQcxma+E+LKXMOCw6H2XDK0ryxK5PfS0KcUldZ5aK/0L8S0lz8bLslcM3avgz5tk67JlLxlvp51H+x7C1cp5c+EwtLNYMfQ== grid@datanode2

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAuecNSU/RG/ft2JxuqCxA4YnH5ROfpUZ5HvjRG3dZLmEFqXGcTuePOWmVph7K5/KL89/j3mBYFYCAZIPeqQn/TF9pJrqL40XeWJu4TdhHvDwGQ9BRtMPIi+dqL8BDP2g8TzvexlATUDLEFU9Rn+emH10WisN7LufuWebNUamxi9uwvSPmpbhQPfednZhoDdmh3SZAMUz7k0vBlj23c2urujuC1Wi/t9bK59aClkf7WYYAVMYQoZgWUHdM/jUKqPO7seqxBq8p1AsjyOWHP97OWwRrMS1YBp+4VC5d7Dd48h0Ob3ibRS8f08jZ7FJg6QMMUFteSFr3ybuk8mupWB4CvQ== grid@datanode3

[grid@service .ssh]$ ll

total 16

-rw-rw-r--. 1 grid grid 2374 Dec 19 07:42 authorized_keys

-rw-------. 1 grid grid 1675 Dec 19 07:29 id_rsa

-rw-r--r--. 1 grid grid 394 Dec 19 07:29 id_rsa.pub

-rw-r--r--. 1 grid grid 2090 Dec 19 07:42 known_hosts
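The per-host ssh commands used to collect the keys can be folded into one loop, which scales better as nodes are added. This is only a sketch under stated assumptions: every node keeps its public key in ~/.ssh/id_rsa.pub for the same user, and `collect_keys`, `fetch_key` and `FETCH_KEY` are hypothetical names introduced here. Duplicates are dropped at the end, so running it again does not grow the file.

```shell
#!/bin/sh
# fetch_key HOST: print HOST's public key (assumed to live in ~/.ssh/id_rsa.pub).
fetch_key() {
    ssh "$1" cat .ssh/id_rsa.pub </dev/null
}
# FETCH_KEY names the command used to fetch a key; override it to change the source.
FETCH_KEY=${FETCH_KEY:-fetch_key}

# collect_keys OUTFILE HOST...
# Appends each host's key to OUTFILE, then removes duplicate lines.
collect_keys() {
    out=$1
    shift
    for host in "$@"; do
        $FETCH_KEY "$host" >> "$out" || echo "warning: no key from $host" >&2
    done
    # sort -u rewrites the file in sorted order without repeated keys
    sort -u -o "$out" "$out"
}

# Usage on the NFS server:
#   collect_keys ~/.ssh/authorized_keys namenode1.hadoop.com namenode2.hadoop.com \
#       datanode1.hadoop.com datanode2.hadoop.com datanode3.hadoop.com
```

Note that `sort -u` reorders the keys; the order of entries in authorized_keys does not affect which keys are accepted.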

- Change the permissions of authorized_keys:

[grid@service .ssh]$ chmod 644 authorized_keys

[grid@service .ssh]$ ll

total 16

-rw-r--r--. 1 grid grid 2374 Dec 19 07:42 authorized_keys

-rw-------. 1 grid grid 1675 Dec 19 07:29 id_rsa

-rw-r--r--. 1 grid grid 394 Dec 19 07:29 id_rsa.pub

-rw-r--r--. 1 grid grid 2090 Dec 19 07:42 known_hosts
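The permission change matters because sshd, with its default StrictModes setting, refuses to use an authorized_keys file that is writable by the group or by others, which the earlier mode 664 was. A small sketch for checking this on any file; `safe_for_sshd` is a hypothetical helper, and it assumes GNU `stat` (as shipped with CentOS):

```shell
#!/bin/sh
# safe_for_sshd PATH  (hypothetical helper)
# Succeeds if PATH is not writable by group or others (no bits of octal 022 set).
safe_for_sshd() {
    mode=$(stat -c '%a' "$1") || return 2
    # Prefix with 0 so the shell reads the mode as an octal number
    [ $(( 0$mode & 022 )) -eq 0 ]
}

# Usage:
#   safe_for_sshd ~/.ssh/authorized_keys || chmod 644 ~/.ssh/authorized_keys
```

The same test applies to the ~/.ssh directory and the home directory, which sshd checks as well.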

- On each node, create a symbolic link to the authorized_keys file in the shared directory (the authorized_keys file has mode 644); namenode1 is used as the example here:

[grid@namenode1 ~]$ cd .ssh/

[grid@namenode1 .ssh]$ ls

id_rsa id_rsa.pub

[grid@namenode1 .ssh]$ ln -s /nfs_share/.ssh/authorized_keys ~/.ssh/authorized_keys

[grid@namenode1 .ssh]$ ls

authorized_keys id_rsa id_rsa.pub

[grid@namenode1 .ssh]$ cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAqDkb+jnKYwVuvuj5+R8WdvnGnWZxVH8FzWlZweqdz22Z/Bc2OThlwxpXSI/oMNwtbx19+05846fbikKjENALfkjXSJrn/uxh0iinBItplufww/AMtTKSxTiJu17cGi4RbSEqRnCzV19VVM174XrsqoCGQ/qRycrQ2thEz/7PECk9xbVXRnPqvjYVIPjH0iF9zjj7iF+ggT3aX1qa3QKliR4PnUgYRyjtBLmTOzrY2bzsxauPePzhRYzmc40mJm5CUOruArx1SALW4JeqOvd3gWotNvB9v1gW+f5PwV0Ht2G2onG8lU5cE0SGLyo3O2Uv0e7k6Zk05nhuzmfRLjEKJw== grid@service

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA+ClkPJGxLE6MjkwBhyYTEfAlFeZyV+CVNGOJ26WWpNU3KuNUjrkx5ec2Ti7CZNob1MdTPYrR65b4CKHLziuRBK4kaVOZsBtEWDPY2jsby+r2zz3hnyUyq10qtGXOUNGUe+2V0VVlpAQIpHvLMbS1GmQmstICLlPKBhu6RgSJunve5vbqF7wgjpmYdvhfjtxnilMzSLqqtE8isaD6TcIbHbLa13hr08cS8Nj5xR39L79cdsDHOfrBOC6ogCdQa0sFL6OXl7YnAoCKO0QfVnVqZ3PwqUysGAuFXpyGQ5ni6D2c4DUBR2OjH+AhJjmFifjYe3wdIIYDiMps0Pj34LXUbQ== grid@namenode1

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA3TEF8h6tbM3557dz/MYoguCWjhBXEjP0eFNP6SVc5cyr972Y2elyfkIPDTmnvZo+IgbIDpDe1o4uQrsKawqI04hLd6LmbASEDXo2VHue2k1JIHo1aiUX6gEh9L8Cc2SvmszGcj1gn3Qpv3coTEh50DJLfuQTgA4keTci5ONaGzaG18KEISE8WpU6vhGJ//vWk9VKK1QldDfDTjRT4Xb8wwQ/1sABL3q3AcAorMmkOAkNQ8jWpE3D7QHOfwKi20fuXwlhzkpQJ7Br71eHij9dXXwuv5M6jph9sLfa+ezjhrZmdIut7P3fL/Z4x6S6gD1qnQz5fIdCWopK/IzrWnYk4Q== grid@namenode2

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA0gsRz0ZvESH6673l08dlKztW6XPDg6JUwVYF38LeasCdDrEQMEGwzkgOG0R+A2qnLCarvMYAQ4R+/beb/eaXJGEpyXaTk0tUT7undNNaMG+mrSd9N2mehuqYdT6lLcesBmx+L4wMz7dMnFuJYASJsCUzk5RtI4GpS71G26jtBXF4jVS+Tr0baANYJnhGPxn5DW0kCmQCs4CEAeIiajWAlr+LjucQ0fCe0dPvnzfBKvDQkMCQGGDtml3zWU1w9AA7+agSEm60qi24q0tI0KwvYELuribcCXbq9l66jiIHd1M4JFu/qBSc1gfm6YEJapLvUyAKJFb191g8Sz3rSQq2oQ== grid@datanode1

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA3+NvgrMN5zQ+XM4V9iTng+ujpdvsOckk2m5E0/DFJe8QYFLbxHy/zQYEEl5MrdQD4us2vwjKpYTdsfOWZyJe3V3RMPnDnjsJCZicemz7eR2bw9s+eAMPhnoPlAEBGKtYNGgG7RXjmUK9eUNvqZJ+T2yCjC8kA6gxQRgjtxCoVjO7b5QJ6uHt7+Jm1H6KP6ykHVGUUJ0Xfrt5y3Ot3HBT149AjQyFwT1Rfg/aP5WhEtTqYe7v/1HndnpLQcxma+E+LKXMOCw6H2XDK0ryxK5PfS0KcUldZ5aK/0L8S0lz8bLslcM3avgz5tk67JlLxlvp51H+x7C1cp5c+EwtLNYMfQ== grid@datanode2

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAuecNSU/RG/ft2JxuqCxA4YnH5ROfpUZ5HvjRG3dZLmEFqXGcTuePOWmVph7K5/KL89/j3mBYFYCAZIPeqQn/TF9pJrqL40XeWJu4TdhHvDwGQ9BRtMPIi+dqL8BDP2g8TzvexlATUDLEFU9Rn+emH10WisN7LufuWebNUamxi9uwvSPmpbhQPfednZhoDdmh3SZAMUz7k0vBlj23c2urujuC1Wi/t9bK59aClkf7WYYAVMYQoZgWUHdM/jUKqPO7seqxBq8p1AsjyOWHP97OWwRrMS1YBp+4VC5d7Dd48h0Ob3ibRS8f08jZ7FJg6QMMUFteSFr3ybuk8mupWB4CvQ== grid@datanode3

- Verify SSH mutual trust:

[grid@namenode1 .ssh]$ ssh namenode1.hadoop.com

The authenticity of host 'namenode1.hadoop.com (192.168.115.134)' can't be established.

RSA key fingerprint is b2:87:ea:5c:28:b0:52:85:ca:95:b6:2c:c5:82:9e:a1.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'namenode1.hadoop.com,192.168.115.134' (RSA) to the list of known hosts.

Last login: Mon Dec 19 05:53:17 2016 from 192.168.115.1

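V. Deploying an enterprise-grade cluster with DNS hostname resolution and NFS-shared keys

- Configuring the relevant files

The configuration of the relevant files is not repeated here; for the details, see section 5 of my blog post:

https://my.oschina.net/TaoPengFeiBlog/blog/795191

- Copy hadoop to each node: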

[grid@namenode1 ~]$ cat /home/grid/Downloads/hadoop-2.3.0/etc/hadoop/slaves | awk '{print "scp -rp /home/grid/Downloads/hadoop-2.3.0 grid@"$1":/home/grid"}'

scp -rp /home/grid/Downloads/hadoop-2.3.0 grid@namenode2.hadoop.com:/home/grid

scp -rp /home/grid/Downloads/hadoop-2.3.0 grid@datanode1.hadoop.com:/home/grid

scp -rp /home/grid/Downloads/hadoop-2.3.0 grid@datanode2.hadoop.com:/home/grid

scp -rp /home/grid/Downloads/hadoop-2.3.0 grid@datanode3.hadoop.com:/home/grid

[grid@namenode1 ~]$ cat /home/grid/Downloads/hadoop-2.3.0/etc/hadoop/slaves | awk '{print "scp -rp /home/grid/Downloads/hadoop-2.3.0 grid@"$1":/home/grid"}' > copyNodes

[grid@namenode1 ~]$ ls

copyNodes core.3155 Desktop Documents Downloads eclipse Music Pictures Public Templates Videos workspace

[grid@namenode1 ~]$ cat copyNodes

scp -rp /home/grid/Downloads/hadoop-2.3.0 grid@namenode2.hadoop.com:/home/grid

scp -rp /home/grid/Downloads/hadoop-2.3.0 grid@datanode1.hadoop.com:/home/grid

scp -rp /home/grid/Downloads/hadoop-2.3.0 grid@datanode2.hadoop.com:/home/grid

scp -rp /home/grid/Downloads/hadoop-2.3.0 grid@datanode3.hadoop.com:/home/grid

[grid@namenode1 ~]$ chmod a+x copyNodes

[grid@namenode1 ~]$ ll

total 5052

-rwxrwxr-x. 1 grid grid 316 Dec 20 08:55 copyNodes

-rw-------. 1 grid grid 29507584 Dec 13 08:17 core.3155

drwxr-xr-x. 2 grid grid 4096 Dec 11 22:46 Desktop

drwxr-xr-x. 2 grid grid 4096 Dec 11 22:46 Documents

drwxr-xr-x. 3 grid grid 4096 Dec 19 08:23 Downloads

drwxrwxr-x. 3 grid grid 4096 Dec 13 21:07 eclipse

drwxr-xr-x. 2 grid grid 4096 Dec 11 22:46 Music

drwxr-xr-x. 2 grid grid 4096 Dec 11 22:46 Pictures

drwxr-xr-x. 2 grid grid 4096 Dec 11 22:46 Public

drwxr-xr-x. 2 grid grid 4096 Dec 11 22:46 Templates

drwxr-xr-x. 2 grid grid 4096 Dec 11 22:46 Videos

drwxrwxr-x. 5 grid grid 4096 Dec 13 22:06 workspace

[grid@namenode1 ~]$ sh copyNodes
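The awk one-liner above emits one scp command per line of the slaves file, so a blank line or a comment in that file would produce a broken command. A slightly more defensive sketch of the same idea follows; `gen_copy_cmds` is a hypothetical helper, and the default user `grid` is taken from this post.

```shell
#!/bin/sh
# gen_copy_cmds SRC DEST_DIR [USER]  (hypothetical helper)
# Reads host names on stdin (one per line) and prints one scp command per host.
gen_copy_cmds() {
    awk -v src="$1" -v dest="$2" -v user="${3:-grid}" '
        /^[ \t]*(#|$)/ { next }    # skip comment lines and blank lines
        { printf "scp -rp %s %s@%s:%s\n", src, user, $1, dest }
    '
}

# Usage, producing the same copyNodes script as above:
#   gen_copy_cmds /home/grid/Downloads/hadoop-2.3.0 /home/grid \
#       < /home/grid/Downloads/hadoop-2.3.0/etc/hadoop/slaves > copyNodes
```

Writing the commands to a file first, as the post does, keeps the step reviewable before anything is copied.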

- Format namenode1:

[grid@namenode1 hadoop-2.3.0]$ bin/hadoop namenode -format

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

16/12/23 08:01:58 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = namenode1.hadoop.com/192.168.115.134

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.3.0

STARTUP_MSG: classpath = /home/grid/hadoop-2.3.0/etc/hadoop:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/activation-1.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jsp-api-2.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-digester-1.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/hadoop-auth-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/junit-4.8.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/zookeeper-3.4.5.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jsr305-1.3.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-compress-1.4.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jersey-server-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jersey-core-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/httpcore-4.2.5.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-logging-1.1.3.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-lang-2.6.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/asm-3.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jetty-6.1.26.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-codec-1.4.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jsch-0.1.42.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/log4j-1.2.17.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/xz-1.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/servlet-api-2.5.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/stax-api-1.0-2.jar:/home/grid/h
adoop-2.3.0/share/hadoop/common/lib/paranamer-2.3.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-math3-3.1.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jackson-core-asl-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jackson-jaxrs-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/netty-3.6.2.Final.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-el-1.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jets3t-0.9.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jetty-util-6.1.26.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/httpclient-4.2.5.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-cli-1.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-net-3.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-configuration-1.6.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jackson-mapper-asl-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/xmlenc-0.52.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/guava-11.0.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-httpclient-3.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-collections-3.2.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/avro-1.7.4.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/mockito-all-1.8.5.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/commons-io-2.4.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jackson-xc-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jersey-json-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/jettison-1.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/lib/hadoop-annotations-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/hadoop-
nfs-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/hadoop-common-2.3.0-tests.jar:/home/grid/hadoop-2.3.0/share/hadoop/common/hadoop-common-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/asm-3.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/jackson-core-asl-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/commons-el-1.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/jackson-mapper-asl-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/guava-11.0.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/lib/commons-io-2.4.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/hadoop-hdfs-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/hadoop-hdfs-nfs-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/hdfs/hadoop-hdfs-2.3.0-tests.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/activation-1.1.jar:
/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/zookeeper-3.4.5.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/aopalliance-1.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jersey-server-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jersey-core-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/guice-3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/commons-lang-2.6.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/asm-3.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jetty-6.1.26.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/commons-codec-1.4.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/log4j-1.2.17.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/xz-1.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/servlet-api-2.5.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jackson-jaxrs-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jline-0.9.94.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/commons-cli-1.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/guava-11.0.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/javax.inject-1.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/home
/grid/hadoop-2.3.0/share/hadoop/yarn/lib/commons-io-2.4.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jackson-xc-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jersey-json-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jettison-1.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/lib/jersey-client-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/hadoop-yarn-api-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/hadoop-yarn-server-tests-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/hadoop-yarn-server-common-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/hadoop-yarn-client-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/hadoop-yarn-common-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/hamcrest-core-1.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/guice-3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/asm-3.2.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/xz-1.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/jackson-core-asl-1.8.8.jar:/home/grid/hadoop-2.3.0
/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.8.8.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/javax.inject-1.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/junit-4.10.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/lib/hadoop-annotations-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.3.0-tests.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.3.0.jar:/home/grid/hadoop-2.3.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.3.0.jar:/contrib/capacity-scheduler/*.jar:/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = Unknown -r 1771539; compiled by 'root' on 2016-11-27T16:31Z

STARTUP_MSG: java = 1.8.0_111

************************************************************/

16/12/23 08:01:58 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

Formatting using clusterid: CID-d89cd496-6fe5-4540-894d-14e57ae2222c

16/12/23 08:02:01 INFO namenode.FSNamesystem: fsLock is fair:true

16/12/23 08:02:01 INFO namenode.HostFileManager: read includes:

HostSet(

)

16/12/23 08:02:01 INFO namenode.HostFileManager: read excludes:

HostSet(

)

16/12/23 08:02:01 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

16/12/23 08:02:01 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

16/12/23 08:02:01 INFO util.GSet: Computing capacity for map BlocksMap

16/12/23 08:02:01 INFO util.GSet: VM type = 64-bit

16/12/23 08:02:01 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB

16/12/23 08:02:01 INFO util.GSet: capacity = 2^21 = 2097152 entries

16/12/23 08:02:01 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

16/12/23 08:02:01 INFO blockmanagement.BlockManager: defaultReplication = 1

16/12/23 08:02:01 INFO blockmanagement.BlockManager: maxReplication = 512

16/12/23 08:02:01 INFO blockmanagement.BlockManager: minReplication = 1

16/12/23 08:02:01 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

16/12/23 08:02:01 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false

16/12/23 08:02:01 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

16/12/23 08:02:01 INFO blockmanagement.BlockManager: encryptDataTransfer = false

16/12/23 08:02:01 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

16/12/23 08:02:01 INFO namenode.FSNamesystem: fsOwner = grid (auth:SIMPLE)

16/12/23 08:02:01 INFO namenode.FSNamesystem: supergroup = supergroup

16/12/23 08:02:01 INFO namenode.FSNamesystem: isPermissionEnabled = true

16/12/23 08:02:01 INFO namenode.FSNamesystem: HA Enabled: false

16/12/23 08:02:01 INFO namenode.FSNamesystem: Append Enabled: true

16/12/23 08:02:03 INFO util.GSet: Computing capacity for map INodeMap

16/12/23 08:02:03 INFO util.GSet: VM type = 64-bit

16/12/23 08:02:03 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB

16/12/23 08:02:03 INFO util.GSet: capacity = 2^20 = 1048576 entries

16/12/23 08:02:03 INFO namenode.NameNode: Caching file names occuring more than 10 times

16/12/23 08:02:03 INFO util.GSet: Computing capacity for map cachedBlocks

16/12/23 08:02:03 INFO util.GSet: VM type = 64-bit

16/12/23 08:02:03 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB

16/12/23 08:02:03 INFO util.GSet: capacity = 2^18 = 262144 entries

16/12/23 08:02:03 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

16/12/23 08:02:03 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

16/12/23 08:02:03 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

16/12/23 08:02:03 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

16/12/23 08:02:03 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

16/12/23 08:02:03 INFO util.GSet: Computing capacity for map Namenode Retry Cache

16/12/23 08:02:03 INFO util.GSet: VM type = 64-bit

16/12/23 08:02:03 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB

16/12/23 08:02:03 INFO util.GSet: capacity = 2^15 = 32768 entries

Re-format filesystem in Storage Directory /home/grid/hadoop-2.3.0/name ? (Y or N) Y

16/12/23 08:02:06 INFO common.Storage: Storage directory /home/grid/hadoop-2.3.0/name has been successfully formatted.

16/12/23 08:02:06 INFO namenode.FSImage: Saving image file /home/grid/hadoop-2.3.0/name/current/fsimage.ckpt_0000000000000000000 using no compression

16/12/23 08:02:06 INFO namenode.FSImage: Image file /home/grid/hadoop-2.3.0/name/current/fsimage.ckpt_0000000000000000000 of size 216 bytes saved in 0 seconds.

16/12/23 08:02:06 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

16/12/23 08:02:06 INFO util.ExitUtil: Exiting with status 0

16/12/23 08:02:06 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at namenode1.hadoop.com/192.168.115.134

************************************************************/

- Start Hadoop

[grid@namenode1 hadoop-2.3.0]$ ./sbin/start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [namenode1.hadoop.com]

namenode1.hadoop.com: starting namenode, logging to /home/grid/hadoop-2.3.0/logs/hadoop-grid-namenode-namenode1.hadoop.com.out

datanode1.hadoop.com: starting datanode, logging to /home/grid/hadoop-2.3.0/logs/hadoop-grid-datanode-datanode1.hadoop.com.out

datanode2.hadoop.com: starting datanode, logging to /home/grid/hadoop-2.3.0/logs/hadoop-grid-datanode-datanode2.hadoop.com.out

datanode3.hadoop.com: starting datanode, logging to /home/grid/hadoop-2.3.0/logs/hadoop-grid-datanode-datanode3.hadoop.com.out

namenode2.hadoop.com: starting datanode, logging to /home/grid/hadoop-2.3.0/logs/hadoop-grid-datanode-namenode2.hadoop.com.out

Starting secondary namenodes [namenode1.hadoop.com]

namenode1.hadoop.com: starting secondarynamenode, logging to /home/grid/hadoop-2.3.0/logs/hadoop-grid-secondarynamenode-namenode1.hadoop.com.out

starting yarn daemons

starting resourcemanager, logging to /home/grid/hadoop-2.3.0/logs/yarn-grid-resourcemanager-namenode1.hadoop.com.out

namenode2.hadoop.com: starting nodemanager, logging to /home/grid/hadoop-2.3.0/logs/yarn-grid-nodemanager-namenode2.hadoop.com.out

datanode3.hadoop.com: starting nodemanager, logging to /home/grid/hadoop-2.3.0/logs/yarn-grid-nodemanager-datanode3.hadoop.com.out

datanode2.hadoop.com: starting nodemanager, logging to /home/grid/hadoop-2.3.0/logs/yarn-grid-nodemanager-datanode2.hadoop.com.out

datanode1.hadoop.com: starting nodemanager, logging to /home/grid/hadoop-2.3.0/logs/yarn-grid-nodemanager-datanode1.hadoop.com.out
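The output above warns that `start-all.sh` is deprecated in favor of `start-dfs.sh` and `start-yarn.sh`. A minimal sketch of a wrapper that runs the two scripts in order might look like the following. The `start_cluster` function name and the `DRY_RUN` switch are assumptions made for illustration and are not part of Hadoop. The default `HADOOP_HOME` is the install path that appears in the logs.

```shell
#!/bin/sh
# Sketch: start HDFS and YARN separately instead of the deprecated start-all.sh.
# HADOOP_HOME defaults to the install path seen in the log output above.
HADOOP_HOME="${HADOOP_HOME:-/home/grid/hadoop-2.3.0}"

# start_cluster is a hypothetical helper name, not a Hadoop command.
start_cluster() {
    for script in start-dfs.sh start-yarn.sh; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            # DRY_RUN=1 (illustrative switch) only prints what would be run.
            echo "$HADOOP_HOME/sbin/$script"
        else
            # Stop at the first script that fails.
            "$HADOOP_HOME/sbin/$script" || return 1
        fi
    done
}
```

Calling `start_cluster` on namenode1 as the grid user should start the HDFS daemons first and the YARN daemons second. Setting `DRY_RUN=1` beforehand prints the two script paths without running them.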

- Use jps to check that the background daemons started successfully

#1

[grid@namenode1 hadoop-2.3.0]$ jps

6416 NameNode

6565 SecondaryNameNode

6726 ResourceManager

6798 Jps

#2

[grid@namenode2 .ssh]$ jps

5364 Jps

5324 NodeManager

5263 DataNode

#3

[grid@datanode1 .ssh]$ jps

5781 Jps

5739 NodeManager

5677 DataNode

#4

[grid@datanode2 .ssh]$ jps

5296 DataNode

5404 Jps

5358 NodeManager

#5

[grid@datanode3 .ssh]$ jps

5449 Jps

5308 NodeManager

5247 DataNode
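Checking five hosts by hand gets tedious as the cluster grows. The sketch below reads `jps` output on standard input and reports any expected daemon that is missing. The `check_daemons` name is hypothetical, and the usage example assumes passwordless SSH for the grid user and that `jps` is on the `PATH` of a non-interactive SSH session.

```shell
#!/bin/sh
# check_daemons EXPECTED... : reads `jps` output on stdin and prints
# "missing: <name>" for every expected daemon that is not listed.
# Returns 1 if any daemon is missing, 0 otherwise.
# (check_daemons is a hypothetical helper, not part of Hadoop or the JDK.)
check_daemons() {
    jps_output="$(cat)"
    missing=0
    for daemon in "$@"; do
        # jps prints "<pid> <MainClass>"; compare the class name in column 2.
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "missing: $daemon"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `ssh datanode1.hadoop.com jps | check_daemons DataNode NodeManager` should print nothing and return 0 when both daemons are running on that host.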

- Check the cluster report

[grid@namenode1 hadoop-2.3.0]$ ./bin/hadoop dfsadmin -report

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Configured Capacity: 96254541824 (89.64 GB)

Present Capacity: 76588535808 (71.33 GB)

DFS Remaining: 76588437504 (71.33 GB)

DFS Used: 98304 (96 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

-------------------------------------------------

Datanodes available: 4 (4 total, 0 dead)

Live datanodes:

Name: 192.168.115.133:50010 (datanode1.hadoop.com)

Hostname: datanode1.hadoop.com

Decommission Status : Normal

Configured Capacity: 24063635456 (22.41 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 4874244096 (4.54 GB)

DFS Remaining: 19189366784 (17.87 GB)

DFS Used%: 0.00%

DFS Remaining%: 79.74%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Fri Dec 23 08:07:50 PST 2016

Name: 192.168.115.139:50010 (namenode2.hadoop.com)

Hostname: namenode2.hadoop.com

Decommission Status : Normal

Configured Capacity: 24063635456 (22.41 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 5044436992 (4.70 GB)

DFS Remaining: 19019173888 (17.71 GB)

DFS Used%: 0.00%

DFS Remaining%: 79.04%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Fri Dec 23 08:07:51 PST 2016

Name: 192.168.115.138:50010 (datanode2.hadoop.com)

Hostname: datanode2.hadoop.com

Decommission Status : Normal

Configured Capacity: 24063635456 (22.41 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 4873277440 (4.54 GB)

DFS Remaining: 19190333440 (17.87 GB)

DFS Used%: 0.00%

DFS Remaining%: 79.75%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Fri Dec 23 08:07:50 PST 2016

Name: 192.168.115.135:50010 (datanode3.hadoop.com)

Hostname: datanode3.hadoop.com

Decommission Status : Normal

Configured Capacity: 24063635456 (22.41 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 4874047488 (4.54 GB)

DFS Remaining: 19189563392 (17.87 GB)

DFS Used%: 0.00%

DFS Remaining%: 79.75%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Fri Dec 23 08:07:49 PST 2016
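The report above shows 4 live DataNodes, which matches the four hosts that started a DataNode process (namenode2 and datanode1 through datanode3). As a rough sketch, the count can be pulled out of the report so that a script can compare it with the expected number. The `live_datanodes` name is an assumption for illustration, and the parsing relies on the `Datanodes available:` line format shown in this 2.3.0 output.

```shell
#!/bin/sh
# live_datanodes: reads a dfsadmin report on stdin and prints the number of
# available DataNodes, taken from a line such as
#   "Datanodes available: 4 (4 total, 0 dead)"
# (live_datanodes is a hypothetical helper name, not a Hadoop command.)
live_datanodes() {
    awk '/^Datanodes available:/ { print $3; exit }'
}
```

A possible use is `./bin/hdfs dfsadmin -report | live_datanodes`, which goes through the `hdfs` command that the deprecation notice recommends. On this cluster the result should be 4.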

-------------- This completes the setup of the enterprise-grade Hadoop 2.3.0 cluster ----------------

6. Cluster Testing

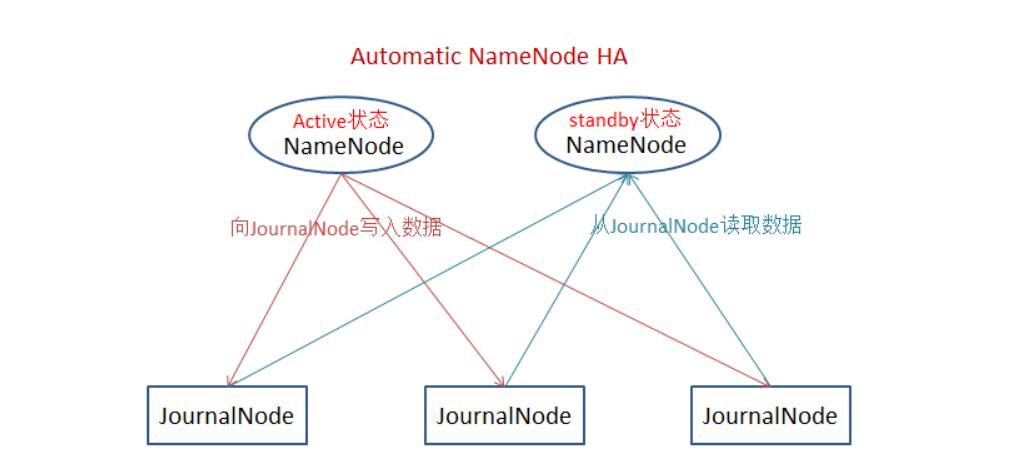

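7. Implementing HA on the Hadoop 2.3.0 Cluster

8. Implementing HDFS Federation on the Hadoop 2.3.0 Cluster

9. Implementing HDFS HA, Federation, and ResourceManager HA Together on the Hadoop 2.3.0 Cluster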
