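ceph-disk Source Code Analysis

Original article: http://www.hl10502.com/2017/06/23/ceph-disk-1/#more

ceph-disk is a Python tool for deploying OSD data and journal partitions or directories. It ships in the ceph-base package, so installing the ceph-base RPM installs it by default, for example ceph-base-10.2.7-0.el7.x86_64.rpm in the Jewel release ceph-10.2.7.

ceph-disk command line

The ceph-disk command has the following format: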

    ceph-disk [-h] [-v] [--log-stdout] [--prepend-to-path PATH] [--statedir PATH] [--sysconfdir PATH] [--setuser USER] [--setgroup GROUP] {prepare,activate,activate-lockbox,activate-block,activate-journal,activate-all,list,suppress-activate,unsuppress-activate,deactivate,destroy,zap,trigger} ...

- prepare: prepare a directory or disk for creating an OSD

- activate: activate an OSD

- activate-lockbox: activate a lockbox

- activate-block: activate an OSD through its block device

- activate-journal: activate an OSD through its journal

- activate-all: activate all tagged OSD partitions

- list: list disks, partitions, and OSDs

- suppress-activate: suppress activation of a device

- unsuppress-activate: stop suppressing activation of a device

- deactivate: deactivate an OSD

- destroy: destroy an OSD

- zap: wipe the partitions of a device

- trigger: activate any device (called by udev)

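How ceph-disk works

When an OSD is created with ceph-disk, the data partition and the journal partition are mounted automatically. Creating an OSD mainly involves two steps: prepare and activate.

Assume /dev/sdb is the data disk for the OSD and the OSD's journal partition is created on /dev/sdc, which is an SSD. The commands to create and activate the OSD are: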

- ceph-disk prepare /dev/sdb /dev/sdc

- ceph-disk activate /dev/sdb1

sgdisk command reference: https://linux.die.net/man/8/sgdisk

udevadm command reference: https://linux.die.net/man/8/udevadm

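The prepare process

- When --zap-disk is given, use sgdisk to destroy the GPT and MBR on the data disk /dev/sdb and clear all partitions

- Read osd_journal_size (default 5120 MB, configurable) and prepare the journal partition

- An SSD is likely to be shared by several OSDs, each with its own journal partition. Partitions that already exist on /dev/sdc are left unchanged: sgdisk adds a new partition on the disk as the journal without affecting the existing ones. If no partition UUID is specified, a journal_uuid is generated automatically. The typecode of the journal partition is 45b0969e-9b03-4f30-b4c6-b4b80ceff106. The journal is a symlink that points to a fixed location, which in turn points to the real journal partition; this avoids the problems caused by device name drift

- Use sgdisk to create the data partition, with --largest-new to take the largest available space, so all remaining space goes to the data partition /dev/sdb1

- Format the data partition /dev/sdb1 as xfs

- Mount the data partition /dev/sdb1 on a temporary directory

- Write four files, ceph_fsid, fsid, magic, and journal_uuid, into the temporary directory, each with its corresponding content

- Create the journal link: use ln -s to link the new journal partition on sdc to the journal file in the temporary directory

- Unmount and delete the temporary directory

- Change the typecode of the OSD partition to 4fbd7e29-9d25-41b8-afd0-062c0ceff05d

- Run udevadm trigger to force the kernel to emit device events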
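The journal link step can be sketched in a few lines. This is a minimal illustration, not the ceph-disk implementation: link_journal is a hypothetical helper, and the only facts taken from the article are the /dev/disk/by-partuuid location and the example UUID. Because the link target is derived from the partition UUID rather than from a name such as /dev/sdc2, it stays valid when device names change between boots.

```python
import os
import tempfile


def link_journal(osd_dir, journal_uuid, by_partuuid_dir="/dev/disk/by-partuuid"):
    """Create <osd_dir>/journal as a symlink to the by-partuuid entry.

    Hypothetical helper for illustration only.
    """
    target = os.path.join(by_partuuid_dir, journal_uuid)
    link = os.path.join(osd_dir, "journal")
    os.symlink(target, link)
    return link


# Demonstrate on a scratch directory instead of a mounted OSD partition.
with tempfile.TemporaryDirectory() as osd_dir:
    link = link_journal(osd_dir, "f693b826-e070-4b42-af3e-07d011994583")
    print(os.readlink(link))
```

The symlink may dangle until udev has created the by-partuuid entry; that is expected and does not prevent the link from being created.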

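The activate process

Two rules files in /lib/udev/rules.d/ are involved: 60-ceph-by-parttypeuuid.rules and 95-ceph-osd.rules.

In fact, the activate command does not need to be called explicitly. The udevadm trigger at the end of prepare forces the kernel to emit device events, and the udev event invokes ceph-disk trigger, which inspects the typecode of the partition. If the partition is a Ceph OSD data partition, ceph-disk activate /dev/sdb1 is called automatically. Partitions whose typecode marks them as a journal are activated through ceph-disk activate-journal.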

    [root@ceph ~]# cat /lib/udev/rules.d/95-ceph-osd.rules
    # OSD_UUID
    ACTION=="add", SUBSYSTEM=="block", \
      ENV{DEVTYPE}=="partition", \
      ENV{ID_PART_ENTRY_TYPE}=="4fbd7e29-9d25-41b8-afd0-062c0ceff05d", \
      OWNER:="ceph", GROUP:="ceph", MODE:="660", \
      RUN+="/usr/sbin/ceph-disk --log-stdout -v trigger /dev/$name"
    ACTION=="change", SUBSYSTEM=="block", \
      ENV{ID_PART_ENTRY_TYPE}=="4fbd7e29-9d25-41b8-afd0-062c0ceff05d", \
      OWNER="ceph", GROUP="ceph", MODE="660"
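The typecode-based dispatch described above can be sketched as a lookup table. This is a simplified assumption about what ceph-disk trigger does, not its actual code: trigger_command and SUBCOMMAND_BY_PTYPE are hypothetical names, and only the two typecodes quoted in this article are covered.

```python
# Typecodes quoted in the article.
JOURNAL_PTYPE = "45b0969e-9b03-4f30-b4c6-b4b80ceff106"
OSD_READY_PTYPE = "4fbd7e29-9d25-41b8-afd0-062c0ceff05d"

# Hypothetical table: partition typecode -> ceph-disk subcommand.
SUBCOMMAND_BY_PTYPE = {
    OSD_READY_PTYPE: "activate",
    JOURNAL_PTYPE: "activate-journal",
}


def trigger_command(dev, ptype):
    """Return the ceph-disk command line for a partition, or None if unknown."""
    subcommand = SUBCOMMAND_BY_PTYPE.get(ptype.lower())
    if subcommand is None:
        return None
    return ["ceph-disk", subcommand, dev]


print(trigger_command("/dev/sdb1", OSD_READY_PTYPE))
print(trigger_command("/dev/sdc1", JOURNAL_PTYPE))
```

A partition with any other typecode yields None here, which mirrors the idea that udev events for unrelated partitions are ignored.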

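activate then performs the following steps:

- Detect the file system type (xfs) and look up osd_mount_options_xfs and osd_fs_mount_options_xfs

- Mount /dev/sdb1 on a temporary directory

- Unmount and delete the temporary directory

- Start the OSD process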

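Source layout

ceph-disk consists of just two files:

- __init__.py: an empty initialization file

- main.py: contains every ceph-disk command operation, in more than 5,000 lines of code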

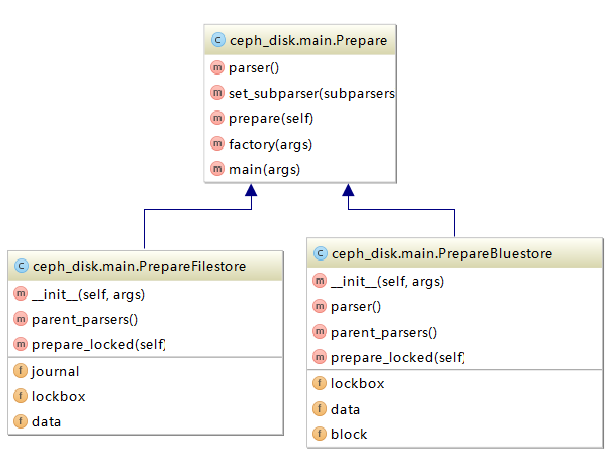

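Class diagrams

Full class diagram of main.py: ceph-disk.png

The Prepare class

Prepare implements the OSD prepare operation. Its two subclasses, PrepareBluestore and PrepareFilestore, correspond to BlueStore and FileStore. Jewel 10.2.7 currently defaults to FileStore.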

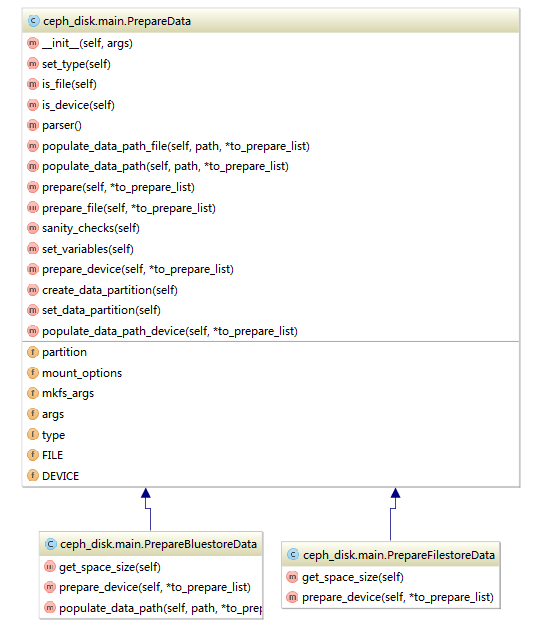

The PrepareData class

PrepareData prepares the OSD data: the data partition on disk and the journal partition. It has two subclasses, PrepareFilestoreData and PrepareBluestoreData.

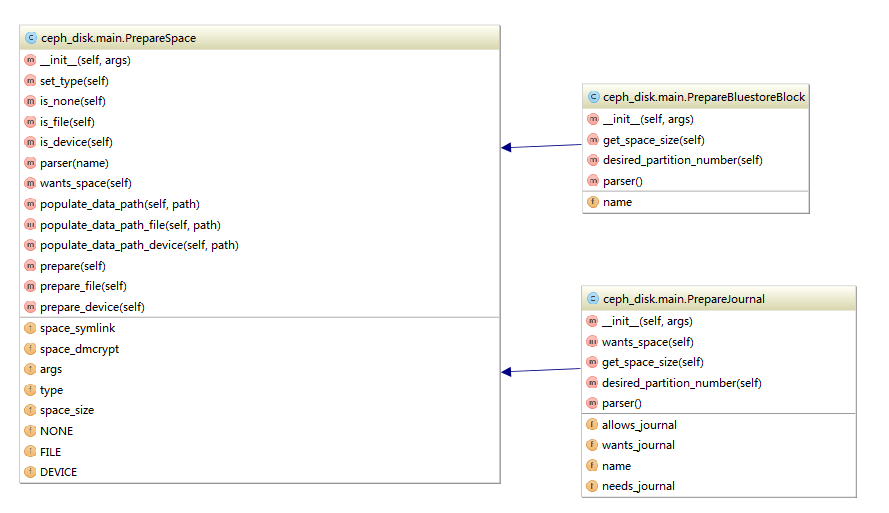

The PrepareSpace class

PrepareSpace is used to obtain the partition size. It has two subclasses, PrepareJournal and PrepareBluestoreBlock.

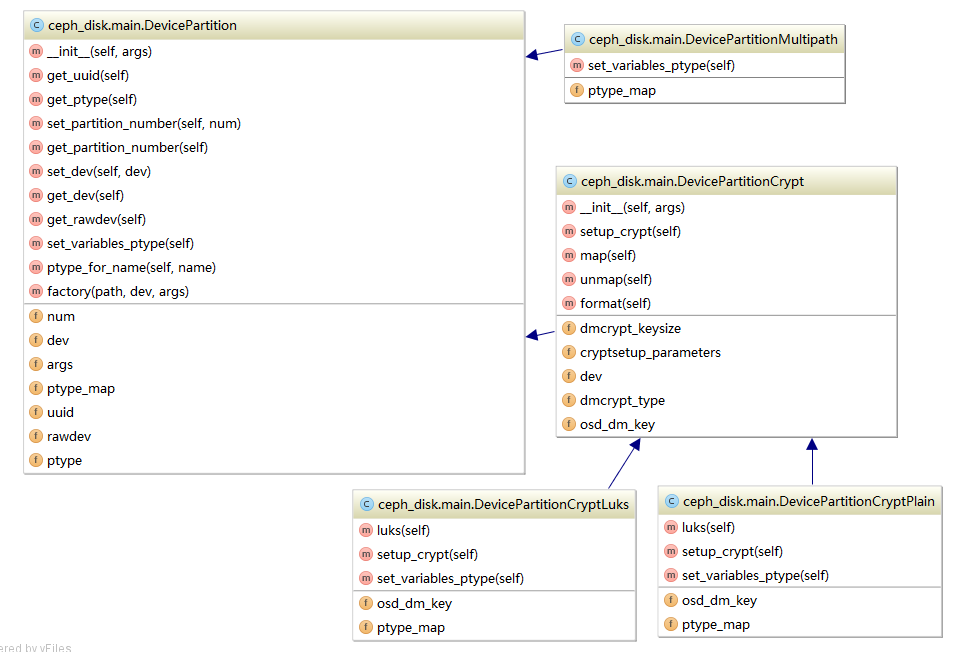

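The DevicePartition class

DevicePartition models a device partition, including its encryption mode. It has four subclasses: DevicePartitionCrypt, DevicePartitionCryptLuks, and DevicePartitionCryptPlain cover the dmcrypt variants, and DevicePartitionMultipath covers multipath devices.

OSD management

Creating an OSD consists mainly of two operations: prepare and activate.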

Entry point of main.py

    if __name__ == '__main__':
        main(sys.argv[1:])
        warned_about = {}

The main function

    def main(argv):
        # parse the command line
        args = parse_args(argv)

        # set the log level
        setup_logging(args.verbose, args.log_stdout)

        if args.prepend_to_path != '':
            path = os.environ.get('PATH', os.defpath)
            os.environ['PATH'] = args.prepend_to_path + ":" + path

        # directory holding ceph-disk.prepare.lock and
        # ceph-disk.activate.lock: /var/lib/ceph/tmp
        setup_statedir(args.statedir)

        # configuration directory: /etc/ceph/
        setup_sysconfdir(args.sysconfdir)

        global CEPH_PREF_USER
        CEPH_PREF_USER = args.setuser
        global CEPH_PREF_GROUP
        CEPH_PREF_GROUP = args.setgroup

        # run the handler of the selected subcommand
        if args.verbose:
            args.func(args)
        else:
            main_catch(args.func, args)
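The dispatch pattern used by main, where each subcommand stores its handler in args.func through set_defaults, can be shown in isolation. This is a reduced sketch with only two subcommands; cmd_prepare, cmd_activate, and the program name ceph-disk-sketch are placeholders, not part of ceph-disk.

```python
import argparse


def cmd_prepare(args):
    # Placeholder handler; the real tool would prepare the device here.
    return "prepare {}".format(args.data)


def cmd_activate(args):
    # Placeholder handler; the real tool would activate the OSD here.
    return "activate {}".format(args.path)


def parse_args(argv):
    parser = argparse.ArgumentParser("ceph-disk-sketch")
    parser.add_argument("-v", "--verbose", action="store_true")
    subparsers = parser.add_subparsers(dest="command")
    subparsers.required = True  # fail early when no subcommand is given

    prepare = subparsers.add_parser("prepare")
    prepare.add_argument("data")
    prepare.set_defaults(func=cmd_prepare)

    activate = subparsers.add_parser("activate")
    activate.add_argument("path")
    activate.set_defaults(func=cmd_activate)

    return parser.parse_args(argv)


def main(argv):
    args = parse_args(argv)
    # The selected subparser decided which function ends up in args.func.
    return args.func(args)


print(main(["prepare", "/dev/sdb"]))
print(main(["-v", "activate", "/dev/sdb1"]))
```

Keeping the handler on the parsed namespace means main never needs an if/elif chain over subcommand names; adding a subcommand only requires a new parser with its own set_defaults call.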

The parse_args function parses the subcommands

    def parse_args(argv):
        parser = argparse.ArgumentParser(
            'ceph-disk',
        )
        ...
        ...
        ...
        # parser for the prepare subcommand
        Prepare.set_subparser(subparsers)
        # parsers for the activate subcommands
        make_activate_parser(subparsers)
        make_activate_lockbox_parser(subparsers)
        make_activate_block_parser(subparsers)
        make_activate_journal_parser(subparsers)
        make_activate_all_parser(subparsers)
        make_list_parser(subparsers)
        make_suppress_parser(subparsers)
        make_deactivate_parser(subparsers)
        make_destroy_parser(subparsers)
        make_zap_parser(subparsers)
        make_trigger_parser(subparsers)

        args = parser.parse_args(argv)
        return args

The main_catch function

    def main_catch(func, args):
        try:
            func(args)
        except Error as e:
            raise SystemExit(
                '{prog}: {msg}'.format(
                    prog=args.prog,
                    msg=e,
                )
            )
        except CephDiskException as error:
            exc_name = error.__class__.__name__
            raise SystemExit(
                '{prog} {exc_name}: {msg}'.format(
                    prog=args.prog,
                    exc_name=exc_name,
                    msg=error,
                )
            )

prepare

The ceph-disk prepare command has the following format:

    ceph-disk prepare [-h] [--cluster NAME] [--cluster-uuid UUID]
                      [--osd-uuid UUID] [--dmcrypt]
                      [--dmcrypt-key-dir KEYDIR] [--prepare-key PATH]
                      [--fs-type FS_TYPE] [--zap-disk] [--data-dir]
                      [--data-dev] [--lockbox LOCKBOX]
                      [--lockbox-uuid UUID] [--journal-uuid UUID]
                      [--journal-file] [--journal-dev] [--bluestore]
                      [--block-uuid UUID] [--block-file] [--block-dev]
                      DATA [JOURNAL] [BLOCK]

The set_subparser function of the Prepare class registers the subcommand parser; the default handler is main

    @staticmethod
    def set_subparser(subparsers):
        parents = [
            Prepare.parser(),
            PrepareData.parser(),
            Lockbox.parser(),
        ]
        parents.extend(PrepareFilestore.parent_parsers())
        parents.extend(PrepareBluestore.parent_parsers())
        parser = subparsers.add_parser(
            'prepare',
            parents=parents,
            help='Prepare a directory or disk for a Ceph OSD',
        )
        parser.set_defaults(
            func=Prepare.main,
        )
        return parser

main calls the factory function

    @staticmethod
    def main(args):
        Prepare.factory(args).prepare()

factory returns PrepareFilestore by default

    @staticmethod
    def factory(args):
        if args.bluestore:
            return PrepareBluestore(args)
        else:
            return PrepareFilestore(args)

Initialization of the PrepareFilestore class

- Initializes PrepareFilestoreData, which inherits from PrepareData

- Initializes PrepareJournal

    def __init__(self, args):
        if args.dmcrypt:
            self.lockbox = Lockbox(args)
        self.data = PrepareFilestoreData(args)
        self.journal = PrepareJournal(args)

PrepareData initialization: obtain the fsid and generate a new osd_uuid

- Run /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid to obtain the fsid

- Generate a new osd_uuid

    def __init__(self, args):
        self.args = args
        self.partition = None
        self.set_type()
        if self.args.cluster_uuid is None:
            self.args.cluster_uuid = get_fsid(cluster=self.args.cluster)
        if self.args.osd_uuid is None:
            self.args.osd_uuid = str(uuid.uuid4())

PrepareJournal initialization; the class inherits from PrepareSpace

- Call check_journal_reqs to obtain and validate check-allows-journal, check-wants-journal, and check-needs-journal

- Call the parent class initializer

    def __init__(self, args):
        self.name = 'journal'
        (self.allows_journal,
         self.wants_journal,
         self.needs_journal) = check_journal_reqs(args)
        if args.journal and not self.allows_journal:
            raise Error('journal specified but not allowed by osd backend')
        super(PrepareJournal, self).__init__(args)

Initialization of the PrepareSpace class

- Call get_space_size to obtain the value of osd_journal_size

    def __init__(self, args):
        self.args = args
        self.set_type()
        self.space_size = self.get_space_size()
        if getattr(self.args, self.name + '_uuid') is None:
            setattr(self.args, self.name + '_uuid', str(uuid.uuid4()))
        self.space_symlink = None
        self.space_dmcrypt = None

The get_space_size function of the subclass PrepareJournal

- Runs /usr/bin/ceph-osd --cluster=ceph --show-config-value=osd_journal_size

    def get_space_size(self):
        return int(get_conf_with_default(
            cluster=self.args.cluster,
            variable='osd_journal_size',
        ))

Because PrepareFilestore and PrepareBluestore inherit from Prepare, the prepare function is defined in the Prepare class

    def prepare(self):
        with prepare_lock:
            self.prepare_locked()

The prepare_locked function of PrepareFilestore calls the prepare function of PrepareFilestoreData and passes PrepareJournal as the argument

    def prepare_locked(self):
        if self.data.args.dmcrypt:
            self.lockbox.prepare()
        self.data.prepare(self.journal)

The prepare function of PrepareData, the parent class of PrepareFilestoreData; for a block device it calls prepare_device

    def prepare(self, *to_prepare_list):
        if self.type == self.DEVICE:
            self.prepare_device(*to_prepare_list)
        elif self.type == self.FILE:
            self.prepare_file(*to_prepare_list)
        else:
            raise Error('unexpected type ', self.type)

The prepare_device function of the PrepareFilestoreData class

- Calls prepare_device of the parent class PrepareData

- Calls set_data_partition

- Calls populate_data_path_device

    def prepare_device(self, *to_prepare_list):
        # prepare_device of the parent class PrepareData
        super(PrepareFilestoreData, self).prepare_device(*to_prepare_list)
        for to_prepare in to_prepare_list:
            # PrepareJournal.prepare, which calls prepare_device
            # to create the journal partition
            to_prepare.prepare()
        # create the data partition
        self.set_data_partition()
        # create the OSD
        self.populate_data_path_device(*to_prepare_list)

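The prepare_device function of PrepareData

- Calls sanity_checks to verify that the device is not already in use

- Calls set_variables to set variables

- Calls zap to wipe the partitions and make the kernel pick up the change

    def prepare_device(self, *to_prepare_list):
        # validate the device
        self.sanity_checks()
        # set variables
        self.set_variables()
        if self.args.zap_disk is not None:
            # wipe the partitions and re-read the partition table
            zap(self.args.data)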

The zap function wipes the partitions and makes the kernel pick up the change. [dev] is the device, for example /dev/sdb

- /usr/sbin/sgdisk --zap-all -- [dev]

- /usr/sbin/sgdisk --clear --mbrtogpt -- [dev]

- /usr/bin/udevadm settle --timeout=600

- /usr/bin/flock -s [dev] /usr/sbin/partprobe [dev]

- /usr/bin/udevadm settle --timeout=600

    def zap(dev):
        """
        Destroy the partition table and content of a given disk.
        """
        dev = os.path.realpath(dev)
        dmode = os.stat(dev).st_mode
        if not stat.S_ISBLK(dmode) or is_partition(dev):
            raise Error('not full block device; cannot zap', dev)
        try:
            LOG.debug('Zapping partition table on %s', dev)

            # try to wipe out any GPT partition table backups.  sgdisk
            # isn't too thorough.
            lba_size = 4096
            size = 33 * lba_size
            with open(dev, 'wb') as dev_file:
                dev_file.seek(-size, os.SEEK_END)
                dev_file.write(size * b'\0')

            # wipe the partitions
            command_check_call(
                [
                    'sgdisk',
                    '--zap-all',
                    '--',
                    dev,
                ],
            )
            command_check_call(
                [
                    'sgdisk',
                    '--clear',
                    '--mbrtogpt',
                    '--',
                    dev,
                ],
            )

            # re-read the partition table
            update_partition(dev, 'zapped')
        # the excerpt in the article stops above; the handler below follows
        # the pattern used by the other sgdisk calls in this file
        except subprocess.CalledProcessError as e:
            raise Error(e)
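The first part of zap, which overwrites the last 33 * 4096 bytes to remove GPT backup structures, can be tried safely on a regular file. This is a minimal sketch under stated assumptions: wipe_tail is a hypothetical helper, the temporary file stands in for a block device, and the file is opened in r+b mode because opening a regular file with wb would truncate it.

```python
import os
import tempfile

LBA_SIZE = 4096
BACKUP_GPT_BYTES = 33 * LBA_SIZE  # same size as in the zap excerpt


def wipe_tail(path, size=BACKUP_GPT_BYTES):
    """Overwrite the last `size` bytes of `path` with zeros."""
    with open(path, "r+b") as dev_file:
        dev_file.seek(-size, os.SEEK_END)
        dev_file.write(size * b"\0")


# Build a fake "disk" filled with 0xff, twice the size of the wiped region.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"\xff" * (2 * BACKUP_GPT_BYTES))
    image = tmp.name

wipe_tail(image)

with open(image, "rb") as f:
    data = f.read()
os.remove(image)

# The head is untouched, the tail is zeroed.
print(data[:BACKUP_GPT_BYTES] == b"\xff" * BACKUP_GPT_BYTES)
print(data[-BACKUP_GPT_BYTES:] == b"\0" * BACKUP_GPT_BYTES)
```

Seeking relative to os.SEEK_END with a negative offset is what lets the code reach the backup area without knowing the device size in advance.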

The prepare function of the PrepareJournal class

    def prepare(self):
        if self.type == self.DEVICE:
            self.prepare_device()
        elif self.type == self.FILE:
            self.prepare_file()
        elif self.type == self.NONE:
            pass
        else:
            raise Error('unexpected type ', self.type)

The prepare_device function calls create_partition of the Device class to create the journal partition

    ...
    ...
    device = Device.factory(getattr(self.args, self.name), self.args)
    # create the journal partition
    num = device.create_partition(
        uuid=getattr(self.args, self.name + '_uuid'),
        name=self.name,
        size=self.space_size,
        num=num)
    ...
    ...

The create_partition function creates the journal partition

- Call ptype_tobe_for_name to get the journal typecode: 45b0969e-9b03-4f30-b4c6-b4b80ceff106

- Create the journal partition

  - /usr/sbin/sgdisk --new=2:0:+5120M --change-name=2:"ceph journal" --partition-guid=2:f693b826-e070-4b42-af3e-07d011994583 --typecode=2:45b0969e-9b03-4f30-b4c6-b4b80ceff106 --mbrtogpt -- /dev/sdb

- Make the kernel pick up the new partition

  - /usr/bin/udevadm settle --timeout=600

  - /usr/bin/flock -s /dev/sdb /usr/sbin/partprobe /dev/sdb

  - /usr/bin/udevadm settle --timeout=600

    def create_partition(self, uuid, name, size=0, num=0):
        ptype = self.ptype_tobe_for_name(name)
        if num == 0:
            num = get_free_partition_index(dev=self.path)
        if size > 0:
            new = '--new={num}:0:+{size}M'.format(num=num, size=size)
            if size > self.get_dev_size():
                LOG.error('refusing to create %s on %s' % (name, self.path))
                LOG.error('%s size (%sM) is bigger than device (%sM)'
                          % (name, size, self.get_dev_size()))
                raise Error('%s device size (%sM) is not big enough for %s'
                            % (self.path, self.get_dev_size(), name))
        else:
            new = '--largest-new={num}'.format(num=num)

        LOG.debug('Creating %s partition num %d size %d on %s',
                  name, num, size, self.path)
        command_check_call(
            [
                'sgdisk',
                new,
                '--change-name={num}:ceph {name}'.format(num=num, name=name),
                '--partition-guid={num}:{uuid}'.format(num=num, uuid=uuid),
                '--typecode={num}:{uuid}'.format(num=num, uuid=ptype),
                '--mbrtogpt',
                '--',
                self.path,
            ]
        )
        # re-read the partition table
        update_partition(self.path, 'created')
        return num
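How create_partition assembles the sgdisk command line can be checked without touching a disk. The function below is a hypothetical stand-alone rewrite that only builds the argument list and never runs it; the flags and example values are the ones shown in the article.

```python
def sgdisk_new_partition(dev, num, name, part_uuid, ptype, size_mb=0):
    """Build (but do not run) the sgdisk argv for a new Ceph partition.

    size_mb > 0 requests a fixed-size partition; otherwise the largest
    free block is used, as for the data partition.
    """
    if size_mb > 0:
        new = "--new={num}:0:+{size}M".format(num=num, size=size_mb)
    else:
        new = "--largest-new={num}".format(num=num)
    return [
        "sgdisk",
        new,
        "--change-name={num}:ceph {name}".format(num=num, name=name),
        "--partition-guid={num}:{uuid}".format(num=num, uuid=part_uuid),
        "--typecode={num}:{ptype}".format(num=num, ptype=ptype),
        "--mbrtogpt",
        "--",
        dev,
    ]


journal_cmd = sgdisk_new_partition(
    "/dev/sdb", 2, "journal",
    "f693b826-e070-4b42-af3e-07d011994583",
    "45b0969e-9b03-4f30-b4c6-b4b80ceff106",
    size_mb=5120,
)
print(" ".join(journal_cmd))
```

Because the command is passed as a list, the space in the partition name "ceph journal" needs no shell quoting; quotes are only required when the same command is typed by hand.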

The set_data_partition function calls create_data_partition to create the data partition

    def set_data_partition(self):
        if is_partition(self.args.data):
            LOG.debug('OSD data device %s is a partition',
                      self.args.data)
            self.partition = DevicePartition.factory(
                path=None, dev=self.args.data, args=self.args)
            ptype = self.partition.get_ptype()
            ready = Ptype.get_ready_by_name('osd')
            if ptype not in ready:
                LOG.warning('incorrect partition UUID: %s, expected %s'
                            % (ptype, str(ready)))
        else:
            LOG.debug('Creating osd partition on %s',
                      self.args.data)
            self.partition = self.create_data_partition()

create_data_partition calls create_partition of the Device class to create the data partition and make the kernel pick it up

- /usr/sbin/sgdisk --largest-new=1 --change-name=1:"ceph data" --partition-guid=1:1b9521d7-ee24-4043-96a7-1a3140bbff27 --typecode=1:89c57f98-2fe5-4dc0-89c1-f3ad0ceff2be --mbrtogpt -- /dev/sdb

- /usr/bin/udevadm settle --timeout=600

- /usr/bin/flock -s /dev/sdb /usr/sbin/partprobe /dev/sdb

- /usr/bin/udevadm settle --timeout=600

    def create_data_partition(self):
        device = Device.factory(self.args.data, self.args)
        partition_number = 1
        device.create_partition(uuid=self.args.osd_uuid,
                                name='data',
                                num=partition_number,
                                size=self.get_space_size())
        return device.get_partition(partition_number)

The populate_data_path_device function creates the OSD

- Format the data partition as xfs

- Create a temporary directory and mount the partition on it

- Write the OSD files ceph_fsid, fsid, magic, and journal_uuid through temporary files

- Run restorecon to restore the SELinux security context

- Unmount and delete the temporary directory

- Change the typecode of the OSD partition to 4fbd7e29-9d25-41b8-afd0-062c0ceff05d, which marks it as ready

- Make the kernel pick up the partition change

- Force the kernel to emit device events

    def populate_data_path_device(self, *to_prepare_list):
        partition = self.partition

        if isinstance(partition, DevicePartitionCrypt):
            partition.map()

        try:
            args = [
                'mkfs',
                '-t',
                self.args.fs_type,
            ]
            if self.mkfs_args is not None:
                args.extend(self.mkfs_args.split())
                if self.args.fs_type == 'xfs':
                    args.extend(['-f'])  # always force
            else:
                args.extend(MKFS_ARGS.get(self.args.fs_type, []))
            args.extend([
                '--',
                partition.get_dev(),
            ])
            try:
                LOG.debug('Creating %s fs on %s',
                          self.args.fs_type, partition.get_dev())
                # format the data partition as xfs
                command_check_call(args)
            except subprocess.CalledProcessError as e:
                raise Error(e)

            # mount on a temporary directory
            path = mount(dev=partition.get_dev(),
                         fstype=self.args.fs_type,
                         options=self.mount_options)

            try:
                # write ceph_fsid, fsid, magic, and journal_uuid
                self.populate_data_path(path, *to_prepare_list)
            finally:
                # run restorecon to restore the security context
                path_set_context(path)
                # unmount and delete the temporary directory
                unmount(path)
        finally:
            if isinstance(partition, DevicePartitionCrypt):
                partition.unmap()

        if not is_partition(self.args.data):
            try:
                # change the OSD partition typecode to
                # 4fbd7e29-9d25-41b8-afd0-062c0ceff05d (ready)
                command_check_call(
                    [
                        'sgdisk',
                        '--typecode=%d:%s' %
                        (partition.get_partition_number(),
                         partition.ptype_for_name('osd')),
                        '--',
                        self.args.data,
                    ],
                )
            except subprocess.CalledProcessError as e:
                raise Error(e)
            # re-read the partition table
            update_partition(self.args.data, 'prepared')
            # force the kernel to emit device events
            command_check_call(['udevadm', 'trigger',
                                '--action=add',
                                '--sysname-match',
                                os.path.basename(partition.rawdev)])

activate

The ceph-disk activate command has the following format:

    ceph-disk activate [-h] [--mount] [--activate-key PATH]
                       [--mark-init INITSYSTEM] [--no-start-daemon]
                       [--dmcrypt] [--dmcrypt-key-dir KEYDIR]
                       [--reactivate]
                       PATH

The make_activate_parser function registers the activate subcommand; the default handler is main_activate.

- Call mount_activate to mount the OSD

- Get the mount point and check the journal file

- Start the OSD process

    def main_activate(args):
        cluster = None
        osd_id = None

        LOG.info('path = ' + str(args.path))
        if not os.path.exists(args.path):
            raise Error('%s does not exist' % args.path)

        if is_suppressed(args.path):
            LOG.info('suppressed activate request on %s', args.path)
            return

        # lock file: /var/lib/ceph/tmp/ceph-disk.activate.lock
        with activate_lock:
            mode = os.stat(args.path).st_mode
            if stat.S_ISBLK(mode):
                if (is_partition(args.path) and
                        (get_partition_type(args.path) ==
                         PTYPE['mpath']['osd']['ready']) and
                        not is_mpath(args.path)):
                    raise Error('%s is not a multipath block device' %
                                args.path)
                # mount the data partition
                (cluster, osd_id) = mount_activate(
                    dev=args.path,
                    activate_key_template=args.activate_key_template,
                    init=args.mark_init,
                    dmcrypt=args.dmcrypt,
                    dmcrypt_key_dir=args.dmcrypt_key_dir,
                    reactivate=args.reactivate,
                )
                # get the mount point
                osd_data = get_mount_point(cluster, osd_id)

            elif stat.S_ISDIR(mode):
                (cluster, osd_id) = activate_dir(
                    path=args.path,
                    activate_key_template=args.activate_key_template,
                    init=args.mark_init,
                )
                osd_data = args.path

            else:
                raise Error('%s is not a directory or block device' % args.path)

            # exit with 0 if the journal device is not up, yet
            # journal device will do the activation
            # check the journal file
            osd_journal = '{path}/journal'.format(path=osd_data)
            if os.path.islink(osd_journal) and not os.access(osd_journal, os.F_OK):
                LOG.info("activate: Journal not present, not starting, yet")
                return

            if (not args.no_start_daemon and args.mark_init == 'none'):
                command_check_call(
                    [
                        'ceph-osd',
                        '--cluster={cluster}'.format(cluster=cluster),
                        '--id={osd_id}'.format(osd_id=osd_id),
                        '--osd-data={path}'.format(path=osd_data),
                        '--osd-journal={journal}'.format(journal=osd_journal),
                    ],
                )

            if (not args.no_start_daemon and
                    args.mark_init not in (None, 'none')):
                # start the OSD process
                start_daemon(
                    cluster=cluster,
                    osd_id=osd_id,
                )
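The journal check in main_activate relies on the difference between os.path.islink, which looks at the link itself, and os.access, which follows it. A small sketch on a scratch directory shows the behaviour; journal_missing is a hypothetical name, and the file fake-journal-device stands in for the journal partition.

```python
import os
import tempfile


def journal_missing(osd_data):
    """True when <osd_data>/journal is a symlink whose target does not exist."""
    journal = os.path.join(osd_data, "journal")
    return os.path.islink(journal) and not os.access(journal, os.F_OK)


with tempfile.TemporaryDirectory() as osd_data:
    target = os.path.join(osd_data, "fake-journal-device")
    os.symlink(target, os.path.join(osd_data, "journal"))

    # The link exists but its target does not: activation would be deferred.
    print(journal_missing(osd_data))

    # Once the target appears, the same check passes.
    open(target, "w").close()
    print(journal_missing(osd_data))
```

In the article's flow, the deferred case is later resolved when the journal partition shows up and udev triggers activate-journal.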

The mount_activate function

    def mount_activate(
        dev,
        activate_key_template,
        init,
        dmcrypt,
        dmcrypt_key_dir,
        reactivate=False,
    ):

        if dmcrypt:
            # get the partition UUID
            part_uuid = get_partition_uuid(dev)
            dev = dmcrypt_map(dev, dmcrypt_key_dir)
        try:
            # detect the file system type (xfs)
            fstype = detect_fstype(dev=dev)
        except (subprocess.CalledProcessError,
                TruncatedLineError,
                TooManyLinesError) as e:
            raise FilesystemTypeError(
                'device {dev}'.format(dev=dev),
                e,
            )

        # TODO always using mount options from cluster=ceph for
        # now; see http://tracker.newdream.net/issues/3253
        # look up osd_mount_options_xfs
        mount_options = get_conf(
            cluster='ceph',
            variable='osd_mount_options_{fstype}'.format(
                fstype=fstype,
            ),
        )

        if mount_options is None:
            # look up osd_fs_mount_options_xfs
            mount_options = get_conf(
                cluster='ceph',
                variable='osd_fs_mount_options_{fstype}'.format(
                    fstype=fstype,
                ),
            )

        # remove whitespaces from mount_options
        if mount_options is not None:
            mount_options = "".join(mount_options.split())

        # mount on a temporary directory
        path = mount(dev=dev, fstype=fstype, options=mount_options)

        # check if the disk is deactive, change the journal owner, group
        # mode for correct user and group.
        if os.path.exists(os.path.join(path, 'deactive')):
            # logging to syslog will help us easy to know udev triggered failure
            if not reactivate:
                unmount(path)
                # we need to unmap again because dmcrypt map will create again
                # on bootup stage (due to deactivate)
                if '/dev/mapper/' in dev:
                    part_uuid = dev.replace('/dev/mapper/', '')
                    dmcrypt_unmap(part_uuid)
                LOG.info('OSD deactivated! reactivate with: --reactivate')
                raise Error('OSD deactivated! reactivate with: --reactivate')
            # flag to activate a deactive osd.
            deactive = True
        else:
            deactive = False

        osd_id = None
        cluster = None
        try:
            # activate the OSD
            (osd_id, cluster) = activate(path, activate_key_template, init)

            # Now active successfully
            # If we got reactivate and deactive, remove the deactive file
            if deactive and reactivate:
                os.remove(os.path.join(path, 'deactive'))
                LOG.info('Remove `deactive` file.')

            # check if the disk is already active, or if something else is already
            # mounted there
            active = False
            other = False
            src_dev = os.stat(path).st_dev
            # check whether the OSD is already active
            # (mounted on the correct directory)
            try:
                dst_dev = os.stat((STATEDIR + '/osd/{cluster}-{osd_id}').format(
                    cluster=cluster,
                    osd_id=osd_id)).st_dev
                if src_dev == dst_dev:
                    active = True
                else:
                    parent_dev = os.stat(STATEDIR + '/osd').st_dev
                    if dst_dev != parent_dev:
                        other = True
                    elif os.listdir(get_mount_point(cluster, osd_id)):
                        LOG.info(get_mount_point(cluster, osd_id) +
                                 " is not empty, won't override")
                        other = True

            except OSError:
                pass

            if active:
                LOG.info('%s osd.%s already mounted in position; unmounting ours.'
                         % (cluster, osd_id))
                # unmount and delete the temporary directory
                unmount(path)
            elif other:
                raise Error('another %s osd.%s already mounted in position '
                            '(old/different cluster instance?); unmounting ours.'
                            % (cluster, osd_id))
            else:
                move_mount(
                    dev=dev,
                    path=path,
                    cluster=cluster,
                    osd_id=osd_id,
                    fstype=fstype,
                    mount_options=mount_options,
                )
            return cluster, osd_id

        except:
            LOG.error('Failed to activate')
            unmount(path)
            raise
        finally:
            # remove our temp dir
            if os.path.exists(path):
                os.rmdir(path)
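The "already mounted in position" decision in mount_activate compares st_dev values, which identify the file system a path lives on. The decision table can be isolated as a pure function. This is a sketch: classify_position and its string results are hypothetical, and dst_dev=None stands for the OSError branch where the final mount point does not exist.

```python
def classify_position(src_dev, dst_dev, parent_dev, dst_has_entries):
    """Classify the final OSD mount point relative to our temporary mount.

    src_dev         st_dev of the temporary mount
    dst_dev         st_dev of <statedir>/osd/<cluster>-<id>, or None if absent
    parent_dev      st_dev of <statedir>/osd
    dst_has_entries True if the final directory is not empty
    """
    if dst_dev is None:
        return "free"      # nothing there yet, safe to move the mount
    if src_dev == dst_dev:
        return "active"    # same file system: this OSD is already in place
    if dst_dev != parent_dev:
        return "other"     # a different file system is mounted there
    if dst_has_entries:
        return "other"     # plain directory, but not empty: do not override
    return "free"


print(classify_position(10, 10, 1, True))    # our partition is already mounted
print(classify_position(10, 11, 1, False))   # something else is mounted
print(classify_position(10, 1, 1, False))    # empty directory on the parent fs
```

Comparing the destination with its parent directory is what distinguishes "a mount point with something mounted on it" from "an ordinary directory", without parsing /proc/mounts.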

Managing OSDs manually

Preparing an OSD

Taking /usr/sbin/ceph-disk -v prepare --zap-disk --cluster ceph --fs-type xfs -- /dev/sdb as an example, the ceph-disk prepare command runs the following steps.

Check the journal parameters:

    [root@ceph-231 ~]# /usr/bin/ceph-osd --check-allows-journal -i 0 --cluster ceph --setuser ceph --setgroup ceph
    yes
    [root@ceph-231 ~]# /usr/bin/ceph-osd --check-wants-journal -i 0 --cluster ceph --setuser ceph --setgroup ceph
    yes
    [root@ceph-231 ~]# /usr/bin/ceph-osd --check-needs-journal -i 0 --cluster ceph --setuser ceph --setgroup ceph
    no

Check the mounted devices. /dev/sdb is not mounted, so it can be used to create the OSD:

    [root@ceph-231 ~]# cat /proc/mounts
    ...
    ...
    /dev/sda1 / ext3 rw,relatime,errors=continue,user_xattr,acl,barrier=1,data=ordered 0 0
    ...
    ...
    /dev/sda5 /var/log ext3 rw,relatime,errors=continue,user_xattr,acl,barrier=1,data=ordered 0 0
    ...
    ...

Wipe the partitions:

    [root@ceph-231 ~]# /usr/sbin/sgdisk --zap-all -- /dev/sdb
    [root@ceph-231 ~]# /usr/sbin/sgdisk --clear --mbrtogpt -- /dev/sdb
    [root@ceph-231 ~]# /usr/bin/udevadm settle --timeout=600
    [root@ceph-231 ~]# /usr/bin/flock -s /dev/sdb /usr/sbin/partprobe /dev/sdb
    [root@ceph-231 ~]# /usr/bin/udevadm settle --timeout=600

Get osd_journal_size (default 5120 MB):

    [root@ceph-231 ~]# /usr/bin/ceph-osd --cluster=ceph --show-config-value=osd_journal_size
    5120

Generate journal_uuid:

    [root@ceph-231 ~]# uuidgen
    f693b826-e070-4b42-af3e-07d011994583

Create the journal partition, replacing {num} with a concrete number:

- If the data disk and the journal partition are on the same disk, {num} is 2

- If the data disk and the journal partition are on different disks, inspect the partition table of the journal disk; {num} is the number of existing partitions plus 1

- Run parted --machine -- /dev/sdb print to inspect the partitions of the journal disk

    [root@ceph-231 ~]# /usr/sbin/sgdisk --new={num}:0:+5120M --change-name={num}:"ceph journal" --partition-guid={num}:f693b826-e070-4b42-af3e-07d011994583 --typecode={num}:45b0969e-9b03-4f30-b4c6-b4b80ceff106 --mbrtogpt -- /dev/sdb
    [root@ceph-231 ~]# /usr/bin/udevadm settle --timeout=600
    [root@ceph-231 ~]# /usr/bin/flock -s /dev/sdb /usr/sbin/partprobe /dev/sdb
    [root@ceph-231 ~]# /usr/bin/udevadm settle --timeout=600

Generate the UUID of the data partition:

    [root@ceph-231 ~]# uuidgen
    1b9521d7-ee24-4043-96a7-1a3140bbff27

Create the data partition:

    [root@ceph-231 ~]# /usr/sbin/sgdisk --largest-new=1 --change-name=1:"ceph data" --partition-guid=1:1b9521d7-ee24-4043-96a7-1a3140bbff27 --typecode=1:89c57f98-2fe5-4dc0-89c1-f3ad0ceff2be --mbrtogpt -- /dev/sdb
    [root@ceph-231 ~]# /usr/bin/udevadm settle --timeout=600
    [root@ceph-231 ~]# /usr/bin/flock -s /dev/sdb /usr/sbin/partprobe /dev/sdb
    [root@ceph-231 ~]# /usr/bin/udevadm settle --timeout=600

Format the data partition as xfs:

    [root@ceph-231 ~]# /usr/sbin/mkfs -t xfs -f -i size=2048 -- /dev/sdb1

Look up the mkfs and mount options:

    [root@ceph-231 ~]# /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mkfs_options_xfs
    [root@ceph-231 ~]# /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mkfs_options_xfs
    [root@ceph-231 ~]# /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mount_options_xfs
    [root@ceph-231 ~]# /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mount_options_xfs

All four values (osd_mkfs_options_xfs, osd_fs_mkfs_options_xfs, osd_mount_options_xfs, and osd_fs_mount_options_xfs) are empty, so the default xfs mount options noatime,inode64 apply. Mount the partition on a temporary directory:

    [root@ceph-231 ~]# mkdir /var/lib/ceph/tmp/mnt.uCrLyH
    [root@ceph-231 ~]# /usr/bin/mount -t xfs -o noatime,inode64 -- /dev/sdb1 /var/lib/ceph/tmp/mnt.uCrLyH
    [root@ceph-231 ~]# /usr/sbin/restorecon /var/lib/ceph/tmp/mnt.uCrLyH

Get the cluster fsid:

    [root@ceph-231 ~]# /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid
    ad3bdf51-ae79-44c3-b634-0c9f4995bbf5

Write the cluster fsid to a temporary file and rename it to ceph_fsid:

    [root@ceph-231 ~]# vi /var/lib/ceph/tmp/mnt.uCrLyH/ceph_fsid.1308.tmp
    [root@ceph-231 ~]# /usr/sbin/restorecon -R /var/lib/ceph/tmp/mnt.uCrLyH/ceph_fsid.1308.tmp
    [root@ceph-231 ~]# /usr/bin/chown -R ceph:ceph /var/lib/ceph/tmp/mnt.uCrLyH/ceph_fsid.1308.tmp
    [root@ceph-231 ~]# mv /var/lib/ceph/tmp/mnt.uCrLyH/ceph_fsid.1308.tmp /var/lib/ceph/tmp/mnt.uCrLyH/ceph_fsid

Generate osd_uuid:

    [root@ceph-231 ~]# uuidgen
    410fa9bc-cdbf-469e-a08a-c246048d5e9b

Write osd_uuid to a temporary file and rename it to fsid:

    [root@ceph-231 ~]# vi /var/lib/ceph/tmp/mnt.uCrLyH/fsid.1308.tmp
    [root@ceph-231 ~]# /usr/sbin/restorecon -R /var/lib/ceph/tmp/mnt.uCrLyH/fsid.1308.tmp
    [root@ceph-231 ~]# /usr/bin/chown -R ceph:ceph /var/lib/ceph/tmp/mnt.uCrLyH/fsid.1308.tmp
    [root@ceph-231 ~]# mv /var/lib/ceph/tmp/mnt.uCrLyH/fsid.1308.tmp /var/lib/ceph/tmp/mnt.uCrLyH/fsid

Write the magic file through a temporary file; its content is ceph osd volume v026:

    [root@ceph-231 ~]# vi /var/lib/ceph/tmp/mnt.uCrLyH/magic.1308.tmp
    [root@ceph-231 ~]# /usr/sbin/restorecon -R /var/lib/ceph/tmp/mnt.uCrLyH/magic.1308.tmp
    [root@ceph-231 ~]# /usr/bin/chown -R ceph:ceph /var/lib/ceph/tmp/mnt.uCrLyH/magic.1308.tmp
    [root@ceph-231 ~]# mv /var/lib/ceph/tmp/mnt.uCrLyH/magic.1308.tmp /var/lib/ceph/tmp/mnt.uCrLyH/magic

Look up the UUID of the journal partition sdb2:

    [root@ceph-231 ~]# ll /dev/disk/by-partuuid/ | grep sdb2
    lrwxrwxrwx 1 root root 10 Jun 27 19:21 f693b826-e070-4b42-af3e-07d011994583 -> ../../sdb2

Write journal_uuid through a temporary file:

    [root@ceph-231 ~]# vi /var/lib/ceph/tmp/mnt.uCrLyH/journal_uuid.1308.tmp
    [root@ceph-231 ~]# /usr/sbin/restorecon -R /var/lib/ceph/tmp/mnt.uCrLyH/journal_uuid.1308.tmp
    [root@ceph-231 ~]# /usr/bin/chown -R ceph:ceph /var/lib/ceph/tmp/mnt.uCrLyH/journal_uuid.1308.tmp
    [root@ceph-231 ~]# mv /var/lib/ceph/tmp/mnt.uCrLyH/journal_uuid.1308.tmp /var/lib/ceph/tmp/mnt.uCrLyH/journal_uuid
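Each of the four files above is written under a temporary name (for example magic.1308.tmp, where 1308 looks like a process ID) and then renamed into place. That write-then-rename pattern can be sketched as follows; write_one_line_sketch is a hypothetical helper, and the restorecon and chown steps of the walkthrough are left out because they need root and SELinux.

```python
import os
import tempfile


def write_one_line_sketch(parent, name, text):
    """Write `text` plus a newline to parent/name via a temp file and rename."""
    path = os.path.join(parent, name)
    tmp = "{path}.{pid}.tmp".format(path=path, pid=os.getpid())
    with open(tmp, "w") as f:
        f.write(text + "\n")
        f.flush()
        os.fsync(f.fileno())  # make sure the data is on disk before renaming
    os.rename(tmp, path)      # atomic replacement on POSIX file systems
    return path


with tempfile.TemporaryDirectory() as mnt:
    magic = write_one_line_sketch(mnt, "magic", "ceph osd volume v026")
    with open(magic) as f:
        print(repr(f.read()))
    print(sorted(os.listdir(mnt)))
```

Because the rename is atomic, a reader of the OSD directory sees either no file or the complete file, never a half-written one.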

Create the journal link:

    [root@ceph-231 ~]# ln -s /dev/disk/by-partuuid/f693b826-e070-4b42-af3e-07d011994583 /var/lib/ceph/tmp/mnt.uCrLyH/journal

Run restorecon to restore the SELinux security context:

    [root@ceph-231 ~]# /usr/sbin/restorecon -R /var/lib/ceph/tmp/mnt.uCrLyH
    [root@ceph-231 ~]# /usr/bin/chown -R ceph:ceph /var/lib/ceph/tmp/mnt.uCrLyH

Unmount and delete the temporary directory:

    [root@ceph-231 ~]# /bin/umount -- /var/lib/ceph/tmp/mnt.uCrLyH
    [root@ceph-231 ~]# rm -rf /var/lib/ceph/tmp/mnt.uCrLyH

Change the typecode of the OSD partition to 4fbd7e29-9d25-41b8-afd0-062c0ceff05d, which marks it as ready:

    [root@ceph-231 ~]# /usr/sbin/sgdisk --typecode=1:4fbd7e29-9d25-41b8-afd0-062c0ceff05d -- /dev/sdb
    [root@ceph-231 ~]# /usr/bin/udevadm settle --timeout=600
    [root@ceph-231 ~]# /usr/bin/flock -s /dev/sdb /usr/sbin/partprobe /dev/sdb
    [root@ceph-231 ~]# /usr/bin/udevadm settle --timeout=600

Force the kernel to emit device events:

    [root@ceph-231 ~]# /usr/bin/udevadm trigger --action=add --sysname-match sdb1

Activating an OSD

Taking /usr/sbin/ceph-disk -v activate --mark-init systemd --mount /dev/sdb1 as an example, the ceph-disk activate command runs the following steps.

Detect the file system type (xfs):

    [root@ceph-231 ~]# /sbin/blkid -p -s TYPE -o value -- /dev/sdb1
    xfs

Look up osd_mount_options_xfs:

    [root@ceph-231 ~]# /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mount_options_xfs

Look up osd_fs_mount_options_xfs:

    [root@ceph-231 ~]# /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mount_options_xfs
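The lookup order for the mount options (first osd_mount_options_xfs, then osd_fs_mount_options_xfs, then a built-in default) can be sketched as a small function. This is an illustration under assumptions: the conf dictionary stands in for the ceph-conf lookups, resolve_mount_options and DEFAULT_MOUNT_OPTIONS are hypothetical names, and only the xfs default noatime,inode64 is taken from this article.

```python
# Hypothetical default table; only the xfs entry comes from the article.
DEFAULT_MOUNT_OPTIONS = {"xfs": "noatime,inode64"}


def resolve_mount_options(conf, fstype):
    """Pick mount options for `fstype` from `conf`, falling back to defaults.

    `conf` maps option names to strings; a missing key means "not set".
    """
    options = conf.get("osd_mount_options_" + fstype)
    if options is None:
        options = conf.get("osd_fs_mount_options_" + fstype)
    if options is None:
        return DEFAULT_MOUNT_OPTIONS.get(fstype, "")
    # Same normalisation as in mount_activate: drop all whitespace.
    return "".join(options.split())


print(resolve_mount_options({}, "xfs"))
print(resolve_mount_options({"osd_fs_mount_options_xfs": "rw, noatime"}, "xfs"))
```

Stripping whitespace matters because the result is passed to mount as a single -o argument, where a space would split the option list.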

Both osd_mount_options_xfs and osd_fs_mount_options_xfs are empty, so the default xfs mount options noatime,inode64 apply. Mount the partition on the temporary directory /var/lib/ceph/tmp/mnt.GoeBOu:

    [root@ceph-231 ~]# mkdir /var/lib/ceph/tmp/mnt.GoeBOu
    [root@ceph-231 ~]# /usr/bin/mount -t xfs -o noatime,inode64 -- /dev/sdb1 /var/lib/ceph/tmp/mnt.GoeBOu
    [root@ceph-231 ~]# /usr/sbin/restorecon /var/lib/ceph/tmp/mnt.GoeBOu

Unmount and delete the temporary directory:

    [root@ceph-231 ~]# /bin/umount -- /var/lib/ceph/tmp/mnt.GoeBOu
    [root@ceph-231 ~]# rm -rf /var/lib/ceph/tmp/mnt.GoeBOu

Start the OSD process; 0 is the OSD id:

    [root@ceph-231 ~]# /usr/bin/systemctl disable ceph-osd@0
    [root@ceph-231 ~]# /usr/bin/systemctl enable --runtime ceph-osd@0
    [root@ceph-231 ~]# /usr/bin/systemctl start ceph-osd@0
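For scripting the last step across several OSDs, the three systemctl calls can be generated from the OSD id. This sketch only builds the command lists and does not run them; systemd_start_commands is a hypothetical helper, and the order mirrors the walkthrough above.

```python
def systemd_start_commands(osd_id):
    """Return the systemctl command lines used to start ceph-osd@<osd_id>."""
    unit = "ceph-osd@{}".format(osd_id)
    return [
        ["systemctl", "disable", unit],
        ["systemctl", "enable", "--runtime", unit],
        ["systemctl", "start", unit],
    ]


for command in systemd_start_commands(0):
    print(" ".join(command))
```

Each list can be handed to subprocess.check_call on a host where the unit exists.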
