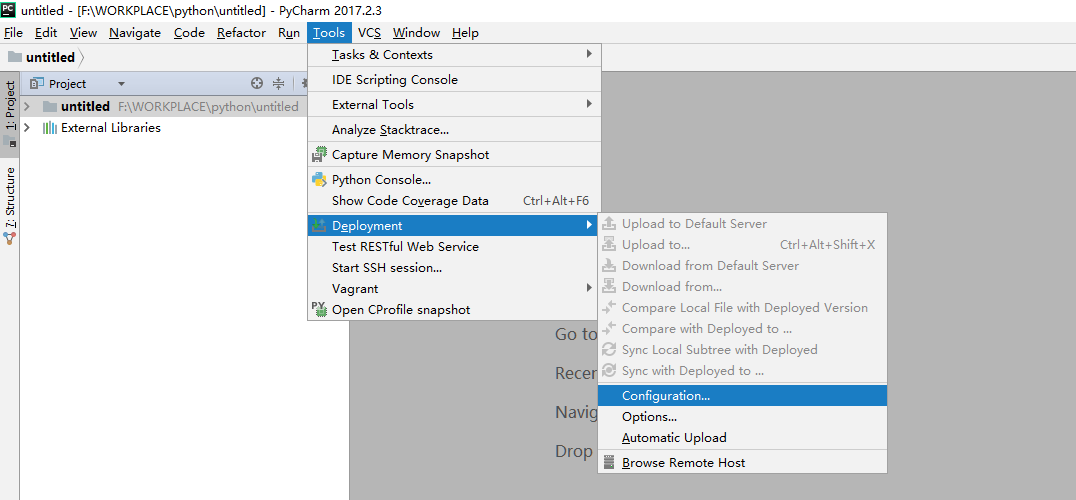

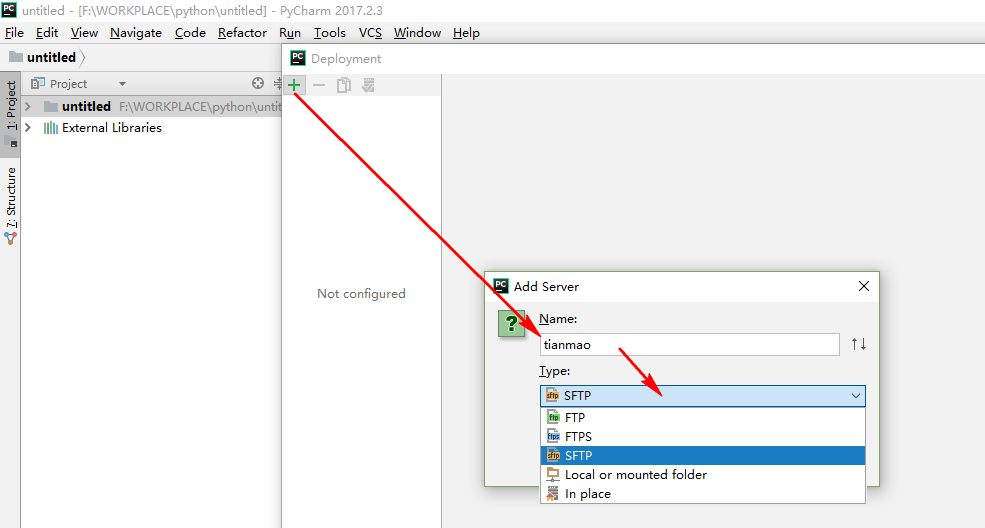

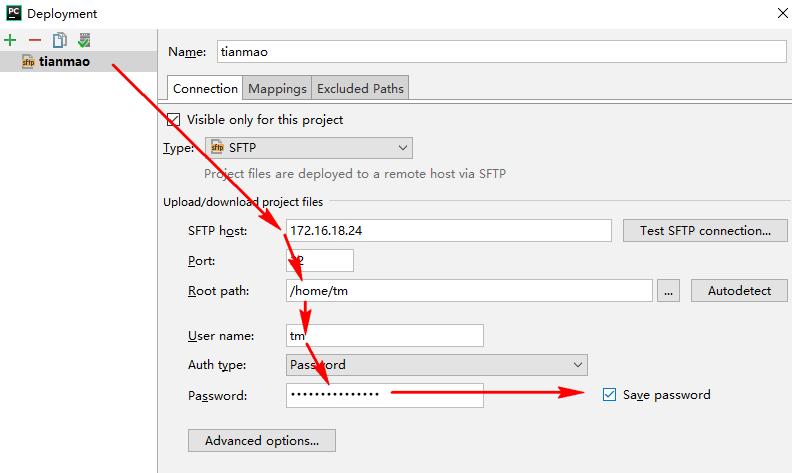

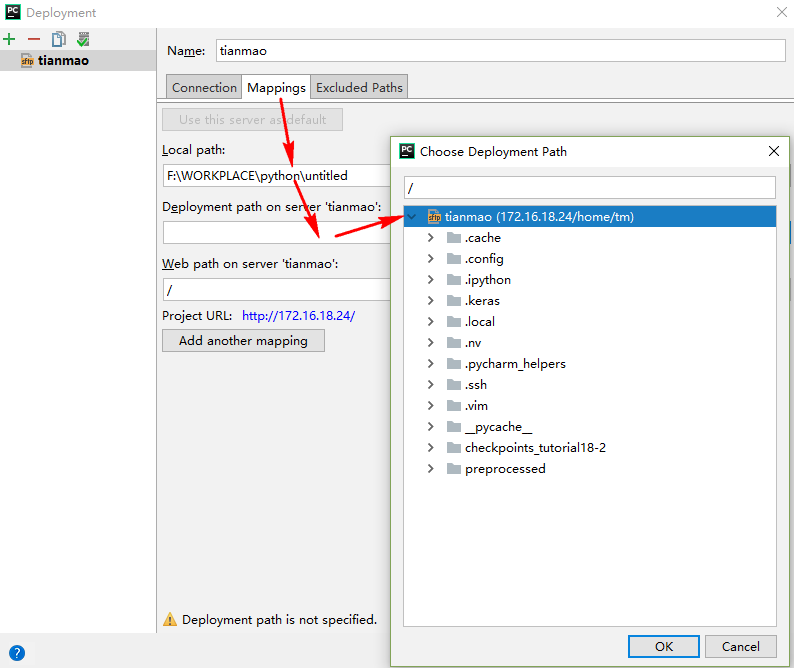

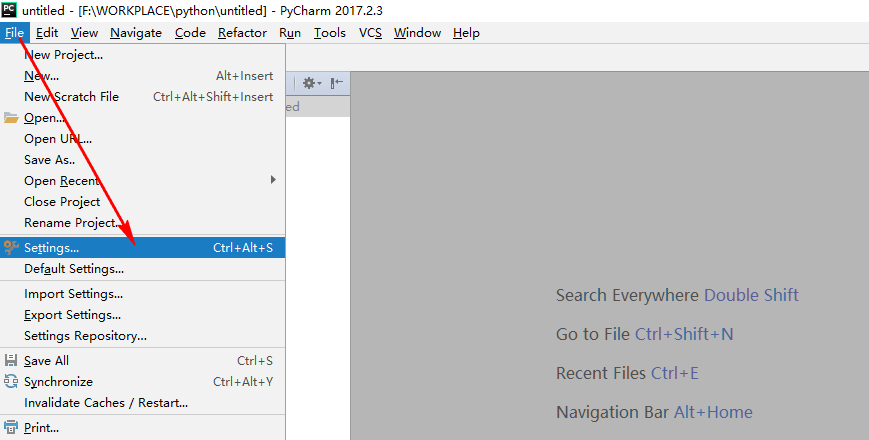

Configuring a remote interpreter in PyCharm

1. Configure SFTP

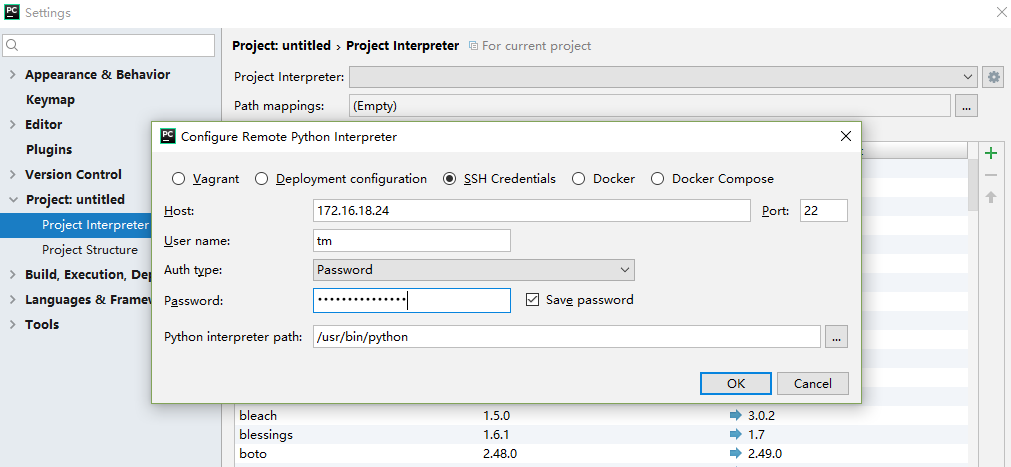

2. Configure the interpreter

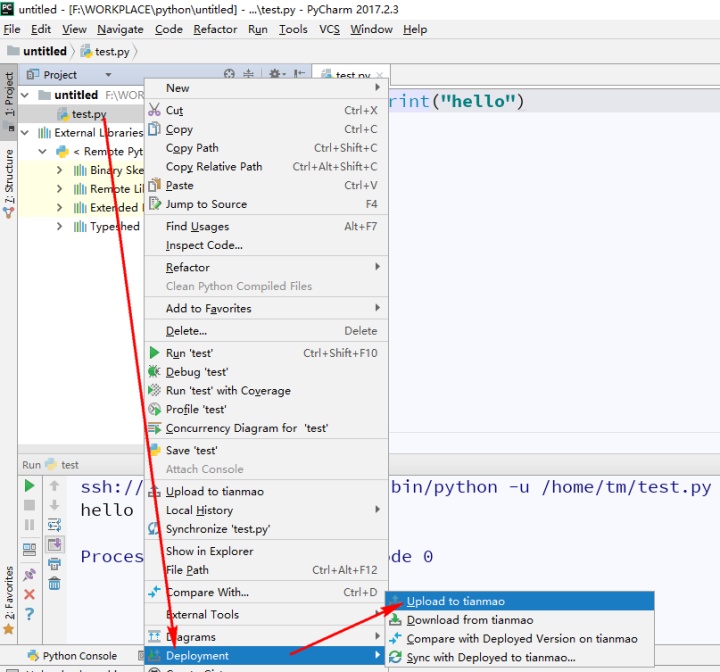

3. Deploy the code

4. Run

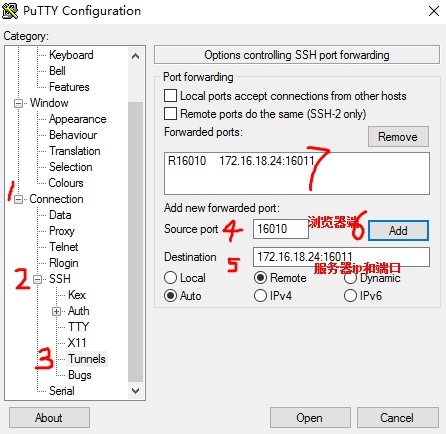

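Setting up PuTTY to view TensorBoard

My local machine has limited compute, so I train TensorFlow models on a server. To view TensorBoard in my local browser, I do the following:

The key step is to set up port forwarding (an SSH tunnel) in PuTTY: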

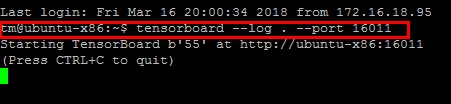

Log in to the server with PuTTY and start TensorBoard with:

tensorboard --logdir . --port 16011

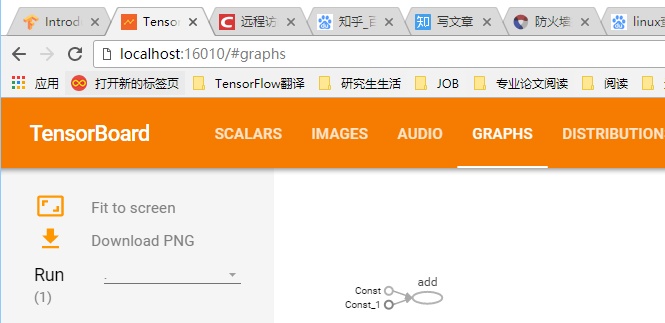

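Then open localhost:16010 in the local browser to reach TensorBoard (the PuTTY tunnel forwards local port 16010 to port 16011 on the server).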
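If the page does not load, first check whether anything is listening on the local end of the tunnel. The following is a minimal sketch that assumes the local port 16010 used above. `port_is_open` is a hypothetical helper and is not part of TensorBoard or PuTTY.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host could not be resolved.
        return False


if __name__ == "__main__":
    # 16010 is the local end of the PuTTY tunnel that forwards to TensorBoard on the server.
    print(port_is_open("localhost", 16010))
```

If this prints False while TensorBoard is running on the server, the tunnel itself is probably the problem (for example, the PuTTY session is closed).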

IDEA插件编写

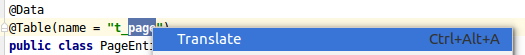

- 选取单词右键有道翻译

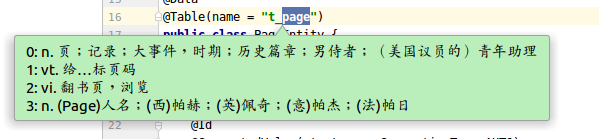

- Result

- Right-click a selected word

- Translation result

- Code

- Main program

package -

- Configure plugin.xml

<actions>

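Using git

- Stop pushing files that should be ignored

# Write the .gitignore file. For project paths, do not use "./folder" to mean "starting from the current directory"; just write "folder".

# Remove everything from the index (the files stay on disk) so that the new .gitignore rules take effect
git rm -r --cached .

git add .

git commit -m 'update .gitignore'

- Branches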

- Create a new branch and switch to it

git checkout -b panda

- List local branches

git branch

- View the branch graph (these options are often combined, e.g. git log --graph --decorate --oneline --all)

git log --graph

git log --decorate

git log --oneline

git log --simplify-by-decoration

git log --all

git log --help

- Merge the develop branch into master

git add .

git commit -m 'describe your change'

git push

git checkout master

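# If checkout fails (for example because of uncommitted changes), you may need to run git stash first

git merge develop  # merge the develop branch into master

git push  # push the merged local master to the remote master

GitHub tips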

- 条件检索

xxx in:name,readme,description

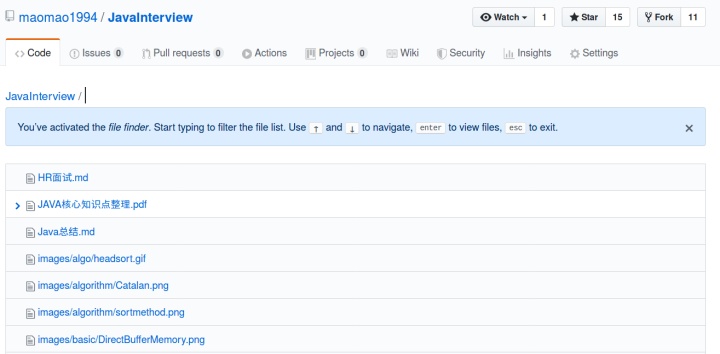

- 搜索 t

- 高亮代码

高亮1行,地址后面紧跟#L数字:代码地址#L13第十三行高亮

高亮多行,地址后面紧跟#L数字-L数字2:代码地址#L13-L20高亮13到20行
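You can build both tricks into a URL programmatically. The following is a minimal sketch with hypothetical helper names. It assumes GitHub's web search accepts the query in a `q` parameter and `type=repositories` to restrict results to repositories.

```python
from typing import Optional, Sequence
from urllib.parse import urlencode


def repo_search_url(term: str, fields: Sequence[str] = ("name", "readme", "description")) -> str:
    """Build a GitHub search URL for `term in:<fields>`, restricted to repositories."""
    query = "%s in:%s" % (term, ",".join(fields))
    return "https://github.com/search?" + urlencode({"q": query, "type": "repositories"})


def highlight_url(file_url: str, start: int, end: Optional[int] = None) -> str:
    """Append the #L anchor that highlights one line or an inclusive range of lines."""
    if end is None or end == start:
        return "%s#L%d" % (file_url, start)
    if end < start:
        raise ValueError("end must be >= start")
    return "%s#L%d-L%d" % (file_url, start, end)


# Hypothetical file URL, used only for illustration.
FILE = "https://github.com/OWNER/REPO/blob/master/README.md"
print(highlight_url(FILE, 13))      # .../README.md#L13
print(highlight_url(FILE, 13, 20))  # .../README.md#L13-L20
print(repo_search_url("xxx"))
```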

Common Cloudera commands

To upgrade the JDK: rm the old JDK, update /etc/profile, then stop the services and start them again.

# Stop the services

service cloudera-scm-agent stop

service cloudera-scm-server stop

service hadoop-hdfs-datanode stop

service hadoop-hdfs-journalnode stop

service hadoop-hdfs-namenode stop

service hadoop-hdfs-secondarynamenode stop

service hadoop-httpfs stop

service hadoop-mapreduce-historyserver stop

service hadoop-yarn-nodemanager stop

service hadoop-yarn-proxyserver stop

service hadoop-yarn-resourcemanager stop

service hbase-master stop

service hbase-regionserver stop

service hbase-rest stop

service hbase-solr-indexer stop

service hbase-thrift stop

service hive-metastore stop

service hive-server2 stop

service impala-catalog stop

service impala-server stop

service impala-state-store stop

service oozie stop

service solr-server stop

service spark-history-server stop

service sqoop2-server stop

service sqoop-metastore stop

service zookeeper-server stop

# Restart

service cloudera-scm-agent start

service cloudera-scm-server start

# Note: after restarting, wait until all services have fully started before using them
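Typing 27 stop commands by hand is error-prone. The following is a minimal sketch that generates them from a list. It assumes the same SysV `service` names as above. By default it does a dry run that only prints the commands; running them for real needs root. The helper names are hypothetical.

```python
import subprocess
from typing import List

# Services stopped before the JDK upgrade, in the order listed above.
STOP_ORDER: List[str] = [
    "cloudera-scm-agent", "cloudera-scm-server",
    "hadoop-hdfs-datanode", "hadoop-hdfs-journalnode", "hadoop-hdfs-namenode",
    "hadoop-hdfs-secondarynamenode", "hadoop-httpfs", "hadoop-mapreduce-historyserver",
    "hadoop-yarn-nodemanager", "hadoop-yarn-proxyserver", "hadoop-yarn-resourcemanager",
    "hbase-master", "hbase-regionserver", "hbase-rest", "hbase-solr-indexer", "hbase-thrift",
    "hive-metastore", "hive-server2",
    "impala-catalog", "impala-server", "impala-state-store",
    "oozie", "solr-server", "spark-history-server",
    "sqoop2-server", "sqoop-metastore", "zookeeper-server",
]
# Only the Cloudera Manager agent and server are started again, as in the commands above.
START_ORDER: List[str] = ["cloudera-scm-agent", "cloudera-scm-server"]


def service_commands(action: str, services: List[str]) -> List[List[str]]:
    """Build one `service <name> <action>` argument list per service."""
    return [["service", name, action] for name in services]


def run_all(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them one by one."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=False: a service that is not installed on this host reports an
            # error, and the loop moves on instead of aborting.
            subprocess.run(cmd, check=False)


if __name__ == "__main__":
    run_all(service_commands("stop", STOP_ORDER) + service_commands("start", START_ORDER))
```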

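Using Docker

- Common Docker commands and tips

- Check the folders shared with the host machine

- Start a container

- Use docker run to create and start a new container

- Start an existing container

- docker start cdh

- Enter the container

- docker exec -it cdh /bin/bash

- Copy a file into the container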

- docker cp '/home/mao/jdk-8u201-linux-x64.tar.gz' cdh:/home

2. Building my first Docker application stack

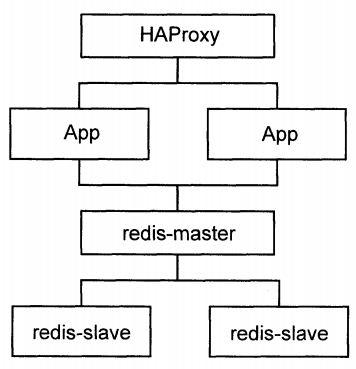

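Overview

The figure shows the structure of the Docker application stack I built. I mainly followed the book "Docker容器与容器云" (Docker Containers and Container Cloud, 2nd edition) by the SEL lab at Zhejiang University. Because of version differences, some examples in the book could not be reproduced exactly. The configuration files in particular differ.

The software versions we used are:

| Software | Version |
| --- | --- |
| HAProxy | 1.9.0 (2018/12/19) |
| Redis | 5.0.3 |
| Django | 1.10.4 |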

- Images and containers

# Pull the images

sudo docker pull ubuntu

sudo docker pull django

sudo docker pull haproxy

sudo docker pull redis

# List the images

sudo docker images

# Start the Redis containers

sudo docker run -it --name redis-master redis /bin/bash

# --link gives redis-master the alias master; once the container starts, master's IP is added to /etc/hosts

sudo docker run -it --name redis-slave1 --link redis-master:master redis /bin/bash

sudo docker run -it --name redis-slave2 --link redis-master:master redis /bin/bash

# Start the Django containers

sudo docker run -it --name APP1 --link redis-master:db -v ~/Projects/Django/APP1:/usr/src/app django /bin/bash

sudo docker run -it --name APP2 --link redis-master:db -v ~/Projects/Django/APP2:/usr/src/app django /bin/bash

# Start the HAProxy container

sudo docker run -it --name HAProxy --link APP1:APP1 --link APP2:APP2 -p 6301:6301 -v ~/Projects/HAProxy:/tmp haproxy /bin/bash

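# Show the mounted volume information (replace ID with the container ID)

sudo docker inspect ID | grep -i "volume"

# Check the IP address (see NetworkSettings.IPAddress in the output)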

sudo docker inspect 4267e591b78e

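- Modify the Redis configuration file (start from the template on the official website)

Search the template for the following settings and change them.

- Changes on the master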

daemonize yes

pidfile /var/run/redis.pid

# The master must bind its own IP, otherwise the slave nodes cannot connect

bind 127.0.0.1 172.17.2

- Changes on the slave nodes

daemonize yes

pidfile /var/run/redis.pid

replicaof master 6379

- Start the Redis nodes

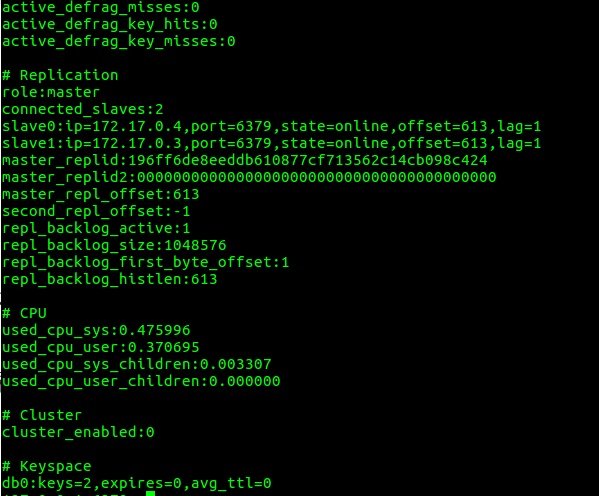

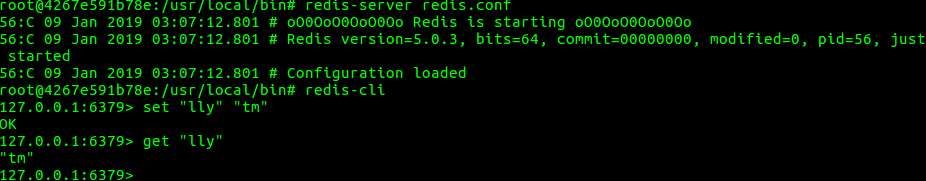

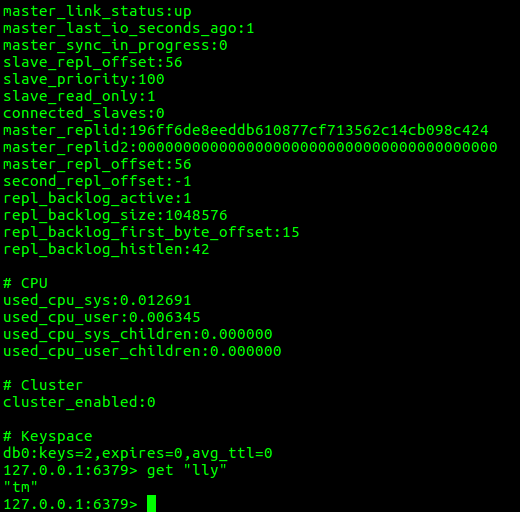

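Start the master with redis-server and the config file, then connect with the redis-cli client and run info to check its status. Two slave nodes are connected.

On the master, set a message with key "lly" and value "tm". Getting the key returns the value.

On a slave node, start the client with redis-cli and run info. master_link_status is now up. Earlier I had not bound the IP in the master's config file, so this status stayed down. That pitfall cost me half a day. Running get with the key in redis-cli returns the value, which shows that the master has replicated the data to the slave.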
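You can script the same check instead of reading the info output by eye. The following is a minimal sketch that parses the raw text printed by `info replication`, for example as captured from `redis-cli info replication`. The helper names are hypothetical. If you use redis-py instead, `Redis.info()` already returns a parsed dict.

```python
from typing import Dict


def parse_info(text: str) -> Dict[str, str]:
    """Parse the raw text of the Redis INFO command into a flat dict of field -> value."""
    result: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and section headers such as "# Replication".
        if not line or line.startswith("#"):
            continue
        # Split on the first colon only; values like slave0 contain more punctuation.
        key, sep, value = line.partition(":")
        if sep:
            result[key] = value
    return result


def replica_is_linked(info: Dict[str, str]) -> bool:
    """True when this node is a replica and its link to the master is up."""
    return info.get("role") == "slave" and info.get("master_link_status") == "up"
```

On the master, `parse_info(...)["connected_slaves"]` should read `"2"` for the setup above. On each slave, `replica_is_linked` turns the master_link_status check into a boolean.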

Django

- Create the project

# Inside the container

cd /usr/src/app

mkdir dockerweb

cd dockerweb

django-admin.py startproject redisweb

cd redisweb

python manage.py startapp helloworld

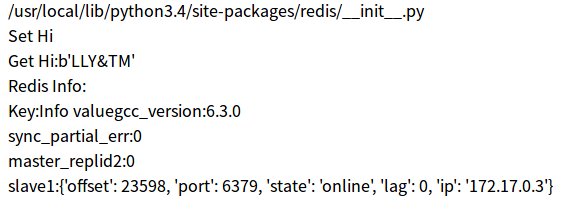

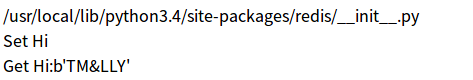

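- On the host machine, write the code. The two snippets below are nearly identical. Each writes a key-value pair to Redis, and requests to different APPs return different pages.

# APP1
from django.shortcuts import render
from django.http import HttpResponse
import redis

# Create your views here.
def hello(request):
    html = redis.__file__
    html += "<br>"
    # decode_responses=True returns str instead of bytes, so the page shows LLY&TM rather than b'LLY&TM'
    r = redis.Redis(host='db', port=6379, db=0, decode_responses=True)
    info = r.info()
    html += "Set Hi<br>"
    r.set("LLY", "LLY&TM")
    html += "Get Hi:%s<br>" % r.get('LLY')
    html += "Redis Info:<br>"
    html += "Key:Info value<br>"
    for key in info:
        html += "%s:%s<br>" % (key, info[key])
    return HttpResponse(html)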

# APP2
from django.shortcuts import render
from django.http import HttpResponse
import redis

# Create your views here.
def hello(request):
    html = redis.__file__
    html += "<br>"
    # decode_responses=True returns str instead of bytes, so the page shows TM&LLY rather than b'TM&LLY'
    r = redis.Redis(host='db', port=6379, db=0, decode_responses=True)
    info = r.info()
    html += "Set Hi<br>"
    r.set("TM", "TM&LLY")
    html += "Get Hi:%s<br>" % r.get('TM')
    html += "Redis Info:<br>"
    html += "Key:Info value<br>"
    for key in info:
        html += "%s:%s<br>" % (key, info[key])
    return HttpResponse(html)

- Configuration changes:

# In settings.py, add helloworld to INSTALLED_APPS
INSTALLED_APPS = [
    'django.contrib.admin',
    'django.contrib.auth',
    'django.contrib.contenttypes',
    'django.contrib.sessions',
    'django.contrib.messages',
    'django.contrib.staticfiles',
    'helloworld',
]

# Changes to urls.py
from django.conf.urls import url
from django.contrib import admin
from helloworld.views import hello

urlpatterns = [
    url(r'^admin/', admin.site.urls),
    url(r'^helloworld$', hello),
]

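- After the steps above, run the following in /usr/src/app/dockerweb/redisweb:

python manage.py makemigrations

python manage.py migrate

# Then start the development server on all interfaces, on the port HAProxy expects (8001 for APP1, 8002 for APP2)

python manage.py runserver 0.0.0.0:8001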

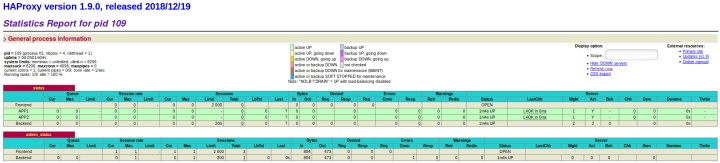
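The two views above differ only in the key and value they write. One way to avoid the duplication is to move the shared logic into a helper that accepts any client with `set`, `get`, and `info` methods. This is a sketch of a possible refactor, not the original code. `render_redis_page` and the in-memory stand-in are hypothetical. The `redis.__file__` line from the original views is omitted.

```python
from typing import Dict, Optional


class InMemoryStore:
    """Hypothetical stand-in exposing the three redis.Redis methods the views use."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def set(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def info(self) -> Dict[str, object]:
        return {"keys": len(self._data)}


def render_redis_page(client, key: str, value: str) -> str:
    """Write key=value, read it back, and list the INFO fields, as both views do."""
    parts = ["Set Hi<br>"]
    client.set(key, value)
    parts.append("Get Hi:%s<br>" % client.get(key))
    parts.append("Redis Info:<br>")
    parts.append("Key:Info value<br>")
    for k, v in client.info().items():
        parts.append("%s:%s<br>" % (k, v))
    return "".join(parts)


# views.py in APP1 would then shrink to (APP2 passes "TM", "TM&LLY"):
# def hello(request):
#     r = redis.Redis(host="db", port=6379, db=0, decode_responses=True)
#     return HttpResponse(render_redis_page(r, "LLY", "LLY&TM"))
```

Because the helper takes the client as a parameter, you can exercise it with `InMemoryStore()` without a running Redis.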

HAProxy

I based the HAProxy configuration on a reference guide. Our final configuration file is as follows; start HAProxy with haproxy -f haproxy.cfg.

global
    log 127.0.0.1 local0
    maxconn 4096
    chroot /usr/local/sbin
    daemon
    nbproc 4
    pidfile /usr/local/sbin/haproxy.pid

defaults
    log 127.0.0.1 local3
    mode http
    option dontlognull
    option redispatch
    retries 2
    maxconn 2000
    balance roundrobin
    timeout connect 5000ms
    timeout client 50000ms
    timeout server 50000ms

listen status
    bind 0.0.0.0:6301
    stats enable
    stats uri /haproxy-stats
    server APP1 APP1:8001 check inter 2000 rise 2 fall 5
    server APP2 APP2:8002 check inter 2000 rise 2 fall 5

listen admin_status
    bind 0.0.0.0:32795
    mode http
    stats uri /haproxy
    # the realm is read as a single word, so the space must be escaped with a backslash
    stats realm Global\ statistics
    stats auth admin:admin
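The `balance roundrobin` and `check inter 2000 rise 2 fall 5` settings decide which backend answers each request. The following is a simplified Python model, not HAProxy's implementation. It ignores weights and the 2000 ms check interval, and it assumes every server starts as UP. A server leaves the rotation after `fall` consecutive failed checks and returns after `rise` consecutive successful ones. The class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Server:
    name: str
    up: bool = True       # simplifying assumption: every server starts as UP
    ok_streak: int = 0    # consecutive successful health checks
    fail_streak: int = 0  # consecutive failed health checks


class RoundRobin:
    """Toy model of `balance roundrobin` combined with `check rise R fall F`."""

    def __init__(self, names: List[str], rise: int = 2, fall: int = 5) -> None:
        self.servers = [Server(n) for n in names]
        self.rise, self.fall = rise, fall
        self._next = 0

    def record_check(self, name: str, healthy: bool) -> None:
        """Feed one health-check result and update the server's UP/DOWN state."""
        s = next(srv for srv in self.servers if srv.name == name)
        if healthy:
            s.ok_streak, s.fail_streak = s.ok_streak + 1, 0
            if not s.up and s.ok_streak >= self.rise:
                s.up = True
        else:
            s.fail_streak, s.ok_streak = s.fail_streak + 1, 0
            if s.up and s.fail_streak >= self.fall:
                s.up = False

    def pick(self) -> Optional[str]:
        """Return the next UP server in rotation, or None if all are DOWN."""
        for _ in range(len(self.servers)):
            s = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if s.up:
                return s.name
        return None
```

With both backends healthy, `pick()` alternates APP1, APP2, APP1, and so on. This alternation is why fast refreshing shows both pages. After five failed checks APP2 drops out, and after two good checks it returns.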

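Final result

I open the same address, "http://172.17.0.8:6301/helloworld", in the browser and refresh quickly. The page changes between refreshes, which means the response can come from either APP1 or APP2.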

- Response from APP1:

- Response from APP2:

- View the status on the HAProxy admin page at "http://172.17.0.8:32795/haproxy"
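Instead of refreshing by hand, you can count which backend served each response. APP1 echoes the value LLY&TM and APP2 echoes TM&LLY, so the page body identifies the backend. The following is a minimal sketch. The helper names are hypothetical, and `get` is injectable so that you can test the logic without the cluster.

```python
import urllib.request
from collections import Counter
from typing import Callable


def fetch(url: str) -> str:
    """Download a page as text."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8", errors="replace")


def which_app(body: str) -> str:
    """Classify a response by the value each app writes to Redis."""
    if "LLY&TM" in body:
        return "APP1"
    if "TM&LLY" in body:
        return "APP2"
    return "unknown"


def count_backends(url: str, n: int = 10, get: Callable[[str], str] = fetch) -> Counter:
    """Request `url` n times and tally which backend answered."""
    return Counter(which_app(get(url)) for _ in range(n))
```

Against the running stack, `count_backends("http://172.17.0.8:6301/helloworld")` should show both APP1 and APP2 when round-robin is working.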

Building a Kafka cluster with Docker

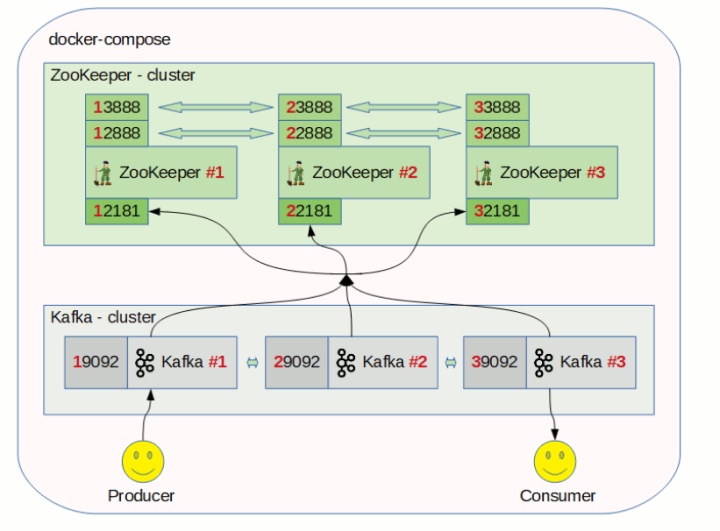

Creating the cluster with docker-compose

- Architecture

- The docker-compose.yml file

# Note: Confluent Platform 8.x images no longer support ZooKeeper mode, so pin a 7.x tag if :latest fails
version: '2'
services:
  zookeeper-1:
    image: confluentinc/cp-zookeeper:latest
    hostname: zookeeper-1
    ports:
      - "12181:12181"
    environment:
      ZOOKEEPER_SERVER_ID: 1
      ZOOKEEPER_CLIENT_PORT: 12181
      ZOOKEEPER_TICK_TIME: 2000
      ZOOKEEPER_INIT_LIMIT: 5
      ZOOKEEPER_SYNC_LIMIT: 2
      ZOOKEEPER_SERVERS: zookeeper-1:12888:13888;zookeeper-2:22888:23888;zookeeper-3:32888:33888
  zookeeper-2:
    image: confluentinc/cp-zookeeper:latest
    hostname: zookeeper-2
    ports:
      - "22181:22181"
    environment:
      ZOOKEEPER_SERVER_ID: 2
      ZOOKEEPER_CLIENT_PORT: 22181
      ZOOKEEPER_TICK_TIME: 2000
      ZOOKEEPER_INIT_LIMIT: 5
      ZOOKEEPER_SYNC_LIMIT: 2
      ZOOKEEPER_SERVERS: zookeeper-1:12888:13888;zookeeper-2:22888:23888;zookeeper-3:32888:33888
  zookeeper-3:
    image: confluentinc/cp-zookeeper:latest
    hostname: zookeeper-3
    ports:
      - "32181:32181"
    environment:
      ZOOKEEPER_SERVER_ID: 3
      ZOOKEEPER_CLIENT_PORT: 32181
      ZOOKEEPER_TICK_TIME: 2000
      ZOOKEEPER_INIT_LIMIT: 5
      ZOOKEEPER_SYNC_LIMIT: 2
      ZOOKEEPER_SERVERS: zookeeper-1:12888:13888;zookeeper-2:22888:23888;zookeeper-3:32888:33888
  kafka-1:
    image: confluentinc/cp-kafka:latest
    hostname: kafka-1
    ports:
      - "19092:19092"
    depends_on:
      - zookeeper-1
      - zookeeper-2
      - zookeeper-3
    environment:
      KAFKA_BROKER_ID: 1
      # each ZooKeeper node listens on its own client port
      KAFKA_ZOOKEEPER_CONNECT: zookeeper-1:12181,zookeeper-2:22181,zookeeper-3:32181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka-1:19092
  kafka-2:
    image: confluentinc/cp-kafka:latest
    hostname: kafka-2
    ports:
      - "29092:29092"
    depends_on:
      - zookeeper-1
      - zookeeper-2
      - zookeeper-3
    environment:
      KAFKA_BROKER_ID: 2
      KAFKA_ZOOKEEPER_CONNECT: zookeeper-1:12181,zookeeper-2:22181,zookeeper-3:32181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka-2:29092
  kafka-3:
    image: confluentinc/cp-kafka:latest
    hostname: kafka-3
    ports:
      - "39092:39092"
    depends_on:
      - zookeeper-1
      - zookeeper-2
      - zookeeper-3
    environment:
      KAFKA_BROKER_ID: 3
      KAFKA_ZOOKEEPER_CONNECT: zookeeper-1:12181,zookeeper-2:22181,zookeeper-3:32181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka-3:39092

- Start

- docker-compose up
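Each ZooKeeper node in this compose file uses its own client port (12181, 22181, 32181). The connection strings are repeated in every Kafka and ZooKeeper service, so they can easily drift apart. The following is a minimal sketch that derives `KAFKA_ZOOKEEPER_CONNECT` and `ZOOKEEPER_SERVERS` from a single table. The helper names are hypothetical; the ports are the ones from this docker-compose.yml.

```python
from typing import Dict, Tuple

# Client ports per ZooKeeper node (ZOOKEEPER_CLIENT_PORT above).
ZK_CLIENT_PORTS: Dict[str, int] = {
    "zookeeper-1": 12181,
    "zookeeper-2": 22181,
    "zookeeper-3": 32181,
}
# (peer port, leader-election port) per node, as listed in ZOOKEEPER_SERVERS above.
ZK_QUORUM_PORTS: Dict[str, Tuple[int, int]] = {
    "zookeeper-1": (12888, 13888),
    "zookeeper-2": (22888, 23888),
    "zookeeper-3": (32888, 33888),
}


def zookeeper_connect(client_ports: Dict[str, int]) -> str:
    """Comma-separated host:clientPort list for KAFKA_ZOOKEEPER_CONNECT."""
    return ",".join("%s:%d" % (host, port) for host, port in client_ports.items())


def zookeeper_servers(quorum_ports: Dict[str, Tuple[int, int]]) -> str:
    """Semicolon-separated host:peerPort:electionPort list for ZOOKEEPER_SERVERS."""
    return ";".join("%s:%d:%d" % (host, peer, election)
                    for host, (peer, election) in quorum_ports.items())


print(zookeeper_connect(ZK_CLIENT_PORTS))
print(zookeeper_servers(ZK_QUORUM_PORTS))
```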

Installing the client tool

sudo apt-get install kafkacat

测试

- 修改客户端的hosts,添加kafka节点的ip信息

- 172.20.0.6 kafka-1

172.20.0.5 kafka-2

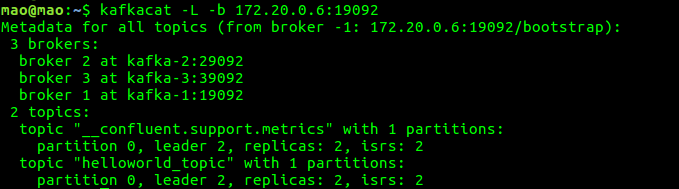

172.20.0.7 kafka-3 - 查看集群的信息

- kafkacat -L -b kafka-1:19092

- Inspect ZooKeeper

- zookeeper-shell 127.0.0.1:12181

ls /

# List the broker IDs

ls /brokers/ids

# List the topics

ls /brokers/topics

# Show a broker's registration info (the broker IDs in this cluster are 1, 2 and 3)

get /brokers/ids/1

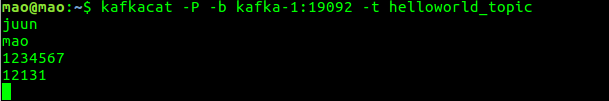

- Start a producer

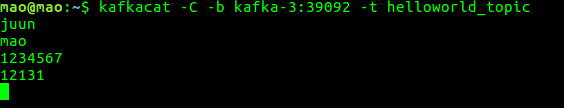

- Start a consumer

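This article covers configuring a remote interpreter in PyCharm, setting up PuTTY to view TensorBoard locally, and writing an IDEA plugin. It also covers git usage (ignoring files, branch management) and GitHub tips. It then covers Docker: common commands, docker-compose, and building Django, Redis, HAProxy and Kafka clusters with Docker. It also includes common Cloudera commands and GitHub code highlighting.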
