1. Dropout: A Simple Way to Prevent Neural Networks from Overfitting

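The abstract points out that overfitting is a serious problem for deep neural networks. Large networks are also slow to use, which makes it impractical to fight overfitting the traditional way, by combining the predictions of many different models. The paper therefore proposes Dropout. Its key idea is to randomly drop units (along with their connections) from the network during training.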
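A minimal sketch of what "randomly dropping units" means for one layer's activations during training. Each unit is kept with retention probability `p_keep` and zeroed otherwise, using an independent Bernoulli draw per unit. The function name and signature are hypothetical, chosen for illustration. Following the paper's formulation, no rescaling is applied at training time.

```python
import random

def dropout_train(activations, p_keep, rng):
    """Hypothetical helper: training-time dropout for one layer.

    Each unit is kept with probability p_keep (the retention probability)
    and set to zero otherwise. No rescaling is done here; in the paper's
    formulation the compensation happens at test time instead.
    """
    if not 0.0 < p_keep <= 1.0:
        raise ValueError("p_keep must be in (0, 1]")
    # r_j ~ Bernoulli(p_keep): 1 means unit j survives in this thinned network
    mask = [1 if rng.random() < p_keep else 0 for _ in activations]
    thinned = [a * r for a, r in zip(activations, mask)]
    return thinned, mask

rng = random.Random(0)
y = [0.5, 1.2, -0.3, 2.0, 0.7]
y_thinned, mask = dropout_train(y, p_keep=0.5, rng=rng)
```

A fresh mask is drawn for every training case, so each case effectively trains a different thinned network. Many libraries use the "inverted" variant instead. For example, PyTorch's `torch.nn.Dropout` takes the drop probability and scales the surviving activations by 1/(1 - p) during training, so the test-time network needs no weight scaling.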

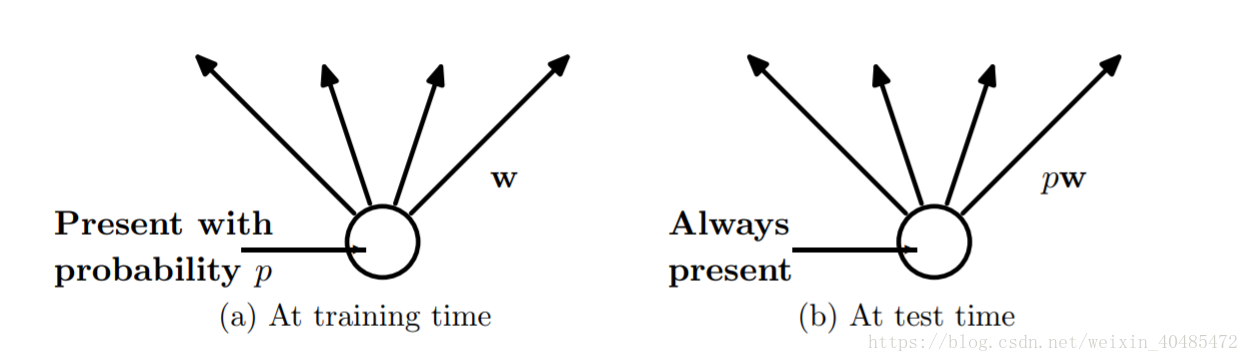

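During training, this amounts to sampling from an exponential number of different "thinned" networks. At test time, it approximates averaging the predictions of all these networks (the ensemble idea). The paper reports that this reduces overfitting effectively and works better than other regularization methods.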

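The paper also mentions other ways to prevent overfitting:

1. early stopping (based on performance on the validation set);
2. weight penalties (L1 or L2 regularization);
3. soft weight sharing.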

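A network with n units can be thinned into 2^n possible networks. All of these share the same weights, so the total number of parameters is still O(n^2) or less, and training does not become more expensive in parameters. At test time, a single network without dropout is used. Its weights are scaled-down versions of the trained weights: each outgoing weight of a unit is multiplied by the retention probability p, which is the probability that the unit was kept during training (not the probability that it was dropped).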
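The weight-scaling rule can be checked exactly for a single linear unit. The sketch below enumerates all 2^n dropout masks on the inputs and weights each mask by its probability under Bernoulli(p), where p is the retention probability. It then compares the resulting ensemble average with the output of one network whose weights are multiplied by p. The function names and the example numbers are made up for illustration. By linearity of expectation the two agree exactly here. With nonlinear hidden layers, the scaled network only approximates the ensemble average.

```python
from itertools import product

def ensemble_average_linear(w, x, p_keep):
    """Average a linear unit's output over all 2**n thinned networks,
    weighting each dropout mask by its probability under Bernoulli(p_keep)."""
    n = len(x)
    total, count = 0.0, 0
    for mask in product((0, 1), repeat=n):  # every possible thinned network
        kept = sum(mask)
        prob = p_keep ** kept * (1 - p_keep) ** (n - kept)
        out = sum(wi * xi * ri for wi, xi, ri in zip(w, x, mask))
        total += prob * out
        count += 1
    return total, count

def scaled_network_linear(w, x, p_keep):
    """Test-time network: no dropout, weights multiplied by p_keep."""
    return sum(p_keep * wi * xi for wi, xi in zip(w, x))

w = [0.4, -1.0, 2.5, 0.3]
x = [1.0, 0.5, -0.2, 3.0]
avg, n_nets = ensemble_average_linear(w, x, p_keep=0.5)
scaled = scaled_network_linear(w, x, p_keep=0.5)
# n_nets is 2**4 = 16; avg and scaled agree up to floating-point rounding
```

The enumeration takes 2^n steps, which is why it only works as a toy check. The scaled network gets the same answer with a single forward pass.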

Dropping out 20% of the input units and 50% of the hidden units was often found to be optimal.
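As an illustration of those rates, here is a minimal two-layer MLP with dropout on the inputs (keep 0.8, i.e. drop 20%) and on the hidden layer (keep 0.5, i.e. drop 50%). The architecture, the ReLU activation, and all names are assumptions made for this sketch. At test time nothing is dropped. Instead, each layer's outgoing weights are multiplied by that layer's retention probability.

```python
import random

def relu(v):
    return [max(0.0, a) for a in v]

def matvec(W, v):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in W]

def mlp_forward(x, W1, W2, p_in=0.8, p_hid=0.5, train=True, rng=None):
    """Hypothetical 2-layer MLP with dropout on inputs and hidden units."""
    if train:
        rng = rng or random.Random()
        x = [xi if rng.random() < p_in else 0.0 for xi in x]    # thin the input layer
        h = relu(matvec(W1, x))
        h = [hi if rng.random() < p_hid else 0.0 for hi in h]   # thin the hidden layer
        return matvec(W2, h)
    # Test time: keep every unit, scale outgoing weights by the keep probability
    W1_test = [[p_in * wij for wij in row] for row in W1]
    W2_test = [[p_hid * wij for wij in row] for row in W2]
    return matvec(W2_test, relu(matvec(W1_test, x)))

W1 = [[1.0, 0.0], [0.0, 1.0]]
W2 = [[1.0, 1.0]]
x = [1.0, 2.0]
y_train = mlp_forward(x, W1, W2, train=True, rng=random.Random(0))
y_test = mlp_forward(x, W1, W2, train=False)
```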

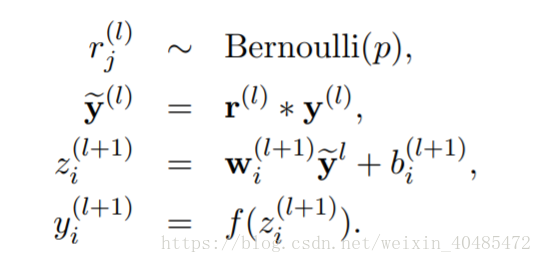

Model:

Although dropout alone gives significant improvements, using dropout along with maxnorm regularization, large decaying learning rates and high momentum provides a significant boost over just using dropout.

A possible justification is that constraining weight vectors to lie inside a ball of fixed radius makes it possible to use a huge learning rate without the possibility of weights blowing up.
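Max-norm regularization constrains the incoming weight vector w of each hidden unit so that ||w||_2 <= c. After each update, any vector that leaves the ball is projected back onto its surface. The sketch below shows that projection. The function name is hypothetical, and the radius c is a hyperparameter to be tuned.

```python
import math

def max_norm_project(w, c):
    """Project a weight vector onto the L2 ball of radius c.

    If ||w||_2 > c, rescale w so that ||w||_2 == c; otherwise return it unchanged.
    """
    norm = math.sqrt(sum(wi * wi for wi in w))
    if norm <= c:
        return list(w)
    scale = c / norm
    return [wi * scale for wi in w]

w_big = max_norm_project([3.0, 4.0], c=2.0)    # norm 5 -> rescaled to norm 2
w_small = max_norm_project([0.3, 0.4], c=2.0)  # norm 0.5 -> unchanged
```

Only the direction of an oversized vector survives the projection. However large a single step is, the weights can never end up outside the ball.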

The noise provided by dropout then allows the optimization process to explore different regions of the weight space that would have otherwise been difficult to reach.

As the learning rate decays, the optimization takes shorter steps, thereby doing less exploration and eventually settles into a minimum.
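A toy illustration of the argument above, on a single scalar weight with loss w^2 (gradient 2w). Training uses heavy-ball momentum, a huge but exponentially decaying learning rate, and a norm constraint |w| <= c, which is what max-norm reduces to in one dimension. The schedule lr0 * gamma**t and the values lr0=10, gamma=0.9, mu=0.9, c=1 are assumptions made for this sketch, not settings from the paper. With c set to infinity (no constraint), the first step already throws w far outside the unit interval. With the constraint, w stays bounded while the learning rate shrinks.

```python
def decayed_lr(t, lr0=10.0, gamma=0.9):
    """Assumed exponential learning-rate schedule: lr0 * gamma**t."""
    return lr0 * gamma ** t

def train_scalar(grad_fn, w0, steps, lr0=10.0, gamma=0.9, mu=0.9, c=1.0):
    """SGD with momentum on one weight, decaying lr, and |w| <= c after each step."""
    w, v = w0, 0.0
    trajectory, lrs = [w], []
    for t in range(steps):
        lr = decayed_lr(t, lr0, gamma)
        v = mu * v - lr * grad_fn(w)    # heavy-ball momentum update
        w = max(-c, min(c, w + v))      # 1-D max-norm projection is clipping
        trajectory.append(w)
        lrs.append(lr)
    return trajectory, lrs

grad = lambda w: 2.0 * w  # gradient of the toy loss w**2
traj, lrs = train_scalar(grad, w0=0.9, steps=60)
traj_free, _ = train_scalar(grad, w0=0.9, steps=3, c=float("inf"))
```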

Dropout makes hidden-unit activations sparse:

We found that as a side-effect of doing dropout, the activations of the hidden units become sparse, even when no sparsity inducing regularizers are present. Thus, dropout automatically leads to sparse representations.
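To check this claim on one's own model, one option is to summarize a batch of hidden activations by the fraction of near-zero entries and by the mean activation. You would then compare these numbers for networks trained with and without dropout. The helper below is a hypothetical sketch of such a summary. The threshold for "near zero" is an assumption.

```python
def activation_sparsity(activations, threshold=1e-6):
    """Return (fraction of near-zero activations, mean activation) for a
    batch given as a list of rows, one row of hidden activations per case."""
    flat = [a for row in activations for a in row]
    near_zero = sum(1 for a in flat if abs(a) <= threshold)
    return near_zero / len(flat), sum(flat) / len(flat)

acts = [[0.0, 1.0, 0.0, 0.0],
        [2.0, 0.0, 0.0, 0.0]]
frac_zero, mean_act = activation_sparsity(acts)  # 6 of 8 entries are zero
```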

2. Dropout for RNN
