# iOS Speech Recognition

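For example: with speech projects, price always has to be considered, but… Anyway, when we went on site for testing, the client asked: how are the fees written in the x x x contract? I *&&^&&%**^%$#. This has happened quite a few times, and I honestly don't know what to make of it. [Please ignore this rant.]

iOS has built-in APIs for both speech recognition and speech synthesis.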

As for whether the results are any good, or how "smart" it is, judge for yourself.

Steps:

Apple's official site has sample code you can download to learn the API.

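1. Recording: start the recorder and capture the audio.
2. Recognition: pass the recorded audio file to `SFSpeechRecognizer` for recognition.
3. Notes on integrating with Unity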

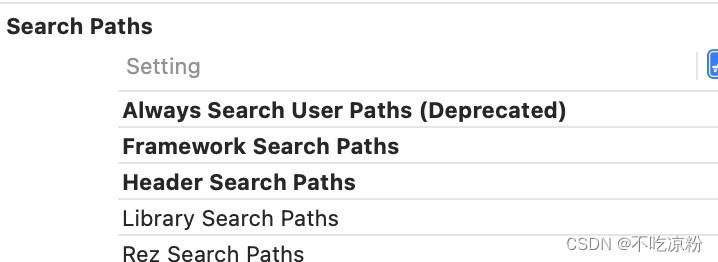

3.1 Linking the Speech framework

In Build Phases → Link Binary With Libraries, add Speech.framework. In this project, however, the library was not picked up correctly.

3.2 Fixing the problem

Locate the Speech library, copy it into the Xcode project folder and add it to the project (`$(SRCROOT)/…/…/lib`).

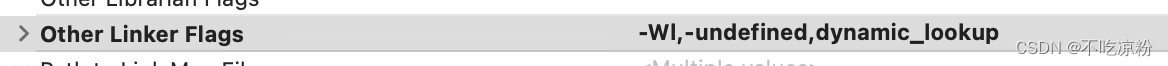

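If it still doesn't work, add `-Wl,-undefined,dynamic_lookup` to Other Linker Flags. Be aware that this flag does not link anything: it only defers unresolved symbols to runtime, so if Speech.framework is still missing, the app may crash at launch or when it first uses `SFSpeechRecognizer`.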

4. Calling from Unity

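```objc
#import <Foundation/Foundation.h>
#import <UIKit/UIKit.h>
#import <AVFoundation/AVFoundation.h>
#import <Speech/Speech.h>
#import "UnityInterface.h" // declares UnitySendMessage

// C entry points called from Unity. Declaring them extern "C" keeps their
// names unmangled when this file is compiled as Objective-C++ (.mm).
#ifdef __cplusplus
extern "C" {
#endif
void OnInitSpeech(void);
void startRe(void);
void endRe(void);
#ifdef __cplusplus
}
#endif

@interface SpeechFunction : NSObject <AVAudioRecorderDelegate, AVAudioPlayerDelegate>
@property (nonatomic, strong) NSURL *url;                  // file to recognize
@property (nonatomic, strong) NSArray *videoArray;         // recorded files (unused here)
@property (nonatomic, strong) AVAudioRecorder *audioRecorder;
@property (nonatomic, strong) AVAudioPlayer *audioPlayer;  // audio player
@property (nonatomic, strong) UIProgressView *audioPower;  // audio level meter
- (NSURL *)getSavePath;
- (void)recorderWithUrl:(NSURL *)url;
- (void)stopClick;
- (void)speechRecognitionWithUrl:(NSURL *)url;
- (void)OSCallUnity:(NSString *)info;
@end

@implementation SpeechFunction

static SpeechFunction *speech = nil; // shared instance used by the C entry points

- (instancetype)init
{
    self = [super init];
    return self;
}

+ (SpeechFunction *)instatanceSpeech
{
    static dispatch_once_t onceToken;
    dispatch_once(&onceToken, ^{
        speech = [[SpeechFunction alloc] init];
    });
    return speech;
}

#pragma mark -- speech --
#pragma mark -- Save path --

- (NSURL *)getSavePath
{
    NSString *urlStr = [NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) lastObject];
    // audioNum is only incremented in stopClick, so the first recording is myAudio-1.caf
    NSInteger audioNum = [[NSUserDefaults standardUserDefaults] integerForKey:@"audioNum"];
    urlStr = [urlStr stringByAppendingPathComponent:[NSString stringWithFormat:@"myAudio%ld.caf", (long)(audioNum - 1)]];
    self.url = [NSURL fileURLWithPath:urlStr];
    NSLog(@"url: %@", self.url);
    return self.url;
}

#pragma mark -- Recording settings --

- (NSDictionary *)getAudioSetting
{
    NSMutableDictionary *dicM = [NSMutableDictionary dictionary];
    // Recording format: uncompressed linear PCM
    [dicM setObject:@(kAudioFormatLinearPCM) forKey:AVFormatIDKey];
    // Sample rate in Hz
    [dicM setObject:@(44100.0) forKey:AVSampleRateKey];
    // Channels: 1 = mono, enough for a single speaker
    [dicM setObject:@(1) forKey:AVNumberOfChannelsKey];
    // Bits per sample: 8, 16, 24 or 32
    [dicM setObject:@(16) forKey:AVLinearPCMBitDepthKey];
    // 16-bit samples are integers, so floating-point samples stay off
    [dicM setObject:@(NO) forKey:AVLinearPCMIsFloatKey];
    // Recording quality
    [dicM setObject:@(AVAudioQualityHigh) forKey:AVEncoderAudioQualityKey];
    return dicM;
}
```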
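Because `getAudioSetting` records uncompressed linear PCM, the size of each recording follows directly from the settings: sample rate × channels × bytes per sample. A minimal plain-C sketch of that arithmetic (the helper names are hypothetical, not part of the project; the small .caf header is ignored):

```c
#include <assert.h>

/* Data rate of uncompressed linear PCM in bytes per second:
   sample rate (Hz) x channels x bytes per sample. */
static long pcm_bytes_per_second(long sample_rate, int channels, int bits_per_sample)
{
    assert(bits_per_sample % 8 == 0); /* 8, 16, 24 or 32 bits */
    return sample_rate * channels * (bits_per_sample / 8);
}

/* Approximate size of a clip of the given length, ignoring the .caf header. */
static long pcm_clip_bytes(long sample_rate, int channels, int bits_per_sample, long seconds)
{
    return pcm_bytes_per_second(sample_rate, channels, bits_per_sample) * seconds;
}
```

For 44100 Hz and 16 bits this gives 88,200 bytes per second in mono and 176,400 in stereo, so a 10-second mono clip is about 882,000 bytes (roughly 0.84 MiB). A second channel doubles the size, and for a single speaker it generally adds little for recognition.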

```objc
#pragma mark -- Recorder (lazy getter) --

- (AVAudioRecorder *)audioRecorder
{
    if (!_audioRecorder) {
        NSURL *url = [self getSavePath];                // where the recording is saved
        NSDictionary *setting = [self getAudioSetting]; // recording format
        NSError *error = nil;
        _audioRecorder = [[AVAudioRecorder alloc] initWithURL:url settings:setting error:&error];
        if (_audioRecorder == nil) {
            NSLog(@"Failed to create the recorder: %@", error.localizedDescription);
            return nil;
        }
        _audioRecorder.delegate = self;
        _audioRecorder.meteringEnabled = YES; // YES if you want to monitor the audio level
    }
    return _audioRecorder;
}

#pragma mark -- Record --

- (void)recorderWithUrl:(NSURL *)url
{
    NSDictionary *setting = [self getAudioSetting];
    NSError *error = nil;
    AVAudioSession *session = [AVAudioSession sharedInstance];
    // Switch the audio session to a mode that can record (playback still goes to the speaker)
    if (![session setCategory:AVAudioSessionCategoryPlayAndRecord
                  withOptions:AVAudioSessionCategoryOptionDefaultToSpeaker
                        error:&error] ||
        ![session setActive:YES error:&error]) {
        NSLog(@"Failed to configure the audio session: %@", error.localizedDescription);
        return;
    }
    // Use a local so a failed init does not trigger the lazy getter above
    AVAudioRecorder *recorder = [[AVAudioRecorder alloc] initWithURL:url settings:setting error:&error];
    if (recorder == nil) {
        NSLog(@"Failed to create the recorder: %@", error.localizedDescription);
        return;
    }
    recorder.delegate = self;
    recorder.meteringEnabled = YES;
    self.audioRecorder = recorder;
    [recorder record];
}

#pragma mark -- Stop --

- (void)stopClick
{
    // Use the ivar: the property getter would lazily create a new recorder
    if ([_audioRecorder isRecording]) {
        NSLog(@"audio stop");
        [_audioRecorder stop];
        self.audioPower.progress = 0.0;
        NSString *urlStr = [NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) lastObject];
        NSLog(@"file path == %@", urlStr);
        // Bump the counter so the next recording gets a new file name
        NSUserDefaults *defaults = [NSUserDefaults standardUserDefaults];
        [defaults setInteger:[defaults integerForKey:@"audioNum"] + 1 forKey:@"audioNum"];
    }
}
```
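The file naming shared by `getSavePath` and `stopClick` is easy to misread: the name is built from `audioNum - 1` when recording starts, and the counter is only bumped when recording stops, so `endRe` still passes the file that was just recorded (`speech.url`) to the recognizer. A minimal plain-C sketch of that bookkeeping, assuming a global `audio_num` in place of the `NSUserDefaults` key (all names here are hypothetical):

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical stand-in for the NSUserDefaults "audioNum" key;
   integerForKey: returns 0 for a key that was never written. */
static long audio_num = 0;

/* Like getSavePath: build the file name from audioNum - 1. */
static void save_name(char *buf, size_t len)
{
    snprintf(buf, len, "myAudio%ld.caf", audio_num - 1);
}

/* Like the counter update in stopClick. */
static void finish_recording(void)
{
    audio_num += 1;
}

/* One startRe/endRe cycle. The name is fixed when recording starts, so
   recognition still reads this file; the bump only affects the next take. */
static void record_once(char *name, size_t len)
{
    char next[32];
    save_name(name, len);            /* startRe -> getSavePath */
    finish_recording();              /* endRe -> stopClick */
    save_name(next, sizeof next);    /* what the next startRe would use */
    assert(strcmp(name, next) != 0); /* the next take will not overwrite this file */
}
```

The first take is therefore stored as `myAudio-1.caf`, the second as `myAudio0.caf`, and so on. The negative index is harmless, but it can look like a bug when browsing the Documents folder.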

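```objc
#pragma mark -- Recognition --

// Runs the recognition itself; called only after authorization succeeds
- (void)recognizeFileAtUrl:(NSURL *)url
{
    // Link error "Undefined symbol: _OBJC_CLASS_$_SFSpeechRecognizer" means Speech.framework is not linked (see 3.1/3.2)
    NSLocale *loc = [NSLocale localeWithLocaleIdentifier:@"zh-CN"]; // Mandarin (Simplified Chinese)
    SFSpeechRecognizer *rec = [[SFSpeechRecognizer alloc] initWithLocale:loc];
    if (rec == nil || !rec.isAvailable) {
        NSLog(@"Speech recognizer unavailable");
        [self OSCallUnity:@"无法识别"]; // "could not be recognized"
        return;
    }
    SFSpeechURLRecognitionRequest *request = [[SFSpeechURLRecognitionRequest alloc] initWithURL:url];
    request.shouldReportPartialResults = NO; // deliver only the final transcription
    __block BOOL delivered = NO;
    // The handler runs on rec.queue (the main queue by default)
    [rec recognitionTaskWithRequest:request resultHandler:^(SFSpeechRecognitionResult * _Nullable result, NSError * _Nullable error) {
        if (delivered || (result != nil && !result.isFinal)) {
            return; // send exactly one message to Unity
        }
        delivered = YES;
        NSString *text = result.bestTranscription.formattedString;
        NSLog(@"result %@ error %@", text, error);
        [self OSCallUnity:(text.length > 0 ? text : @"无法识别")]; // "could not be recognized"
    }];
}

- (void)speechRecognitionWithUrl:(NSURL *)url
{
    // Info.plist must contain NSSpeechRecognitionUsageDescription (and NSMicrophoneUsageDescription for recording)
    [SFSpeechRecognizer requestAuthorization:^(SFSpeechRecognizerAuthorizationStatus status) {
        // This handler may run on a background queue, so hop to the main queue
        dispatch_async(dispatch_get_main_queue(), ^{
            if (status != SFSpeechRecognizerAuthorizationStatusAuthorized) {
                NSLog(@"Authorization failed");
                [self OSCallUnity:@"error:未授权"]; // "error: not authorized"
                return;
            }
            NSLog(@"Authorization granted");
            [self recognizeFileAtUrl:url]; // start only once permission is granted
        });
    }];
}

#pragma mark -- end speech --
```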
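`SFSpeechRecognizerAuthorizationStatus` is a plain integer enum: in the Speech headers its cases are NotDetermined, Denied, Restricted and Authorized, numbered 0 to 3, so a raw comparison with 3 means Authorized. The plain-C sketch below mirrors those values in a hypothetical `auth_status` enum (an assumption about the SDK values, not a copy of the header) together with the decision about which string goes back to Unity: `error:未授权` ("error: not authorized") when permission is missing, the transcript when there is one, and `无法识别` ("could not be recognized") otherwise.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed to match SFSpeechRecognizerAuthorizationStatus in the Speech headers. */
typedef enum {
    AUTH_NOT_DETERMINED = 0,
    AUTH_DENIED = 1,
    AUTH_RESTRICTED = 2,
    AUTH_AUTHORIZED = 3
} auth_status;

/* The two fixed strings the Objective-C code sends to Unity. */
static const char MSG_UNAUTHORIZED[] = "error:未授权"; /* "error: not authorized" */
static const char MSG_UNRECOGNIZED[] = "无法识别";     /* "could not be recognized" */

/* Decide what to send to Unity for one recognition attempt.
   transcript may be NULL (recognition failed) or empty. */
static const char *reply_for_unity(auth_status status, const char *transcript)
{
    assert(status <= AUTH_AUTHORIZED); /* one of the four known values */
    if (status != AUTH_AUTHORIZED)
        return MSG_UNAUTHORIZED;
    if (transcript == NULL || transcript[0] == '\0')
        return MSG_UNRECOGNIZED;
    return transcript;
}
```

Keeping the check on the named case rather than the literal 3 makes the intent readable and keeps working even if the raw values ever differ from this assumption.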

```objc
#pragma mark -- C entry points called from Unity --

void OnInitSpeech(void)
{
    if (speech == nil) {
        [SpeechFunction instatanceSpeech];
    }
}

void startRe(void)
{
    if (speech == nil) {
        NSLog(@"speech isn't init");
        return;
    }
    [speech recorderWithUrl:[speech getSavePath]];
}

void endRe(void)
{
    if (speech == nil) {
        NSLog(@"speech isn't init");
        return;
    }
    [speech stopClick];                           // finish the recording first
    [speech speechRecognitionWithUrl:speech.url]; // then recognize the same file
}

#pragma mark -- Callback to Unity --

- (void)OSCallUnity:(NSString *)info
{
    // Sends the text to the C# method OSCallUnityEven on the GameObject named "CallObj"
    UnitySendMessage("CallObj", "OSCallUnityEven", [info UTF8String]);
}

@end
```
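On the Unity side, these C functions are normally declared in C# with `[DllImport("__Internal")]`, and results come back through `UnitySendMessage("CallObj", "OSCallUnityEven", ...)`. To check that routing without a Unity player, one option is to compile the bridge logic against a stub `UnitySendMessage` that only records its arguments. The plain-C sketch below assumes that approach: the stub, the `sketch_*` functions and the `last_*` buffers are hypothetical, and the transcript is passed in directly instead of coming from `SFSpeechRecognizer`. The stub must never be linked into a real Unity build, which already provides `UnitySendMessage`.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical test stub with the same signature as UnitySendMessage in
   UnityInterface.h. It only records the last call. */
static char last_obj[64], last_method[64], last_msg[256];

void UnitySendMessage(const char *obj, const char *method, const char *msg)
{
    snprintf(last_obj, sizeof last_obj, "%s", obj);
    snprintf(last_method, sizeof last_method, "%s", method);
    snprintf(last_msg, sizeof last_msg, "%s", msg);
}

/* Plain-C stand-ins for the shared SpeechFunction instance and its recorder. */
static int initialized = 0;
static int recording = 0;

/* Same routing as OSCallUnity. */
static void os_call_unity(const char *info)
{
    assert(info != NULL); /* UnitySendMessage needs a real C string */
    UnitySendMessage("CallObj", "OSCallUnityEven", info);
}

void sketch_OnInitSpeech(void)
{
    initialized = 1;
}

void sketch_startRe(void)
{
    if (!initialized) {
        puts("speech isn't init");
        return;
    }
    recording = 1;
}

/* Mirrors endRe: stop recording, then report the recognition result. */
void sketch_endRe(const char *transcript)
{
    if (!initialized) {
        puts("speech isn't init");
        return;
    }
    recording = 0;
    os_call_unity(transcript);
}
```

Calling `sketch_startRe()` before `sketch_OnInitSpeech()` only logs "speech isn't init", matching the guard in the Objective-C entry points.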

Test results:

For example:

- 周一至周五晚上 7 点—晚上9点 ("Monday to Friday, 7 p.m. to 9 p.m.")

Speech synthesis:

This was covered in an earlier technical article.

References:

I forgot to save the technical references I used. Many thanks to their authors!

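This article describes how to use the built-in iOS APIs for speech recognition and audio recording, including how to use SFSpeechRecognizer and the steps for recording with AVAudioRecorder. It also mentions a question about contract fees that came up during on-site testing, but the main focus is the technical implementation.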
