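In the previous post we implemented a secondary sort by cookieId and time. A new problem now comes up: what if I need to analyze the data both per cookieId and per cookieId&time combination? The best approach is a custom InputFormat, so that MapReduce reads all records of one cookieId at a time and then cuts them into sessions by time. The logic in pseudocode:

    for OneSplit in MyInputFormat.getSplit()  // OneSplit holds all records of one cookieId
        for session in OneSplit               // a session is OneSplit subdivided again by time
            for line in session               // a line is one record of the session, i.e. one line of the raw log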
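To make the pseudocode concrete, here is a minimal plain-Java sketch with no Hadoop dependency. It assumes that the records of each cookieId are already sorted by time (as the secondary sort from the previous post would deliver them) and that a gap of more than 30 minutes starts a new session. The `SessionSketch` class, the `Record` type, and the 30-minute threshold are my own assumptions for illustration; the original job may delimit sessions differently.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class SessionSketch {
    /** One log line reduced to cookieId and a timestamp in seconds (hypothetical layout). */
    static final class Record {
        final String cookieId;
        final long time;

        Record(String cookieId, long time) {
            this.cookieId = cookieId;
            this.time = time;
        }
    }

    /** Assumed rule: a gap longer than this many seconds starts a new session. */
    static final long SESSION_GAP_SECONDS = 30 * 60;

    /** Groups records by cookieId, keeping the input order inside each group. */
    static Map<String, List<Record>> groupByCookie(List<Record> records) {
        Map<String, List<Record>> groups = new LinkedHashMap<String, List<Record>>();
        for (Record r : records) {
            List<Record> group = groups.get(r.cookieId);
            if (group == null) {
                group = new ArrayList<Record>();
                groups.put(r.cookieId, group);
            }
            group.add(r);
        }
        return groups;
    }

    /** Cuts the time-sorted records of one cookieId into sessions. */
    static List<List<Record>> splitSessions(List<Record> sortedByTime) {
        List<List<Record>> sessions = new ArrayList<List<Record>>();
        List<Record> current = null;
        long previousTime = 0;
        for (Record r : sortedByTime) {
            if (current == null || r.time - previousTime > SESSION_GAP_SECONDS) {
                current = new ArrayList<Record>();
                sessions.add(current);
            }
            current.add(r);
            previousTime = r.time;
        }
        return sessions;
    }

    public static void main(String[] args) {
        List<Record> log = new ArrayList<Record>();
        log.add(new Record("a", 0));
        log.add(new Record("a", 60));
        log.add(new Record("a", 4000));
        log.add(new Record("b", 10));
        for (Map.Entry<String, List<Record>> e : groupByCookie(log).entrySet()) {
            System.out.println(e.getKey() + ": " + splitSessions(e.getValue()).size() + " session(s)");
        }
    }
}
```

The three nested loops of the pseudocode correspond to iterating over the groups, over `splitSessions(...)`, and over the records of each session.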

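1. Principle:

InputFormat is a very commonly used concept in MapReduce. What role does it actually play while a job runs?

InputFormat is an interface (shown here in its old org.apache.hadoop.mapred form) with two methods: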

    public interface InputFormat<K, V> {
        InputSplit[] getSplits(JobConf job, int numSplits) throws IOException;

        RecordReader<K, V> getRecordReader(InputSplit split,
                                           JobConf job,
                                           Reporter reporter) throws IOException;
    }

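The two methods do the following work:

The getSplits method cuts the input data into splits. The number of splits is the number of map tasks, and by default the size of a split equals the HDFS block size (64 MB, the Hadoop 1.x default).

The getRecordReader method parses each split into records and then turns each record into a <K,V> pair.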
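As a rough illustration of what getSplits produces, the following plain-Java sketch cuts a file of a given length into (start, length) ranges. It is a simplification under my own assumptions: the `SplitSketch` class and `computeSplits` method are hypothetical names, and the real FileInputFormat also takes minimum and maximum split size settings into account and treats a small tail slightly differently.

```java
import java.util.ArrayList;
import java.util.List;

class SplitSketch {
    /** Returns {start, length} pairs that cover the byte range [0, fileLength). */
    static List<long[]> computeSplits(long fileLength, long splitSize) {
        if (splitSize <= 0) {
            throw new IllegalArgumentException("splitSize must be positive");
        }
        List<long[]> splits = new ArrayList<long[]>();
        for (long start = 0; start < fileLength; start += splitSize) {
            long length = Math.min(splitSize, fileLength - start);
            splits.add(new long[] { start, length });
        }
        return splits;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        // A 150 MB file with 64 MB splits gives three splits, hence three map tasks.
        for (long[] s : computeSplits(150 * mb, 64 * mb)) {
            System.out.println("start=" + (s[0] / mb) + "MB length=" + (s[1] / mb) + "MB");
        }
    }
}
```

Note that these byte ranges know nothing about record boundaries; making sense of a record that straddles two splits is the job of the RecordReader.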

In other words, InputFormat performs the following conversion:

    InputFile --> splits --> <K,V>

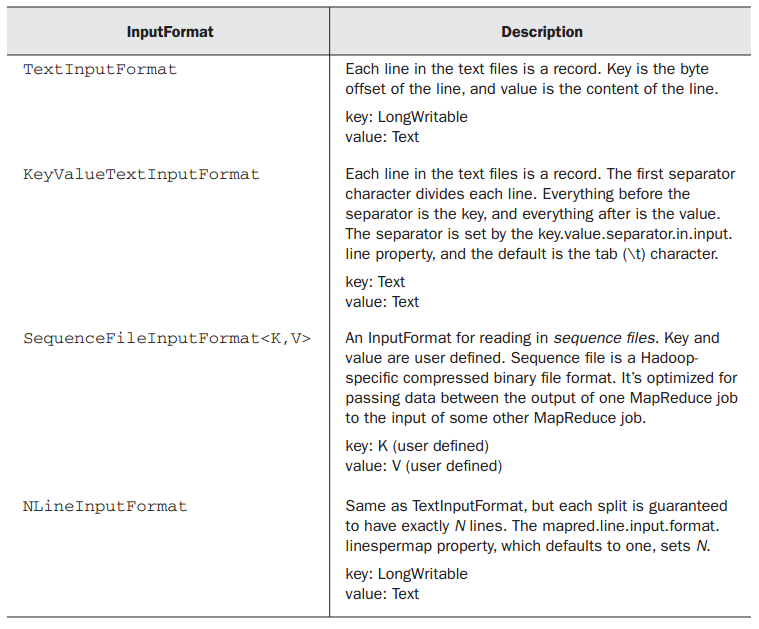

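Which InputFormat implementations does the system commonly provide?

TextInputFormat is the most widely used of them. Its <K,V> pair is <byte offset of the line in the file, content of the line>.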
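The <offset, line> pairing can be reproduced on an in-memory byte array. This is only a sketch of the idea, not Hadoop code: `OffsetLineSketch` and `toPairs` are hypothetical names, and only `\n` is treated as a line terminator.

```java
import java.nio.charset.StandardCharsets;
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

class OffsetLineSketch {
    /** Returns one (byte offset, line content) pair per line of the input. */
    static List<Map.Entry<Long, String>> toPairs(byte[] data) {
        List<Map.Entry<Long, String>> pairs = new ArrayList<Map.Entry<Long, String>>();
        int lineStart = 0;
        for (int i = 0; i < data.length; i++) {
            if (data[i] == '\n') {
                pairs.add(pair(data, lineStart, i));
                lineStart = i + 1;
            }
        }
        if (lineStart < data.length) {
            // Last line without a trailing newline.
            pairs.add(pair(data, lineStart, data.length));
        }
        return pairs;
    }

    private static Map.Entry<Long, String> pair(byte[] data, int from, int to) {
        String line = new String(data, from, to - from, StandardCharsets.UTF_8);
        return new AbstractMap.SimpleEntry<Long, String>(Long.valueOf(from), line);
    }

    public static void main(String[] args) {
        byte[] data = "cookie1\ncookie2\n".getBytes(StandardCharsets.UTF_8);
        for (Map.Entry<Long, String> p : toPairs(data)) {
            System.out.println(p.getKey() + "\t" + p.getValue());
        }
    }
}
```

The key is a byte offset rather than a line number, which is why it can be computed independently inside each split.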

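However, these fixed ways of converting an InputFile into <K,V> pairs do not always meet our needs. In that case we have to write a custom InputFormat so that the Hadoop framework parses the InputFile into <K,V> pairs the way we specify.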

Before writing a custom InputFormat, you need to understand the following abstract classes and interfaces and how they relate to each other: InputFormat (interface), FileInputFormat (abstract class), TextInputFormat (class), RecordReader (interface), and LineRecordReader (class).

    FileInputFormat implements InputFormat
    TextInputFormat extends FileInputFormat
    TextInputFormat.getRecordReader calls LineRecordReader
    LineRecordReader implements RecordReader
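The relationships listed above can be modeled in miniature. The sketch below is a toy with hypothetical `Mini*` types of my own, not the Hadoop classes: splits are lists of lines instead of byte ranges, and a reader simply iterates over them. It only aims to show where the shared splitting logic lives and which piece a concrete format has to supply.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

class HierarchySketch {
    /** Stand-in for RecordReader: step through the records of one split. */
    interface MiniRecordReader {
        boolean next();
        String current();
    }

    /** Stand-in for InputFormat: produce splits, then one reader per split. */
    interface MiniInputFormat {
        List<List<String>> getSplits(List<String> lines, int linesPerSplit);
        MiniRecordReader getRecordReader(List<String> split);
    }

    /** Stand-in for FileInputFormat: implements splitting, leaves the reader abstract. */
    abstract static class MiniFileInputFormat implements MiniInputFormat {
        @Override
        public List<List<String>> getSplits(List<String> lines, int linesPerSplit) {
            if (linesPerSplit <= 0) {
                throw new IllegalArgumentException("linesPerSplit must be positive");
            }
            List<List<String>> splits = new ArrayList<List<String>>();
            for (int i = 0; i < lines.size(); i += linesPerSplit) {
                splits.add(lines.subList(i, Math.min(lines.size(), i + linesPerSplit)));
            }
            return splits;
        }
    }

    /** Stand-in for LineRecordReader. */
    static class MiniLineRecordReader implements MiniRecordReader {
        private final Iterator<String> it;
        private String current;

        MiniLineRecordReader(List<String> split) {
            this.it = split.iterator();
        }

        @Override
        public boolean next() {
            if (!it.hasNext()) {
                return false;
            }
            current = it.next();
            return true;
        }

        @Override
        public String current() {
            return current;
        }
    }

    /** Stand-in for TextInputFormat: only decides which reader to create. */
    static class MiniTextInputFormat extends MiniFileInputFormat {
        @Override
        public MiniRecordReader getRecordReader(List<String> split) {
            return new MiniLineRecordReader(split);
        }
    }

    /** Drives a format the way the framework would: every split, every record. */
    static int countRecords(MiniInputFormat format, List<String> lines, int linesPerSplit) {
        int count = 0;
        for (List<String> split : format.getSplits(lines, linesPerSplit)) {
            MiniRecordReader reader = format.getRecordReader(split);
            while (reader.next()) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        System.out.println(countRecords(new MiniTextInputFormat(), Arrays.asList("a", "b", "c"), 2));
    }
}
```

Swapping in a different reader class inside `getRecordReader` is the only change a custom format needs in this model, which mirrors the point made in the next paragraph.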

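The InputFormat interface has already been described in detail above.

Now look at FileInputFormat. It implements the getSplits method of the InputFormat interface and leaves getRecordReader to concrete classes such as TextInputFormat; it also provides isSplitable, which concrete classes may override. isSplitable usually does not need to be changed, so a custom InputFormat only has to implement getRecordReader. The core of that method is to create a LineRecordReader, the class that actually does the work of "parsing each split into records and then turning each record into a <K,V> pair". That class implements the RecordReader interface: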

    public interface RecordReader<K, V> {
        boolean next(K key, V value) throws IOException;

        K createKey();

        V createValue();

        long getPos() throws IOException;

        public void close() throws IOException;

        float getProgress() throws IOException;
    }

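So the core of a custom InputFormat is a custom RecordReader of your own, similar to LineRecordReader, and the core of that class is in turn its implementation of the RecordReader methods.

Note that the code below uses the newer org.apache.hadoop.mapreduce API, in which RecordReader is an abstract class and the methods to implement are initialize, nextKeyValue, getCurrentKey, getCurrentValue, getProgress, and close.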
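Before the full Hadoop code, here is a stripped-down sketch of the central idea: a reader that treats a multi-byte separator such as "END\n" as the record terminator instead of a plain newline. It is plain Java over an InputStream, reads one byte at a time for simplicity, and is not the buffer-scanning algorithm used in the article's code. `SeparatorReaderSketch` and all of its methods are hypothetical names of mine; the method names merely echo the new-API RecordReader.

```java
import java.io.BufferedInputStream;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class SeparatorReaderSketch {
    private final InputStream in;
    private final byte[] separator;
    private long pos = 0;  // bytes consumed from the stream so far
    private long key;      // byte offset at which the current record starts
    private String value;  // current record without its separator

    SeparatorReaderSketch(InputStream in, String separator) {
        if (separator.isEmpty()) {
            throw new IllegalArgumentException("separator must not be empty");
        }
        this.in = new BufferedInputStream(in);
        this.separator = separator.getBytes(StandardCharsets.UTF_8);
    }

    /** Advances to the next record; returns false once the input is exhausted. */
    boolean nextKeyValue() throws IOException {
        byte[] buf = new byte[64];
        int len = 0;
        long recordStart = pos;
        int b;
        while ((b = in.read()) != -1) {
            pos++;
            if (len == buf.length) {
                buf = Arrays.copyOf(buf, len * 2);
            }
            buf[len++] = (byte) b;
            if (endsWith(buf, len, separator)) {
                len -= separator.length; // the separator is not part of the record
                break;
            }
        }
        if (pos == recordStart) {
            return false; // nothing left to read
        }
        key = recordStart;
        value = new String(buf, 0, len, StandardCharsets.UTF_8);
        return true;
    }

    long getCurrentKey() {
        return key;
    }

    String getCurrentValue() {
        return value;
    }

    private static boolean endsWith(byte[] buf, int len, byte[] suffix) {
        if (len < suffix.length) {
            return false;
        }
        for (int i = 0; i < suffix.length; i++) {
            if (buf[len - suffix.length + i] != suffix[i]) {
                return false;
            }
        }
        return true;
    }

    /** Convenience for demos: returns "offset=record" strings for a whole text. */
    static List<String> readAll(String text, String separator) {
        SeparatorReaderSketch reader = new SeparatorReaderSketch(
                new ByteArrayInputStream(text.getBytes(StandardCharsets.UTF_8)), separator);
        List<String> out = new ArrayList<String>();
        try {
            while (reader.nextKeyValue()) {
                out.add(reader.getCurrentKey() + "=" + reader.getCurrentValue());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(readAll("a\nb\nEND\nc\nEND\n", "END\n").size() + " records");
    }
}
```

One design choice in this sketch: a trailing record that is not terminated by the separator is still returned. Whether that is desirable depends on the data; it is an assumption of the sketch, not a statement about the code below.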

2. Code:

    // TrackInputFormat.java
    package MyInputFormat;

    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.io.compress.CompressionCodec;
    import org.apache.hadoop.io.compress.CompressionCodecFactory;
    import org.apache.hadoop.mapreduce.InputSplit;
    import org.apache.hadoop.mapreduce.JobContext;
    import org.apache.hadoop.mapreduce.RecordReader;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

    public class TrackInputFormat extends FileInputFormat<LongWritable, Text> {

        @SuppressWarnings("deprecation")
        @Override
        public RecordReader<LongWritable, Text> createRecordReader(
                InputSplit split, TaskAttemptContext context) {
            // The custom reader does all the record parsing.
            return new TrackRecordReader();
        }

        @Override
        protected boolean isSplitable(JobContext context, Path file) {
            CompressionCodec codec = new CompressionCodecFactory(
                    context.getConfiguration()).getCodec(file);
            // Only uncompressed files are split.
            return codec == null;
        }
    }

    // TrackRecordReader.java
    package MyInputFormat;

    import java.io.IOException;
    import java.io.InputStream;

    import org.apache.commons.logging.Log;
    import org.apache.commons.logging.LogFactory;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.io.compress.CompressionCodec;
    import org.apache.hadoop.io.compress.CompressionCodecFactory;
    import org.apache.hadoop.mapreduce.InputSplit;
    import org.apache.hadoop.mapreduce.RecordReader;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;
    import org.apache.hadoop.mapreduce.lib.input.FileSplit;

    /**
     * Treats keys as offset in file and value as line.
     * Modeled on {@link org.apache.hadoop.mapreduce.lib.input.LineRecordReader}.
     */
    public class TrackRecordReader extends RecordReader<LongWritable, Text> {
        private static final Log LOG = LogFactory.getLog(TrackRecordReader.class);

        private CompressionCodecFactory compressionCodecs = null;
        private long start;
        private long pos;
        private long end;
        private NewLineReader in;
        private int maxLineLength;
        private LongWritable key = null;
        private Text value = null;
        // Record separator: one record ends where this byte sequence appears.
        private byte[] separator = "END\n".getBytes();

        @Override
        public void initialize(InputSplit genericSplit, TaskAttemptContext context)
                throws IOException {
            FileSplit split = (FileSplit) genericSplit;
            Configuration job = context.getConfiguration();
            this.maxLineLength = job.getInt("mapred.linerecordreader.maxlength",
                    Integer.MAX_VALUE);
            start = split.getStart();
            end = start + split.getLength();
            final Path file = split.getPath();
            compressionCodecs = new CompressionCodecFactory(job);
            final CompressionCodec codec = compressionCodecs.getCodec(file);

            FileSystem fs = file.getFileSystem(job);
            FSDataInputStream fileIn = fs.open(split.getPath());
            boolean skipFirstLine = false;
            if (codec != null) {
                // Compressed input is not split, so read to the end of the stream.
                in = new NewLineReader(codec.createInputStream(fileIn), job);
                end = Long.MAX_VALUE;
            } else {
                if (start != 0) {
                    // Back up by one separator so that a record starting exactly
                    // at the split boundary is not skipped.
                    skipFirstLine = true;
                    this.start -= separator.length;
                    fileIn.seek(start);
                }
                in = new NewLineReader(fileIn, job);
            }
            if (skipFirstLine) { // skip first record and re-establish "start"
                start += in.readLine(new Text(), 0,
                        (int) Math.min((long) Integer.MAX_VALUE, end - start));
            }
            this.pos = start;
        }

        @Override
        public boolean nextKeyValue() throws IOException {
            if (key == null) {
                key = new LongWritable();
            }
            key.set(pos);
            if (value == null) {
                value = new Text();
            }
            int newSize = 0;
            while (pos < end) {
                newSize = in.readLine(value, maxLineLength,
                        Math.max((int) Math.min(Integer.MAX_VALUE, end - pos),
                                maxLineLength));
                if (newSize == 0) {
                    break;
                }
                pos += newSize;
                if (newSize < maxLineLength) {
                    break;
                }
                LOG.info("Skipped line of size " + newSize + " at pos "
                        + (pos - newSize));
            }
            if (newSize == 0) {
                key = null;
                value = null;
                return false;
            }
            return true;
        }

        @Override
        public LongWritable getCurrentKey() {
            return key;
        }

        @Override
        public Text getCurrentValue() {
            return value;
        }

        /**
         * Get the progress within the split
         */
        @Override
        public float getProgress() {
            if (start == end) {
                return 0.0f;
            }
            return Math.min(1.0f, (pos - start) / (float) (end - start));
        }

        @Override
        public synchronized void close() throws IOException {
            if (in != null) {
                in.close();
            }
        }

        // Inner class of TrackRecordReader: reads records terminated by "separator".
        public class NewLineReader {
            private static final int DEFAULT_BUFFER_SIZE = 64 * 1024;
            private int bufferSize = DEFAULT_BUFFER_SIZE;
            private InputStream in;
            private byte[] buffer;
            private int bufferLength = 0;
            private int bufferPosn = 0;

            public NewLineReader(InputStream in) {
                this(in, DEFAULT_BUFFER_SIZE);
            }

            public NewLineReader(InputStream in, int bufferSize) {
                this.in = in;
                this.bufferSize = bufferSize;
                this.buffer = new byte[this.bufferSize];
            }

            public NewLineReader(InputStream in, Configuration conf)
                    throws IOException {
                this(in, conf.getInt("io.file.buffer.size", DEFAULT_BUFFER_SIZE));
            }

            public void close() throws IOException {
                in.close();
            }

            public int readLine(Text str, int maxLineLength, int maxBytesToConsume)
                    throws IOException {
                str.clear();
                Text record = new Text();
                int txtLength = 0;
                long bytesConsumed = 0L;
                boolean newline = false;
                int sepPosn = 0;
                do {
                    // End of the buffer reached: read the next buffer.
                    if (this.bufferPosn >= this.bufferLength) {
                        bufferPosn = 0;
                        bufferLength = in.read(buffer);
                        // End of file reached: stop.
                        if (bufferLength <= 0) {
                            break;
                        }
                    }
                    int startPosn = this.bufferPosn;
                    for (; bufferPosn < bufferLength; bufferPosn++) {
                        // Handle a separator cut in half at the end of the previous
                        // buffer (this can misbehave if the separator contains many
                        // repeated characters).
                        if (sepPosn > 0 && buffer[bufferPosn] != separator[sepPosn]) {
                            sepPosn = 0;
                        }
                        // First character of the separator found.
                        if (buffer[bufferPosn] == separator[sepPosn]) {
                            bufferPosn++;
                            int i = 0;
                            // Check whether the following characters complete the separator.
                            for (++sepPosn; sepPosn < separator.length; i++, sepPosn++) {
                                // The buffer ends in the middle of the separator.
                                if (bufferPosn + i >= bufferLength) {
                                    bufferPosn += i - 1;
                                    break;
                                }
                                // A single mismatch means this is not the separator.
                                if (this.buffer[this.bufferPosn + i] != separator[sepPosn]) {
                                    sepPosn = 0;
                                    break;
                                }
                            }
                            // The whole separator was matched.
                            if (sepPosn == separator.length) {
                                bufferPosn += i;
                                newline = true;
                                sepPosn = 0;
                                break;
                            }
                        }
                    }
                    int readLength = this.bufferPosn - startPosn;
                    bytesConsumed += readLength;
                    if (readLength > maxLineLength - txtLength) {
                        readLength = maxLineLength - txtLength;
                    }
                    if (readLength > 0) {
                        record.append(this.buffer, startPosn, readLength);
                        txtLength += readLength;
                        // Strip the separator from the returned record.
                        if (newline) {
                            str.set(record.getBytes(), 0, record.getLength()
                                    - separator.length);
                        }
                    }
                } while (!newline && (bytesConsumed < maxBytesToConsume));
                if (bytesConsumed > (long) Integer.MAX_VALUE) {
                    throw new IOException("Too many bytes before newline: "
                            + bytesConsumed);
                }
                return (int) bytesConsumed;
            }

            public int readLine(Text str, int maxLineLength) throws IOException {
                return readLine(str, maxLineLength, Integer.MAX_VALUE);
            }

            public int readLine(Text str) throws IOException {
                return readLine(str, Integer.MAX_VALUE, Integer.MAX_VALUE);
            }
        }
    }
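The part of TrackRecordReader that is easiest to get wrong is the split boundary: when a split does not start at offset 0, `initialize` backs `start` up by `separator.length` and discards everything through the first separator. The following in-memory model is my own simplification (hypothetical `SplitBoundarySketch` class, a naive byte search instead of the buffered scan, and a clamp to offset 0 that the code above does not have). It is meant to show why that rule makes every record belong to exactly one split, namely the split that contains the record's first byte.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class SplitBoundarySketch {
    /** Index of the first occurrence of sep at or after from, or -1 if there is none. */
    static int indexOf(byte[] data, int from, byte[] sep) {
        for (int i = Math.max(0, from); i + sep.length <= data.length; i++) {
            int j = 0;
            while (j < sep.length && data[i + j] == sep[j]) {
                j++;
            }
            if (j == sep.length) {
                return i;
            }
        }
        return -1;
    }

    /** Returns the records owned by the split [start, end). */
    static List<String> readSplit(byte[] data, int start, int end, byte[] sep) {
        if (sep.length == 0) {
            throw new IllegalArgumentException("separator must not be empty");
        }
        int pos = start;
        if (start != 0) {
            // Back up by one separator, then skip through the first separator found.
            // If the split starts exactly at a record start, only the separator
            // itself is skipped and the record is kept.
            int sepAt = indexOf(data, start - sep.length, sep);
            pos = (sepAt < 0) ? data.length : sepAt + sep.length;
        }
        List<String> records = new ArrayList<String>();
        // A record is read as long as it starts before the end of the split,
        // even if it extends past that end.
        while (pos < end && pos < data.length) {
            int sepAt = indexOf(data, pos, sep);
            int contentEnd = (sepAt < 0) ? data.length : sepAt;
            records.add(new String(data, pos, contentEnd - pos, StandardCharsets.UTF_8));
            pos = (sepAt < 0) ? data.length : sepAt + sep.length;
        }
        return records;
    }

    /** Reads the data split by split and concatenates what each split returns. */
    static List<String> readAllSplits(byte[] data, int splitSize, byte[] sep) {
        if (splitSize <= 0) {
            throw new IllegalArgumentException("splitSize must be positive");
        }
        List<String> all = new ArrayList<String>();
        for (int start = 0; start < data.length; start += splitSize) {
            int end = Math.min(data.length, start + splitSize);
            all.addAll(readSplit(data, start, end, sep));
        }
        return all;
    }

    public static void main(String[] args) {
        byte[] sep = "END\n".getBytes(StandardCharsets.UTF_8);
        byte[] data = "a1\nEND\nb22\nEND\nc333\nEND\n".getBytes(StandardCharsets.UTF_8);
        // Whatever the split size, each record should come out once.
        System.out.println(readAllSplits(data, 7, sep).size() + " records");
    }
}
```

Backing up by only one byte, as the newline-based LineRecordReader does, would not be enough for a four-byte separator, which is presumably why the code above subtracts `separator.length` instead.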

    // TestMyInputFormat.java
    package MyInputFormat;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

    public class TestMyInputFormat {

        // Map-only job: prints every <key, value> pair delivered by TrackInputFormat.
        public static class MapperClass extends Mapper<LongWritable, Text, Text, Text> {

            @Override
            public void map(LongWritable key, Text value, Context context) throws IOException,
                    InterruptedException {
                System.out.println("key:\t " + key);
                System.out.println("value:\t " + value);
                System.out.println("-------------------------");
            }
        }

        public static void main(String[] args) throws IOException, InterruptedException, ClassNotFoundException {
            Configuration conf = new Configuration();
            Path outPath = new Path("/hive/11");
            // Remove the output directory left over from a previous run.
            FileSystem.get(conf).delete(outPath, true);
            // On Hadoop 2 and later, Job.getInstance(conf, name) replaces this constructor.
            Job job = new Job(conf, "TestMyInputFormat");
            job.setInputFormatClass(TrackInputFormat.class);
            job.setJarByClass(TestMyInputFormat.class);
            job.setMapperClass(TestMyInputFormat.MapperClass.class);
            job.setNumReduceTasks(0);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(Text.class);

            FileInputFormat.addInputPath(job, new Path(args[0]));
            org.apache.hadoop.mapreduce.lib.output.FileOutputFormat.setOutputPath(job, outPath);

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

3. Test data:

cookieId time url cookieOverFlag

4. Results:

    -------------------------
    -------------------------
    -------------------------

REF:

Custom InputFormat for splitting Hadoop map/reduce input files
http://hi.baidu.com/lzpsky/item/0d9d84c05afb43ba0c0a7b27

Advanced MapReduce programming: custom InputFormat
http://datamining.xmu.edu.cn/bbs/home.php?mod=space&uid=91&do=blog&id=190

http://irwenqiang.iteye.com/blog/1448164
