插件

话说Hadoop 1.0.2/src/contrib/eclipse-plugin只有插件的源代码,这里给出一个我打包好的对应的Eclipse插件:

下载地址

下载后扔到eclipse/dropins目录下即可,当然eclipse/plugins也是可以的,前者更为轻便,推荐;重启Eclipse,即可在透视图(Perspective)中看到Map/Reduce。

配置

点击蓝色的小象图标,新建一个Hadoop连接:

注意,一定要填写正确,修改了某些端口,以及默认运行的用户名等

具体的设置,可见

正常情况下,可以在项目区域可以看到

这样可以正常的进行HDFS分布式文件系统的管理:上传,删除等操作。

为下面测试做准备,需要先建了一个目录 user/root/input2,然后上传两个txt文件到此目录:

intput1.txt 对应内容:Hello Hadoop Goodbye Hadoop

intput2.txt 对应内容:Hello World Bye World

HDFS的准备工作好了,下面可以开始测试了。

Hadoop工程

新建一个Map/Reduce Project工程,设定好本地的hadoop目录

新建一个测试类WordCountTest:

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 |

|

右键,选择“Run Configurations”,弹出窗口,点击“Arguments”选项卡,在“Program argumetns”处预先输入参数:

hdfs://master:9000/user/root/input2 dfs://master:9000/user/root/output2

备注:参数为了在本地调试使用,而非真实环境。

然后,点击“Apply”,然后“Close”。现在可以右键,选择“Run on Hadoop”,运行。

但此时会出现类似异常信息:

12/04/24 15:32:44 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

12/04/24 15:32:44 ERROR security.UserGroupInformation: PriviledgedActionException as:Administrator cause:java.io.IOException: Failed to set permissions of path: \tmp\hadoop-Administrator\mapred\staging\Administrator-519341271\.staging to 0700

Exception in thread "main" java.io.IOException: Failed to set permissions of path: \tmp\hadoop-Administrator\mapred\staging\Administrator-519341271\.staging to 0700

at org.apache.hadoop.fs.FileUtil.checkReturnValue(FileUtil.java:682)

at org.apache.hadoop.fs.FileUtil.setPermission(FileUtil.java:655)

at org.apache.hadoop.fs.RawLocalFileSystem.setPermission(RawLocalFileSystem.java:509)

at org.apache.hadoop.fs.RawLocalFileSystem.mkdirs(RawLocalFileSystem.java:344)

at org.apache.hadoop.fs.FilterFileSystem.mkdirs(FilterFileSystem.java:189)

at org.apache.hadoop.mapreduce.JobSubmissionFiles.getStagingDir(JobSubmissionFiles.java:116)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:856)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:850)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1093)

at org.apache.hadoop.mapred.JobClient.submitJobInternal(JobClient.java:850)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:500)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:530)

at com.hadoop.learn.test.WordCountTest.main(WordCountTest.java:85)

这个是Windows下文件权限问题,在Linux下可以正常运行,不存在这样的问题。

解决方法是,修改/hadoop-1.0.2/src/core/org/apache/hadoop/fs/FileUtil.java里面的checkReturnValue,注释掉即可(有些粗暴,在Window下,可以不用检查):

| 1 2 3 4 5 6 7 8 9 10 11 12 13 |

|

重新编译打包hadoop-core-1.0.2.jar,替换掉hadoop-1.0.2根目录下的hadoop-core-1.0.2.jar即可。

这里提供一份修改版的hadoop-core-1.0.2-modified.jar文件,替换原hadoop-core-1.0.2.jar即可。

替换之后,刷新项目,设置好正确的jar包依赖,现在再运行WordCountTest,即可。

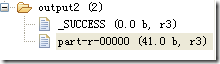

成功之后,在Eclipse下刷新HDFS目录,可以看到生成了ouput2目录:

点击“ part-r-00000”文件,可以看到排序结果:

Bye 1

Goodbye 1

Hadoop 2

Hello 2

World 2

嗯,一样可以正常Debug调试该程序,设置断点(右键 –> Debug As – > Java Application),即可(每次运行之前,都需要收到删除输出目录)。

另外,该插件会在eclipse对应的workspace\.metadata\.plugins\org.apache.hadoop.eclipse下,自动生成jar文件,以及其他文件,包括Haoop的一些具体配置等。

嗯,更多细节,慢慢体验吧。

遇到的异常

org.apache.hadoop.ipc.RemoteException: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create directory /user/root/output2/_temporary. Name node is in safe mode.

The ratio of reported blocks 0.5000 has not reached the threshold 0.9990. Safe mode will be turned off automatically.

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:2055)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:2029)

at org.apache.hadoop.hdfs.server.namenode.NameNode.mkdirs(NameNode.java:817)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:563)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1388)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1384)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1093)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:1382)

在主节点处,关闭掉安全模式:

#bin/hadoop dfsadmin –safemode leave

如何打包

将创建的Map/Reduce项目打包成jar包,很简单的事情,无需多言。保证jar文件的META-INF/MANIFEST.MF文件中存在Main-Class映射:

Main-Class: com.hadoop.learn.test.TestDriver

若使用到第三方jar包,那么在MANIFEST.MF中增加Class-Path好了。

另外可使用插件提供的MapReduce Driver向导,可以帮忙我们在Hadoop中运行,直接指定别名,尤其是包含多个Map/Reduce作业时,很有用。

一个MapReduce Driver只要包含一个main函数,指定别名:

| 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 |

|

这里有一个小技巧,MapReduce Driver类上面,右键运行,Run on Hadoop,会在Eclipse的workspace\.metadata\.plugins\org.apache.hadoop.eclipse目录下自动生成jar包,上传到HDFS,或者远程hadoop根目录下,运行它:

# bin/hadoop jar LearnHadoop_TestDriver.java-460881982912511899.jar testcount input2 output3

OK,本文结束。

2038

2038

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?