For more details, see https://www.cnblogs.com/xingshansi/p/6445625.html

I. Concept

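Principal component analysis (PCA) is a statistical method. It uses an orthogonal transformation to convert a set of possibly correlated variables into a set of linearly uncorrelated variables. The transformed variables are called principal components.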

II. Idea

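The idea of PCA is to map n-dimensional features onto k dimensions (k < n). These k dimensions are entirely new, mutually orthogonal features called principal components. They are constructed from the original features, rather than obtained by simply deleting n - k of the original n features.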
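The difference between "new features" and "deleted features" is clearer in matrix form. This is the standard formulation; the symbols are introduced here for illustration ($x \in \mathbb{R}^n$ is one sample, $\mu$ the sample mean, and $W$ collects the k principal directions as columns):

$$
z = W^{\top}(x - \mu),\qquad W = [\,w_1,\dots,w_k\,] \in \mathbb{R}^{n\times k},\qquad W^{\top}W = I_k
$$

Each new feature $z_j = w_j^{\top}(x - \mu)$ is a combination of all n original features. Deleting n - k features would be the special case where every $w_j$ is a standard basis vector $e_i$. PCA instead takes $w_1,\dots,w_k$ to be the eigenvectors of the covariance matrix with the k largest eigenvalues.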

III. The PCA computation procedure

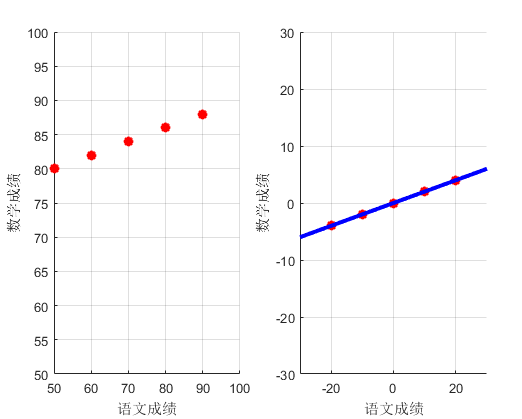

Suppose we have a small data set: the midterm Chinese and math scores of five students.

| Subject | Student 1 | Student 2 | Student 3 | Student 4 | Student 5 |
|---|---|---|---|---|---|
| Chinese | 50 | 60 | 70 | 80 | 90 |
| Math | 80 | 82 | 84 | 86 | 88 |

We now use PCA to reduce the dimensionality, that is, to reduce this two-dimensional data to one dimension.

The algorithm steps are as follows:

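- Step 1: Center the data by subtracting the mean; if needed, also normalize it;

- Step 2: Compute the covariance matrix;

- Step 3: Compute the eigenvalues and eigenvectors by eigenvalue decomposition or singular value decomposition;

- Step 4: Build the projection matrix from the eigenvectors belonging to the k largest eigenvalues;

- Step 5: Apply the projection matrix to obtain the reduced data.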
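The five steps can be sketched in plain Python for the two-variable case. This is an illustration, not the author's code. It divides by n - 1 to get the sample covariance, so its eigenvalues are 1/(n - 1) of those of the scatter matrix `R = data*data'` in the MATLAB program; the eigenvectors are the same. It also uses the closed-form eigendecomposition of a symmetric 2x2 matrix instead of a general eigensolver. The function name `pca_2d_to_1d` is hypothetical.

```python
import math


def pca_2d_to_1d(x, y):
    """Reduce paired 2-D samples (x[i], y[i]) to 1-D with PCA.

    Returns ((lam1, lam2), w, z): the eigenvalues of the sample covariance matrix
    (lam1 >= lam2), the unit principal direction w, and the projected data z.
    """
    n = len(x)
    # Step 1: center each variable by subtracting its mean
    mx, my = sum(x) / n, sum(y) / n
    cx = [xi - mx for xi in x]
    cy = [yi - my for yi in y]
    # Step 2: sample covariance matrix [[a, b], [b, c]], divided by n - 1
    a = sum(u * u for u in cx) / (n - 1)
    b = sum(u * v for u, v in zip(cx, cy)) / (n - 1)
    c = sum(v * v for v in cy) / (n - 1)
    # Step 3: closed-form eigenvalues of a symmetric 2x2 matrix
    centre = (a + c) / 2
    radius = math.hypot((a - c) / 2, b)
    lam1, lam2 = centre + radius, centre - radius
    # Eigenvector of lam1: (b, lam1 - a) solves (C - lam1*I) v = 0 when b != 0
    if b != 0:
        vx, vy = b, lam1 - a
    else:  # matrix already diagonal: take the axis with the larger variance
        vx, vy = (1.0, 0.0) if a >= c else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    # Step 4: with k = 1 the projection matrix is the unit eigenvector of lam1
    w = (vx / norm, vy / norm)
    # Step 5: project the centered samples onto w
    z = [w[0] * u + w[1] * v for u, v in zip(cx, cy)]
    return (lam1, lam2), w, z


lams, w, z = pca_2d_to_1d([50, 60, 70, 80, 90], [80, 82, 84, 86, 88])
print(lams, [round(t, 3) for t in w], [round(t, 3) for t in z])
```

On the score table this gives eigenvalues 260 and 0, direction about (0.981, 0.196), and projected scores about [-20.396, -10.198, 0.0, 10.198, 20.396]. Eigenvector signs are arbitrary, so another solver may return the negated direction together with negated scores.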

The MATLAB program is as follows:

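```matlab
clc; clear all; close all;
set(0, 'defaultfigurecolor', 'w');

x = [50 60 70 80 90];   % Chinese scores
y = [80 82 84 86 88];   % math scores

% Plot the raw data
figure();
subplot(1, 2, 1);
scatter(x, y, 'r*', 'linewidth', 5);
xlim([50, 100]);
ylim([50, 100]);
grid on;
xlabel('Chinese score');
ylabel('Math score');

data = [x; y];          % each row is one variable, each column one sample

% Step 1: centering
mu = mean(data, 2);             % mean of each row
data(1,:) = data(1,:) - mu(1);  % subtract the mean
data(2,:) = data(2,:) - mu(2);

% Step 2: covariance matrix
% data*data' is the scatter matrix, i.e. (n-1) times the sample covariance;
% the scale factor does not change the eigenvectors
R = data * data';

% Step 3: eigenvalues and eigenvectors by eigenvalue decomposition
[V, D] = eig(R);
[EigR, PosR] = sort(diag(D), 'descend');  % eigenvalues in descending order
VecR = V(:, PosR);                        % eigenvectors are the COLUMNS of V

% Step 4: build the projection matrix from the eigenvectors
K = 1;                  % reduce to one dimension
Proj = VecR(:, 1:K)';   % K x 2, each row is one principal direction

% Step 5: apply the projection matrix to get the reduced data
DataPCA = Proj * data;  % K x 5 reduced data

x0 = -30:30;
subplot(1, 2, 2);
scatter(data(1,:), data(2,:), 'r*', 'linewidth', 5); hold on;
plot(x0, Proj(2)/Proj(1)*x0, 'b', 'linewidth', 3);  % draw the projection direction
xlim([-30, 30]);
ylim([-30, 30]);
grid on;
xlabel('Chinese score');
ylabel('Math score');
```

Result: the left panel plots the raw scores, and the right panel plots the centered data together with the projection direction (blue line).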
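Working the example by hand shows what the program computes. This is a worked check added for illustration, using the unnormalized scatter matrix `R = data*data'` from the code. Eigenvector signs are arbitrary, so `eig` may return the negated direction and therefore negated scores.

$$
\mu = \begin{pmatrix}70\\ 84\end{pmatrix},\qquad
\tilde{X} = \begin{pmatrix}-20 & -10 & 0 & 10 & 20\\ -4 & -2 & 0 & 2 & 4\end{pmatrix},\qquad
R = \tilde{X}\tilde{X}^{\top} = \begin{pmatrix}1000 & 200\\ 200 & 40\end{pmatrix}
$$

$$
\operatorname{tr} R = 1040,\quad \det R = 1000\cdot 40 - 200^{2} = 0
\;\Rightarrow\; \lambda_1 = 1040,\ \lambda_2 = 0,\qquad
w_1 = \frac{1}{\sqrt{26}}\begin{pmatrix}5\\ 1\end{pmatrix}
$$

$$
z = w_1^{\top}\tilde{X} = \frac{1}{\sqrt{26}}\,(-104,\ -52,\ 0,\ 52,\ 104) \approx (-20.40,\ -10.20,\ 0,\ 10.20,\ 20.40)
$$

Because $\lambda_2 = 0$, the five points lie exactly on one line (math = 0.2 × Chinese + 70), so the one-dimensional representation keeps all of the variance. The slope `Proj(2)/Proj(1)` of the plotted direction is $1/5$.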

IV. PCA face recognition in MATLAB

I modified someone else's code slightly. The program can be run directly for face recognition; the face database is the ORL face database.

```matlab
% Save the three functions of this section in one file named CreateTrainingSet.m
% and call it with the root folder of the ORL database (the folder containing s1 ... s40).
function T = CreateTrainingSet(TrainingSetPath)

TrainFiles = dir(TrainingSetPath);

% Number of training classes. The dataset has 40 classes (40 people),
% stored in folders s1 ... s40, with 10 face images per person.
Train_Class_Number = 0;
for i = 1:numel(TrainFiles)
    % strcmp(S1,S2) checks whether S1 and S2 match exactly.
    % Count only sub-folders, skipping '.', '..' and files such as README or Thumbs.db
    if TrainFiles(i).isdir && ~any(strcmp(TrainFiles(i).name, {'.', '..'}))
        Train_Class_Number = Train_Class_Number + 1;
    end
end

%%%%%%%%%%%%%%%%%%%%%%%% Construction of 2D matrix from 1D image vectors
T = [];
Each_Class_Train_Num = 5;   % use the first 5 faces of each class for training
for i = 1:Train_Class_Number
    % path of class i; the folders are named s1, s2, ...
    ClassPath = fullfile(TrainingSetPath, ['s' int2str(i)]);
    for j = 1:Each_Class_Train_Num
        img = imread(fullfile(ClassPath, [int2str(j) '.pgm']));  % read the image
        if ndims(img) > 2       % convert color images to grayscale
            img = rgb2gray(img);
        end
        vecimg = double(reshape(img, 1, []));   % flatten to a 1 x (M*N) row vector
        T = cat(1, T, vecimg);                  % append it as a new row of T
    end
end

[MeanFace, MeanNormFaces, EigenFaces] = EigenfaceCore(T);

TestImagePath = 'D:\data\copy\att_faces.tar\att_faces\s40\6.pgm';  % a single test face image
OutputName = Recognition(TestImagePath, MeanFace, MeanNormFaces, EigenFaces);

end
```
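MATLAB's `reshape` reads elements in column-major order, so `reshape(img,1,[])` stacks an image column by column, and the later `reshape(...,112,92)` in `Recognition` undoes exactly this. A plain-Python sketch of the same flatten/unflatten pair, assuming an image is given as a list of pixel rows (all function names are hypothetical):

```python
def flatten_column_major(img):
    """Flatten an M x N image (list of rows) column by column, like MATLAB reshape(img,1,[])."""
    rows, cols = len(img), len(img[0])
    return [float(img[r][c]) for c in range(cols) for r in range(rows)]


def unflatten_column_major(vec, rows, cols):
    """Inverse of flatten_column_major, like MATLAB reshape(vec,rows,cols)."""
    return [[vec[c * rows + r] for c in range(cols)] for r in range(rows)]


def build_training_matrix(images):
    """Stack the flattened images as the rows of T (P x M*N), like T = cat(1,T,vecimg)."""
    return [flatten_column_major(img) for img in images]


print(flatten_column_major([[1, 2, 3], [4, 5, 6]]))
```

For the 2 x 3 image `[[1, 2, 3], [4, 5, 6]]` the flattened row is `[1.0, 4.0, 2.0, 5.0, 3.0, 6.0]`. Flattening row by row instead would still work for recognition, but reshaping the result back with column-major `reshape` would then scramble the displayed face.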

```matlab
function [MeanFace, MeanNormFaces, EigenFaces] = EigenfaceCore(T)
% Revised by Jianzhu Wang email:jzwangATbjtuDOTeduDOTcn
% Use Principal Component Analysis (PCA) to determine the most discriminating features between images of faces.
% Description: This function gets a 2D matrix containing all training image vectors.
% Input: T is a 2D matrix containing all 1D image vectors. Suppose we
%        choose P training images in total, all of size M*N. Each training
%        image is vectorized into a 'row' vector of length M*N,
%        so we finally get a P*MN 2D matrix.
% Output:
%   MeanFace      - (1*MN) mean vector of faces
%   MeanNormFaces - (P*MN) matrix of centered image vectors, one face per row
%   EigenFaces    - (K*MN) matrix whose rows are unit-length eigenvectors of the
%                   covariance matrix of the training database (K is chosen below)

%% Calculate mean face
MeanFace = mean(T, 1);      % each row of T corresponds to one face
TrainNumber = size(T, 1);   % number of training images P

%% Mean-normalize: subtract the mean face from every row
MeanNormFaces = zeros(size(T));
for i = 1:TrainNumber
    MeanNormFaces(i,:) = T(i,:) - MeanFace;
end

%% Recall some linear algebra theory
% For any matrix A, AA' and A'A have the same non-zero eigenvalues, and if
% x is an eigenvector of AA', then A'x is an eigenvector of A'A. Any nonzero
% multiple a*x of an eigenvector is also an eigenvector, so A'x is generally
% not unit length and is normalized below.
% Use L = AA' (P*P) instead of the covariance matrix C = A'A (MN*MN) to reduce the dimension.
L = MeanNormFaces * MeanNormFaces';   % 200*200 here (40 classes x 5 images)
[E, D] = eig(L);                      % eigenvalues and eigenvectors of L

% Sort the eigenvalues (and the corresponding eigenvectors) in descending order
[eigenValue, order] = sort(diag(D), 'descend');
% According to the eigenvector relationship between AA' and A'A
eigenVector = MeanNormFaces' * E(:, order);   % eigenvectors of A'A, one per column

% Keep the smallest number K whose cumulative contribution rate exceeds 85%
ChooseEigenValueNum = find(cumsum(eigenValue) / sum(eigenValue) > 0.85, 1);

EigenFaces = zeros(ChooseEigenValueNum, size(T, 2));
for i = 1:ChooseEigenValueNum
    v = eigenVector(:, i);
    EigenFaces(i,:) = (v / norm(v))';   % normalize each eigenface to unit length
end

end
```
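The two ideas in `EigenfaceCore`, the small-matrix trick (eigenvectors of $AA^{\top}$ mapped through $A^{\top}$) and the 85% cumulative contribution rule, can be sketched in plain Python. This is an illustrative sketch, not the author's code. It assumes the centered faces are given as a list of rows `A` and uses a hand-written cyclic Jacobi eigensolver (`jacobi_eigh`, a hypothetical helper) because the standard library has none. Both `jacobi_eigh` and `eigenfaces` are illustrative names, and the code is only practical for toy sizes.

```python
import math


def jacobi_eigh(S, max_sweeps=100, tol=1e-24):
    """Eigendecomposition of a small symmetric matrix by cyclic Jacobi rotations.

    Returns (values, vectors); vectors[i] is the unit eigenvector for values[i].
    """
    n = len(S)
    a = [[float(x) for x in row] for row in S]                          # driven to diagonal form
    v = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # accumulated rotations
    scale = sum(x * x for row in a for x in row) or 1.0
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off <= tol * scale:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # rotation that zeroes a[p][q] (smaller root of the tangent equation)
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # a <- a J (columns p, q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # a <- J^T a (rows p, q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):  # v <- v J
                    vkp, vkq = v[k][p], v[k][q]
                    v[k][p], v[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
    values = [a[i][i] for i in range(n)]
    vectors = [[v[k][i] for k in range(n)] for i in range(n)]
    return values, vectors


def eigenfaces(A, ratio=0.85):
    """A: P rows of mean-centred image vectors, each of length D.

    Returns (kept_values, faces): the largest eigenvalues of A^T A until their
    cumulative share first exceeds `ratio`, and the matching unit eigenfaces.
    """
    P, D = len(A), len(A[0])
    # P x P matrix L = A A^T instead of the D x D matrix A^T A
    L = [[sum(x * y for x, y in zip(A[i], A[j])) for j in range(P)] for i in range(P)]
    vals, vecs = jacobi_eigh(L)
    order = sorted(range(P), key=lambda i: vals[i], reverse=True)
    total = sum(vals)
    kept, faces, running = [], [], 0.0
    for i in order:
        u = vecs[i]
        # u is an eigenvector of A A^T, so A^T u is an eigenvector of A^T A
        f = [sum(A[r][d] * u[r] for r in range(P)) for d in range(D)]
        norm = math.sqrt(sum(x * x for x in f))
        kept.append(vals[i])
        faces.append([x / norm for x in f])   # unit-length eigenface
        running += vals[i]
        if running / total > ratio:           # cumulative contribution rate test
            break
    return kept, faces
```

With the toy centered rows `[[1, 0, 2, -1], [-1, 1, 0, 1], [0, -1, -2, 0]]`, $AA^{\top}$ has eigenvalues $7 \pm \sqrt{7}$ and 0. The first eigenvalue alone covers about 69% of the total, so two eigenfaces are kept.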

```matlab
function OutputName = Recognition(TestImagePath, MeanFace, MeanNormFaces, EigenFaces)
% Description: This function compares two faces by projecting the images into face space and
% measuring the Euclidean distance between them.
% Input: TestImagePath - path of the test face image
%        MeanFace      - (1*MN) mean vector, an output of EigenfaceCore
%        MeanNormFaces - (P*MN) matrix, each row a mean-normalized training face,
%                        an output of EigenfaceCore
%        EigenFaces    - (K*MN) matrix of eigenfaces, an output of EigenfaceCore

%%%%%%%%%%%%%%%%%%%%%%%% Projecting centered image vectors into face space
% All centered images are projected into face space by multiplying by the
% eigenface basis. The projected vector of each face is its feature vector.
% The loop runs over the centered training faces, not over the eigenfaces.
Train_Number = size(MeanNormFaces, 1);
ProjectedImages = zeros(Train_Number, size(EigenFaces, 1));
for i = 1:Train_Number
    ProjectedImages(i,:) = (EigenFaces * MeanNormFaces(i,:)')';  % one feature vector per row
end

%%%%%%%%%%%%%%%%%%%%%%%% Extracting the PCA features from the test image
InputImage = imread(TestImagePath);
if ndims(InputImage) > 2
    InputImage = rgb2gray(InputImage);
end
VecInput = reshape(InputImage, 1, []);
MeanNormInput = double(VecInput) - MeanFace;           % centered test image
ProjectedTestImage = (EigenFaces * MeanNormInput')';   % test image feature vector

%%%%%%%%%%%%%%%%%%%%%%%% Calculating Euclidean distances
% Squared Euclidean distances between the projected test image and the projections
% of all centered training images. The test image is expected to have the
% minimum distance to an image of the same person in the training database.
Euc_dist = zeros(1, Train_Number);
for i = 1:Train_Number
    Euc_dist(i) = norm(ProjectedTestImage - ProjectedImages(i,:))^2;
end
[Euc_dist_min, Recognized_index] = min(Euc_dist);

% Training rows are stored 5 per class in order: rows 1-5 are s1, rows 6-10 are s2, ...
% floor is required: int2str((Recognized_index-1)/5+1) rounds, e.g. index 10 -> 3
ClassIndex = floor((Recognized_index - 1) / 5) + 1;
OutputName = ['s' int2str(ClassIndex) 'class'];

figure;
subplot(1, 2, 1);
imshow(InputImage, []);
title('Input face');
subplot(1, 2, 2);
% 112*92 is the size of an ORL face image
imshow(reshape(MeanNormFaces(Recognized_index,:) + MeanFace, 112, 92), []);
title(['Most similar face, class: ' int2str(ClassIndex)]);

end
```
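The matching step in `Recognition` is a nearest-neighbour search in face space. A plain-Python sketch, assuming centered rows and unit eigenfaces like those produced by `EigenfaceCore`, with hypothetical function names. Python indices are 0-based, so the class label is `index // per_class + 1` rather than MATLAB's `floor((index-1)/5)+1`.

```python
def project(rows, eigenfaces):
    """Feature vector of each centred row: its dot product with every eigenface."""
    return [[sum(x * e for x, e in zip(row, ef)) for ef in eigenfaces] for row in rows]


def recognize(test_centered, train_centered, eigenfaces, per_class=5):
    """Nearest training face in face space by squared Euclidean distance.

    Returns (class_label, index): a 1-based class label (1 -> s1, 2 -> s2, ...)
    and the 0-based index of the nearest training row. Assumes the training
    rows are stored class by class, with `per_class` rows per class.
    """
    train_feats = project(train_centered, eigenfaces)
    test_feat = project([test_centered], eigenfaces)[0]
    dists = [sum((a - b) ** 2 for a, b in zip(test_feat, f)) for f in train_feats]
    best = min(range(len(dists)), key=dists.__getitem__)  # index of the smallest distance
    return best // per_class + 1, best


train = [[i, 0, 3] for i in range(5)] + [[100 + i, 100, -3] for i in range(5)]
print(recognize([104.2, 99, 0], train, [[1, 0, 0], [0, 1, 0]]))
```

With these toy rows and two axis-aligned "eigenfaces", the nearest row is the tenth one (0-based index 9), so the call returns class 2. This is exactly the boundary case where MATLAB's `int2str((10-1)/5+1)` would round 2.8 up and report class 3.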
