1.GaussianNB

# -*- coding: utf-8 -*-

"""

Created on Wed Jun 20 19:23:06 2018

@author: 12046

"""

from sklearn import datasets,cross_validation

from sklearn.naive_bayes import GaussianNB

from sklearn.svm import SVC

from sklearn.ensemble import RandomForestClassifier

from sklearn import metrics

x,y=datasets.make_classification(n_samples=1000,n_features=10,n_classes=2)

kf=cross_validation.KFold(len(x),n_folds=10,shuffle=True)

for train_index,test_index in kf:

x_train,y_train=x[train_index],y[train_index]

x_test,y_test=x[test_index],y[test_index]

clf=GaussianNB()

clf.fit(x_train,y_train)

pred=clf.predict(x_test)

acc = metrics.accuracy_score(y_test, pred)

print("Accuracy_score: "+str(acc))

f1 = metrics.f1_score(y_test, pred)

print("f1_score: "+str(f1))

auc = metrics.roc_auc_score(y_test, pred)

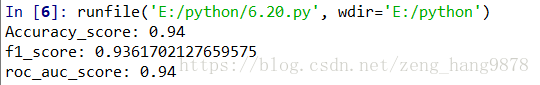

print("roc_auc_score: "+str(auc)) 结果如下图

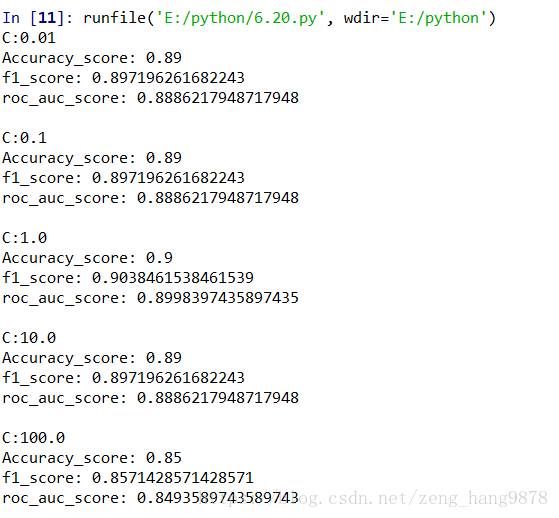
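Passing hard 0/1 predictions from `predict()` to `roc_auc_score` collapses the ROC curve to a single operating point, so the AUC usually understates how well the classifier ranks samples. The small illustration below uses hypothetical toy labels and probabilities (not taken from the results above) to compare the two ways of calling it.

```python
from sklearn.metrics import roc_auc_score

# Hypothetical toy data: true labels and a classifier's predicted
# probabilities for the positive class
y_true = [0, 0, 1, 1]
proba = [0.1, 0.6, 0.4, 0.9]

# Thresholding at 0.5 turns the scores into hard labels, as predict() does
hard = [1 if p >= 0.5 else 0 for p in proba]

auc_from_labels = roc_auc_score(y_true, hard)   # uses only the 0/1 decisions
auc_from_scores = roc_auc_score(y_true, proba)  # uses the full ranking

print("AUC from hard labels:", auc_from_labels)  # 0.5
print("AUC from scores:     ", auc_from_scores)  # 0.75
```

With the scores, three of the four positive/negative pairs are ranked correctly (AUC 0.75); with the thresholded labels, that ranking information is lost and the AUC drops to 0.5.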

2.SVC

```python
from sklearn import datasets, metrics
from sklearn.model_selection import KFold
from sklearn.svm import SVC

# Synthetic binary classification data: 1000 samples, 10 features
x, y = datasets.make_classification(n_samples=1000, n_features=10, n_classes=2)

# 10-fold cross-validation with shuffling
kf = KFold(n_splits=10, shuffle=True)

# Values of the regularization parameter C to try on each fold
Cvalues = [1e-02, 1e-01, 1e00, 1e01, 1e02]

for train_index, test_index in kf.split(x):
    x_train, y_train = x[train_index], y[train_index]
    x_test, y_test = x[test_index], y[test_index]

    for C in Cvalues:
        # Use the loop variable C; hard-coding C=1e-01 would train the same model every time
        clf = SVC(C=C, kernel='rbf', gamma=0.1)
        clf.fit(x_train, y_train)
        pred = clf.predict(x_test)

        print("C: " + str(C))

        acc = metrics.accuracy_score(y_test, pred)
        print("Accuracy_score: " + str(acc))

        f1 = metrics.f1_score(y_test, pred)
        print("f1_score: " + str(f1))

        # SVC has no predict_proba by default; decision_function gives a continuous score
        auc = metrics.roc_auc_score(y_test, clf.decision_function(x_test))
        print("roc_auc_score: " + str(auc))

        print()
```

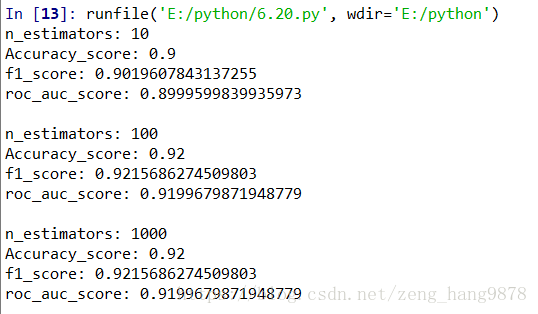
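The loop above compares values of C by reading per-fold printouts. An alternative, sketched here under the assumption that scikit-learn's `GridSearchCV` is acceptable (the original does not use it), runs the same C sweep with 10-fold cross-validation and reports the C with the best mean ROC AUC. `random_state=0` is added only to make the run reproducible.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, KFold
from sklearn.svm import SVC

# Same kind of synthetic data as above; random_state is added for reproducibility
x, y = make_classification(n_samples=1000, n_features=10, n_classes=2, random_state=0)

param_grid = {"C": [1e-02, 1e-01, 1e00, 1e01, 1e02]}

# "roc_auc" scoring uses SVC.decision_function, i.e. a continuous score
search = GridSearchCV(
    SVC(kernel="rbf", gamma=0.1),
    param_grid,
    scoring="roc_auc",
    cv=KFold(n_splits=10, shuffle=True, random_state=0),
)
search.fit(x, y)

# Mean ROC AUC over the 10 folds for every C in the grid
for C, score in zip(param_grid["C"], search.cv_results_["mean_test_score"]):
    print(f"C={C:g}: mean roc_auc={score:.4f}")

print("best C:", search.best_params_["C"])
```

Averaging over folds gives one number per C, which makes the comparison less sensitive to a single lucky or unlucky split.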

3.RandomForestClassifier

```python
from sklearn import datasets, metrics
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import KFold

# Synthetic binary classification data: 1000 samples, 10 features
x, y = datasets.make_classification(n_samples=1000, n_features=10, n_classes=2)

# 10-fold cross-validation with shuffling
kf = KFold(n_splits=10, shuffle=True)

# Numbers of trees to try on each fold
n_estimators_values = [10, 100, 1000]

for train_index, test_index in kf.split(x):
    x_train, y_train = x[train_index], y[train_index]
    x_test, y_test = x[test_index], y[test_index]

    for n_estimators in n_estimators_values:
        clf = RandomForestClassifier(n_estimators=n_estimators)
        clf.fit(x_train, y_train)
        pred = clf.predict(x_test)

        print("n_estimators: " + str(n_estimators))

        acc = metrics.accuracy_score(y_test, pred)
        print("Accuracy_score: " + str(acc))

        f1 = metrics.f1_score(y_test, pred)
        print("f1_score: " + str(f1))

        # Use the positive-class probability so ROC AUC reflects the full ranking
        auc = metrics.roc_auc_score(y_test, clf.predict_proba(x_test)[:, 1])
        print("roc_auc_score: " + str(auc))

        print()
```

Analysis: all three models give similar predictive performance on this dataset.
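The claim that the three models perform about the same is easier to check with per-model averages than with many separate per-fold printouts. The sketch below assumes `cross_validate` from `sklearn.model_selection` and a fixed `random_state` (added for reproducibility; the original does not set one). C=1.0 and n_estimators=100 are picked from the values tried above purely for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import KFold, cross_validate
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

x, y = make_classification(n_samples=1000, n_features=10, n_classes=2, random_state=0)

# The same 10 shuffled folds are reused for every model
cv = KFold(n_splits=10, shuffle=True, random_state=0)

models = {
    "GaussianNB": GaussianNB(),
    "SVC": SVC(C=1.0, kernel="rbf", gamma=0.1),
    "RandomForest": RandomForestClassifier(n_estimators=100, random_state=0),
}
metric_names = ("accuracy", "f1", "roc_auc")

summary = {}
for name, clf in models.items():
    # cross_validate returns one array per metric, keyed "test_<metric>"
    scores = cross_validate(clf, x, y, cv=cv, scoring=list(metric_names))
    summary[name] = {m: scores["test_" + m].mean() for m in metric_names}
    for m in metric_names:
        s = scores["test_" + m]
        print(f"{name:12s} {m:8s} mean={s.mean():.3f} std={s.std():.3f}")
```

If the means differ by less than roughly one standard deviation across folds, the differences between models are hard to distinguish from split-to-split noise.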
