1. Introduction

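The main task of DirectoryScanner is to periodically scan the data blocks on disk and check whether the on-disk block information matches the block information held in FsDatasetImpl. If the two disagree, it updates the information in FsDatasetImpl.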

Note that DirectoryScanner only checks the consistency of blocks in the FINALIZED state, both in memory and on disk.

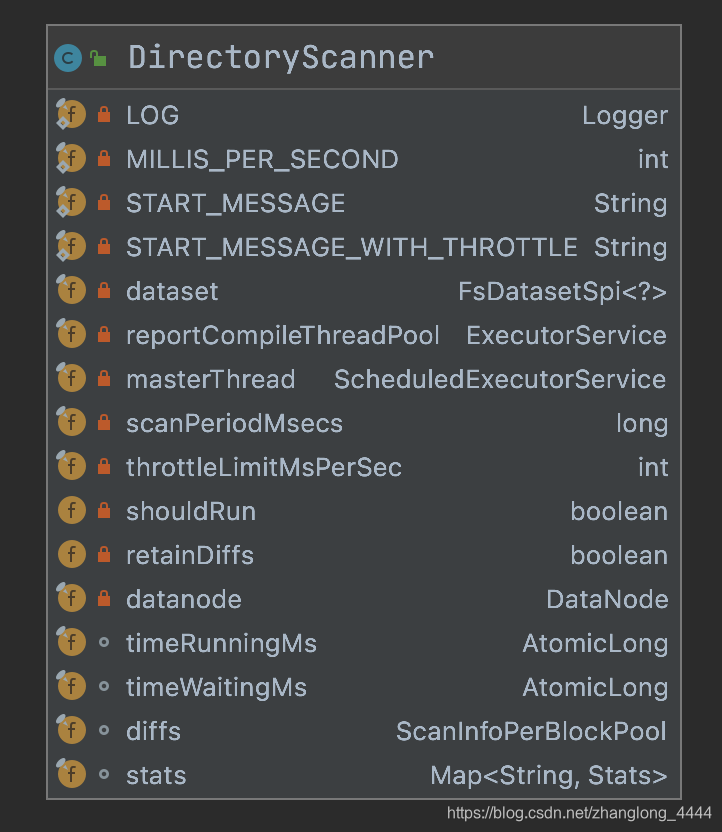

2. Fields

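■ reportCompileThreadPool: a thread pool that collects information about the blocks on disk asynchronously.

■ masterThread: the master thread (a single-threaded ScheduledThreadPoolExecutor). It periodically calls DirectoryScanner.run() to execute the whole scan.

■ diffs: a data structure, keyed by block pool ID, that describes the differences between the block information stored on disk and the information held in memory. It is filled in during the scan. When the scan finishes, the diffs are applied to the FsDatasetImpl object.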
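The following sketch shows what reportCompileThreadPool does. It compiles a disk report asynchronously by submitting one task per volume to a fixed-size pool and then merging the per-volume results into one sorted array.

It is a minimal sketch under stated assumptions, not the real getDiskReport(), which this article does not show:
- The class and method names (AsyncReportSketch, scanVolume, compileReport) are hypothetical.
- Each "volume" is modeled as a plain array of block IDs rather than a real directory tree.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class AsyncReportSketch {
    // Hypothetical stand-in for scanning one volume: returns the block IDs
    // found on that volume in ascending order.
    static long[] scanVolume(long[] blocksOnVolume) {
        long[] sorted = blocksOnVolume.clone();
        Arrays.sort(sorted);
        return sorted;
    }

    // Submits one task per volume to a fixed-size pool (the role played by
    // reportCompileThreadPool) and merges the results into one sorted array.
    static long[] compileReport(List<long[]> volumes, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<long[]>> futures = new ArrayList<>();
            for (long[] volume : volumes) {
                Callable<long[]> task = () -> scanVolume(volume);
                futures.add(pool.submit(task));
            }
            List<Long> all = new ArrayList<>();
            for (Future<long[]> future : futures) {
                for (long id : future.get()) { // waits for that volume's task
                    all.add(id);
                }
            }
            long[] merged = all.stream().mapToLong(Long::longValue).toArray();
            Arrays.sort(merged);
            return merged;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while compiling report", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("volume scan failed", e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<long[]> volumes = List.of(new long[] {5, 1}, new long[] {4, 2, 3});
        System.out.println(Arrays.toString(compileReport(volumes, 2))); // [1, 2, 3, 4, 5]
    }
}
```

The volumes are scanned in parallel, while the caller simply waits on each Future in turn. This matches the idea of collecting the disk report on a separate pool.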

3. Methods

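DirectoryScanner periodically triggers a scan task on the masterThread executor. The interval is set by dfs.datanode.directoryscan.interval and defaults to 21600 seconds (6 hours). The scan itself is carried out by DirectoryScanner.reconcile(), which works in two phases:

1. It calls scan() to collect the differences between the blocks on disk and the blocks in memory, and stores them in the diffs field. To read the blocks stored on disk, scan() uses the reportCompileThreadPool pool, so the disk scanning runs asynchronously.
2. Once scan() has populated diffs, reconcile() calls the dataset's checkAndUpdate() method (declared by FsDatasetSpi and implemented by FsDatasetImpl) for each difference. This updates the replica information held by FsDatasetImpl and brings it back in sync with the replicas on disk.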
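The periodic triggering described above can be sketched with a single-threaded ScheduledThreadPoolExecutor. This is an illustrative sketch only, with three assumptions:
- The task is registered with scheduleAtFixedRate. DirectoryScanner.start() is not shown in this article.
- A period of a few milliseconds replaces the 6-hour default.
- reconcile() is replaced by a hypothetical counter.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ScheduledThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class PeriodicScanSketch {
    // Number of times the hypothetical reconcile() step has run.
    static final AtomicInteger cycles = new AtomicInteger();

    // Stand-in for DirectoryScanner.reconcile(): scan, then apply the diffs.
    static void reconcile() {
        cycles.incrementAndGet();
    }

    // Schedules reconcile() at a fixed rate on a one-thread scheduler (the role
    // of masterThread) and reports whether it ran `times` times within 5 seconds.
    static boolean runCycles(int times, long periodMs) {
        ScheduledThreadPoolExecutor master = new ScheduledThreadPoolExecutor(1);
        CountDownLatch done = new CountDownLatch(times);
        master.scheduleAtFixedRate(() -> {
            reconcile();
            done.countDown();
        }, 0, periodMs, TimeUnit.MILLISECONDS);
        try {
            return done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            master.shutdownNow(); // stop any further cycles
        }
    }

    public static void main(String[] args) {
        System.out.println("three cycles completed: " + runCycles(3, 10));
    }
}
```

A pool size of 1 guarantees that two scan cycles never overlap. With scheduleAtFixedRate, a slow cycle delays the next one instead of running concurrently with it.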

3.1. Constructor

Call chain leading to the constructor:

BPServiceActor#run() -> BPOfferService#verifyAndSetNamespaceInfo() -> DataNode#initBlockPool() -> DataNode#initDirectoryScanner() -> new DirectoryScanner(...)

```java
/**
 * Create a new directory scanner, but don't cycle it running yet.
 *
 * @param datanode the parent datanode
 * @param dataset the dataset to scan
 * @param conf the Configuration object
 */
public DirectoryScanner(DataNode datanode, FsDatasetSpi<?> dataset,
    Configuration conf) {
  this.datanode = datanode;
  this.dataset = dataset;
  // dfs.datanode.directoryscan.interval: 21600 (6 hours)
  int interval = (int) conf.getTimeDuration(
      DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_INTERVAL_KEY,
      DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_INTERVAL_DEFAULT,
      TimeUnit.SECONDS);
  // Scan period
  scanPeriodMsecs = interval * MILLIS_PER_SECOND; //msec

  // dfs.datanode.directoryscan.throttle.limit.ms.per.sec: 1000
  int throttle =
      conf.getInt(
          DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_THROTTLE_LIMIT_MS_PER_SEC_KEY,
          DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_THROTTLE_LIMIT_MS_PER_SEC_DEFAULT);

  if ((throttle > MILLIS_PER_SECOND) || (throttle <= 0)) {
    if (throttle > MILLIS_PER_SECOND) {
      LOG.error(
          DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_THROTTLE_LIMIT_MS_PER_SEC_KEY
          + " set to value above 1000 ms/sec. Assuming default value of " +
          DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_THROTTLE_LIMIT_MS_PER_SEC_DEFAULT);
    } else {
      LOG.error(
          DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_THROTTLE_LIMIT_MS_PER_SEC_KEY
          + " set to value below 1 ms/sec. Assuming default value of " +
          DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_THROTTLE_LIMIT_MS_PER_SEC_DEFAULT);
    }

    throttleLimitMsPerSec =
        DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_THROTTLE_LIMIT_MS_PER_SEC_DEFAULT;
  } else {
    throttleLimitMsPerSec = throttle;
  }

  // dfs.datanode.directoryscan.threads: 1
  int threads =
      conf.getInt(DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_THREADS_KEY,
          DFSConfigKeys.DFS_DATANODE_DIRECTORYSCAN_THREADS_DEFAULT);

  // Thread pool that collects on-disk block information asynchronously
  reportCompileThreadPool = Executors.newFixedThreadPool(threads,
      new Daemon.DaemonFactory());
  masterThread = new ScheduledThreadPoolExecutor(1,
      new Daemon.DaemonFactory());
}
```
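The constructor's configuration handling reduces to two small pure functions.

The sketch below makes several assumptions:
- The names ScannerConfigSketch, effectiveThrottle and scanPeriodMsecs are hypothetical.
- The default of 1000 ms/sec comes from the comment in the code above, not from a specific Hadoop release.
- Unlike the excerpt, it widens to long before multiplying, which avoids int overflow for very large intervals.

```java
class ScannerConfigSketch {
    static final int MILLIS_PER_SECOND = 1000;
    // Assumed default throttle (ms of report compilation allowed per second),
    // taken from the comment in the constructor above.
    static final int THROTTLE_DEFAULT_MS_PER_SEC = 1000;

    // Same rule as the constructor: anything outside 1..1000 falls back to the default.
    static int effectiveThrottle(int configured) {
        if (configured > MILLIS_PER_SECOND || configured <= 0) {
            return THROTTLE_DEFAULT_MS_PER_SEC;
        }
        return configured;
    }

    // Converts the configured interval (seconds) into the scan period in ms.
    // Widening to long before multiplying avoids int overflow for huge intervals.
    static long scanPeriodMsecs(int intervalSeconds) {
        return (long) intervalSeconds * MILLIS_PER_SECOND;
    }

    public static void main(String[] args) {
        System.out.println(scanPeriodMsecs(21600));  // 21600000 ms = 6 hours
        System.out.println(effectiveThrottle(1500)); // out of range -> 1000
        System.out.println(effectiveThrottle(250));  // in range -> 250
    }
}
```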

3.2. run()

After start() has been called, the masterThread pool invokes run() periodically. At its core, run() simply calls reconcile().

```java
/**
 * Main program loop for DirectoryScanner. Runs {@link reconcile()}
 * and handles any exceptions.
 */
@Override
public void run() {
  try {
    if (!shouldRun) {
      //shutdown has been activated
      LOG.warn("this cycle terminating immediately because 'shouldRun' has been deactivated");
      return;
    }

    //We're okay to run - do it
    reconcile();

  } catch (Exception e) {
    //Log and continue - allows Executor to run again next cycle
    LOG.error("Exception during DirectoryScanner execution - will continue next cycle", e);
  } catch (Error er) {
    //Non-recoverable error - re-throw after logging the problem
    LOG.error("System Error during DirectoryScanner execution - permanently terminating periodic scanner", er);
    throw er;
  }
}
```
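run() catches Exception because of the ScheduledExecutorService contract. If any execution of a periodic task throws, all subsequent executions are suppressed, so one failed cycle would stop scanning for good.

Below is a minimal sketch of the same policy:
- A hypothetical Runnable body stands in for reconcile().
- The hypothetical failedCycles counter stands in for the log call.

```java
class ScanTaskSketch implements Runnable {
    private final Runnable body;           // hypothetical stand-in for reconcile()
    private volatile boolean shouldRun = true;
    int failedCycles = 0;                  // cycles that ended with an exception

    ScanTaskSketch(Runnable body) {
        this.body = body;
    }

    void stop() {
        shouldRun = false;
    }

    @Override
    public void run() {
        try {
            if (!shouldRun) {
                return;                    // shutdown requested: skip this cycle
            }
            body.run();
        } catch (Exception e) {
            // Swallow and record: if a periodic task throws, the executor
            // suppresses every later execution, so scanning would stop for good.
            failedCycles++;
        } catch (Error er) {
            throw er;                      // unrecoverable: propagate and end the schedule
        }
    }

    public static void main(String[] args) {
        ScanTaskSketch task = new ScanTaskSketch(() -> {
            throw new IllegalStateException("simulated disk error");
        });
        task.run();
        task.run();
        System.out.println("failed cycles: " + task.failedCycles); // failed cycles: 2
    }
}
```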

3.3. reconcile()

```java
/**
 * Reconcile differences between disk and in-memory blocks
 */
@VisibleForTesting
public void reconcile() throws IOException {
  // Call scan() to collect the differences between on-disk and in-memory blocks;
  // every difference found is stored in the diffs map
  scan();

  // Call FsDatasetImpl.checkAndUpdate() to update the blocks held by the dataset and finish the sync
  for (Entry<String, LinkedList<ScanInfo>> entry : diffs.entrySet()) {
    String bpid = entry.getKey();
    LinkedList<ScanInfo> diff = entry.getValue();

    for (ScanInfo info : diff) {
      dataset.checkAndUpdate(bpid, info);
    }
  }
  if (!retainDiffs) clear();
}
```
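The loop in reconcile() can be modeled as follows. The model makes these simplifications:
- Dataset and its checkAndUpdate(String, long) signature are hypothetical. The real FsDatasetSpi#checkAndUpdate receives a ScanInfo.
- diffs holds plain block IDs instead of ScanInfo objects.
- The scan() call is left out.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;

class ReconcileSketch {
    // Hypothetical simplification of FsDatasetSpi#checkAndUpdate.
    interface Dataset {
        void checkAndUpdate(String bpid, long blockId);
    }

    // Block pool ID -> IDs of blocks whose disk and memory state disagree.
    final Map<String, LinkedList<Long>> diffs = new HashMap<>();
    boolean retainDiffs = false;

    void reconcile(Dataset dataset) {
        // The real method calls scan() first to fill diffs.
        for (Map.Entry<String, LinkedList<Long>> entry : diffs.entrySet()) {
            String bpid = entry.getKey();
            for (long blockId : entry.getValue()) {
                dataset.checkAndUpdate(bpid, blockId);
            }
        }
        if (!retainDiffs) {
            diffs.clear(); // drop the diffs unless retainDiffs is set
        }
    }

    public static void main(String[] args) {
        ReconcileSketch r = new ReconcileSketch();
        r.diffs.put("BP-1", new LinkedList<>(List.of(101L, 102L)));
        r.diffs.put("BP-2", new LinkedList<>(List.of(201L)));
        List<String> applied = new ArrayList<>();
        r.reconcile((bpid, id) -> applied.add(bpid + ":" + id));
        System.out.println(applied.size() + " updates, diffs empty: " + r.diffs.isEmpty());
    }
}
```

Each difference is applied independently per block pool. The map iteration order does not matter, because every entry is processed.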

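3.4. scan()

scan() looks for differences between the blocks on disk and the blocks in memory.

It compares only the "finalized blocks" lists on disk and in memory. Both lists are sorted by block ID and walked in step with two indices (d for the disk report, m for the memory list), much like the merge step of merge sort.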

```java
/**
 * Scan for the differences between disk and in-memory blocks
 * Scan only the "finalized blocks" lists of both disk and memory.
 */
private void scan() {
  clear();
  Map<String, ScanInfo[]> diskReport = getDiskReport();

  // Hold FSDataset lock to prevent further changes to the block map
  try (AutoCloseableLock lock = dataset.acquireDatasetLock()) {
    for (Entry<String, ScanInfo[]> entry : diskReport.entrySet()) {
      String bpid = entry.getKey();
      ScanInfo[] blockpoolReport = entry.getValue();

      Stats statsRecord = new Stats(bpid);
      stats.put(bpid, statsRecord);
      LinkedList<ScanInfo> diffRecord = new LinkedList<ScanInfo>();
      diffs.put(bpid, diffRecord);

      statsRecord.totalBlocks = blockpoolReport.length;
      final List<ReplicaInfo> bl = dataset.getFinalizedBlocks(bpid);
      Collections.sort(bl); // Sort based on blockId

      int d = 0; // index for blockpoolReport
      int m = 0; // index for memReport
      while (m < bl.size() && d < blockpoolReport.length) {
        ReplicaInfo memBlock = bl.get(m);
        ScanInfo info = blockpoolReport[d];
        if (info.getBlockId() < memBlock.getBlockId()) {
          if (!dataset.isDeletingBlock(bpid, info.getBlockId())) {
            // Block is missing in memory
            statsRecord.missingMemoryBlocks++;
            addDifference(diffRecord, statsRecord, info);
          }
          d++;
          continue;
        }
        if (info.getBlockId() > memBlock.getBlockId()) {
          // Block is missing on the disk
          addDifference(diffRecord, statsRecord,
              memBlock.getBlockId(), info.getVolume());
          m++;
          continue;
        }
        // Block file and/or metadata file exists on the disk
        // Block exists in memory
        if (info.getVolume().getStorageType() != StorageType.PROVIDED &&
            info.getBlockFile() == null) {
          // Block metadata file exists and block file is missing
          addDifference(diffRecord, statsRecord, info);
        } else if (info.getGenStamp() != memBlock.getGenerationStamp()
            || info.getBlockLength() != memBlock.getNumBytes()) {
          // Block metadata file is missing or has wrong generation stamp,
          // or block file length is different than expected
          statsRecord.mismatchBlocks++;
          addDifference(diffRecord, statsRecord, info);
        } else if (memBlock.compareWith(info) != 0) {
          // volumeMap record and on-disk files don't match.
          statsRecord.duplicateBlocks++;
          addDifference(diffRecord, statsRecord, info);
        }
        d++;

        if (d < blockpoolReport.length) {
          // There may be multiple on-disk records for the same block, don't increment
          // the memory record pointer if so.
          ScanInfo nextInfo = blockpoolReport[d];
          if (nextInfo.getBlockId() != info.getBlockId()) {
            ++m;
          }
        } else {
          ++m;
        }
      }
      while (m < bl.size()) {
        ReplicaInfo current = bl.get(m++);
        addDifference(diffRecord, statsRecord,
            current.getBlockId(), current.getVolume());
      }
      while (d < blockpoolReport.length) {
        if (!dataset.isDeletingBlock(bpid, blockpoolReport[d].getBlockId())) {
          statsRecord.missingMemoryBlocks++;
          addDifference(diffRecord, statsRecord, blockpoolReport[d]);
        }
        d++;
      }
      LOG.info(statsRecord.toString());
    } //end for
  } //end try-with-resources: dataset lock released here
}
```
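The heart of scan() is a merge of two sorted lists. The sketch below keeps only the index handling:
- It records blocks found only on disk and blocks found only in memory.
- It applies the same rule for duplicate on-disk records: m is not advanced while the next disk record has the same block ID.

It is a simplified model under stated assumptions, not the real implementation. It deliberately omits the generation stamp, length and file comparisons, the isDeletingBlock() check, and the statistics. The class and field names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class MergeDiffSketch {
    final List<Long> missingInMemory = new ArrayList<>();
    final List<Long> missingOnDisk = new ArrayList<>();

    // disk and mem must both be sorted ascending; disk may list the same
    // block ID more than once (e.g. copies on two volumes).
    void diff(long[] disk, long[] mem) {
        int d = 0; // index into the disk report
        int m = 0; // index into the memory report
        while (m < mem.length && d < disk.length) {
            long diskId = disk[d];
            long memId = mem[m];
            if (diskId < memId) {          // found on disk only
                missingInMemory.add(diskId);
                d++;
                continue;
            }
            if (diskId > memId) {          // found in memory only
                missingOnDisk.add(memId);
                m++;
                continue;
            }
            // Same ID on both sides: the real scan() compares generation
            // stamp, length and files here; this sketch treats it as a match.
            d++;
            // Advance m only when the next disk record is a different block,
            // so every duplicate disk record is compared with this memory record.
            if (d >= disk.length || disk[d] != diskId) {
                m++;
            }
        }
        while (m < mem.length) {           // leftover memory-only blocks
            missingOnDisk.add(mem[m++]);
        }
        while (d < disk.length) {          // leftover disk-only blocks
            missingInMemory.add(disk[d++]);
        }
    }

    public static void main(String[] args) {
        MergeDiffSketch s = new MergeDiffSketch();
        s.diff(new long[] {1, 3, 3, 5, 8}, new long[] {2, 3, 5, 6});
        System.out.println("missing in memory: " + s.missingInMemory); // [1, 8]
        System.out.println("missing on disk: " + s.missingOnDisk);     // [2, 6]
    }
}
```

Because both inputs are sorted, the walk costs O(n + k) comparisons for n disk records and k memory records. Block 3 appears twice on disk, and both copies are matched against the single in-memory record 3.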
