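Abstract: This article shows how to make a program "spot the differences" automatically in the WeChat mini program 大家来找茬 (Spot the Difference). The main tools are Python 3 and adb.

Author: yooongchun

WeChat official account: yooongchun小屋

Tencent has released an official spot-the-difference game as a mini program, shown below:

Often I just can't match other players for sharp eyes and quick hands, so I looked for an easier way to play: let a program do it!

Python 3 is used here.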

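- Step 1: Get a screenshot from the phone

```python
import os

os.system("adb.exe exec-out screencap -p >screenshot.png")
```

On Windows, the screenshot saved by the command above may come out corrupted. The cause is the line-ending difference: Windows uses `\r\n` as its newline, while Android runs on the Linux kernel and uses `\n`, and on the way from the phone to the PC each `\n` byte in the binary stream can be turned into `\r\n`. To get a valid image back, that conversion has to be undone. The Python code is:

```python
# Repair the image format.
# When adb's screenshot is saved on Windows, every "\n" in the binary stream
# can end up as "\r\n": Windows and Linux (on which Android is based)
# use different line endings.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # PNG files always start with these 8 bytes


def convert_img(path):
    with open(path, "rb") as f:
        bys = f.read()
    # An intact PNG must not be touched: its signature itself contains "\r\n"
    if bys.startswith(PNG_SIGNATURE):
        return
    bys_ = bys.replace(b"\r\n", b"\n")  # turn "\r\n" back into "\n"
    with open(path, "wb") as f:
        f.write(bys_)
```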
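Why the repair needs care: the 8-byte PNG signature itself contains `\r\n`, so blindly replacing `\r\n` in a file that was *not* altered in transit damages it. The sketch below simulates the LF-to-CRLF corruption on made-up bytes and repairs only when the corrupted signature is present. The helper names (`simulate_crlf_corruption`, `repair_crlf`) are illustrative and not part of the original script.

```python
# PNG files always begin with this 8-byte signature; note it contains b"\r\n".
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def simulate_crlf_corruption(data: bytes) -> bytes:
    """Mimic a transfer that turns every LF byte into CRLF."""
    return data.replace(b"\n", b"\r\n")


def repair_crlf(data: bytes) -> bytes:
    """Undo the corruption only if the PNG signature shows it happened."""
    corrupted_signature = simulate_crlf_corruption(PNG_SIGNATURE)
    if data.startswith(corrupted_signature):
        return data.replace(b"\r\n", b"\n")
    # Already a valid PNG (or not a PNG at all): leave the bytes alone.
    return data


# A fake "PNG": the real signature followed by bytes that contain LF and CRLF.
original = PNG_SIGNATURE + b"\x00\n\x01\r\n\x02"
corrupted = simulate_crlf_corruption(original)
print(repair_crlf(corrupted) == original)  # True
```

A check like this makes the repair safe to run whether or not the transfer actually altered the bytes.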

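- Step 2: Crop the image

The screenshot contains parts we don't need, so it has to be cropped. The image is loaded with Python's OpenCV library (as a NumPy array), so cropping is plain array slicing:

```python
# Crop the image
def crop_image(im, box=(0.20, 0.93, 0.05, 0.95), gap=38, dis=2):
    """
    :param im: screenshot as loaded by cv2.imread
    :param box: crop ratios (start column, end column, start row, end row)
    :param gap: rows removed on each side of the middle strip
    :param dis: row offset applied to the upper picture
    :return: the upper picture, the lower picture and the whole cropped region
    """
    h, w = im.shape[0], im.shape[1]
    region = im[int(h * box[2]):int(h * box[3]), int(w * box[0]):int(w * box[1])]
    rh, rw = region.shape[0], region.shape[1]
    region_1 = region[dis: rh // 2 - gap + dis, 0:rw]
    region_2 = region[rh - rh // 2 + gap: rh, 0:rw]
    return region_1, region_2, region
```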

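- Step 3: Compare the images for differences

The idea is easy to grasp: lay one picture over the other and subtract, and whatever remains is where they differ. A few details need attention, though. The first is cropping the original: the screenshot from the phone contains a lot of area we don't care about, so we have to define the crop region. That is the box parameter the program takes:

box=(0.2,0.93,0.05,0.95)

The four values mean, in order:

start column = 0.2 × image width, end column = 0.93 × image width

start row = 0.05 × image height, end row = 0.95 × image height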
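To make the four ratios concrete, here is a small helper (the name `box_to_pixels` is hypothetical, not part of the original script) that turns them into pixel bounds with the same `int()` truncation `crop_image` uses and rejects a box whose start is not before its end. The 2160 × 1080 screenshot size is made up for illustration.

```python
import numpy as np


def box_to_pixels(height, width, box):
    """Convert box = (col_start, col_end, row_start, row_end) ratios into pixel indices.

    Returns (row_start, row_end, col_start, col_end), truncated with int()
    like crop_image does.
    """
    col_start, col_end, row_start, row_end = box
    c0, c1 = int(width * col_start), int(width * col_end)
    r0, r1 = int(height * row_start), int(height * row_end)
    if not (0 <= c0 < c1 <= width and 0 <= r0 < r1 <= height):
        raise ValueError("box ratios must satisfy 0 <= start < end <= 1")
    return r0, r1, c0, c1


# Hypothetical screenshot: 2160 rows (height) by 1080 columns (width).
screenshot = np.zeros((2160, 1080, 3), dtype=np.uint8)
r0, r1, c0, c1 = box_to_pixels(*screenshot.shape[:2], box=(0.2, 0.93, 0.05, 0.95))
region = screenshot[r0:r1, c0:c1]
print(r0, r1, c0, c1, region.shape)
```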

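Look closely and you'll also notice an unwanted strip in the middle. To separate the upper and lower pictures, we only need to say how many pixels of that strip to cut away, which is the program's second parameter:

gap=38

That is, the region from the first crop is split in half, and 38 rows are removed from each half on the side next to the middle.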
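A quick sanity check of the gap arithmetic, as a sketch: with the slicing `crop_image` uses, both halves come out `rh // 2 - gap` rows tall for any region height `rh`, odd or even, so they can be subtracted element by element. The helper name `split_halves` and the region sizes are hypothetical.

```python
import numpy as np


def split_halves(region, gap, dis):
    """Same slicing as crop_image: drop `gap` rows on each side of the middle."""
    rh = region.shape[0]
    top = region[dis: rh // 2 - gap + dis]
    bottom = region[rh - rh // 2 + gap: rh]
    return top, bottom


for rh in (1800, 1801):  # an even and an odd region height
    region = np.zeros((rh, 800, 3), dtype=np.uint8)
    top, bottom = split_halves(region, gap=38, dis=2)
    print(rh, top.shape, bottom.shape)
```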

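One more issue: the crop parameters were chosen by eye, so your crop may not start exactly at the first row and column of the target pictures, and the two halves can end up slightly misaligned. To fine-tune this misalignment, the program takes a third parameter:

dis=2

This shifts the window taken from the upper picture down by two rows before the two pictures are subtracted.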
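The effect of `dis` can be reproduced on synthetic data. In this sketch the same random pattern is placed in both halves, but the upper copy sits two rows lower than the slicing in `crop_image` expects, as if the crop box were slightly off. The residual between the halves is zero only for `dis=2`. The sizes, the 2-row offset and the `diff_energy` name are all made up for illustration; trying a few `dis` values and keeping the one with the smallest residual is a suggestion, not part of the original script.

```python
import numpy as np

GAP, OFFSET = 38, 2   # hypothetical gap and true misalignment (rows)
RH, WIDTH = 200, 10   # hypothetical region size
H = RH // 2 - GAP     # height of each half after the gap is removed

rng = np.random.default_rng(0)
pattern = rng.integers(1, 256, size=(H, WIDTH), dtype=np.uint8)  # never 0

region = np.zeros((RH, WIDTH), dtype=np.uint8)
region[OFFSET:OFFSET + H] = pattern        # upper picture, 2 rows too low
region[RH - RH // 2 + GAP:RH] = pattern    # lower picture, where the slicing expects it


def diff_energy(region, gap, dis):
    """Sum of absolute differences between the two halves, sliced as in crop_image."""
    rh = region.shape[0]
    top = region[dis: rh // 2 - gap + dis].astype(np.int16)
    bottom = region[rh - rh // 2 + gap: rh].astype(np.int16)
    return int(np.abs(top - bottom).sum())


for dis in range(4):
    print(dis, diff_energy(region, GAP, dis))  # the sum is 0 only when dis == 2
```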

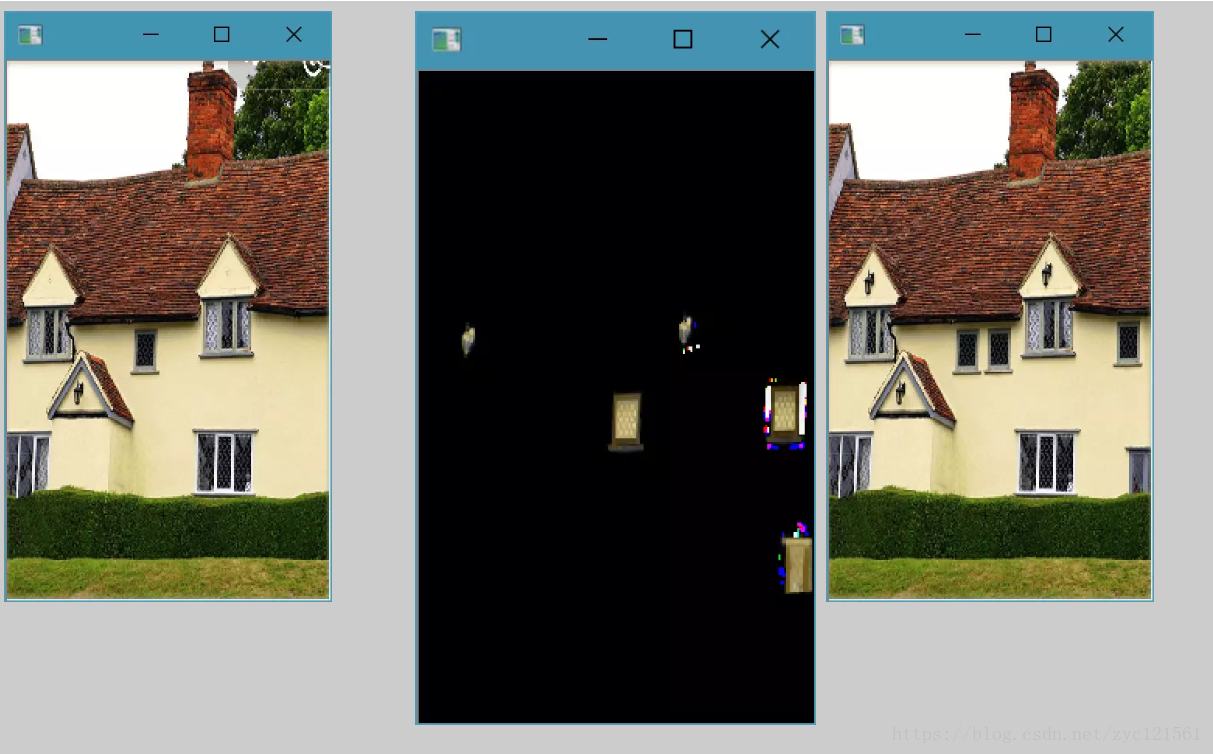

With the difference image in hand, let's look at the result.

Ha, five differences, finally exposed!

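```python
import cv2
import numpy as np


# Find the differences and return the difference image
def diff(img1, img2):
    # Absolute difference: plain uint8 subtraction (img1 - img2) wraps around
    # modulo 256 wherever img2 is brighter than img1
    delta = cv2.absdiff(img1, img2)
    # Filter with a morphological opening
    kernel = np.ones((5, 5), np.uint8)
    opening = cv2.morphologyEx(delta, cv2.MORPH_OPEN, kernel)
    return opening
```

At this point you can already look at this difference image and "spot the differences" yourself.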
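One detail worth knowing when subtracting images: OpenCV loads images as uint8 NumPy arrays, and NumPy's `-` on uint8 arrays wraps around modulo 256 instead of going negative. A pixel that is darker in the first image than in the second therefore shows up as a large value rather than a small one. The snippet below shows the effect on made-up pixel values and computes the absolute difference in a wider type; for uint8 inputs, `cv2.absdiff` produces the same values.

```python
import numpy as np

a = np.array([[10, 200]], dtype=np.uint8)
b = np.array([[20, 150]], dtype=np.uint8)

wrapped = a - b  # uint8 arithmetic wraps: 10 - 20 becomes 246
absolute = np.abs(a.astype(np.int16) - b.astype(np.int16)).astype(np.uint8)

print(wrapped)   # values 246 and 50
print(absolute)  # values 10 and 50
```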

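Of course, an ugly difference image like that is hard to live with. No problem, let's keep improving it.

Having found the differences, how do we show them "elegantly"? My first thought was to draw a circle around each one on the original picture, which is intuitive and still "elegant". Let's do it!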

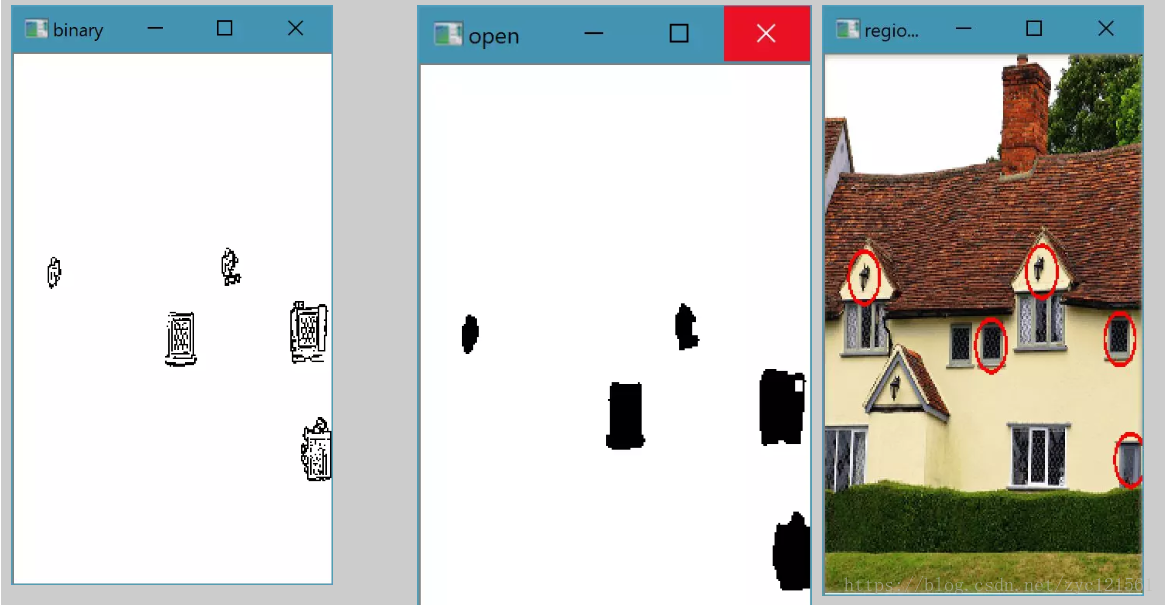

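First, use OpenCV to find the contours in the difference image. Note that the top-right corner of the screenshot holds the mini program's back button, which interferes with contour extraction, so that icon has to be blanked out first. Before looking for contours, the image is converted to a binary image, and a morphological opening removes the noise. This brings in the program's fourth parameter, the filter kernel size:

filter_sz=25

Finally, find the outer contours and keep the first n by perimeter, which is the program's fifth parameter:

num=5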
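The "keep the n contours with the longest perimeter" rule can be written as a small pure-Python function. This sketch (the name `top_n_indices` is hypothetical) takes the perimeters, for example from `cv2.arcLength(contour, True)`, and returns the indices of the n longest. Unlike the threshold comparison in `contour_pos`, it never returns more than n items when perimeters tie, and it returns an empty list when no contours were found.

```python
def top_n_indices(lengths, n):
    """Indices of the n largest values in `lengths`, longest first.

    If there are fewer than n values, all indices are returned.
    Ties keep their original order because Python's sort is stable.
    """
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    return order[:n]


perimeters = [12.0, 80.5, 33.2, 80.5, 4.1, 57.0]  # made-up perimeters
print(top_n_indices(perimeters, 3))  # [1, 3, 5]
```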

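Then compute the minimum enclosing circle of each contour to get its center and radius, and draw it on the original picture. The result:

How about that, a bit more "elegant", isn't it?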

```python
import logging
import os
import time

import cv2
import numpy as np


# Blank out the top-right corner, i.e. the gray block holding the
# mini program's back and share buttons
def dispose_region(img):
    h, w = img.shape[0], img.shape[1]
    img[0:int(0.056 * h), int(0.68 * w):w] = 0
    return img


# Find contour centers and return their coordinates
def contour_pos(img, num=5, filter_size=5):
    """
    :param img: the 3-channel (BGR) difference image to search
    :param num: number of contours to return; if it exceeds the number of
                contours found, all contours are returned
    :param filter_size: kernel size of the morphological opening
    :return: list of dicts holding the center and radius of each
             contour's minimum enclosing circle
    """
    position = []  # return value
    # Binarize, then remove noise with an opening
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    binary = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 11, 2)
    cv2.namedWindow("binary", cv2.WINDOW_NORMAL)
    cv2.imshow("binary", binary)
    kernel = np.ones((filter_size, filter_size), np.uint8)
    opening = cv2.morphologyEx(binary, cv2.MORPH_OPEN, kernel)  # opening
    cv2.namedWindow("open", cv2.WINDOW_NORMAL)
    cv2.imshow("open", opening)
    # findContours returns 3 values in OpenCV 3 and 2 in OpenCV 4;
    # [-2] picks the contour list in both cases
    contours = cv2.findContours(np.max(opening) - opening, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]
    if len(contours) == 0:
        return position
    # Use the contour perimeters to decide which contours to return
    arclen = [cv2.arcLength(contour, True) for contour in contours]
    arc = sorted(arclen, reverse=True)
    thresh = arc[min(num, len(arc)) - 1]
    for contour, length in zip(contours, arclen):
        if length < thresh:
            continue
        (x, y), radius = cv2.minEnclosingCircle(contour)
        position.append({"center": (int(x), int(y)), "radius": int(radius)})
    return position


# Show the result on the original image
def dip_diff(origin, region, region_1, region_2, dispose_img, position, box, setting_radius=40, gap=38, dis=2):
    # Circle every difference in the lower picture
    for pos in position:
        center, radius = pos["center"], pos["radius"]
        if setting_radius is not None:
            radius = setting_radius
        cv2.circle(region_2, center, radius, (0, 0, 255), 5)
    cv2.namedWindow("region2", cv2.WINDOW_NORMAL)
    cv2.imshow("region2", region_2)
    # Binarize and open the difference image, then build a 3-channel mask
    gray = cv2.cvtColor(dispose_img, cv2.COLOR_BGR2GRAY)
    binary = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 11, 2)
    kernel = np.ones((15, 15), np.uint8)
    opening = cv2.morphologyEx(binary, cv2.MORPH_OPEN, kernel)  # opening
    merged = 255 - cv2.merge([opening, opening, opening])
    # Combine the mask with the upper picture and put the lower picture back
    h, w = region_1.shape[0], region_1.shape[1]
    region[0:h, 0:w] *= merged
    region[0:h, 0:w] += region_1
    region[h + gap * 2 - dis:2 * h + gap * 2 - dis, 0:w] = region_2
    # Paste the cropped region back into the full screenshot
    orih, oriw = origin.shape[0], origin.shape[1]
    origin[int(orih * box[2]):int(orih * box[3]), int(oriw * box[0]):int(oriw * box[1])] = region
    cv2.namedWindow("show diff", cv2.WINDOW_NORMAL)
    cv2.imshow("show diff", origin)
    cv2.waitKey(0)


# Tap each difference automatically
def auto_click(origin, region_1, box, position, gap=38, dis=2):
    h, w = origin.shape[0], origin.shape[1]
    rh = region_1.shape[0]
    for pos in position:
        center = pos["center"]
        # Convert lower-picture coordinates into screen coordinates
        x = int(w * box[0] + center[0])
        y = int(h * box[2] + rh - dis + 2 * gap + center[1])
        os.system("adb.exe shell input tap %d %d" % (x, y))
        logging.info("tap:(%d,%d)" % (x, y))
        time.sleep(0.05)
```
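`auto_click` mixes two concerns: converting a circle center from lower-picture coordinates to screen coordinates, and sending the tap. Splitting them makes the arithmetic testable without a phone. The sketch below keeps the same formula as `auto_click`; the function names are hypothetical, and passing an argument list to `subprocess.run` (instead of a formatted string to `os.system`) is a swapped-in choice that avoids going through the shell.

```python
import subprocess


def to_screen(center, screen_w, screen_h, top_h, box, gap=38, dis=2):
    """Same formula as auto_click: offset a lower-picture point into screen pixels.

    top_h is the height of the upper picture (region_1.shape[0]).
    """
    x = int(screen_w * box[0] + center[0])
    y = int(screen_h * box[2] + top_h - dis + 2 * gap + center[1])
    return x, y


def tap_command(x, y, adb="adb"):
    """Argument list for `adb shell input tap x y`."""
    return [adb, "shell", "input", "tap", str(x), str(y)]


def tap(x, y, adb="adb"):
    # check=True raises CalledProcessError if adb exits with a non-zero status
    subprocess.run(tap_command(x, y, adb), check=True)
```

If adb is not on the PATH, pass its full path as the `adb` argument.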

Finally, here is the complete code:

"""

大家来找茬微信小程序腾讯官方版 自动找出图片差异

"""

__author__ = "yooongchun"

__email__ = "yooongchun@foxmail.com"

__site__ = "www.yooongchun.com"

import cv2

import numpy as np

import os

import time

import sys

import logging

import threading

logging.basicConfig(level=logging.INFO)

DEBUG = True # 开启debug模式,供调试用,正常使用情况下请不要修改

# 转换图片格式

# adb 工具直接截图保存到电脑的二进制数据流在windows下"\n" 会被解析为"\r\n",

# 这是由于Linux系统下和Windows系统下表示的不同造成的,而Andriod使用的是Linux内核

def convert_img(path):

with open(path, "br") as f:

bys = f.read()

bys_ = bys.replace(b"\r\n", b"\n") # 二进制流中的"\r\n" 替换为"\n"

with open(path, "bw") as f:

f.write(bys_)

# 裁剪图片

def crop_image(im, box=(0.20, 0.93, 0.05, 0.95), gap=38, dis=2):

'''

:param path: 图片路径

:param box: 裁剪的参数:比例

:param gap: 中间裁除区域

:param dis: 偏移距离

:return: 返回裁剪出来的区域

'''

h, w = im.shape[0], im.shape[1]

region = im[int(h * box[2]):int(h * box[3]), int(w * box[0]):int(w * box[1])]

rh, rw = region.shape[0], region.shape[1]

region_1 = region[0 + dis: int(rh / 2) - gap + dis, 0: rw]

region_2 = region[rh - int(rh / 2) + gap: rh, 0:rw]

return region_1, region_2, region

# 查找不同返回差值图

def diff(img1, img2):

diff = (img1 - img2)

# 形态学开运算滤波

kernel = np.ones((5, 5), np.uint8)

opening = cv2.morphologyEx(diff, cv2.MORPH_OPEN, kernel)

return opening

# 去除右上角的多余区域,即显示小程序返回及分享的灰色区域块

def dispose_region(img):

h, w = img.shape[0], img.shape[1]

img[0:int(0.056 * h), int(0.68 * w):w] = 0

return img

# 查找轮廓中心返回坐标值

def contour_pos(img, num=5, filter_size=5):

'''

:param img: 查找的目标图,需为二值图

:param num: 返回的轮廓数量,如果该值大于轮廓总数,则返回轮廓总数

:return: 返回值为轮廓的最小外接圆的圆心坐标和半径,存放在一个list中

'''

position = [] # 保存返回值

# 计算轮廓

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

binary = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 11, 2)

cv2.namedWindow("binary", cv2.WINDOW_NORMAL)

cv2.imshow("binary", binary)

kernel = np.ones((filter_size, filter_size), np.uint8)

opening = cv2.morphologyEx(binary, cv2.MORPH_OPEN, kernel) # 开运算

cv2.namedWindow("open", cv2.WINDOW_NORMAL)

cv2.imshow("open", opening)

image, contours, hierarchy = cv2.findContours(np.max(opening) - opening, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

# 根据轮廓周长大小决定返回的轮廓

arclen = [cv2.arcLength(contour, True) for contour in contours]

arc = arclen.copy()

arc.sort(reverse=True)

if len(arc) >= num:

thresh = arc[num - 1]

else:

thresh = arc[len(arc) - 1]

for index, contour in enumerate(contours):

if cv2.arcLength(contour, True) < thresh:

continue

(x, y), radius = cv2.minEnclosingCircle(contour)

center = (int(x), int(y))

radius = int(radius)

position.append({"center": center, "radius": radius})

return position

# 在原图上显示

def dip_diff(origin, region, region_1, region_2, dispose_img, position, box, setting_radius=40, gap=38, dis=2):

for pos in position:

center, radius = pos["center"], pos["radius"]

if setting_radius is not None:

radius = setting_radius

cv2.circle(region_2, center, radius, (0, 0, 255), 5)

cv2.namedWindow("region2",cv2.WINDOW_NORMAL)

cv2.imshow("region2",region_2)

gray = cv2.cvtColor(dispose_img, cv2.COLOR_BGR2GRAY)

binary = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 11, 2)

kernel = np.ones((15, 15), np.uint8)

opening = cv2.morphologyEx(binary, cv2.MORPH_OPEN, kernel) # 开运算

merged = 255 - cv2.merge([opening, opening, opening])

h, w = region_1.shape[0], region_1.shape[1]

region[0:h, 0:w] *= merged

region[0:h, 0:w] += region_1

region[h + gap * 2 - dis:2 * h + gap * 2 - dis, 0:w] = region_2

orih, oriw = origin.shape[0], origin.shape[1]

origin[int(orih * box[2]):int(orih * box[3]), int(oriw * box[0]):int(oriw * box[1])] = region

cv2.namedWindow("show diff", cv2.WINDOW_NORMAL)

cv2.imshow("show diff", origin)

cv2.waitKey(0)

# 在原图上绘制圆

def draw_circle(origin, region_1, position, box, gap=38, dis=2):

h, w = origin.shape[0], origin.shape[1]

rh = region_1.shape[0]

for pos in position:

center, radius = pos["center"], pos["radius"]

radius = 40

x = int(w * box[0] + center[0])

y = int(h * box[2] + rh - dis + 2 * gap + center[1])

cv2.circle(origin, (x, y), radius, (0, 0, 255), 3)

cv2.namedWindow("origin with diff", cv2.WINDOW_NORMAL)

cv2.imshow("origin with diff", origin)

cv2.waitKey(0)

# 自动点击

def auto_click(origin, region_1, box, position, gap=38, dis=2):

h, w = origin.shape[0], origin.shape[1]

rh = region_1.shape[0]

for pos in position:

center, radius = pos["center"], pos["radius"]

x = int(w * box[0] + center[0])

y = int(h * box[2] + rh - dis + 2 * gap + center[1])

os.system("adb.exe shell input tap %d %d" % (x, y))

logging.info("tap:(%d,%d)" % (x, y))

time.sleep(0.05)

# 主函数入口

def main(argv):

# 参数列表,程序运行需要提供的参数

# box = None # 裁剪原始图像的参数,分别为宽和高的比例倍

# gap = None # 图像中间间隔的一半大小

# dis = None # 图像移位,微调系数

# num = None # 显示差异的数量

# filter_sz = None # 滤波核大小

# auto_clicked=True

# 仅有一个参数,则使用默认参数

if len(argv) == 1:

box = (0.20, 0.93, 0.05, 0.95)

gap = 38

dis = 2

num = 5

filter_sz = 13

auto_clicked = "True"

else: # 多个参数时需要进行参数解析,参数使用等号分割

try:

# 设置参数

para_pairs = {}

paras = argv[1:] # 参数

for para in paras:

para_pairs[para.split("=")[0]] = para.split("=")[1]

# 参数配对

if "gap" in para_pairs.keys():

gap = int(para_pairs["gap"])

else:

gap = 38

if "box" in para_pairs.keys():

box = tuple([float(i) for i in para_pairs["box"][1:-1].split(",")])

else:

box = (0.20, 0.93, 0.05, 0.95)

if "dis" in para_pairs.keys():

dis = int(para_pairs["dis"])

else:

dis = 2

if "num" in para_pairs.keys():

num = int(para_pairs["num"])

else:

num = 5

if "filter_sz" in para_pairs.keys():

filter_sz = int(para_pairs["filter_sz"])

else:

filter_sz = 13

if "auto_clicked" in para_pairs.keys():

auto_clicked = para_pairs["auto_clicked"]

else:

auto_clicked = "True"

except IOError:

logging.info("参数出错,请重新输入!")

return

st = time.time()

try:

os.system("adb.exe exec-out screencap -p >screenshot.png")

convert_img("screenshot.png")

except IOError:

logging.info("从手机获取图片出错,请检查adb工具是否安装及手机是否正常连接!")

return

logging.info(">>>从手机截图用时:%0.2f 秒\n" % (time.time() - st))

st = time.time()

try:

origin = cv2.imread("screenshot.png") # 原始图像

region_1, region_2, region = crop_image(origin, box=box, gap=gap, dis=dis)

diff_img = diff(region_1, region_2)

dis_img = dispose_region(diff_img)

position = contour_pos(dis_img, num=num, filter_size=filter_sz)

while len(position) < num and filter_sz > 3:

filter_sz -= 1

position = contour_pos(dis_img, num=num, filter_size=filter_sz)

except IOError:

logging.info("处理图片出错!")

return

try:

if auto_clicked is "True":

threading.Thread(target=auto_click, args=(origin, region_1, box, position, gap, dis)).start()

except IOError:

logging.info(">>>尝试点击出错!")

logging.info(">>>处理图片用时:%0.2f 秒\n" % (time.time() - st))

try:

dip_diff(origin, region, region_1, region_2, dis_img, position, box)

# draw_circle(origin, region_1, position, box, gap=gap, dis=dis)

except IOError:

logging.info("重组显示出错!")

return

if __name__ == "__main__":

if not DEBUG:

while True:

main(sys.argv)

else:

box = (0.19, 0.95, 0.05, 0.95)

gap = 38

dis = 2

num = 5

filter_sz = 13

origin = cv2.imread("c:/users/fanyu/desktop/adb/screenshot.png") # 原始图像

region_1, region_2, region = crop_image(origin, box=box, gap=gap, dis=dis)

cv2.namedWindow("", cv2.WINDOW_NORMAL)

cv2.imshow("", region_2)

diff_img = diff(region_1, region_2)

dis_img = dispose_region(diff_img)

cv2.namedWindow(" ", cv2.WINDOW_NORMAL)

cv2.imshow(" ", region_1)

cv2.imshow("", dis_img)

position = contour_pos(dis_img, num=num, filter_size=filter_sz)

dip_diff(origin, region, region_1, region_2, dis_img, position, box)

# draw_circle(origin, region_1, position, box, gap=gap, dis=dis)

You can also download the full version from my GitHub:

https://github.com/yooongchun/auto_find_difference

The complete article is also available on the WeChat official account: yooongchun小屋
