Part 1: A Complete Tutorial for Deploying Large Models Locally

1.1 Environment Preparation and Hardware Requirements

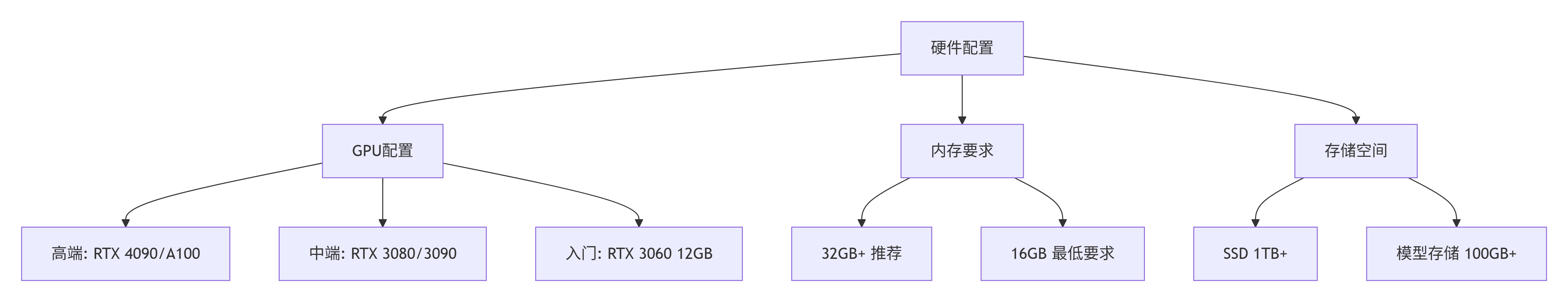

Hardware requirements

```mermaid
graph TD
    A["Hardware configuration"] --> B["GPU"]
    A --> C["Memory"]
    A --> D["Storage"]
    B --> B1["High-end: RTX 4090/A100"]
    B --> B2["Mid-range: RTX 3080/3090"]
    B --> B3["Entry-level: RTX 3060 12GB"]
    C --> C1["32GB+ recommended"]
    C --> C2["16GB minimum"]
    D --> D1["SSD 1TB+"]
    D --> D2["Model storage 100GB+"]
```

Basic environment setup

```python
# Environment check script
import platform

import psutil
import torch


def check_environment():
    print("=" * 50)
    print("Environment check report")
    print("=" * 50)

    # System information
    print(f"Operating system: {platform.system()} {platform.release()}")
    print(f"Python version: {platform.python_version()}")

    # CPU and memory information
    print(f"CPU cores (logical): {psutil.cpu_count()}")
    print(f"Total memory: {psutil.virtual_memory().total / (1024**3):.2f} GB")

    # GPU information
    if torch.cuda.is_available():
        print("CUDA available: yes")
        print(f"CUDA version: {torch.version.cuda}")
        print(f"GPU count: {torch.cuda.device_count()}")
        for i in range(torch.cuda.device_count()):
            # Query PyTorch directly so the index always matches the device
            # PyTorch sees (for example when CUDA_VISIBLE_DEVICES is set).
            props = torch.cuda.get_device_properties(i)
            print(f"GPU {i}: {props.name}, VRAM: {props.total_memory / (1024**2):.0f} MB")
    else:
        print("CUDA available: no")

    # PyTorch information
    print(f"PyTorch version: {torch.__version__}")


if __name__ == "__main__":
    check_environment()
```
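
The report above covers the operating system, CPU, memory, and GPU, but not the storage requirement from the hardware diagram (100GB+ for models). A minimal sketch of a disk check using only the standard library follows. The function name `check_disk_space` and the default of 100 GB are illustrative assumptions, not part of the original script.

```python
import shutil


def check_disk_space(path=".", required_gb=100):
    """Return (ok, free_gb) for the filesystem that contains `path`."""
    usage = shutil.disk_usage(path)
    free_gb = usage.free / (1024 ** 3)
    return free_gb >= required_gb, free_gb


if __name__ == "__main__":
    # Hypothetical threshold taken from the "Model storage 100GB+" item.
    ok, free_gb = check_disk_space(".", required_gb=100)
    status = "OK" if ok else "insufficient"
    print(f"Free disk space: {free_gb:.1f} GB ({status}, 100 GB wanted for models)")
```

Pass the directory where models will be stored as `path`, since it may sit on a different disk from the working directory.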

1.2 Model Selection and Download

Comparison of common open-source models

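| Model | Parameters | Minimum VRAM | Recommended VRAM | Notes |
|---|---|---|---|---|
| Llama-2-7B | 7B | 16GB | 24GB | Good quality, friendly license |
| ChatGLM3-6B | 6B | 12GB | 16GB | Chinese-English bilingual, strong reasoning |
| Qwen-7B | 7B | 16GB | 24GB | Optimized for Chinese, multi-turn dialogue |
| Baichuan2-7B | 7B | 16GB | 24GB | Strong Chinese capability, commercial-use friendly |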
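
The VRAM figures in the table can be sanity-checked with simple arithmetic: the weights alone take roughly (parameter count) × (bytes per parameter). The sketch below assumes the nominal parameter counts from the table and counts weights only. Activations, the KV cache, and framework overhead come on top, which is one reason the minimum VRAM values are higher than the raw weight size. The helper name `weight_memory_gib` is illustrative.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params, precision="fp16"):
    """Estimate the memory taken by the model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / (1024 ** 3)


if __name__ == "__main__":
    # Nominal sizes from the table (assumption: 7B = 7e9, 6B = 6e9).
    for name, params in [("Llama-2-7B", 7e9), ("ChatGLM3-6B", 6e9)]:
        estimates = ", ".join(
            f"{precision}: {weight_memory_gib(params, precision):.1f} GiB"
            for precision in BYTES_PER_PARAM
        )
        print(f"{name}: {estimates}")
```

For a 7B model this gives about 13 GiB of weights in FP16, which is consistent with the 16GB minimum listed above.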

Model download script

```python
import os

import torch
from huggingface_hub import snapshot_download
from transformers import AutoModelForCausalLM, AutoTokenizer


class ModelDownloader:
    def __init__(self, cache_dir="./models"):
        self.cache_dir = cache_dir
        os.makedirs(cache_dir, exist_ok=True)

    def download_model(self, model_name, local_dir=None):
        """Download a model repository from the Hugging Face Hub."""
        if local_dir is None:
            local_dir = os.path.join(self.cache_dir, model_name.replace("/", "_"))

        print(f"Downloading model: {model_name}")
        print(f"Target directory: {local_dir}")

        try:
            # snapshot_download fetches the entire model repository.
            # Recent huggingface_hub releases resume interrupted downloads
            # automatically and deprecate `resume_download` and
            # `local_dir_use_symlinks`, so those arguments are omitted here.
            snapshot_download(repo_id=model_name, local_dir=local_dir)
            print(f"Download finished: {local_dir}")
            return local_dir
        except Exception as e:
            print(f"Download failed: {e}")
            return None

    def load_model(self, model_path, model_name):
        """Load the model and its tokenizer from a local directory."""
        print(f"Loading model: {model_name}")

        # Load the tokenizer. trust_remote_code=True executes Python code
        # shipped with the model repository, so use it only with trusted repos.
        tokenizer = AutoTokenizer.from_pretrained(
            model_path,
            trust_remote_code=True,
        )

        # Load the model in FP16 and let accelerate place it on the available devices
        model = AutoModelForCausalLM.from_pretrained(
            model_path,
            torch_dtype=torch.float16,
            device_map="auto",
            trust_remote_code=True,
            low_cpu_mem_usage=True,
        )

        print("Model loaded.")
        return model, tokenizer


# Usage example
if __name__ == "__main__":
    downloader = ModelDownloader()

    # Download the ChatGLM3-6B model
    model_path = downloader.download_model("THUDM/chatglm3-6b")

    if model_path:
        model, tokenizer = downloader.load_model(model_path, "ChatGLM3-6B")
```
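
Because `download_model` only reports success or failure, it can help to look at the target directory before trying to load a multi-gigabyte model from it. The following is a minimal sketch of such a check. It assumes the usual Hugging Face repository layout (a `config.json` plus weight files ending in `.safetensors` or `.bin`); the function `check_model_dir` is hypothetical and does not verify file integrity.

```python
import os

# Assumption: weights are stored in one of these common formats.
WEIGHT_SUFFIXES = (".safetensors", ".bin")


def check_model_dir(model_dir):
    """Return a list of problems found; an empty list means the basic checks passed."""
    if not os.path.isdir(model_dir):
        return [f"not a directory: {model_dir}"]

    problems = []
    files = os.listdir(model_dir)
    if "config.json" not in files:
        problems.append("missing config.json")
    if not any(name.endswith(WEIGHT_SUFFIXES) for name in files):
        problems.append("no weight files (*.safetensors or *.bin)")
    return problems


if __name__ == "__main__":
    # Path follows the naming used by ModelDownloader: "/" replaced with "_".
    print(check_model_dir("./models/THUDM_chatglm3-6b"))
```

An empty list only means the expected files exist; a partially downloaded weight file would still pass this check.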

1.3 Quantized Deployment Options

```python
import torch
from transformers import AutoModel, AutoTokenizer, BitsAndBytesConfig


class QuantizedModelLoader:
    """Load a model in INT8, INT4, or FP16. INT8/INT4 require the bitsandbytes package."""

    def __init__(self):
        self.supported_quant_types = ["int8", "int4", "fp16"]

    def load_quantized_model(self, model_name, quant_type="int8", device_map="auto"):
        """Load the model in the requested precision."""
        # Reject unknown types instead of silently falling back to FP16
        if quant_type not in self.supported_quant_types:
            raise ValueError(
                f"Unsupported quant_type {quant_type!r}; "
                f"expected one of {self.supported_quant_types}"
            )
        if quant_type == "int8":
            return self._load_int8_model(model_name, device_map)
        if quant_type == "int4":
            return self._load_int4_model(model_name, device_map)
        return self._load_fp16_model(model_name, device_map)

    def _load_int8_model(self, model_name, device_map):
        """Load the model with 8-bit quantization."""
        quantization_config = BitsAndBytesConfig(
            load_in_8bit=True,
            llm_int8_threshold=6.0,
            llm_int8_has_fp16_weight=False,
        )
        model = AutoModel.from_pretrained(
            model_name,
            quantization_config=quantization_config,
            device_map=device_map,
            trust_remote_code=True,
        )
        return model

    def _load_int4_model(self, model_name, device_map):
        """Load the model with 4-bit (NF4) quantization."""
        quantization_config = BitsAndBytesConfig(
            load_in_4bit=True,
            bnb_4bit_compute_dtype=torch.float16,
            bnb_4bit_use_double_quant=True,
            bnb_4bit_quant_type="nf4",
        )
        model = AutoModel.from_pretrained(
            model_name,
            quantization_config=quantization_config,
            device_map=device_map,
            trust_remote_code=True,
            torch_dtype=torch.float16,
        )
        return model

    def _load_fp16_model(self, model_name, device_map):
        """Load the model in FP16 without quantization."""
        model = AutoModel.from_pretrained(
            model_name,
            torch_dtype=torch.float16,
            device_map=device_map,
            trust_remote_code=True,
            low_cpu_mem_usage=True,
        )
        return model


# Quantized deployment example
def demo_quantized_models():
    loader = QuantizedModelLoader()
    tokenizer = AutoTokenizer.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True)

    print("Loading the 4-bit quantized model...")
    model_int4 = loader.load_quantized_model("THUDM/chatglm3-6b", "int4")

    # Test inference; the inputs must be on the same device as the model
    prompt = "你好,请介绍一下人工智能的历史"  # "Hello, please introduce the history of AI"
    inputs = tokenizer(prompt, return_tensors="pt").to(model_int4.device)

    with torch.no_grad():
        outputs = model_int4.generate(
            **inputs,
            max_length=500,
            temperature=0.7,
            do_sample=True,
        )

    response = tokenizer.decode(outputs[0], skip_special_tokens=True)
    print(f"Model response: {response}")


if __name__ == "__main__":
    demo_quantized_models()
```
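
To give a feel for what quantization does to the weights, here is a deliberately simplified sketch of symmetric absmax INT8 quantization in pure Python. It is an illustration of the general idea only: bitsandbytes uses more elaborate schemes (for example outlier handling for INT8 and the NF4 data type for 4-bit), and the function names below are hypothetical.

```python
def quantize_absmax_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(v / scale) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.02, -0.5, 0.13, 1.27, -0.94]  # made-up example values
    q, scale = quantize_absmax_int8(weights)
    restored = dequantize(q, scale)
    for original, approx in zip(weights, restored):
        print(f"{original:+.4f} -> {approx:+.4f} (error {abs(original - approx):.5f})")
```

Each weight is stored in one byte instead of two, and the rounding error per value is at most half the scale factor. That trade of a small accuracy loss for a smaller memory footprint is what the INT8 and INT4 loaders above rely on.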

1.4 Web Service Deployment

```python
import queue
import threading
import time

import torch
from flask import Flask, request, jsonify
from transformers import AutoModel, AutoTokenizer


class ModelServer:
    def __init__(self, model_path, model_name):
        self.app = Flask(__name__)
        self.model_path = model_path
        self.model_name = model_name
        self.request_queue = queue.Queue()
        self.result_dict = {}
        self.request_id = 0
        self.lock = threading.Lock()

        # Register routes, load the model, then start the worker thread
        self._setup_routes()
        self._initialize_model()
        self._start_worker()

    def _initialize_model(self):
        """Load the tokenizer and the model."""
        print("Loading model...")
        self.tokenizer = AutoTokenizer.from_pretrained(
            self.model_path,
            trust_remote_code=True,
        )
        self.model = AutoModel.from_pretrained(
            self.model_path,
            torch_dtype=torch.float16,
            device_map="auto",
            trust_remote_code=True,
        ).eval()
        print("Model loaded.")

    def _setup_routes(self):
        """Register the HTTP routes."""

        @self.app.route("/chat", methods=["POST"])
        def chat():
            # Tolerate a missing or invalid JSON body instead of failing on None
            data = request.get_json(silent=True) or {}
            message = data.get("message", "")
            history = data.get("history", [])
            max_length = data.get("max_length", 1000)
            temperature = data.get("temperature", 0.7)

            # Allocate a request ID
            with self.lock:
                request_id = self.request_id
                self.request_id += 1

            # Put the request on the processing queue
            self.request_queue.put({
                "request_id": request_id,
                "message": message,
                "history": history,
                "max_length": max_length,
                "temperature": temperature,
            })

            # Wait for the worker thread to publish the result
            start_time = time.time()
            while request_id not in self.result_dict:
                if time.time() - start_time > 60:  # 60-second timeout
                    return jsonify({"error": "Request timed out"}), 500
                time.sleep(0.1)

            result = self.result_dict.pop(request_id)
            return jsonify(result)

        @self.app.route("/health", methods=["GET"])
        def health():
            return jsonify({"status": "healthy", "model": self.model_name})

    def _process_requests(self):
        """Worker-thread loop that processes queued requests."""
        while True:
            try:
                request_data = self.request_queue.get(timeout=1)
                self._handle_request(request_data)
            except queue.Empty:
                continue

    def _handle_request(self, request_data):
        """Handle a single request."""
        try:
            request_id = request_data["request_id"]
            message = request_data["message"]
            history = request_data["history"]

            # Build the input
            # ... (the listing is cut off at this point in the source)
```
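
The server code above funnels all requests through one queue so that a single worker thread talks to the model, and the HTTP handler polls `result_dict` every 0.1 seconds until its result appears. The same pattern can be sketched with a `threading.Event` per request, which wakes the waiting handler as soon as the result is ready. This is an alternative illustration under stated assumptions, not the article's implementation: `InferenceWorker` and `fake_generate` are hypothetical names, and `fake_generate` stands in for the real model call.

```python
import queue
import threading


class InferenceWorker:
    """Run all generate calls on one background thread, one request at a time."""

    def __init__(self, generate_fn):
        self._generate_fn = generate_fn
        self._queue = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        while True:
            prompt, slot, done = self._queue.get()
            try:
                slot["response"] = self._generate_fn(prompt)
            except Exception as exc:
                # Report the failure to the caller instead of killing the thread
                slot["error"] = str(exc)
            finally:
                done.set()  # wake up the waiting caller

    def submit(self, prompt, timeout=60.0):
        """Queue a prompt and block until it is processed or the timeout expires."""
        slot, done = {}, threading.Event()
        self._queue.put((prompt, slot, done))
        if not done.wait(timeout):
            return {"error": "request timed out"}
        return slot


def fake_generate(prompt):
    # Stand-in for the real model inference call (assumption for this sketch)
    return f"echo: {prompt}"


worker = InferenceWorker(fake_generate)
print(worker.submit("hello"))
```

In a Flask handler, `worker.submit(message)` would take the place of the polling loop. The 60-second default mirrors the timeout used in the server code.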
