Initialize first, then use!

package com.laifeng.control;

import java.io.FileNotFoundException;

import java.io.IOException;

import java.net.URI;

import java.util.Iterator;

import java.util.logging.*;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Partitioner;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.Mapper.Context;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

import com.laifeng.control.module.HandleLineRpt;

import com.laifeng.control.module.PreReduceRpt;

import com.laifeng.control.module.HandleTime;

import com.laifeng.control.module.UploadSmoothnesWholeStats;//whole

public class LaifengUploadFlowrateStatWhole {

static String timesize;

/**

* @param args

*/

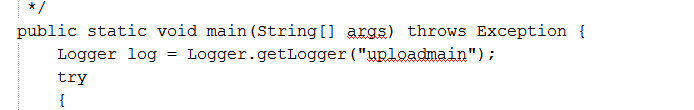

public static void main(String[] args) throws Exception {

Logger log = Logger.getLogger("uploadmain");

try

{

try{

FileHandler fileHandler = new FileHandler("/workspace/yule/lcdn/logs/upload/main.log");

log.setLevel(Level.ALL);

log.addHandler(fileHandler);

}

catch (Exception e) {

e.printStackTrace();

}

log.info("main starting");

/* JobConf conf = new JobConf(LaifengUploadFlowrateStatWhole.class);

conf.setJobName("LaifengUploadFlowrateStat");

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

if (otherArgs.length != 3) {

System.err.println("have no enough params");

log.info("have no enough params");

System.exit(3);

}

//get the timesize

timesize = otherArgs[2].toString();

//set the map and reduce class

conf.setMapperClass(MyMapper.class);

conf.setReducerClass(MyReducer.class);

//set map output class

conf.setMapOutputKeyClass(IntWritable.class);

conf.setMapOutputValueClass(Text.class);

// set reducer output class

conf.setOutputKeyClass(IntWritable.class);

conf.setOutputValueClass(Text.class);

log.info("otherArgs[0]:"+ otherArgs[0]);

log.info("otherArgs[1]:"+ otherArgs[1]);

//set the input and output path

FileInputFormat.setInputPaths(conf, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(conf, new Path(otherArgs[1]));

//set the input and output content

conf.setInputFormat(TextInputFormat.class);

conf.setOutputFormat(TextOutputFormat.class);

//run the map

JobClient.runJob(conf);

System.exit(0);*/

// The new API drops the JobConf class and uses its parent class, Configuration, to manage settings directly.

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

if (otherArgs.length != 3) {

System.err.println("Usage: LaifengUploadFlowrateStatWhole <in> <out> <timesize>");

System.exit(2);

}

log.info("lcdnMapper otherArgs[0]:"+ otherArgs[0]);

log.info("lcdnMapper otherArgs[1]:"+ otherArgs[1]);

log.info("lcdnMapper otherArgs[2]:"+ otherArgs[2]);

// The new API uses the Job class to describe everything about a job.

Job job = new Job(conf, "lcdn");

// Use the Job class's methods to set every parameter the job needs at run time.

job.setJarByClass(LaifengUploadFlowrateStatWhole.class);

// Set the number of reduce tasks.

// job.setNumReduceTasks(20);

// Set the Partitioner class.

// job.setPartitionerClass(MyPartitioner.class);

// Set the Map, Combine, and Reduce classes.

job.setMapperClass(lcdnMapper.class);

// A combiner is needed.

job.setCombinerClass(lcdnReducer.class);

job.setReducerClass(lcdnReducer.class);

// Set the output types.

// job.setMapOutputKeyClass(Text.class);

// job.setMapOutputValueClass(Text.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

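// Note: this static field is set only in the driver JVM; tasks running in other JVMs will not see it unless the value is also passed through the job Configuration.

timesize = otherArgs[2];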

// Delete the output directory.

// deleteFromHdfs(otherArgs[1]);

// Run the job directly through the Job class's methods.

// waitForCompletion blocks until the job finishes; the alternative, submit(), returns immediately after submitting the job.

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

catch (Exception e)

{

log.info("main Exception:"+ e.getMessage());

e.printStackTrace();

}

}

/**

* Delete a file from HDFS.

*/

private static void deleteFromHdfs(String dst) throws FileNotFoundException, IOException {

Configuration conf = new Configuration();

FileSystem fs = FileSystem.get(URI.create(dst), conf);

fs.delete(new Path(dst), true); // delete now (recursively) instead of deferring the delete to close()

fs.close();

}

public static class lcdnMapper extends Mapper<Object, Text, Text, Text>/*MapReduceBase implements Mapper<Object, Text, IntWritable, Text> */

{

private Text intkey = new Text();

private Text strvalue = new Text();

//private static final Logger log = Logger.getLogger("uploadfailuremapper");

public lcdnMapper() {

super();

/*try{

FileHandler fileHandler = new FileHandler("/workspace/yule/lcdn/logs/upload/345.log");

log.setLevel(Level.ALL);

log.addHandler(fileHandler);

}

catch (Exception e) {

e.printStackTrace();

}*/

}

// Runs once before the first map() call.

protected void setup(Context context) throws IOException, InterruptedException {

//log.info("MyMapper setup");

}

public void map(Object key, Text value, Context context/*OutputCollector<IntWritable, Text> output, Reporter reporter*/) throws IOException, InterruptedException

{

try

{

//log.info("mapkey:"+ key.toString() + "mapvalue:"+ value.toString() );

HandleLineRpt rpt = HandleLineRpt.parser(value.toString());

//System.out.println(rpt.isvalid());//test true or false

if (rpt.isvalid())

{

intkey.set(rpt.getrptkey() + "");

strvalue.set(rpt.getrptvalue());

//log.info("inkey:"+intkey.toString() + "strvalue:"+ strvalue.toString());

//output.collect(intkey, strvalue);

Text sv = new Text();

sv.set(rpt.getrptvalue());

context.write(intkey, sv);

}

}

catch(InterruptedException e){

//log.info("InterruptedException:"+ e.getMessage());

e.printStackTrace();

}

catch (IOException e)

{

//log.info("IOException:"+ e.getMessage());

e.printStackTrace();

}

}

}

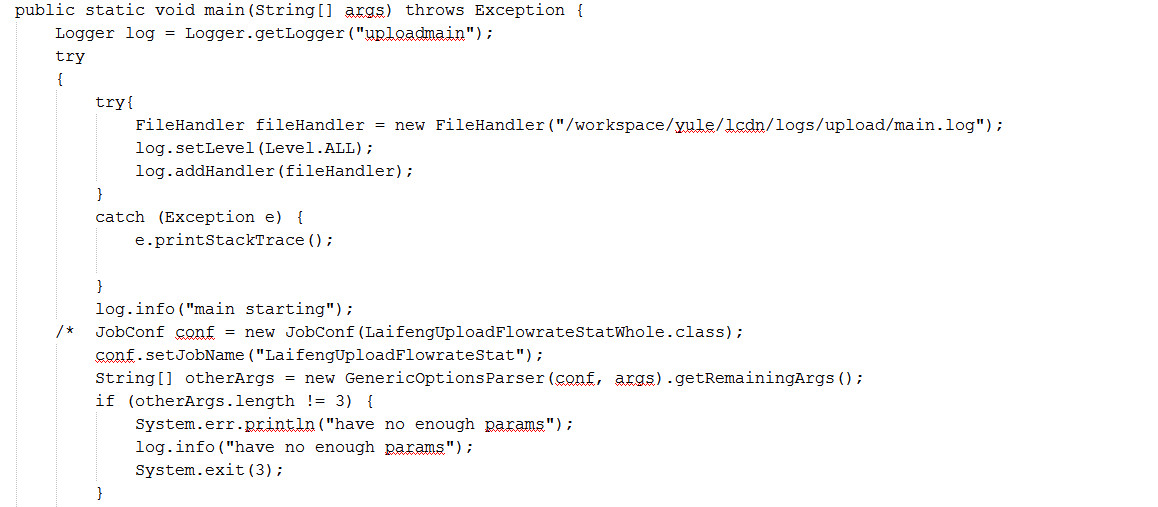

public static class lcdnReducer extends Reducer<Text, Text, Text, Text>/*extends MapReduceBase implements Reducer<IntWritable, Text, IntWritable, Text> */

{

private Text rtime = new Text();

private Text cntstream = new Text();

//private static final Logger _LOG = Logger.getLogger("123.log");

//private static final Logger log = Logger.getLogger("uploadfailure");

public lcdnReducer() {

super();

/* try{

FileHandler fileHandler = new FileHandler("/workspace/yule/lcdn/logs/upload/123.log");

log.setLevel(Level.ALL);

log.addHandler(fileHandler);

}

catch (Exception e) {

e.printStackTrace();

}*/

}

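@Override

public void reduce(Text key, Iterable<Text> values, Context context/*OutputCollector<IntWritable, Text> output, Reporter reporter*/) throws IOException, InterruptedException

{

// The new-API Reducer.reduce receives an Iterable; with an Iterator parameter this method would not override it.

Iterator<Text> value = values.iterator();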

try

{

//log.info("key:" + key.toString() + " value:" + value.toString());

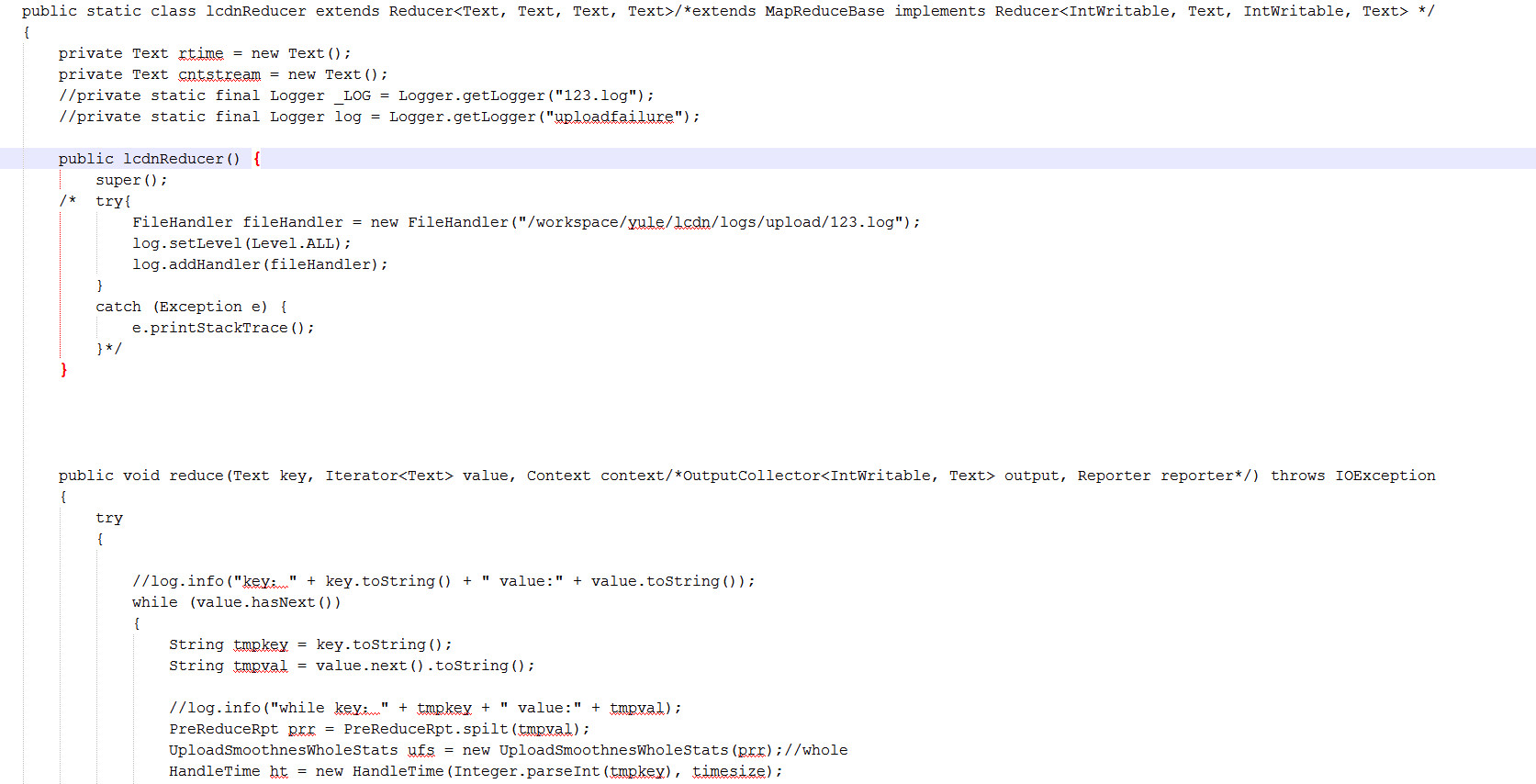

while (value.hasNext())

{

String tmpkey = key.toString();

String tmpval = value.next().toString();

//log.info("while key:" + tmpkey + " value:" + tmpval);

PreReduceRpt prr = PreReduceRpt.spilt(tmpval);

UploadSmoothnesWholeStats ufs = new UploadSmoothnesWholeStats(prr);//whole

HandleTime ht = new HandleTime(Integer.parseInt(tmpkey), timesize);

if(ht.matchtime())

{

ufs.append();//first step

}

else

{

ufs.put();//second step

String sum_cnt = String.valueOf(ufs.get_sum_cnt());

String sum_streamid = String.valueOf(ufs.get_sum_stream());

String fluent = String.valueOf((ufs.get_sum_cnt()*100)/(ufs.get_sum_stream()*30));

ufs.clear();//third step

//rtime.set(HandleTime.startbytimesize);

String strTemp = sum_cnt + "\t" + sum_streamid + "\t" + fluent;

//log.info(strTemp);

cntstream.set(strTemp);

//output.collect(rtime, cntstream);

try {

context.write(new Text(HandleTime.startbytimesize + ""), cntstream);

} catch (Exception e) {

e.printStackTrace();

}

}

}

}

catch (Exception e)

{

//log.info(e.getMessage());

e.printStackTrace();

}

}

}

}
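
A note on the reducer signature: in the new `org.apache.hadoop.mapreduce` API, `Reducer.reduce` takes an `Iterable` of values. A method that declares an `Iterator` parameter instead is only an overload, so the framework keeps calling the inherited default, which passes records through unchanged. The sketch below reproduces this with plain Java; `BaseReducer`, `WrongReducer`, and `RightReducer` are hypothetical stand-ins, not Hadoop classes.

```java
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Hypothetical stand-in for a framework base class (not the Hadoop Reducer).
class BaseReducer {
    // The "framework" always calls this method; the default is a pass-through.
    protected String reduce(String key, Iterable<String> values) {
        return "identity:" + key;
    }

    // Simulates the framework driving the reducer.
    final String run(String key, List<String> values) {
        return reduce(key, values);
    }
}

// Declares Iterator instead of Iterable: an overload that run() never calls.
class WrongReducer extends BaseReducer {
    public String reduce(String key, Iterator<String> values) {
        return "custom:" + key;
    }
}

// Matches the base signature; @Override makes the compiler verify that.
class RightReducer extends BaseReducer {
    @Override
    protected String reduce(String key, Iterable<String> values) {
        int n = 0;
        for (String ignored : values) {
            n++;
        }
        return "custom:" + key + ":" + n;
    }
}

class OverrideDemo {
    public static void main(String[] args) {
        List<String> vals = Arrays.asList("a", "b");
        System.out.println(new WrongReducer().run("k", vals)); // prints identity:k
        System.out.println(new RightReducer().run("k", vals)); // prints custom:k:2
    }
}
```

Annotating `reduce` with `@Override` turns this kind of silent mismatch into a compile error.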
