出处:http://blog.csdn.net/jinshengtao/article/details/40900865

本次内容主要讲解什么是支持向量,SVM分类是如何推导的,最小序列SMO算法部分推导。

最后给出线性和非线性2分类问题的smo算法matlab实现代码。

一、什么是支持向量机(Support Vector Machine)

本节内容部分翻译Opencv教程:

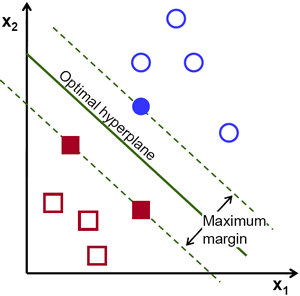

SVM是一个由分类超平面定义的判别分类器。也就是说给定一组带标签的训练样本,算法将会输出一个最优超平面对新样本(测试样本)进行分类。

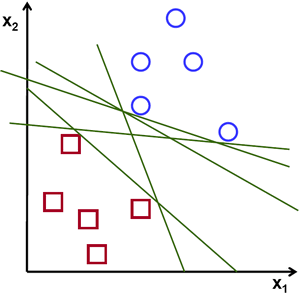

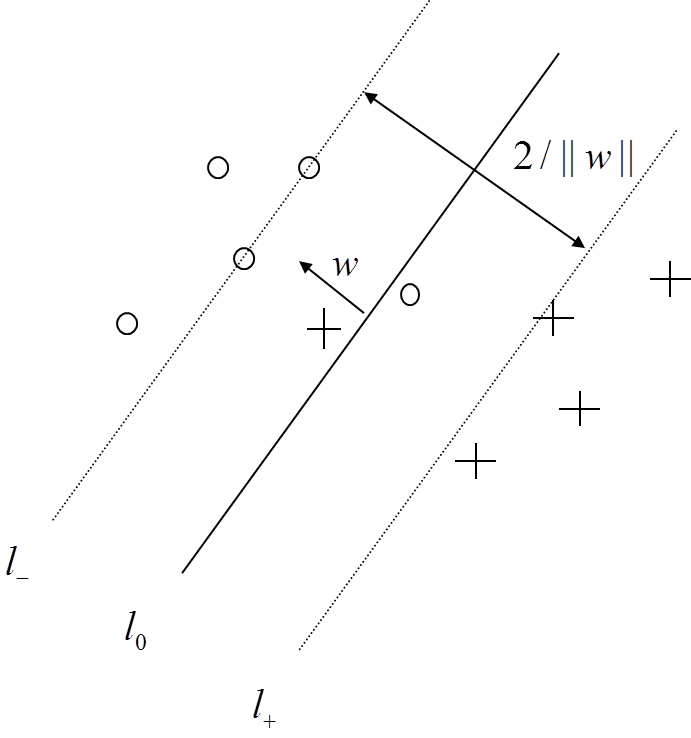

什么样的超平面才是最优的?我们考虑如下场景:对于一个由二维坐标点构成的线性可分集合,如何找到一条直线将两类坐标分隔开?

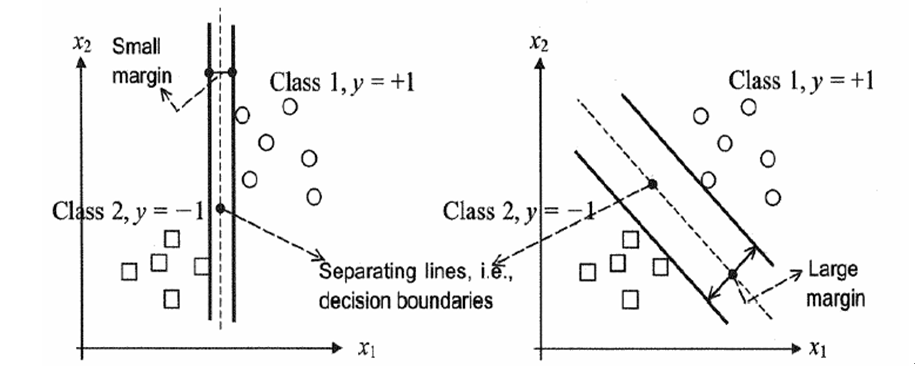

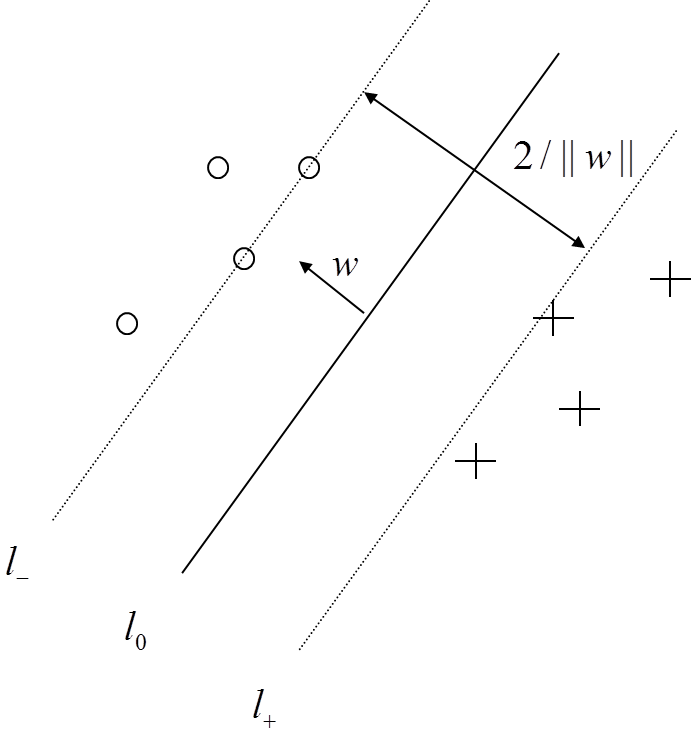

上图中,你可以看到存在多条直线将两类坐标分开。它们中有没有最好的?我们可以直观地定义如下规则:如果一条分割的直线离坐标点太近,那它就不是最佳的。因为它会对噪声敏感,不能正确的推广。因此,我们的目标是找到一条分割线,它要离所有的样本点都尽可能的远。

SVM算法就是找一个超平面,并且它到离他最近的训练样本的距离要最大。即最优分割超平面最大化训练样本边界。

原谅哥的翻译水平,看着自己也别扭,重新整理下:

对线性可分集,总能找到使样本正确划分的分界面,而且有无穷多个,哪个是最优? 必须寻找一种最优的分界准则,是两类模式分开的间隔最大。

在介绍如何推到之前,了解一个定义:

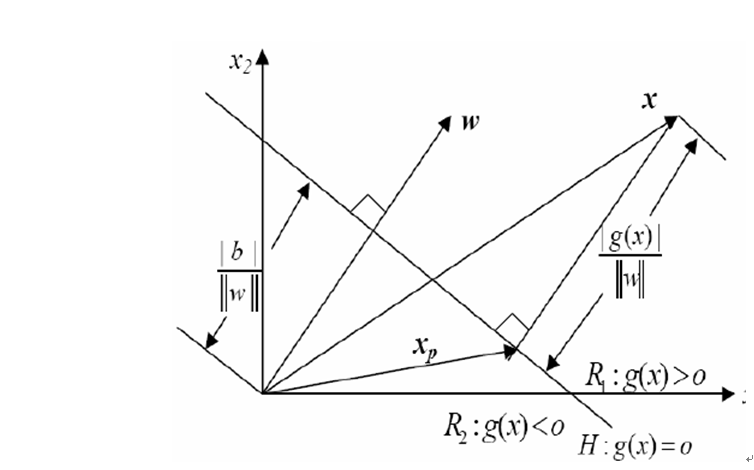

分隔超平面:上述将数据集分割开来的直线叫做分隔超平面。

超平面:如果数据集是N维的,那么就需要N-1维的某对象来对数据进行分割。该对象叫做超平面,也就是分类的决策边界。

间隔:

一个点到分割面的距离,称为点相对于分割面的距离。

数据集中所有的点到分割面的最小间隔的2倍,称为分类器或数据集的间隔。

最大间隔:SVM分类器是要找最大的数据集间隔。

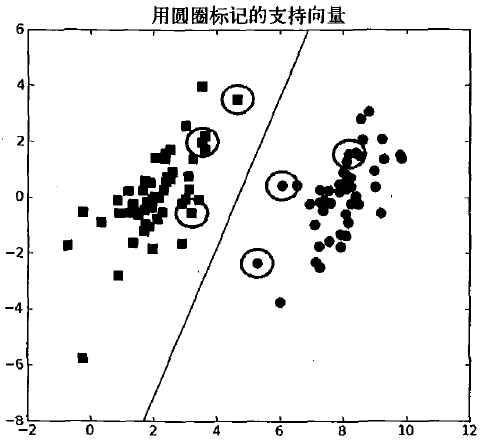

支持向量:离分割超平面最近的那些点。

SVM算法其实就是最大化支持向量到分割面的距离。另外,目前上面讲的都是针对线性可分的数据集。非线性的数据集,需要核函数转换空间,才具有非线性数据处理能力。

最优分类超平面只由少数支持向量决定,问题具有稀疏性。

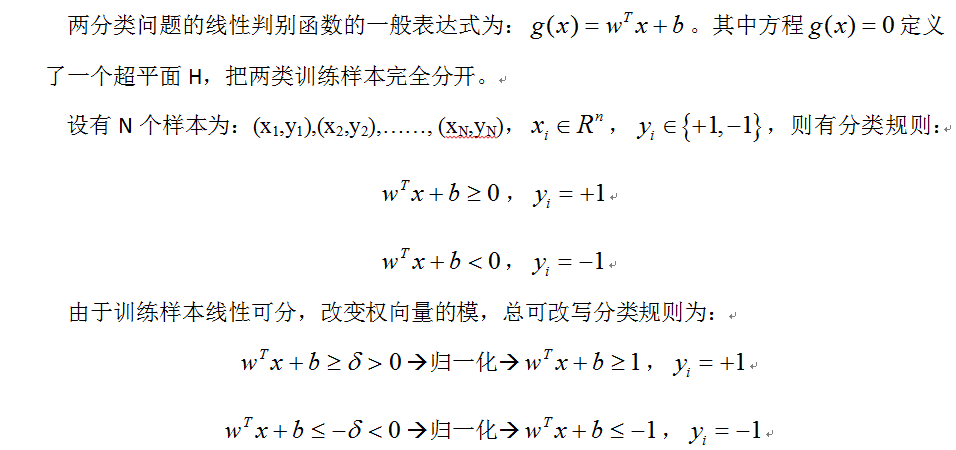

二、推导

下面讲解下SVM的数学原理,其实就是复述我之前写过的东西。

http://blog.csdn.net/jinshengtao/article/details/17636953

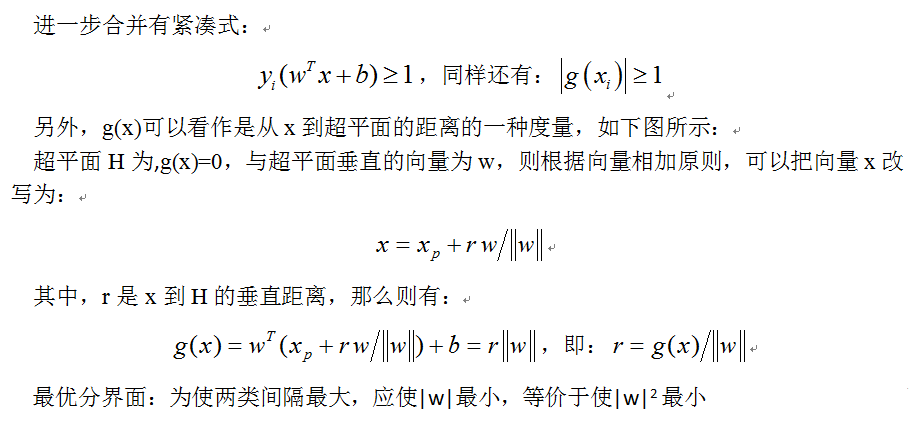

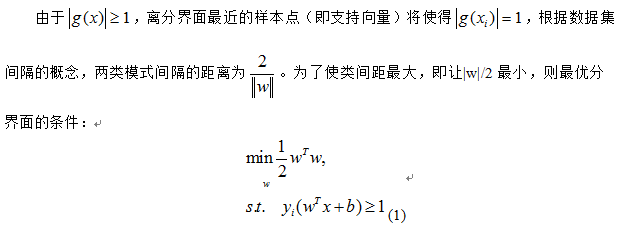

(这里yi(wTx+b)称为点到分割面的函数间隔。yi(wTx+b)/|w|称为点到分割面的几何间隔)

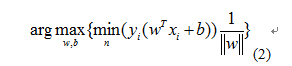

现在的目标就是找出分类器定义中的w和b。为此,我们必须找到具有最小间隔的数据点,而这些数据点也就是前面提到的支持向量。一旦找到具有最小间隔的数据点,我们就需要对该间隔最大化。这就可以写作:

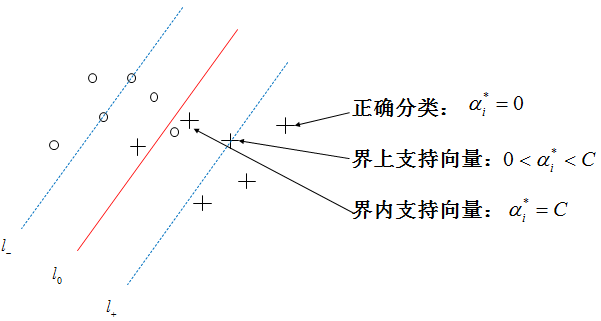

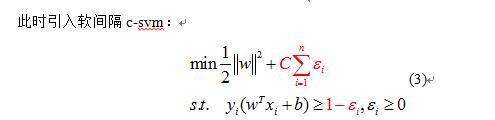

直接求解上面的公式很难。并且到目前为止,我们知道所有数都不那么干净,不能100%线性可分。我们可以通过引入松弛变量,来允许有些数据点可以处于分割面的错误的一侧。如下面几幅图所示:

εi是松弛变量,常数C代表惩罚系数,即如果某个x是属于某一类,但是它偏离了该类,跑到边界上后者其他类的地方去了,C越大表明越不想放弃这个点,边界就会缩小,错分点更少,但是过拟合情况会比较严重。

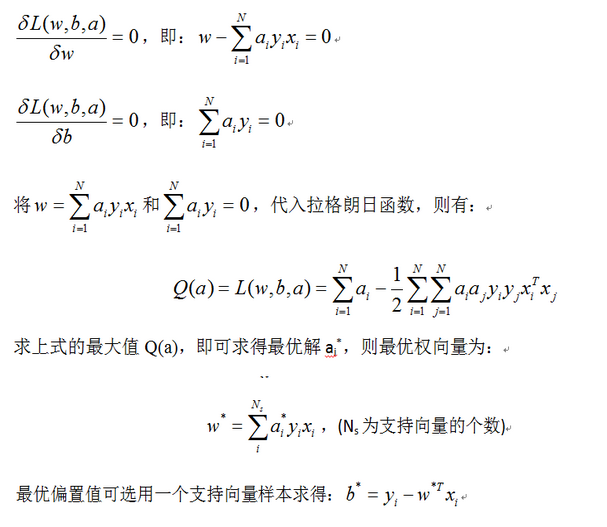

为此,我们引入拉格朗日乘子,用条件极值求解最优分界面,构造拉格朗日函数,也是之前公式(3)的对偶形式:

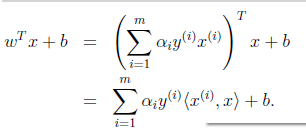

也就是说,只要求的拉格朗日乘子ai,我们就能得到分类边界。

三、序列最小化方法SMO

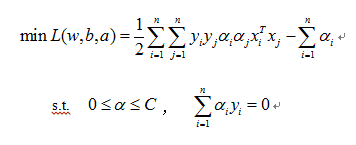

C-SVM是一个凸二次规划问题,SMO算法能快速解决SVM的二次规划问题,把它分解成一些子问题来解决。在每一步中,SMO算法仅选择两个拉格朗日乘子进行优化,然后再更新SVM以反应新的优化值。

对于C-SVM目标函数和优化过程中必须遵循的约束条件如下:

我们需要一种算法来求解最优化问题。(在以前的实现,我用了matlab提供的求条件极值的函数,http://blog.csdn.net/jinshengtao/article/details/17675653)。

现在打算自己用smo算法实现,下面就重点讲下smo算法。

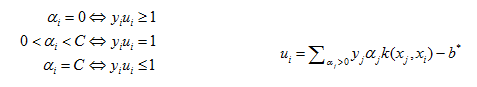

C-SVM的KTT条件为:

(KTT条件是指在满足一些有规则的条件下, 一个非线性规划(Nonlinear Programming)问题能有最优化解法的一个必要和充分条件.即最优解都需要满足KTT条件)。现在这里对于C-SVM的KTT条件为:

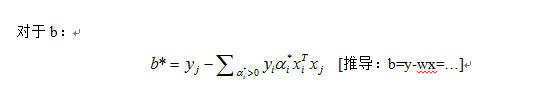

其中,b*由上面的公式计算得到。

SMO算法包括两个步骤:一是用分析的方法解一个简单的优化问题;二是选择待优化的拉格朗日乘子的策略。

(1) 简单的优化问题—-两个拉格朗日乘子优化问题的解

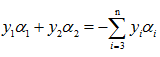

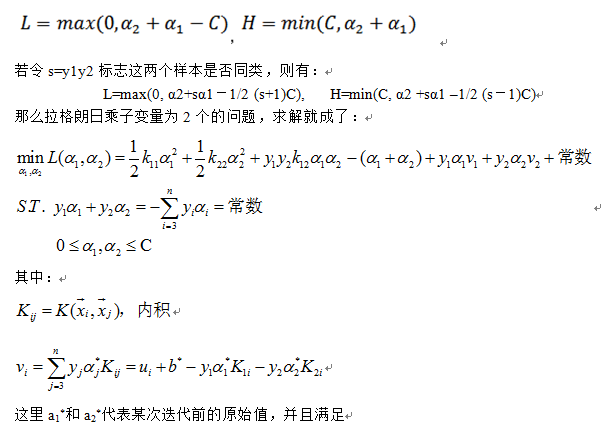

为了求解只有两个乘子的优化问题【因为(x i,y i)都已知】,SMO方法首先计算它的约束,然后再解带有约束的最小化问题。对于两个乘子,其二维约束很容易表达:边界约束使得乘子在方框内,而线性等式约束使得乘子在对角线上,这里y i∈{1,-1},

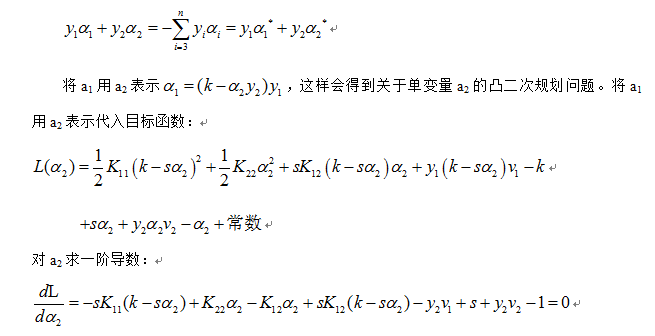

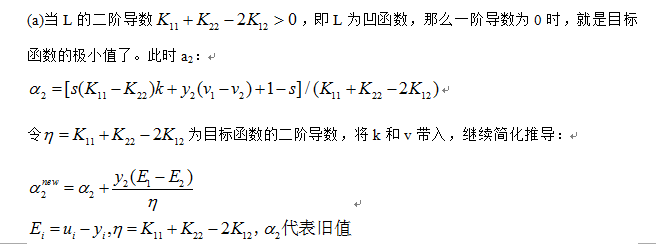

现在,在一条线段上求目标函数的极值,相当于一个一维的极值问题。我们可以把a1用a2表示,对a2求无条件极值,如果目标函数是严格凹的,最小值就一定在这一极值点(极值点在区间内)或在区间端点(极值点在区间外)。a2确定下来后,a1也确定了。因此,我们要先找到a2的优化区间,再在这个区间中对a2求最小值。

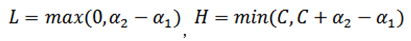

根据上面两幅图可以知道,a2优化区间:

当y1和y2异号时:【L、H的计算是由a2-a1正负未知所导致的】

当y1和y2同号时:

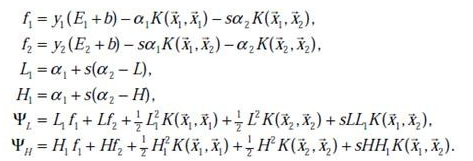

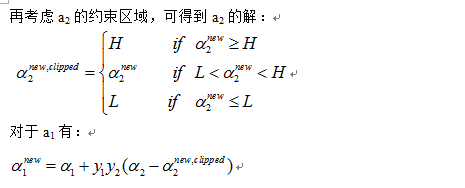

(b)当L的二阶导数为0,即训练样本中出现相同的特征时,我们计算目标函数是单调的。最小值在线段两个端点上的取值,我们将a2=L和a2=H分别代入目标函数,这样拉格朗日乘子就修正到目标函数较小的端点上,比较ΨL和ΨH就可以求得Ψ的最小值。最终得到a2的值,接着一串值都解出来了。

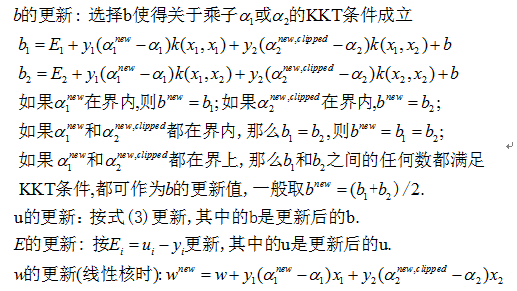

至于偏置值b,每一步都要更新,因为前面的KKT条件指出了ai和yiui关系,而ui和b有关,在每一步计算出ai后,根据KKT条件来调整b,分如下几种情况

(2) 选择策略—-选取两个拉格朗日乘子的启发规则

事实上即使我们不采用任何找点法,只是按顺序抽取ai,aj的所有组合进行优化,目标函数也会不断下降。直到任一对ai,aj都不能继续优化,目标函数就会收敛到极小值。我们采取某种找点方法只是为了使算法收敛得更快。

这种试探法先选择最有可能需要优化的a2,再针对这样的a2选择最有可能取得较大修正步长的a1。这样,我们在程序中使用两个层次的循环:

外层循环首先遍历所有样本查找违反KTT规则的乘子进行优化,当完成一次遍历后外层循环再遍历那些非边界乘子的样本(0<a<C),挑选那些违反KTT条件的样本进行优化。外层循环重复执行,直到所有的非边界样本在一定范围内均满足KKT条件。

在选定第一个拉格朗日乘子ai后,内层循环会通过最大化步长的方式来挑选第二个拉格朗日乘子,即最大化|Ei-Ej|,当Ei为正时最小化Ej,当为负Ei时最大化Ej.

下面给出matlab代码实现 :1.线性可分简单smo

- function [b,alphas] = smoSimple(data, class, C, toler, maxIter)

- b = 0;

- [m,n] = size(data);

- alphas = zeros(m,1);

- iter=0;

- while (iter < maxIter)

- alphasChanges = 0;

- for k=1:1:m

- fxk = (alphas .* class)’ * data * data(k,:)’ + b; % f = wx+b

- ek = fxk - class(k);

- if (((ek*class(k) < -toler) && (alphas(k) < C)) || ((ek*class(k) > toler) && (alphas(k) > 0)))

- j = selectJrand(k,m);

- fxj = (alphas .* class)’ * data * data(j,:)’ + b; % f = wx+b

- ej = fxj - class(j);

- temp_k = alphas(k);

- temp_j = alphas(j);

- if(class(k) ~= class(j))

- L = max(0, alphas(j) - alphas(k));

- H = min(C, C + alphas(j) - alphas(k));

- else

- L = max(0, alphas(k) + alphas(j) - C);

- H = min(C, alphas(k) + alphas(j));

- end

- if L == H

- continue;

- end

- eta = 2.0 * data(k,:) * data(j,:)’ - data(k,:) * data(k,:)’ - data(j,:) * data(j,:)’;

- if eta >= 0

- continue;

- end

- alphas(j) = alphas(j) - class(j) * (ek - ej) / eta;

- alphas(j) = clipalpha(alphas(j), H, L);

- if(abs(alphas(j) - temp_j) < 0.00001)

- continue;

- end

- alphas(k) = alphas(k) + class(k) * class(j) * (temp_j - alphas(j));

- b1 = b - ek - class(k) * (alphas(k) - temp_k) * data(k,:) * data(k,:)’ - class(j) * (alphas(j) - temp_j) * data(k,:) * data(j,:)’;

- b2 = b - ej - class(k) * (alphas(k) - temp_k) * data(k,:) * data(j,:)’ - class(j) * (alphas(j) - temp_j) * data(j,:) * data(j,:)’;

- if (alphas(k) > 0 && alphas(k) < C)

- b = b1;

- elseif(alphas(j) > 0 && alphas(j) < C)

- b = b2;

- else

- b = (b1 + b2)/2;

- end

- alphasChanges = alphasChanges + 1;

- end

- end

- if alphasChanges == 0

- iter = iter + 1;

- else

- iter = 0;

- end

- end

- end

- function index = selectJrand(k,m)

- index = k;

- while(index == k)

- index = randi([1,m],1,1);

- end

- end

- function res = clipalpha(a, H, L)

- if a > H

- a = H;

- end

- if a < L

- a = L;

- end

- res = a;

- end

function [b,alphas] = smoSimple(data, class, C, toler, maxIter)

b = 0;

[m,n] = size(data);

alphas = zeros(m,1);

iter=0;

while (iter < maxIter)

alphasChanges = 0;

for k=1:1:m

fxk = (alphas .* class)' * data * data(k,:)' + b; % f = wx+b

ek = fxk - class(k);

if (((ek*class(k) < -toler) && (alphas(k) < C)) || ((ek*class(k) > toler) && (alphas(k) > 0)))

j = selectJrand(k,m);

fxj = (alphas .* class)' * data * data(j,:)' + b; % f = wx+b

ej = fxj - class(j);

temp_k = alphas(k);

temp_j = alphas(j);

if(class(k) ~= class(j))

L = max(0, alphas(j) - alphas(k));

H = min(C, C + alphas(j) - alphas(k));

else

L = max(0, alphas(k) + alphas(j) - C);

H = min(C, alphas(k) + alphas(j));

end

if L == H

continue;

end

eta = 2.0 * data(k,:) * data(j,:)' - data(k,:) * data(k,:)' - data(j,:) * data(j,:)';

if eta >= 0

continue;

end

alphas(j) = alphas(j) - class(j) * (ek - ej) / eta;

alphas(j) = clipalpha(alphas(j), H, L);

if(abs(alphas(j) - temp_j) < 0.00001)

continue;

end

alphas(k) = alphas(k) + class(k) * class(j) * (temp_j - alphas(j));

b1 = b - ek - class(k) * (alphas(k) - temp_k) * data(k,:) * data(k,:)' - class(j) * (alphas(j) - temp_j) * data(k,:) * data(j,:)';

b2 = b - ej - class(k) * (alphas(k) - temp_k) * data(k,:) * data(j,:)' - class(j) * (alphas(j) - temp_j) * data(j,:) * data(j,:)';

if (alphas(k) > 0 && alphas(k) < C)

b = b1;

elseif(alphas(j) > 0 && alphas(j) < C)

b = b2;

else

b = (b1 + b2)/2;

end

alphasChanges = alphasChanges + 1;

end

end

if alphasChanges == 0

iter = iter + 1;

else

iter = 0;

end

end

end

function index = selectJrand(k,m)

index = k;

while(index == k)

index = randi([1,m],1,1);

end

end

function res = clipalpha(a, H, L)

if a > H

a = H;

end

if a < L

a = L;

end

res = a;

end- clc;

- clear;

- load Data

- [r,c] = size(Data);

- Test = Data(:,1:2);

- Label = Data(:,3);

- [b, alphas] = smoSimple(Test, Label, 0.6, 0.001, 40);

- %%画图

- figure(1)

- axis([-2 12 -8 6])

- for k = 1:1:r

- hold on

- if Data(k,3) == 1

- plot(Data(k,1),Data(k,2),’r+’);

- else

- plot(Data(k,1),Data(k,2),’b*’);

- end

- end

- %画支持向量及分割面

- %result=[];

- for k=1:1:r

- if alphas(k)~= 0

- hold on

- %result =[result;alphas(k)];

- QX = plot(Data(k,1:1),Data(k,2:2),’Ok’,’MarkerSize’,12);

- set(QX,’LineWidth’,2.0);

- end

- end

- W=(alphas.*Label)’*Data(:,1:2);

- y=(-W(1).* Data(:,1:1)-b) ./W(2);

- plot(Data(:,1:1),y);

clc;

clear;

load Data

[r,c] = size(Data);

Test = Data(:,1:2);

Label = Data(:,3);

[b, alphas] = smoSimple(Test, Label, 0.6, 0.001, 40);

%%画图

figure(1)

axis([-2 12 -8 6])

for k = 1:1:r

hold on

if Data(k,3) == 1

plot(Data(k,1),Data(k,2),'r+');

else

plot(Data(k,1),Data(k,2),'b*');

end

end

%画支持向量及分割面

%result=[];

for k=1:1:r

if alphas(k)~= 0

hold on

%result =[result;alphas(k)];

QX = plot(Data(k,1:1),Data(k,2:2),'Ok','MarkerSize',12);

set(QX,'LineWidth',2.0);

end

end

W=(alphas.*Label)'*Data(:,1:2);

y=(-W(1).* Data(:,1:1)-b) ./W(2);

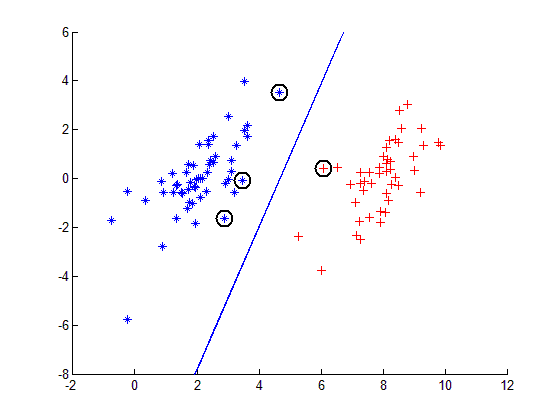

plot(Data(:,1:1),y);结果:

上述代码运行事件较长,此处仅是100个点的小规模数据集,对于更大的数据集收敛时间更长。

2.完整的smo算法

- function [b, res_alphas] = smoP(data, class, C, toler, maxIter)

- [m,n] = size(data);

- iter = 0;

- entireSet = 1;

- alphaPairsChanged = 0;

- oS = init(data,class,C,toler,m);

- while(((iter<maxIter)&&(alphaPairsChanged > 0)) || (entireSet == 1))

- alphaPairsChanged = 0;

- if entireSet == 1

- for k = 1:1:m

- [ret, oS] = innerL(k, oS);

- alphaPairsChanged = alphaPairsChanged + ret;

- end

- iter = iter + 1;

- else

- nonBoundIs = [];

- for k = 1:1:m

- if ((oS.alphas(k) < C) && (oS.alphas(k) > 0))

- nonBoundIs = [nonBoundIs k];

- end

- end

- [r,c] = size(nonBoundIs);

- for k = 1:1:c

- index = nonBoundIs(k);

- [ret, oS] = innerL(index, oS);

- alphaPairsChanged = alphaPairsChanged + ret;

- end

- iter = iter + 1;

- end

- if entireSet == 1

- entireSet = 0;

- elseif alphaPairsChanged == 0

- entireSet = 1;

- end

- end

- b = oS.b;

- res_alphas = oS.alphas;

- end

- function oS = init(data,class,C,toler,m)

- alphas = zeros(m,1);

- eCache = zeros(m,2);

- b = 0;

- oS.data = data;

- oS.class = class;

- oS.C = C;

- oS.toler = toler;

- oS.m = m;

- oS.alphas = alphas;

- oS.b = b;

- oS.eCache = eCache;

- end

- function [ret,oS] = innerL(k, oS)

- Ei = calcEk(oS, k);

- if(((oS.class(k)*Ei < -oS.toler) && (oS.alphas(k) < oS.C)) || ((oS.class(k)*Ei > oS.toler) && (oS.alphas(k) > 0)))

- [j, Ej] = selectJ(k, oS, Ei);

- temp_k = oS.alphas(k);

- temp_j = oS.alphas(j);

- if oS.class(k) ~= oS.class(j)

- L = max(0, oS.alphas(j) - oS.alphas(k));

- H = min(oS.C, oS.C +oS.alphas(j) - oS.alphas(k));

- else

- L = max(0, oS.alphas(j) + oS.alphas(k) - oS.C);

- H = min(oS.C, oS.alphas(j) + oS.alphas(k));

- end

- if L == H

- ret = 0;

- return;

- end

- eta = 2.0 * oS.data(k,:) * oS.data(j,:)’ - oS.data(k,:) * oS.data(k,:)’ - oS.data(j,:) * oS.data(j,:)’;

- if eta >=0

- ret = 0;

- return;

- end

- oS.alphas(j) = oS.alphas(j) - oS.class(j) * (Ei - Ej) / eta;

- oS.alphas(j) = clipalpha(oS.alphas(j), H, L);

- %update Ek

- Et = calcEk(oS, j);

- oS.eCache(j,:) = [1 Et];

- if(abs(oS.alphas(j) - temp_j) < 0.00001)

- ret = 0;

- return;

- end

- oS.alphas(k) = oS.alphas(k) + oS.class(j)*oS.class(k)*(temp_j - oS.alphas(j));

- Et = calcEk(oS, k);

- oS.eCache(k,:) = [1 Et];

- b1 = oS.b - Ei - oS.class(k) * (oS.alphas(k) - temp_k) * oS.data(k,:) * oS.data(k,:)’ - oS.class(j) * (oS.alphas(j) - temp_j) * oS.data(k,:) * oS.data(j,:)’;

- b2 = oS.b - Ej - oS.class(k) * (oS.alphas(k) - temp_k) * oS.data(k,:) * oS.data(j,:)’ - oS.class(j) * (oS.alphas(j) - temp_j) * oS.data(j,:) * oS.data(j,:)’;

- if (oS.alphas(k)>0) && (oS.alphas(k)<oS.C)

- oS.b = b1;

- elseif(oS.alphas(j)>0) && (oS.alphas(j)<oS.C)

- oS.b = b2;

- else

- oS.b = (b1+b2)/2.0;

- end

- ret = 1;

- return;

- else

- ret = 0;

- return;

- end

- end

- function Ek = calcEk(oS, k)

- fXk = (oS.alphas .* oS.class)’ * oS.data * oS.data(k,:)’ + oS.b;

- Ek = fXk - oS.class(k);

- end

- function [j, Ej] = selectJ(k, oS, Ei)

- maxK = -1;

- maxDeltaE = 0;

- Ej = 0;

- oS.eCache(k,:) =[1 Ei];

- validEcacheList = [];

- for l = 1:1:oS.m

- if oS.eCache(l,1:1) ~= 0

- validEcacheList = [validEcacheList l];

- end

- end

- [r, c] = size(validEcacheList);

- if c > 1

- for l=1:1:c

- index = validEcacheList(l)

- if index == k

- continue;

- end

- Ek = calcEk(oS,index);

- deltaE = abs(Ei - Ek);

- if(deltaE > maxDeltaE)

- maxK = index;

- maxDeltaE = deltaE;

- Ej = Ek;

- end

- end

- j = maxK;

- else

- j = selectJrand(k, oS.m);

- Ej = calcEk(oS, j);

- end

- end

- function index = selectJrand(k,m)

- index = k;

- while(index == k)

- index = randi([1,m],1,1);

- end

- end

- function res = clipalpha(a, H, L)

- if a > H

- a = H;

- end

- if a < L

- a = L;

- end

- res = a;

- end

function [b, res_alphas] = smoP(data, class, C, toler, maxIter)

[m,n] = size(data);

iter = 0;

entireSet = 1;

alphaPairsChanged = 0;

oS = init(data,class,C,toler,m);

while(((iter<maxIter)&&(alphaPairsChanged > 0)) || (entireSet == 1))

alphaPairsChanged = 0;

if entireSet == 1

for k = 1:1:m

[ret, oS] = innerL(k, oS);

alphaPairsChanged = alphaPairsChanged + ret;

end

iter = iter + 1;

else

nonBoundIs = [];

for k = 1:1:m

if ((oS.alphas(k) < C) && (oS.alphas(k) > 0))

nonBoundIs = [nonBoundIs k];

end

end

[r,c] = size(nonBoundIs);

for k = 1:1:c

index = nonBoundIs(k);

[ret, oS] = innerL(index, oS);

alphaPairsChanged = alphaPairsChanged + ret;

end

iter = iter + 1;

end

if entireSet == 1

entireSet = 0;

elseif alphaPairsChanged == 0

entireSet = 1;

end

end

b = oS.b;

res_alphas = oS.alphas;

end

function oS = init(data,class,C,toler,m)

alphas = zeros(m,1);

eCache = zeros(m,2);

b = 0;

oS.data = data;

oS.class = class;

oS.C = C;

oS.toler = toler;

oS.m = m;

oS.alphas = alphas;

oS.b = b;

oS.eCache = eCache;

end

function [ret,oS] = innerL(k, oS)

Ei = calcEk(oS, k);

if(((oS.class(k)*Ei < -oS.toler) && (oS.alphas(k) < oS.C)) || ((oS.class(k)*Ei > oS.toler) && (oS.alphas(k) > 0)))

[j, Ej] = selectJ(k, oS, Ei);

temp_k = oS.alphas(k);

temp_j = oS.alphas(j);

if oS.class(k) ~= oS.class(j)

L = max(0, oS.alphas(j) - oS.alphas(k));

H = min(oS.C, oS.C +oS.alphas(j) - oS.alphas(k));

else

L = max(0, oS.alphas(j) + oS.alphas(k) - oS.C);

H = min(oS.C, oS.alphas(j) + oS.alphas(k));

end

if L == H

ret = 0;

return;

end

eta = 2.0 * oS.data(k,:) * oS.data(j,:)' - oS.data(k,:) * oS.data(k,:)' - oS.data(j,:) * oS.data(j,:)';

if eta >=0

ret = 0;

return;

end

oS.alphas(j) = oS.alphas(j) - oS.class(j) * (Ei - Ej) / eta;

oS.alphas(j) = clipalpha(oS.alphas(j), H, L);

%update Ek

Et = calcEk(oS, j);

oS.eCache(j,:) = [1 Et];

if(abs(oS.alphas(j) - temp_j) < 0.00001)

ret = 0;

return;

end

oS.alphas(k) = oS.alphas(k) + oS.class(j)*oS.class(k)*(temp_j - oS.alphas(j));

Et = calcEk(oS, k);

oS.eCache(k,:) = [1 Et];

b1 = oS.b - Ei - oS.class(k) * (oS.alphas(k) - temp_k) * oS.data(k,:) * oS.data(k,:)' - oS.class(j) * (oS.alphas(j) - temp_j) * oS.data(k,:) * oS.data(j,:)';

b2 = oS.b - Ej - oS.class(k) * (oS.alphas(k) - temp_k) * oS.data(k,:) * oS.data(j,:)' - oS.class(j) * (oS.alphas(j) - temp_j) * oS.data(j,:) * oS.data(j,:)';

if (oS.alphas(k)>0) && (oS.alphas(k)<oS.C)

oS.b = b1;

elseif(oS.alphas(j)>0) && (oS.alphas(j)<oS.C)

oS.b = b2;

else

oS.b = (b1+b2)/2.0;

end

ret = 1;

return;

else

ret = 0;

return;

end

end

function Ek = calcEk(oS, k)

fXk = (oS.alphas .* oS.class)' * oS.data * oS.data(k,:)' + oS.b;

Ek = fXk - oS.class(k);

end

function [j, Ej] = selectJ(k, oS, Ei)

maxK = -1;

maxDeltaE = 0;

Ej = 0;

oS.eCache(k,:) =[1 Ei];

validEcacheList = [];

for l = 1:1:oS.m

if oS.eCache(l,1:1) ~= 0

validEcacheList = [validEcacheList l];

end

end

[r, c] = size(validEcacheList);

if c > 1

for l=1:1:c

index = validEcacheList(l)

if index == k

continue;

end

Ek = calcEk(oS,index);

deltaE = abs(Ei - Ek);

if(deltaE > maxDeltaE)

maxK = index;

maxDeltaE = deltaE;

Ej = Ek;

end

end

j = maxK;

else

j = selectJrand(k, oS.m);

Ej = calcEk(oS, j);

end

end

function index = selectJrand(k,m)

index = k;

while(index == k)

index = randi([1,m],1,1);

end

end

function res = clipalpha(a, H, L)

if a > H

a = H;

end

if a < L

a = L;

end

res = a;

end

- clc;

- clear;

- load Data

- [r,c] = size(Data);

- Test = Data(:,1:2);

- Label = Data(:,3);

- [b, alphas] = smoP(Test, Label, 0.6, 0.001, 40);

- %%画图

- figure(1)

- axis([-2 12 -8 6])

- for k = 1:1:r

- hold on

- if Data(k,3) == 1

- plot(Data(k,1),Data(k,2),’r+’);

- else

- plot(Data(k,1),Data(k,2),’b*’);

- end

- end

- %画支持向量及分割面

- %result=[];

- for k=1:1:r

- if alphas(k)~= 0

- hold on

- %result =[result;alphas(k)];

- QX = plot(Data(k,1:1),Data(k,2:2),’Ok’,’MarkerSize’,12);

- set(QX,’LineWidth’,2.0);

- end

- end

- W=(alphas.*Label)’*Data(:,1:2);

- y=(-W(1).* Data(:,1:1)-b) ./W(2);

- plot(Data(:,1:1),y);

clc;

clear;

load Data

[r,c] = size(Data);

Test = Data(:,1:2);

Label = Data(:,3);

[b, alphas] = smoP(Test, Label, 0.6, 0.001, 40);

%%画图

figure(1)

axis([-2 12 -8 6])

for k = 1:1:r

hold on

if Data(k,3) == 1

plot(Data(k,1),Data(k,2),'r+');

else

plot(Data(k,1),Data(k,2),'b*');

end

end

%画支持向量及分割面

%result=[];

for k=1:1:r

if alphas(k)~= 0

hold on

%result =[result;alphas(k)];

QX = plot(Data(k,1:1),Data(k,2:2),'Ok','MarkerSize',12);

set(QX,'LineWidth',2.0);

end

end

W=(alphas.*Label)'*Data(:,1:2);

y=(-W(1).* Data(:,1:1)-b) ./W(2);

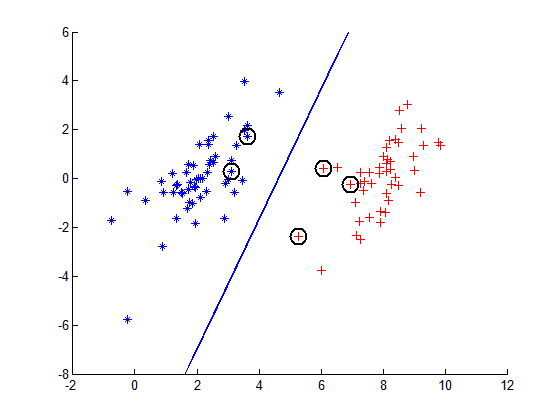

plot(Data(:,1:1),y);运行结果:

与第一个代码唯一的不同就是选择alphas的方式。第一个代码覆盖了所有数据集。常数C=0.6,一方面要保证所有样例的间隔不小于1.0,另一方面又要使得分类间隔尽可能大,并且要在 这两方面平衡。如果C很大,那么分类器将力图通过分割超平面对所有的样例都正确分类。小圆点标注的是支持向量。如果数据集非线性可分,支持向量 会在超平面附近聚集成团

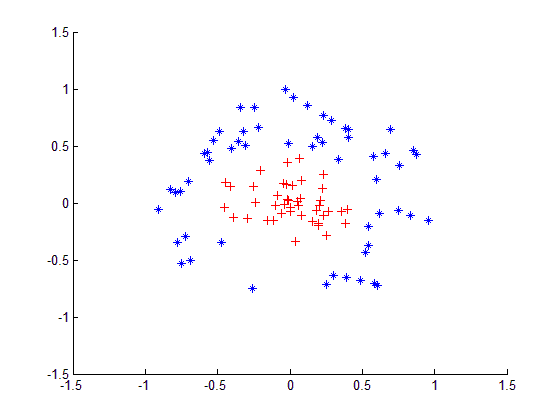

四、非线性可分问题

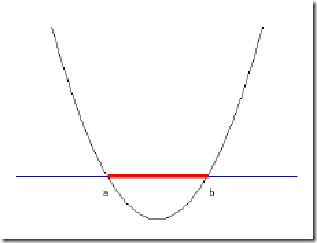

对于上图,在二维平面中很难用直线分割,但是这里明显存在着两类数据。接下来,我们就使用一种称为核函数的工具将数据转化成易于分类器理解的形式。

1. 利用核函数将数据映射到高维空间

对于上图而言,如果只在x和y构成的坐标系中插入直线进行分类的话,我们不会得到理想的结果。我们可以对数据进行转换从而得到某些新的变量来表示数据。在这种情况下,我们就更容易得到大于零或小于零的测试结果。数学家们将数据从一个特征空间转换到另一特征空间的过程称为特征空间映射,通常我们将低维特征空间映射到高维特征空间。下面举个例子来形象地理解核函数:

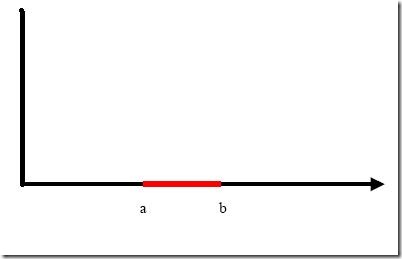

我们把横轴上端点a和b之间红色部分里的所有点定为正类,两边的黑色部分里的点定为负类。试问能找到一个线性函数把两类正确分开么?不能,因为二维空间里的线性函数就是指直线,显然找不到符合条件的直线。但我们可以找到一条曲线,例如下面这一条:

显然通过点在这条曲线的上方还是下方就可以判断点所属的类别(你在横轴上随便找一点,算算这一点的函数值,会发现负类的点函数值一定比0大,而正类的一定比0小)。这条曲线就是我们熟知的二次曲线。

上述过程即完成了一维空间向二维空间的映射。

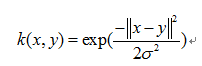

对于SVM分类问题,所有的运算都可以写成内积形式(点积),我们把内积运算替换成核函数,即可完成特征映射。核函数主要有:

l 多项式核

l 傅立叶核

l B样条核

l Sigmod核

l 高斯径向基核

核函数并不仅仅应用于支持向量机,很多其他机器学习算法也要用到。下面就介绍高斯径向基核函数。

径向基函数是一种采用向量作为自变量的函数,能够基于向量距离输出一个标量,具体数学公式:

其中,σ是用户定义的用于确定到达率或者说是函数值跌落到0的速度参数。这个高斯核函数将数据从其特征空间映射到更高维的空间,具体说来这里是映射到一个无穷维的空间。我们不用确切地理解数据是如何表现的。

【这里扯一下我的同学,他的论文《基于矩阵运算的单隐层Madaline网络批量学习》,人家提出数据往低维空间映射,比较神奇哈】

最终的分类平面:(推导参考:http://blog.csdn.net/wangran51/article/details/7354915http://blog.csdn.net/wangran51/article/details/7354915)

代码:

- function [b, res_alphas] = rbf_smoP(data, class, C, toler, maxIter, k1)

- [m,n] = size(data);

- iter = 0;

- entireSet = 1;

- alphaPairsChanged = 0;

- oS = init(data, class, C, toler, m, k1);

- while(((iter<maxIter)&&(alphaPairsChanged > 0)) || (entireSet == 1))

- alphaPairsChanged = 0;

- if entireSet == 1

- for k = 1:1:m

- [ret, oS] = innerL(k, oS);

- alphaPairsChanged = alphaPairsChanged + ret;

- end

- iter = iter + 1;

- else

- nonBoundIs = [];

- for k = 1:1:m

- if ((oS.alphas(k) < C) && (oS.alphas(k) > 0))

- nonBoundIs = [nonBoundIs k];

- end

- end

- [r,c] = size(nonBoundIs);

- for k = 1:1:c

- index = nonBoundIs(k);

- [ret, oS] = innerL(index, oS);

- alphaPairsChanged = alphaPairsChanged + ret;

- end

- iter = iter + 1;

- end

- if entireSet == 1

- entireSet = 0;

- elseif alphaPairsChanged == 0

- entireSet = 1;

- end

- end

- b = oS.b;

- res_alphas = oS.alphas;

- end

- function K = kernelTrans(X, A, k1)

- [m, n] = size(X);

- K = zeros(m,1);

- for j = 1:1:m

- deltaRow = X(j,:) - A;

- K(j) = deltaRow * deltaRow’;

- end

- K = exp(K./(-2*k1));

- end

- function oS = init(data,class,C,toler,m,k1)

- alphas = zeros(m,1);

- eCache = zeros(m,2);

- b = 0;

- oS.data = data;

- oS.class = class;

- oS.C = C;

- oS.toler = toler;

- oS.m = m;

- oS.alphas = alphas;

- oS.b = b;

- oS.eCache = eCache;

- oS.K = zeros(m,m);

- for j = 1:1:m

- oS.K(:,j) = kernelTrans(oS.data,oS.data(j,:),k1);

- end

- end

- function [ret,oS] = innerL(k, oS)

- Ei = calcEk(oS, k);

- if(((oS.class(k)*Ei < -oS.toler) && (oS.alphas(k) < oS.C)) || ((oS.class(k)*Ei > oS.toler) && (oS.alphas(k) > 0)))

- [j, Ej] = selectJ(k, oS, Ei);

- temp_k = oS.alphas(k);

- temp_j = oS.alphas(j);

- if oS.class(k) ~= oS.class(j)

- L = max(0, oS.alphas(j) - oS.alphas(k));

- H = min(oS.C, oS.C +oS.alphas(j) - oS.alphas(k));

- else

- L = max(0, oS.alphas(j) + oS.alphas(k) - oS.C);

- H = min(oS.C, oS.alphas(j) + oS.alphas(k));

- end

- if L == H

- ret = 0;

- return;

- end

- eta = 2.0 * oS.K(k,j) - oS.K(k,k) - oS.K(j,j);

- if eta >=0

- ret = 0;

- return;

- end

- oS.alphas(j) = oS.alphas(j) - oS.class(j) * (Ei - Ej) / eta;

- oS.alphas(j) = clipalpha(oS.alphas(j), H, L);

- %update Ek

- Et = calcEk(oS, j);

- oS.eCache(j,:) = [1 Et];

- if(abs(oS.alphas(j) - temp_j) < 0.00001)

- ret = 0;

- return;

- end

- oS.alphas(k) = oS.alphas(k) + oS.class(j)*oS.class(k)*(temp_j - oS.alphas(j));

- Et = calcEk(oS, k);

- oS.eCache(k,:) = [1 Et];

- b1 = oS.b - Ei - oS.class(k) * (oS.alphas(k) - temp_k) * oS.K(k,k) - oS.class(j) * (oS.alphas(j) - temp_j) * oS.K(k,j);

- b2 = oS.b - Ej - oS.class(k) * (oS.alphas(k) - temp_k) * oS.K(k,j) - oS.class(j) * (oS.alphas(j) - temp_j) * oS.K(j,j);

- if (oS.alphas(k)>0) && (oS.alphas(k)<oS.C)

- oS.b = b1;

- elseif(oS.alphas(j)>0) && (oS.alphas(j)<oS.C)

- oS.b = b2;

- else

- oS.b = (b1+b2)/2.0;

- end

- ret = 1;

- return;

- else

- ret = 0;

- return;

- end

- end

- function Ek = calcEk(oS, k)

- fXk = (oS.alphas .* oS.class)’ * oS.K(:,k) + oS.b;

- Ek = fXk - oS.class(k);

- end

- function [j, Ej] = selectJ(k, oS, Ei)

- maxK = -1;

- maxDeltaE = 0;

- Ej = 0;

- oS.eCache(k,:) =[1 Ei];

- validEcacheList = [];

- for l = 1:1:oS.m

- if oS.eCache(l,1:1) ~= 0

- validEcacheList = [validEcacheList l];

- end

- end

- [r, c] = size(validEcacheList);

- if c > 1

- for l=1:1:c

- index = validEcacheList(l);

- if index == k

- continue;

- end

- Ek = calcEk(oS,index);

- deltaE = abs(Ei - Ek);

- if(deltaE > maxDeltaE)

- maxK = index;

- maxDeltaE = deltaE;

- Ej = Ek;

- end

- end

- j = maxK;

- else

- j = selectJrand(k, oS.m);

- Ej = calcEk(oS, j);

- end

- end

- function index = selectJrand(k,m)

- index = k;

- while(index == k)

- index = randi([1,m],1,1);

- end

- end

- function res = clipalpha(a, H, L)

- if a > H

- a = H;

- end

- if a < L

- a = L;

- end

- res = a;

- end

function [b, res_alphas] = rbf_smoP(data, class, C, toler, maxIter, k1)

[m,n] = size(data);

iter = 0;

entireSet = 1;

alphaPairsChanged = 0;

oS = init(data, class, C, toler, m, k1);

while(((iter<maxIter)&&(alphaPairsChanged > 0)) || (entireSet == 1))

alphaPairsChanged = 0;

if entireSet == 1

for k = 1:1:m

[ret, oS] = innerL(k, oS);

alphaPairsChanged = alphaPairsChanged + ret;

end

iter = iter + 1;

else

nonBoundIs = [];

for k = 1:1:m

if ((oS.alphas(k) < C) && (oS.alphas(k) > 0))

nonBoundIs = [nonBoundIs k];

end

end

[r,c] = size(nonBoundIs);

for k = 1:1:c

index = nonBoundIs(k);

[ret, oS] = innerL(index, oS);

alphaPairsChanged = alphaPairsChanged + ret;

end

iter = iter + 1;

end

if entireSet == 1

entireSet = 0;

elseif alphaPairsChanged == 0

entireSet = 1;

end

end

b = oS.b;

res_alphas = oS.alphas;

end

function K = kernelTrans(X, A, k1)

[m, n] = size(X);

K = zeros(m,1);

for j = 1:1:m

deltaRow = X(j,:) - A;

K(j) = deltaRow * deltaRow';

end

K = exp(K./(-2*k1));

end

function oS = init(data,class,C,toler,m,k1)

alphas = zeros(m,1);

eCache = zeros(m,2);

b = 0;

oS.data = data;

oS.class = class;

oS.C = C;

oS.toler = toler;

oS.m = m;

oS.alphas = alphas;

oS.b = b;

oS.eCache = eCache;

oS.K = zeros(m,m);

for j = 1:1:m

oS.K(:,j) = kernelTrans(oS.data,oS.data(j,:),k1);

end

end

function [ret,oS] = innerL(k, oS)

Ei = calcEk(oS, k);

if(((oS.class(k)*Ei < -oS.toler) && (oS.alphas(k) < oS.C)) || ((oS.class(k)*Ei > oS.toler) && (oS.alphas(k) > 0)))

[j, Ej] = selectJ(k, oS, Ei);

temp_k = oS.alphas(k);

temp_j = oS.alphas(j);

if oS.class(k) ~= oS.class(j)

L = max(0, oS.alphas(j) - oS.alphas(k));

H = min(oS.C, oS.C +oS.alphas(j) - oS.alphas(k));

else

L = max(0, oS.alphas(j) + oS.alphas(k) - oS.C);

H = min(oS.C, oS.alphas(j) + oS.alphas(k));

end

if L == H

ret = 0;

return;

end

eta = 2.0 * oS.K(k,j) - oS.K(k,k) - oS.K(j,j);

if eta >=0

ret = 0;

return;

end

oS.alphas(j) = oS.alphas(j) - oS.class(j) * (Ei - Ej) / eta;

oS.alphas(j) = clipalpha(oS.alphas(j), H, L);

%update Ek

Et = calcEk(oS, j);

oS.eCache(j,:) = [1 Et];

if(abs(oS.alphas(j) - temp_j) < 0.00001)

ret = 0;

return;

end

oS.alphas(k) = oS.alphas(k) + oS.class(j)*oS.class(k)*(temp_j - oS.alphas(j));

Et = calcEk(oS, k);

oS.eCache(k,:) = [1 Et];

b1 = oS.b - Ei - oS.class(k) * (oS.alphas(k) - temp_k) * oS.K(k,k) - oS.class(j) * (oS.alphas(j) - temp_j) * oS.K(k,j);

b2 = oS.b - Ej - oS.class(k) * (oS.alphas(k) - temp_k) * oS.K(k,j) - oS.class(j) * (oS.alphas(j) - temp_j) * oS.K(j,j);

if (oS.alphas(k)>0) && (oS.alphas(k)<oS.C)

oS.b = b1;

elseif(oS.alphas(j)>0) && (oS.alphas(j)<oS.C)

oS.b = b2;

else

oS.b = (b1+b2)/2.0;

end

ret = 1;

return;

else

ret = 0;

return;

end

end

function Ek = calcEk(oS, k)

fXk = (oS.alphas .* oS.class)' * oS.K(:,k) + oS.b;

Ek = fXk - oS.class(k);

end

function [j, Ej] = selectJ(k, oS, Ei)

maxK = -1;

maxDeltaE = 0;

Ej = 0;

oS.eCache(k,:) =[1 Ei];

validEcacheList = [];

for l = 1:1:oS.m

if oS.eCache(l,1:1) ~= 0

validEcacheList = [validEcacheList l];

end

end

[r, c] = size(validEcacheList);

if c > 1

for l=1:1:c

index = validEcacheList(l);

if index == k

continue;

end

Ek = calcEk(oS,index);

deltaE = abs(Ei - Ek);

if(deltaE > maxDeltaE)

maxK = index;

maxDeltaE = deltaE;

Ej = Ek;

end

end

j = maxK;

else

j = selectJrand(k, oS.m);

Ej = calcEk(oS, j);

end

end

function index = selectJrand(k,m)

index = k;

while(index == k)

index = randi([1,m],1,1);

end

end

function res = clipalpha(a, H, L)

if a > H

a = H;

end

if a < L

a = L;

end

res = a;

end

- clc;

- clear;

- load NData

- load NTest

- Data = ndata;

- Data_Test = ntest;

- [r,c] = size(Data);

- Test = Data(:,1:2);

- Label = Data(:,3);

- [b, alphas] = rbf_smoP(Test, Label, 200, 0.0001, 1000,1.3);

- %%画图

- figure(1)

- axis([-1.5 1.5 -1.5 1.5])

- for k = 1:1:r

- hold on

- if Data(k,3) == 1

- plot(Data(k,1),Data(k,2),’r+’);

- else

- plot(Data(k,1),Data(k,2),’b*’);

- end

- end

- %%画支持向量

- support_vector = [];

- lable_sv = [];

- alphas_sv = [];

- for k=1:1:r

- if alphas(k)~= 0

- hold on

- support_vector = [support_vector; Test(k,1:2)];

- lable_sv = [lable_sv Label(k)];

- alphas_sv = [alphas_sv alphas(k)];

- %result =[result;alphas(k)];

- QX = plot(Data(k,1:1),Data(k,2:2),’Ok’,’MarkerSize’,12);

- set(QX,’LineWidth’,2.0);

- end

- end

- %%预测

- temp = lable_sv .* alphas_sv;

- [m, n] = size(Data_Test);

- errorCount = 0;

- for k = 1:1:m

- value = kernelTrans(support_vector, Data_Test(k,1:2),1.3);

- predict = temp * value + b;

- if predict > 0

- predict = 1;

- else

- predict = -1;

- end

- if predict ~= Data_Test(k,3:3)

- errorCount = errorCount + 1;

- end

- end

- errorCount

clc;

clear;

load NData

load NTest

Data = ndata;

Data_Test = ntest;

[r,c] = size(Data);

Test = Data(:,1:2);

Label = Data(:,3);

[b, alphas] = rbf_smoP(Test, Label, 200, 0.0001, 1000,1.3);

%%画图

figure(1)

axis([-1.5 1.5 -1.5 1.5])

for k = 1:1:r

hold on

if Data(k,3) == 1

plot(Data(k,1),Data(k,2),'r+');

else

plot(Data(k,1),Data(k,2),'b*');

end

end

%%画支持向量

support_vector = [];

lable_sv = [];

alphas_sv = [];

for k=1:1:r

if alphas(k)~= 0

hold on

support_vector = [support_vector; Test(k,1:2)];

lable_sv = [lable_sv Label(k)];

alphas_sv = [alphas_sv alphas(k)];

%result =[result;alphas(k)];

QX = plot(Data(k,1:1),Data(k,2:2),'Ok','MarkerSize',12);

set(QX,'LineWidth',2.0);

end

end

%%预测

temp = lable_sv .* alphas_sv;

[m, n] = size(Data_Test);

errorCount = 0;

for k = 1:1:m

value = kernelTrans(support_vector, Data_Test(k,1:2),1.3);

predict = temp * value + b;

if predict > 0

predict = 1;

else

predict = -1;

end

if predict ~= Data_Test(k,3:3)

errorCount = errorCount + 1;

end

end

errorCount

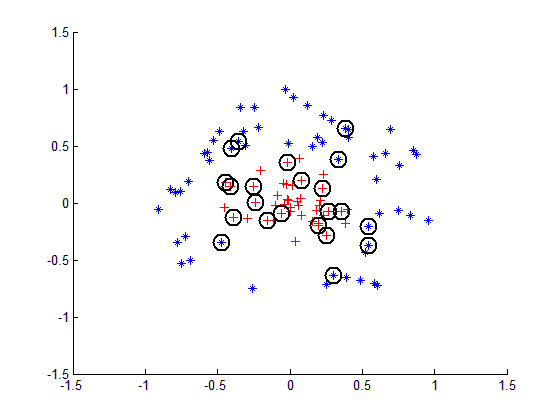

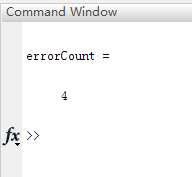

运行结果:

支持向量围绕超平面成团了。。。

预测结果,错分类2,效果不错。

代码地址:

http://download.csdn.net/detail/jinshengtao/8134089

华为比较辛苦,搞适配单板,bcm sdk啥的一点意思都没有,明年打算辞职咯

本文详细介绍了支持向量机(SVM)的基本概念、数学原理及其在机器学习中的应用,重点阐述了序列最小优化(SMO)算法的实现细节,并提供了MATLAB代码实例。

本文详细介绍了支持向量机(SVM)的基本概念、数学原理及其在机器学习中的应用,重点阐述了序列最小优化(SMO)算法的实现细节,并提供了MATLAB代码实例。

608

608

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?