前言

最近在做一些Hadoop运维的相关工作,发现了一个有趣的问题,我们公司的Hadoop集群磁盘占比数值参差不齐,高的接近80%,低的接近40%,并没有充分利用好上面的资源,但是balance的操作跑的也是正常的啊,所以打算看一下Hadoop的balance的源代码,更深层次的去了解Hadoop Balance的机制。

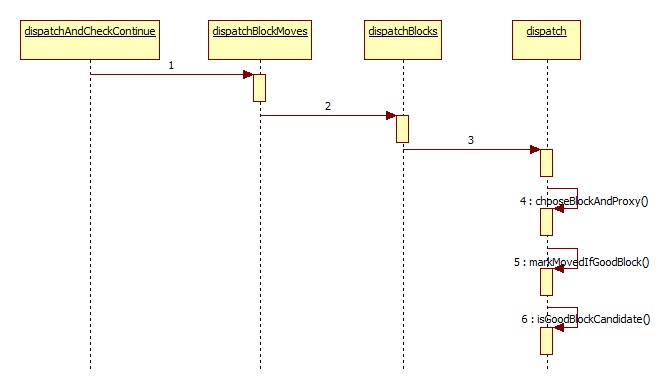

Balancer和Distpatch

上面2个类的设计就是与Hadoop Balance操作最紧密联系的类,Balancer类负载找出<source, target>这样的起始,目标结果对,然后存入到Distpatch中,然后通过Distpatch进行分发,不过在分发之前,会进行block的验证,判断此block是否能被移动,这里会涉及到一些条件的判断,具体的可以通过后面的代码中的注释去发现。

在Balancer的最后阶段,会将source和Target加入到dispatcher中,详见下面的代码:

/**

* 根据源节点和目标节点,构造任务对

*/

private void matchSourceWithTargetToMove(Source source, StorageGroup target) {

long size = Math.min(source.availableSizeToMove(), target.availableSizeToMove());

final Task task = new Task(target, size);

source.addTask(task);

target.incScheduledSize(task.getSize());

//加入分发器中

dispatcher.add(source, target);

LOG.info("Decided to move "+StringUtils.byteDesc(size)+" bytes from "

+ source.getDisplayName() + " to " + target.getDisplayName());

}/**

* For each datanode, choose matching nodes from the candidates. Either the

* datanodes or the candidates are source nodes with (utilization > Avg), and

* the others are target nodes with (utilization < Avg).

*/

private <G extends StorageGroup, C extends StorageGroup>

void chooseStorageGroups(Collection<G> groups, Collection<C> candidates,

Matcher matcher) {

for(final Iterator<G> i = groups.iterator(); i.hasNext();) {

final G g = i.next();

for(; choose4One(g, candidates, matcher); );

if (!g.hasSpaceForScheduling()) {

//如果候选节点没有空间调度,则直接移除掉

i.remove();

}

}

} /**

* Decide all <source, target> pairs and

* the number of bytes to move from a source to a target

* Maximum bytes to be moved per storage group is

* min(1 Band worth of bytes, MAX_SIZE_TO_MOVE).

* 从源节点列表和目标节点列表中各自选择节点组成一个个对,选择顺序优先为同节点组,同机架,然后是针对所有

* @return total number of bytes to move in this iteration

*/

private long chooseStorageGroups() {

// First, match nodes on the same node group if cluster is node group aware

if (dispatcher.getCluster().isNodeGroupAware()) {

//首先匹配的条件是同节点组

chooseStorageGroups(Matcher.SAME_NODE_GROUP);

}

// Then, match nodes on the same rack

//然后是同机架

chooseStorageGroups(Matcher.SAME_RACK);

// At last, match all remaining nodes

//最后是匹配所有的节点

chooseStorageGroups(Matcher.ANY_OTHER);

return dispatcher.bytesToMove();

}

最后核心的检验block块是否合适的代码为下面这个:

/**

* Decide if the block is a good candidate to be moved from source to target.

* A block is a good candidate if

* 1. the block is not in the process of being moved/has not been moved;

* 移动的块不是正在被移动的块

* 2. the block does not have a replica on the target;

* 在目标节点上没有移动的block块

* 3. doing the move does not reduce the number of racks that the block has

* 移动之后,不同机架上的block块的数量应该是不变的.

*/

private boolean isGoodBlockCandidate(Source source, StorageGroup target,

DBlock block) {

if (source.storageType != target.storageType) {

return false;

}

// check if the block is moved or not

//如果所要移动的块是存在于正在被移动的块列表,则返回false

if (movedBlocks.contains(block.getBlock())) {

return false;

}

//如果移动的块已经存在于目标节点上,则返回false,将不会予以移动

if (block.isLocatedOn(target)) {

return false;

}

//如果开启了机架感知的配置,则目标节点不应该有相同的block

if (cluster.isNodeGroupAware()

&& isOnSameNodeGroupWithReplicas(target, block, source)) {

return false;

}

//需要维持机架上的block块数量不变

if (reduceNumOfRacks(source, target, block)) {

return false;

}

return true;

}下面是Balancer.java和Dispatch.java类的完整代码解析:

Balancer.java:

/**

* Licensed to the Apache Software Foundation (ASF) under one

* or more contributor license agreements. See the NOTICE file

* distributed with this work for additional information

* regarding copyright ownership. The ASF licenses this file

* to you under the Apache License, Version 2.0 (the

* "License"); you may not use this file except in compliance

* with the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.hadoop.hdfs.server.balancer;

import static com.google.common.base.Preconditions.checkArgument;

import java.io.IOException;

import java.io.PrintStream;

import java.net.URI;

import java.text.DateFormat;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Collection;

import java.util.Collections;

import java.util.Date;

import java.util.Formatter;

import java.util.Iterator;

import java.util.LinkedList;

import java.util.List;

import java.util.Set;

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

import org.apache.hadoop.classification.InterfaceAudience;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.hdfs.DFSConfigKeys;

import org.apache.hadoop.hdfs.DFSUtil;

import org.apache.hadoop.hdfs.HdfsConfiguration;

import org.apache.hadoop.hdfs.StorageType;

import org.apache.hadoop.hdfs.server.balancer.Dispatcher.DDatanode;

import org.apache.hadoop.hdfs.server.balancer.Dispatcher.DDatanode.StorageGroup;

import org.apache.hadoop.hdfs.server.balancer.Dispatcher.Source;

import org.apache.hadoop.hdfs.server.balancer.Dispatcher.Task;

import org.apache.hadoop.hdfs.server.balancer.Dispatcher.Util;

import org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy;

import org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicyDefault;

import org.apache.hadoop.hdfs.server.namenode.UnsupportedActionException;

import org.apache.hadoop.hdfs.server.protocol.DatanodeStorageReport;

import org.apache.hadoop.hdfs.server.protocol.StorageReport;

import org.apache.hadoop.util.StringUtils;

import org.apache.hadoop.util.Time;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

import com.google.common.base.Preconditions;

/** <p>The balancer is a tool that balances disk space usage on an HDFS cluster

* when some datanodes become full or when new empty nodes join the cluster.

* The tool is deployed as an application program that can be run by the

* cluster administrator on a live HDFS cluster while applications

* adding and deleting files.

*

* <p>SYNOPSIS

* <pre>

* To start:

* bin/start-balancer.sh [-threshold <threshold>]

* Example: bin/ start-balancer.sh

* start the balancer with a default threshold of 10%

* bin/ start-balancer.sh -threshold 5

* start the balancer with a threshold of 5%

* To stop:

* bin/ stop-balancer.sh

* </pre>

*

* <p>DESCRIPTION

* <p>The threshold parameter is a fraction in the range of (1%, 100%) with a

* default value of 10%. The threshold sets a target for whether the cluster

* is balanced. A cluster is balanced if for each datanode, the utilization

* of the node (ratio of used space at the node to total capacity of the node)

* differs from the utilization of the (ratio of used space in the cluster

* to total capacity of the cluster) by no more than the threshold value.

* The smaller the threshold, the more balanced a cluster will become.

* It takes more time to run the balancer for small threshold values.

* Also for a very small threshold the cluster may not be able to reach the

* balanced state when applications write and delete files concurrently.

*

* <p>The tool moves blocks from highly utilized datanodes to poorly

* utilized datanodes iteratively. In each iteration a datanode moves or

* receives no more than the lesser of 10G bytes or the threshold fraction

* of its capacity. Each iteration runs no more than 20 minutes.

* 每次移动不超过10G大小,每次移动不超过20分钟。

* At the end of each iteration, the balancer obtains updated datanodes

* information from the namenode.

*

* <p>A system property that limits the balancer's use of bandwidth is

* defined in the default configuration file:

* <pre>

* <property>

* <name>dfs.balance.bandwidthPerSec</name>

* <value>1048576</value>

* <description> Specifies the maximum bandwidth that each datanode

* can utilize for the balancing purpose in term of the number of bytes

* per second. </description>

* </property>

* </pre>

*

* <p>This property determines the maximum speed at which a block will be

* moved from one datanode to another. The default value is 1MB/s. The higher

* the bandwidth, the faster a cluster can reach the balanced state,

* but with greater competition with application processes. If an

* administrator changes the value of this property in the configuration

* file, the change is observed when HDFS is next restarted.

*

* <p>MONITERING BALANCER PROGRESS

* <p>After the balancer is started, an output file name where the balancer

* progress will be recorded is printed on the screen. The administrator

* can monitor the running of the balancer by reading the output file.

* The output shows the balancer's status iteration by iteration. In each

* iteration it prints the starting time, the iteration number, the total

* number of bytes that have been moved in the previous iterations,

* the total number of bytes that are left to move in order for the cluster

* to be balanced, and the number of bytes that are being moved in this

* iteration. Normally "Bytes Already Moved" is increasing while "Bytes Left

* To Move" is decreasing.

*

* <p>Running multiple instances of the balancer in an HDFS cluster is

* prohibited by the tool.

*

* <p>The balancer automatically exits when any of the following five

* conditions is satisfied:

* <ol>

* <li>The cluster is balanced;

* <li>No block can be moved;

* <li>No block has been moved for five consecutive(连续) iterations;

* <li>An IOException occurs while communicating with the namenode;

* <li>Another balancer is running.

* </ol>

* 下面5种情况会导致Balance操作的失败

* 1、整个集群已经达到平衡状态

* 2、经过计算发现没有可以被移动的block块

* 3、在连续5次的迭代中,没有block块被移动

* 4、当datanode节点与namenode节点通信的时候,发生IO异常

* 5、已经存在一个Balance操作

*

* <p>Upon exit, a balancer returns an exit code and prints one of the

* following messages to the output file in corresponding to the above exit

* reasons:

* <ol>

* <li>The cluster is balanced. Exiting

* <li>No block can be moved. Exiting...

* <li>No block has been moved for 5 iterations. Exiting...

* <li>Received an IO exception: failure reason. Exiting...

* <li>Another balancer is running. Exiting...

* </ol>

* 在下面的5种情况下,balancer操作会自动退出

* 1、整个集群已经达到平衡的状态

* 2、经过计算发现没有可以被移动block块

* 3、在5论的迭代没有block被移动

* 4、接收端发生了I异常

* 5、已经存在一个balanr进程在工作

*

* <p>The administrator can interrupt the execution of the balancer at any

* time by running the command "stop-balancer.sh" on the machine where the

* balancer is running.

*/

@InterfaceAudience.Private

public class Balancer {

static final Log LOG = LogFactory.getLog(Balancer.class);

private static final Path BALANCER_ID_PATH = new Path("/system/balancer.id");

private static final long GB = 1L << 30; //1GB

private static final long MAX_SIZE_TO_MOVE = 10*GB;

private static final String USAGE = "Usage: java "

+ Balancer.class.getSimpleName()

+ "\n\t[-policy <policy>]\tthe balancing policy: "

+ BalancingPolicy.Node.INSTANCE.getName() + " or "

+ BalancingPolicy.Pool.INSTANCE.getName()

+ "\n\t[-threshold <threshold>]\tPercentage of disk capacity"

+ "\n\t[-exclude [-f <hosts-file> | comma-sperated list of hosts]]"

+ "\tExcludes the specified datanodes."

+ "\n\t[-include [-f <hosts-file> | comma-sperated list of hosts]]"

+ "\tIncludes only the specified datanodes.";

private final Dispatcher dispatcher;

private final BalancingPolicy policy;

private final double threshold;

// all data node lists

//四种datanode节点类型

private final Collection<Source> overUtilized = new LinkedList<Source>();

private final Collection<Source> aboveAvgUtilized = new LinkedList<Source>();

private final Collection<StorageGroup> belowAvgUtilized

= new LinkedList<StorageGroup>();

private final Collection<StorageGroup> underUtilized

= new LinkedList<StorageGroup>();

/* Check that this Balancer is compatible(兼容) with the Block Placement Policy

* used by the Namenode.

* 检测此balancer均衡工具是否于与目前的namenode节点所用的block存放策略相兼容

*/

private static void checkReplicationPolicyCompatibility(Configuration conf

) throws UnsupportedActionException {

if (!(BlockPlacementPolicy.getInstance(conf, null, null, null) instanceof

BlockPlacementPolicyDefault)) {

throw new UnsupportedActionException(

//如果不兼容则抛异常

"Balancer without BlockPlacementPolicyDefault");

}

}

/**

* Construct a balancer.

* Initialize balancer. It sets the value of the threshold, and

* builds the communication prox

本文主要探讨了Hadoop集群中磁盘利用率不均的问题,并对Hadoop的Balancer和Dispatch机制进行了源码分析,揭示了如何找出并调度合适的block移动,以实现资源的均衡利用。

本文主要探讨了Hadoop集群中磁盘利用率不均的问题,并对Hadoop的Balancer和Dispatch机制进行了源码分析,揭示了如何找出并调度合适的block移动,以实现资源的均衡利用。

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?