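Our MongoDB replica set has three data-bearing mongod servers: one primary and two secondaries. Writes go to the primary and reads can be served by the two secondaries, which gives us read/write separation. Which secondary serves a given read is decided by the client driver's read preference and server selection, not by the arbiter, which only votes in elections. Beyond that, if the primary goes down, the set automatically promotes one of the two secondaries to primary without any user intervention, which gives the database real high availability.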

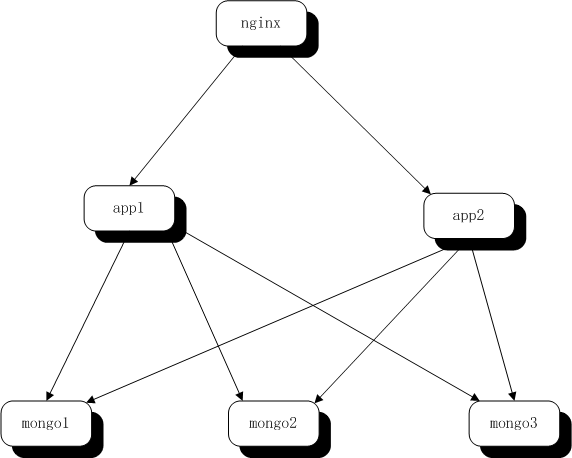

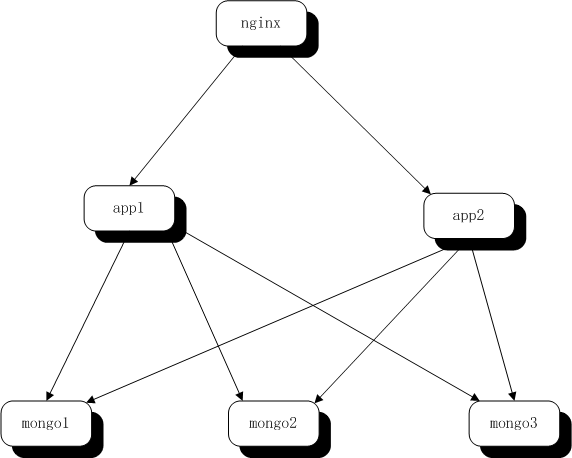

1. Background

1.1 Environment

- CPU cores: 4

- Memory: 8 GB

- Bandwidth: 100MB

- Disk: 40 GB system disk, 180 GB data disk

- Operating system: Ubuntu 14.04, 64-bit

1.2 System deployment diagram

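2. Setting up the MongoDB replica set

2.1 Downloading the package

Download the latest MongoDB package from: https://www.mongodb.org/downloads#production

Pick the build that matches your operating system. At the time of writing, the latest version was mongodb-linux-x86_64-ubuntu1404-3.2.1.tgz, about 74 MB.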

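2.2 Installing MongoDB and setting the environment variable

Extract the archive into the current directory:

$ tar zxvf mongodb-linux-x86_64-ubuntu1404-3.2.1.tgz

This yields a mongodb-linux-x86_64-ubuntu1404-3.2.1 directory containing bin and other files and directories.

MongoDB ships as a portable tarball that needs no installer, but we will place it under /usr/local as if it were installed software: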

$ mv mongodb-linux-x86_64-ubuntu1404-3.2.1 /usr/local/mongodb

Finally, add MongoDB to PATH and make the change take effect immediately:

$ echo 'export PATH=/usr/local/mongodb/bin:$PATH' >> ~/.bashrc

$ source ~/.bashrc

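2.3 Preparing the prerequisites for the MongoDB instances

With the current architecture, the MongoDB replica set needs four instances: one arbiter, one primary (master) and two secondaries (slaves). Each instance needs its own directory for data and log files. We put them on the data disk in directories named rs1-<port> (arb-30000 for the arbiter). The members must authenticate to one another, so they all share the same key file.

Create the data and log directories for each node: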

$ cd /opt/

$ mkdir mongodb

$ cd mongodb/

$ mkdir arb-30000

$ mkdir rs1-27017

$ mkdir rs1-27018

$ mkdir rs1-27019

Create the key file and restrict its permissions:

$ openssl rand -base64 512 > rs1.key

$ chmod 600 rs1.key
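
The directory and key-file steps above can be collected into one repeatable function. The following is a minimal sketch, not the author's script: the function name prepare_rs_layout and its optional base-directory argument are assumptions introduced here, and the /dev/urandom fallback is only used when openssl is not installed.

```shell
# Sketch: create the per-instance directories and the shared key file.
# prepare_rs_layout and its BASE argument are hypothetical names; BASE
# defaults to /opt/mongodb as used in the text.
prepare_rs_layout() (
  base="${1:-/opt/mongodb}"
  # mkdir -p is idempotent: directories that already exist are left alone.
  mkdir -p "$base/arb-30000" "$base/rs1-27017" "$base/rs1-27018" "$base/rs1-27019" || exit 1
  key="$base/rs1.key"
  if [ ! -s "$key" ]; then
    # Tighten the umask first so the key is never world-readable, even briefly.
    umask 077
    if command -v openssl >/dev/null 2>&1; then
      openssl rand -base64 512 > "$key" || exit 1
    else
      # Assumed fallback, not part of the original steps.
      head -c 512 /dev/urandom | base64 > "$key" || exit 1
    fi
  fi
  # mongod refuses a key file that group or others can read.
  chmod 600 "$key"
)
```

Calling prepare_rs_layout with no argument reproduces the /opt/mongodb layout from the text. An existing key file is kept, so re-running the function does not invalidate the key already shared by the members.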

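2.4 Creating the instances and users

2.4.1 First start of the four replica set instances

Because no users exist yet, the replica set is started the first time without the auth option, so that the users can be created without authenticating. Start the primary (port 27017):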

$ mongod --port=27017 --fork --dbpath=/opt/mongodb/rs1-27017 --logpath=/opt/mongodb/rs1-27017/mongod.log --replSet=rs1

Start the first secondary (port 27018):

$ mongod --port=27018 --fork --dbpath=/opt/mongodb/rs1-27018 --logpath=/opt/mongodb/rs1-27018/mongod.log --replSet=rs1

Start the second secondary (port 27019):

$ mongod --port=27019 --fork --dbpath=/opt/mongodb/rs1-27019 --logpath=/opt/mongodb/rs1-27019/mongod.log --replSet=rs1

Output like the following shows that the instance started successfully:

about to fork child process, waiting until server is ready for connections.

forked process: 21947

child process started successfully, parent exiting

Finally, start the arbiter (port 30000):

$ mongod --port=30000 --fork --dbpath=/opt/mongodb/arb-30000 --logpath=/opt/mongodb/arb-30000/mongod.log --replSet=rs1
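
The four start commands differ only in port and directory, so they can be generated from one template. This is a hedged sketch: mongod_cmd is a hypothetical helper that only prints the command line, and the optional third argument adds the --auth and --keyFile flags used for an authenticated start.

```shell
# Sketch: print (rather than run) the mongod command line for one member.
# mongod_cmd is a hypothetical helper. Usage: mongod_cmd PORT DIRNAME [KEYFILE]
mongod_cmd() (
  port="$1"
  dir="/opt/mongodb/$2"
  key="${3:-}"
  cmd="mongod --port=$port --fork --dbpath=$dir --logpath=$dir/mongod.log --replSet=rs1"
  if [ -n "$key" ]; then
    # Authenticated start: access control on, members use the shared key file.
    cmd="$cmd --auth --keyFile=$key"
  fi
  printf '%s\n' "$cmd"
)

# First start, without authentication: three data nodes, then the arbiter.
for spec in 27017:rs1-27017 27018:rs1-27018 27019:rs1-27019 30000:arb-30000; do
  mongod_cmd "${spec%%:*}" "${spec#*:}"
done
```

Printing the commands first makes it easy to review them before anything is started.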

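2.4.2 Creating the replica set

All the nodes are now running, so we create a replica set and add them to it. Log in to the primary first:

$ mongo -port 27017

Once connected, initialize the replica set:

rs.initiate()

Once it is initialized, check whether this node is the primary:

rs1:OTHER> rs.isMaster()

Output: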

{

"hosts" : [

"somehost:27017"

],

"setName" : "rs1",

"setVersion" : 1,

"ismaster" : true,

"secondary" : false,

"primary" : "somehost:27017",

"me" : "somehost:27017",

"electionId" : ObjectId("56b175570000000000000001"),

"maxBsonObjectSize" : 16777216,

"maxMessageSizeBytes" : 48000000,

"maxWriteBatchSize" : 1000,

"localTime" : ISODate("2016-02-03T03:35:26.532Z"),

"maxWireVersion" : 4,

"minWireVersion" : 0,

"ok" : 1

}

Then add the two secondaries and the arbiter in turn:

rs1:PRIMARY> rs.add('somehost:27018')

{ "ok" : 1 }

rs1:PRIMARY> rs.add('somehost:27019')

{ "ok" : 1 }

rs1:PRIMARY> rs.addArb('somehost:30000')

{ "ok" : 1 }

Note that somehost is the host name of each node (you can check it with the hostname command).
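
rs.initiate() also accepts a configuration document, so the four members could be declared in one call instead of a bare rs.initiate() followed by rs.add() and rs.addArb(). The sketch below works under that assumption. initiate_js is a hypothetical shell helper that only prints the mongo shell command, with the host name as its argument.

```shell
# Sketch: print a single rs.initiate() call that declares all four members.
# initiate_js is a hypothetical helper; HOST defaults to the placeholder
# "somehost" used in the text.
initiate_js() (
  host="${1:-somehost}"
  cat <<EOF
rs.initiate({
  _id: "rs1",
  members: [
    { _id: 0, host: "$host:27017" },
    { _id: 1, host: "$host:27018" },
    { _id: 2, host: "$host:27019" },
    { _id: 3, host: "$host:30000", arbiterOnly: true }
  ]
})
EOF
)
```

The printed command could then be handed to the shell on the first node, for example with mongo --port 27017 --eval "$(initiate_js "$(hostname)")".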

Verify the replica set status:

rs1:PRIMARY> rs.status()

{

"set" : "rs1",

"date" : ISODate("2016-02-03T03:38:56.519Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"members" : [

{

"_id" : 0,

"name" : "somehost:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 560,

"optime" : {

"ts" : Timestamp(1454470707, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2016-02-03T03:38:27Z"),

"electionTime" : Timestamp(1454470487, 2),

"electionDate" : ISODate("2016-02-03T03:34:47Z"),

"configVersion" : 4,

"self" : true

},

{

"_id" : 1,

"name" : "somehost:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 102,

"optime" : {

"ts" : Timestamp(1454470707, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2016-02-03T03:38:27Z"),

"lastHeartbeat" : ISODate("2016-02-03T03:38:55.923Z"),

"lastHeartbeatRecv" : ISODate("2016-02-03T03:38:55.927Z"

),

"pingMs" : NumberLong(0),

"syncingTo" : "somehost:27017",

"configVersion" : 4

},

{

"_id" : 2,

"name" : "somehost:27019",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 85,

"optime" : {

"ts" : Timestamp(1454470707, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2016-02-03T03:38:27Z"),

"lastHeartbeat" : ISODate("2016-02-03T03:38:55.923Z"),

"lastHeartbeatRecv" : ISODate("2016-02-03T03:38:54.924Z"

),

"pingMs" : NumberLong(0),

"syncingTo" : "somehost:27018",

"configVersion" : 4

},

{

"_id" : 3,

"name" : "somehost:30000",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 28,

"lastHeartbeat" : ISODate("2016-02-03T03:38:55.923Z"),

"lastHeartbeatRecv" : ISODate("2016-02-03T03:38:52.940Z"

),

"pingMs" : NumberLong(0),

"configVersion" : 4

}

],

"ok" : 1

}

2.4.3 Creating the administrator user

rs1:PRIMARY> use admin

switched to db admin

rs1:PRIMARY> db.createUser({user:"admin",pwd:"adminpwd",roles:["root"]})

Successfully added user: { "user" : "admin", "roles" : [ "root" ] }

rs1:PRIMARY> exit

bye

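The database administrator user, with username admin and password adminpwd, has been created.

Verify that the new user has been replicated to the other nodes: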

$ mongo -port 27018

rs1:SECONDARY> use admin

switched to db admin

rs1:SECONDARY> db.auth("admin","adminpwd")

1

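2.4.4 Creating the production database and user

First shut down the running instances one by one, in this order: the two secondaries, the primary, then the arbiter:

rs1:SECONDARY> db.shutdownServer()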

server should be down...

2016-02-03T11:46:36.234+0800 I NETWORK [thread1] trying reconnect to 127.0.0.1:27018 (127.0.0.1) failed

2016-02-03T11:46:36.235+0800 W NETWORK [thread1] Failed to connect to 127.0.0.1:27018, reason: errno:111 Connection refused

2016-02-03T11:46:36.235+0800 I NETWORK [thread1] reconnect 127.0.0.1:27018 (127.0.0.1) failed failed

2016-02-03T11:46:36.237+0800 I NETWORK [thread1] trying reconnect to 127.0.0.1:27018 (127.0.0.1) failed

2016-02-03T11:46:36.237+0800 W NETWORK [thread1] Failed to connect to 127.0.0.1:27018, reason: errno:111 Connection refused

2016-02-03T11:46:36.237+0800 I NETWORK [thread1] reconnect 127.0.0.1:27018 (127.0.0.1) failed failed

$ mongo -port 27019

rs1:SECONDARY> use admin

switched to db admin

rs1:SECONDARY> db.auth("admin","adminpwd")

1

rs1:SECONDARY> db.shutdownServer()

server should be down...

2016-02-03T11:48:01.191+0800 I NETWORK [thread1] trying reconnect to 127.0.0.1:27019 (127.0.0.1) failed

2016-02-03T11:48:01.191+0800 W NETWORK [thread1] Failed to connect to 127.0.0.1:27019, reason: errno:111 Connection refused

2016-02-03T11:48:01.191+0800 I NETWORK [thread1] reconnect 127.0.0.1:27019 (127.0.0.1) failed failed

2016-02-03T11:48:01.193+0800 I NETWORK [thread1] trying reconnect to 127.0.0.1:27019 (127.0.0.1) failed

2016-02-03T11:48:01.193+0800 W NETWORK [thread1] Failed to connect to 127.0.0.1:27019, reason: errno:111 Connection refused

2016-02-03T11:48:01.193+0800 I NETWORK [thread1] reconnect 127.0.0.1:27019 (127.0.0.1) failed failed

Shut down the primary and the arbiter in the same way.

Then start the instances again in order, this time with the auth option:

$ mongod --port=27017 --fork --dbpath=/opt/mongodb/rs1-27017 --logpath=/opt/mongodb/rs1-27017/mongod.log --replSet=rs1 --auth --keyFile=/opt/mongodb/rs1.key

about to fork child process, waiting until server is ready for connections.

forked process: 22803

child process started successfully, parent exiting

$ mongod --port=27018 --fork --dbpath=/opt/mongodb/rs1-27018 --logpath=/opt/mongodb/rs1-27018/mongod.log --replSet=rs1 --auth --keyFile=/opt/mongodb/rs1.key

about to fork child process, waiting until server is ready for connections.

forked process: 22803

child process started successfully, parent exiting

$ mongod --port=27019 --fork --dbpath=/opt/mongodb/rs1-27019 --logpath=/opt/mongodb/rs1-27019/mongod.log --replSet=rs1 --auth --keyFile=/opt/mongodb/rs1.key

about to fork child process, waiting until server is ready for connections.

forked process: 23068

child process started successfully, parent exiting

$ mongod --port=30000 --fork --dbpath=/opt/mongodb/arb-30000 --logpath=/opt/mongodb/arb-30000/mongod.log --replSet=rs1 --keyFile=/opt/mongodb/rs1.key

about to fork child process, waiting until server is ready for connections.

forked process: 23068

child process started successfully, parent exiting
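
The long command lines can also be kept in a per-instance YAML configuration file and loaded with mongod --config. The sketch below assumes a hypothetical helper, write_mongod_conf. The option names are the documented configuration-file counterparts of the flags used above.

```shell
# Sketch: emit a YAML config equivalent to the authenticated command line.
# write_mongod_conf is a hypothetical helper.
# Usage: write_mongod_conf PORT DATADIR KEYFILE > DATADIR/mongod.conf
write_mongod_conf() (
  port="$1"
  dir="$2"
  key="$3"
  cat <<EOF
net:
  port: $port
storage:
  dbPath: $dir
systemLog:
  destination: file
  path: $dir/mongod.log
processManagement:
  fork: true
replication:
  replSetName: rs1
security:
  authorization: enabled
  keyFile: $key
EOF
)
```

An instance would then be started with something like mongod --config /opt/mongodb/rs1-27017/mongod.conf, which keeps the settings under version control instead of in shell history.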

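Once every node is up, insert a record into the test database on the primary to check that it replicates (the admin user is defined in the admin database, so switch to it with use admin before calling db.auth):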

$ mongo -port 27017

MongoDB shell version: 3.2.1

connecting to: 127.0.0.1:27017/test

rs1:PRIMARY> db.auth('admin','adminpwd')

1

rs1:PRIMARY> use test

switched to db test

rs1:PRIMARY> db.test.insert({test:"test"})

WriteResult({ "nInserted" : 1 })

rs1:PRIMARY> exit

bye

Check on a secondary whether the record was replicated:

$ mongo -port 27018

MongoDB shell version: 3.2.1

connecting to: 127.0.0.1:27018/test

rs1:SECONDARY> use test

switched to db test

rs1:SECONDARY> use admin

switched to db admin

rs1:SECONDARY> db.auth('admin','adminpwd')

1

rs1:SECONDARY> use test

switched to db test

rs1:SECONDARY> rs.slaveOk()

rs1:SECONDARY> db.test.find()

{ "_id" : ObjectId("56b17b7cafc9b56181378550"), "test" : "test" }

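Replication works.

Next, create the production database and user. Log in to the primary first (again authenticating against the admin database):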

$ mongo -port 27017

rs1:PRIMARY> db.auth("admin","adminpwd")

rs1:PRIMARY> use quicktest

rs1:PRIMARY> db.createUser({user:"quicktest",pwd:"quicktest",roles:[{ role: "readWrite", db: "quicktest" }]})

Successfully added user: {

"user" : "quicktest",

"roles" : [

{

"role" : "readWrite",

"db" : "quicktest"

}

]

}

The quicktest production user has been created.

rs1:PRIMARY> db.justtest.insert({test:"test"})

WriteResult({ "nInserted" : 1 })

rs1:PRIMARY> show dbs

admin 0.000GB

local 0.000GB

quicktest 0.000GB

test 0.000GB

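The quicktest production database has been created.

2.5 Creating the application's admin user

Some base collections, such as the user collection, must be created up front. Non-base data such as the transaction collection is created automatically when transactions occur.

$ mongo -port 27017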

MongoDB shell version: 3.2.1

connecting to: 127.0.0.1:27017/test

rs1:PRIMARY> use quicktest

switched to db quicktest

rs1:PRIMARY> db.auth('quicktest','quicktest')

1

rs1:PRIMARY> show collections

justtest

masterlog

task

rs1:PRIMARY> db.user.insert({ "_id" : ObjectId("55ef883acb91f093af390689"), "userName" : "admin", "nickName" : "admin", "password" : "c6203112cd2a39dc956feac05ba676e1096c0e9d", "mail" : "admin@defonds.com", "phoneNum" : "13148143761", "userType" : "admin", "agentCode" : "", "subAgentCode" : "", "groupCode" : "", "merId" : "", "areaCode" : "222", "updateTime" : "2015-11-24 17:26:19", "loginTime" : "", "lockTime" : "" })

WriteResult({ "nInserted" : 1 })
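
An application would normally connect to the whole set rather than to a single port, so that the driver can follow a failover and send reads to secondaries. The sketch below builds such a connection string. It assumes the hypothetical helper rs_uri and the secondaryPreferred read preference; the original text does not say which read preference the application uses.

```shell
# Sketch: build a replica-set connection string for an application.
# rs_uri is a hypothetical helper. Usage: rs_uri USER PASSWORD DB [HOST]
rs_uri() (
  user="$1"
  pass="$2"
  db="$3"
  host="${4:-somehost}"
  # List the three data-bearing members; the driver discovers the rest of the
  # topology from them. replicaSet must match the --replSet name.
  printf 'mongodb://%s:%s@%s:27017,%s:27018,%s:27019/%s?replicaSet=rs1&readPreference=secondaryPreferred\n' \
    "$user" "$pass" "$host" "$host" "$host" "$db"
)

rs_uri quicktest quicktest quicktest
```

Because the quicktest user was created in the quicktest database, naming that database in the path also makes it the authentication database.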

2.6 Verifying the high availability of the replica set

To simulate a primary failure, we kill the primary process by hand:

$ kill -9 22803

$ mongo -port 27018

MongoDB shell version: 3.2.1

rs1:SECONDARY> exit

bye

$ mongo -port 27019

MongoDB shell version: 3.2.1

connecting to: 127.0.0.1:27019/test

rs1:PRIMARY>

As shown, the primary role has been handed over by election to the node on port 27019. Check the replica set status:

rs1:PRIMARY> rs.status()

{

"set" : "rs1",

"date" : ISODate("2016-02-27T03:00:28.531Z"),

"myState" : 1,

"term" : NumberLong(3),

"heartbeatIntervalMillis" : NumberLong(2000),

"members" : [

{

"_id" : 0,

"name" : "somehost:27017",

"health" : 0,

"state" : 8,

"stateStr" : "(not reachable/healthy)",

"uptime" : 0,

"optime" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"optimeDate" : ISODate("1970-01-01T00:00:00Z"),

"lastHeartbeat" : ISODate("2016-02-27T03:00:27.031Z"),

"lastHeartbeatRecv" : ISODate("2016-02-27T02:43:57.294Z"

),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "Connection refused",

"configVersion" : -1

},

{

"_id" : 1,

"name" : "somehost:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 2070279,

"optime" : {

"ts" : Timestamp(1456542007, 2),

"t" : NumberLong(3)

},

"optimeDate" : ISODate("2016-02-27T03:00:07Z"),

"lastHeartbeat" : ISODate("2016-02-27T03:00:26.860Z"),

"lastHeartbeatRecv" : ISODate("2016-02-27T03:00:27.287Z"

),

"pingMs" : NumberLong(0),

"syncingTo" : "somehost:27019",

"configVersion" : 4

},

{

"_id" : 2,

"name" : "somehost:27019",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 2070280,

"optime" : {

"ts" : Timestamp(1456542007, 2),

"t" : NumberLong(3)

},

"optimeDate" : ISODate("2016-02-27T03:00:07Z"),

"electionTime" : Timestamp(1456541048, 1),

"electionDate" : ISODate("2016-02-27T02:44:08Z"),

"configVersion" : 4,

"self" : true

},

{

"_id" : 3,

"name" : "somehost:30000",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 2070226,

"lastHeartbeat" : ISODate("2016-02-27T03:00:26.859Z"),

"lastHeartbeatRecv" : ISODate("2016-02-27T03:00:25.360Z"

),

"pingMs" : NumberLong(0),

"configVersion" : 4

}

],

"ok" : 1

}

The former primary on port 27017 now has a health flag of 0 (unavailable). Check the arbiter's log:

2016-02-27T10:44:02.924+0800 I ASIO [ReplicationExecutor] dropping unhealthy pooled connection to somehost:27017

2016-02-27T10:44:02.924+0800 I ASIO [ReplicationExecutor] after drop, pool was empty, going to spawn some connections

2016-02-27T10:44:02.924+0800 I REPL [ReplicationExecutor] Error in heartbeat request to somehost:27017; HostUnreachable Connection refused

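So although the arbiter has dropped its connection to 27017, it keeps sending heartbeats to 27017 so that the node can rejoin once it recovers. To verify this, we restart 27017: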

$ mongod --port=27017 --fork --dbpath=/opt/mongodb/rs1-27017 --logpath=/opt/mongodb/rs1-27017/mongod.log --replSet=rs1 --auth --keyFile=/opt/mongodb/rs1.key

about to fork child process, waiting until server is ready for connections.

forked process: 31880

child process started successfully, parent exiting
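
Rather than re-running a status check by hand after a restart, one could poll until the node reports the expected state. The following is a minimal sketch of a generic retry helper. wait_until is a hypothetical name, and the mongo invocation in the comment is an assumed usage that relies on the shell's quit(code) and db.isMaster().

```shell
# Sketch: retry a command until it succeeds or the attempts run out.
# wait_until is a hypothetical helper. Usage: wait_until TRIES DELAY_SECONDS CMD [ARGS...]
wait_until() (
  tries="$1"
  delay="$2"
  shift 2
  i=0
  while [ "$i" -lt "$tries" ]; do
    # Success of the probe command ends the loop with exit status 0.
    if "$@"; then
      exit 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  # Every attempt failed.
  exit 1
)

# Assumed usage (not run here): wait up to 60 s for 27017 to become a secondary.
# wait_until 30 2 mongo --quiet --port 27017 --eval 'quit(db.isMaster().secondary ? 0 : 1)'
```

Passing the probe as an ordinary command keeps the helper independent of MongoDB, so the same loop can wait for a primary by testing ismaster instead.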

Back on the current primary, 27019:

rs1:PRIMARY> rs.status()

{

"set" : "rs1",

"date" : ISODate("2016-02-27T03:16:59.520Z"),

"myState" : 1,

"term" : NumberLong(3),

"heartbeatIntervalMillis" : NumberLong(2000),

"members" : [

{

"_id" : 0,

"name" : "somehost:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 57,

"optime" : {

"ts" : Timestamp(1456542907, 4),

"t" : NumberLong(3)

},

"optimeDate" : ISODate("2016-02-27T03:15:07Z"),

"lastHeartbeat" : ISODate("2016-02-27T03:16:57.609Z"),

"lastHeartbeatRecv" : ISODate("2016-02-27T03:16:59.124Z"

),

"pingMs" : NumberLong(0),

"syncingTo" : "somehost:27019",

"configVersion" : 4

},

{

"_id" : 1,

"name" : "somehost:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 2071270,

"optime" : {

"ts" : Timestamp(1456542907, 4),

"t" : NumberLong(3)

},

"optimeDate" : ISODate("2016-02-27T03:15:07Z"),

"lastHeartbeat" : ISODate("2016-02-27T03:16:59.207Z"),

"lastHeartbeatRecv" : ISODate("2016-02-27T03:16:57.656Z"

),

"pingMs" : NumberLong(0),

"syncingTo" : "somehost:27019",

"configVersion" : 4

},

{

"_id" : 2,

"name" : "somehost:27019",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 2071271,

"optime" : {

"ts" : Timestamp(1456542907, 4),

"t" : NumberLong(3)

},

"optimeDate" : ISODate("2016-02-27T03:15:07Z"),

"electionTime" : Timestamp(1456541048, 1),

"electionDate" : ISODate("2016-02-27T02:44:08Z"),

"configVersion" : 4,

"self" : true

},

{

"_id" : 3,

"name" : "somehost:30000",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 2071217,

"lastHeartbeat" : ISODate("2016-02-27T03:16:59.205Z"),

"lastHeartbeatRecv" : ISODate("2016-02-27T03:16:55.506Z"

),

"pingMs" : NumberLong(0),

"configVersion" : 4

}

],

"ok" : 1

}

As shown, node 27017 is healthy again and now serves as a secondary. The arbiter's log confirms this:

2016-02-27T11:16:03.721+0800 I ASIO [NetworkInterfaceASIO-Replication-0] Successfully connected to somehost:27017

2016-02-27T11:16:03.722+0800 I REPL [ReplicationExecutor] Member somehost:27017 is now in state SECONDARY

Failure and recovery of a secondary node works in much the same way.
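
Failover only works while a majority of voting members can still reach each other. With the four voters used here (three data nodes plus the arbiter) the majority is three, so the set tolerates one failure, as in the test above. The arithmetic can be checked with a small sketch; the function names are illustrative.

```shell
# Votes needed to elect a primary among N voting members.
majority() { echo $(( $1 / 2 + 1 )); }

# How many voting members can be lost while a majority remains.
fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 3 4 5; do
  echo "voters=$n majority=$(majority "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

Three voters and four voters both tolerate a single failure, which is why an odd number of voting members is the usual recommendation.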
