If you haven't installed Hive yet, see this post: Hive集成Mysql作为元数据 (using MySQL as Hive's metastore). It covers the setup in great detail.

Problems you may run into:

1. After configuring MySQL as described in the post above:

mysql> GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%' WITH GRANT OPTION;

Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;

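Query OK, 0 rows affected (0.00 sec)

With only this grant, logging in locally as hive is refused. This is often explained as "the % in 'hive'@'%' allows access from every host except localhost". Strictly speaking, % matches any host, including localhost. What usually gets in the way is the anonymous account ''@'localhost' that many default MySQL installations create: MySQL checks the most specific Host values first, so a local connection matches that anonymous account instead of 'hive'@'%', and the hive password is rejected: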

[root@DEV21 hadoop]# mysql -uhive -p

Enter password:

ERROR 1045 (28000): Access denied for user 'hive'@'localhost' (using password: YES)

Solution: log in as root and grant the hive user access from localhost explicitly:

[root@DEV21 hadoop]# mysql -uroot -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 15

Server version: 5.1.73 Source distribution

......

mysql> grant all on hive.* to 'hive'@'localhost' identified by 'guo';

Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

Finally, grant the '%' privileges again (only needed if the hive user must log in remotely; otherwise skip this step):

mysql> GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%' WITH GRANT OPTION;

Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;

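Query OK, 0 rows affected (0.00 sec)

Alternatively, create the hive database directly as root and then grant the hive user privileges on it:

mysql> create database hive;

mysql> grant all on hive.* to 'hive'@'%' identified by 'guo';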
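The account-matching rule behind problem 1 can be illustrated with a small Python model. It is a simplification, not MySQL's actual code: it ranks literal host names first, host patterns containing wildcards next and a bare '%' last, puts a named user before the anonymous user '' within the same host rank, and returns the first row whose host pattern and user name both match. The account lists are hypothetical examples.

```python
import re
from typing import List, Optional, Tuple

# (user, host) pairs as they would appear in the mysql.user table
Account = Tuple[str, str]


def host_matches(pattern: str, host: str) -> bool:
    """Match a MySQL host pattern: '%' is any sequence, '_' any single character."""
    regex = "".join(
        ".*" if ch == "%" else "." if ch == "_" else re.escape(ch) for ch in pattern
    )
    return re.fullmatch(regex, host) is not None


def sort_key(account: Account) -> Tuple[int, bool]:
    """Simplified specificity order: literal host, then wildcard host, then bare '%'."""
    user, host = account
    if host == "%":
        host_rank = 2
    elif "%" in host or "_" in host:
        host_rank = 1
    else:
        host_rank = 0
    # False sorts before True, so a named user beats the anonymous user '' on the same host rank
    return (host_rank, user == "")


def matching_account(accounts: List[Account], user: str, host: str) -> Optional[Account]:
    """Return the first row (in specificity order) that matches the connecting client."""
    for acc_user, acc_host in sorted(accounts, key=sort_key):
        # an empty user name is the anonymous account and matches any login name
        if host_matches(acc_host, host) and acc_user in ("", user):
            return (acc_user, acc_host)
    return None


if __name__ == "__main__":
    accounts = [("hive", "%"), ("", "localhost"), ("root", "localhost")]
    print(matching_account(accounts, "hive", "localhost"))  # ('', 'localhost')
    accounts.append(("hive", "localhost"))
    print(matching_account(accounts, "hive", "localhost"))  # ('hive', 'localhost')
```

With only 'hive'@'%' and the anonymous ''@'localhost' row, the local login resolves to the anonymous account; once 'hive'@'localhost' exists, the hive row wins, which is why the localhost grant fixes the error. On a real server, select user, host from mysql.user; shows which rows exist, and removing the anonymous accounts (mysql_secure_installation offers this) is another way out.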

2. Starting Hive prints deprecation warnings:

[root@hadoop ~]# hive

15/02/04 09:38:06 WARN conf.HiveConf: DEPRECATED: Configuration property hive.metastore.local no longer has any effect. Make sure to provide a valid value for hive.metastore.uris if you are connecting to a remote metastore.

15/02/04 09:38:06 WARN conf.HiveConf: HiveConf of name hive.metastore.local does not exist

Logging initialized using configuration in jar:file:/usr/local/hive/lib/hive-common-0.14.0.jar!/hive-log4j.properties

hive>

Solution:

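As of Hive 0.10 (and therefore in 0.11 and later), the hive.metastore.local property is no longer used. As the warning says, whether Hive talks to a remote metastore is now decided by hive.metastore.uris alone.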

Find this property in the configuration file (usually hive-site.xml):

<property>

<name>hive.metastore.local</name>

<value>false</value>

<description>controls whether to connect to remote metastore server or open a new metastore server in Hive Client JVM</description>

</property>

Delete it, start Hive again, and the warnings are gone.
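If you prefer to script the edit, here is a minimal Python sketch using the standard xml.etree.ElementTree module. It assumes the usual flat Hadoop layout (a configuration root element holding property elements, each with a name). Note that ElementTree does not keep XML comments or the original declaration, so the script writes a backup first; the file path is whatever you pass on the command line.

```python
import shutil
import sys
import xml.etree.ElementTree as ET


def remove_property(xml_text, prop_name: str) -> str:
    """Drop every <property> whose <name> equals prop_name from a Hadoop-style config."""
    root = ET.fromstring(xml_text)  # accepts str or bytes
    for prop in list(root.findall("property")):  # copy the list before removing
        if (prop.findtext("name") or "").strip() == prop_name:
            root.remove(prop)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__" and len(sys.argv) > 1:
    path = sys.argv[1]  # e.g. the hive-site.xml you edited when installing Hive
    shutil.copy(path, path + ".bak")  # keep a backup: comments are not preserved
    with open(path, "rb") as f:
        cleaned = remove_property(f.read(), "hive.metastore.local")
    with open(path, "w", encoding="utf-8") as f:
        f.write('<?xml version="1.0" encoding="UTF-8"?>\n' + cleaned + "\n")
```

Run it as python remove_prop.py /path/to/hive-site.xml (the script name is arbitrary), then start hive again to check that the warnings no longer appear.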

3. If you see FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask,

see: http://blog.csdn.net/dr_guo/article/details/50987292

A simple usage demo:

hive> create database guo;

hive> use guo;

hive> create table phone(id int, brand string, ram string, price double) # the data types are largely the same as Java's

> row format delimited

> fields terminated by '\t'; # fields are separated by tab characters

hive> show tables;

OK

phone

hive> load data local inpath '/home/guo/hivedata/phone.data' into table phone; # load the data; each line must match the table's structure

Copying data from file:/home/guo/hivedata/phone.data

Copying file: file:/home/guo/hivedata/phone.data

Loading data to table guo.phone

Table guo.phone stats: [num_partitions: 0, num_files: 1, num_rows: 0, total_size: 62, raw_data_size: 0]

OK

Time taken: 45.09 seconds
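Hive does not validate the file on load: LOAD DATA only copies it into the table directory, and at query time a value that doesn't parse (or a missing field) comes back as NULL, while extra fields are ignored. The following rough Python pre-check for phone.data is a sketch that assumes the four-column layout from the create table statement above; the helper names are hypothetical.

```python
from typing import List, Tuple

# Column layout taken from the CREATE TABLE above: id int, brand string, ram string, price double
Row = Tuple[int, str, str, float]


def format_rows(rows: List[Row]) -> str:
    """Serialize rows as tab-separated lines, one row per line."""
    return "".join(f"{i}\t{brand}\t{ram}\t{price}\n" for i, brand, ram, price in rows)


def check_line(line: str) -> List[str]:
    """Report problems Hive would otherwise silently turn into NULLs or ignored fields."""
    fields = line.rstrip("\n").split("\t")
    if len(fields) != 4:
        return [f"expected 4 tab-separated fields, got {len(fields)}"]
    problems = []
    try:
        int(fields[0])
    except ValueError:
        problems.append(f"id {fields[0]!r} is not an int")
    try:
        float(fields[3])
    except ValueError:
        problems.append(f"price {fields[3]!r} is not a double")
    return problems


if __name__ == "__main__":
    sample = [(1, "iphone5", "2G", 5999.0), (2, "oneplus", "3G", 2299.0)]
    text = format_rows(sample)
    print(text, end="")
    for n, line in enumerate(text.splitlines(), start=1):
        print(n, check_line(line) or "ok")
```

A common mistake this catches is a file separated by spaces instead of tabs: each line then counts as a single field, and Hive would return NULL for every column.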

hive> select * from phone;

OK

1 iphone5 2G 5999.0

2 oneplus 3G 2299.0

3 锤子T1 2G 1999.0

Time taken: 1.539 seconds, Fetched: 3 row(s)

hive> select count(*) from phone;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Starting Job = job_1458987137545_0001, Tracking URL = http://drguo1:8088/proxy/application_1458987137545_0001/

Kill Command = /opt/Hadoop/hadoop-2.7.2/bin/hadoop job -kill job_1458987137545_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2016-03-26 18:40:00,189 Stage-1 map = 0%, reduce = 0%

2016-03-26 18:40:33,017 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:34,102 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:35,212 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:36,307 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:37,409 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:38,534 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:39,645 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:40,747 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:41,826 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:42,919 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:43,996 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:45,089 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:46,201 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:47,285 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:48,361 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:49,457 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:50,531 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:51,608 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:52,667 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:53,745 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:54,832 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:55,951 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:57,106 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:58,222 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:40:59,338 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:41:00,446 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:41:01,565 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:41:02,680 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:41:03,828 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:41:04,928 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:41:06,058 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:41:07,150 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:41:08,226 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.77 sec

2016-03-26 18:41:09,542 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.19 sec

2016-03-26 18:41:10,647 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.19 sec

2016-03-26 18:41:12,029 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 6.27 sec

2016-03-26 18:41:13,104 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 6.27 sec

2016-03-26 18:41:14,180 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 6.27 sec

2016-03-26 18:41:15,264 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 6.27 sec

2016-03-26 18:41:16,365 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 6.27 sec

MapReduce Total cumulative CPU time: 6 seconds 270 msec

Ended Job = job_1458987137545_0001

MapReduce Jobs Launched:

Job 0: Map: 1 Reduce: 1 Cumulative CPU: 6.27 sec HDFS Read: 270 HDFS Write: 2 SUCCESS

Total MapReduce CPU Time Spent: 6 seconds 270 msec

OK

3

Time taken: 154.238 seconds, Fetched: 1 row(s)
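The job log above mentions three settings that control the number of reducers. Here is a rough Python model of how they interact, assuming the commonly described behaviour rather than Hive's actual source: an explicit mapred.reduce.tasks wins; otherwise Hive allots one reducer per hive.exec.reducers.bytes.per.reducer of input, clamped between 1 and hive.exec.reducers.max. The default values differ between Hive versions, so the numbers below are only examples. For this count(*) with no GROUP BY, a single reducer has to produce the one global total, which is why the log reports "determined at compile time: 1" regardless of these settings.

```python
import math


def estimate_reducers(total_input_bytes: int, bytes_per_reducer: int,
                      max_reducers: int, fixed_reducers: int = -1) -> int:
    """Approximate the reducer count for a job that is allowed several reducers."""
    if fixed_reducers >= 0:
        # mapred.reduce.tasks set explicitly: that value is used as-is
        return fixed_reducers
    # one reducer per hive.exec.reducers.bytes.per.reducer of input, rounded up
    estimate = math.ceil(total_input_bytes / bytes_per_reducer)
    # at least 1, at most hive.exec.reducers.max
    return max(1, min(max_reducers, estimate))


if __name__ == "__main__":
    GB = 1024 ** 3
    print(estimate_reducers(10 * GB, 1 * GB, 999))                    # 10
    print(estimate_reducers(5000 * GB, 1 * GB, 999))                  # 999
    print(estimate_reducers(10 * GB, 1 * GB, 999, fixed_reducers=3))  # 3
```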

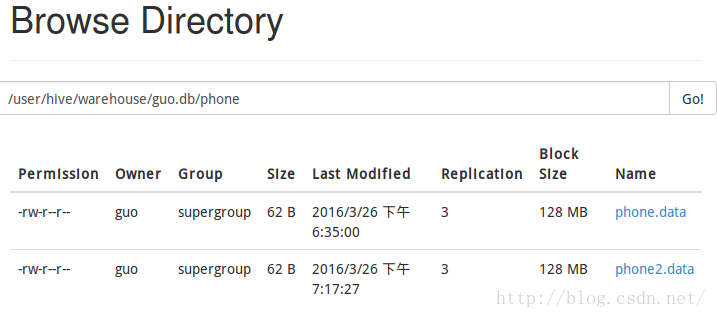

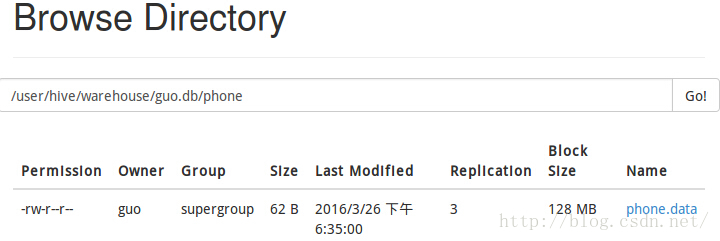

You can also upload a file straight into the table's HDFS directory:

guo@drguo1:~$ hdfs dfs -put /home/guo/hivedata/phone2.data /user/hive/warehouse/guo.db/phone

hive> select * from phone;

OK

1 iphone5 2G 5999.0

2 oneplus 3G 2299.0

3 锤子T1 2G 1999.0

4 iphone5 2G 5999.0

5 oneplus 3G 2299.0

6 锤子T1 2G 1999.0

Time taken: 7.56 seconds, Fetched: 6 row(s)
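This works because a managed table is simply a directory, and Hive reads every file inside it: the rows of phone2.data appear after those of phone.data, giving 6 rows. The following Python sketch shows how that directory path is formed, assuming the default warehouse location /user/hive/warehouse (hive.metastore.warehouse.dir) and no custom LOCATION on the database or table; describe formatted phone; shows the real location.

```python
import posixpath


def table_dir(warehouse_dir: str, database: str, table: str) -> str:
    """HDFS directory of a managed table under the default warehouse layout."""
    database, table = database.lower(), table.lower()  # Hive stores names in lower case
    if database == "default":
        # tables in the default database sit directly under the warehouse directory
        return posixpath.join(warehouse_dir, table)
    # every other database gets its own <name>.db subdirectory
    return posixpath.join(warehouse_dir, database + ".db", table)


if __name__ == "__main__":
    print(table_dir("/user/hive/warehouse", "guo", "phone"))
    # /user/hive/warehouse/guo.db/phone
```

Because hdfs dfs -put bypasses Hive, the table statistics recorded in the metastore are not updated for the new file.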
