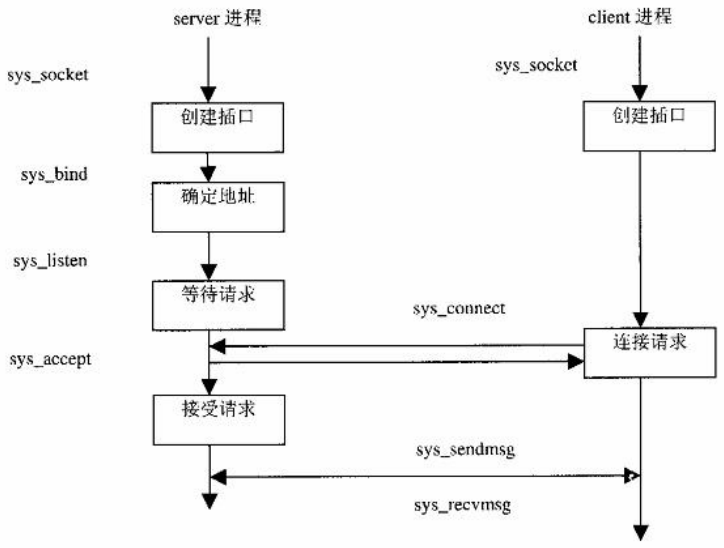

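1. The flow of inter-process communication over sockets is shown in this diagram:

Flow of connection-oriented socket communication

2. client_server_local_sock: a client program and a server program that communicate over the Unix domain protocol.

We use a concrete example to explain socket-based inter-process communication.

First, the server side: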

#include <sys/types.h>

#include <sys/socket.h>

#include <stdio.h>

#include <sys/un.h>

#include <unistd.h>

#include <stdlib.h>

#include <string.h> /* for strcpy() */

int main()

{

int server_sockfd, client_sockfd;

int server_len, client_len;

struct sockaddr_un server_address;

struct sockaddr_un client_address;

unlink("/tmp/server_socket"); // remove any stale file with the same name first

server_sockfd = socket(AF_UNIX, SOCK_STREAM,0);

if( server_sockfd < 0)

{

perror("server");

exit(1);

}

server_address.sun_family = AF_UNIX;

strcpy(server_address.sun_path, "/tmp/server_socket");

server_len =sizeof(server_address);

int result = 0;

result = bind(server_sockfd, (struct sockaddr *)&server_address, server_len);

if (result < 0)

{

perror("bind");

exit(1);

}

listen(server_sockfd, 5);

while(1)

{

char ch;

printf("server waiting\n");

client_len =sizeof(client_address);

//client_sockfd = accept(server_sockfd, (struct sockaddr *)&client_address, &client_len);

client_sockfd = accept(server_sockfd, NULL, NULL); // returns the file descriptor of the new socket (newsock)

if (client_sockfd < 0)

{

perror("accept");

exit(1);

}

read(client_sockfd, &ch, 1); // client_sockfd is the file descriptor of newsock

ch++;

write(client_sockfd, &ch, 1);

close(client_sockfd);

}

}

Next, the client side:

#include <sys/types.h>

#include <sys/socket.h>

#include <stdio.h>

#include <sys/un.h>

#include <unistd.h>

#include <stdlib.h>

#include <string.h> /* for strcpy() */

int main()

{

int sockfd;

int len;

struct sockaddr_un address;

int result;

char ch = 'A';

sockfd =socket(AF_UNIX, SOCK_STREAM, 0);

if (sockfd < 0 )

{

perror("socket");

exit(1);

}

address.sun_family = AF_UNIX;

strcpy(address.sun_path, "/tmp/server_socket");

len = sizeof(address);

result = connect(sockfd, (struct sockaddr *)&address, len);

if(result < 0)

{

perror("oops: client");

exit(1);

}

write(sockfd, &ch,1);

read(sockfd, &ch, 1);

printf("char from server = %c\n", ch);

close(sockfd);

exit(0);

}

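Running the server and then the client prints:

jltxgcy@jltxgcy-desktop:~/Desktop/client_server_local_sock-master/server$ ./server

server waiting

jltxgcy@jltxgcy-desktop:~/Desktop/client_server_local_sock-master/client$ ./client

char from server = B

3. We look at the server side first. socket(AF_UNIX, SOCK_STREAM, 0) is the socket-creation step in the diagram above. The corresponding system call is: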

asmlinkage long sys_socket(int family, int type, int protocol) // family is AF_UNIX, type is SOCK_STREAM, protocol is 0

{

int retval;

struct socket *sock;

retval = sock_create(family, type, protocol, &sock);

if (retval < 0)

goto out;

retval = sock_map_fd(sock);

if (retval < 0)

goto out_release;

out:

/* It may be already another descriptor 8) Not kernel problem. */

return retval;

out_release:

sock_release(sock);

return retval;

}

static struct vfsmount *sock_mnt;

static DECLARE_FSTYPE(sock_fs_type, "sockfs", sockfs_read_super,

FS_NOMOUNT|FS_SINGLE);

In sys_socket, we first call sock_create to build a socket data structure, and then call sock_map_fd to "map" it onto an open file. sock_create is as follows:

int sock_create(int family, int type, int protocol, struct socket **res)

{

int i;

struct socket *sock;

/*

* Check protocol is in range

*/

if(family<0 || family>=NPROTO)

return -EAFNOSUPPORT;

/* Compatibility.

This uglymoron is moved from INET layer to here to avoid

deadlock in module load.

*/

if (family == PF_INET && type == SOCK_PACKET) { // not relevant here: we only care about the Unix domain, where family is AF_UNIX

static int warned;

if (!warned) {

warned = 1;

printk(KERN_INFO "%s uses obsolete (PF_INET,SOCK_PACKET)\n", current->comm);

}

family = PF_PACKET;

}

#if defined(CONFIG_KMOD) && defined(CONFIG_NET) // conditional compilation, not of interest here

/* Attempt to load a protocol module if the find failed.

*

* 12/09/1996 Marcin: But! this makes REALLY only sense, if the user

* requested real, full-featured networking support upon configuration.

* Otherwise module support will break!

*/

if (net_families[family]==NULL)

{

char module_name[30];

sprintf(module_name,"net-pf-%d",family);

request_module(module_name);

}

#endif

net_family_read_lock();

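/* At system initialization or module load, a pointer to each supported domain's net_proto_family structure is stored in net_families[]; a NULL entry means the system does not support the given domain yet. For the Unix domain this structure is unix_family_ops. */

if (net_families[family] == NULL) {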

i = -EAFNOSUPPORT;

goto out;

}

/*

* Allocate the socket and allow the family to set things up. if

* the protocol is 0, the family is instructed to select an appropriate

* default.

*/

if (!(sock = sock_alloc())) // allocate a socket data structure and do some initialization

{

printk(KERN_WARNING "socket: no more sockets\n");

i = -ENFILE; /* Not exactly a match, but its the

closest posix thing */

goto out;

}

sock->type = type; // SOCK_STREAM in our example

if ((i = net_families[family]->create(sock, protocol)) < 0) // create the sock structure behind this socket (unix_create for the Unix domain)

{

sock_release(sock);

goto out;

}

*res = sock;

out:

net_family_read_unlock();

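return i; // 0 on success or a negative error code; the fd itself is allocated later by sock_map_fd

}

unix_family_ops is defined as follows: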

struct net_proto_family unix_family_ops = {

PF_UNIX,

unix_create

};

struct socket *sock_alloc(void)

{

struct inode * inode;

struct socket * sock;

inode = get_empty_inode();

if (!inode)

return NULL;

inode->i_sb = sock_mnt->mnt_sb;

sock = socki_lookup(inode);

inode->i_mode = S_IFSOCK|S_IRWXUGO; // mark the inode as a socket (S_IFSOCK)

inode->i_sock = 1; // i_sock is set to 1

inode->i_uid = current->fsuid;

inode->i_gid = current->fsgid;

sock->inode = inode;

init_waitqueue_head(&sock->wait);

sock->fasync_list = NULL;

sock->state = SS_UNCONNECTED; // state of a freshly allocated socket

sock->flags = 0;

sock->ops = NULL;

sock->sk = NULL;

sock->file = NULL;

sockets_in_use[smp_processor_id()].counter++;

return sock;

}

extern __inline__ struct socket *socki_lookup(struct inode *inode)

{

return &inode->u.socket_i;

}

struct socket

{

socket_state state;

unsigned long flags;

struct proto_ops *ops;

struct inode *inode;

struct fasync_struct *fasync_list; /* Asynchronous wake up list */

struct file *file; /* File back pointer for gc */

struct sock *sk;

wait_queue_head_t wait;

short type;

unsigned char passcred;

};

static int unix_create(struct socket *sock, int protocol)

{

if (protocol && protocol != PF_UNIX)

return -EPROTONOSUPPORT;

sock->state = SS_UNCONNECTED;

switch (sock->type) {

case SOCK_STREAM: // connection-oriented socket communication

sock->ops = &unix_stream_ops; // our example takes this branch, so ops points to unix_stream_ops

break;

/*

* Believe it or not BSD has AF_UNIX, SOCK_RAW though

* nothing uses it.

*/

case SOCK_RAW:

sock->type=SOCK_DGRAM;

case SOCK_DGRAM:

sock->ops = &unix_dgram_ops;

break;

default:

return -ESOCKTNOSUPPORT;

}

return unix_create1(sock) ? 0 : -ENOMEM;

}

struct proto_ops unix_stream_ops = {

family: PF_UNIX,

release: unix_release,

bind: unix_bind,

connect: unix_stream_connect,

socketpair: unix_socketpair,

accept: unix_accept,

getname: unix_getname,

poll: unix_poll,

ioctl: unix_ioctl,

listen: unix_listen,

shutdown: unix_shutdown,

setsockopt: sock_no_setsockopt,

getsockopt: sock_no_getsockopt,

sendmsg: unix_stream_sendmsg,

recvmsg: unix_stream_recvmsg,

mmap: sock_no_mmap,

};

static struct sock * unix_create1(struct socket *sock)

{

struct sock *sk;

if (atomic_read(&unix_nr_socks) >= 2*files_stat.max_files)

return NULL;

MOD_INC_USE_COUNT;

sk = sk_alloc(PF_UNIX, GFP_KERNEL, 1); // allocate a sock structure

if (!sk) {

MOD_DEC_USE_COUNT;

return NULL;

}

atomic_inc(&unix_nr_socks);

sock_init_data(sock,sk);

sk->write_space = unix_write_space; // replaces the default installed by sock_init_data

sk->max_ack_backlog = sysctl_unix_max_dgram_qlen;

sk->destruct = unix_sock_destructor;

sk->protinfo.af_unix.dentry=NULL;

sk->protinfo.af_unix.mnt=NULL;

sk->protinfo.af_unix.lock = RW_LOCK_UNLOCKED;

atomic_set(&sk->protinfo.af_unix.inflight, 0);

init_MUTEX(&sk->protinfo.af_unix.readsem);/* single task reading lock */

init_waitqueue_head(&sk->protinfo.af_unix.peer_wait);

sk->protinfo.af_unix.list=NULL;

unix_insert_socket(&unix_sockets_unbound, sk); // insert the sock into a queue of unix_socket structures (the unbound list)

return sk;

}

void sock_init_data(struct socket *sock, struct sock *sk)

{

skb_queue_head_init(&sk->receive_queue); // important: an sk_buff_head structure

skb_queue_head_init(&sk->write_queue); // important: an sk_buff_head structure

skb_queue_head_init(&sk->error_queue);

init_timer(&sk->timer);

sk->allocation = GFP_KERNEL;

sk->rcvbuf = sysctl_rmem_default; // 64 KB

sk->sndbuf = sysctl_wmem_default;

sk->state = TCP_CLOSE; // a new sock starts in the TCP_CLOSE state

sk->zapped = 1;

sk->socket = sock; // sock and socket point to each other

if(sock)

{

sk->type = sock->type;

sk->sleep = &sock->wait;

sock->sk = sk; // sock and socket point to each other

} else

sk->sleep = NULL;

sk->dst_lock = RW_LOCK_UNLOCKED;

sk->callback_lock = RW_LOCK_UNLOCKED;

sk->state_change = sock_def_wakeup;

sk->data_ready = sock_def_readable; // important

sk->write_space = sock_def_write_space;

sk->error_report = sock_def_error_report;

sk->destruct = sock_def_destruct;

sk->peercred.pid = 0;

sk->peercred.uid = -1;

sk->peercred.gid = -1;

sk->rcvlowat = 1;

sk->rcvtimeo = MAX_SCHEDULE_TIMEOUT;

sk->sndtimeo = MAX_SCHEDULE_TIMEOUT;

atomic_set(&sk->refcnt, 1);

}
