前一篇文章是Spark SQL的入门篇Spark SQL初探,介绍了一些基础知识和API,但是离我们的日常使用还似乎差了一步之遥。

终结Shark的利用有2个:

1、和Spark程序的集成有诸多限制

2、Hive的优化器不是为Spark而设计的,计算模型的不同,使得Hive的优化器来优化Spark程序遇到了瓶颈。

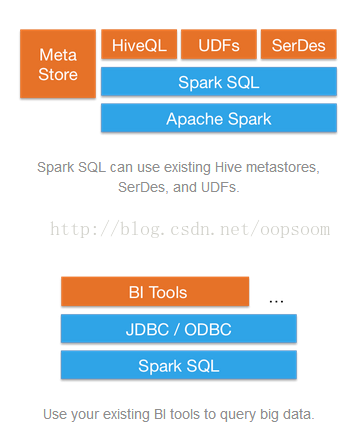

这里看一下Spark SQL 的基础架构:

Spark1.1发布后会支持Spark SQL CLI , Spark SQL的CLI会要求被连接到一个Hive Thrift Server上,来实现类似hive shell的功能。(ps:目前git里面的branch-1.0-jdbc。目前还没有正式release,我测了一下午,发现还是有bug的,耐心等待release吧!)

本着研究的心态,想和Hive环境集成一下,在spark shell里执行hive的语句。

一、编译Spark支持Hive

让Spark支持Hive有2种sbt编译方式:

1、sbt前加变量名

SPARK_HADOOP_VERSION=0.20.2-cdh3u5 SPARK_HIVE=true sbt/sbt assembly2、修改project/SparkBuild.scala文件

val DEFAULT_HADOOP_VERSION = "0.20.2-cdh3u5"

val DEFAULT_HIVE = true

然后执行sbt/sbt assembly二、Spark SQL 操作Hive

启动spark-shell

[root@web01 spark]# bin/spark-shell --master spark://10.1.8.210:7077 --driver-class-path /app/hadoop/hive-0.11.0-bin/lib/mysql-connector-java-5.1.13-bin.jar:/app/hadoop/hive-0.11.0-bin/lib/hadoop-lzo-0.4.15.jar导入HiveContext

scala> val hiveContext = new org.apache.spark.sql.hive.HiveContext(sc)

hiveContext: org.apache.spark.sql.hive.HiveContext = org.apache.spark.sql.hive.HiveContext@7766d31c

scala> import hiveContext._

import hiveContext._

hiveContext里提供了一个执行sql的函数 hql(string text)

去hive里show databases. 这里Spark会parse hql 然后生成Query Plan。但是这里不会执行查询,只有调用collect的时候才会执行。

scala> val show_databases = hql("show databases")

14/07/09 19:59:09 INFO storage.BlockManager: Removing broadcast 0

14/07/09 19:59:09 INFO storage.BlockManager: Removing block broadcast_0

14/07/09 19:59:09 INFO parse.ParseDriver: Parsing command: show databases

14/07/09 19:59:09 INFO parse.ParseDriver: Parse Completed

14/07/09 19:59:09 INFO analysis.Analyzer: Max iterations (2) reached for batch MultiInstanceRelations

14/07/09 19:59:09 INFO analysis.Analyzer: Max iterations (2) reached for batch CaseInsensitiveAttributeReferences

14/07/09 19:59:09 INFO analysis.Analyzer: Max iterations (2) reached for batch Check Analysis

14/07/09 19:59:09 INFO storage.MemoryStore: Block broadcast_0 of size 393044 dropped from memory (free 308713881)

14/07/09 19:59:09 INFO broadcast.HttpBroadcast: Deleted broadcast file: /tmp/spark-c29da0f8-c5e3-4fbf-adff-9aa77f9743b2/broadcast_0

14/07/09 19:59:09 INFO sql.SQLContext$$anon$1: Max iterations (2) reached for batch Add exchange

14/07/09 19:59:09 INFO sql.SQLContext$$anon$1: Max iterations (2) reached for batch Prepare Expressions

14/07/09 19:59:09 INFO spark.ContextCleaner: Cleaned broadcast 0

14/07/09 19:59:09 INFO ql.Driver: <PERFLOG method=Driver.run>

14/07/09 19:59:09 INFO ql.Driver: <PERFLOG method=TimeToSubmit>

14/07/09 19:59:09 INFO ql.Driver: <PERFLOG method=compile>

14/07/09 19:59:09 INFO exec.ListSinkOperator: 0 finished. closing...

14/07/09 19:59:09 INFO exec.ListSinkOperator: 0 forwarded 0 rows

14/07/09 19:59:09 INFO ql.Driver: <PERFLOG method=parse>

14/07/09 19:59:09 INFO parse.ParseDriver: Parsing command: show databases

14/07/09 19:59:09 INFO parse.ParseDriver: Parse Completed

14/07/09 19:59:09 INFO ql.Driver: </PERFLOG method=parse start=1404907149927 end=1404907149928 duration=1>

14/07/09 19:59:09 INFO ql.Driver: <PERFLOG method=semanticAnalyze>

14/07/09 19:59:09 INFO ql.Driver: Semantic Analysis Completed

14/07/09 19:59:09 INFO ql.Driver: </PERFLOG method=semanticAnalyze start=1404907149928 end=1404907149977 duration=49>

14/07/09 19:59:09 INFO exec.ListSinkOperator: Initializing Self 0 OP

14/07/09 19:59:09 INFO exec.ListSinkOperator: Operator 0 OP initialized

14/07/09 19:59:09 INFO exec.ListSinkOperator: Initialization Done 0 OP

14/07/09 19:59:09 INFO ql.Driver: Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:database_name, type:string, comment:from deserializer)], properties:null)

14/07/09 19:59:09 INFO ql.Driver: </PERFLOG method=compile start=1404907149925 end=1404907149980 duration=55>

14/07/09 19:59:09 INFO ql.Driver: <PERFLOG method=Driver.execute>

14/07/09 19:59:09 INFO ql.Driver: Starting command: show databases

14/07/09 19:59:09 INFO ql.Driver: </PERFLOG method=TimeToSubmit start=1404907149925 end=1404907149980 duration=55>

14/07/09 19:59:09 INFO ql.Driver: <PERFLOG method=runTasks>

14/07/09 19:59:09 INFO ql.Driver: <PERFLOG method=task.DDL.Stage-0>

14/07/09 19:59:09 INFO metastore.HiveMetaStore: 0: get_all_databases

14/07/09 19:59:09 INFO HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_all_databases

14/07/09 19:59:09 INFO exec.DDLTask: results : 1

14/07/09 19:59:10 INFO ql.Driver: </PERFLOG method=task.DDL.Stage-0 start=1404907149980 end=1404907150032 duration=52>

14/07/09 19:59:10 INFO ql.Driver: </PERFLOG method=runTasks start=1404907149980 end=1404907150032 duration=52>

14/07/09 19:59:10 INFO ql.Driver: </PERFLOG method=Driver.execute start=1404907149980 end=1404907150032 duration=52>

14/07/09 19:59:10 INFO ql.Driver: OK

14/07/09 19:59:10 INFO ql.Driver: <PERFLOG method=releaseLocks>

14/07/09 19:59:10 INFO ql.Driver: </PERFLOG method=releaseLocks start=1404907150033 end=1404907150033 duration=0>

14/07/09 19:59:10 INFO ql.Driver: </PERFLOG method=Driver.run start=1404907149925 end=1404907150033 duration=108>

14/07/09 19:59:10 INFO mapred.FileInputFormat: Total input paths to process : 1

14/07/09 19:59:10 INFO ql.Driver: <PERFLOG method=releaseLocks>

14/07/09 19:59:10 INFO ql.Driver: </PERFLOG method=releaseLocks start=1404907150037 end=1404907150037 duration=0>

show_databases: org.apache.spark.sql.SchemaRDD =

SchemaRDD[16] at RDD at SchemaRDD.scala:100

== Query Plan ==

<Native command: executed by Hive>scala> show_databases.collect()

14/07/09 20:00:44 INFO spark.SparkContext: Starting job: collect at SparkPlan.scala:52

14/07/09 20:00:44 INFO scheduler.DAGScheduler: Got job 2 (collect at SparkPlan.scala:52) with 1 output partitions (allowLocal=false)

14/07/09 20:00:44 INFO scheduler.DAGScheduler: Final stage: Stage 2(collect at SparkPlan.scala:52)

14/07/09 20:00:44 INFO scheduler.DAGScheduler: Parents of final stage: List()

14/07/09 20:00:44 INFO scheduler.DAGScheduler: Missing parents: List()

14/07/09 20:00:44 INFO scheduler.DAGScheduler: Submitting Stage 2 (MappedRDD[20] at map at SparkPlan.scala:52), which has no missing parents

14/07/09 20:00:44 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from Stage 2 (MappedRDD[20] at map at SparkPlan.scala:52)

14/07/09 20:00:44 INFO scheduler.TaskSchedulerImpl: Adding task set 2.0 with 1 tasks

14/07/09 20:00:44 INFO scheduler.TaskSetManager: Starting task 2.0:0 as TID 9 on executor 0: web01.dw (PROCESS_LOCAL)

14/07/09 20:00:44 INFO scheduler.TaskSetManager: Serialized task 2.0:0 as 1511 bytes in 0 ms

14/07/09 20:00:45 INFO scheduler.DAGScheduler: Completed ResultTask(2, 0)

14/07/09 20:00:45 INFO scheduler.TaskSetManager: Finished TID 9 in 12 ms on web01.dw (progress: 1/1)

14/07/09 20:00:45 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool

14/07/09 20:00:45 INFO scheduler.DAGScheduler: Stage 2 (collect at SparkPlan.scala:52) finished in 0.014 s

14/07/09 20:00:45 INFO spark.SparkContext: Job finished: collect at SparkPlan.scala:52, took 0.020520428 s

res5: Array[org.apache.spark.sql.Row] = Array([default])

同样的执行:show tables

scala> hql("show tables").collect()14/07/09 20:01:28 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 3.0, whose tasks have all completed, from pool

14/07/09 20:01:28 INFO scheduler.DAGScheduler: Stage 3 (collect at SparkPlan.scala:52) finished in 0.013 s

14/07/09 20:01:28 INFO spark.SparkContext: Job finished: collect at SparkPlan.scala:52, took 0.019173851 s

res7: Array[org.apache.spark.sql.Row] = Array([item], [src])

理论上是支持HIVE所有的操作,包括UDF。

PS:遇到的问题:

Caused by: org.datanucleus.exceptions.NucleusException: Attempt to invoke the "BoneCP" plugin to create a ConnectionPool gave an error : The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

解决办法:就是我上面启动的时候带上sql-connector的路径。。

三、总结:

Spark SQL 兼容了Hive的大部分语法和UDF,但是在处理查询计划的时候,使用了Catalyst框架进行优化,优化成适合Spark编程模型的执行计划,使得效率上高出hive很多。由于Spark1.1暂时还未发布,目前还存在bug,等到稳定版发布了再继续测试了。

全文完:)

原创文章,转载请注明出自:http://blog.csdn.net/oopsoom/article/details/37603261

1505

1505

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?