通过mapReduce实现基于项目的协同过滤推荐

- 需求介绍

协同过滤推荐网上有很多种介绍,我这里主要介绍的是基于项目的协同过滤。基于项目的协同过滤推荐基于这样的假设:一个用户会喜欢他之前喜欢的项目相似的项目。因此,基于项目的协同过滤推荐关键在于计算物品之间的相似度。 - 数据介绍

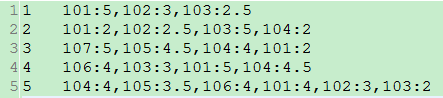

我选用的数据集合如下:

1,101,5.0

1,102,3.0

1,103,2.5

2,101,2.0

2,102,2.5

2,103,5.0

2,104,2.0

3,101,2.0

3,104,4.0

3,105,4.5

3,107,5.0

4,101,5.0

4,103,3.0

4,104,4.5

4,106,4.0

5,101,4.0

5,102,3.0

5,103,2.0

5,104,4.0

5,105,3.5

5,106,4.0

这个数据集合总共有三列,分别是用户的id,项目的id,用户对项目的评分。我想通过这些数据计算出推荐结果。 - 环境介绍

我的系统是Windows7,用的,软件如下:

Eclipse Java EE IDE for Web Developers.

Version: Mars.2 Release (4.5.2)

Build id: 20160218-0600

JDK1.7

Maven3.3.9

Hadoop2.6

开发环境我这里就不介绍了,大家感兴趣的网上搜搜配置一下。 - 实现步骤介绍

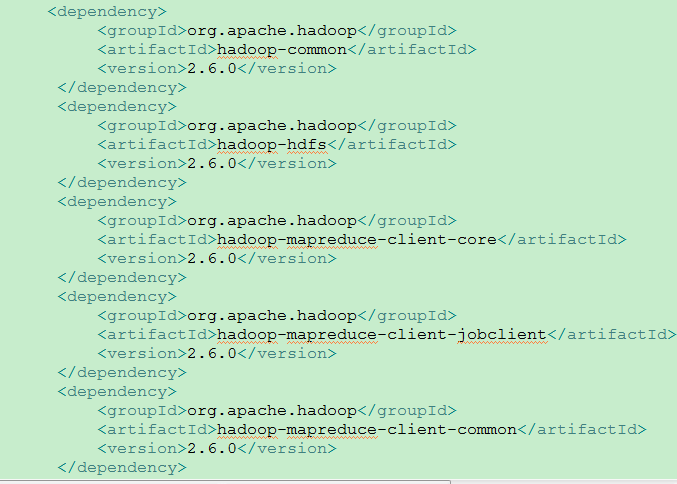

1、我先构建一个maven的项目,配置hadoop依赖的jar包,主要步骤如下:

打开ecplise,在下面右键,选择new,选择Project,弹出一个New Project界面,在输入框中输入maven,出现如下图所示的项目,

,点击next。。构建maven项目这里就不详细介绍了,网上有很多。这里主要介绍pom.xml配置。我主要配置了如下内容:

hadoop-common

hadoop-hdfs

hadoop-mapreduce-client-core

hadoop-mapreduce-client-jobclient

hadoop-mapreduce-client-common

结果如下:

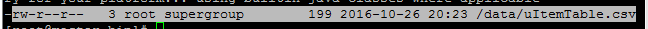

2、通过WinSCP把uItemTable.csv数据上传到分布式服务器端的Downloads文件夹,通过命令:

./hadoop -put ~/Downloads hdfs://master:9000/data

效果如下图所示:

3、读取hdfs对应数据,按用户分组,计算所有物品出现的组合列表,得到用户对物品的评分矩阵。

3.1、构建推荐入口类,Recommend.java内容如下:

package recommand.hadoop.phl;

import java.io.IOException;

import java.net.URISyntaxException;

import java.util.HashMap;

import java.util.Map;

import java.util.regex.Pattern;

/**

<ul><li>推荐入口</li>

<li>@author root

*

*/

public class Recommend {

public static final String HDFS = "hdfs://10.10.44.92:9000";

public static final Pattern DELIMITER = Pattern.compile("[\t,]");

public static void main(String[] args) throws ClassNotFoundException, IOException, URISyntaxException, InterruptedException {

Map<String,String> path = new HashMap<String,String>();

path.put("Step1Input", HDFS + "/data/uItemTable.csv");

path.put("Step1Output", HDFS + "/output/step1");

path.put("Step2Input", path.get("Step1Output"));

path.put("Step2Output", HDFS + "/output/step2");

path.put("Step3Input1", path.get("Step1Output"));

path.put("Step3Output1", HDFS + "/output/step3_1");

path.put("Step3Input2", path.get("Step2Output"));

path.put("Step3Output2", HDFS + "/output/step3_2");

path.put("Step4Input1", path.get("Step3Output1"));

path.put("Step4Input2", path.get("Step3Output2"));

path.put("Step4Output", HDFS + "/output/step4");

Step1.runStep1(path);

Step2.runStep2(path);

Step3.runStep3_1(path);

Step3.runStep3_2(path);

Step4.runStep4(path);

System.exit(0);

}

}

3.2、管理hdfs的类,HDFSDao.java内容如下:

package recommand.hadoop.phl;

import java.io.IOException;

import java.net.URI;

import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.mapreduce.Job;

import sun.tools.tree.ThisExpression;

public class HDFSDao {

//HDFS访问地址

private static final String HDFS = "hdfs://10.10.44.92:9000/";

//hdfs路径

private String hdfsPath;

//Hadoop系统配置

private Configuration conf;

//运行job的名字

private String name;

public HDFSDao(Configuration conf) {

this.conf = conf;

}

public HDFSDao( Configuration conf,String name) {

this.conf = conf;

this.name = name;

}

public HDFSDao(String hdfs, Configuration conf,String name) {

this.hdfsPath = hdfs;

this.conf = conf;

this.name = name;

}

public Job conf() throws IOException{

Job job = Job.getInstance(this.conf,this.name);

return job;

}

public void rmr(String outUrl) throws IOException, URISyntaxException {

FileSystem fileSystem = FileSystem.get(new URI(outUrl),this.conf);

if(fileSystem.exists(new Path(outUrl))){

fileSystem.delete(new Path(outUrl), true);

System.out.println("outUrl "+outUrl);

}

}

}

3.3、按用户分组,计算所有物品出现的组合列表,得到用户对物品的评分矩阵,Step1.java,内容如下:

package recommand.hadoop.phl;

import java.io.IOException;

import java.net.URISyntaxException;

import java.util.Map;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

/**</li>

<li>按用户分组,计算所有物品出现的组合列表,得到用户对物品的评分矩阵</li>

<li>@author root

*

*/

public class Step1 {

public static class myMapper extends Mapper<Object,Text,IntWritable,Text> {

private final static IntWritable k = new IntWritable();

private final static Text v = new Text();

@Override

protected void map(Object key, Text value, Context context)

throws IOException, InterruptedException {

String[] tokens = Recommend.DELIMITER.split(value.toString());

int userID = Integer.parseInt(tokens[0]);

String itemID = tokens[1];

String pref = tokens[2];

k.set(userID);

v.set(itemID + ":" + pref);

context.write(k, v);

}

}

public static class myReducer extends Reducer<IntWritable, Text, IntWritable, Text>{

private final static Text v = new Text();

@Override

protected void reduce(IntWritable k2, Iterable<Text> v2s,Context context)throws IOException, InterruptedException {

StringBuilder sb = new StringBuilder();

for (Text v2 : v2s) {

sb.append("," + v2.toString());

}

v.set(sb.toString().replaceFirst(",", ""));

context.write(k2, v);

}

}

public static void runStep1(Map<String, String> path) throws IOException, URISyntaxException, ClassNotFoundException, InterruptedException {

String input = path.get("Step1Input");

String output = path.get("Step1Output");

//1.1读取hdfs文件

Configuration conf = new Configuration();

HDFSDao hdfsDao = new HDFSDao(input,conf,Step1.class.getSimpleName());

Job job = hdfsDao.conf();

//打包运行方法

job.setJarByClass(Step1.class);

//1.1读取文件 设置输入路径以及文件输入格式

FileInputFormat.addInputPath(job, new Path(input));

job.setInputFormatClass(TextInputFormat.class);

//指定自定义的Mapper类

job.setMapperClass(myMapper.class);

//指定Mapper输出的key value类型

job.setMapOutputKeyClass(IntWritable.class);

job.setMapOutputValueClass(Text.class);

//1.3分区

/* job.setPartitionerClass(HashPartitioner.class);

job.setNumReduceTasks(1);*/

//1.4排序 分组

//1.5归约

job.setCombinerClass(myReducer.class);

//2.1

//2.2指定自定义的Reduce类

job.setReducerClass(myReducer.class);

job.setOutputKeyClass(IntWritable.class);

job.setOutputValueClass(Text.class);

//2.3指定输出的路径

FileOutputFormat.setOutputPath(job, new Path(output));

job.setOutputFormatClass(TextOutputFormat.class);

hdfsDao.rmr(output);

job.waitForCompletion(true);

}

}

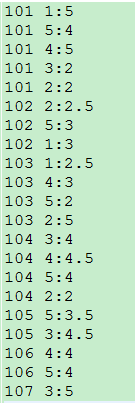

结果如下:

以第一条数据为例说明,用户1评价了三个商品分别是101,102,103,对应评分分别是:5,3,2.5。

3.4、对物品组合列表进行计数,建立物品的同现矩阵,Step2.java内容如下:

package recommand.hadoop.phl;

import java.io.IOException;

import java.net.URISyntaxException;

import java.util.Map;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class Step2 {

public static class myMapper extends Mapper<Object, Text, Text, IntWritable>{

private final static Text k = new Text();

private final static IntWritable v = new IntWritable(1);

@Override

protected void map(Object key, Text value, Context context)

throws IOException, InterruptedException {

//[1, 101:5, 102:3, 103:2.5]

String[] tokens = Recommend.DELIMITER.split(value.toString());

for (int i = 1; i < tokens.length; i++) {

String itemID = tokens[i].split(":")[0];//itemID 101

for (int j = 1; j < tokens.length; j++) {

String itemID2 = tokens[j].split(":")[0]; //101

k.set(itemID + ":" + itemID2);

context.write(k, v);

}

}

}

}

public static class myReducer extends Reducer<Text, IntWritable, Text, IntWritable>{

private final static IntWritable v = new IntWritable();

@Override

protected void reduce(Text k2, Iterable<IntWritable> v2s,

Context context) throws IOException, InterruptedException {

int count=0;

for (IntWritable v2 : v2s) {

count+=v2.get();

}

v.set(count);

context.write(k2, v);

}

}

public static void runStep2(Map<String, String> path) throws IOException, URISyntaxException, ClassNotFoundException, InterruptedException {

String input = path.get("Step2Input");

String output = path.get("Step2Output");

//1.1读取hdfs文件

Configuration conf = new Configuration();

HDFSDao hdfsDao = new HDFSDao(input,conf,Step2.class.getSimpleName());

Job job = hdfsDao.conf();

//打包运行方法

job.setJarByClass(Step2.class);

//1.1读取文件 设置输入路径以及文件输入格式

FileInputFormat.addInputPath(job, new Path(input));

job.setInputFormatClass(TextInputFormat.class);

//指定自定义的Mapper类

job.setMapperClass(myMapper.class);

//指定Mapper输出的key value类型

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

//1.3分区

/* job.setPartitionerClass(HashPartitioner.class);

job.setNumReduceTasks(1);*/

//1.4排序 分组

//1.5归约

job.setCombinerClass(myReducer.class);

//2.1

//2.2指定自定义的Reduce类

job.setReducerClass(myReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

//2.3指定输出的路径

FileOutputFormat.setOutputPath(job, new Path(output));

job.setOutputFormatClass(TextOutputFormat.class);

//检查是否已有相同输出,有则删除

hdfsDao.rmr(output);

job.waitForCompletion(true);

}

}

运行结果如下:

101:101 5

101:102 3

101:103 4

101:104 4

101:105 2

101:106 2

101:107 1

102:101 3

102:102 3

102:103 3

102:104 2

102:105 1

102:106 1

103:101 4

103:102 3

103:103 4

103:104 3

103:105 1

103:106 2

104:101 4

104:102 2

104:103 3

104:104 4

104:105 2

104:106 2

104:107 1

105:101 2

105:102 1

105:103 1

105:104 2

105:105 2

105:106 1

105:107 1

106:101 2

106:102 1

106:103 2

106:104 2

106:105 1

106:106 2

107:101 1

107:104 1

107:105 1

107:107 1

我们构建的同现矩阵是以两个商品为一组,以第二组为例说明,101:102 3意思是既对101商品评分又对102商品评分的有3个用户。为了方便阅读我博客的人,我把上述结果转化为数学中的矩阵,如下所示:

[101] [102] [103] [104] [105] [106] [107]

[101] 5 3 4 4 2 2 1

[102] 3 3 3 2 1 1 0

[103] 4 3 4 3 1 2 0

[104] 4 2 3 4 2 2 1

[105] 2 1 1 2 2 1 1

[106] 2 1 2 2 1 2 0

[107] 1 0 0 1 1 0 1

3.5、合并同现矩阵和评分矩阵,Step3.java内容如下:

package recommand.hadoop.phl;

import java.io.IOException;

import java.net.URISyntaxException;

import java.util.Map;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public class Step3 {

public static class myMapper1 extends Mapper<LongWritable, Text, IntWritable, Text>

{

private final static IntWritable k = new IntWritable();

private final static Text v = new Text();

@Override

protected void map(LongWritable key, Text value, Context context)

throws IOException, InterruptedException {

//101:101 5

String[] tokens = Recommend.DELIMITER.split(value.toString());

for (int i = 1; i < tokens.length; i++) {

String[] vector = tokens[i].split(":");

int itemID = Integer.parseInt(vector[0]);

String pref = vector[1];

k.set(itemID);

v.set(tokens[0] + ":" + pref);

context.write(k, v);

}

}

}

public static class myMapper2 extends Mapper<LongWritable, Text, Text,IntWritable>

{

private final static Text k = new Text();

private final static IntWritable v = new IntWritable();

@Override

protected void map(LongWritable key, Text value, Context context)

throws IOException, InterruptedException {

String[] tokens = Recommend.DELIMITER.split(value.toString());

k.set(tokens[0]);

v.set(Integer.parseInt(tokens[1]));

context.write(k, v);

}

}

public static void runStep3_1(Map<String, String> path) throws IOException, URISyntaxException, ClassNotFoundException, InterruptedException{

String input = path.get("Step3Input1");

String output = path.get("Step3Output1");

//1.1读取hdfs文件

Configuration conf = new Configuration();

HDFSDao hdfsDao = new HDFSDao(input,conf,Step3.class.getSimpleName());

Job job = hdfsDao.conf();

//打包运行方法

job.setJarByClass(Step2.class);

//1.1读取文件 设置输入路径以及文件输入格式

FileInputFormat.addInputPath(job, new Path(input));

job.setInputFormatClass(TextInputFormat.class);

//指定自定义的Mapper类

job.setMapperClass(myMapper1.class);

//指定Mapper输出的key value类型

job.setMapOutputKeyClass(IntWritable.class);

job.setMapOutputValueClass(Text.class);

//2.3指定输出的路径

FileOutputFormat.setOutputPath(job, new Path(output));

job.setOutputFormatClass(TextOutputFormat.class);

//检查是否已有相同输出,有则删除

hdfsDao.rmr(output);

job.waitForCompletion(true);

}

public static void runStep3_2(Map<String, String> path) throws IOException, URISyntaxException, ClassNotFoundException, InterruptedException{

String input = path.get("Step3Input2");

String output = path.get("Step3Output2");

//1.1读取hdfs文件

Configuration conf = new Configuration();

HDFSDao hdfsDao = new HDFSDao(input,conf,Step3.class.getSimpleName());

Job job = hdfsDao.conf();

//打包运行方法

job.setJarByClass(Step2.class);

//1.1读取文件 设置输入路径以及文件输入格式

FileInputFormat.addInputPath(job, new Path(input));

job.setInputFormatClass(TextInputFormat.class);

//指定自定义的Mapper类

job.setMapperClass(myMapper2.class);

//指定Mapper输出的key value类型

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

//2.3指定输出的路径

FileOutputFormat.setOutputPath(job, new Path(output));

job.setOutputFormatClass(TextOutputFormat.class);

//检查是否已有相同输出,有则删除

hdfsDao.rmr(output);

job.waitForCompletion(true);

}

}同现矩阵运行结果和3.4步给出的结果一样,用户评分矩阵如下:

以第一条为例说明,101商品用户1给评价了5分。转化成数据中矩阵如下:

[user1] [user2] [user3] [user4] [user5]

[101] 5 2 2 5 4

[102] 3 2.5 0 0 3

[103] 2.5 5 0 3 2

[104] 0 2 4 4 4

[105] 0 0 4.5 0 3.5

[106] 0 0 0 4 4

[107] 0 0 5 0 0

3.6、为了表示方便,我引入了一个类,Cooccurrence.java内容如下:

package recommand.hadoop.phl;

public class Cooccurrence {

private int itemID1;

private int itemID2;

private int num;

public Cooccurrence(int itemID1, int itemID2, int num) {

super();

this.itemID1 = itemID1;

this.itemID2 = itemID2;

this.num = num;

}

public int getItemID1() {

return itemID1;

}

public void setItemID1(int itemID1) {

this.itemID1 = itemID1;

}

public int getItemID2() {

return itemID2;

}

public void setItemID2(int itemID2) {

this.itemID2 = itemID2;

}

public int getNum() {

return num;

}

public void setNum(int num) {

this.num = num;

}

}

由于我们同现矩阵是以两个商品分组的,所以itemID1,itemID2,代表既评价itemID1又评价itemID2,num代表评价的用户数。3.7、计算推荐列表,Step4.java内容如下:

package recommand.hadoop.phl;

import java.io.IOException;

import java.net.URISyntaxException;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import recommand.hadoop.phl.Step1.myReducer;

public class Step4 {

public static class myMapper extends Mapper<LongWritable, Text, IntWritable, Text>{

private final static IntWritable k = new IntWritable();

private final static Text v = new Text();

private final static Map<Integer, List> cooccurrenceMatrix = new HashMap<Integer, List>();

@Override

protected void map(LongWritable key, Text values, Mapper<LongWritable, Text, IntWritable, Text>.Context context)

throws IOException, InterruptedException {

String[] tokens = Recommend.DELIMITER.split(values.toString());

//同现矩阵时候,v1存的是101 101,v2存的是5。用户评分矩阵,v1存的是101 v2存的是1 5.0

String[] v1 = tokens[0].split(":");

String[] v2 = tokens[1].split(":");

if (v1.length > 1) {// cooccurrence

int itemID1 = Integer.parseInt(v1[0]);

int itemID2 = Integer.parseInt(v1[1]);

int num = Integer.parseInt(tokens[1]);

List<Cooccurrence> list = null;

if (!cooccurrenceMatrix.containsKey(itemID1)) {

list = new ArrayList<Cooccurrence>();

} else {

list = cooccurrenceMatrix.get(itemID1);

}

list.add(new Cooccurrence(itemID1, itemID2, num));

System.out.println("<itemID1,{itemID1, itemID2, num}: "+itemID1+"{"+itemID1+" , "+itemID2+" , "+num+"}");

cooccurrenceMatrix.put(itemID1, list);

}

if (v2.length > 1) {// userVector

int itemID = Integer.parseInt(tokens[0]);

int userID = Integer.parseInt(v2[0]);

double pref = Double.parseDouble(v2[1]);

k.set(userID);

Iterator<Cooccurrence> iterator = cooccurrenceMatrix.get(itemID).iterator();

while(iterator.hasNext()){

Cooccurrence co = iterator.next();

v.set(co.getItemID2() + "," + pref * co.getNum());

System.out.println("ItemID2,pref * co.getNum()"+userID+":{"+co.getItemID2()+ "," + pref * co.getNum()+"}");

context.write(k, v);

}

/*for (Cooccurrence co : cooccurrenceMatrix.get(itemID)) {

v.set(co.getItemID2() + "," + pref * co.getNum());

context.write(k, v);

}*/

}

}

}

public static class myReducer extends Reducer<IntWritable, Text, IntWritable, Text>{

private final static Text v = new Text();

@Override

protected void reduce(IntWritable key, Iterable<Text> values,

Context context)throws IOException, InterruptedException {

Map<String, Double> result = new HashMap<String, Double>();

for(Text value:values){

String[] str = value.toString().split(",");

if (result.containsKey(str[0])) {

result.put(str[0], result.get(str[0]) + Double.parseDouble(str[1]));

}else{

result.put(str[0], Double.parseDouble(str[1]));

}

}

Iterator iter = result.keySet().iterator();

while (iter.hasNext()) {

String itemID = iter.next().toString();

System.out.println("itemID:"+itemID);

double score = result.get(itemID);

v.set(itemID + "," + score);

context.write(key, v);

}

}

}

public static void runStep4(Map<String, String> path) throws IOException, URISyntaxException, ClassNotFoundException, InterruptedException {

String input1 = path.get("Step4Input1");

String input2 = path.get("Step4Input2");

String output = path.get("Step4Output");

//1.1读取hdfs文件

Configuration conf = new Configuration();

HDFSDao hdfsDao = new HDFSDao(conf,Step1.class.getSimpleName());

Job job = hdfsDao.conf();

//打包运行方法

job.setJarByClass(Step1.class);

//1.1读取文件 设置输入路径以及文件输入格式

FileInputFormat.setInputPaths(job, new Path(input1), new Path(input2));

job.setInputFormatClass(TextInputFormat.class);

//指定自定义的Mapper类

job.setMapperClass(myMapper.class);

//指定Mapper输出的key value类型

job.setMapOutputKeyClass(IntWritable.class);

job.setMapOutputValueClass(Text.class);

//2.2指定自定义的Reduce类

job.setReducerClass(myReducer.class);

job.setOutputKeyClass(IntWritable.class);

job.setOutputValueClass(Text.class);

//2.3指定输出的路径

FileOutputFormat.setOutputPath(job, new Path(output));

job.setOutputFormatClass(TextOutputFormat.class);

hdfsDao.rmr(output);

job.waitForCompletion(true);

}

}计算结果如下所示:

1 107,5.0

1 106,18.0

1 105,15.5

1 104,33.5

1 103,39.0

1 102,31.5

1 101,44.0

2 107,4.0

2 106,20.5

2 105,15.5

2 104,36.0

2 103,41.5

2 102,32.5

2 101,45.5

3 107,15.5

3 106,16.5

3 105,26.0

3 104,38.0

3 103,24.5

3 102,18.5

3 101,40.0

4 107,9.5

4 106,33.0

4 105,26.0

4 104,55.0

4 103,53.5

4 102,37.0

4 101,63.0

5 107,11.5

5 106,34.5

5 105,32.0

5 104,59.0

5 103,56.5

5 102,42.5

5 101,68.0

以用户1为了说明,

1 107,5.0

1 106,18.0

1 105,15.5

1 104,33.5

1 103,39.0

1 102,31.5

1 101,44.0

通过计算,得到用户1多这七中商品的预测评分,其中101,102,103用户评价过,104,105,106,107还没有评价过,通过得分,我们发现这四个商品中评分最高的是104,其次是106…,可以把104商品推荐给用户1。

以上是关于基于项目的协同过过滤推荐的一个入门的介绍,后续文章会对代码做一个调优。

本文介绍了一种基于项目的协同过滤推荐算法实现过程,利用Hadoop MapReduce计算用户评分矩阵及物品同现矩阵,并最终生成推荐列表。

本文介绍了一种基于项目的协同过滤推荐算法实现过程,利用Hadoop MapReduce计算用户评分矩阵及物品同现矩阵,并最终生成推荐列表。

429

429

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?