1. Start with the official Oracle documentation

Reference:

http://download.oracle.com/docs/cd/E11882_01/rac.112/e16794/intro.htm#CWADD91998

Oracle Clusterware Software Concepts and Requirements

Oracle Clusterware uses voting disk files to provide fencing and cluster node membership determination. OCR provides cluster configuration information. You can place the Oracle Clusterware files on either Oracle ASM or on shared common disk storage. If you configure Oracle Clusterware on storage that does not provide file redundancy, then Oracle recommends that you configure multiple locations for OCR and voting disks. The voting disks and OCR are described as follows:

Oracle Clusterware uses voting disk files to determine which nodes are members of a cluster. You can configure voting disks on Oracle ASM, or you can configure voting disks on shared storage.

If you configure voting disks on Oracle ASM, then you do not need to manually configure the voting disks. Depending on the redundancy of your disk group, an appropriate number of voting disks are created.

If you do not configure voting disks on Oracle ASM, then for high availability, Oracle recommends that you have a minimum of three voting disks on physically separate storage. This avoids having a single point of failure. If you configure a single voting disk, then you must use external mirroring to provide redundancy.

You should have at least three voting disks, unless you have a storage device, such as a disk array that provides external redundancy. Oracle recommends that you do not use more than five voting disks. The maximum number of voting disks that is supported is 15.
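The counts quoted above can be turned into a quick sanity check. The following Python sketch is illustrative only: the function name check_voting_disk_count and its return strings are hypothetical, and the thresholds (3, 5, and 15) are simply the ones stated in the quoted documentation.

```python
def check_voting_disk_count(count, external_redundancy=False):
    """Classify a voting disk count against the guidance quoted above.

    Hypothetical helper: at least 3 disks unless the storage provides
    external redundancy, no more than 5 recommended, 15 supported at most.
    """
    if count < 1:
        return "invalid: at least one voting disk is required"
    if count > 15:
        return "unsupported: more than 15 voting disks"
    if count > 5:
        return "supported, but above the recommended maximum of 5"
    if count < 3 and not external_redundancy:
        return "not recommended: fewer than 3 without external redundancy"
    return "ok"


for n in (1, 3, 7):
    print(n, "->", check_voting_disk_count(n))
```

A single voting disk is only acceptable in this sketch when external_redundancy=True is passed, which mirrors the external mirroring requirement in the text.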

Oracle Clusterware uses the Oracle Cluster Registry (OCR) to store and manage information about the components that Oracle Clusterware controls, such as Oracle RAC databases, listeners, virtual IP addresses (VIPs), and services and any applications. OCR stores configuration information in a series of key-value pairs in a tree structure. To ensure cluster high availability, Oracle recommends that you define multiple OCR locations. In addition:

o You can have up to five OCR locations

o Each OCR location must reside on shared storage that is accessible by all of the nodes in the cluster

o You can replace a failed OCR location online if it is not the only OCR location

o You must update OCR through supported utilities such as Oracle Enterprise Manager, the Server Control Utility (SRVCTL), the OCR configuration utility (OCRCONFIG), or the Database Configuration Assistant (DBCA)

See Also:

Chapter 2, "Administering Oracle Clusterware" for more information about voting disks and OCR

Oracle Clusterware Network Configuration Concepts

Oracle Clusterware enables a dynamic Grid Infrastructure through the self-management of the network requirements for the cluster. Oracle Clusterware 11g release 2 (11.2) supports the use of dynamic host configuration protocol (DHCP) for all private interconnect addresses, as well as for most of the VIP addresses. DHCP provides dynamic configuration of the host's IP address, but it does not provide an optimal method of producing names that are useful to external clients.

When you are using Oracle RAC, all of the clients must be able to reach the database. This means that the VIP addresses must be resolved by the clients. This problem is solved by the addition of the Oracle Grid Naming Service (GNS) to the cluster. GNS is linked to the corporate domain name service (DNS) so that clients can easily connect to the cluster and the databases running there. Activating GNS in a cluster requires a DHCP service on the public network.

Implementing GNS

To implement GNS, you must collaborate with your network administrator to obtain an IP address on the public network for the GNS VIP. DNS uses the GNS VIP to forward requests for access to the cluster to GNS. The network administrator must delegate a subdomain in the network to the cluster. The subdomain forwards all requests for addresses in the subdomain to the GNS VIP.

GNS and the GNS VIP run on one node in the cluster. The GNS daemon listens on the GNS VIP using port 53 for DNS requests. Oracle Clusterware manages the GNS and the GNS VIP to ensure that they are always available. If the server on which GNS is running fails, then Oracle Clusterware fails GNS over, along with the GNS VIP, to another node in the cluster.

With DHCP on the network, Oracle Clusterware obtains an IP address from the server along with other network information, such as what gateway to use, what DNS servers to use, what domain to use, and what NTP server to use. Oracle Clusterware initially obtains the necessary IP addresses during cluster configuration and it updates the Oracle Clusterware resources with the correct information obtained from the DHCP server.

Single Client Access Name (SCAN)

Oracle RAC 11g release 2 (11.2) introduces the Single Client Access Name (SCAN). The SCAN is a single name that resolves to three IP addresses in the public network. When using GNS and DHCP, Oracle Clusterware configures the VIP addresses for the SCAN name that is provided during cluster configuration.

The node VIP and the three SCAN VIPs are obtained from the DHCP server when using GNS. If a new server joins the cluster, then Oracle Clusterware dynamically obtains the required VIP address from the DHCP server, updates the cluster resource, and makes the server accessible through GNS.

Example 1-1 shows the DNS entries that delegate a domain to the cluster.

# Delegate to gns on mycluster

mycluster.example.com NS myclustergns.example.com

#Let the world know to go to the GNS vip

myclustergns.example.com. 10.9.8.7

See Also:

Oracle Grid Infrastructure Installation Guide for details about establishing resolution through DNS

Configuring Addresses Manually

Alternatively, you can choose manual address configuration, in which you configure the following:

· One public host name for each node.

· One VIP address for each node.

You must assign a VIP address to each node in the cluster. Each VIP address must be on the same subnet as the public IP address for the node and should be an address that is assigned a name in the DNS. Each VIP address must also be unused and unpingable from within the network before you install Oracle Clusterware.

· Up to three SCAN addresses for the entire cluster.

Note:

The SCAN must resolve to at least one address on the public network. For high availability and scalability, Oracle recommends that you configure the SCAN to resolve to three addresses.
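As a rough way to check the SCAN rule in the note above, the Python sketch below resolves a name and classifies the result. The helper names resolve_addresses and assess_scan are hypothetical, and the SCAN name in the usage note is a made-up example; only socket.getaddrinfo from the standard library is used.

```python
import socket


def resolve_addresses(name):
    """Return the sorted, de-duplicated IP addresses a host name resolves to."""
    infos = socket.getaddrinfo(name, None, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def assess_scan(addresses):
    """Classify a SCAN by the number of distinct addresses it resolves to."""
    n = len(set(addresses))
    if n == 0:
        return "invalid: the SCAN must resolve to at least one address"
    if n < 3:
        return "works, but three addresses are recommended"
    if n == 3:
        return "ok"
    return "unexpected: more than three addresses"
```

On a host that can reach the cluster's DNS, a call such as assess_scan(resolve_addresses("mycluster-scan.example.com")) would report whether the (assumed) SCAN name meets the recommendation.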

See Also:

Your platform-specific Oracle Grid Infrastructure Installation Guide installation documentation for information about system requirements and configuring network addresses

Overview of Oracle Clusterware Platform-Specific Software Components

When Oracle Clusterware is operational, several platform-specific processes or services run on each node in the cluster. This section describes these various processes and services.

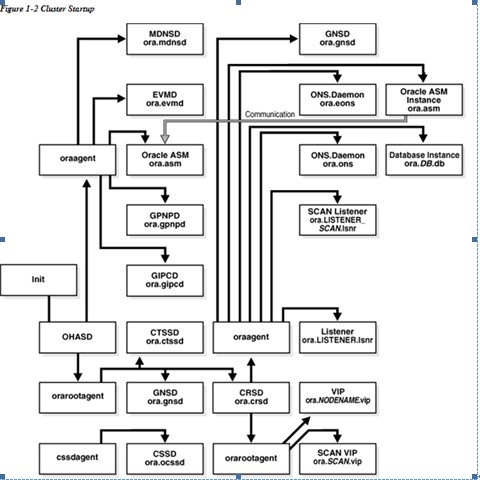

The Oracle Clusterware Stack

Oracle Clusterware consists of two separate stacks: an upper stack anchored by the Cluster Ready Services (CRS) daemon (crsd) and a lower stack anchored by the Oracle High Availability Services daemon (ohasd). These two stacks have several processes that facilitate cluster operations. The following sections describe these stacks in more detail:

· The Cluster Ready Services Stack

· The Oracle High Availability Services Stack

The Cluster Ready Services Stack

The list in this section describes the processes that comprise CRS. The list includes components that are processes on Linux and UNIX operating systems, or services on Windows.

· Cluster Ready Services (CRS): The primary program for managing high availability operations in a cluster.

The CRS daemon (crsd) manages cluster resources based on the configuration information that is stored in OCR for each resource. This includes start, stop, monitor, and failover operations. The crsd process generates events when the status of a resource changes. When you have Oracle RAC installed, the crsd process monitors the Oracle database instance, listener, and so on, and automatically restarts these components when a failure occurs.

· Cluster Synchronization Services (CSS): Manages the cluster configuration by controlling which nodes are members of the cluster and by notifying members when a node joins or leaves the cluster. If you are using certified third-party clusterware, then CSS processes interface with your clusterware to manage node membership information.

The cssdagent process monitors the cluster and provides I/O fencing. This service formerly was provided by Oracle Process Monitor Daemon (oprocd), also known as OraFenceService on Windows. A cssdagent failure may result in Oracle Clusterware restarting the node.

· Oracle ASM: Provides disk management for Oracle Clusterware and Oracle Database.

· Cluster Time Synchronization Service (CTSS): Provides time management in a cluster for Oracle Clusterware.

· Event Management (EVM): A background process that publishes events that Oracle Clusterware creates.

· Oracle Notification Service (ONS): A publish and subscribe service for communicating Fast Application Notification (FAN) events.

· Oracle Agent (oraagent): Extends clusterware to support Oracle-specific requirements and complex resources. This process runs server callout scripts when FAN events occur. This process was known as RACG in Oracle Clusterware 11g release 1 (11.1).

· Oracle Root Agent (orarootagent): A specialized oraagent process that helps crsd manage resources owned by root, such as the network, and the Grid virtual IP address.

The Cluster Synchronization Service (CSS), Event Management (EVM), and Oracle Notification Services (ONS) components communicate with other cluster component layers on other nodes in the same cluster database environment. These components are also the main communication links between Oracle Database, applications, and the Oracle Clusterware high availability components. In addition, these background processes monitor and manage database operations.

The Oracle High Availability Services Stack

This section describes the processes that comprise the Oracle High Availability Services stack. The list includes components that are processes on Linux and UNIX operating systems, or services on Windows.

· Cluster Logger Service (ologgerd): Receives information from all the nodes in the cluster and persists in a CHM Repository-based database. This service runs on only two nodes in a cluster.

· System Monitor Service (osysmond): The monitoring and operating system metric collection service that sends the data to the cluster logger service. This service runs on every node in a cluster.

· Grid Plug and Play (GPNPD): Provides access to the Grid Plug and Play profile, and coordinates updates to the profile among the nodes of the cluster to ensure that all of the nodes have the most recent profile.

· Grid Interprocess Communication (GIPC): A support daemon that enables Redundant Interconnect Usage.

· Multicast Domain Name Service (mDNS): Used by Grid Plug and Play to locate profiles in the cluster, as well as by GNS to perform name resolution. The mDNS process is a background process on Linux and UNIX, and a service on Windows.

· Oracle Grid Naming Service (GNS): Handles requests sent by external DNS servers, performing name resolution for names defined by the cluster.

2. Viewing OHASD resources

Oracle High Availability Services Daemon (OHASD): This process anchors the lower part of the Oracle Clusterware stack, which consists of processes that facilitate cluster operations.

When CRS starts in 11gR2, a message reports that ohasd has already started. So which resources does OHASD actually include? We can check with the following command:

[grid@racnode1 ~]$ crsctl stat res -init -t

---------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

---------------------------------------------------------

Cluster Resources

---------------------------------------------------------

ora.asm

1 ONLINE ONLINE racnode1 Started

ora.crsd

1 ONLINE ONLINE racnode1

ora.cssd

1 ONLINE ONLINE racnode1

ora.cssdmonitor

1 ONLINE ONLINE racnode1

ora.ctssd

1 ONLINE ONLINE racnode1 OBSERVER

ora.diskmon

1 ONLINE ONLINE racnode1

ora.drivers.acfs

1 ONLINE UNKNOWN racnode1

ora.evmd

1 ONLINE ONLINE racnode1

ora.gipcd

1 ONLINE ONLINE racnode1

ora.gpnpd

1 ONLINE ONLINE racnode1

ora.mdnsd

1 ONLINE ONLINE racnode1
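To spot resources that are not ONLINE without reading the table by eye, the output can be parsed with a few lines of Python. This is a minimal sketch that assumes the layout shown above (a resource name on its own line, followed by instance lines of the form "[n] TARGET STATE [SERVER] [DETAILS]"); the function names and the SAMPLE text are illustrative, not part of any Oracle tool.

```python
TARGETS = {"ONLINE", "OFFLINE"}


def parse_crsctl_table(text):
    """Parse `crsctl stat res -t` style output into a list of dicts."""
    rows = []
    current = None  # name of the resource the following instance lines belong to
    for raw in text.splitlines():
        fields = raw.split()
        if not fields or fields[0].startswith("-"):
            continue  # blank line or dashed separator
        if fields[0].isdigit():
            fields = fields[1:]  # drop the instance number
        if len(fields) >= 2 and fields[0] in TARGETS:
            if current is None:
                continue
            rows.append({
                "name": current,
                "target": fields[0],
                "state": fields[1],
                "server": fields[2] if len(fields) > 2 else "",
                "details": " ".join(fields[3:]),
            })
        elif len(fields) == 1:
            current = fields[0]  # a resource name such as ora.asm
        # anything else (column header, section title) is ignored
    return rows


def not_online(rows):
    """Return the names of resources whose STATE is not ONLINE."""
    return [row["name"] for row in rows if row["state"] != "ONLINE"]


SAMPLE = """\
NAME TARGET STATE SERVER STATE_DETAILS
Cluster Resources
ora.asm
1 ONLINE ONLINE racnode1 Started
ora.ctssd
1 ONLINE ONLINE racnode1 OBSERVER
ora.drivers.acfs
1 ONLINE UNKNOWN racnode1
"""

rows = parse_crsctl_table(SAMPLE)
print(not_online(rows))  # ['ora.drivers.acfs']
```

In practice the text would come from running the crsctl command and capturing its standard output, for example with subprocess.run.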

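For the 10g platform, I have already described some of the RAC resources in this blog post:

Some conceptual and fundamental knowledge about RAC

http://www.cndba.cn/Dave/article/1021

Let's look at each of these processes in turn:

(1) ora.asm: This is the ASM instance. In 10g, the OCR and voting disks were placed on other shared devices. In 11gR2, they are placed in ASM by default. Clusterware needs to read this information at startup, so the ASM instance must be started during cluster startup.

(2) ora.crsd, ora.cssd, and ora.evmd:

These are the three most important Clusterware processes.

In 10g, the final stage of the Clusterware installation asks you to run the root.sh script on each node. This script appends these three processes to the end of /etc/inittab so that Clusterware starts automatically at every system boot. If EVMD or CRSD fails, the system restarts that process automatically; if CSSD fails, the node reboots immediately.

In 11gR2, only ohasd is written to /etc/inittab.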

[grid@racnode1 init.d]$ cat /etc/inittab

h1:35:respawn:/etc/init.d/init.ohasd run >/dev/null 2>&1 </dev/null

As a result, the commands commonly used in 10g, such as /etc/init.d/init.crs, are gone. Only the /etc/init.d/init.ohasd script remains.
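The inittab entry shown above follows the standard SysV format id:runlevels:action:process. The respawn action tells init to restart the process whenever it exits, which is how ohasd is kept running. A small Python sketch (the function name is hypothetical) makes the four fields explicit:

```python
def parse_inittab_entry(line):
    """Split a SysV inittab entry into its four colon-separated fields."""
    # maxsplit=3 keeps any colons inside the process command intact.
    entry_id, runlevels, action, process = line.strip().split(":", 3)
    return {
        "id": entry_id,
        "runlevels": runlevels,
        "action": action,
        "process": process,
    }


entry = parse_inittab_entry(
    "h1:35:respawn:/etc/init.d/init.ohasd run >/dev/null 2>&1 </dev/null"
)
for key, value in entry.items():
    print(f"{key:10s} {value}")
```

For the entry above, the runlevels field is 35, meaning init.ohasd is started in runlevels 3 and 5.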

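OCSSD: This is the most critical Clusterware process. If it fails, the node reboots. It provides Cluster Synchronization Services (CSS), which monitor cluster health in real time through several heartbeat mechanisms and provide basic cluster services such as split-brain protection.

CRSD: The main process that implements high availability (HA). The service it provides is called Cluster Ready Services (CRS). Every component that needs high availability is registered in the OCR as a CRS resource during installation and configuration. CRSD uses the contents of the OCR to decide which processes to monitor, how to monitor them, and what to do when a problem occurs. In other words, CRSD tracks the state of CRS resources and starts, stops, monitors, and fails over those resources. By default, CRS tries to restart a resource five times and gives up if it still fails.

CRS resources include GSD (Global Services Daemon), ONS (Oracle Notification Service), VIPs, databases, instances, and services.

EVMD: Publishes the events generated by CRS. These events can be delivered to clients in two ways: ONS and callout scripts.

For details on what each of these three processes does, see the post

Some conceptual and fundamental knowledge about RAC

http://www.cndba.cn/Dave/article/1021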

(3) Grid Plug and Play (GPNPD):

Provides access to the Grid Plug and Play profile, and coordinates updates to the profile among the nodes of the cluster to ensure that all of the nodes have the most recent profile.

(4) Grid Interprocess Communication (GIPC):

A support daemon that enables Redundant Interconnect Usage.

(5) ora.mdnsd (Multicast Domain Name Service, mDNS):

Used by Grid Plug and Play to locate profiles in the cluster, as well as by GNS to perform name resolution. The mDNS process is a background process on Linux and UNIX, and a service on Windows.

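(6) Cluster Time Synchronization Service (CTSS):

Provides time management in a cluster for Oracle Clusterware. In the query output above, the state detail for CTSS is OBSERVER, meaning it is running in observer mode.

In 11gR2, time synchronization for RAC can be provided in two ways: NTP or CTSS. If the installer finds that NTP is not active, the Cluster Time Synchronization Service is installed in active mode and synchronizes the time across all nodes. If NTP is found to be configured, the Cluster Time Synchronization Service starts in observer mode, and Oracle Clusterware does not perform active time synchronization in the cluster.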

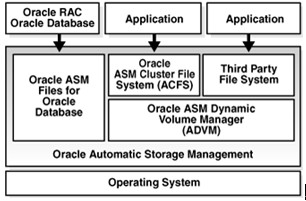

(7) Automatic Storage Management Cluster File System (Oracle ACFS):

Oracle Automatic Storage Management Cluster File System (Oracle ACFS) is a multi-platform, scalable file system, and storage management technology that extends Oracle Automatic Storage Management (Oracle ASM) functionality to support customer files maintained outside of Oracle Database. Oracle ACFS supports many database and application files, including executables, database trace files, database alert logs, application reports, BFILEs, and configuration files. Other supported files are video, audio, text, images, engineering drawings, and other general-purpose application file data.

An Oracle ACFS file system is a layer on Oracle ASM and is configured with Oracle ASM storage, as shown in Figure 5-1. Oracle ACFS leverages Oracle ASM functionality that enables:

· Oracle ACFS dynamic file system resizing

· Maximized performance through direct access to Oracle ASM disk group storage

· Balanced distribution of Oracle ACFS across Oracle ASM disk group storage for increased I/O parallelism

· Data reliability through Oracle ASM mirroring protection mechanisms

For more information, see:

http://download.oracle.com/docs/cd/E11882_01/server.112/e16102/asmfilesystem.htm#OSTMG31000

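3. Viewing CRS resources

In 11.2, CRSD resources are reclassified into two groups: Local Resources and Cluster Resources. The OHASD resources listed in the previous section appear under Cluster Resources.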

[grid@racnode1 ~]$ crsctl stat res -t

---------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

---------------------------------------------------------

Local Resources

---------------------------------------------------------

ora.CRS.dg

ONLINE ONLINE racnode1

ONLINE ONLINE racnode2

ora.DATA.dg

ONLINE ONLINE racnode1

ONLINE ONLINE racnode2

ora.FRA.dg

ONLINE ONLINE racnode1

ONLINE ONLINE racnode2

ora.LISTENER.lsnr

ONLINE ONLINE racnode1

ONLINE ONLINE racnode2

ora.asm

ONLINE ONLINE racnode1 Started

ONLINE ONLINE racnode2 Started

ora.eons

ONLINE ONLINE racnode1

ONLINE ONLINE racnode2

ora.gsd

OFFLINE OFFLINE racnode1

OFFLINE OFFLINE racnode2

ora.net1.network

ONLINE ONLINE racnode1

ONLINE ONLINE racnode2

ora.ons

ONLINE ONLINE racnode1

ONLINE ONLINE racnode2

ora.registry.acfs

ONLINE UNKNOWN racnode1

ONLINE ONLINE racnode2

---------------------------------------------------------

Cluster Resources

---------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE racnode2

ora.oc4j

1 OFFLINE OFFLINE

ora.racdb.db

1 ONLINE ONLINE racnode1 Open

2 ONLINE ONLINE racnode2 Open

ora.racnode1.vip

1 ONLINE ONLINE racnode1

ora.racnode2.vip

1 ONLINE ONLINE racnode2

ora.scan1.vip

1 ONLINE ONLINE racnode2

[grid@racnode1 ~]$

As the output above shows, 11gR2 also treats the network, disk groups, eONS, and ASM as resources.

Also note that the gsd and oc4j resources are offline. The explanation is as follows:

ora.gsd is OFFLINE by default if there is no 9i database in the cluster.

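ora.oc4j is OFFLINE in 11.2.0.1 as Database Workload Management (DBWLM) is unavailable. These can be ignored in 11gR2 RAC.

You can also view the resources with the following command (crs_stat is deprecated in 11gR2 but still available):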

[root@racnode1 ~]# crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora.CRS.dg ora....up.type ONLINE ONLINE racnode1

ora.DATA.dg ora....up.type ONLINE ONLINE racnode1

ora.FRA.dg ora....up.type ONLINE ONLINE racnode1

ora....ER.lsnr ora....er.type ONLINE ONLINE racnode1

ora....N1.lsnr ora....er.type ONLINE ONLINE racnode2

ora.asm ora.asm.type ONLINE ONLINE racnode1

ora.eons ora.eons.type ONLINE ONLINE racnode1

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE racnode1

ora.oc4j ora.oc4j.type OFFLINE OFFLINE

ora.ons ora.ons.type ONLINE ONLINE racnode1

ora.racdb.db ora....se.type ONLINE ONLINE racnode1

ora....SM1.asm application ONLINE ONLINE racnode1

ora....E1.lsnr application ONLINE ONLINE racnode1

ora....de1.gsd application OFFLINE OFFLINE

ora....de1.ons application ONLINE ONLINE racnode1

ora....de1.vip ora....t1.type ONLINE ONLINE racnode1

ora....SM2.asm application ONLINE ONLINE racnode2

ora....E2.lsnr application ONLINE ONLINE racnode2

ora....de2.gsd application OFFLINE OFFLINE

ora....de2.ons application ONLINE ONLINE racnode2

ora....de2.vip ora....t1.type ONLINE ONLINE racnode2

ora....ry.acfs ora....fs.type ONLINE ONLINE racnode2

ora.scan1.vip ora....ip.type ONLINE ONLINE racnode1

ora.scan2.vip ora....ip.type ONLINE ONLINE racnode2

[root@racnode1 ~]#

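4. Viewing dependencies between resources

For example, a disk group (DG) resource depends on ASM, and a VIP depends on the network. You can see this in the detailed resource attributes: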

[root@racnode1 ~]# crsctl stat res ora.DATA.dg -p

NAME=ora.DATA.dg

TYPE=ora.diskgroup.type

ACL=owner:grid:rwx,pgrp:oinstall:rwx,other::r--

ACTION_FAILURE_TEMPLATE=

ACTION_SCRIPT=

AGENT_FILENAME=%CRS_HOME%/bin/oraagent%CRS_EXE_SUFFIX%

ALIAS_NAME=

AUTO_START=never

CHECK_INTERVAL=300

CHECK_TIMEOUT=600

DEFAULT_TEMPLATE=

DEGREE=1

DESCRIPTION=CRS resource type definition for ASM disk group resource

ENABLED=1

LOAD=1

LOGGING_LEVEL=1

NLS_LANG=

NOT_RESTARTING_TEMPLATE=

OFFLINE_CHECK_INTERVAL=0

PROFILE_CHANGE_TEMPLATE=

RESTART_ATTEMPTS=5

SCRIPT_TIMEOUT=60

START_DEPENDENCIES=hard(ora.asm) pullup(ora.asm)

START_TIMEOUT=900

STATE_CHANGE_TEMPLATE=

STOP_DEPENDENCIES=hard(intermediate:ora.asm)

STOP_TIMEOUT=180

UPTIME_THRESHOLD=1d

USR_ORA_ENV=

USR_ORA_OPI=false

USR_ORA_STOP_MODE=

VERSION=11.2.0.1.0

[grid@racnode1 ~]$ crsctl stat res ora.racnode1.vip -p

NAME=ora.racnode1.vip

TYPE=ora.cluster_vip_net1.type

ACL=owner:root:rwx,pgrp:root:r-x,other::r--,group:oinstall:r-x,user:grid:r-x

ACTION_FAILURE_TEMPLATE=

ACTION_SCRIPT=

ACTIVE_PLACEMENT=1

AGENT_FILENAME=%CRS_HOME%/bin/orarootagent%CRS_EXE_SUFFIX%

AUTO_START=restore

CARDINALITY=1

CHECK_INTERVAL=1

DEFAULT_TEMPLATE=PROPERTY(RESOURCE_CLASS=vip)

DEGREE=1

DESCRIPTION=Oracle VIP resource

ENABLED=1

FAILOVER_DELAY=0

FAILURE_INTERVAL=0

FAILURE_THRESHOLD=0

HOSTING_MEMBERS=racnode1

LOAD=1

LOGGING_LEVEL=1

NLS_LANG=

NOT_RESTARTING_TEMPLATE=

OFFLINE_CHECK_INTERVAL=0

PLACEMENT=favored

PROFILE_CHANGE_TEMPLATE=

RESTART_ATTEMPTS=0

SCRIPT_TIMEOUT=60

SERVER_POOLS=*

START_DEPENDENCIES=hard(ora.net1.network) pullup(ora.net1.network)

START_TIMEOUT=0

STATE_CHANGE_TEMPLATE=

STOP_DEPENDENCIES=hard(ora.net1.network)

STOP_TIMEOUT=0

UPTIME_THRESHOLD=1h

USR_ORA_ENV=

USR_ORA_VIP=racnode1-vip

VERSION=11.2.0.1.0
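The START_DEPENDENCIES and STOP_DEPENDENCIES attributes in the two listings above can be extracted programmatically. The Python sketch below is a minimal illustration, assuming the NAME=value layout printed by crsctl stat res -p; the function names are hypothetical, and modifiers such as intermediate: are simply stripped rather than interpreted.

```python
import re


def parse_resource_profile(text):
    """Parse NAME=value lines from `crsctl stat res <name> -p` output into a dict."""
    profile = {}
    for line in text.splitlines():
        line = line.strip()
        if "=" in line:
            key, value = line.split("=", 1)  # values may themselves contain '='
            profile[key] = value
    return profile


def parse_dependencies(spec):
    """Turn 'hard(ora.asm) pullup(ora.asm)' into {'hard': [...], 'pullup': [...]}."""
    deps = {}
    for kind, body in re.findall(r"(\w+)\(([^)]*)\)", spec):
        # Drop any modifier prefix, e.g. 'intermediate:ora.asm' -> 'ora.asm'.
        names = [item.strip().split(":")[-1] for item in body.split(",") if item.strip()]
        deps.setdefault(kind, []).extend(names)
    return deps


SAMPLE = """\
NAME=ora.DATA.dg
TYPE=ora.diskgroup.type
START_DEPENDENCIES=hard(ora.asm) pullup(ora.asm)
STOP_DEPENDENCIES=hard(intermediate:ora.asm)
"""

profile = parse_resource_profile(SAMPLE)
print(profile["NAME"], parse_dependencies(profile["START_DEPENDENCIES"]))
```

Running the same two functions over the ora.racnode1.vip listing would report ora.net1.network as both the hard and pullup start dependency.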

------------------------------------------------------------------------------

QQ: 492913789

Email:ahdba@qq.com

Blog:http://www.cndba.cn/dave
