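A Python implementation of an inverted index

- spider.py crawls 10 bilingual (Chinese and English) Andersen fairy tales and stores them in the "documents_cn" directory

- inverted_index_cn.py builds an inverted index over the documents in the "documents_cn" directory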

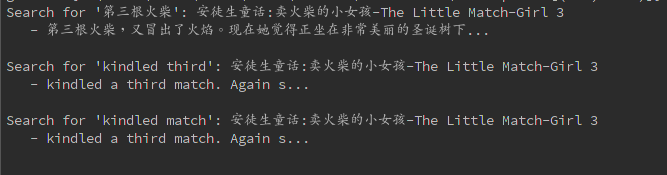

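- Query the positions of "第三根火柴" ("the third match"), "kindled third", and "kindled match"

- The results are as follows

Note: the search function first looks for the query as a phrase (consecutive query words, Chinese or English, are at distance 1). Only if that finds nothing does it fall back to proximity matching (distance 2, then distance 3).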
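
The phrase-then-proximity rule in the note can be sketched on plain lists of token positions. This is a minimal illustration rather than the script's own code: it is written for Python 3 (the listings in this post are Python 2), and the names `match_at_distance` and `phrase_then_proximity` as well as the sample positions are made up for the example.

```python
from functools import reduce


def match_at_distance(positions_per_word, distance):
    """Return start positions where consecutive query words are exactly
    `distance` tokens apart. positions_per_word[i] holds the token
    positions of the i-th query word inside one document."""
    if not positions_per_word:
        return []
    # Shift the running set by `distance` and intersect with the next word.
    # What survives are positions of the last query word.
    last = reduce(
        lambda acc, nxt: {p + distance for p in acc} & set(nxt),
        positions_per_word[1:],
        set(positions_per_word[0]),
    )
    # Walk back from the last word to the first word of each match.
    span = (len(positions_per_word) - 1) * distance
    return sorted(p - span for p in last)


def phrase_then_proximity(positions_per_word, max_distance=3):
    """Try distance 1 (phrase) first, then 2..max_distance (proximity)."""
    for distance in range(1, max_distance + 1):
        starts = match_at_distance(positions_per_word, distance)
        if starts:
            return distance, starts
    return None, []


# Hypothetical token positions of three query words in one document.
adjacent = [[4, 20], [5, 30], [6, 40]]  # words sit at 4, 5, 6
spread = [[4, 20], [6, 30], [8, 40]]    # words sit at 4, 6, 8
print(phrase_then_proximity(adjacent))  # (1, [4])
print(phrase_then_proximity(spread))    # (2, [4])
```

Note that "distance 2" here means exactly two positions apart, not "at most two", which matches the description in the note.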

spider.py

from lxml import html
import os
import sys

# Python 2 only: make UTF-8 the default codec for implicit str/unicode conversion
reload(sys)
sys.setdefaultencoding('utf-8')

seed_url = u"http://www.kekenet.com/read/essay/ats/"
x = html.parse(seed_url)
spans = x.xpath("*//ul[@id='menu-list']//li/h2/a")

for span in spans[:10]:  # only the first 10 stories
    details_url = span.xpath("attribute::href")[0]
    xx = html.parse(details_url)
    # file name: the story title with spaces replaced by underscores
    name = 'documents_cn//'+span.text.replace(u' ', u'_')
    f = open(name, 'a')
    try:
        contents = xx.xpath("//div[@id='article']//p/text()")
        for content in contents:
            if len(str(content)) > 1:  # skip empty or one-byte text nodes
                f.write(content.encode('raw_unicode_escape')+'\n')
    except Exception, e:
        # drop the partially written file if anything goes wrong
        print "wrong!!!!", e
        f.close()
        os.remove(name)
    else:
        f.close()
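
The saving step of spider.py (title to file name, skip trivial fragments, delete the file on failure) can be tried without any network access. The following is a rough Python 3 sketch under stated assumptions: `save_story` is a hypothetical helper, the paragraphs are made-up sample data, the file is opened in write mode rather than append mode, and text is written as UTF-8 instead of going through `raw_unicode_escape`.

```python
import os
import tempfile


def save_story(directory, title, paragraphs):
    """Write the non-trivial paragraphs of one story to directory/title,
    with spaces in the title replaced by underscores. The partial file
    is removed if writing fails."""
    path = os.path.join(directory, title.replace(" ", "_"))
    try:
        with open(path, "w", encoding="utf-8") as f:
            for paragraph in paragraphs:
                if len(paragraph) > 1:  # skip empty or one-character fragments
                    f.write(paragraph + "\n")
    except Exception:
        if os.path.exists(path):
            os.remove(path)
        raise
    return path


# Made-up sample data standing in for the crawled paragraphs.
sample = ["She struck a match.", "\n", "她又擦了一根火柴。"]
with tempfile.TemporaryDirectory() as tmp:
    saved = save_story(tmp, "The Little Match Girl", sample)
    with open(saved, encoding="utf-8") as f:
        lines = f.read().splitlines()

print(os.path.basename(saved))  # The_Little_Match_Girl
print(len(lines))               # 2
```

Using `os.path.join` instead of concatenating `'documents_cn//'` is a design choice of the sketch; both produce a usable path on common platforms.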

inverted_index_cn.py

# coding:utf-8
import os
import jieba
import re
import sys

reload(sys)
sys.setdefaultencoding('utf-8')

_STOP_WORDS = frozenset([
    'a', 'about', 'above', 'across', 'after', 'afterwards', 'again',
    'against', 'all', 'almost', 'alone', 'along', 'already', 'also', 'although',
    'always', 'am', 'among', 'amongst', 'amount', 'an', 'and', 'another',
    'any', 'anyhow', 'anyone', 'anything', 'anyway', 'anywhere', 'are', 'around', 'as',
    'at', 'back', 'be', 'became', 'because', 'become', 'becomes', 'becoming', 'been',
    'before', 'beforehand', 'behind', 'being', 'below', 'beside', 'besides',
    'between', 'beyond', 'bill', 'both', 'bottom', 'but', 'by', 'call', 'can',
    'cannot', 'cant', 'co', 'con', 'could', 'couldnt', 'cry', 'de', 'describe',
    'detail', 'do', 'done', 'down', 'due', 'during', 'each', 'eg', 'eight',
    'either', 'eleven', 'else', 'elsewhere', 'empty', 'enough', 'etc', 'even',
    'ever', 'every', 'everyone', 'everything', 'everywhere', 'except', 'few',
    'fifteen', 'fifty', 'fill', 'find', 'fire', 'first', 'five', 'for', 'former',
    'formerly', 'forty', 'found', 'four', 'from', 'front', 'full', 'further', 'get',
    'give', 'go', 'had', 'has', 'hasnt', 'have', 'he', 'hence', 'her', 'here',
    'hereafter', 'hereby', 'herein', 'hereupon', 'hers', 'herself', 'him',
    'himself', 'his', 'how', 'however', 'hundred', 'ie', 'if', 'in', 'inc',
    'indeed', 'interest', 'into', 'is', 'it', 'its', 'itself', 'keep', 'last',
    'latter', 'latterly', 'least', 'less', 'ltd', 'made', 'many', 'may', 'me',
    'meanwhile', 'might', 'mill', 'mine', 'more', 'moreover', 'most', 'mostly',
    'move', 'much', 'must', 'my', 'myself', 'name', 'namely', 'neither', 'never',
    'nevertheless', 'next', 'nine', 'no', 'nobody', 'none', 'noone', 'nor', 'not',
    'nothing', 'now', 'nowhere', 'of', 'off', 'often', 'on', 'once', 'one', 'only',
    'onto', 'or', 'other', 'others', 'otherwise', 'our', 'ours', 'ourselves', 'out',
    'over', 'own', 'part', 'per', 'perhaps', 'please', 'put', 'rather', 're', 'same',
    'see', 'seem', 'seemed', 'seeming', 'seems', 'serious', 'several', 'she',
    'should', 'show', 'side', 'since', 'sincere', 'six', 'sixty', 'so', 'some',
    'somehow', 'someone', 'something', 'sometime', 'sometimes', 'somewhere',
    'still', 'such', 'system', 'take', 'ten', 'than', 'that', 'the', 'their',
    'them', 'themselves', 'then', 'thence', 'there', 'thereafter', 'thereby',
    'therefore', 'therein', 'thereupon', 'these', 'they', 'thick', 'thin', 'third',
    'this', 'those', 'though', 'three', 'through', 'throughout', 'thru', 'thus',
    'to', 'together', 'too', 'top', 'toward', 'towards', 'twelve', 'twenty', 'two',
    'un', 'under', 'until', 'up', 'upon', 'us', 'very', 'via', 'was', 'we', 'well',
    'were', 'what', 'whatever', 'when', 'whence', 'whenever', 'where', 'whereafter',
    'whereas', 'whereby', 'wherein', 'whereupon', 'wherever', 'whether', 'which',
    'while', 'whither', 'who', 'whoever', 'whole', 'whom', 'whose', 'why', 'will',
    'with', 'within', 'without', 'would', 'yet', 'you', 'your', 'yours', 'yourself',
    'yourselves'])

def word_split(text):
    word_list = []
    pattern = re.compile(u'[\u4e00-\u9fa5]+')
    jieba_list = list(jieba.cut(text))
    cursor = 0  # character offset in text from which the next token is searched
    for c in jieba_list:
        # search from the end of the previous token so that a repeated word
        # gets the offset of this occurrence, not of the first one
        offset = text.index(c, cursor)
        cursor = offset + len(c)
        if pattern.search(c):  # if Chinese
            word_list.append((len(word_list), (offset, c)))
            continue
        if c.isalnum():  # if English or number
            word_list.append((len(word_list), (offset, c.lower())))  # include normalize
    return word_list

def words_cleanup(words):
    cleaned_words = []
    for index, (offset, word) in words:  # words-(word index for search,(letter offset for display,word))
        if word in _STOP_WORDS:
            continue
        cleaned_words.append((index, (offset, word)))
    return cleaned_words

def word_index(text):
    words = word_split(text)
    words = words_cleanup(words)
    return words

def inverted_index(text):
    inverted = {}
    for index, (offset, word) in word_index(text):
        locations = inverted.setdefault(word, [])
        locations.append((index, offset))
    return inverted

def inverted_index_add(inverted, doc_id, doc_index):
    for word, locations in doc_index.iteritems():
        indices = inverted.setdefault(word, {})
        indices[doc_id] = locations
    return inverted

def search(inverted, query):
    # query words that occur in the index (word_index already dropped stop words)
    words = [word for _, (offset, word) in word_index(query) if word in inverted]
    results = [set(inverted[word].keys()) for word in words]
    # only documents that contain every query word can match
    doc_set = reduce(lambda x, y: x & y, results) if results else set()
    precise_doc_dic = {}
    for distance in (1, 2, 3):  # 1: phrase query; 2 and 3: proximity query
        for doc in doc_set:
            index_list = [[indoff[0] for indoff in inverted[word][doc]] for word in words]
            offset_list = [[indoff[1] for indoff in inverted[word][doc]] for word in words]
            precise_doc_dic = precise(precise_doc_dic, doc, index_list, offset_list, distance)
        if precise_doc_dic:
            break  # matches found at this distance, so no wider search is needed
    return precise_doc_dic

def precise(precise_doc_dic, doc, index_list, offset_list, distance):
    # shift each word's positions by distance and intersect with the next word's
    # positions; what remains are positions of the last query word
    last_index = reduce(lambda x, y: set(map(lambda old: old + distance, x)) & set(y), index_list)
    # map each match back to the position of the first query word
    phrase_index = [x - (len(index_list) - 1) * distance for x in last_index]
    if phrase_index:
        phrase_offset = []
        for po in phrase_index:
            # offset_list[0] holds the character offsets of the first query word
            phrase_offset.append(offset_list[0][index_list[0].index(po)])
        precise_doc_dic[doc] = phrase_offset
    return precise_doc_dic

if __name__ == '__main__':
    # Build Inverted-Index for documents
    inverted = {}
    # documents = {}
    #
    # doc1 = u"开发者可以指定自己自定义的词典,以便包含jieba词库里没有的词"
    # doc2 = u"军机处长到底是谁,Python Perl"
    #
    # documents.setdefault("doc1", doc1)
    # documents.setdefault("doc2", doc2)
    documents = {}
    for filename in os.listdir('documents_cn'):
        f = open('documents_cn//' + filename).read()
        documents.setdefault(filename.decode('utf-8'), f)

    for doc_id, text in documents.iteritems():
        doc_index = inverted_index(text)
        inverted_index_add(inverted, doc_id, doc_index)

    # Print Inverted-Index
    for word, doc_locations in inverted.iteritems():
        print word, doc_locations

    # Search something and print results
    queries = ['第三根火柴', 'kindled third', 'kindled match']
    for query in queries:
        result_docs = search(inverted, query)
        print "Search for '%s': %s" % (query, u','.join(result_docs.keys()))  # %s formats with str(), %r with repr()

        def extract_text(doc, index):
            return documents[doc].decode('utf-8')[index:index + 30].replace('\n', ' ')

        if result_docs:
            for doc, offsets in result_docs.items():
                for offset in offsets:
                    print ' - %s...' % extract_text(doc, offset)
        else:
            print 'Nothing found!'
        print
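
To see the shape of the index without installing jieba or crawling anything, here is a small Python 3 sketch that builds the same kind of structure, `{word: {doc_id: [(token_index, char_offset), ...]}}`, from in-memory strings. It is an illustration under assumptions, not the script above: the tokenizer is a plain regular expression that treats every ASCII word and every single CJK character as one token (jieba would produce multi-character Chinese words), no stop words are removed, and `build_index` and the sample documents are made up for the example.

```python
import re

# Assumption: one token per ASCII word or per single CJK character.
TOKEN = re.compile(r"[A-Za-z0-9]+|[\u4e00-\u9fa5]")


def build_index(documents):
    """Map each token to {doc_id: [(token_index, char_offset), ...]}."""
    inverted = {}
    for doc_id, text in documents.items():
        for token_index, match in enumerate(TOKEN.finditer(text)):
            word = match.group().lower()  # normalize English tokens
            postings = inverted.setdefault(word, {})
            # match.start() is the character offset of this occurrence
            postings.setdefault(doc_id, []).append((token_index, match.start()))
    return inverted


# Made-up sample documents.
docs = {
    "doc1": "Python Perl python",
    "doc2": "第三根火柴 match",
}
index = build_index(docs)
print(index["python"])  # {'doc1': [(0, 0), (2, 12)]}
print(index["火"])      # {'doc2': [(3, 3)]}
print(index["match"])   # {'doc2': [(5, 6)]}
```

The token index is what phrase and proximity matching compare, while the character offset is only used to cut a snippet out of the original text for display.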
