Note: you must use the IK Analyzer 2012FF_hf1.zip package from IK Analyzer's download list. Other versions throw errors when used together with Lucene 4.10.

I tested this with IntelliJ IDEA 12.


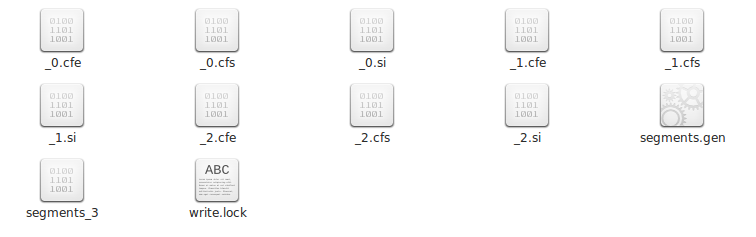

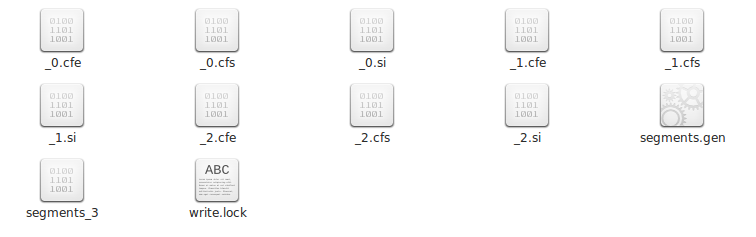


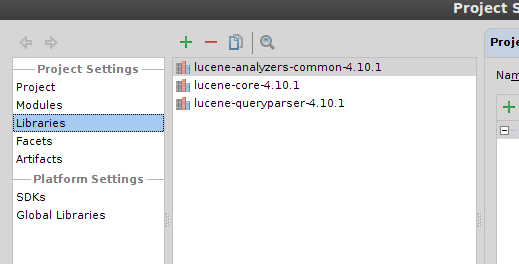

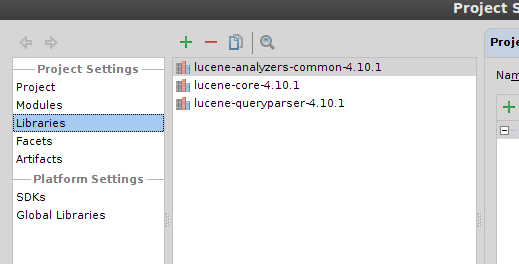

Create the Java project

Create a project named HelloLucene and import several Lucene libraries via "File" -> "Project Structure" ->

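Unzip IK Analyzer 2012FF_hf1.zip and copy the extracted source code into the src directory. Put the dictionary and configuration files in src as well. The final layout is shown in the screenshot.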
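Before running the examples, you may want to confirm that the copied configuration and dictionary files actually reach the runtime classpath. The sketch below is a hypothetical helper and is not part of IK Analyzer or Lucene. It assumes that IK reads `IKAnalyzer.cfg.xml` (its configuration file) and `stopword.dic` (the stop-word dictionary named in the demo's output) from the classpath root, which is what placing them directly in src implies. Treat that as an assumption about your setup.

```java
import java.net.URL;

// Hypothetical helper (not part of IK Analyzer or Lucene): checks whether files
// that IK is assumed to load from the classpath root are actually visible.
class IkResourceCheck {

    /** Returns true if a resource with this name is found on the classpath. */
    static boolean onClasspath(String name) {
        URL url = IkResourceCheck.class.getClassLoader().getResource(name);
        return url != null;
    }

    public static void main(String[] args) {
        // Assumed file names: IKAnalyzer.cfg.xml (IK's configuration file) and
        // stopword.dic (the stop-word dictionary reported in the demo's output).
        String[] names = {"IKAnalyzer.cfg.xml", "stopword.dic"};
        for (String name : names) {
            System.out.println(name + (onClasspath(name) ? ": found" : ": MISSING"));
        }
    }
}
```

Run it from the same module as the examples. A `MISSING` line suggests the file was not copied into the compiled output.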

A first example:

`IKAnalyzerDemo.java` is an example I found elsewhere. It is very similar to IK's official example. Its contents:

```java
package org.apache.lucene.demo;

import java.io.IOException;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.queryparser.classic.ParseException;
import org.apache.lucene.queryparser.classic.QueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.RAMDirectory;
import org.apache.lucene.util.Version;
import org.wltea.analyzer.lucene.IKAnalyzer;

public class IKAnalyzerDemo {

    public static void main(String[] args) throws IOException {
        // Build the index (in memory)
        String text1 = "IK Analyzer是一个结合词典分词和文法分词的中文分词开源工具包。它使用了全新的正向迭代最细粒度切分算法。";
        String text2 = "中文分词工具包可以和lucene是一起使用的";
        String text3 = "中文分词,你妹";
        String fieldName = "contents";
        Analyzer analyzer = new IKAnalyzer();

        RAMDirectory directory = new RAMDirectory();
        IndexWriterConfig writerConfig = new IndexWriterConfig(Version.LUCENE_34, analyzer);
        IndexWriter indexWriter = new IndexWriter(directory, writerConfig);

        // Old-style Field constructor with Field.Index (deprecated in Lucene 4.x)
        Document document1 = new Document();
        document1.add(new Field("ID", "1", Field.Store.YES, Field.Index.NOT_ANALYZED));
        document1.add(new Field(fieldName, text1, Field.Store.YES, Field.Index.ANALYZED));
        indexWriter.addDocument(document1);

        Document document2 = new Document();
        document2.add(new Field("ID", "2", Field.Store.YES, Field.Index.NOT_ANALYZED));
        document2.add(new Field(fieldName, text2, Field.Store.YES, Field.Index.ANALYZED));
        indexWriter.addDocument(document2);

        Document document3 = new Document();
        document3.add(new Field("ID", "3", Field.Store.YES, Field.Index.NOT_ANALYZED));
        document3.add(new Field(fieldName, text3, Field.Store.YES, Field.Index.ANALYZED));
        indexWriter.addDocument(document3);

        indexWriter.close();

        // Search (IndexReader.open is deprecated in 4.x in favor of DirectoryReader.open)
        IndexReader indexReader = IndexReader.open(directory);
        IndexSearcher searcher = new IndexSearcher(indexReader);
        String request = "中文分词工具包";
        QueryParser parser = new QueryParser(Version.LUCENE_40, fieldName, analyzer);
        // Every query term must match (QueryParser's default is OR)
        parser.setDefaultOperator(QueryParser.AND_OPERATOR);
        try {
            Query query = parser.parse(request);
            TopDocs topDocs = searcher.search(query, 5);
            System.out.println("命中数:" + topDocs.totalHits);
            for (ScoreDoc doc : topDocs.scoreDocs) {
                Document d = searcher.doc(doc.doc);
                System.out.println("内容:" + d.get(fieldName));
            }
        } catch (ParseException e) {
            e.printStackTrace();
        } finally {
            if (indexReader != null) {
                try {
                    indexReader.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            if (directory != null) {
                try {
                    directory.close();
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }
    }
}
```

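Output (`命中数` means "number of hits" and `内容` means "content"; the first line is IK's log message "loading extension stop-word dictionary"):

```
加载扩展停止词典:stopword.dic
命中数:2
内容:中文分词工具包可以和lucene是一起使用的
内容:IK Analyzer是一个结合词典分词和文法分词的中文分词开源工具包。它使用了全新的正向迭代最细粒度切分算法。
```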
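The search returns 2 hits, not 3. A likely explanation involves `setDefaultOperator(QueryParser.AND_OPERATOR)`. With that setting, a document must contain every term the analyzer extracts from the query. QueryParser's default OR operator would accept any one of them. The third document ("中文分词,你妹") presumably lacks the 工具包 term, so AND excludes it.

The toy model below illustrates that difference. It is plain Java, not Lucene code, and the term lists are hypothetical stand-ins for IK's real segmentation, which may differ.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Toy model, not Lucene code: shows how the default operator changes which
// documents match a multi-term query.
class DefaultOperatorDemo {

    /** AND: the document must contain every query term; OR: at least one. */
    static boolean matches(Set<String> docTerms, List<String> queryTerms, boolean useAnd) {
        if (useAnd) {
            return docTerms.containsAll(queryTerms);
        }
        for (String term : queryTerms) {
            if (docTerms.contains(term)) {
                return true;
            }
        }
        return false;
    }

    static Set<String> terms(String... ts) {
        return new HashSet<>(Arrays.asList(ts));
    }

    public static void main(String[] args) {
        // Hypothetical terms for the query and the three documents;
        // IK's actual tokens may differ.
        List<String> query = Arrays.asList("中文", "分词", "工具包");
        List<Set<String>> docs = Arrays.asList(
                terms("中文", "分词", "开源", "工具包", "算法"),
                terms("中文", "分词", "工具包", "lucene"),
                terms("中文", "分词", "你妹"));
        for (boolean useAnd : new boolean[] {true, false}) {
            int hits = 0;
            for (Set<String> doc : docs) {
                if (matches(doc, query, useAnd)) {
                    hits++;
                }
            }
            System.out.println((useAnd ? "AND" : "OR") + " hits: " + hits);
        }
    }
}
```

Running it prints `AND hits: 2` followed by `OR hits: 3`. Under these assumed terms, switching the parser to OR would let the third document match as well.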

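This first example uses APIs that are now discouraged:

1. The `Field(name, value, Field.Store, Field.Index)` constructor is deprecated. Lucene 4.x provides typed fields such as `TextField` and `StringField` instead.

2. The way a `QueryParser` is constructed has also changed: the second example creates it without a `Version` argument.

Below is an example that follows the newer API more closely.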

Second example:

The `MyIndex` class creates the index:

```java
import java.io.File;
import java.io.IOException;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field.Store;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;
import org.wltea.analyzer.lucene.IKAnalyzer;

public class MyIndex {

    public static void main(String[] args) {
        String ID;
        String content;

        ID = "1231";
        content = "BuzzFeed has compiled an amazing array of "
                + "ridiculously strange bridesmaid snapshots, courtesy of Awkward Family Photos. ";
        indexPost(ID, content);

        ID = "1234";
        content = "Lucene是apache软件基金会4 jakarta项目组的一个子项目,是一个开放源代码的全文检索引擎工具包";
        indexPost(ID, content);

        ID = "1235";
        content = "Lucene不是一个完整的全文索引应用,而是是一个用Java写的全文索引引擎工具包,它可以方便的嵌入到各种应用中实现";
        indexPost(ID, content);
    }

    // Opens a new writer per document: simple, but slow for many documents.
    public static void indexPost(String ID, String content) {
        File indexDir = new File("/home/letian/lucene-test/index");
        Analyzer analyzer = new IKAnalyzer();

        TextField postIdField = new TextField("id", ID, Store.YES); // original note: don't use StringField
        TextField postContentField = new TextField("content", content, Store.YES);

        Document doc = new Document();
        doc.add(postIdField);
        doc.add(postContentField);

        IndexWriterConfig iwConfig = new IndexWriterConfig(Version.LUCENE_4_10_1, analyzer);
        // Create the index if it does not exist, otherwise append to it
        // (running the program twice therefore indexes every post twice).
        iwConfig.setOpenMode(IndexWriterConfig.OpenMode.CREATE_OR_APPEND);

        // try-with-resources closes the writer and the directory even on failure
        try (Directory fsDirectory = FSDirectory.open(indexDir);
             IndexWriter indexWriter = new IndexWriter(fsDirectory, iwConfig)) {
            indexWriter.addDocument(doc);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

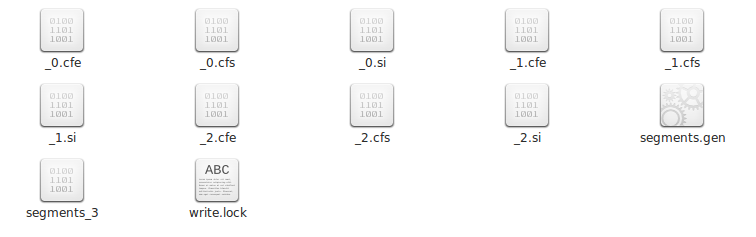

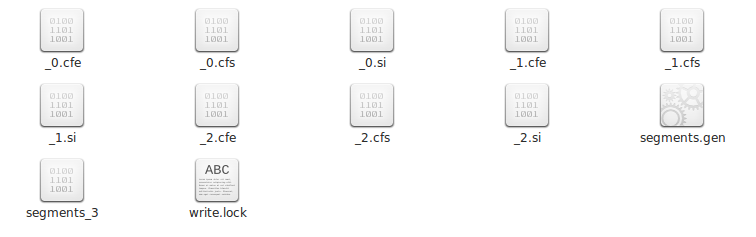

The screenshots above show the index produced by running this code.
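Another quick check that `MyIndex` actually wrote an index is to look at the files in the index directory. The sketch below is a hypothetical helper that uses only the JDK and does not open the index with Lucene. It relies on the fact that every committed Lucene index contains a `segments_N` file. By default it reads the path hard-coded in `MyIndex`; you can pass a different directory as the first argument.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper: lists an index directory using only the JDK.
class ListIndexFiles {

    /** Returns the sorted names of the files in dir. */
    static List<String> fileNames(Path dir) throws IOException {
        List<String> names = new ArrayList<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(dir)) {
            for (Path p : stream) {
                names.add(p.getFileName().toString());
            }
        }
        Collections.sort(names);
        return names;
    }

    /** A committed Lucene index has at least one segments_N file. */
    static boolean hasCommitFile(List<String> names) {
        for (String name : names) {
            if (name.startsWith("segments_")) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) throws IOException {
        // Default: the path hard-coded in MyIndex; override with the first argument.
        Path dir = Paths.get(args.length > 0 ? args[0] : "/home/letian/lucene-test/index");
        List<String> names = fileNames(dir);
        for (String name : names) {
            System.out.println(name);
        }
        System.out.println(hasCommitFile(names)
                ? "Found a segments_N commit file."
                : "No segments_N file: the index may not have been committed.");
    }
}
```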

`MySearch.java` is an example that searches this index:

```java
import java.io.File;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.queryparser.classic.QueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;
import org.wltea.analyzer.lucene.IKAnalyzer;

public class MySearch {

    public static void main(String[] args) {
        Analyzer analyzer = new IKAnalyzer();
        File indexDir = new File("/home/letian/lucene-test/index");
        // try-with-resources closes the reader and the directory when done
        try (Directory fsDirectory = FSDirectory.open(indexDir);
             DirectoryReader ireader = DirectoryReader.open(fsDirectory)) {
            IndexSearcher isearcher = new IndexSearcher(ireader);
            QueryParser qp = new QueryParser("content", analyzer); // build the Query with a QueryParser
            qp.setDefaultOperator(QueryParser.AND_OPERATOR);
            Query query = qp.parse("Lucene"); // search for "Lucene"
            TopDocs topDocs = isearcher.search(query, 5); // the 5 most relevant documents
            System.out.println("命中:" + topDocs.totalHits);
            ScoreDoc[] scoreDocs = topDocs.scoreDocs;
            // Loop over scoreDocs, not totalHits: totalHits counts all matches,
            // but scoreDocs holds at most 5 entries here.
            for (int i = 0; i < scoreDocs.length; i++) {
                Document targetDoc = isearcher.doc(scoreDocs[i].doc);
                System.out.println("内容:" + targetDoc.toString());
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

Output (`命中` means "hits" and `内容` means "content"):

```
命中:2
内容:Document<stored,indexed,tokenized<id:1234> stored,indexed,tokenized<content:Lucene是apache软件基金会4 jakarta项目组的一个子项目,是一个开放源代码的全文检索引擎工具包>>
内容:Document<stored,indexed,tokenized<id:1235> stored,indexed,tokenized<content:Lucene不是一个完整的全文索引应用,而是是一个用Java写的全文索引引擎工具包,它可以方便的嵌入到各种应用中实现>>
```
