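My requirement:

Train a neural network with Theano's CNN and save the final, converged weights. Then implement the CNN forward pass in C++, load the Theano weights, and reproduce Theano's test results.

What I ended up with:

1. The forward pass of a convolutional neural network

2. The forward and backward passes of an MLP, so it can also be used for training

Note:

To reproduce Theano's test results, the hidden-layer activation function must be tanh;

otherwise, for training the MLP, choose sigmoid as the activation function.

The results:

The figure below shows Theano's training and test output; the validation error rate is 9.23%.

Below is my C++ program; its validation error rate is also 9.23%, which reproduces Theano's result exactly.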

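In short, there were two difficulties:

1. How can Theano's weights and test samples be exchanged with C++?

2. When Theano convolves, how are the feature maps of the previous layer combined and mapped onto each pixel of the current layer?

I took many detours while solving these two problems:

To reproduce Theano's test results in C++, the C++ program must be able to read the weights and test samples that Theano saved.

My reasoning went as follows:

1. Theano's weights are in NumPy format. Reading that directly from C++ is hard: the NumPy format is awkward to parse and there is very little material online.

2. Use Python as an intermediate converter to achieve what step 1 requires. Reading the Theano code later, I found that the training samples loaded into Python do not need to be converted to NumPy arrays; plain Python objects are enough. However, files dumped with Python's cPickle carry a lot of extra formatting and are not suitable for exchange with C++.

3. Convert through JSON. Both Python and C++ have JSON interfaces, so both sides convert to JSON and exchange data that way. But the weights produced by Theano training are NumPy arrays and must become Python lists before json can write them to a file. The question now is how to turn a NumPy array into a Python list.

4. To solve step 3, I searched for a day and finally found the `tolist` method of NumPy arrays, which converts a NumPy array into a Python list.

5. Now both Python and C++ can use JSON. I studied the jsoncpp library to read the JSON files written by Python. Testing showed that jsoncpp is not suited to reading large files: it easily runs out of memory and is extremely slow, so I rejected it.

6. Write C++ functions that parse the JSON files myself. In addition, when generating training and test samples from pot files, generate them directly in C++, with no conversion to NumPy arrays.

This analysis resolved difficulty 1.

Weights and test samples are exchanged between C++ and Theano in JSON format, and the JSON files are parsed by hand-written functions.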
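As a rough illustration of this exchange format, the sketch below writes a list of `[w, b]` pairs as JSON and reads it back. It is written in Python 3 with only the standard library, unlike the Python 2 / NumPy code in this post; the tiny weight values and the helper names `dump_layers` and `load_layers` are made up for illustration, and a real NumPy array would first be converted with `tolist()`.

```python
import io
import json


def dump_layers(layers, fp):
    """Write [[w, b], ...] where w is a list of rows and b a flat list."""
    json.dump(layers, fp)


def load_layers(fp):
    """Read the same nested-list structure back."""
    return json.load(fp)


# Hypothetical two-layer network: each entry is [weights, biases].
layers = [
    [[[0.5, -1.0], [0.25, 2.0]], [0.1, -0.2]],
    [[[1.5, 0.0]], [0.0]],
]

buf = io.StringIO()      # stands in for the file shared with the C++ reader
dump_layers(layers, buf)
buf.seek(0)
restored = load_layers(buf)
```

Because the file contains nothing but nested arrays of numbers, a small hand-written parser on the C++ side only has to handle brackets, commas and floating-point literals.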

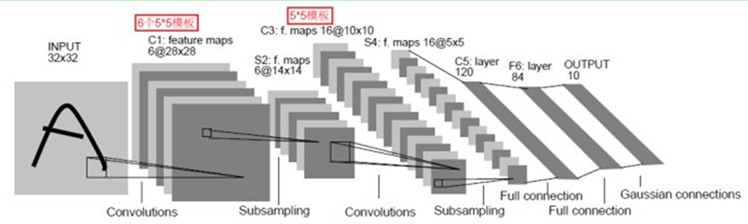

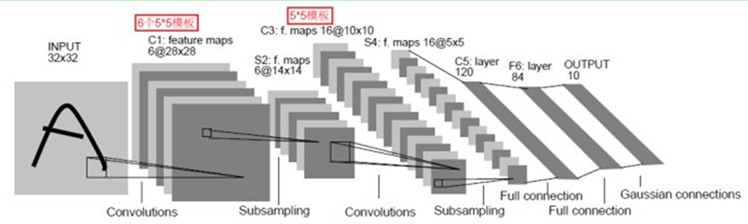

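For difficulty 2, consider a typical CNN architecture diagram.

Difficulty 2 in detail:

- Going from S2 to C3, how does Theano choose which S2 feature maps to combine? Is the selection fixed, or is it made dynamically by some algorithm?

- In the pooling step from C3 to S4 with poolsize (2*2), how do every 4 pixels of C3 become one pixel of S4?

After a lot of analysis and cross-checking, I reached these conclusions:

- Going from S2 to C3, Theano combines all of the S2 feature maps

- In the pooling step from C3 to S4 with poolsize (2*2), Theano takes the maximum of every 4 pixels of C3 as one pixel of S4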
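The two conclusions above can be sketched in a few lines. This is a minimal Python 3 illustration using nested lists, not the code used in this post; the function names `conv_valid_all_maps` and `max_pool` and the toy inputs are assumptions, and the kernels are applied as stored, with no flipping.

```python
def conv_valid_all_maps(inputs, kernels):
    """One output map: every input map contributes to every output pixel.

    inputs  -- list of 2-D lists, one per input feature map (same size)
    kernels -- list of 2-D lists, one square kernel per input feature map
    """
    height, width = len(inputs[0]), len(inputs[0][0])
    k = len(kernels[0])
    out_h, out_w = height - k + 1, width - k + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            total = 0.0
            for image, kernel in zip(inputs, kernels):  # sum over all input maps
                for m in range(k):
                    for n in range(k):
                        total += image[i + m][j + n] * kernel[m][n]
            out[i][j] = total
    return out


def max_pool(image, pool=2):
    """Non-overlapping pool x pool max pooling."""
    out_h, out_w = len(image) // pool, len(image[0]) // pool
    return [[max(image[i * pool + m][j * pool + n]
                 for m in range(pool) for n in range(pool))
             for j in range(out_w)]
            for i in range(out_h)]


maps = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]],
        [[1, 1, 1], [1, 1, 1], [1, 1, 1]]]
kernels = [[[1, 0], [0, 1]],
           [[1, 1], [1, 1]]]
c3 = conv_valid_all_maps(maps, kernels)   # [[10.0, 12.0], [16.0, 18.0]]
s4 = max_pool(c3)                         # [[18.0]]
```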

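With this analysis the theory was basically clear. The next step was to write code that follows it. What really took time was debugging, because I kept failing to reproduce Theano's test results.

More than once I thought the results simply could not be reproduced; who knows what Theano is doing internally.

Today I finally got the code working and was excited enough to write this post.

Two bugs stood in the way. One was a gap in theory: I did not understand the details of Theano's convolution. The other was carelessness in my own code: a variable was initialized incorrectly. In detail:

- When convolving from S2 to C3, Theano first rotates the kernel by 180 degrees and only then convolves as in the figure below (I am new to this field and really did not know...)

- When taking the maximum pixel from C3 to S4, I assumed all pixels were positive and initialized the running maximum to 0, so the maximum came out wrong (this bug took the longest to find, a painful lesson...)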
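Both pitfalls are easy to reproduce on a toy example. The following Python 3 sketch is illustrative only: the helper names (`rot180`, `correlate_valid`, `convolve_valid`, `max_pool_buggy`, `max_pool_fixed`) are hypothetical and the data is made up. It shows that sliding a kernel as-is and true convolution differ by exactly a 180 degree rotation of the kernel, and that a running maximum started at 0 fails on an all-negative window.

```python
def rot180(kernel):
    """Rotate a 2-D list by 180 degrees (reverse the rows, then each row)."""
    return [row[::-1] for row in kernel[::-1]]


def correlate_valid(image, kernel):
    """Slide the kernel over the image without flipping it."""
    k = len(kernel)
    size = len(image) - k + 1
    return [[sum(image[i + m][j + n] * kernel[m][n]
                 for m in range(k) for n in range(k))
             for j in range(size)]
            for i in range(size)]


def convolve_valid(image, kernel):
    """True convolution: correlate with the kernel rotated by 180 degrees."""
    return correlate_valid(image, rot180(kernel))


def max_pool_buggy(window):
    best = 0.0                   # wrong: silently assumes a positive value exists
    for value in window:
        if value > best:
            best = value
    return best


def max_pool_fixed(window):
    best = float("-inf")         # safe starting point for any real-valued input
    for value in window:
        if value > best:
            best = value
    return best


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 2], [3, 4]]
as_is = correlate_valid(image, kernel)    # [[37, 47], [67, 77]]
flipped = convolve_valid(image, kernel)   # [[23, 33], [53, 63]]
negative_window = [-3.0, -1.5, -2.0, -4.0]
```

Storing the kernels already rotated lets the C++ side use the simpler as-is sliding loop and still match a convolution that flips the kernel.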

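Python CNN code: Here.

Then add the code below.

The function that writes out Theano's weights. Note that it saves the convolution kernels after rotating them by 180 degrees; if a weight matrix is two-dimensional, it is transposed (to match the weight layout used in C++).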

```python
# Python 2, like the rest of the Theano script this is added to.
import json
import numpy


def getDataJson(layers):
    data = []
    i = 0
    for layer in layers:
        w, b = layer.params
        # print '..layer is', i
        w, b = w.get_value(), b.get_value()
        wshape = w.shape
        # print '...the shape of w is', wshape
        if len(wshape) == 2:
            # fully connected layer: transpose to match the C++ layout
            w = w.transpose()
        else:
            # convolutional layer: rotate every 2-D kernel by 180 degrees
            for k in xrange(wshape[0]):
                for j in xrange(wshape[1]):
                    # copy: rot90 returns a view of the same memory
                    w[k][j] = numpy.rot90(w[k][j], 2).copy()
            # one row per output feature map
            w = w.reshape((wshape[0], numpy.prod(wshape[1:])))
        w = w.tolist()
        b = b.tolist()
        data.append([w, b])
        i += 1
    return data


def writefile(data, name = '../../tmp/src/data/theanocnn.json'):
    print ('writefile is ' + name)
    f = open(name, "wb")
    json.dump(data, f)
    f.close()
```

Reading the weights back into Theano

```python
# Uses the json and numpy modules.
def readfile(layers, nkerns, name = '../../tmp/src/data/theanocnn.json'):
    # Load the saved weights
    print ('readfile is ' + name)
    f = open(name, 'rb')
    data = json.load(f)
    f.close()
    readwb(data, layers, nkerns)


def readwb(data, layers, nkerns):
    i = 0
    kernSize = len(nkerns)
    inputnum = 1
    for layer in layers:
        w, b = data[i]
        w = numpy.array(w, dtype='float32')
        b = numpy.array(b, dtype='float32')
        # print '..layer is', i
        # print w.shape
        if i >= kernSize:
            # fully connected layer: undo the transpose
            w = w.transpose()
        else:
            # convolutional layer: restore the 4-D shape and undo the rotation
            w = w.reshape((nkerns[i], inputnum, 5, 5))
            for k in xrange(nkerns[i]):
                for j in xrange(inputnum):
                    c = w[k][j]
                    # copy: rot90 returns a view of the same memory
                    w[k][j] = numpy.rot90(c, 2).copy()
            inputnum = nkerns[i]
        # print '..readwb ,transpose and rot180'
        # print w.shape
        layer.W.set_value(w, borrow=True)
        layer.b.set_value(b, borrow=True)
        i += 1
```
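To make the flattened layout concrete, here is a small Python 3 sketch, written with plain lists rather than NumPy, of how a 4-D kernel array becomes one row per output feature map and how it is restored. The helper names `flatten_kernels` and `unflatten_kernels` and the toy sizes (2 output maps, 3 input maps, 2x2 kernels) are assumptions for illustration.

```python
def flatten_kernels(w):
    """[n_out][n_in][k][k] nested lists -> n_out rows of n_in*k*k values."""
    return [[value for kernel in out_map for row in kernel for value in row]
            for out_map in w]


def unflatten_kernels(rows, n_in, k):
    """Inverse of flatten_kernels for k x k kernels."""
    w = []
    for flat in rows:
        out_map = []
        for c in range(n_in):
            start = c * k * k    # kernel for input map c starts here
            out_map.append([flat[start + r * k: start + (r + 1) * k]
                            for r in range(k)])
        w.append(out_map)
    return w


# Hypothetical layer: value encodes (output map, input map, row, column).
w = [[[[o * 100 + c * 10 + r * 2 + s for s in range(2)] for r in range(2)]
      for c in range(3)] for o in range(2)]
rows = flatten_kernels(w)
restored = unflatten_kernels(rows, 3, 2)
```

In this layout the kernel for input map `c` starts at offset `c * k * k` within a row, and element `(m, n)` of that kernel sits at `m * k + n` past the start.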

The test samples are generated from the MNIST handwritten digit database. The core code is as follows:

```python
# Python 2
import cPickle
import json


def mnist2json_small(cnnName = 'mnist_small.json', validNumber = 10):
    dataset = '../../data/mnist.pkl'
    print '... loading data', dataset
    # Load the dataset
    f = open(dataset, 'rb')
    train_set, valid_set, test_set = cPickle.load(f)
    #print test_set
    f.close()

    def np2listSmall(train_set, number):
        trains, labels = train_set
        trainfile = []
        # Comment out the next line to export only `number` validation samples
        number = len(labels)
        for one in trains[:number]:
            one = one.tolist()
            trainfile.append(one)
        labelfile = labels[:number].tolist()
        datafile = [trainfile, labelfile]
        return datafile

    smallData = valid_set
    print len(smallData)
    valid, validlabel = np2listSmall(smallData, validNumber)
    datafile = [valid, validlabel]
    basedir = '../../tmp/src/data/'
    # basedir = './'
    json.dump(datafile, open(basedir + cnnName, 'wb'))
```

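What I learned:

- When facing a hard task, break it down step by step and solve the pieces one at a time

- While solving a problem, if one path is blocked, look for another approach right away, just like working on math proofs back in the day

- Keep a positive attitude, do not give up easily, and do your best to finish the task

- When debugging, first build a reasonably comprehensive set of test cases, so that bugs can be located quickly

The C++ code follows.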

- #include <iostream>

- #include "mlp.h"

- #include "util.h"

- #include "testinherit.h"

- #include "neuralNetwork.h"

- using namespace std;

- /************************************************************************/

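- /* This program implements:

- 1. the forward pass of a convolutional neural network

- 2. the forward and backward passes of an MLP, so it can also be used for training

- Note:

- to reproduce Theano's test results, the hidden-layer activation function must be tanh;

- otherwise, for training the MLP, choose sigmoid as the activation function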

- */

- /************************************************************************/

- int main()

- {

- cout << "****cnn****" << endl;

- TestCnnTheano(28 * 28, 10);

- // TestMlpMnist trains the MLP on the MNIST samples and tests it

- //TestMlpMnist(28 * 28, 500, 10);

- return 0;

- }

neuralNetwork.h

- #ifndef NEURALNETWORK_H

- #define NEURALNETWORK_H

- #include "mlp.h"

- #include "cnn.h"

- #include <vector>

- using std::vector;

- /************************************************************************/

- /* A convolutional neural network */

- /************************************************************************/

- class NeuralNetWork

- {

- public:

- NeuralNetWork(int iInput, int iOut);

- ~NeuralNetWork();

- void Predict(double** in_data, int n);

- double CalErrorRate(const vector<double *> &vecvalid, const vector<WORD> &vecValidlabel);

- void Setwb(vector< vector<double*> > &vvAllw, vector< vector<double> > &vvAllb);

- void SetTrainNum(int iNum);

- int Predict(double *pInputData);

- // void Forward_propagation(double** ppdata, int n);

- double* Forward_propagation(double *);

- private:

- int m_iSampleNum; // number of samples

- int m_iInput; // input dimension

- int m_iOut; // output dimension

- vector<CnnLayer *> vecCnns;

- Mlp *m_pMlp;

- };

- void TestCnnTheano(const int iInput, const int iOut);

- #endif

neuralNetwork.cpp

- #include "neuralNetwork.h"

- #include <iostream>

- #include "util.h"

- #include <iomanip>

- using namespace std;

- NeuralNetWork::NeuralNetWork(int iInput, int iOut):m_iSampleNum(0), m_iInput(iInput), m_iOut(iOut), m_pMlp(NULL)

- {

- int iFeatureMapNumber = 20, iPoolWidth = 2, iInputImageWidth = 28, iKernelWidth = 5, iInputImageNumber = 1;

- CnnLayer *pCnnLayer = new CnnLayer(m_iSampleNum, iInputImageNumber, iInputImageWidth, iFeatureMapNumber, iKernelWidth, iPoolWidth);

- vecCnns.push_back(pCnnLayer);

- iInputImageNumber = 20;

- iInputImageWidth = 12;

- iFeatureMapNumber = 50;

- pCnnLayer = new CnnLayer(m_iSampleNum, iInputImageNumber, iInputImageWidth, iFeatureMapNumber, iKernelWidth, iPoolWidth);

- vecCnns.push_back(pCnnLayer);

- const int ihiddenSize = 1;

- int phidden[ihiddenSize] = {500};

- // construct LogisticRegression

- m_pMlp = new Mlp(m_iSampleNum, iFeatureMapNumber * 4 * 4, m_iOut, ihiddenSize, phidden);

- }

- NeuralNetWork::~NeuralNetWork()

- {

- for (vector<CnnLayer*>::iterator it = vecCnns.begin(); it != vecCnns.end(); ++it)

- {

- delete *it;

- }

- delete m_pMlp;

- }

- void NeuralNetWork::SetTrainNum(int iNum)

- {

- m_iSampleNum = iNum;

- for (size_t i = 0; i < vecCnns.size(); ++i)

- {

- vecCnns[i]->SetTrainNum(iNum);

- }

- m_pMlp->SetTrainNum(iNum);

- }

- int NeuralNetWork::Predict(double *pdInputdata)

- {

- double *pdPredictData = NULL;

- pdPredictData = Forward_propagation(pdInputdata);

- int iResult = -1;

- iResult = m_pMlp->m_pLogisticLayer->Predict(pdPredictData);

- return iResult;

- }

- double* NeuralNetWork::Forward_propagation(double *pdInputData)

- {

- double *pdPredictData = pdInputData;

- vector<CnnLayer*>::iterator it;

- CnnLayer *pCnnLayer;

- for (it = vecCnns.begin(); it != vecCnns.end(); ++it)

- {

- pCnnLayer = *it;

- pCnnLayer->Forward_propagation(pdPredictData);

- pdPredictData = pCnnLayer->GetOutputData();

- }

- //pCnnLayer now points to the last convolutional layer, and pdPredictData holds its output

- //feed that output through the hidden layers of the MLP

- pdPredictData = m_pMlp->Forward_propagation(pdPredictData);

- return pdPredictData;

- }

- void NeuralNetWork::Setwb(vector< vector<double*> > &vvAllw, vector< vector<double> > &vvAllb)

- {

- for (size_t i = 0; i < vecCnns.size(); ++i)

- {

- vecCnns[i]->Setwb(vvAllw[i], vvAllb[i]);

- }

- size_t iLayerNum = vvAllw.size();

- for (size_t i = vecCnns.size(); i < iLayerNum - 1; ++i)

- {

- //index of this hidden layer inside the MLP

- size_t iHiddenIndex = i - vecCnns.size();

- m_pMlp->m_ppHiddenLayer[iHiddenIndex]->Setwb(vvAllw[i], vvAllb[i]);

- }

- m_pMlp->m_pLogisticLayer->Setwb(vvAllw[iLayerNum - 1], vvAllb[iLayerNum - 1]);

- }

- double NeuralNetWork::CalErrorRate(const vector<double *> &vecvalid, const vector<WORD> &vecValidlabel)

- {

- cout << "Predict------------" << endl;

- int iErrorNumber = 0, iValidNumber = vecValidlabel.size();

- //iValidNumber = 1;

- for (int i = 0; i < iValidNumber; ++i)

- {

- int iResult = Predict(vecvalid[i]);

- //cout << i << ",valid is " << iResult << " label is " << vecValidlabel[i] << endl;

- if (iResult != vecValidlabel[i])

- {

- ++iErrorNumber;

- }

- }

- cout << "the num of error is " << iErrorNumber << endl;

- double dErrorRate = (double)iErrorNumber / iValidNumber;

- cout << "the error rate of Train sample by softmax is " << setprecision(10) << dErrorRate * 100 << "%" << endl;

- return dErrorRate;

- }

- /************************************************************************/

- /*

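- The test samples come from MNIST. This CNN has the same structure as the one in the Theano tutorial:

- the input is a 28*28 image, followed by 2 convolutional layers (convolution + pooling) with 20 and 50 feature maps,

- then a fully connected layer (500 neurons), and finally an output layer with 10 neurons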

- */

- /************************************************************************/

- void TestCnnTheano(const int iInput, const int iOut)

- {

- //build the convolutional neural network

- NeuralNetWork neural(iInput, iOut);

- //holds the Theano weights

- vector< vector<double*> > vvAllw;

- vector< vector<double> > vvAllb;

- //holds the test samples and labels

- vector<double*> vecValid;

- vector<WORD> vecLabel;

- //files that hold the Theano weights and the test samples

- const char *szTheanoWeigh = "../../data/theanocnn.json", *szTheanoTest = "../../data/mnist_validall.json";

- //second dimension (column count) of each layer's weight matrix, needed when reading the JSON file

- vector<int> vecSecondDimOfWeigh;

- vecSecondDimOfWeigh.push_back(5 * 5);

- vecSecondDimOfWeigh.push_back(20 * 5 * 5);

- vecSecondDimOfWeigh.push_back(50 * 4 * 4);

- vecSecondDimOfWeigh.push_back(500);

- cout << "loadwb ---------" << endl;

- //load the weights

- LoadWeighFromJson(vvAllw, vvAllb, szTheanoWeigh, vecSecondDimOfWeigh);

- //set the weights on the CNN

- neural.Setwb(vvAllw, vvAllb);

- //load the test file

- LoadTestSampleFromJson(vecValid, vecLabel, szTheanoTest, iInput);

- //set the total number of test samples

- int iVaildNum = vecValid.size();

- neural.SetTrainNum(iVaildNum);

- //run the CNN forward pass, then compute and print the error rate

- neural.CalErrorRate(vecValid, vecLabel);

- //free the memory allocated for the test samples

- for (vector<double*>::iterator cit = vecValid.begin(); cit != vecValid.end(); ++cit)

- {

- delete [](*cit);

- }

- }
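The four values pushed into `vecSecondDimOfWeigh` follow directly from the layer shapes. The Python 3 sketch below recomputes them under the assumptions stated in the comment block above (28x28 input, 5x5 kernels, 2x2 pooling, 20 and 50 feature maps, 500 hidden neurons); the function name `lenet_shapes` is hypothetical.

```python
def lenet_shapes(image_width=28, kernel_width=5, pool_width=2,
                 nkerns=(20, 50), hidden=500):
    """Return (map width after each conv+pool stage, second dim of each weight)."""
    widths = []
    second_dims = []
    n_in, width = 1, image_width
    for n_out in nkerns:
        # each output map has one kernel per input map
        second_dims.append(n_in * kernel_width * kernel_width)
        # valid convolution shrinks the width, pooling divides it
        width = (width - kernel_width + 1) // pool_width
        widths.append(width)
        n_in = n_out
    second_dims.append(n_in * width * width)  # flattened input of the hidden layer
    second_dims.append(hidden)                # input of the output layer
    return widths, second_dims


widths, second_dims = lenet_shapes()
# widths      -> [12, 4]
# second_dims -> [25, 500, 800, 500]
```

The width of 12 after the first stage is also why the second `CnnLayer` is constructed with `iInputImageWidth = 12`, and the final 4x4 maps explain the `iFeatureMapNumber * 4 * 4` input size of the MLP.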

cnn.h

- #ifndef CNN_H

- #define CNN_H

- #include "featuremap.h"

- #include "poollayer.h"

- #include <vector>

- using std::vector;

- typedef unsigned short WORD;

- /**

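- *This convolution mimics Theano's test-time computation

- *Given num input feature maps, this convolutional layer has featureNum feature maps.

- *Each pixel of this layer is computed from all num input feature maps combined, with no bias

- *The result then goes to the pooling layer, which takes the maximum within each poolsize window and then adds the bias; there are featureNum bias values in total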

- */

- class CnnLayer

- {

- public:

- CnnLayer(int iSampleNum, int iInputImageNumber, int iInputImageWidth, int iFeatureMapNumber,

- int iKernelWidth, int iPoolWidth);

- ~CnnLayer();

- void Forward_propagation(double *pdInputData);

- void Back_propagation(double* , double* , double );

- void Train(double *x, WORD y, double dLr);

- int Predict(double *);

- void Setwb(vector<double*> &vpdw, vector<double> &vdb);

- void SetInputAllData(double **ppInputAllData, int iInputNum);

- void SetTrainNum(int iSampleNumber);

- void PrintOutputData();

- double* GetOutputData();

- private:

- int m_iSampleNum;

- FeatureMap *m_pFeatureMap;

- PoolLayer *m_pPoolLayer;

- //values needed for backpropagation

- double **m_ppdDelta;

- double *m_pdInputData;

- double *m_pdOutputData;

- };

- void TestCnn();

- #endif // CNN_H

cnn.cpp

- #include "cnn.h"

- #include "util.h"

- #include <cassert>

- CnnLayer::CnnLayer(int iSampleNum, int iInputImageNumber, int iInputImageWidth, int iFeatureMapNumber,

- int iKernelWidth, int iPoolWidth):

- m_iSampleNum(iSampleNum), m_pdInputData(NULL), m_pdOutputData(NULL)

- {

- m_pFeatureMap = new FeatureMap(iInputImageNumber, iInputImageWidth, iFeatureMapNumber, iKernelWidth);

- int iFeatureMapWidth = iInputImageWidth - iKernelWidth + 1;

- m_pPoolLayer = new PoolLayer(iFeatureMapNumber, iPoolWidth, iFeatureMapWidth);

- }

- CnnLayer::~CnnLayer()

- {

- delete m_pFeatureMap;

- delete m_pPoolLayer;

- }

- void CnnLayer::Forward_propagation(double *pdInputData)

- {

- m_pFeatureMap->Convolute(pdInputData);

- m_pPoolLayer->Convolute(m_pFeatureMap->GetFeatureMapValue());

- m_pdOutputData = m_pPoolLayer->GetOutputData();

- /************************************************************************/

- /* Prints the intermediate results of each stage while debugging the convolution; remove once it works */

- /************************************************************************/

- /*m_pFeatureMap->PrintOutputData();

- m_pPoolLayer->PrintOutputData();*/

- }

- void CnnLayer::SetInputAllData(double **ppInputAllData, int iInputNum)

- {

- }

- double* CnnLayer::GetOutputData()

- {

- assert(NULL != m_pdOutputData);

- return m_pdOutputData;

- }

- void CnnLayer::Setwb(vector<double*> &vpdw, vector<double> &vdb)

- {

- m_pFeatureMap->SetWeigh(vpdw);

- m_pPoolLayer->SetBias(vdb);

- }

- void CnnLayer::SetTrainNum( int iSampleNumber )

- {

- m_iSampleNum = iSampleNumber;

- }

- void CnnLayer::PrintOutputData()

- {

- m_pFeatureMap->PrintOutputData();

- m_pPoolLayer->PrintOutputData();

- }

- void TestCnn()

- {

- const int iFeatureMapNumber = 2, iPoolWidth = 2, iInputImageWidth = 8, iKernelWidth = 3, iInputImageNumber = 2;

- double *pdImage = new double[iInputImageWidth * iInputImageWidth * iInputImageNumber];

- double arrInput[iInputImageNumber][iInputImageWidth * iInputImageWidth];

- MakeCnnSample(arrInput, pdImage, iInputImageWidth, iInputImageNumber);

- double *pdKernel = new double[3 * 3 * iInputImageNumber];

- double *arrKernel = new double[3 * 3 * iInputImageNumber]; // heap-allocated: FeatureMap::SetWeigh takes ownership and ~FeatureMap deletes it

- MakeCnnWeigh(pdKernel, iInputImageNumber) ;

- CnnLayer cnn(3, iInputImageNumber, iInputImageWidth, iFeatureMapNumber, iKernelWidth, iPoolWidth);

- vector <double*> vecWeigh;

- vector <double> vecBias;

- for (int i = 0; i < iFeatureMapNumber; ++i)

- {

- vecBias.push_back(1.0);

- }

- vecWeigh.push_back(pdKernel);

- for (int i = 0; i < 3 * 3 * 2; ++i)

- {

- arrKernel[i] = i;

- }

- vecWeigh.push_back(arrKernel);

- cnn.Setwb(vecWeigh, vecBias);

- cnn.Forward_propagation(pdImage);

- cnn.PrintOutputData();

- // pdKernel is not deleted here: FeatureMap::SetWeigh took ownership and ~FeatureMap frees it

- delete []pdImage;

- }

featuremap.h

- #ifndef FEATUREMAP_H

- #define FEATUREMAP_H

- #include <cassert>

- #include <vector>

- using std::vector;

- class FeatureMap

- {

- public:

- FeatureMap(int iInputImageNumber, int iInputImageWidth, int iFeatureMapNumber, int iKernelWidth);

- ~FeatureMap();

- void Forward_propagation(double* );

- void Back_propagation(double* , double* , double );

- void Convolute(double *pdInputData);

- int GetFeatureMapSize()

- {

- return m_iFeatureMapSize;

- }

- int GetFeatureMapWidth()

- {

- return m_iFeatureMapWidth;

- }

- double* GetFeatureMapValue()

- {

- assert(m_pdOutputValue != NULL);

- return m_pdOutputValue;

- }

- void SetWeigh(const vector<double *> &vecWeigh);

- void PrintOutputData();

- double **m_ppdWeigh;

- double *m_pdBias;

- private:

- int m_iInputImageNumber;

- int m_iInputImageWidth;

- int m_iInputImageSize;

- int m_iFeatureMapNumber;

- int m_iFeatureMapWidth;

- int m_iFeatureMapSize;

- int m_iKernelWidth;

- // double m_dBias;

- double *m_pdOutputValue;

- };

- #endif // FEATUREMAP_H

featuremap.cpp

- #include "featuremap.h"

- #include "util.h"

- #include <cassert>

- FeatureMap::FeatureMap(int iInputImageNumber, int iInputImageWidth, int iFeatureMapNumber, int iKernelWidth):

- m_iInputImageNumber(iInputImageNumber),

- m_iInputImageWidth(iInputImageWidth),

- m_iFeatureMapNumber(iFeatureMapNumber),

- m_iKernelWidth(iKernelWidth)

- {

- m_iFeatureMapWidth = m_iInputImageWidth - m_iKernelWidth + 1;

- m_iInputImageSize = m_iInputImageWidth * m_iInputImageWidth;

- m_iFeatureMapSize = m_iFeatureMapWidth * m_iFeatureMapWidth;

- int iKernelSize;

- iKernelSize = m_iKernelWidth * m_iKernelWidth;

- double dbase = 1.0 / m_iInputImageSize;

- srand((unsigned)time(NULL));

- m_ppdWeigh = new double*[m_iFeatureMapNumber];

- m_pdBias = new double[m_iFeatureMapNumber];

- for (int i = 0; i < m_iFeatureMapNumber; ++i)

- {

- m_ppdWeigh[i] = new double[m_iInputImageNumber * iKernelSize];

- for (int j = 0; j < m_iInputImageNumber * iKernelSize; ++j)

- {

- m_ppdWeigh[i][j] = uniform(-dbase, dbase);

- }

- //m_pdBias[i] = uniform(-dbase, dbase);

- //Theano's convolution step does not appear to use the bias; it is applied after pooling

- m_pdBias[i] = 0;

- }

- m_pdOutputValue = new double[m_iFeatureMapNumber * m_iFeatureMapSize];

- // m_dBias = uniform(-dbase, dbase);

- }

- FeatureMap::~FeatureMap()

- {

- delete []m_pdOutputValue;

- delete []m_pdBias;

- for (int i = 0; i < m_iFeatureMapNumber; ++i)

- {

- delete []m_ppdWeigh[i];

- }

- delete []m_ppdWeigh;

- }

- void FeatureMap::SetWeigh(const vector<double *> &vecWeigh)

- {

- assert(vecWeigh.size() == (DWORD)m_iFeatureMapNumber);

- for (int i = 0; i < m_iFeatureMapNumber; ++i)

- {

- delete []m_ppdWeigh[i];

- m_ppdWeigh[i] = vecWeigh[i];

- }

- }

- /*

- Convolution

- pdInputData: a one-dimensional array holding several input images

- */

- void FeatureMap::Convolute(double *pdInputData)

- {

- for (int iMapIndex = 0; iMapIndex < m_iFeatureMapNumber; ++iMapIndex)

- {

- double dBias = m_pdBias[iMapIndex];

- //for each feature map

- for (int i = 0; i < m_iFeatureMapWidth; ++i)

- {

- for (int j = 0; j < m_iFeatureMapWidth; ++j)

- {

- double dSum = 0.0;

- int iInputIndex, iKernelIndex, iInputIndexStart, iKernelStart, iOutIndex;

- //index into the output array

- iOutIndex = iMapIndex * m_iFeatureMapSize + i * m_iFeatureMapWidth + j;

- //accumulate over every input image

- for (int k = 0; k < m_iInputImageNumber; ++k)

- {

- //start position, in input image k, of the window covered by the kernel

- //iInputIndexStart = k * m_iInputImageSize + j * m_iInputImageWidth + i;

- iInputIndexStart = k * m_iInputImageSize + i * m_iInputImageWidth + j;

- //start position of the kernel for input image k

- iKernelStart = k * m_iKernelWidth * m_iKernelWidth;

- for (int m = 0; m < m_iKernelWidth; ++m)

- {

- for (int n = 0; n < m_iKernelWidth; ++n)

- {

- //iKernelIndex = iKernelStart + n * m_iKernelWidth + m;

- iKernelIndex = iKernelStart + m * m_iKernelWidth + n;

- //i am not sure, is the expression of below correct?

- iInputIndex = iInputIndexStart + m * m_iInputImageWidth + n;

- dSum += pdInputData[iInputIndex] * m_ppdWeigh[iMapIndex][iKernelIndex];

- }//end n

- }//end m

- }//end k

- //add the bias (disabled: the bias is applied in the pooling layer)

- //dSum += dBias;

- m_pdOutputValue[iOutIndex] = dSum;

- }//end j

- }//end i

- }//end iMapIndex

- }

- void FeatureMap::PrintOutputData()

- {

- for (int i = 0; i < m_iFeatureMapNumber; ++i)

- {

- cout << "featuremap " << i <<endl;

- for (int m = 0; m < m_iFeatureMapWidth; ++m)

- {

- for (int n = 0; n < m_iFeatureMapWidth; ++n)

- {

- cout << m_pdOutputValue[i * m_iFeatureMapSize +m * m_iFeatureMapWidth +n] << ' ';

- }

- cout << endl;

- }

- cout <<endl;

- }

- }
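The index arithmetic in `FeatureMap::Convolute` can be checked on a tiny input. The Python 3 sketch below mirrors the same flat, row-major formulas for a single output map; the function name `convolute_flat` and the test data are assumptions, and the kernel is applied as stored, the way the C++ loop does.

```python
def convolute_flat(data, weights, n_in, in_w, k):
    """Valid sliding product for one output map on flat row-major lists.

    data    -- n_in images of in_w x in_w values, stored back to back
    weights -- n_in kernels of k x k values, stored back to back
    """
    out_w = in_w - k + 1
    out = [0.0] * (out_w * out_w)
    for i in range(out_w):
        for j in range(out_w):
            total = 0.0
            for c in range(n_in):
                in_start = c * in_w * in_w + i * in_w + j   # top-left of the window
                k_start = c * k * k                         # kernel for image c
                for m in range(k):
                    for n in range(k):
                        total += (data[in_start + m * in_w + n]
                                  * weights[k_start + m * k + n])
            out[i * out_w + j] = total
    return out


one_image = [1, 2, 3, 4, 5, 6, 7, 8, 9]
single = convolute_flat(one_image, [1, 2, 3, 4], 1, 3, 2)
two_images = one_image + [1] * 9
double = convolute_flat(two_images, [1, 0, 0, 1, 1, 1, 1, 1], 2, 3, 2)
```

Comparing such hand-computable cases against the C++ output is one cheap way to settle the "is this expression correct?" question left in the comments.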

poollayer.h

- #ifndef POOLLAYER_H

- #define POOLLAYER_H

- #include <vector>

- using std::vector;

- class PoolLayer

- {

- public:

- PoolLayer(int iOutImageNumber, int iPoolWidth, int iFeatureMapWidth);

- ~PoolLayer();

- void Convolute(double *pdInputData);

- void SetBias(const vector<double> &vecBias);

- double* GetOutputData();

- void PrintOutputData();

- private:

- int m_iOutImageNumber;

- int m_iPoolWidth;

- int m_iFeatureMapWidth;

- int m_iPoolSize;

- int m_iOutImageEdge;

- int m_iOutImageSize;

- double *m_pdOutData;

- double *m_pdBias;

- };

- #endif // POOLLAYER_H

poollayer.cpp

- #include "poollayer.h"

- #include "util.h"

- #include <cassert>

- PoolLayer::PoolLayer(int iOutImageNumber, int iPoolWidth, int iFeatureMapWidth):

- m_iOutImageNumber(iOutImageNumber),

- m_iPoolWidth(iPoolWidth),

- m_iFeatureMapWidth(iFeatureMapWidth)

- {

- m_iPoolSize = m_iPoolWidth * m_iPoolWidth;

- m_iOutImageEdge = m_iFeatureMapWidth / m_iPoolWidth;

- m_iOutImageSize = m_iOutImageEdge * m_iOutImageEdge;

- m_pdOutData = new double[m_iOutImageNumber * m_iOutImageSize];

- m_pdBias = new double[m_iOutImageNumber];

- /*for (int i = 0; i < m_iOutImageNumber; ++i)

- {

- m_pdBias[i] = 1;

- }*/

- }

- PoolLayer::~PoolLayer()

- {

- delete []m_pdOutData;

- delete []m_pdBias;

- }

- void PoolLayer::Convolute(double *pdInputData)

- {

- int iFeatureMapSize = m_iFeatureMapWidth * m_iFeatureMapWidth;

- for (int iOutImageIndex = 0; iOutImageIndex < m_iOutImageNumber; ++iOutImageIndex)

- {

- double dBias = m_pdBias[iOutImageIndex];

- for (int i = 0; i < m_iOutImageEdge; ++i)

- {

- for (int j = 0; j < m_iOutImageEdge; ++j)

- {

- double dValue = 0.0;

- int iInputIndex, iInputIndexStart, iOutIndex;

- /************************************************************************/

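- /* This was the biggest bug: dMaxPixel was initialized to 0 before searching for the maximum.

- ** The problem is that pixel values can be negative, which corrupted every later computation; it was really hard to track down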

- /************************************************************************/

- double dMaxPixel = INT_MIN ;

- iOutIndex = iOutImageIndex * m_iOutImageSize + i * m_iOutImageEdge + j;

- iInputIndexStart = iOutImageIndex * iFeatureMapSize + (i * m_iFeatureMapWidth + j) * m_iPoolWidth;

- for (int m = 0; m < m_iPoolWidth; ++m)

- {

- for (int n = 0; n < m_iPoolWidth; ++n)

- {

- // int iPoolIndex = m * m_iPoolWidth + n;

- //i am not sure, the expression of below is correct?

- iInputIndex = iInputIndexStart + m * m_iFeatureMapWidth + n;

- if (pdInputData[iInputIndex] > dMaxPixel)

- {

- dMaxPixel = pdInputData[iInputIndex];

- }

- }//end n

- }//end m

- dValue = dMaxPixel + dBias;

- assert(iOutIndex < m_iOutImageNumber * m_iOutImageSize);

- //m_pdOutData[iOutIndex] = (dMaxPixel);

- m_pdOutData[iOutIndex] = mytanh(dValue);

- }//end j

- }//end i

- }//end iOutImageIndex

- }

- void PoolLayer::SetBias(const vector<double> &vecBias)

- {

- assert(vecBias.size() == (DWORD)m_iOutImageNumber);

- for (int i = 0; i < m_iOutImageNumber; ++i)

- {

- m_pdBias[i] = vecBias[i];

- }

- }

- double* PoolLayer::GetOutputData()

- {

- assert(NULL != m_pdOutData);

- return m_pdOutData;

- }

- void PoolLayer::PrintOutputData()

- {

- for (int i = 0; i < m_iOutImageNumber; ++i)

- {

- cout << "pool image " << i <<endl;

- for (int m = 0; m < m_iOutImageEdge; ++m)

- {

- for (int n = 0; n < m_iOutImageEdge; ++n)

- {

- cout << m_pdOutData[i * m_iOutImageSize + m * m_iOutImageEdge + n] << ' ';

- }

- cout << endl;

- }

- cout <<endl;

- }

- }
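As a cross-check of `PoolLayer::Convolute`, the Python 3 sketch below reproduces the same steps on flat lists: locate the window with `(i * width + j) * pool`, take the maximum starting from negative infinity, add the per-map bias, then apply tanh. The function name `pool_flat` and the sample map are made up for illustration.

```python
import math


def pool_flat(data, biases, n_maps, map_w, pool):
    """Max-pool each map, add its bias, apply tanh; flat row-major layout."""
    out_w = map_w // pool
    out = [0.0] * (n_maps * out_w * out_w)
    for c in range(n_maps):
        for i in range(out_w):
            for j in range(out_w):
                # top-left corner of the window: row i * pool, column j * pool
                start = c * map_w * map_w + (i * map_w + j) * pool
                best = float("-inf")      # inputs may all be negative
                for m in range(pool):
                    for n in range(pool):
                        best = max(best, data[start + m * map_w + n])
                out[c * out_w * out_w + i * out_w + j] = math.tanh(best + biases[c])
    return out


feature_map = [-4.0, -3.0, -2.0, -1.0,
               -8.0, -7.0, -6.0, -5.0,
                1.0,  2.0,  3.0,  4.0,
                5.0,  6.0,  7.0,  8.0]
pooled = pool_flat(feature_map, [0.5], 1, 4, 2)
# window maxima are -3, -1, 6, 8, so pooled holds tanh of -2.5, -0.5, 6.5, 8.5
```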

mlp.h

- #ifndef MLP_H

- #define MLP_H

- #include "hiddenLayer.h"

- #include "logisticRegression.h"

- class Mlp

- {

- public:

- Mlp(int n, int n_i, int n_o, int nhl, int *hls);

- ~Mlp();

- // void Train(double** in_data, double** in_label, double dLr, int epochs);

- void Predict(double** in_data, int n);

- void Train(double *x, WORD y, double dLr);

- void TrainAllSample(const vector<double*> &vecTrain, const vector<WORD> &vectrainlabel, double dLr);

- double CalErrorRate(const vector<double *> &vecvalid, const vector<WORD> &vecValidlabel);

- void Writewb(const char *szName);

- void Readwb(const char *szName);

- void Setwb(vector< vector<double*> > &vvAllw, vector< vector<double> > &vvAllb);

- void SetTrainNum(int iNum);

- int Predict(double *pInputData);

- // void Forward_propagation(double** ppdata, int n);

- double* Forward_propagation(double *);

- int* GetHiddenSize();

- int GetHiddenNumber();

- double *GetHiddenOutputData();

- HiddenLayer **m_ppHiddenLayer;

- LogisticRegression *m_pLogisticLayer;

- private:

- int m_iSampleNum; // number of samples

- int m_iInput; // input dimension

- int m_iOut; // output dimension

- int m_iHiddenLayerNum; // number of hidden layers

- int* m_piHiddenLayerSize; // hidden layer sizes, e.g. {3,4} means two hidden layers with 3 and 4 nodes

- };

- void mlp();

- void TestMlpTheano(const int m_iInput, const int ihidden, const int m_iOut);

- void TestMlpMnist(const int m_iInput, const int ihidden, const int m_iOut);

- #endif

mlp.cpp

- #include <iostream>

- #include "mlp.h"

- #include "util.h"

- #include <cassert>

- #include <iomanip>

- using namespace std;

- const int m_iSamplenum = 8, innode = 3, outnode = 8;

- Mlp::Mlp(int n, int n_i, int n_o, int nhl, int *hls)

- {

- m_iSampleNum = n;

- m_iInput = n_i;

- m_iOut = n_o;

- m_iHiddenLayerNum = nhl;

- m_piHiddenLayerSize = hls;

- //build the network structure

- m_ppHiddenLayer = new HiddenLayer* [m_iHiddenLayerNum];

- for(int i = 0; i < m_iHiddenLayerNum; ++i)

- {

- if(i == 0)

- {

- m_ppHiddenLayer[i] = new HiddenLayer(m_iInput, m_piHiddenLayerSize[i]);// first hidden layer

- }

- else

- {

- m_ppHiddenLayer[i] = new HiddenLayer(m_piHiddenLayerSize[i-1], m_piHiddenLayerSize[i]);// remaining hidden layers

- }

- }

- if (m_iHiddenLayerNum > 0)

- {

- m_pLogisticLayer = new LogisticRegression(m_piHiddenLayerSize[m_iHiddenLayerNum - 1], m_iOut, m_iSampleNum);// final softmax layer

- }

- else

- {

- m_pLogisticLayer = new LogisticRegression(m_iInput, m_iOut, m_iSampleNum);// final softmax layer

- }

- }

- Mlp::~Mlp()

- {

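- //what a double pointer points to is not necessarily a two-dimensional array

- for(int i = 0; i < m_iHiddenLayerNum; ++i)

- delete m_ppHiddenLayer[i]; // single object: delete without []

- delete[] m_ppHiddenLayer;

- //m_pLogisticLayer is a plain object pointer and must not be deleted as an array

- delete m_pLogisticLayer;// single object: delete without []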

- }

- void Mlp::TrainAllSample(const vector<double *> &vecTrain, const vector<WORD> &vectrainlabel, double dLr)

- {

- cout << "Mlp::TrainAllSample" << endl;

- for (int j = 0; j < m_iSampleNum; ++j)

- {

- Train(vecTrain[j], vectrainlabel[j], dLr);

- }

- }

- void Mlp::Train(double *pdTrain, WORD usLabel, double dLr)

- {

- // cout << "******pdLabel****" << endl;

- // printArrDouble(ppdinLabel, m_iSampleNum, m_iOut);

- double *pdLabel = new double[m_iOut];

- MakeOneLabel(usLabel, pdLabel, m_iOut);

- //forward pass

- for(int n = 0; n < m_iHiddenLayerNum; ++ n)

- {

- if(n == 0) // the first hidden layer takes the input data directly

- {

- m_ppHiddenLayer[n]->Forward_propagation(pdTrain);

- }

- else // the other hidden layers take the previous layer's output as input

- {

- m_ppHiddenLayer[n]->Forward_propagation(m_ppHiddenLayer[n-1]->m_pdOutdata);

- }

- }

- //the softmax layer takes the output of the last hidden layer as input

- m_pLogisticLayer->Forward_propagation(m_ppHiddenLayer[m_iHiddenLayerNum-1]->m_pdOutdata);

- //backward pass

- m_pLogisticLayer->Back_propagation(m_ppHiddenLayer[m_iHiddenLayerNum-1]->m_pdOutdata, pdLabel, dLr);

- for(int n = m_iHiddenLayerNum-1; n >= 1; --n)

- {

- if(n == m_iHiddenLayerNum-1)

- {

- m_ppHiddenLayer[n]->Back_propagation(m_ppHiddenLayer[n-1]->m_pdOutdata,

- m_pLogisticLayer->m_pdDelta, m_pLogisticLayer->m_ppdW, m_pLogisticLayer->m_iOut, dLr);

- }

- else

- {

- double *pdInputData;

- pdInputData = m_ppHiddenLayer[n-1]->m_pdOutdata;

- m_ppHiddenLayer[n]->Back_propagation(pdInputData,

- m_ppHiddenLayer[n+1]->m_pdDelta, m_ppHiddenLayer[n+1]->m_ppdW, m_ppHiddenLayer[n+1]->m_iOut, dLr);

- }

- }

- //first hidden layer: its input is the training sample itself (the author was unsure how best to write this)

- if (m_iHiddenLayerNum > 1)

- m_ppHiddenLayer[0]->Back_propagation(pdTrain,

- m_ppHiddenLayer[1]->m_pdDelta, m_ppHiddenLayer[1]->m_ppdW, m_ppHiddenLayer[1]->m_iOut, dLr);

- else

- m_ppHiddenLayer[0]->Back_propagation(pdTrain,

- m_pLogisticLayer->m_pdDelta, m_pLogisticLayer->m_ppdW, m_pLogisticLayer->m_iOut, dLr);

- delete []pdLabel;

- }

- void Mlp::SetTrainNum(int iNum)

- {

- m_iSampleNum = iNum;

- }

- double* Mlp::Forward_propagation(double* pData)

- {

- double *pdForwardValue = pData;

- for(int n = 0; n < m_iHiddenLayerNum; ++ n)

- {

- if(n == 0) // the first hidden layer takes the input data directly

- {

- pdForwardValue = m_ppHiddenLayer[n]->Forward_propagation(pData);

- }

- else // the other hidden layers take the previous layer's output as input

- {

- pdForwardValue = m_ppHiddenLayer[n]->Forward_propagation(pdForwardValue);

- }

- }

- return pdForwardValue;

- //the softmax layer takes the output of the last hidden layer as input

- // m_pLogisticLayer->Forward_propagation(m_ppHiddenLayer[m_iHiddenLayerNum-1]->m_pdOutdata);

- // m_pLogisticLayer->Predict(m_ppHiddenLayer[m_iHiddenLayerNum-1]->m_pdOutdata);

- }

- int Mlp::Predict(double *pInputData)

- {

- Forward_propagation(pInputData);

- int iResult = m_pLogisticLayer->Predict(m_ppHiddenLayer[m_iHiddenLayerNum-1]->m_pdOutdata);

- return iResult;

- }

- void Mlp::Setwb(vector< vector<double*> > &vvAllw, vector< vector<double> > &vvAllb)

- {

- for (int i = 0; i < m_iHiddenLayerNum; ++i)

- {

- m_ppHiddenLayer[i]->Setwb(vvAllw[i], vvAllb[i]);

- }

- m_pLogisticLayer->Setwb(vvAllw[m_iHiddenLayerNum], vvAllb[m_iHiddenLayerNum]);

- }

- void Mlp::Writewb(const char *szName)

- {

- for(int i = 0; i < m_iHiddenLayerNum; ++i)

- {

- m_ppHiddenLayer[i]->Writewb(szName);

- }

- m_pLogisticLayer->Writewb(szName);

- }

- double Mlp::CalErrorRate(const vector<double *> &vecvalid, const vector<WORD> &vecValidlabel)

- {

- int iErrorNumber = 0, iValidNumber = vecValidlabel.size();

- for (int i = 0; i < iValidNumber; ++i)

- {

- int iResult = Predict(vecvalid[i]);

- if (iResult != vecValidlabel[i])

- {

- ++iErrorNumber;

- }

- }

- cout << "the num of error is " << iErrorNumber << endl;

- double dErrorRate = (double)iErrorNumber / iValidNumber;

- cout << "the error rate of Train sample by softmax is " << setprecision(10) << dErrorRate * 100 << "%" << endl;

- return dErrorRate;

- }

- void Mlp::Readwb(const char *szName)

- {

- long dcurpos = 0, dreadsize = 0;

- for(int i = 0; i < m_iHiddenLayerNum; ++i)

- {

- dreadsize = m_ppHiddenLayer[i]->Readwb(szName, dcurpos);

- cout << "hiddenlayer " << i + 1 << " read bytes: " << dreadsize << endl;

- if (-1 != dreadsize)

- dcurpos += dreadsize;

- else

- {

- cout << "read wb error from HiddenLayer" << endl;

- return;

- }

- }

- dreadsize = m_pLogisticLayer->Readwb(szName, dcurpos);

- if (-1 != dreadsize)

- dcurpos += dreadsize;

- else

- {

- cout << "read wb error from sofmaxLayer" << endl;

- return;

- }

- }

- int* Mlp::GetHiddenSize()

- {

- return m_piHiddenLayerSize;

- }

- double* Mlp::GetHiddenOutputData()

- {

- assert(m_iHiddenLayerNum > 0);

- return m_ppHiddenLayer[m_iHiddenLayerNum-1]->m_pdOutdata;

- }

- int Mlp::GetHiddenNumber()

- {

- return m_iHiddenLayerNum;

- }

- //double **makeLabelSample(double **label_x)

- double **makeLabelSample(double label_x[][outnode])

- {

- double **pplabelSample;

- pplabelSample = new double*[m_iSamplenum];

- for (int i = 0; i < m_iSamplenum; ++i)

- {

- pplabelSample[i] = new double[outnode];

- }

- for (int i = 0; i < m_iSamplenum; ++i)

- {

- for (int j = 0; j < outnode; ++j)

- pplabelSample[i][j] = label_x[i][j];

- }

- return pplabelSample;

- }

- double **maken_train(double train_x[][innode])

- {

- double **ppn_train;

- ppn_train = new double*[m_iSamplenum];

- for (int i = 0; i < m_iSamplenum; ++i)

- {

- ppn_train[i] = new double[innode];

- }

- for (int i = 0; i < m_iSamplenum; ++i)

- {

- for (int j = 0; j < innode; ++j)

- ppn_train[i][j] = train_x[i][j];

- }

- return ppn_train;

- }

- void TestMlpMnist(const int m_iInput, const int ihidden, const int m_iOut)

- {

- const int ihiddenSize = 1;

- int phidden[ihiddenSize] = {ihidden};

- // construct LogisticRegression

- Mlp neural(m_iSamplenum, m_iInput, m_iOut, ihiddenSize, phidden);

- vector<double*> vecTrain, vecvalid;

- vector<WORD> vecValidlabel, vectrainlabel;

- LoadTestSampleFromJson(vecvalid, vecValidlabel, "../../data/mnist.json", m_iInput);

- LoadTestSampleFromJson(vecTrain, vectrainlabel, "../../data/mnisttrain.json", m_iInput);

- // test

- int itrainnum = vecTrain.size();

- neural.SetTrainNum(itrainnum);

- const int iepochs = 1;

- const double dLr = 0.1;

- neural.CalErrorRate(vecvalid, vecValidlabel);

- for (int i = 0; i < iepochs; ++i)

- {

- neural.TrainAllSample(vecTrain, vectrainlabel, dLr);

- neural.CalErrorRate(vecvalid, vecValidlabel);

- }

- for (vector<double*>::iterator cit = vecTrain.begin(); cit != vecTrain.end(); ++cit)

- {

- delete [](*cit);

- }

- for (vector<double*>::iterator cit = vecvalid.begin(); cit != vecvalid.end(); ++cit)

- {

- delete [](*cit);

- }

- }

- void TestMlpTheano(const int m_iInput, const int ihidden, const int m_iOut)

- {

- const int ihiddenSize = 1;

- int phidden[ihiddenSize] = {ihidden};

- // construct the MLP

- Mlp neural(m_iSamplenum, m_iInput, m_iOut, ihiddenSize, phidden);

- vector<double*> vecTrain, vecw;

- vector<double> vecb;

- vector<WORD> vecLabel;

- vector< vector<double*> > vvAllw;

- vector< vector<double> > vvAllb;

- const char *pcfilename = "../../data/theanomlp.json";

- vector<int> vecSecondDimOfWeigh;

- vecSecondDimOfWeigh.push_back(m_iInput);

- vecSecondDimOfWeigh.push_back(ihidden);

- LoadWeighFromJson(vvAllw, vvAllb, pcfilename, vecSecondDimOfWeigh);

- LoadTestSampleFromJson(vecTrain, vecLabel, "../../data/mnist_validall.json", m_iInput);

- cout << "loadwb ---------" << endl;

- int itrainnum = vecTrain.size();

- neural.SetTrainNum(itrainnum);

- neural.Setwb(vvAllw, vvAllb);

- cout << "Predict------------" << endl;

- neural.CalErrorRate(vecTrain, vecLabel);

- for (vector<double*>::iterator cit = vecTrain.begin(); cit != vecTrain.end(); ++cit)

- {

- delete [](*cit);

- }

- }

- void mlp()

- {

- // input samples

- double X[m_iSamplenum][innode]= {

- {0,0,0},{0,0,1},{0,1,0},{0,1,1},{1,0,0},{1,0,1},{1,1,0},{1,1,1}

- };

- double Y[m_iSamplenum][outnode]={

- {1, 0, 0, 0, 0, 0, 0, 0},

- {0, 1, 0, 0, 0, 0, 0, 0},

- {0, 0, 1, 0, 0, 0, 0, 0},

- {0, 0, 0, 1, 0, 0, 0, 0},

- {0, 0, 0, 0, 1, 0, 0, 0},

- {0, 0, 0, 0, 0, 1, 0, 0},

- {0, 0, 0, 0, 0, 0, 1, 0},

- {0, 0, 0, 0, 0, 0, 0, 1},

- };

- WORD pdLabel[outnode] = {0, 1, 2, 3, 4, 5, 6, 7};

- const int ihiddenSize = 2;

- int phidden[ihiddenSize] = {5, 5};

- //printArr(phidden, 1);

- Mlp neural(m_iSamplenum, innode, outnode, ihiddenSize, phidden);

- double **train_x, **ppdLabel;

- train_x = maken_train(X);

- //printArrDouble(train_x, m_iSamplenum, innode);

- ppdLabel = makeLabelSample(Y);

- for (int i = 0; i < 3500; ++i)

- {

- for (int j = 0; j < m_iSamplenum; ++j)

- {

- neural.Train(train_x[j], pdLabel[j], 0.1);

- }

- }

- cout<<"training complete..."<<endl;

- for (int i = 0; i < m_iSamplenum; ++i)

- neural.Predict(train_x[i]);

- // szName is the file that stores the weights

- // const char *szName = "mlp55new.wb";

- // neural.Writewb(szName);

- // Mlp neural2(m_iSamplenum, innode, outnode, ihiddenSize, phidden);

- // cout<<"Readwb start..."<<endl;

- // neural2.Readwb(szName);

- // cout<<"Readwb end..."<<endl;

- // cout << "----------after readwb________" << endl;

- // for (int i = 0; i < m_iSamplenum; ++i)

- // neural2.Forward_propagation(train_x[i]);

- for (int i = 0; i != m_iSamplenum; ++i)

- {

- delete []train_x[i];

- delete []ppdLabel[i];

- }

- delete []train_x;

- delete []ppdLabel;

- cout<<endl;

- }
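The test functions above free every sample with an explicit `delete []` loop. As a minimal sketch of an alternative, assuming the samples could be held in a `std::vector< std::vector<double> >` instead of raw arrays, the container can own the storage while the existing `vector<double*>` interfaces receive non-owning views. `SampleSet` and `MakeViews` are hypothetical names and are not part of the original code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical type: each sample owns its storage, so no delete[] loop is
// needed when the container goes out of scope.
typedef std::vector< std::vector<double> > SampleSet;

// Build raw-pointer views for an API that expects vector<double*>,
// such as the CalErrorRate / TrainAllSample signatures in this post.
// The views stay valid only while `samples` is alive and not resized.
std::vector<double*> MakeViews(SampleSet &samples)
{
    std::vector<double*> views;
    views.reserve(samples.size());
    for (std::size_t i = 0; i < samples.size(); ++i)
    {
        assert(!samples[i].empty());
        views.push_back(&samples[i][0]);
    }
    return views;
}
```

Such views must not be handed to a function that takes ownership of the pointers. `NeuralBase::Setwb`, for example, stores the pointers it receives and later frees them with `delete []`.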

hiddenLayer.h

- #ifndef HIDDENLAYER_H

- #define HIDDENLAYER_H

- #include "neuralbase.h"

- class HiddenLayer: public NeuralBase

- {

- public:

- HiddenLayer(int n_i, int n_o);

- ~HiddenLayer();

- double* Forward_propagation(double* input_data);

- void Back_propagation(double *pdInputData, double *pdNextLayerDelta,

- double** ppdnextLayerW, int iNextLayerOutNum, double dLr);

- };

- #endif

hiddenLayer.cpp

- #include <cmath>

- #include <cassert>

- #include <cstdlib>

- #include <ctime>

- #include <iostream>

- #include "hiddenLayer.h"

- #include "util.h"

- using namespace std;

- HiddenLayer::HiddenLayer(int n_i, int n_o): NeuralBase(n_i, n_o, 0)

- {

- }

- HiddenLayer::~HiddenLayer()

- {

- }

- /************************************************************************/

- /* Note:

- to reproduce the Theano test results, the hidden layer must use tanh as its activation;

- otherwise, when training the MLP with this code, use sigmoid */

- /************************************************************************/

- double* HiddenLayer::Forward_propagation(double* pdInputData)

- {

- NeuralBase::Forward_propagation(pdInputData);

- for(int i = 0; i < m_iOut; ++i)

- {

- // m_pdOutdata[i] = sigmoid(m_pdOutdata[i]);

- m_pdOutdata[i] = mytanh(m_pdOutdata[i]);

- }

- return m_pdOutdata;

- }

- void HiddenLayer::Back_propagation(double *pdInputData, double *pdNextLayerDelta,

- double** ppdnextLayerW, int iNextLayerOutNum, double dLr)

- {

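- /*

- pdInputData: input data of this layer

- pdNextLayerDelta: delta (residual) of the next layer, an array of size iNextLayerOutNum

- ppdnextLayerW: weights from this layer to the next layer

- iNextLayerOutNum: the next layer's n_out

- dLr: learning rate

- m_iSamplenum: total number of training samples

- */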

- // sigma must have as many elements as this layer has units; the code found online gets this wrong

- // (its author evidently never tested it)

- //double* sigma = new double[iNextLayerOutNum];

- double* sigma = new double[m_iOut];

- //double sigma[10];

- for(int i = 0; i < m_iOut; ++i)

- sigma[i] = 0.0;

- for(int i = 0; i < iNextLayerOutNum; ++i)

- {

- for(int j = 0; j < m_iOut; ++j)

- {

- sigma[j] += ppdnextLayerW[i][j] * pdNextLayerDelta[i];

- }

- }

- // compute this layer's delta; y * (1 - y) is the sigmoid derivative, so this matches the sigmoid activation only

- for(int i = 0; i < m_iOut; ++i)

- {

- m_pdDelta[i] = sigma[i] * m_pdOutdata[i] * (1 - m_pdOutdata[i]);

- }

- // update this layer's weights w and bias

- for(int i = 0; i < m_iOut; ++i)

- {

- for(int j = 0; j < m_iInput; ++j)

- {

- m_ppdW[i][j] += dLr * m_pdDelta[i] * pdInputData[j];

- }

- m_pdBias[i] += dLr * m_pdDelta[i];

- }

- delete[] sigma;

- }
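The back-propagation step multiplies by `y * (1 - y)`, which is the derivative of the sigmoid expressed through its output. That is why the post recommends sigmoid for training and tanh only for reproducing the Theano forward pass. As a hedged sketch, the two derivatives written in terms of the layer output look like this. The function names are illustrative and do not appear in the original code.

```cpp
#include <cassert>
#include <cmath>

// Derivative of the logistic sigmoid, in terms of its output y = sigmoid(x).
double SigmoidDerivFromOutput(double y)
{
    return y * (1.0 - y);
}

// Derivative of tanh, in terms of its output y = tanh(x).
double TanhDerivFromOutput(double y)
{
    return 1.0 - y * y;
}
```

Training with a tanh hidden layer would presumably need the second form in place of `m_pdOutdata[i] * (1 - m_pdOutdata[i])`; the original code does not do this.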

logisticRegression.h

- #ifndef LOGISTICREGRESSIONLAYER

- #define LOGISTICREGRESSIONLAYER

- #include "neuralbase.h"

- typedef unsigned short WORD;

- class LogisticRegression: public NeuralBase

- {

- public:

- LogisticRegression(int n_i, int i_o, int);

- ~LogisticRegression();

- double* Forward_propagation(double* input_data);

- void Softmax(double* x);

- void Train(double *pdTrain, WORD usLabel, double dLr);

- void SetOldWb(double ppdWeigh[][3], double arriBias[8]);

- int Predict(double *);

- void MakeLabels(int* piMax, double (*pplabels)[8]);

- };

- void Test_lr();

- void Testwb();

- void Test_theano(const int m_iInput, const int m_iOut);

- #endif

logisticRegression.cpp

- #include <cmath>

- #include <cassert>

- #include <iomanip>

- #include <ctime>

- #include <iostream>

- #include "logisticRegression.h"

- #include "util.h"

- using namespace std;

- LogisticRegression::LogisticRegression(int n_i, int n_o, int n_t): NeuralBase(n_i, n_o, n_t)

- {

- }

- LogisticRegression::~LogisticRegression()

- {

- }

- void LogisticRegression::Softmax(double* x)

- {

- double _max = x[0]; // seed with a real element so all-negative inputs are handled

- double _sum = 0.0;

- for(int i = 0; i < m_iOut; ++i)

- {

- if(_max < x[i])

- _max = x[i];

- }

- for(int i = 0; i < m_iOut; ++i)

- {

- x[i] = exp(x[i]-_max);

- _sum += x[i];

- }

- for(int i = 0; i < m_iOut; ++i)

- {

- x[i] /= _sum;

- }

- }

- double* LogisticRegression::Forward_propagation(double* pdinputdata)

- {

- NeuralBase::Forward_propagation(pdinputdata);

- /************************************************************************/

- /* debugging */

- /************************************************************************/

- //cout << "Forward_propagation from LogisticRegression" << endl;

- //PrintOutputData();

- //cout << "over\n";

- Softmax(m_pdOutdata);

- return m_pdOutdata;

- }

- int LogisticRegression::Predict(double *pdtest)

- {

- Forward_propagation(pdtest);

- /************************************************************************/

- /* for debugging */

- /************************************************************************/

- //PrintOutputData();

- int iResult = getMaxIndex(m_pdOutdata, m_iOut);

- return iResult;

- }

- void LogisticRegression::Train(double *pdTrain, WORD usLabel, double dLr)

- {

- Forward_propagation(pdTrain);

- double *pdLabel = new double[m_iOut];

- MakeOneLabel(usLabel, pdLabel);

- Back_propagation(pdTrain, pdLabel, dLr);

- delete []pdLabel;

- }

- //double LogisticRegression::CalErrorRate(const vector<double*> &vecvalid, const vector<WORD> &vecValidlabel)

- //{

- // int iErrorNumber = 0, iValidNumber = vecValidlabel.size();

- // for (int i = 0; i < iValidNumber; ++i)

- // {

- // int iResult = Predict(vecvalid[i]);

- // if (iResult != vecValidlabel[i])

- // {

- // ++iErrorNumber;

- // }

- // }

- // cout << "the num of error is " << iErrorNumber << endl;

- // double dErrorRate = (double)iErrorNumber / iValidNumber;

- // cout << "the error rate of Train sample by softmax is " << setprecision(10) << dErrorRate * 100 << "%" << endl;

- // return dErrorRate;

- //}

- void LogisticRegression::SetOldWb(double ppdWeigh[][3], double arriBias[8])

- {

- for (int i = 0; i < m_iOut; ++i)

- {

- for (int j = 0; j < m_iInput; ++j)

- m_ppdW[i][j] = ppdWeigh[i][j];

- m_pdBias[i] = arriBias[i];

- }

- cout << "Setwb----------" << endl;

- printArrDouble(m_ppdW, m_iOut, m_iInput);

- printArr(m_pdBias, m_iOut);

- }

- //void LogisticRegression::TrainAllSample(const vector<double*> &vecTrain, const vector<WORD> &vectrainlabel, double dLr)

- //{

- // for (int j = 0; j < m_iSamplenum; ++j)

- // {

- // Train(vecTrain[j], vectrainlabel[j], dLr);

- // }

- //}

- void LogisticRegression::MakeLabels(int* piMax, double (*pplabels)[8])

- {

- for (int i = 0; i < m_iSamplenum; ++i)

- {

- for (int j = 0; j < m_iOut; ++j)

- pplabels[i][j] = 0;

- int k = piMax[i];

- pplabels[i][k] = 1.0;

- }

- }

- void Test_theano(const int m_iInput, const int m_iOut)

- {

- // construct LogisticRegression

- LogisticRegression classifier(m_iInput, m_iOut, 0);

- vector<double*> vecTrain, vecvalid, vecw;

- vector<double> vecb;

- vector<WORD> vecValidlabel, vectrainlabel;

- LoadTestSampleFromJson(vecvalid, vecValidlabel, "../.../../data/mnist.json", m_iInput);

- LoadTestSampleFromJson(vecTrain, vectrainlabel, "../.../../data/mnisttrain.json", m_iInput);

- // test

- int itrainnum = vecTrain.size();

- classifier.m_iSamplenum = itrainnum;

- const int iepochs = 5;

- const double dLr = 0.1;

- for (int i = 0; i < iepochs; ++i)

- {

- classifier.TrainAllSample(vecTrain, vectrainlabel, dLr);

- if (i % 2 == 0)

- {

- cout << "Predict------------" << i + 1 << endl;

- classifier.CalErrorRate(vecvalid, vecValidlabel);

- }

- }

- for (vector<double*>::iterator cit = vecTrain.begin(); cit != vecTrain.end(); ++cit)

- {

- delete [](*cit);

- }

- for (vector<double*>::iterator cit = vecvalid.begin(); cit != vecvalid.end(); ++cit)

- {

- delete [](*cit);

- }

- }

- void Test_lr()

- {

- srand(0);

- double learning_rate = 0.1;

- int n_epochs = 200;

- int test_N = 2;

- const int trainNum = 8, m_iInput = 3, m_iOut = 8;

- //int m_iOut = 2;

- double train_X[trainNum][m_iInput] = {

- {1, 1, 1},

- {1, 1, 0},

- {1, 0, 1},

- {1, 0, 0},

- {0, 1, 1},

- {0, 1, 0},

- {0, 0, 1},

- {0, 0, 0}

- };

- // sziMax stores the index of the maximum, i.e. the class label

- int sziMax[trainNum];

- for (int i = 0; i < trainNum; ++i)

- sziMax[i] = trainNum - i - 1;

- // construct LogisticRegression

- LogisticRegression classifier(m_iInput, m_iOut, trainNum);

- // Train online

- for(int epoch=0; epoch<n_epochs; epoch++) {

- for(int i=0; i<trainNum; i++) {

- //classifier.trainEfficient(train_X[i], train_Y[i], learning_rate);

- classifier.Train(train_X[i], sziMax[i], learning_rate);

- }

- }

- const char *pcfile = "test.wb";

- classifier.Writewb(pcfile);

- LogisticRegression logistic(m_iInput, m_iOut, trainNum);

- logistic.Readwb(pcfile, 0);

- // test data

- double test_X[2][m_iInput] = {

- {1, 0, 1},

- {0, 0, 1}

- };

- // test

- cout << "before Readwb ---------" << endl;

- for(int i=0; i<test_N; i++) {

- classifier.Predict(test_X[i]);

- cout << endl;

- }

- cout << "after Readwb ---------" << endl;

- for(int i=0; i<trainNum; i++) {

- logistic.Predict(train_X[i]);

- cout << endl;

- }

- cout << "*********\n";

- }

- void Testwb()

- {

- // int test_N = 2;

- const int trainNum = 8, m_iInput = 3, m_iOut = 8;

- //int m_iOut = 2;

- double train_X[trainNum][m_iInput] = {

- {1, 1, 1},

- {1, 1, 0},

- {1, 0, 1},

- {1, 0, 0},

- {0, 1, 1},

- {0, 1, 0},

- {0, 0, 1},

- {0, 0, 0}

- };

- double arriBias[m_iOut] = {1, 2, 3, 3, 3, 3, 2, 1};

- // construct LogisticRegression

- LogisticRegression classifier(m_iInput, m_iOut, trainNum);

- classifier.SetOldWb(train_X, arriBias);

- const char *pcfile = "test.wb";

- classifier.Writewb(pcfile);

- LogisticRegression logistic(m_iInput, m_iOut, trainNum);

- logistic.Readwb(pcfile, 0);

- }
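A softmax that subtracts the true maximum of its input stays well-defined even when every score is very negative, whereas a running maximum seeded with a constant such as 0.0 does not. The following is a minimal standalone sketch of that idea. It uses `std::vector` instead of the raw arrays of the original class, and `StableSoftmax` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// In-place softmax. Subtracting the true maximum keeps every exponent <= 0,
// so exp() cannot overflow, and at least one term equals exp(0) = 1,
// so the sum cannot underflow to zero.
void StableSoftmax(std::vector<double> &x)
{
    assert(!x.empty());
    const double maxValue = *std::max_element(x.begin(), x.end());
    double sum = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i)
    {
        x[i] = std::exp(x[i] - maxValue);
        sum += x[i];
    }
    for (std::size_t i = 0; i < x.size(); ++i)
        x[i] /= sum;
}
```

For scores such as -1000 and -1001, every `exp(x[i] - 0.0)` would underflow to zero and the division would produce NaN. Shifting by the real maximum avoids this.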

neuralbase.h

- #ifndef NEURALBASE_H

- #define NEURALBASE_H

- #include <vector>

- using std::vector;

- typedef unsigned short WORD;

- class NeuralBase

- {

- public:

- NeuralBase(int , int , int);

- virtual ~NeuralBase();

- virtual double* Forward_propagation(double* );

- virtual void Back_propagation(double* , double* , double );

- virtual void Train(double *x, WORD y, double dLr);

- virtual int Predict(double *);

- void Callbackwb();

- void MakeOneLabel(int iMax, double *pdLabel);

- void TrainAllSample(const vector<double*> &vecTrain, const vector<WORD> &vectrainlabel, double dLr);

- double CalErrorRate(const vector<double*> &vecvalid, const vector<WORD> &vecValidlabel);

- void Printwb();

- void Writewb(const char *szName);

- long Readwb(const char *szName, long);

- void Setwb(vector<double*> &vpdw, vector<double> &vdb);

- void PrintOutputData();

- int m_iInput;

- int m_iOut;

- int m_iSamplenum;

- double** m_ppdW;

- double* m_pdBias;

- // output of this layer's forward pass; for the last layer this is the final prediction

- double* m_pdOutdata;

- // delta needed during back-propagation

- double* m_pdDelta;

- private:

- void _callbackwb();

- };

- #endif // NEURALBASE_H

neuralbase.cpp

- #include "neuralbase.h"

- #include <cmath>

- #include <cassert>

- #include <ctime>

- #include <iomanip>

- #include <iostream>

- #include "util.h"

- using namespace std;

- NeuralBase::NeuralBase(int n_i, int n_o, int n_t):m_iInput(n_i), m_iOut(n_o), m_iSamplenum(n_t)

- {

- m_ppdW = new double* [m_iOut];

- for(int i = 0; i < m_iOut; ++i)

- {

- m_ppdW[i] = new double [m_iInput];

- }

- m_pdBias = new double [m_iOut];

- double a = 1.0 / m_iInput;

- srand((unsigned)time(NULL));

- for(int i = 0; i < m_iOut; ++i)

- {

- for(int j = 0; j < m_iInput; ++j)

- m_ppdW[i][j] = uniform(-a, a);

- m_pdBias[i] = uniform(-a, a);

- }

- m_pdDelta = new double [m_iOut];

- m_pdOutdata = new double [m_iOut];

- }

- NeuralBase::~NeuralBase()

- {

- Callbackwb();

- delete[] m_pdOutdata;

- delete[] m_pdDelta;

- }

- void NeuralBase::Callbackwb()

- {

- _callbackwb();

- }

- double NeuralBase::CalErrorRate(const vector<double *> &vecvalid, const vector<WORD> &vecValidlabel)

- {

- int iErrorNumber = 0, iValidNumber = vecValidlabel.size();

- for (int i = 0; i < iValidNumber; ++i)

- {

- int iResult = Predict(vecvalid[i]);

- if (iResult != vecValidlabel[i])

- {

- ++iErrorNumber;

- }

- }

- cout << "the num of error is " << iErrorNumber << endl;

- double dErrorRate = (double)iErrorNumber / iValidNumber;

- cout << "the error rate of Train sample by softmax is " << setprecision(10) << dErrorRate * 100 << "%" << endl;

- return dErrorRate;

- }

- int NeuralBase::Predict(double *)

- {

- cout << "NeuralBase::Predict(double *)" << endl;

- return 0;

- }

- void NeuralBase::_callbackwb()

- {

- for(int i=0; i < m_iOut; i++)

- delete []m_ppdW[i];

- delete[] m_ppdW;

- delete[] m_pdBias;

- }

- void NeuralBase::Printwb()

- {

- cout << "'****m_ppdW****\n";

- for(int i = 0; i < m_iOut; ++i)

- {

- for(int j = 0; j < m_iInput; ++j)

- cout << m_ppdW[i][j] << ' ';

- cout << endl;

- }

- cout << "'****m_pdBias****\n";

- for(int i = 0; i < m_iOut; ++i)

- {

- cout << m_pdBias[i] << ' ';

- }

- cout << endl;

- cout << "'****output****\n";

- for(int i = 0; i < m_iOut; ++i)

- {

- cout << m_pdOutdata[i] << ' ';

- }

- cout << endl;

- }

- double* NeuralBase::Forward_propagation(double* input_data)

- {

- for(int i = 0; i < m_iOut; ++i)

- {

- m_pdOutdata[i] = 0.0;

- for(int j = 0; j < m_iInput; ++j)

- {

- m_pdOutdata[i] += m_ppdW[i][j]*input_data[j];

- }

- m_pdOutdata[i] += m_pdBias[i];

- }

- return m_pdOutdata;

- }

- void NeuralBase::Back_propagation(double* input_data, double* pdlabel, double dLr)

- {

- for(int i = 0; i < m_iOut; ++i)

- {

- m_pdDelta[i] = pdlabel[i] - m_pdOutdata[i] ;

- for(int j = 0; j < m_iInput; ++j)

- {

- m_ppdW[i][j] += dLr * m_pdDelta[i] * input_data[j] / m_iSamplenum;

- }

- m_pdBias[i] += dLr * m_pdDelta[i] / m_iSamplenum;

- }

- }

- void NeuralBase::MakeOneLabel(int imax, double *pdlabel)

- {

- for (int j = 0; j < m_iOut; ++j)

- pdlabel[j] = 0;

- pdlabel[imax] = 1.0;

- }

- void NeuralBase::Writewb(const char *szName)

- {

- savewb(szName, m_ppdW, m_pdBias, m_iOut, m_iInput);

- }

- long NeuralBase::Readwb(const char *szName, long dstartpos)

- {

- return loadwb(szName, m_ppdW, m_pdBias, m_iOut, m_iInput, dstartpos);

- }

- void NeuralBase::Setwb(vector<double*> &vpdw, vector<double> &vdb)

- {

- assert(vpdw.size() == (DWORD)m_iOut);

- for (int i = 0; i < m_iOut; ++i)

- {

- delete []m_ppdW[i];

- m_ppdW[i] = vpdw[i];

- m_pdBias[i] = vdb[i];

- }

- }

- void NeuralBase::TrainAllSample(const vector<double *> &vecTrain, const vector<WORD> &vectrainlabel, double dLr)

- {

- for (int j = 0; j < m_iSamplenum; ++j)

- {

- Train(vecTrain[j], vectrainlabel[j], dLr);

- }

- }

- void NeuralBase::Train(double *x, WORD y, double dLr)

- {

- (void)x;

- (void)y;

- (void)dLr;

- cout << "NeuralBase::Train(double *x, WORD y, double dLr)" << endl;

- }

- void NeuralBase::PrintOutputData()

- {

- for (int i = 0; i < m_iOut; ++i)

- {

- cout << m_pdOutdata[i] << ' ';

- }

- cout << endl;

- }
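`NeuralBase::Forward_propagation` computes an affine map with the weights stored as `[out][in]`. Theano stores a dense layer's `W` as `[in][out]`, which is why the post swaps rows and columns of two-dimensional weights when exporting them. As a small worked sketch of the same computation on `std::vector` data, with `AffineForward` as an illustrative name that is not in the original code:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// y[i] = sum_j W[i][j] * x[j] + b[i], with W stored as [out][in],
// the same orientation as m_ppdW in NeuralBase.
std::vector<double> AffineForward(const std::vector< std::vector<double> > &W,
                                  const std::vector<double> &b,
                                  const std::vector<double> &x)
{
    assert(W.size() == b.size());
    std::vector<double> y(W.size(), 0.0);
    for (std::size_t i = 0; i < W.size(); ++i)
    {
        assert(W[i].size() == x.size());
        for (std::size_t j = 0; j < x.size(); ++j)
            y[i] += W[i][j] * x[j];
        y[i] += b[i];
    }
    return y;
}
```

With W = {{1, 2, 3}, {0, -1, 1}}, b = {0.5, -0.5} and x = {1, 0, 2}, the result is y = {7.5, 1.5}.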

util.h

- #ifndef UTIL_H

- #define UTIL_H

- #include <iostream>

- #include <cstdio>

- #include <cstdlib>

- #include <ctime>

- #include <vector>

- using namespace std;

- typedef unsigned char BYTE;

- typedef unsigned short WORD;

- typedef unsigned int DWORD;

- double sigmoid(double x);

- double mytanh(double dx);

- typedef struct stShapeWb

- {

- stShapeWb(int w, int h):width(w), height(h){}

- int width;

- int height;

- }ShapeWb_S;

- void MakeOneLabel(int iMax, double *pdLabel, int m_iOut);

- double uniform(double _min, double _max);

- //void printArr(T *parr, int num);

- //void printArrDouble(double **pparr, int row, int col);

- void initArr(double *parr, int num);

- int getMaxIndex(double *pdarr, int num);

- void Printivec(const vector<int> &ivec);

- void savewb(const char *szName, double **m_ppdW, double *m_pdBias,

- int irow, int icol);

- long loadwb(const char *szName, double **m_ppdW, double *m_pdBias,

- int irow, int icol, long dstartpos);

- void TestLoadJson(const char *pcfilename);

- bool LoadvtFromJson(vector<double*> &vecTrain, vector<WORD> &vecLabel, const char *filename, const int m_iInput);

- bool LoadwbFromJson(vector<double*> &vecTrain, vector<double> &vecLabel, const char *filename, const int m_iInput);

- bool LoadTestSampleFromJson(vector<double*> &vecTrain, vector<WORD> &vecLabel, const char *filename, const int m_iInput);

- bool LoadwbByByte(vector<double*> &vecTrain, vector<double> &vecLabel, const char *filename, const int m_iInput);

- bool LoadallwbByByte(vector< vector<double*> > &vvAllw, vector< vector<double> > &vvAllb, const char *filename,

- const int m_iInput, const int ihidden, const int m_iOut);

- bool LoadWeighFromJson(vector< vector<double*> > &vvAllw, vector< vector<double> > &vvAllb,

- const char *filename, const vector<int> &vecSecondDimOfWeigh);

- void MakeCnnSample(double arr[2][64], double *pdImage, int iImageWidth, int iNumOfImage );

- void MakeCnnWeigh(double *, int iNumOfKernel);

- template <typename T>

- void printArr(T *parr, int num)

- {

- cout << "****printArr****" << endl;

- for (int i = 0; i < num; ++i)

- cout << parr[i] << ' ';

- cout << endl;

- }

- template <typename T>

- void printArrDouble(T **pparr, int row, int col)

- {

- cout << "****printArrDouble****" << endl;

- for (int i = 0; i < row; ++i)

- {

- for (int j = 0; j < col; ++j)

- {

- cout << pparr[i][j] << ' ';

- }

- cout << endl;

- }

- }

- #endif
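The header declares `getMaxIndex`, and the post's introduction describes a long-lived bug caused by seeding a running maximum with 0 on the assumption that all values are positive. A minimal sketch of an argmax that avoids that class of bug seeds the maximum from the first element instead. `ArgMax` is a hypothetical name, and the sketch assumes a non-empty input.

```cpp
#include <cassert>

// Index of the largest element. The running maximum is seeded from the
// first element rather than from a constant such as 0 or -1, so inputs
// that are entirely negative are handled correctly.
// On ties the lowest index wins.
int ArgMax(const double *values, int count)
{
    assert(values != 0 && count > 0);
    int best = 0;
    for (int i = 1; i < count; ++i)
    {
        if (values[i] > values[best])
            best = i;
    }
    return best;
}
```

The same seeding rule applies to the 2*2 max-pooling step: the window's first pixel, not 0, should be the initial maximum.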

util.cpp

- #include "util.h"

- #include <iostream>

- #include <ctime>

- #include <cmath>

- #include <cassert>

- #include <fstream>

- #include <cstring>

- #include <stack>

- #include <iomanip>

- using namespace std;

- int getMaxIndex(double *pdarr, int num)

- {

- double dmax = -1;

- int iMax = -1;

- for(int i = 0; i < num; ++i)

- {

- if (iMax < 0 || pdarr[i] > dmax) // always accept the first element, so values <= -1 can still win

- {

- dmax = pdarr[i];

- iMax = i;

- }

- }

- return iMax;

- }

- double sigmoid(double dx)

- {

- return 1.0/(1.0+exp(-dx));

- }

- double mytanh(double dx)

- {

- // std::tanh avoids the inf / inf = NaN that exp(2 * dx) yields for large dx

- return tanh(dx);

- }

- double uniform(double _min, double _max)

- {

- return rand()/(RAND_MAX + 1.0) * (_max - _min) + _min;

- }

- void initArr(double *parr, int num)

- {

- for (int i = 0; i < num; ++i)

- parr[i] = 0.0;

- }

- void savewb(const char *szName, double **m_ppdW, double *m_pdBias,

- int irow, int icol)

- {

- FILE *pf;

- if( (pf = fopen(szName, "ab" )) == NULL )

- {

- printf( "File could not be opened " );

- return;

- }

- int isizeofelem = sizeof(double);

- for (int i = 0; i < irow; ++i)

- {

- if (fwrite((const void*)m_ppdW[i], isizeofelem, icol, pf) != icol)

- {

- fputs ("Writing m_ppdW error",stderr);

- fclose(pf);

- return;

- }

- }

- if (fwrite((const void*)m_pdBias, isizeofelem, irow, pf) != irow)

- {

- fputs ("Writing m_pdBias error",stderr);

- fclose(pf);

- return;

- }

- fclose(pf);

- }

- long loadwb(const char *szName, double **m_ppdW, double *m_pdBias,

- int irow, int icol, long dstartpos)

- {

- FILE *pf;

- long dtotalbyte = 0, dreadsize;

- if( (pf = fopen(szName, "rb" )) == NULL )

- {

- printf( "File could not be opened " );

- return -1;

- }

- // seek the file pointer to the requested start offset

- fseek(pf, dstartpos , SEEK_SET);

- int isizeofelem = sizeof(double);

- for (int i = 0; i < irow; ++i)

- {

- dreadsize = fread((void*)m_ppdW[i], isizeofelem, icol, pf);

- if (dreadsize != icol)

- {

- fputs ("Reading m_ppdW error",stderr);

- fclose(pf);

- return -1;

- }

- // add every successful read to dtotalbyte, which is returned at the end

- dtotalbyte += dreadsize;

- }

- dreadsize = fread(m_pdBias, isizeofelem, irow, pf);

- if (dreadsize != irow)

- {

- fputs ("Reading m_pdBias error",stderr);

- fclose(pf);

- return -1;

- }

- dtotalbyte += dreadsize;

- dtotalbyte *= isizeofelem;

- fclose(pf);

- return dtotalbyte;

- }

- void Printivec(const vector<int> &ivec)

- {

- for (vector<int>::const_iterator it = ivec.begin(); it != ivec.end(); ++it)

- {

- cout << *it << ' ';

- }

- cout << endl;

- }

- void TestLoadJson(const char *pcfilename)

- {

- vector<double *> vpdw;

- vector<double> vdb;

- vector< vector<double*> > vvAllw;

- vector< vector<double> > vvAllb;

- int m_iInput = 28 * 28, ihidden = 500, m_iOut = 10;

- LoadallwbByByte(vvAllw, vvAllb, pcfilename, m_iInput, ihidden, m_iOut );

- }

- //read vt from mnist, format is [[[], [],..., []],[1, 3, 5,..., 7]]

- bool LoadvtFromJson(vector<double*> &vecTrain, vector<WORD> &vecLabel, const char *filename, const int m_iInput)

- {

- cout << "loadvtFromJson" << endl;

- const int ciStackSize = 10;

- const int ciFeaturesize = m_iInput;

- int arriStack[ciStackSize], iTop = -1;

- ifstream ifs;

- ifs.open(filename, ios::in);

- assert(ifs.is_open());

- BYTE ucRead, ucLeftbrace, ucRightbrace, ucComma, ucSpace;

- ucLeftbrace = '[';

- ucRightbrace = ']';

- ucComma = ',';

- ucSpace = '0';

- ifs >> ucRead;

- assert(ucRead == ucLeftbrace);

- // the stack holds only left brackets: 1 marks a pushed bracket, 0 a cleared slot

- arriStack[++iTop] = 1;

- // start of the training samples

- ifs >> ucRead;

- assert(ucRead == ucLeftbrace);

- arriStack[++iTop] = 1;//iTop is 1

- int iIndex;

- bool isdigit = false;

- double dread, *pdvt = NULL;

- //load vt sample

- while (iTop > 0)

- {

- if (isdigit == false)

- {

- ifs >> ucRead;

- isdigit = true;

- if (ucRead == ucComma)

- {

- //next char is space or leftbrace

- // ifs >> ucRead;

- isdigit = false;

- continue;

- }

- if (ucRead == ucSpace)

- {

- //if pdvt is null, next char is leftbrace;

- //else next char is double value

- if (pdvt == NULL)

- isdigit = false;

- continue;

- }

- if (ucRead == ucLeftbrace)

- {

- pdvt = new double[ciFeaturesize];

- memset(pdvt, 0, ciFeaturesize * sizeof(double));

- // iIndex is the index into the current array

- iIndex = 0;

- arriStack[++iTop] = 1;

- continue;

- }

- if (ucRead == ucRightbrace)

- {

- if (pdvt != NULL)

- {

- assert(iIndex == ciFeaturesize);

- vecTrain.push_back(pdvt);

- pdvt = NULL;

- }

- isdigit = false;

- arriStack[iTop--] = 0;

- continue;

- }

- }

- else

- {

- ifs >> dread;

- pdvt[iIndex++] = dread;

- isdigit = false;

- }

- };

- //next char is a comma

- ifs >> ucRead;

- assert(ucRead == ucComma);

- cout << vecTrain.size() << endl;

- // read the labels

- WORD usread;

- isdigit = false;

- while (iTop > -1 && ifs.eof() == false)

- {

- if (isdigit == false)

- {

- ifs >> ucRead;

- isdigit = true;

- if (ucRead == ucComma)

- {

- //next char is space or leftbrace

- // ifs >> ucRead;

- // isdigit = false;

- continue;

- }

- if (ucRead == ucSpace)

- {

- //if pdvt is null, next char is leftbrace;

- //else next char is double value

- if (pdvt == NULL)

- isdigit = false;

- continue;

- }

- if (ucRead == ucLeftbrace)

- {

- arriStack[++iTop] = 1;

- continue;

- }

- // the character after this right bracket is another right bracket (the last character)

- if (ucRead == ucRightbrace)

- {

- isdigit = false;

- arriStack[iTop--] = 0;

- continue;

- }

- }

- else

- {

- ifs >> usread;

- vecLabel.push_back(usread);

- isdigit = false;

- }

- };

- assert(vecLabel.size() == vecTrain.size());

- assert(iTop == -1);

- ifs.close();

- return true;

- }

- bool testjsonfloat(const char *filename)

- {

- vector<double> vecTrain;

- cout << "testjsondouble" << endl;

- const int ciStackSize = 10;

- int arriStack[ciStackSize], iTop = -1;

- ifstream ifs;

- ifs.open(filename, ios::in);

- assert(ifs.is_open());

- BYTE ucRead, ucLeftbrace, ucRightbrace, ucComma;

- ucLeftbrace = '[';

- ucRightbrace = ']';

- ucComma = ',';

- ifs >> ucRead;

- assert(ucRead == ucLeftbrace);

- // the stack holds only left brackets: 1 marks a pushed bracket, 0 a cleared slot

- arriStack[++iTop] = 1;

- // start of the training samples

- ifs >> ucRead;

- assert(ucRead == ucLeftbrace);

- arriStack[++iTop] = 1;//iTop is 1

- double fread;

- bool isdigit = false;

- while (iTop > -1)

- {

- if (isdigit == false)

- {

- ifs >> ucRead;

- isdigit = true;

- if (ucRead == ucComma)

- {

- //next char is space or leftbrace

- // ifs >> ucRead;

- isdigit = false;

- continue;

- }

- if (ucRead == ' ')

- continue;

- if (ucRead == ucLeftbrace)

- {

- arriStack[++iTop] = 1;

- continue;

- }

- if (ucRead == ucRightbrace)

- {

- isdigit = false;

- // the character after this right bracket is another right bracket (the last character)

- arriStack[iTop--] = 0;

- continue;

- }

- }

- else

- {

- ifs >> fread;

- vecTrain.push_back(fread);

- isdigit = false;

- }

- }

- ifs.close();

- return true;

- }

- bool LoadwbFromJson(vector<double*> &vecTrain, vector<double> &vecLabel, const char *filename, const int m_iInput)

- {

- cout << "LoadwbFromJson" << endl;

- const int ciStackSize = 10;

- const int ciFeaturesize = m_iInput;

- int arriStack[ciStackSize], iTop = -1;

- ifstream ifs;

- ifs.open(filename, ios::in);

- assert(ifs.is_open());

- BYTE ucRead, ucLeftbrace, ucRightbrace, ucComma, ucSpace;

- ucLeftbrace = '[';

- ucRightbrace = ']';

- ucComma = ',';

- ucSpace = '0';

- ifs >> ucRead;

- assert(ucRead == ucLeftbrace);

- // the stack holds only left brackets: 1 marks a pushed bracket, 0 a cleared slot

- arriStack[++iTop] = 1;

- // start of the weight rows

- ifs >> ucRead;

- assert(ucRead == ucLeftbrace);

- arriStack[++iTop] = 1;//iTop is 1

- int iIndex;

- bool isdigit = false;

- double dread, *pdvt = NULL;

- //load vt sample

- while (iTop > 0)

- {

- if (isdigit == false)

- {

- ifs >> ucRead;

- isdigit = true;

- if (ucRead == ucComma)

- {

- //next char is space or leftbrace

- // ifs >> ucRead;

- isdigit = false;

- continue;

- }

- if (ucRead == ucSpace)

- {

- //if pdvt is null, next char is leftbrace;

- //else next char is double value

- if (pdvt == NULL)

- isdigit = false;

- continue;

- }

- if (ucRead == ucLeftbrace)

- {

- pdvt = new double[ciFeaturesize];

- memset(pdvt, 0, ciFeaturesize * sizeof(double));

- // iIndex is the index into the current array

- iIndex = 0;

- arriStack[++iTop] = 1;

- continue;

- }

- if (ucRead == ucRightbrace)

- {

- if (pdvt != NULL)

- {

- assert(iIndex == ciFeaturesize);

- vecTrain.push_back(pdvt);

- pdvt = NULL;

- }

- isdigit = false;

- arriStack[iTop--] = 0;

- continue;

- }

- }

- else

- {

- ifs >> dread;

- pdvt[iIndex++] = dread;

- isdigit = false;

- }

- };

- //next char is a comma

- ifs >> ucRead;

- assert(ucRead == ucComma);

- cout << vecTrain.size() << endl;

- // read the second list (the bias values)

- double usread;

- isdigit = false;

- while (iTop > -1 && ifs.eof() == false)

- {

- if (isdigit == false)

- {

- ifs >> ucRead;

- isdigit = true;

- if (ucRead == ucComma)

- {

- //next char is space or leftbrace

- // ifs >> ucRead;

- // isdigit = false;

- continue;

- }

- if (ucRead == ucSpace)

- {

- //if pdvt is null, next char is leftbrace;

- //else next char is double value

- if (pdvt == NULL)

- isdigit = false;

- continue;

- }

- if (ucRead == ucLeftbrace)

- {

- arriStack[++iTop] = 1;

- continue;

- }

- // the character after this right bracket is another right bracket (the last character)

- if (ucRead == ucRightbrace)

- {

- isdigit = false;

- arriStack[iTop--] = 0;

- continue;

- }

- }

- else

- {

- ifs >> usread;

- vecLabel.push_back(usread);

- isdigit = false;

- }

- };

- assert(vecLabel.size() == vecTrain.size());

- assert(iTop == -1);

- ifs.close();

- return true;

- }

- bool vec2double(vector<BYTE> &vecDigit, double &dvalue)

- {

- if (vecDigit.empty())

- return false;

- int ivecsize = vecDigit.size();

- const int iMaxlen = 50;

- char szdigit[iMaxlen];

- assert(iMaxlen > ivecsize);

- memset(szdigit, 0, iMaxlen);

- int i;

- for (i = 0; i < ivecsize; ++i)

- szdigit[i] = vecDigit[i];

- szdigit[i++] = '\0';

- vecDigit.clear();

- dvalue = atof(szdigit);

- return true;

- }

- bool vec2short(vector<BYTE> &vecDigit, WORD &usvalue)

- {

- if (vecDigit.empty())

- return false;

- int ivecsize = vecDigit.size();

- const int iMaxlen = 50;

- char szdigit[iMaxlen];

- assert(iMaxlen > ivecsize);

- memset(szdigit, 0, iMaxlen);

- int i;

- for (i = 0; i < ivecsize; ++i)

- szdigit[i] = vecDigit[i];

- szdigit[i++] = '\0';

- vecDigit.clear();

- usvalue = atoi(szdigit);

- return true;

- }

- void readDigitFromJson(ifstream &ifs, vector<double*> &vecTrain, vector<WORD> &vecLabel,

- vector<BYTE> &vecDigit, double *&pdvt, int &iIndex,

- const int ciFeaturesize, int *arrStack, int &iTop, bool bFirstlist)

- {

- BYTE ucRead;

- WORD usvalue;

- double dvalue;

- const BYTE ucLeftbrace = '[', ucRightbrace = ']', ucComma = ',', ucSpace = ' ';

- ifs.read((char*)(&ucRead), 1);

- switch (ucRead)

- {

- case ucLeftbrace:

- {

- if (bFirstlist)

- {

- pdvt = new double[ciFeaturesize];

- memset(pdvt, 0, ciFeaturesize * sizeof(double));

- iIndex = 0;

- }

- arrStack[++iTop] = 1;

- break;

- }

- case ucComma:

- {

- //next char is space or leftbrace

- if (bFirstlist)

- {

- if (vecDigit.empty() == false)

- {

- vec2double(vecDigit, dvalue);

- pdvt[iIndex++] = dvalue;

- }

- }

- else

- {

- if(vec2short(vecDigit, usvalue))

- vecLabel.push_back(usvalue);

- }

- break;

- }

- case ucSpace:

- break;

- case ucRightbrace:

- {

- if (bFirstlist)

- {

- if (pdvt != NULL)

- {

- vec2double(vecDigit, dvalue);

- pdvt[iIndex++] = dvalue;

- vecTrain.push_back(pdvt);

- pdvt = NULL;

- }

- assert(iIndex == ciFeaturesize);

- }

- else

- {

- if(vec2short(vecDigit, usvalue))

- vecLabel.push_back(usvalue);

- }

- arrStack[iTop--] = 0;

- break;

- }

- default:

- {

- vecDigit.push_back(ucRead);

- break;

- }

- }

- }

- void readDoubleFromJson(ifstream &ifs, vector<double*> &vecTrain, vector<double> &vecLabel,

- vector<BYTE> &vecDigit, double *&pdvt, int &iIndex,

- const int ciFeaturesize, int *arrStack, int &iTop, bool bFirstlist)

- {

- BYTE ucRead;

- double dvalue;

- const BYTE ucLeftbrace = '[', ucRightbrace = ']', ucComma = ',', ucSpace = ' ';

- ifs.read((char*)(&ucRead), 1);

- switch (ucRead)

- {

- case ucLeftbrace:

- {

- if (bFirstlist)

- {

- pdvt = new double[ciFeaturesize];

- memset(pdvt, 0, ciFeaturesize * sizeof(double));

- iIndex = 0;

- }

- arrStack[++iTop] = 1;

- break;

- }

- case ucComma:

- {

- //next char is space or leftbrace

- if (bFirstlist)

- {

- if (vecDigit.empty() == false)

- {

- vec2double(vecDigit, dvalue);

- pdvt[iIndex++] = dvalue;

- }

- }

- else

- {

- if(vec2double(vecDigit, dvalue))

- vecLabel.push_back(dvalue);

- }

- break;

- }

- case ucSpace:

- break;

- case ucRightbrace:

- {

- if (bFirstlist)

- {

- if (pdvt != NULL)

- {

- vec2double(vecDigit, dvalue);

- pdvt[iIndex++] = dvalue;

- vecTrain.push_back(pdvt);

- pdvt = NULL;

- }

- assert(iIndex == ciFeaturesize);

- }

- else

- {

- if(vec2double(vecDigit, dvalue))

- vecLabel.push_back(dvalue);

- }

- arrStack[iTop--] = 0;

- break;

- }

- default:

- {

- vecDigit.push_back(ucRead);

- break;

- }

- }

- }

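- // Loads the weights and biases of each layer from a JSON file, one byte at a time. The file is expected to look like [[w, b], [w, b], ...], where each w is a list of rows and each b is a flat list.

- bool LoadallwbByByte(vector< vector<double*> > &vvAllw, vector< vector<double> > &vvAllb, const char *filename,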

- const int m_iInput, const int ihidden, const int m_iOut)

- {

- cout << "LoadallwbByByte" << endl;

- const int szistsize = 10;

- int ciFeaturesize = m_iInput;

- const BYTE ucLeftbrace = '[', ucRightbrace = ']', ucComma = ',', ucSpace = ' ';

- int arrStack[szistsize], iTop = -1, iIndex = 0;

- ifstream ifs;

- ifs.open(filename, ios::in | ios::binary);

- assert(ifs.is_open());

- double *pdvt = NULL;

- BYTE ucRead;

- ifs.read((char*)(&ucRead), 1);

- assert(ucRead == ucLeftbrace);

- //the stack holds only left brackets: 1 marks a pushed bracket, 0 marks a cleared slot

- arrStack[++iTop] = 1;

- ifs.read((char*)(&ucRead), 1);

- assert(ucRead == ucLeftbrace);

- arrStack[++iTop] = 1;//iTop is 1

- ifs.read((char*)(&ucRead), 1);

- assert(ucRead == ucLeftbrace);

- arrStack[++iTop] = 1;//iTop is 2

- vector<BYTE> vecDigit;

- vector<double *> vpdw;

- vector<double> vdb;

- while (iTop > 1 && ifs.eof() == false)

- {

- readDoubleFromJson(ifs, vpdw, vdb, vecDigit, pdvt, iIndex, m_iInput, arrStack, iTop, true);

- }

- //next char is comma

- ifs.read((char*)(&ucRead), 1);

- assert(ucRead == ucComma);

- cout << vpdw.size() << endl;

- //next char is space

- while (iTop > 0 && ifs.eof() == false)

- {

- readDoubleFromJson(ifs, vpdw, vdb, vecDigit, pdvt, iIndex, m_iInput, arrStack, iTop, false);

- }

- assert(vpdw.size() == vdb.size());

- assert(iTop == 0);

- vvAllw.push_back(vpdw);

- vvAllb.push_back(vdb);

- //clear the contents of vpdw and vdb

- vpdw.clear();

- vdb.clear();

- //next char is comma

- ifs.read((char*)(&ucRead), 1);

- assert(ucRead == ucComma);

- //next char is space

- ifs.read((char*)(&ucRead), 1);

- assert(ucRead == ucSpace);

- ifs.read((char*)(&ucRead), 1);

- assert(ucRead == ucLeftbrace);

- arrStack[++iTop] = 1;//iTop is 1

- ifs.read((char*)(&ucRead), 1);

- assert(ucRead == ucLeftbrace);

- arrStack[++iTop] = 1;//iTop is 2

- while (iTop > 1 && ifs.eof() == false)

- {

- readDoubleFromJson(ifs, vpdw, vdb, vecDigit, pdvt, iIndex, ihidden, arrStack, iTop, true);

- }

- //next char is comma

- ifs.read((char*)(&ucRead), 1);