More code is available at: https://github.com/xubo245/SparkLearning

1. Explanation

2. Code:

```scala
/**
 * @author xubo
 * ref http://spark.apache.org/docs/1.5.2/graphx-programming-guide.html
 * time 20160503
 */
package org.apache.spark.graphx.learning

import org.apache.spark._
import org.apache.spark.graphx._
// To make some of the examples work we will also need RDD
import org.apache.spark.rdd.RDD

object gettingStart {

  def main(args: Array[String]): Unit = {
    // Run locally with 4 worker threads
    val conf = new SparkConf().setAppName("gettingStart").setMaster("local[4]")
    val sc = new SparkContext(conf)

    // Create an RDD for the vertices: (id, (username, position))
    val users: RDD[(VertexId, (String, String))] =
      sc.parallelize(Array((3L, ("rxin", "student")), (7L, ("jgonzal", "postdoc")),
        (5L, ("franklin", "prof")), (2L, ("istoica", "prof"))))

    // Create an RDD for the edges; each edge attribute is a relationship label
    val relationships: RDD[Edge[String]] =
      sc.parallelize(Array(Edge(3L, 7L, "collab"), Edge(5L, 3L, "advisor"),
        Edge(2L, 5L, "colleague"), Edge(5L, 7L, "pi")))

    // Define a default user in case some relationships refer to a missing user
    val defaultUser = ("John Doe", "Missing")

    // Build the initial Graph
    val graph = Graph(users, relationships, defaultUser)

    // Count all users who are postdocs
    println(graph.vertices.filter { case (_, (_, pos)) => pos == "postdoc" }.count())

    // Count all the edges where src > dst
    println(graph.edges.filter(e => e.srcId > e.dstId).count())

    // The same count, written as a pattern match on the Edge case class
    println(graph.edges.filter { case Edge(src, dst, _) => src > dst }.count())

    // The reverse: count all the edges where src < dst
    println(graph.edges.filter { case Edge(src, dst, _) => src < dst }.count())

    // Use the triplets view to create an RDD of facts.
    val facts: RDD[String] =
      graph.triplets.map(triplet =>
        triplet.srcAttr._1 + " is the " + triplet.attr + " of " + triplet.dstAttr._1)
    facts.collect().foreach(println(_))

    // Use the triplets view again, this time also printing vertex ids and both vertex attributes
    println("\ntriplets:")
    val facts2: RDD[String] =
      graph.triplets.map(triplet =>
        s"${triplet.srcId}(${triplet.srcAttr._1} ${triplet.srcAttr._2}) is the ${triplet.attr} " +
          s"of ${triplet.dstId}(${triplet.dstAttr._1} ${triplet.dstAttr._2})")
    facts2.collect().foreach(println(_))

    // Release Spark resources
    sc.stop()
  }
}
```

3. Results:

```
2016-05-03 19:18:48 WARN MetricsSystem:71 - Using default name DAGScheduler for source because spark.app.id is not set.
1
1
1
3
rxin is the collab of jgonzal
franklin is the advisor of rxin
istoica is the colleague of franklin
franklin is the pi of jgonzal

triplets:
3(rxin student) is the collab of 7(jgonzal postdoc)
5(franklin prof) is the advisor of 3(rxin student)
2(istoica prof) is the colleague of 5(franklin prof)
5(franklin prof) is the pi of 7(jgonzal postdoc)
```
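To make the triplet view and the `defaultUser` argument more concrete, here is a minimal sketch that models the same property graph with plain Scala collections instead of Spark. It is not the GraphX API: `SimpleEdge`, `SimpleTriplet`, `triplets` and `TripletSketch` are hypothetical local names, and the extra edge from vertex 5 to vertex 9 is a made-up addition used only to show how an endpoint with no vertex entry picks up the default attribute, similar to how `Graph(users, relationships, defaultUser)` treats vertices that appear only in edges.

```scala
// Hypothetical plain-Scala model of the property graph above; NOT the GraphX API.
object TripletSketch {
  type VertexId = Long

  // Local stand-ins shaped like GraphX's Edge and EdgeTriplet (assumption: illustration only)
  final case class SimpleEdge[E](srcId: VertexId, dstId: VertexId, attr: E)
  final case class SimpleTriplet[V, E](srcId: VertexId, srcAttr: V,
                                       dstId: VertexId, dstAttr: V, attr: E)

  // Join every edge with the attributes of its two endpoints; an endpoint that is
  // missing from `vertices` gets `default`, mirroring the default vertex attribute.
  def triplets[V, E](vertices: Map[VertexId, V], edges: Seq[SimpleEdge[E]],
                     default: V): Seq[SimpleTriplet[V, E]] =
    edges.map { e =>
      SimpleTriplet(
        e.srcId, vertices.getOrElse(e.srcId, default),
        e.dstId, vertices.getOrElse(e.dstId, default),
        e.attr)
    }

  val users: Map[VertexId, (String, String)] = Map(
    3L -> ("rxin", "student"), 7L -> ("jgonzal", "postdoc"),
    5L -> ("franklin", "prof"), 2L -> ("istoica", "prof"))

  // The last edge is a hypothetical extra pointing at vertex 9, which has no entry in `users`
  val relationships: Seq[SimpleEdge[String]] = Seq(
    SimpleEdge(3L, 7L, "collab"), SimpleEdge(5L, 3L, "advisor"),
    SimpleEdge(2L, 5L, "colleague"), SimpleEdge(5L, 7L, "pi"),
    SimpleEdge(5L, 9L, "mentor"))

  val defaultUser: (String, String) = ("John Doe", "Missing")

  // Same sentence format as the `facts` RDD in the Spark program
  val facts: Seq[String] =
    triplets(users, relationships, defaultUser).map { t =>
      s"${t.srcAttr._1} is the ${t.attr} of ${t.dstAttr._1}"
    }

  def main(args: Array[String]): Unit = facts.foreach(println)
}
```

Running `TripletSketch.main` prints the four facts from the Spark run, followed by `franklin is the mentor of John Doe` for the hypothetical edge, because vertex 9 falls back to `defaultUser`.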

References

[1] http://spark.apache.org/docs/1.5.2/graphx-programming-guide.html

[2] https://github.com/xubo245/SparkLearning

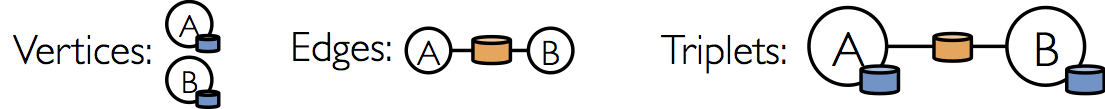

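This article uses a worked example to show how to process graph data with the GraphX module of Apache Spark. It covers the basic operations: building a graph, filtering vertices, filtering edges, and using the graph's triplets view to create an RDD of facts.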

