1.Maven Dependency

```xml
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka_2.11</artifactId>
    <version>0.10.2.0</version>
</dependency>
```

2.ConfigureAPI

First, a configuration class that holds the Kafka-related parameters:

```java
public class ConfigureAPI {

    public interface KafkaProperties {
        // Interface fields are implicitly public static final.
        String ZK = "127.0.0.1:2181";          // ZooKeeper address, read by the old (ZooKeeper-based) consumer
        String GROUP_ID = "test_group1";        // consumer group id
        String TOPIC = "test";                  // topic shared by the producer and the consumer
        String BROKER_LIST = "127.0.0.1:9092";  // broker address used by the new Java clients
        // The three values below are not used by the examples in this article.
        int BUFFER_SIZE = 64 * 1024;
        int TIMEOUT = 20000;
        int INTERVAL = 10000;
    }
}
```
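`ConfigureAPI` holds settings for two different client APIs. `ZK` is only read by the old ZooKeeper-based consumer. `BROKER_LIST` is what the new Java clients (`KafkaProducer`, `KafkaConsumer`) connect to. The sketch below assumes you want to keep the two configurations apart. It uses a hypothetical helper, `KafkaPropsFactory` (not part of the original code), that builds each `Properties` set separately with plain JDK types only:

```java
import java.util.Properties;

/**
 * Hypothetical helper (not from the original article) that keeps the
 * configuration keys of the two Kafka 0.10.x consumer APIs apart.
 */
final class KafkaPropsFactory {

    private KafkaPropsFactory() {}

    /** Keys read by the old ZooKeeper-based consumer (kafka.consumer.ConsumerConfig). */
    static Properties oldConsumerProps(String zkConnect, String groupId) {
        Properties props = new Properties();
        props.put("zookeeper.connect", zkConnect);
        props.put("group.id", groupId);
        props.put("zookeeper.session.timeout.ms", "40000");
        props.put("zookeeper.sync.time.ms", "200");
        // The old consumer spells this "auto.commit.enable", not "enable.auto.commit".
        props.put("auto.commit.enable", "false");
        return props;
    }

    /** Keys read by the new Java consumer (org.apache.kafka.clients.consumer.KafkaConsumer). */
    static Properties newConsumerProps(String brokerList, String groupId) {
        Properties props = new Properties();
        props.put("bootstrap.servers", brokerList);
        props.put("group.id", groupId);
        props.put("enable.auto.commit", "false");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        return props;
    }
}
```

For example, `KafkaPropsFactory.oldConsumerProps(ConfigureAPI.KafkaProperties.ZK, ConfigureAPI.KafkaProperties.GROUP_ID)` yields a set that can be passed to `new kafka.consumer.ConsumerConfig(...)`. The old consumer would otherwise only log warnings about keys it does not recognize.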

3.Consumer

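Next comes the consumer, which reads messages from Kafka. It uses the old ZooKeeper-based high-level consumer API (`kafka.consumer`) that ships with the `kafka_2.11` artifact: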

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;

import kafka.consumer.Consumer;
import kafka.consumer.ConsumerConfig;
import kafka.consumer.ConsumerIterator;
import kafka.consumer.KafkaStream;
import kafka.javaapi.consumer.ConsumerConnector;
import kafka.message.MessageAndMetadata;
import kafka.serializer.StringDecoder;
import kafka.utils.VerifiableProperties;

public class JConsumer extends Thread {

    private final String topic;
    private final int SLEEP = 1000 * 3;
    private final ConsumerConnector consumer;

    public JConsumer(String topic) {
        consumer = Consumer.createJavaConsumerConnector(this.consumerConfig());
        this.topic = topic;
    }

    private ConsumerConfig consumerConfig() {
        Properties props = new Properties();
        // The old high-level consumer finds brokers through ZooKeeper, so only
        // ZooKeeper-related keys are needed. bootstrap.servers and the *.deserializer
        // keys belong to the new KafkaConsumer and are ignored by this API.
        props.put("zookeeper.connect", ConfigureAPI.KafkaProperties.ZK);
        props.put("group.id", ConfigureAPI.KafkaProperties.GROUP_ID);
        props.put("zookeeper.session.timeout.ms", "40000");
        props.put("zookeeper.sync.time.ms", "200");
        // Offsets are committed manually in run(); the old API spells this key
        // "auto.commit.enable" ("enable.auto.commit" would be ignored).
        props.put("auto.commit.enable", "false");
        return new ConsumerConfig(props);
    }

    @Override
    public void run() {
        Map<String, Integer> topicCountMap = new HashMap<String, Integer>();
        topicCountMap.put(topic, 1); // one stream for this topic
        StringDecoder keyDecoder = new StringDecoder(new VerifiableProperties());
        StringDecoder valueDecoder = new StringDecoder(new VerifiableProperties());
        Map<String, List<KafkaStream<String, String>>> consumerMap =
                consumer.createMessageStreams(topicCountMap, keyDecoder, valueDecoder);
        List<KafkaStream<String, String>> streams = consumerMap.get(topic);
        for (final KafkaStream<String, String> stream : streams) {
            ConsumerIterator<String, String> it = stream.iterator();
            while (it.hasNext()) { // blocks until the next message arrives
                MessageAndMetadata<String, String> messageAndMetadata = it.next();
                // message() is already a String thanks to the StringDecoder.
                System.out.println("Receive->[" + messageAndMetadata.message()
                        + "],topic->[" + messageAndMetadata.topic()
                        + "],offset->[" + messageAndMetadata.offset()
                        + "],partition->[" + messageAndMetadata.partition()
                        + "],timestamp->[" + messageAndMetadata.timestamp() + "]");
                consumer.commitOffsets(); // commit only after the message was processed
                try {
                    sleep(SLEEP);
                } catch (InterruptedException ex) {
                    // Stop on interruption; offsets up to this message are already committed.
                    Thread.currentThread().interrupt();
                    consumer.shutdown();
                    return;
                }
            }
        }
        /* Alternative without decoders: consumer.createMessageStreams(topicCountMap)
         * returns KafkaStream<byte[], byte[]>; iterate the same way and convert each
         * payload with new String(messageAndMetadata.message()). */
    }
}
```
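`JConsumer` calls `commitOffsets()` only after a message has been printed. The committed offset is the position the group resumes from after a restart. The sketch below is a hypothetical in-memory simulation: a `List` stands in for one partition and no Kafka classes are involved. It shows the consequence of that ordering. If the process dies between processing and committing, the same message is delivered again, which is at-least-once delivery:

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical in-memory stand-in for one partition (no Kafka involved) that
 * models "process, then commit", as JConsumer does.
 */
final class CommitAfterProcessingDemo {

    private final List<String> log;    // stored messages; list index = offset
    private long committedOffset = 0;  // next offset to read after a restart

    CommitAfterProcessingDemo(List<String> log) {
        this.log = log;
    }

    /**
     * Consumes from the committed offset onward. If crashAtOffset >= 0, the run
     * stops right after processing that offset, before committing it.
     * Returns the messages processed during this run.
     */
    List<String> run(long crashAtOffset) {
        List<String> processed = new ArrayList<>();
        for (long offset = committedOffset; offset < log.size(); offset++) {
            processed.add(log.get((int) offset)); // "process" the message
            if (offset == crashAtOffset) {
                return processed;                 // simulated crash: no commit
            }
            committedOffset = offset + 1;         // commit, like consumer.commitOffsets()
        }
        return processed;
    }

    long committedOffset() {
        return committedOffset;
    }
}
```

With the log `["m1", "m2", "m3"]`, a run that crashes at offset 1 processes `m1` and `m2` but leaves the committed offset at 1. The next run therefore processes `m2` again, followed by `m3`. Committing before processing would turn this into at-most-once delivery: the crashed message would be skipped instead of repeated.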

4.Producer

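Then comes the producer, which writes the messages the consumer reads. Unlike the consumer, it uses the new Java client (`org.apache.kafka.clients.producer.KafkaProducer`):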

```java
import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class JProducer extends Thread {

    private final Producer<String, String> producer;
    private final String topic;
    private final Properties props = new Properties();
    private final int SLEEP = 1000 * 3;

    public JProducer(String topic) {
        // Settings for the new Java producer. The old-producer keys "serializer.class"
        // and "metadata.broker.list" are not used by KafkaProducer.
        props.put("bootstrap.servers", ConfigureAPI.KafkaProperties.BROKER_LIST);
        props.put("acks", "all"); // wait for all in-sync replicas to acknowledge
        props.put("retries", 0);
        props.put("batch.size", 16384);
        props.put("linger.ms", 1);
        props.put("buffer.memory", 33554432);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        producer = new KafkaProducer<String, String>(props);
        this.topic = topic;
    }

    @Override
    public void run() {
        try {
            // Sends Message_1 ... Message_209, keyed by the sequence number.
            for (int offsetNo = 1; offsetNo < 210; offsetNo++) {
                String msg = "Message_" + offsetNo;
                System.out.println("Send->[" + msg + "]");
                producer.send(new ProducerRecord<String, String>(topic, String.valueOf(offsetNo), msg));
                sleep(SLEEP);
            }
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt(); // stop sending when interrupted
        } finally {
            producer.close(); // waits for pending sends, then releases resources
        }
    }
}
```
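Each record is sent with the key `String.valueOf(offsetNo)`, and the consumer prints the partition each message arrived on. The 0.10.x `DefaultPartitioner` handles non-null keys (when no custom partitioner is configured) by hashing the serialized key bytes with murmur2, masking the result to a non-negative value, and taking it modulo the partition count. The class below is a plain-Java re-implementation written for illustration only. `KeyPartitionSketch` is a hypothetical name; compare it against `org.apache.kafka.common.utils.Utils` before relying on it:

```java
import java.nio.charset.StandardCharsets;

/**
 * Illustrative re-implementation (hypothetical class) of how a keyed record is
 * mapped to a partition: murmur2 over the key bytes, made non-negative, modulo
 * the number of partitions.
 */
final class KeyPartitionSketch {

    private KeyPartitionSketch() {}

    /** 32-bit murmur2 hash with the seed used by Kafka's client utilities. */
    static int murmur2(byte[] data) {
        int length = data.length;
        final int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        // Mix in the 1-3 trailing bytes (intentional fall-through).
        switch (length % 4) {
            case 3:
                h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2:
                h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1:
                h ^= data[length & ~3] & 0xff;
                h *= m;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    /** Partition for a String key serialized as UTF-8 (StringSerializer's default encoding). */
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        return (murmur2(keyBytes) & 0x7fffffff) % numPartitions;
    }
}
```

On a single-partition topic every key maps to partition 0. With more partitions, the same key always lands on the same partition, so records sharing a key keep their relative order.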

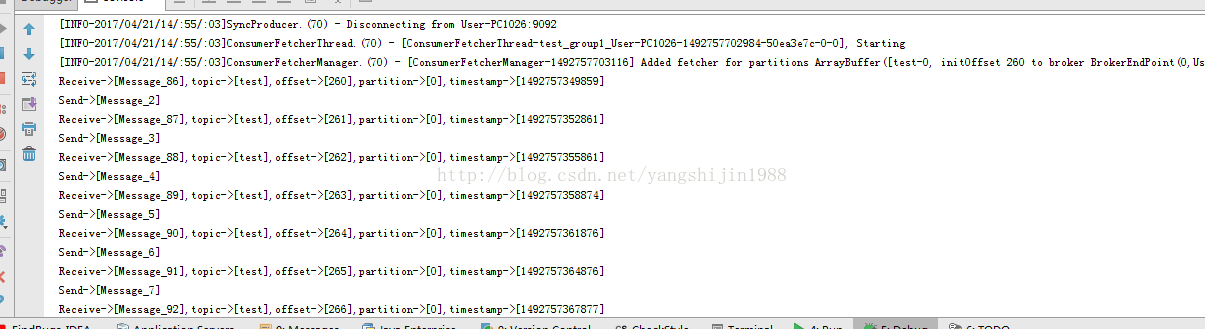

5.Screenshot Preview

With the Consumer and Producer written, we can test them. The client below starts a producer thread and a consumer thread on the same topic:

```java
public class KafkaClient {

    public static void main(String[] args) {
        JProducer pro = new JProducer(ConfigureAPI.KafkaProperties.TOPIC);
        pro.start();

        JConsumer con = new JConsumer(ConfigureAPI.KafkaProperties.TOPIC);
        con.start();
    }
}
```

A screenshot of the run is shown below:

6.Summary

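Keep a few things in mind when developing Kafka applications. In a Maven project, the Kafka dependency JARs may fail to download. If that happens, add them to your local Maven repository manually. The Consumer and Producer programs here are only a first example to get familiar with writing Kafka code; more detailed and complex examples will follow in later posts.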
