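Stanford Parser is an open-source, Java-based NLP tool developed by the Stanford NLP Group, and it supports syntactic parsing of Chinese. The latest version at the time of writing is 3.3.0, available at http://nlp.stanford.edu/software/lex-parser.shtml. Download the archive and unpack it. In the unpacked folder, lexparser-gui.bat launches the graphical interface. The model files needed for parsing are stored in stanford-parser-3.3.0-models.jar, which you can also unpack to make later use easier. For Chinese, the provided model files are chineseFactored.ser.gz, chinesePCFG.ser.gz, xinhuaFactored.ser.gz, xinhuaFactoredSegmenting.ser.gz, and xinhuaPCFG.ser.gz. The factored models include lexicalized information, while the PCFG models are faster and smaller. The xinhua models are reportedly trained on Xinhua news text from mainland China, whereas the chinese models also include data from Hong Kong and Taiwan. xinhuaFactoredSegmenting.ser.gz can parse sentences that have not been word-segmented.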
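The choice among these five models can be written down as a small decision rule. The sketch below is only an illustration: the class `ModelChooser`, its method, and its three flags are hypothetical names, and the directory prefix assumes that all five models sit in the same folder inside the models jar as chinesePCFG.ser.gz does in the API example later in this post.

```java
// Hypothetical helper, not part of Stanford Parser: picks a Chinese model
// file name from the properties described in the paragraph above.
class ModelChooser {

    // Assumption: every model lives in this directory of the models jar.
    static final String DIR = "edu/stanford/nlp/models/lexparser/";

    static String choose(boolean segmented, boolean mainlandOnly, boolean preferFast) {
        // Only one model can handle input that has not been word-segmented.
        if (!segmented) {
            return DIR + "xinhuaFactoredSegmenting.ser.gz";
        }
        // xinhua: mainland news text; chinese: also Hong Kong and Taiwan data.
        String corpus = mainlandOnly ? "xinhua" : "chinese";
        // PCFG: faster and smaller; Factored: includes lexicalized information.
        String kind = preferFast ? "PCFG" : "Factored";
        return DIR + corpus + kind + ".ser.gz";
    }

    public static void main(String[] args) {
        System.out.println(choose(true, false, true));
        System.out.println(choose(false, true, false));
    }
}
```

The returned string is the kind of value that would be passed to the parser as the model path.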

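Ways to run it:

1. Command line

I have not actually used this mode, so I am not familiar with it.

2. Graphical interface

Run lexparser-gui.bat directly, click Load Parser, and pick one of the model files listed above. If the sentence has not been word-segmented, choose the model that includes segmentation. Then click Load File, or type the sentence directly into the blank text area.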

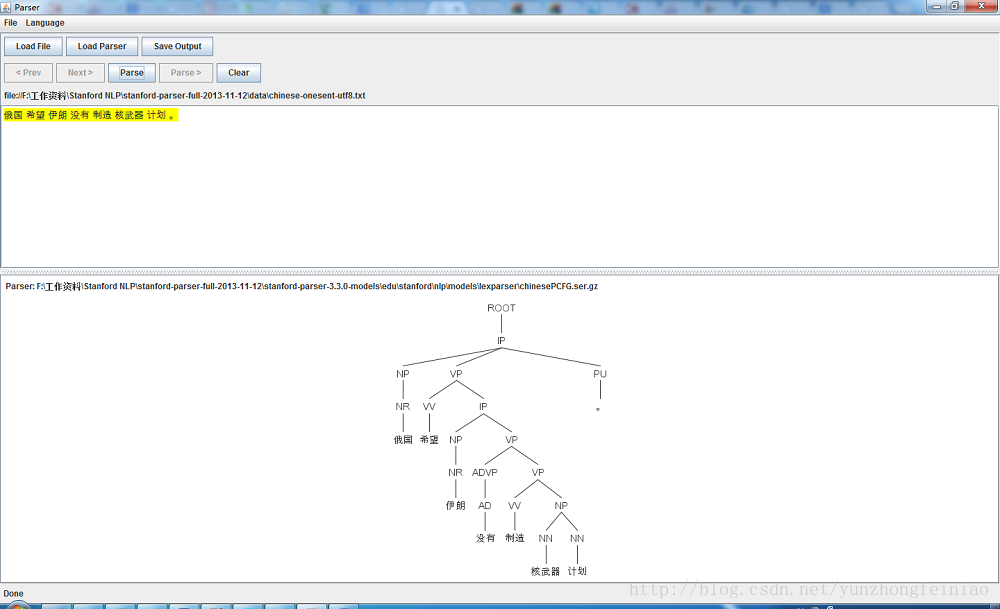

Parsing a sentence that has already been word-segmented looks like this:

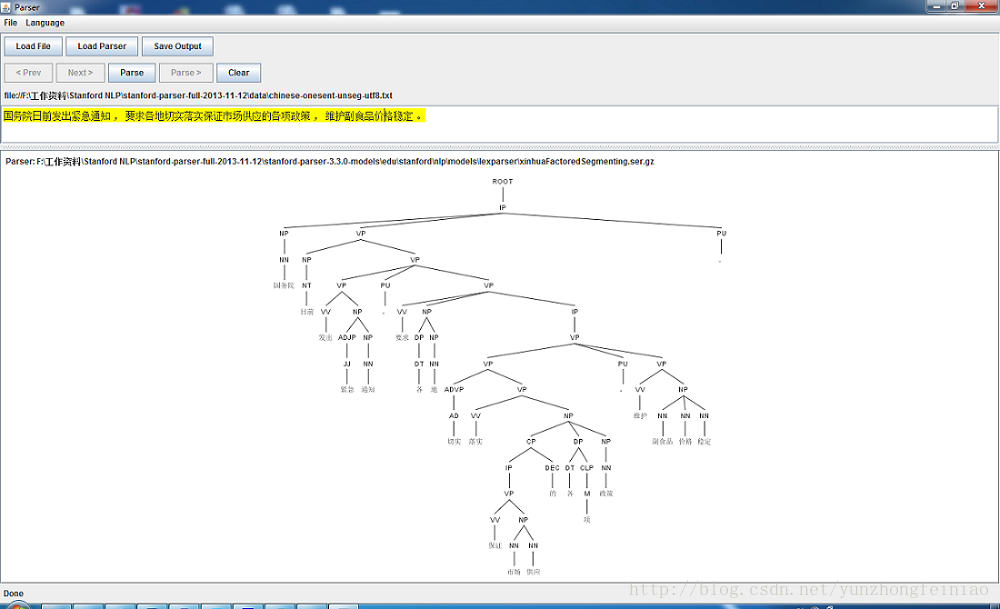

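Parsing an unsegmented sentence gives the result shown below:

3. API calls

The tool also provides an API, and the distribution folder includes help documentation. The general approach is: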

1. Specify the model file and any other parameters.

String grammars = "edu/stanford/nlp/models/lexparser/chinesePCFG.ser.gz";

2. Load the model file and initialize LexicalizedParser, the class that performs the parsing.

LexicalizedParser lp = LexicalizedParser.loadModel(grammars);

3. Call parse() on the input sentence s.

Tree t = lp.parse(s);

4. Post-process the result into whatever format you need.

ChineseTreebankLanguagePack tlp = new ChineseTreebankLanguagePack();

GrammaticalStructureFactory gsf = tlp.grammaticalStructureFactory();

ChineseGrammaticalStructure gs = new ChineseGrammaticalStructure(t);

Collection<TypedDependency> tdl = gs.typedDependenciesCollapsed();

What I am building needs the subject-verb-object structure of a sentence, but I could not find anything in Stanford Parser's output that labels it directly, so the dependency output has to be post-processed. The dependencies between the words of a sentence look like this:

[nsubj(发出-3, 国务院-1), tmod(发出-3, 日前-2), root(ROOT-0, 发出-3), amod(通知-5, 紧急-4), dobj(发出-3, 通知-5), conj(发出-3, 要求-7), det(地-9, 各-8), dobj(要求-7, 地-9), advmod(落实-11, 切实-10), dep(要求-7, 落实-11), rcmod(政策-18, 保证-12), nn(供应-14, 市场-13), dobj(保证-12, 供应-14), cpm(保证-12, 的-15), det(政策-18, 各-16), clf(各-16, 项-17), dobj(落实-11, 政策-18), dep(落实-11, 维护-20), nn(稳定-23, 副食品-21), nn(稳定-23, 价格-22), dobj(维护-20, 稳定-23)]
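Each printed item follows the pattern relation(governor-index, dependent-index). If only this printed text is at hand, for example copied from the GUI, it could be turned back into structured records roughly as follows. This is a sketch under assumptions: `DependencyLineParser` and `Dep` are hypothetical names, not Stanford Parser classes, and the sample input uses ASCII stand-ins for the Chinese tokens only to keep the source file ASCII. The pattern itself does not depend on the script.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper (not part of Stanford Parser): parses the printed
// dependency list back into structured records.
class DependencyLineParser {

    // One relation: label plus governor and dependent, each with its position.
    static final class Dep {
        final String rel, govWord, depWord;
        final int govIndex, depIndex;

        Dep(String rel, String govWord, int govIndex, String depWord, int depIndex) {
            this.rel = rel;
            this.govWord = govWord;
            this.govIndex = govIndex;
            this.depWord = depWord;
            this.depIndex = depIndex;
        }
    }

    // Matches items of the form rel(govWord-govIndex, depWord-depIndex).
    private static final Pattern ITEM =
            Pattern.compile("(\\w+)\\(([^,()]+)-(\\d+),\\s*([^,()]+)-(\\d+)\\)");

    static List<Dep> parse(String printed) {
        List<Dep> deps = new ArrayList<>();
        Matcher m = ITEM.matcher(printed);
        while (m.find()) {
            deps.add(new Dep(m.group(1),
                    m.group(2), Integer.parseInt(m.group(3)),
                    m.group(4), Integer.parseInt(m.group(5))));
        }
        return deps;
    }

    public static void main(String[] args) {
        // ASCII stand-ins for the Chinese tokens in the list above.
        String printed =
                "[nsubj(issue-3, council-1), root(ROOT-0, issue-3), dobj(issue-3, notice-5)]";
        for (Dep d : parse(printed)) {
            System.out.println(d.rel + ": " + d.govWord + "@" + d.govIndex
                    + " -> " + d.depWord + "@" + d.depIndex);
        }
    }
}
```

When working through the API, this step is unnecessary, since the TypedDependency objects already carry the same information.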

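Every TypedDependency labeled dobj is a direct-object relation. For each one, I take its governor and its dependent, then scan all the dependencies to check whether that governor also has a subject. If it does, the three words form a complete subject-verb-object structure; otherwise the result is only a verb-object structure. This approach is not particularly sound, but I have not yet thought of a better one.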
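The procedure described above can be sketched as follows. To keep the example self-contained, `Dep` is a hypothetical stand-in for TypedDependency that holds plain strings. In real code the three values would come from TypedDependency's reln(), gov(), and dep() accessors. The sketch also looks subjects up in a map instead of rescanning the whole list for each dobj, which gives the same result. The tokens are ASCII stand-ins for the Chinese words.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of the subject-verb-object extraction described above.
class SvoExtractor {

    // Stand-in for TypedDependency: relation label, governor, dependent,
    // with governor and dependent written as "word-index".
    static final class Dep {
        final String rel, gov, dep;

        Dep(String rel, String gov, String dep) {
            this.rel = rel;
            this.gov = gov;
            this.dep = dep;
        }
    }

    static List<String> extract(List<Dep> deps) {
        // First pass: remember the subject attached to each governor.
        Map<String, String> subjectOf = new HashMap<>();
        for (Dep d : deps) {
            if ("nsubj".equals(d.rel)) {
                subjectOf.put(d.gov, d.dep);
            }
        }
        // Second pass: each dobj gives verb-object, plus the subject if one exists.
        List<String> result = new ArrayList<>();
        for (Dep d : deps) {
            if (!"dobj".equals(d.rel)) {
                continue;
            }
            String subject = subjectOf.get(d.gov);
            if (subject != null) {
                result.add("(" + subject + ", " + d.gov + ", " + d.dep + ")");
            } else {
                result.add("(" + d.gov + ", " + d.dep + ")");
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Dep> deps = Arrays.asList(
                new Dep("nsubj", "issue-3", "council-1"),
                new Dep("dobj", "issue-3", "notice-5"),
                new Dep("dobj", "guarantee-12", "supply-14"));
        // Prints (council-1, issue-3, notice-5) and then (guarantee-12, supply-14).
        for (String s : extract(deps)) {
            System.out.println(s);
        }
    }
}
```

One limitation this makes visible: a verb that shares its subject with a coordinated verb (a conj relation) has no nsubj of its own, so it comes out as a bare verb-object pair.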

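Finally, here is a summary I compiled of what some commonly used labels mean.

ROOT: the sentence being processed (root of the parse tree)

IP: simple clause

NP: noun phrase

VP: verb phrase

PU: punctuation, usually sentence-ending marks such as the period, question mark, or exclamation mark

LCP: localizer phrase

PP: prepositional phrase

CP: phrase formed with '的' that expresses a modifying relation

DNP: phrase formed with '的' that expresses possession

ADVP: adverb phrase

ADJP: adjective phrase

DP: determiner phrase

QP: quantifier phrase

NN: common noun

NR: proper noun

NT: temporal noun

PN: pronoun

VV: verb

VC: the copula 是

CC: coordinating conjunction

VE: 有 as a main verb

VA: predicative adjective

AS: aspect marker (e.g. 了)

VRD: verb-resultative compound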

CD: cardinal number

DT: determiner

EX: existential there

FW: foreign word

IN: preposition or subordinating conjunction

JJ: adjective or ordinal numeral

JJR: adjective, comparative

JJS: adjective, superlative

LS: list item marker

MD: modal auxiliary

PDT: pre-determiner

POS: genitive marker

PRP: pronoun, personal

RB: adverb

RBR: adverb, comparative

RBS: adverb, superlative

RP: particle

SYM: symbol

TO: "to" as preposition or infinitive marker

WDT: WH-determiner

WP: WH-pronoun

WP$: WH-pronoun, possessive

WRB: WH-adverb

Dependency relations

abbrev: abbreviation modifier

acomp: adjectival complement

advcl: adverbial clause modifier

advmod: adverbial modifier

agent: agent, usually appears together with "by"

amod: adjectival modifier

appos: appositional modifier

attr: attributive

aux: auxiliary, i.e. non-main verbs and auxiliaries such as be, have, should, could

auxpass: passive auxiliary

cc: coordination, usually attached to the first conjunct

ccomp: clausal complement

complm: complementizer, links the word that introduces a clause to the main verb of that clause

conj: conjunct, links two coordinated words

cop: copula (be, seem, appear, etc.), the link between the subject and the predicate

csubj: clausal subject

csubjpass: clausal passive subject

dep: dependent, an unspecified dependency

det: determiner, such as an article

dobj: direct object

expl: expletive, mainly captures existential "there"

infmod: infinitival modifier

iobj: indirect object

mark: marker, mainly appears with words such as "that", "whether", "because", "when"

mwe: multi-word expression

neg: negation modifier

nn: noun compound modifier

npadvmod: noun phrase as adverbial modifier

nsubj: nominal subject

nsubjpass: passive nominal subject

num: numeric modifier

number: element of compound number

parataxis: parataxis, juxtaposed clauses

partmod: participial modifier

pcomp: prepositional complement

pobj: object of a preposition

poss: possession modifier

possessive: possessive modifier, the relation between the possessor and its 's

preconj: preconjunct, often appears with "either", "both", "neither"

predet: predeterminer, often a word meaning "all"

prep: prepositional modifier

prepc: prepositional clausal modifier

prt: phrasal verb particle

punct: punctuation; rarely seen, the relation is kept but does not appear in the results

purpcl: purpose clause modifier

quantmod: quantifier phrase modifier

rcmod: relative clause modifier

ref: referent

rel: relative

root: root, the most important word and the starting node of the tree

tmod: temporal modifier

xcomp: open clausal complement

xsubj: controlling subject
