1+2简单部署

1、在master节点上创建/greenplum目录,将bin 文件传到主节点的该目录下,并安装;

mkdir /greenplum #20G

mkdir /data #有数据盘用数据盘70G

cd /greenplum

unzip greenplum-db-5.26.0-rhel7-x86_64.zip

./greenplum-db-5.29.5-rhel7-x86_64.bin #安装目录为/greenplum/greenplum-db

useradd gpadmin

passwd gpadmin

chown -R gpadmin:gpadmin /greenplum

chown -R gpadmin:gpadmin /data2、配置环境变量

root用户下环境变量

gp的python是独立的自己用的,不要在root用户下写,这样会升级python导致问题啊

vi ~/.bashrc

#增加如下行

source /greenplum/greenplum-db/greenplum_path.shgpadmin用户下环境变量

vi ~/.bash_profile

#增加如下行

source /greenplum/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/data/master/gpseg-13、配置集群各节点互信

#所有节点都写

vi /etc/hosts

10.10.173.39 gp_test1

10.10.173.59 gp_test2

10.10.173.69 gp_test3

#以下只写主节点

vi /home/gpadmin/allhost

gp_test1

gp_test2

gp_test3

vi /home/gpadmin/allsegs

gp_test2

gp_test3#root用户下(不需要建立,可忽略)

source ~/.bashrc

gpssh-exkeys -f /home/gpadmin/allhost#gpadmin用户下

su - gpadmin

gpssh-exkeys -f /home/gpadmin/allhost

出现问题:

Enter password for co51t-153-7-dc-gp2:

[ERROR co51t-153-7-dc-gp2] Server 'co51t-153-7-dc-gp2' not found in known_hosts

解决方法:关键文件known_hosts

检查您的known_hosts文件中是否包含正确的服务器主机密钥。如果不包含,请尝试使用ssh-keyscan命令手动添加服务器主机密钥。

例如,运行以下命令:ssh-keyscan co51t-153-7-dc-gp2 >> ~/.ssh/known_hosts

gpssh-exkeys -f /home/gpadmin/allhost

[STEP 1 of 5] create local ID and authorize on local host

[STEP 2 of 5] keyscan all hosts and update known_hosts file

[STEP 3 of 5] authorize current user on remote hosts

... send to co51t-153-7-dc-gp2

*** Enter password for co51t-153-7-dc-gp2:

... send to co51t-153-8-dc-gp3

[STEP 4 of 5] determine common authentication file content

[STEP 5 of 5] copy authentication files to all remote hosts

... finished key exchange with co51t-153-7-dc-gp2

... finished key exchange with co51t-153-8-dc-gp3

[INFO] completed successfully

成功了4、主节点上创建各节点对应的目录

##master创建目录

mkdir -p /data/master

chown -R gpadmin:gpadmin /data/master##创建segment目录

gpssh -f /home/gpadmin/allsegs

=> mkdir /data

=> chown -R gpadmin:gpadmin /data##gpadmin用户下配置非主节点gpadmin用户环境变量

gpssh -f /home/gpadmin/allsegs

=> echo "source /greenplum/greenplum-db/greenplum_path.sh">> ~/.bashrc

=> mkdir –p /data/primary

=> mkdir –p /data/mirror各节点安装GP,只需主节点运行该指令

gpseginstall -f /home/gpadmin/allhost -u gpadmin -p gpadmin

5、获取初始化文件

cp $GPHOME/docs/cli_help/gpconfigs/gpinitsystem_config /home/gpadmin/

修改初始化文件

ARRAY_NAME="EMC Greenplum DW"

SEG_PREFIX=gpseg

PORT_BASE=40000

declare -a DATA_DIRECTORY=(/data/primary)

MASTER_HOSTNAME=gp_test1

MASTER_DIRECTORY=/data/master

MASTER_PORT=5432

TRUSTED SHELL=ssh

CHECK_POINT_SEGMENT=8

ENCODING=UNICODE

MIRROR_PORT_BASE=50000

REPLICATION_PORT_BASE=41000

MIRROR_REPLICATION_PORT_BASE=51000

declare -a MIRROR_DATA_DIRECTORY=(/data/mirror)

MACHINE_LIST_FILE=/home/gpadmin/allsegs

####参数解释#######

这些参数是用于配置 EMC Greenplum DW 数据库的设置。下面是每个参数的解释:

ARRAY_NAME: 数据库集群的名称,这里设置为 "EMC Greenplum DW"。

SEG_PREFIX: 段服务器的前缀,用于标识不同的段服务器。在这里设置为 "gpseg"。

PORT_BASE: 段服务器的基本端口号,用于与客户端建立连接。在这里设置为 40000。

DATA_DIRECTORY: 主数据库存储数据的目录路径。这里设置为 "/data/primary"。

MASTER_HOSTNAME: 主数据库服务器的主机名或 IP 地址。在这里设置为 "gp_test1"。

MASTER_DIRECTORY: 主数据库存储主节点数据的目录路径。在这里设置为 "/data/master"。

MASTER_PORT: 主数据库服务器的端口号。在这里设置为 5432。

TRUSTED SHELL: 用于在数据库服务器之间进行安全通信的远程 Shell 工具。在这里设置为 "ssh"。

CHECK_POINT_SEGMENT: 用于检查点操作的段服务器数量。在这里设置为 8。

ENCODING: 数据库使用的字符编码。在这里设置为 UNICODE。

MIRROR_PORT_BASE: 镜像段服务器的基本端口号,用于与客户端建立连接。在这里设置为 50000。

REPLICATION_PORT_BASE: 复制段服务器的基本端口号,用于与主数据库进行数据复制。在这里设置为 41000。

MIRROR_REPLICATION_PORT_BASE: 镜像复制段服务器的基本端口号,用于与主数据库进行数据复制。在这里设置为 51000。

MIRROR_DATA_DIRECTORY: 镜像数据库存储数据的目录路径。这里设置为 "/data/mirror"。

MACHINE_LIST_FILE: 包含所有段服务器主机名或 IP 地址的文件路径。在这里设置为 "/home/gpadmin/allsegs"。6、初始化集群

gpinitsystem -c /home/gpadmin/gpinitsystem_config

#检查集群状态

gpstate -m

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 5.26.0 build commit:eb3677e9921499cb403e288c83074df37cf704c0'

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 8.3.23 (Greenplum Database 5.26.0 build commit:eb3677e9921499cb403e288c83074df37cf704c0) on x86_64-pc-linux-gnu, compiled by GCC gcc (GCC) 6.2.0, 64-bit compiled on Apr 3 2020 21:23:19'

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-Obtaining Segment details from master...

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:--------------------------------------------------------------

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:--Current GPDB mirror list and status

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:--Type = Spread

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:--------------------------------------------------------------

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Mirror Datadir Port Status Data Status

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- co51t-153-8-dc-gp3 /data/mirror/gpseg0 50000 Passive Synchronized

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- co51t-153-7-dc-gp2 /data/mirror/gpseg1 50000 Passive Synchronized

20231122:10:53:36:395437 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:--------------------------------------------------------------

gpadmin@co51t-153-6-dc-gp1[/home/gpadmin]$

gpadmin@co51t-153-6-dc-gp1[/home/gpadmin]$ gpstate

20231122:10:54:14:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-Starting gpstate with args:

20231122:10:54:14:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 5.26.0 build commit:eb3677e9921499cb403e288c83074df37cf704c0'

20231122:10:54:14:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 8.3.23 (Greenplum Database 5.26.0 build commit:eb3677e9921499cb403e288c83074df37cf704c0) on x86_64-pc-linux-gnu, compiled by GCC gcc (GCC) 6.2.0, 64-bit compiled on Apr 3 2020 21:23:19'

20231122:10:54:14:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-Obtaining Segment details from master...

20231122:10:54:14:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-Gathering data from segments...

.

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-Greenplum instance status summary

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-----------------------------------------------------

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Master instance = Active

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Master standby = No master standby configured

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total segment instance count from metadata = 4

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-----------------------------------------------------

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Primary Segment Status

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-----------------------------------------------------

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total primary segments = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total primary segment valid (at master) = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total primary segment failures (at master) = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of /tmp lock files found = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number postmaster processes found = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-----------------------------------------------------

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Mirror Segment Status

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-----------------------------------------------------

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total mirror segments = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total mirror segment valid (at master) = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total mirror segment failures (at master) = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number of /tmp lock files found = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number postmaster processes found = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number mirror segments acting as primary segments = 0

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:- Total number mirror segments acting as mirror segments = 2

20231122:10:54:15:395511 gpstate:co51t-153-6-dc-gp1:gpadmin-[INFO]:-----------------------------------------------------

gpadmin@co51t-153-6-dc-gp1[/home/gpadmin]$ GPCC安装(4.0版本)

gpcc 是安装在 master 机器上的,如果安装在一台独立的机器上,会报错

1、gpmon用户

必须是gpadmin超级用户执行,必须提供连接master的连接端口,并提供将要创建的gpmon超级用户的密码。

$ su - gpadmin

$ gpperfmon_install --enable --password gpmon --port 5432此命令的功能大致是:

创建greenplum监控用数据库(gpperfmon)

创建greenplum监控用数据库角色(gpmon)

配置greenplum数据库接受来自perfmon监控的链接文件(pg_hba.conf和.pgpass)

设置postgresql.conf文件,增加启用监控的参数。(这些参数默认会添加在文件的末尾)2、检查 gpperfmon_install是否执行成功

$ ps -ef | grep gpmon

$ psql gpperfmon -c 'SELECT* FROM system_now;'3、安装GPCC-web

3.1. 将下载的GPCC压缩文件上传到系统,并解压

$ unzip greenplum-cc-web-4.8.0-LINUX-x86_64-for5.23.0.zip请注意,执行gpccinstall命令涉及了root用户和gpadmin用户的权限问题。

因为gpccinstall命令需要在Greenplum集群每个节点都创建一个新文件夹,所以,执行命令一定要对目录有读写权限。命令的默认安装路径是/usr/local下。所以,

- 如果,自己规划的路径,并且路径的各级目录是属于gpadmin,那么全程都可以用gpadmin系统用户执行。

- 如果,要安装在/usr/local下,需要用root用户将/usr/local的权限改成gpadmin:

$ su root

$ ssh 各节点的hostname chown gpadmin:gpadmin /usr/local

$ chown -R gpadmin:gpadmin /usr/local/greenplum-cc-web-4.8.0-LINUX-x86_64

$ su - gpadmin3.2. 进入到GPCC的解压目录,并执行命令

$ ./gpccinstall-4.8.0

license······ 一路点击space空格到底

如果,是默认安装在/usr/local目录下,可以一路enter回车,如果想要自定义,则:

Do you agree to the Pivotal Greenplum Command Center End User License Agreement? Yy/Nn (Default=Y)

回车

Where would you like to install Greenplum Command Center? (Default=/usr/local)

/server/greenplum-tools/(输入,自定义安装路径)

What would you like to name this installation of Greenplum Command Center? (Default=gpcc)

回车

What port would you like gpcc webserver to use? (Default=28080)

如果使用默认的web访问端口,就直接回车

Would you like enable SSL? Yy/Nn (Default=N)

回车

Please choose a display language (Default=English)

1. English

2. Chinese

3. Korean

4. Russian

5. Japanese

回车或者输入2

······3.3. 添加环境变量

$ echo "source /greenplum/greenplum-cc/greenplum-cc-web-4.8.0/gpcc_path.sh" >> ~/.bash_profile

$ source ~/.bash_profile3.4. GPCC开启:

一般pg_hba.conf不需要修改

$ echo "host all gpmon <GPCC-host-ip>/32 md5" >> /home/gpdata/master/gpseg-1/pg_hba.conf

$ gpstop -u #重启更新

$ gpcc start

后续添加白名单:

pg_hba.conf

host all all 0.0.0.0/0 md5

之后执行:

gpstop -u

3.5 浏览器登录GPCC的web界面

地址:http://<GPCC-host-ip>:28080

用户名和密码:gpmon/gpmon

4、问题集合

gpmon用户不能登陆(待总结)会在项目新建好的集群上测试

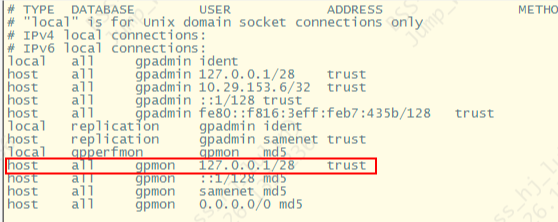

访问安全:pg_hba.conf(/data/master/gpseg-1/)

在Greenplum数据库中,pg_hba.conf文件用于配置客户端连接到数据库服务器的身份验证规则。"hba"代表"host-based authentication"(基于主机的身份验证),它定义了哪些主机和用户可以连接到数据库以及使用哪种身份验证方法。

pg_hba.conf文件中的每一行规则都指定了一个主机、一个数据库、一个用户以及一种身份验证方法。当客户端请求连接到数据库服务器时,服务器会根据pg_hba.conf文件中的规则进行身份验证,以确定是否允许连接。

# TYPE DATABASE USER ADDRESS METHOD

# "local" is for Unix domain socket connections only

local all all peer

# IPv4 local connections:

host all all 127.0.0.1/32 ident

# IPv6 local connections:

host all all ::1/128 ident

# Allow replication connections from localhost, by a user with the

# replication privilege.

local replication all peer

host replication all 127.0.0.1/32 ident

host replication all ::1/128 ident| TYPE | 说明 |

| local | 定义本地连接类型 |

| host | 配置数据库支持远程TCP/IP链 |

| DATABASE | 说明 |

| 数据库名称 | 对配置的数据库支持远程或者本地链接的配置 |

| all | 表明当前的配置针对所有的数据库生效 |

| replication | 流复制链接的配置,在PG的主从配置中会用到 |

| USER | 说明 |

| 配置进行链接的用户,需要注意的是这里是指的数据库的用户 | |

| ADDRESS | 说明 |

| 允许或者拒绝远端链接的IP地址 | |

| METHOD | 说明 |

| trust | 无条件的允许链接。在此种认证方法下,数据库用户进行连接时不需要输入任何口令 |

| reject | 无条件的拒绝连接。在此种认证方法下,数据库会拒绝该连接(即,配置连接的黑名单) |

| md5 | 要求客户端提供一个MD5加密的口令的认证 |

请注意,修改pg_hba.conf文件后,需要重新加载配置才能使更改生效。您可以使用以下命令重新加载配置:

gpstop -u

#gpstop工具中-u选项主要在不中断数据库服务的情况下重新加载pg_hba.conf配置文件的变更

及master主机上postgresql.conf 文件runtime参数的变更。

#在不停止集群情况下,修改reload配置文件![]()

1788

1788

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?