I. Introduction

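MHA (Master High Availability) is an open-source high-availability tool for MySQL. It provides automated master failover for MySQL master-slave replication setups. When MHA detects that the master has failed, it promotes the slave holding the most recent data to be the new master; during this process it collects additional information from the other slaves to avoid consistency problems. MHA also supports online master switching, that is, switching the master/slave roles on demand.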

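MHA is a fairly mature MySQL high-availability solution developed by yoshinorim, a Japanese engineer formerly at DeNA and now at Facebook. MHA can complete a failover within 30 seconds while preserving data consistency as far as possible. Taobao is also developing a similar product, TMHA, which currently supports a one-master, one-slave topology.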

II. Lab Procedure

3.1 Prepare the MySQL replication environment

3.1.1 Download the machine image (all machines run CentOS 7.x)

(1) Download CentOS 7.x from the Alibaba Cloud open-source mirror site (aliyun.com).

Download link: https://mirrors.aliyun.com/centos/7.9.2009/isos/x86_64/CentOS-7-x86_64-Minimal-2207-02.iso

Download complete.

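(2) Installation references (CSDN blog posts):

- CentOS 7 (Linux) detailed installation tutorial (step-by-step, illustrated)
- Fixing ifconfig showing no IP address on CentOS 7
- 4.1 Basic configuration after installing CentOS 7
- Installing bash command auto-completion (bash-completion) on CentOS 7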

(3) Install MySQL. Online installation is recommended.

    [root@manager ~]# ll
    total 16
    -rw-------. 1 root root  1452 Feb  9 04:26 anaconda-ks.cfg
    -rw-r--r--  1 root root 11700 Feb  9 05:50 mysql84-community-release-el7-1.noarch.rpm
    [root@manager ~]#
    [root@manager ~]# yum install -y mysql84-community-release-el7-1.noarch.rpm
    [root@manager ~]# yum list | grep -i ^mysql
    mysql-community-server.x86_64    8.0.41-1.el7    mysql80-community
    [root@manager ~]# yum install -y mysql-community-server

Installation complete.

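3.1.2 Configuration overview

MHA has specific requirements for the MySQL replication environment. For example, every node must enable the binary log and the relay log, every slave must explicitly enable its read-only attribute, and relay_log_purge must be turned off. These settings are described up front here. The lab environment has four nodes, with roles assigned as follows (all machines run CentOS 7.x):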

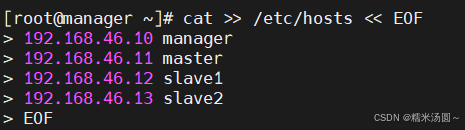

To simplify later steps, add the following entries to /etc/hosts on every node:

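    192.168.46.144 node1.kongd.com node1
    192.168.46.145 node2.kongd.com node2
    192.168.46.146 node3.kongd.com node3
    192.168.46.147 node4.kongd.com node4

For reference, the role assignment of the four nodes is:

| Hostname | IP address    | Role                     | Notes                                               |
|----------|---------------|--------------------------|-----------------------------------------------------|
| manager  | 192.168.46.10 | MHA manager (controller) | Monitoring and management                           |
| master   | 192.168.46.11 | Database master          | bin-log and relay-log enabled, relay_log_purge off  |
| slave1   | 192.168.46.12 | Database slave           | bin-log and relay-log enabled, relay_log_purge off  |
| slave2   | 192.168.46.13 | Database slave           | bin-log and relay-log enabled, relay_log_purge off  |

Note that the sample hosts entries use the names node1 to node4 and addresses that differ from the role table. The commands later in this walkthrough address the machines as manager, master, slave1, and slave2, so the /etc/hosts file used in practice must map those names to the IP addresses in the table.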
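The hosts entries can also be added with a small script instead of editing the file by hand on each node. The following is a minimal sketch, assuming the hostname-to-IP mapping from the role table; the function names `add_host_entry` and `populate_mha_hosts` are hypothetical helpers introduced here, not part of MHA or CentOS.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file unless NAME is already mapped there.
# The file is a parameter so the function can be tried on a scratch copy first.
add_host_entry() {
    hosts_file=$1 ip=$2 name=$3
    # Look for NAME as a whole field (column 2 or later) on any non-comment line.
    if awk -v n="$name" '
            !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Assumed mapping: hostnames and addresses taken from the role table.
populate_mha_hosts() {
    add_host_entry "$1" 192.168.46.10 manager
    add_host_entry "$1" 192.168.46.11 master
    add_host_entry "$1" 192.168.46.12 slave1
    add_host_entry "$1" 192.168.46.13 slave2
}
```

Running `populate_mha_hosts /etc/hosts` as root on each node would add the four entries; because existing names are skipped, running it a second time changes nothing.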

3.1.3 Configure the initial master node (the "one master" in one master, two slaves)

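    [root@master ~]# vim /etc/my.cnf
    # Add:
    [mysql]
    prompt = (\\u@\\h) [\d] >\\ 
    no_auto_rehash

    # Server options must sit under [mysqld], not under the client section [mysql]
    [mysqld]
    server_id = 1                     # must be unique for every node in the replication cluster
    skip_name_resolve                 # disable name resolution (optional)
    gtid-mode = on                    # enable GTID mode
    enforce-gtid-consistency = true   # enforce GTID consistency
    log-slave-updates = 1             # log updates received from a master to this node's own binary log
    log-bin = mysql-bin               # enable the binary log
    relay-log = relay-log             # enable the relay log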

    # Restart (the yum-installed service unit is mysqld)
    [root@master ~]# systemctl restart mysqld

3.1.4 Configuration required on all slave nodes (the "two slaves" in one master, two slaves)

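    [root@slave1 ~]# vim /etc/my.cnf
    # Add:
    [mysql]
    prompt = (\\u@\\h) [\d] >\\ 
    no_auto_rehash

    # Server options must sit under [mysqld], not under the client section [mysql]
    [mysqld]
    server_id = 2                     # must be unique for every node; use 3 on slave2
    skip_name_resolve                 # disable name resolution (optional)
    gtid-mode = on                    # enable GTID mode
    enforce-gtid-consistency = true   # enforce GTID consistency
    log-slave-updates = 1             # log updates received from the master to this node's own binary log
    log-bin = mysql-bin               # enable the binary log
    relay-log = relay-log             # enable the relay log
    relay_log_purge = 0               # do not automatically purge relay logs that are no longer needed
    read_only = 1                     # slaves must explicitly enable read-only (see 3.1.2)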

    # Restart (the yum-installed service unit is mysqld)
    [root@slave1 ~]# systemctl restart mysqld
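Since a single missing option is enough to make the later MHA checks fail, it can help to scan each node's my.cnf before moving on. The sketch below is one way to do that; `missing_options` is a hypothetical helper name, and the list of option names passed to it is an assumption drawn from the settings described in this section.

```shell
#!/bin/sh
# Print each required option that does not appear in a my.cnf-style file.
# '-' and '_' are treated as interchangeable in option names, as mysqld does.
missing_options() {
    cnf=$1
    shift
    for key in "$@"; do
        want=$(printf '%s' "$key" | tr '-' '_')
        # Strip comments, normalise dashes, then look for "key" or "key = value".
        if ! sed 's/#.*//' "$cnf" | tr '-' '_' |
            grep -Eq "^[[:space:]]*${want}[[:space:]]*(=|\$)"; then
            echo "$key"
        fi
    done
}

# Example (on a slave):
#   missing_options /etc/my.cnf server_id gtid_mode enforce_gtid_consistency \
#       log_slave_updates log_bin relay_log relay_log_purge read_only
```

The function only checks that an option is present, not which section it is in or what value it has, so an empty result is a necessary condition rather than proof of a correct configuration.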

3.1.5 Configure the one-master, multi-slave replication topology

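(1) Log in to MySQL on the master and on the slaves. The freshly installed MySQL ships with a temporary initial password, so change the password first and then log in.

Change the password as follows:

Method 1: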

    # Look up the initial password
    [root@master ~]# grep -i password /var/log/mysqld.log
    2025-02-08T14:27:03.203105Z 6 [Note] [MY-010454] [Server] A temporary password is generated for root@localhost: kgeiZ9)zCs92
    # Change the initial password
    [root@master ~]# mysqladmin -uroot -p'kgeiZ9)zCs92' password 'MySQL@123'
    mysqladmin: [Warning] Using a password on the command line interface can be insecure.
    Warning: Since password will be sent to server in plain text, use ssl connection to ensure password safety.
    [root@master ~]#

Method 2: extract the initial password into a variable

    [root@slave-1 ~]# tmp_pwd=`awk '/temporary password/ {print $NF}' /var/log/mysqld.log`
    [root@slave-1 ~]# echo $tmp_pwd
    Uj1cFtt#jr6;
    [root@slave-1 ~]# mysqladmin -uroot -p"$tmp_pwd" password 'MySQL@123'
    mysqladmin: [Warning] Using a password on the command line interface can be insecure.
    Warning: Since password will be sent to server in plain text, use ssl connection to ensure password safety.
    [root@slave-1 ~]#

To log in to MySQL without typing the password every time, record the user name and password in the client section of my.cnf:

    [root@master ~]# vim /etc/my.cnf
    # Add:
    [client]
    user = root
    password = MySQL@123

    # Log in to verify
    [root@master ~]# mysql
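The awk one-liner in method 2 prints every matching line, so it returns more than one password if the data directory was initialised more than once. A slightly more defensive sketch keeps only the last match; `latest_temp_password` is a hypothetical helper name, and the log path is passed in as an argument rather than assumed.

```shell
#!/bin/sh
# Print the most recent temporary root password recorded in a mysqld log.
# If the log holds several "temporary password" lines, only the last one
# is printed; nothing is printed when there is no such line.
latest_temp_password() {
    awk '/temporary password/ { pw = $NF } END { if (pw != "") print pw }' "$1"
}

# Example:
#   mysqladmin -uroot -p"$(latest_temp_password /var/log/mysqld.log)" password 'MySQL@123'
```

Quoting the command substitution matters here, because generated passwords routinely contain characters such as `)`, `#`, or `;` that the shell would otherwise interpret.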

(2) Configure master-slave replication

On the master:

    (root@localhost) [(none)] >create user slave@'192.168.46.%' identified with mysql_native_password by 'MySQL@123'
        -> ;
    Query OK, 0 rows affected (0.01 sec)

    (root@localhost) [(none)] >grant all on *.* to slave@'192.168.46.%';
    Query OK, 0 rows affected (0.00 sec)

    (root@localhost) [(none)] >show master status;
    +------------------+----------+--------------+------------------+------------------------------------------+
    | File             | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set                        |
    +------------------+----------+--------------+------------------+------------------------------------------+
    | mysql-bin.000002 |     1018 |              |                  | bd809305-e6ef-11ef-81ab-000c294ed7bd:1-3 |
    +------------------+----------+--------------+------------------+------------------------------------------+
    1 row in set (0.00 sec)

    (root@localhost) [(none)] >

On slave1 and slave2:

    (root@localhost) [(none)] >change master to
        -> master_user='slave',
        -> master_password='MySQL@123',
        -> master_host='192.168.46.11',
        -> master_auto_position=1;
    Query OK, 0 rows affected, 7 warnings (0.01 sec)

    (root@localhost) [(none)] >
    (root@localhost) [(none)] >start slave;
    Query OK, 0 rows affected, 1 warning (0.14 sec)

    (root@localhost) [(none)] >show slave status \G

Master-slave replication is now configured.
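Replication is only healthy when both the I/O thread and the SQL thread on each slave report `Yes` in the `show slave status` output. The sketch below checks exactly those two fields from the command's `\G` output; `replication_running` is a hypothetical helper name, and it assumes the `Slave_IO_Running` and `Slave_SQL_Running` field names printed by `SHOW SLAVE STATUS` in MySQL 8.0.

```shell
#!/bin/sh
# Read "SHOW SLAVE STATUS\G" output on stdin and succeed only if both
# replication threads report "Yes".
replication_running() {
    awk -F': *' '
        {
            field = $1
            sub(/^[ \t]+/, "", field)      # \G output right-aligns field names
        }
        field == "Slave_IO_Running"  { io  = $2 }
        field == "Slave_SQL_Running" { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Example (on a slave, using the credentials stored in the [client] section):
#   mysql -e 'show slave status\G' | replication_running && echo "replication OK"
```

Matching the field name exactly avoids being fooled by `Slave_SQL_Running_State`, which is a free-text description rather than a Yes/No flag.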

3.2 Install and configure MHA

3.2.1 Grant privileges on the master

    (root@localhost) [(none)] >create user 'mhaadmin'@'192.168.46.%' identified with mysql_native_password by 'Mha@1234';
    Query OK, 0 rows affected (0.01 sec)

    (root@localhost) [(none)] >grant all on *.* to 'mhaadmin'@'192.168.46.%';
    Query OK, 0 rows affected (0.01 sec)

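3.2.2 Set up SSH trust between the nodes

Every node in the MHA cluster must be able to reach every other node over key-based (passwordless) SSH, which MHA uses for remote control and data management.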

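    [root@all ~]# ssh-keygen -f ~/.ssh/id_rsa -P '' -q
    [root@all ~]# ssh-copy-id manager

Every node generates a key pair and copies its public key to the manager node. The manager's authorized_keys file, which then holds all four public keys, is finally distributed to the other nodes.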

    [root@manager ~]# cd ~/.ssh/
    [root@manager .ssh]# ls
    authorized_keys  id_rsa  id_rsa.pub  known_hosts
    [root@manager .ssh]# cat authorized_keys
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCubj4tkJ/U2WjbFqeK3b7v2nqYt2rsi/YO2CGaP4T46ZZ2
    Et+BcSCixjb8ucAoxnnjfpzRLVW9g0bbdRkk/nR3uYxPSWNEerW65ZeobUcsK54A3fwbrwZCBWuCXU75d05d
    yVpRomz3W4UAsedMNOJmByfxF+fb57EDcZMUfBy75QcpwxVUUropQY9UrgGN6x3/IjMm5MYwMu7XxWK/9IN6
    y7rFlA70PmdzPAAVBYsPc7ZTHx1ahruag1SeEEPy5OcBDorQZLVU0oG+Y+/TlqqzWEQU9ekFTGgyoclS4MW+
    D8ileRNC/+1iAx+cQP50yWJ1tXWfVqjMt+3bkjhFsNLl root@slave2
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC8qeD55Fb1FLPts2qrTttpU8ZHp15kmPjH1m6UAf8GH6kH
    nCJbA043VKZ39luhzP743230pTgLRNDuavdrJB29HnTq01D64+SttBJJqg+C3/QMvWXveutrwaik9/4bYflY
    CbonFuaDzAWtLucmWFojd+8+z+Wi2zC44eKFXFgw4o6GUYel3edJlQ0FCj/QNplxkaLKDYVSfvugLRlteH6z
    G3XlAtI4ExCOTv378oPU6TQJoKBPXiT4IbRLzTP+WlwHlRz6t3pid06lG2y2HvGoBuy4pAcQHCDgjCltOzl+
    /yoTQx5WRuKmPHXkCSJj/xSk7+AaLjHrfdNpwjDK4Yqf root@slave1
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC9rcYOb1WkC2YpsrWh7nrnq+SxRJFqxfOE+5dwSiK+WQPo
    eEhWK/qoWLgFv8sgLPnlTTmgdxJDXuJMI2EspA4HewsnE4LFals+d7Na5PoRJ7SMusANs8xqeJfGMjeYTBXi
    PzdB2dF+PsBJC9BmDzDeJi/mA9yr+0r/drZeAt65nim86oZabVXM8yZtoA4hDe4iKNi+edh6RsXbTTYf1Jxd
    9+tcYRSXB9gc93R1G1UcuX81aNBtB070PDJNvsYyxoN0V8/nqk/7eGn3QkG4W8aYFkM5FqjvbRAyDf0cwM/e
    WIDrA2L35QkyQrRGtZ9Vo3rSx4h/InZujEhtAMGxBzh7 root@manager
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCuZjZZ3Lzg+qQ3XZZbRv7oZFRudt+TehTjTkFRrDkD/WqD
    csUPIELF+Hfst+AwReuVPvatlUgvOjvBloRla68wGlZP/UOJgcqxIiKZJCzzz/qVChHWRpVWKUbyfJuCp5Yi
    K/oPo/t2uG1sIROmZneIOOuZHk5agCdQjgjb20Du+Yga03CMKTHIXY6G11NAVjeHNU5ppgeQu23KuuWtms2B
    2BAnmZf1FUExwLNmkjGC3BkMWEuWtoR8fwINgGrENcT1VIIynv5TnUgpB6qlAqSZjBNXmxRCCGsCQ9nm5yyg
    LrUm9Q9dXc/yqEUrBaaTa4rZz0ns1wrFL+Z1Wk4AMw53 root@master
    [root@manager .ssh]#
    [root@manager ~]# scp ~/.ssh/authorized_keys master:~/.ssh/
    root@master's password:
    authorized_keys                               100% 1573     1.1MB/s   00:00
    [root@manager ~]# scp ~/.ssh/authorized_keys slave1:~/.ssh/
    root@slave1's password:
    authorized_keys                               100% 1573     1.7MB/s   00:00
    [root@manager ~]# scp ~/.ssh/authorized_keys slave2:~/.ssh/
    root@slave2's password:
    authorized_keys                               100% 1573   955.5KB/s   00:00
    [root@manager ~]#

Verification (run on every node):

    [root@slave1 .ssh]# for i in manager master slave1 slave2;do ssh $i hostname;done
    manager
    master
    slave1
    slave2
    [root@slave1 .ssh]#
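The verification loop has to be repeated by hand on each node. As an alternative, the whole mesh can be probed from one machine by hopping through each source host. This is a minimal sketch under the assumption that the four hostnames resolve on every node; `ssh_pairs` and `check_ssh_mesh` are hypothetical helper names.

```shell
#!/bin/sh
# Print every ordered (source, destination) pair of distinct hosts, one per line.
ssh_pairs() {
    for src in "$@"; do
        for dst in "$@"; do
            [ "$src" = "$dst" ] || echo "$src $dst"
        done
    done
}

# Hop from this machine to SRC, then from SRC to DST.
# BatchMode=yes makes ssh fail instead of prompting for a password, and -n
# stops ssh from swallowing the pair list that the loop reads on stdin.
check_ssh_mesh() {
    ssh_pairs "$@" | while read -r src dst; do
        if ssh -n -o BatchMode=yes "$src" "ssh -o BatchMode=yes $dst true"; then
            echo "ok   $src -> $dst"
        else
            echo "FAIL $src -> $dst"
        fi
    done
}

# Example:
#   check_ssh_mesh manager master slave1 slave2
```

With four hosts this exercises twelve directed connections. A host key that has not yet been accepted is also reported as a failure in batch mode, which is useful because MHA cannot answer that prompt either.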

3.2.3 Install the MHA packages

Package download locations:

MHA project page: https://code.google.com/archive/p/mysql-master-ha/

GitHub downloads:

    https://github.com/yoshinorim/mha4mysql-manager/releases/tag/v0.58
    https://github.com/yoshinorim/mha4mysql-node/releases/tag/v0.58

In this step the manager node needs one more package than the other nodes. What to install:

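The MHA Manager server needs both the manager and the node packages; the database nodes need only the node package.

MHA Node depends on perl-DBD-MySQL, so configure the EPEL repository on every node so that yum can resolve the Perl dependencies.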

Run on manager, master, slave1, and slave2:

    [root@all ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

Once the repository is configured, install the packages.

On manager:

    [root@manager ~]# ll
    total 132
    -rw-------. 1 root root  1452 Feb  9 04:26 anaconda-ks.cfg
    -rw-r--r--  1 root root 81024 Feb 10 19:19 mha4mysql-manager-0.58-0.el7.centos.noarch.rpm
    -rw-r--r--  1 root root 36328 Feb 10 19:19 mha4mysql-node-0.58-0.el7.centos.noarch.rpm
    -rw-r--r--  1 root root 11700 Feb 10 04:48 mysql84-community-release-el7-1.noarch.rpm
    [root@manager ~]# yum install -y mha4mysql-manager-0.58-0.el7.centos.noarch.rpm mha4mysql-node-0.58-0.el7.centos.noarch.rpm

On master, slave1, and slave2:

    [root@slave2 ~]# yum install -y mha4mysql-node-0.58-0.el7.centos.noarch.rpm

MHA installation is complete.

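3.2.4 Define the MHA management configuration file

MHA uses a dedicated administrative MySQL user. It was created on the master in 3.2.1, and replication propagates it to all three database nodes. On the manager, reference that user in the application configuration file: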

    [root@manager ~]# mkdir /etc/mha
    [root@manager ~]# mkdir -p /var/log/mha/app1
    [root@manager ~]# vim /etc/mha/app1.cnf
    [root@manager ~]#
    [root@manager ~]# cat -v /etc/mha/app1.cnf
    [server default]
    user=mhaadmin
    password=Mha@1234
    manager_workdir=/var/log/mha/app1
    manager_log=/var/log/mha/app1/manager.log
    ssh_user=root
    repl_user=slave
    repl_password=MySQL@123
    ping_interval=1

    [server1]
    hostname=192.168.46.11
    ssh_port=22
    candidate_master=1

    [server2]
    hostname=192.168.46.12
    ssh_port=22
    candidate_master=1

    [server3]
    hostname=192.168.46.13
    ssh_port=22
    candidate_master=1
    [root@manager ~]#
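A misspelled key in app1.cnf is easy to miss by eye, so it can be worth reading the values back programmatically before running the MHA checks. The following sketch is a small INI reader written for that purpose; `mha_conf_get` is a hypothetical helper, not an MHA command, and the list of keys in the usage comment is an assumption based on the file shown here.

```shell
#!/bin/sh
# Print the value of KEY inside [SECTION] of an INI-style file such as app1.cnf.
# Usage: mha_conf_get FILE SECTION KEY
# Prints nothing if the section or the key is absent.
mha_conf_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*\[/ {                       # section header: remember its name
            name = $0
            sub(/^[ \t]*\[/, "", name)
            sub(/\].*$/, "", name)
            current = name
            next
        }
        current == section {
            pos = index($0, "=")
            if (pos == 0) next              # not a key=value line
            k = substr($0, 1, pos - 1)
            v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) print v
        }
    ' "$1"
}

# Example: report keys that are missing from [server default].
#   for key in user password ssh_user repl_user repl_password; do
#       [ -n "$(mha_conf_get /etc/mha/app1.cnf 'server default' "$key")" ] ||
#           echo "missing: $key"
#   done
```

The same function can list the managed hosts, for example `mha_conf_get /etc/mha/app1.cnf server2 hostname`, which is convenient when scripting per-node checks.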

3.2.5 Check the four nodes

1) Check that SSH trust between the nodes is working

Run the following command on the manager:

    [root@manager ~]# masterha_check_ssh --conf=/etc/mha/app1.cnf
    Mon Feb 10 19:48:25 2025 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.
    Mon Feb 10 19:48:25 2025 - [info] Reading application default configuration from /etc/mha/app1.cnf..
    Mon Feb 10 19:48:25 2025 - [info] Reading server configuration from /etc/mha/app1.cnf..
    Mon Feb 10 19:48:25 2025 - [info] Starting SSH connection tests..
    Mon Feb 10 19:48:26 2025 - [debug]
    Mon Feb 10 19:48:25 2025 - [debug]  Connecting via SSH from root@192.168.46.11(192.168.46.11:22) to root@192.168.46.12(192.168.46.12:22)..
    Mon Feb 10 19:48:25 2025 - [debug]   ok.
    Mon Feb 10 19:48:25 2025 - [debug]  Connecting via SSH from root@192.168.46.11(192.168.46.11:22) to root@192.168.46.13(192.168.46.13:22)..
    Mon Feb 10 19:48:26 2025 - [debug]   ok.
    Mon Feb 10 19:48:26 2025 - [debug]
    Mon Feb 10 19:48:26 2025 - [debug]  Connecting via SSH from root@192.168.46.12(192.168.46.12:22) to root@192.168.46.11(192.168.46.11:22)..
    Mon Feb 10 19:48:26 2025 - [debug]   ok.
    Mon Feb 10 19:48:26 2025 - [debug]  Connecting via SSH from root@192.168.46.12(192.168.46.12:22) to root@192.168.46.13(192.168.46.13:22)..
    Mon Feb 10 19:48:26 2025 - [debug]   ok.
    Mon Feb 10 19:48:28 2025 - [debug]
    Mon Feb 10 19:48:26 2025 - [debug]  Connecting via SSH from root@192.168.46.13(192.168.46.13:22) to root@192.168.46.11(192.168.46.11:22)..
    Mon Feb 10 19:48:26 2025 - [debug]   ok.
    Mon Feb 10 19:48:26 2025 - [debug]  Connecting via SSH from root@192.168.46.13(192.168.46.13:22) to root@192.168.46.12(192.168.46.12:22)..
    Mon Feb 10 19:48:27 2025 - [debug]   ok.
    Mon Feb 10 19:48:28 2025 - [info] All SSH connection tests passed successfully.
    [root@manager ~]#

2) Check that the connection parameters for the managed MySQL replication cluster are OK

    [root@manager ~]# masterha_check_repl --conf=/etc/mha/app1.cnf
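Both checks need to pass before MHA is started, so they can be chained in a small wrapper that stops at the first failure. This is a sketch that assumes each check command exits with a non-zero status when it fails; `run_mha_checks` is a hypothetical helper name, and the commands to run are passed as arguments so the wrapper itself makes no assumptions about what is installed.

```shell
#!/bin/sh
# Run each check command as "CMD --conf=FILE" and stop at the first failure.
# Usage: run_mha_checks FILE CMD [CMD...]
# Relies on the commands signalling failure through a non-zero exit status.
run_mha_checks() {
    conf=$1
    shift
    for check in "$@"; do
        if "$check" --conf="$conf" > /dev/null 2>&1; then
            echo "passed: $check"
        else
            echo "FAILED: $check"
            return 1
        fi
    done
}

# Example (on the manager):
#   run_mha_checks /etc/mha/app1.cnf masterha_check_ssh masterha_check_repl
```

The wrapper discards the detailed log output, so when a check is reported as failed, rerun that command directly to see why.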

3.3 Start MHA

1. Start MHA on the manager node:

    [root@manager ~]# nohup masterha_manager --conf=/etc/mha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1 &
    [1] 2699

2. Check the status of the master node

    [root@manager ~]# masterha_check_status --conf=/etc/mha/app1.cnf
    app1 (pid:2699) is running(0:PING_OK), master:192.168.46.11

3. Write a service startup script

    [root@manager ~]# vim /etc/init.d/masterha_managerd
    #!/bin/bash
    # chkconfig: 35 80 20
    # description: MHA management script.

    STARTEXEC="/usr/bin/masterha_manager --conf"
    STOPEXEC="/usr/bin/masterha_stop --conf"
    CONF="/etc/mha/app1.cnf"
    # Number of running masterha_manager processes. grep -w keeps this
    # script's own name (masterha_managerd) from being counted.
    process_count=`ps -ef |grep -w masterha_manager|grep -v grep|wc -l`
    PARAMS="--ignore_last_failover"

    case "$1" in
        start)
            # Any running instance means the manager is already up
            if [ $process_count -gt 0 ]
            then
                echo "masterha_manager exists, process is already running"
            else
                echo "Starting Masterha Manager"
                $STARTEXEC $CONF $PARAMS < /dev/null > /var/log/mha/app1/manager.log 2>&1 &
            fi
            ;;
        stop)
            if [ $process_count -eq 0 ]
            then
                echo "Masterha Manager does not exist, process is not running"
            else
                echo "Stopping ..."
                $STOPEXEC $CONF
                # Wait until the manager process has actually exited
                while true
                do
                    process_count=`ps -ef |grep -w masterha_manager|grep -v grep|wc -l`
                    if [ $process_count -gt 0 ]
                    then
                        sleep 1
                    else
                        break
                    fi
                done
                echo "Master Manager stopped"
            fi
            ;;
        *)
            echo "Please use start or stop as first argument"
            ;;
    esac

    [root@manager ~]# chmod +x /etc/init.d/masterha_managerd
    [root@manager ~]# chkconfig --add masterha_managerd
    [root@manager ~]# chkconfig masterha_managerd on

4. Test the service script

MHA was started from the command line earlier, so stop that process first:

    [root@manager ~]# ps -ef | grep masterha
    root  2699  2290  0 19:50 pts/0  00:00:00 perl /usr/bin/masterha_manager --conf=/etc/mha/app1.cnf --remove_dead_master_conf --ignore_last_failover
    root  3138  2290  0 19:57 pts/0  00:00:00 grep --color=auto masterha
    [root@manager ~]# kill 2699
    [root@manager ~]# ps -ef | grep masterha
    root  3161  2290  0 19:57 pts/0  00:00:00 grep --color=auto masterha
    [1]+  Exit 1  nohup masterha_manager --conf=/etc/mha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1
    [root@manager ~]# ps -ef | grep masterha
    root  3163  2290  0 19:57 pts/0  00:00:00 grep --color=auto masterha
    [root@manager ~]#

The process has stopped. Now test the service script:

    [root@manager ~]# systemctl start masterha_managerd
    [root@manager ~]# systemctl status masterha_managerd
    [root@manager ~]# ps -ef | grep -w masterha_manager
    [root@manager ~]# systemctl stop masterha_managerd
    [root@manager ~]# ps -ef | grep -w masterha_manager
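While the manager is running, the status line printed by masterha_check_status (step 2 above) can be turned into a scripted health probe. The sketch below extracts the monitored master from that line; `current_master` is a hypothetical helper name, and the pattern is based solely on the output format shown in this walkthrough, so it may need adjusting for other MHA versions.

```shell
#!/bin/sh
# Read masterha_check_status output on stdin. Print the address of the
# monitored master and succeed only if the manager reports PING_OK.
current_master() {
    sed -n 's/^.*is running(0:PING_OK), master:\([^[:space:]]*\).*$/\1/p' | grep .
}

# Example (on the manager):
#   if m=$(masterha_check_status --conf=/etc/mha/app1.cnf | current_master); then
#       echo "MHA is monitoring master $m"
#   else
#       echo "MHA is not running or not healthy"
#   fi
```

The trailing `grep .` makes the function's exit status reflect whether anything was extracted, so it can be used directly in an `if` condition.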

3.4 Configure the VIP

1. Write the script

    [root@manager ~]# vim /usr/local/bin/master_ip_failover
    #!/usr/bin/env perl
    use strict;
    use warnings FATAL => 'all';
    use Getopt::Long;

    my (
        $command, $ssh_user, $orig_master_host, $orig_master_ip,
        $orig_master_port, $new_master_host, $new_master_ip, $new_master_port
    );

    # The VIP and interface name must match the environment. These values
    # match the address brought up manually on the master in step 3 below
    # (interface ens33, VIP 192.168.46.15/24).
    my $vip = '192.168.46.15/24';
    my $key = '1';
    my $ssh_start_vip = "/sbin/ifconfig ens33:$key $vip";
    my $ssh_stop_vip  = "/sbin/ifconfig ens33:$key down";

    GetOptions(
        'command=s'          => \$command,
        'ssh_user=s'         => \$ssh_user,
        'orig_master_host=s' => \$orig_master_host,
        'orig_master_ip=s'   => \$orig_master_ip,
        'orig_master_port=i' => \$orig_master_port,
        'new_master_host=s'  => \$new_master_host,
        'new_master_ip=s'    => \$new_master_ip,
        'new_master_port=i'  => \$new_master_port,
    );

    exit &main();

    sub main {
        print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

        if ( $command eq "stop" || $command eq "stopssh" ) {
            # Take the VIP down on the old master
            my $exit_code = 1;
            eval {
                print "Disabling the VIP on old master: $orig_master_host \n";
                &stop_vip();
                $exit_code = 0;
            };
            if ($@) {
                warn "Got Error: $@\n";
                exit $exit_code;
            }
            exit $exit_code;
        }
        elsif ( $command eq "start" ) {
            # Bring the VIP up on the new master
            my $exit_code = 10;
            eval {
                print "Enabling the VIP - $vip on the new master - $new_master_host \n";
                &start_vip();
                $exit_code = 0;
            };
            if ($@) {
                warn $@;
                exit $exit_code;
            }
            exit $exit_code;
        }
        elsif ( $command eq "status" ) {
            print "Checking the Status of the script.. OK \n";
            exit 0;
        }
        else {
            &usage();
            exit 1;
        }
    }

    sub start_vip() {
        `ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;
    }

    sub stop_vip() {
        return 0 unless ($ssh_user);
        `ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;
    }

    sub usage {
        print "Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";
    }

    [root@manager ~]# chmod +x /usr/local/bin/master_ip_failover

2. Update the manager configuration file

    [root@manager ~]# vim /etc/mha/app1.cnf
    # Add under [server default]:
    master_ip_failover_script=/usr/local/bin/master_ip_failover

3. On the master, bring up the VIP manually for the first time

    [root@master ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:4e:d7:bd brd ff:ff:ff:ff:ff:ff
        inet 192.168.46.11/24 brd 192.168.46.255 scope global noprefixroute ens33
           valid_lft forever preferred_lft forever
        inet6 fe80::22df:a835:ba99:ba96/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    [root@master ~]# ifconfig ens33:1 192.168.46.15/24
    [root@master ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:4e:d7:bd brd ff:ff:ff:ff:ff:ff
        inet 192.168.46.11/24 brd 192.168.46.255 scope global noprefixroute ens33
           valid_lft forever preferred_lft forever
        inet 192.168.46.15/24 brd 192.168.46.255 scope global secondary ens33:1
           valid_lft forever preferred_lft forever
        inet6 fe80::22df:a835:ba99:ba96/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    [root@master ~]#

4. Restart MHA

    [root@manager ~]# systemctl restart masterha_managerd

3.5 Test MHA failover

3.5.1 Stop the mysql service on the master to simulate a master crash

    [root@master ~]# systemctl stop mysqld.service

3.5.2 Check the log on the manager node

    [root@manager ~]# tail -1 /var/log/mha/app1/manager.log
    Master failover to 192.168.46.12(192.168.46.12:3306) completed successfully.
    [root@manager ~]#

3.5.3 Check the VIP

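    [root@slave1 ~]# ifconfig -a |grep -A 2 "ens33"
    ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.46.12  netmask 255.255.255.0  broadcast 192.168.46.255
            inet6 fe80::22df:a835:ba99:ba96  prefixlen 64  scopeid 0x20<link>
    [root@slave1 ~]#

Note that the output above lists only the primary address 192.168.46.12. If the VIP has moved to slave1, an additional ens33:1 entry carrying 192.168.46.15 is listed as well; if it is missing, check that the interface name and VIP in master_ip_failover match the environment.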
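The two manual checks in 3.5.2 and 3.5.3 can be scripted so that a failover test yields a clear pass or fail. The sketch below assumes the log line format shown in 3.5.2 and the one-line-per-address output of `ip -o -4 addr show`; `failover_target` and `has_address` are hypothetical helper names.

```shell
#!/bin/sh
# Print the host named in the last "Master failover to ... completed
# successfully." line of an MHA manager log (nothing if there is none).
failover_target() {
    sed -n 's/^.*Master failover to \([^(]*\)(.*completed successfully\..*$/\1/p' "$1" |
        tail -n 1
}

# Read "ip -o -4 addr show" output on stdin and succeed if ADDRESS is
# assigned to any interface. The prefix length is ignored.
has_address() {
    awk -v want="$1" '
        $3 == "inet" { split($4, parts, "/"); if (parts[1] == want) found = 1 }
        END { exit !found }
    '
}

# Example (on the manager, with the VIP used in this lab):
#   new_master=$(failover_target /var/log/mha/app1/manager.log)
#   ssh "$new_master" ip -o -4 addr show | has_address 192.168.46.15 &&
#       echo "VIP is on $new_master"
```

Parsing `ip` output rather than `ifconfig` avoids depending on how alias interfaces such as ens33:1 are grouped in the listing.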

The lab is complete.
