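First, a quick self-introduction: I graduated from Zhejiang University, have worked at big tech companies such as Huawei and ByteDance, and am currently a P7 at Alibaba.

I know that most programmers who want to level up end up figuring things out on their own, but unsystematic self-study is slow and inefficient, and it is easy to hit a ceiling where your skills stop improving!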

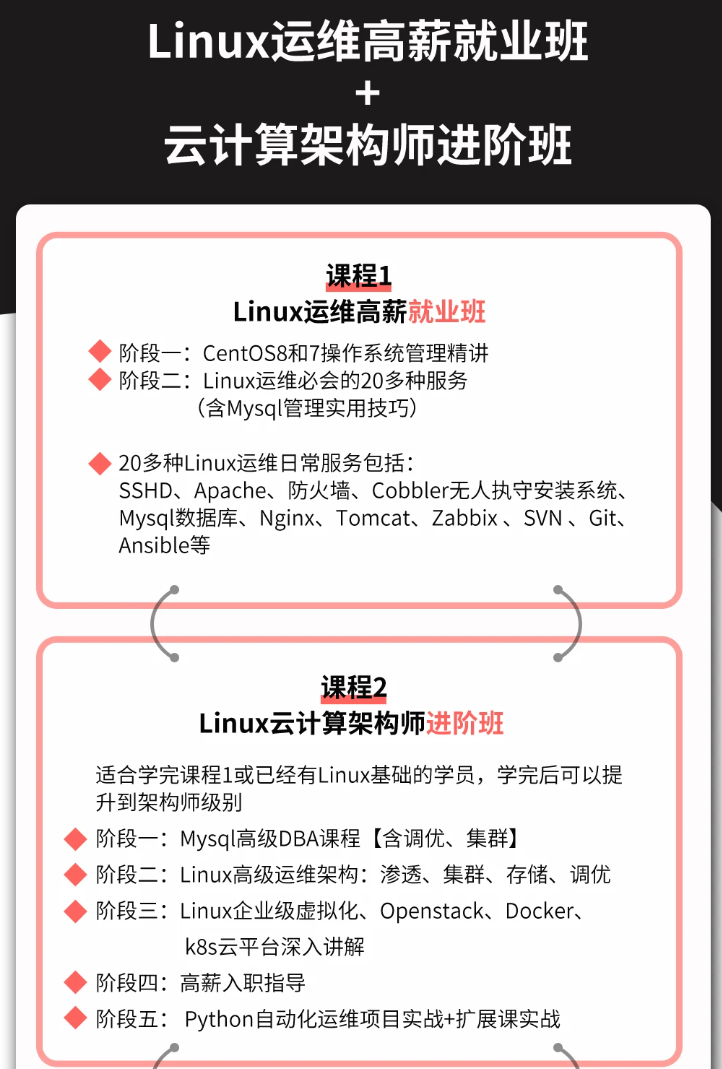

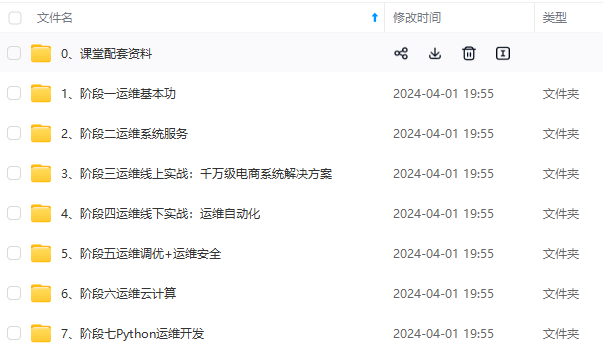

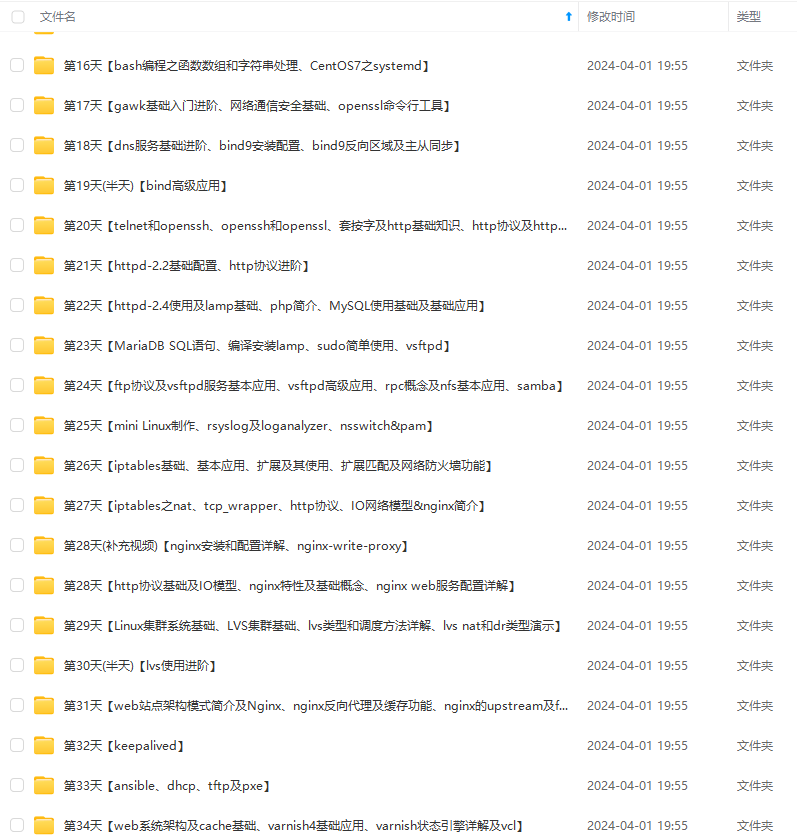

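So I put together a set of "2024 Latest Complete Linux Ops Learning Materials". The goal is simple: to help people who want to improve through self-study but don't know where to start.

It includes zero-background material for beginners as well as advanced courses for those with 3+ years of experience, covering more than 95% of ops topics in a truly systematic way!

Because there are many files, only part of the table of contents is shown in the screenshots. The full set includes big-tech interview write-ups, study notes, source-code handouts, hands-on projects, a learning roadmap, and lecture videos, and it will keep being updated.

If you need these materials, add WeChat (V): vip1024b (note: 运维, i.e. "ops")

Main text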

```shell
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["kube-dns"]
    verbs: ["list", "get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: rbd-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rbd-provisioner
  namespace: kube-system
rules:
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbd-provisioner
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rbd-provisioner
subjects:
- kind: ServiceAccount
  name: rbd-provisioner
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rbd-provisioner
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: rbd-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: rbd-provisioner
    spec:
      containers:
      - name: rbd-provisioner
        image: quay.io/external_storage/rbd-provisioner:latest
        env:
        - name: PROVISIONER_NAME
          value: ceph.com/rbd
      serviceAccount: rbd-provisioner
EOF

kubectl apply -f external-storage-rbd-provisioner.yaml
```
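This manifest bundles several objects (ServiceAccount, RBAC rules and the Deployment) in one file, and kubectl only splits a file into separate objects at `---` document separators. Those separators are easily lost when copying from a web page, so here is a small sketch that flags any document containing more than one top-level `kind:`. The function name `check_one_kind_per_doc` is hypothetical, and the check is a line-based heuristic, not a YAML parser:

```shell
# Hypothetical helper: report YAML documents that contain more than one
# top-level "kind:" line, which usually means a "---" separator is missing.
# Usage: check_one_kind_per_doc FILE
check_one_kind_per_doc() {
  awk '
    # a line starting with --- begins a new document
    /^---/ { doc++; next }
    # only unindented kind: lines count; nested ones (roleRef, subjects) are indented
    /^kind:/ {
      kinds[doc]++
      if (kinds[doc] > 1) {
        printf "document %d: extra top-level kind at line %d\n", doc + 0, NR
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }
  ' "$1"
}
```

For example, `check_one_kind_per_doc external-storage-rbd-provisioner.yaml` prints nothing and returns 0 when every object sits in its own document.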

2. Configure the StorageClass (prerequisites)

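#1. When a Pod is created, kubelet uses the `rbd` command to detect and mount the Ceph image backing the PV, so the Ceph client package ceph-common must be installed on every worker node. Also copy Ceph's ceph.client.admin.keyring and ceph.conf to /etc/ceph on the master.

Push the files to the master node:

```shell
scp -rp ceph.client.admin.keyring ceph.conf root@192.168.0.10:/etc/ceph/
```

Install on every node of the k8s cluster (after adding the Ceph yum repository):

```shell
yum -y install ceph-common
```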
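Because kubelet calls the `rbd` CLI on whichever node runs the Pod, a missing client is a common cause of mount failures. A minimal preflight sketch, assuming the default `/etc/ceph` layout used above; it checks that `rbd` is on the PATH and that the config directory holds ceph.conf and the admin keyring. The function name `ceph_client_preflight` is hypothetical:

```shell
# Hypothetical preflight helper: run on each node before scheduling Ceph-backed Pods.
# Usage: ceph_client_preflight [CONF_DIR]   (CONF_DIR defaults to /etc/ceph)
ceph_client_preflight() {
  conf_dir=${1:-/etc/ceph}
  missing=0
  # the rbd CLI ships in ceph-common and is what kubelet invokes to map images
  if ! command -v rbd >/dev/null 2>&1; then
    echo "missing: rbd command (install ceph-common)"
    missing=1
  fi
  # files copied from the Ceph cluster in step #1
  for f in ceph.conf ceph.client.admin.keyring; do
    if [ ! -r "$conf_dir/$f" ]; then
      echo "missing: $conf_dir/$f"
      missing=1
    fi
  done
  # 0 when everything is present, 1 otherwise
  return "$missing"
}
```

It prints one line per missing item. Whether worker nodes also need the keyring and ceph.conf depends on your setup (the steps above only copy them to the master), so adjust the file list as needed.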

#2. Create the OSD pool (on a Ceph mon or admin node):

```shell
ceph osd pool create kube 16 16

[root@k8s-master ceph]# ceph osd lspools
1 .rgw.root
2 default.rgw.control
3 default.rgw.meta
4 default.rgw.log
5 rbd
6 kube
7 cephfs-data
8 cephfs-metadata
```
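The two `16` arguments are `pg_num` and `pgp_num`. For clusters that do not use the PG autoscaler, an often-quoted rule of thumb from the Ceph documentation is total PGs ≈ (number of OSDs × 100) / replica count, rounded up to a power of two. A small sketch of that arithmetic; the helper name `pg_count` is hypothetical, and the result is only a starting point, not a tuned value:

```shell
# Hypothetical helper: rule-of-thumb PG count, (OSDs * 100) / replicas,
# rounded up to the next power of two.
# Usage: pg_count OSD_COUNT REPLICA_COUNT
pg_count() {
  osds=$1
  size=$2
  # integer ceiling of (osds * 100) / size
  raw=$(( (osds * 100 + size - 1) / size ))
  pg=1
  # smallest power of two that is >= raw
  while [ "$pg" -lt "$raw" ]; do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}
```

For example, `pg_count 3 3` prints 128 and `pg_count 10 3` prints 512.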

#3. Create the user that Kubernetes uses to access Ceph (on a Ceph mon or admin node):

```shell
ceph auth get-or-create client.kube mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=kube' -o ceph.client.kube.keyring
```
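These caps give `client.kube` read-only access to the monitors, `class-read` on the `rbd_children` objects (used when working with cloned images), and full `rwx` only on the `kube` pool. If you create one such user per pool, a tiny dry-run helper keeps the caps string consistent. `rbd_user_cmd` is a hypothetical name, and it only prints the command; it does not run it:

```shell
# Hypothetical helper: print (not execute) the ceph auth command for a client/pool pair.
# Usage: rbd_user_cmd CLIENT POOL
rbd_user_cmd() {
  client=$1
  pool=$2
  printf "ceph auth get-or-create client.%s mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=%s' -o ceph.client.%s.keyring\n" \
    "$client" "$pool" "$client"
}
```

`rbd_user_cmd kube kube` reproduces the command above; review the output, then pipe it to `sh` on a mon or admin node.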

#4. Retrieve the keys of the admin and kube users (on a Ceph mon or admin node):

```shell
ceph auth get-key client.admin
AQBQhrJeRHJmLxAATSxU4vjf79KgJpVkNb+VsQ==

ceph auth get-key client.kube
AQCQqztfSQNWFxAAdBmLqhJ/thboY0vGcZ7ixQ==
```

#5. Create the admin secret (using the admin key):

```shell
kubectl create secret generic ceph-secret --type="kubernetes.io/rbd" \
  --from-literal=key=AQBQhrJeRHJmLxAATSxU4vjf79KgJpVkNb+VsQ== \
  --namespace=kube-system
```
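`kubectl create secret generic --from-literal` base64-encodes the raw key and stores it under `data.key`. If you prefer to keep the Secret as a manifest, here is a sketch that renders the equivalent YAML. The function name `rbd_secret_yaml` is hypothetical, and its output contains the key, so treat it as sensitive:

```shell
# Hypothetical helper: render a kubernetes.io/rbd Secret manifest.
# Usage: rbd_secret_yaml NAME NAMESPACE KEY
rbd_secret_yaml() {
  name=$1
  namespace=$2
  # Secret data values must be base64; strip any line wrapping the tool adds
  encoded=$(printf '%s' "$3" | base64 | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $name
  namespace: $namespace
type: kubernetes.io/rbd
data:
  key: $encoded
EOF
}
```

For example: `rbd_secret_yaml ceph-secret kube-system "$(ceph auth get-key client.admin)" | kubectl apply -f -`.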

#6. In the default namespace, create the secret that PVCs use to access Ceph (using the kube user's key):

```shell
kubectl create secret generic ceph-user-secret --type="kubernetes.io/rbd" \
  --from-literal=key=AQCQqztfSQNWFxAAdBmLqhJ/thboY0vGcZ7ixQ== \
  --namespace=default

[root@k8s-master ceph]# kubectl get secret
NAME               TYPE                DATA   AGE
ceph-user-secret   kubernetes.io/rbd   1      30s
```

3. Configure the StorageClass

```shell
cat >storageclass-ceph-rdb.yaml<<EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: dynamic-ceph-rdb
provisioner: ceph.com/rbd
parameters:
  monitors: 192.168.0.6:6789,192.168.0.7:6789,192.168.0.8:6789
  adminId: admin
  adminSecretName: ceph-secret
  adminSecretNamespace: kube-system
  pool: kube
  userId: kube
  userSecretName: ceph-user-secret
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"
EOF
```

4. Create it from the YAML

```shell
kubectl apply -f storageclass-ceph-rdb.yaml
```

5. Check the StorageClass

```shell
[root@k8s-master ceph]# kubectl get sc
NAME               PROVISIONER    RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
dynamic-ceph-rdb   ceph.com/rbd   Delete          Immediate           false                  11s
```
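The StorageClass's `provisioner: ceph.com/rbd` has to match the `PROVISIONER_NAME` value given to the rbd-provisioner Deployment: an external provisioner only serves claims whose StorageClass names it, so a mismatch leaves PVCs stuck in Pending. A quick consistency check, assuming the manifest layout used in this article; the helper names are hypothetical and the awk patterns are a sketch, not a YAML parser:

```shell
# Print the top-level "provisioner:" value of a StorageClass manifest.
sc_provisioner() {
  awk '/^provisioner:/ { print $2; exit }' "$1"
}

# Print the "value:" that follows "- name: PROVISIONER_NAME" in a Deployment manifest.
deploy_provisioner() {
  awk 'found && /value:/ { print $2; exit } /name: PROVISIONER_NAME/ { found = 1 }' "$1"
}

# Hypothetical check. Usage: check_provisioner STORAGECLASS_FILE DEPLOYMENT_FILE
check_provisioner() {
  sc=$(sc_provisioner "$1")
  dep=$(deploy_provisioner "$2")
  if [ -n "$sc" ] && [ "$sc" = "$dep" ]; then
    echo "ok: $sc"
  else
    echo "mismatch: StorageClass=$sc Deployment=$dep"
    return 1
  fi
}
```

With the files from this article, `check_provisioner storageclass-ceph-rdb.yaml external-storage-rbd-provisioner.yaml` should print `ok: ceph.com/rbd`.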

#### Testing

1. Create a test PVC

```shell
cat >ceph-rdb-pvc-test.yaml<<EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ceph-rdb-claim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: dynamic-ceph-rdb
  resources:
    requests:
      storage: 2Gi
EOF

kubectl apply -f ceph-rdb-pvc-test.yaml
```

2. Check

```shell
[root@k8s-master ceph]# kubectl get pvc
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS       AGE
ceph-rdb-claim   Bound    pvc-214e462d-8da7-4234-ac68-3222900dd176   2Gi        RWO            dynamic-ceph-rdb   40s

[root@k8s-master ceph]# kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                    STORAGECLASS       REASON   AGE
pvc-214e462d-8da7-4234-ac68-3222900dd176   2Gi        RWO            Delete           Bound    default/ceph-rdb-claim   dynamic-ceph-rdb            10s
```

3. Create an nginx Pod to test the mount

```shell
cat >nginx-pod.yaml<<EOF
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod1
  labels:
    name: nginx-pod1
spec:
  containers:
  - name: nginx-pod1
    image: nginx
    ports:
    - name: web
      containerPort: 80
    volumeMounts:
    - name: ceph-rdb
      mountPath: /usr/share/nginx/html
  volumes:
  - name: ceph-rdb
    persistentVolumeClaim:
      claimName: ceph-rdb-claim
EOF

kubectl apply -f nginx-pod.yaml
```

4. Check

```shell
[root@k8s-master ceph]# kubectl get pod
NAME         READY   STATUS    RESTARTS   AGE
nginx-pod1   1/1     Running   0          39s

# The Pod IP is 10.244.58.252
```

5. Modify the page content

```shell
kubectl exec -it nginx-pod1 -- /bin/sh -c 'echo this is from Ceph RBD!!! > /usr/share/nginx/html/index.html'
```

6. Access test

```shell
[root@k8s-master ceph]# curl http://10.244.58.252
this is from Ceph RBD!!!

# Inspect the objects backing the RBD images
[root@k8s-master ceph]# rados -p kube ls --all
rbd_data.67b206b8b4567.0000000000000102
rbd_data.67b206b8b4567.00000000000000a0
rbd_data.67b206b8b4567.000000000000010b
rbd_data.67b206b8b4567.0000000000000100
rbd_id.image02_clone01
rbd_id.kubernetes-dynamic-pvc-5fd1042a-e13e-11ea-9abe-1edcc60c1557
…
```
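`rbd ls -p kube` is the usual way to list images. When reading raw `rados ls` output as above, every format-2 RBD image has an `rbd_id.<image-name>` object, and the images created by the rbd-provisioner are named `kubernetes-dynamic-pvc-<uuid>`, so a filter can pick them out. A sketch; the function name `dynamic_pvc_images` is hypothetical:

```shell
# Hypothetical filter: read "rados -p POOL ls" output on stdin and print the
# names of images created by the rbd-provisioner.
dynamic_pvc_images() {
  # "rados ls --all" may prefix each object with a namespace column, so keep the
  # last field; rbd_id.<name> exists once per format-2 image
  awk '{ print $NF }' | sed -n 's/^rbd_id\.\(kubernetes-dynamic-pvc-.*\)$/\1/p'
}
```

Usage: `rados -p kube ls --all | dynamic_pvc_images`. After the cleanup below it should print nothing for this test PVC.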

7. Clean up

```shell
kubectl delete -f nginx-pod.yaml
kubectl delete -f ceph-rdb-pvc-test.yaml

# The PVC's RBD image has been removed from the pool
[root@k8s-master ceph]# rados -p kube ls --all
rbd_id.image02_clone01
rbd_header.2828baf9d30c7
rbd_directory
rbd_children
rbd_info
rbd_object_map.2828baf9d30c7
```

## IV. Using CephFS as a Persistent Volume for Pods

CephFS supports all three Kubernetes PV access modes: ReadWriteOnce, ReadOnlyMany, and ReadWriteMany.

### Create the CephFS pools on the Ceph side

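1. Run the following on a Ceph mon or admin node.

CephFS needs two pools, one for data and one for metadata:

```shell
ceph osd pool create fs_data 128
ceph osd pool create fs_metadata 128
ceph osd lspools
```

2. Create a CephFS file system

```shell
ceph fs new cephfs fs_metadata fs_data
```

3. Check

```shell
ceph fs ls
```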

### Deploy cephfs-provisioner

1. Use the community-provided cephfs-provisioner

```shell
cat >external-storage-cephfs-provisioner.yaml<<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cephfs-provisioner
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-provisioner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["create", "get", "delete"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-provisioner
subjects:
  - kind: ServiceAccount
    name: cephfs-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cephfs-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: cephfs-provisioner
  namespace: kube-system
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["create", "get", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cephfs-provisioner
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: cephfs-provisioner
subjects:
- kind: ServiceAccount
  name: cephfs-provisioner
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cephfs-provisioner
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: cephfs-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: cephfs-provisioner
    spec:
      containers:
      - name: cephfs-provisioner
        image: "quay.io/external_storage/cephfs-provisioner:latest"
        env:
        - name: PROVISIONER_NAME
          value: ceph.com/cephfs
        command:
        - "/usr/local/bin/cephfs-provisioner"
        args:
        - "-id=cephfs-provisioner-1"
      serviceAccount: cephfs-provisioner
EOF

kubectl apply -f external-storage-cephfs-provisioner.yaml
```

2. Check the status, and wait until the Pod is Running before continuing:

```shell
kubectl get pod -n kube-system
```
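Instead of re-running `kubectl get pod` by hand, the wait can be scripted (kubectl's built-in `kubectl wait --for=condition=Ready` is another option). A generic polling sketch: `wait_for_value` is a hypothetical helper that re-runs any command every 2 seconds until it prints the expected value or the timeout expires:

```shell
# Hypothetical helper: poll a command until its output equals EXPECTED.
# Usage: wait_for_value EXPECTED TIMEOUT_SECONDS COMMAND [ARGS...]
wait_for_value() {
  expected=$1
  timeout=$2
  shift 2
  elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    # ignore stderr so transient "not found" errors just count as "not ready yet"
    current=$("$@" 2>/dev/null)
    if [ "$current" = "$expected" ]; then
      return 0
    fi
    sleep 2
    elapsed=$((elapsed + 2))
  done
  echo "timed out after ${timeout}s; last value: $current" >&2
  return 1
}
```

For example, using the `app=cephfs-provisioner` label from the Deployment above: `wait_for_value Running 120 kubectl -n kube-system get pod -l app=cephfs-provisioner -o jsonpath='{.items[0].status.phase}'`. The same helper works for a PVC, whose `{.status.phase}` becomes `Bound`.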

### Configure the StorageClass

1. Retrieve the key (on a Ceph mon or admin node)

```shell
ceph auth get-key client.admin
```

2. Create the admin secret

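### Final words

Recently many readers have asked me for Linux learning materials, so I dug through my collection and put together some quality resources, including videos, e-books, and PPTs, to share with everyone!

### Materials preview

Video materials:

E-book materials:

**If this article helped you, please like, bookmark, and share it with friends to keep me motivated!**

**There is plenty of learning material online, but if what you learn is not systematic and you only scratch the surface when you hit a problem without digging deeper, real technical growth is hard to achieve.**

**If you need these systematic materials, add WeChat (V): vip1024b (note: 运维, i.e. "ops")**

**One person can go fast, but a group goes further! Whether you are an IT veteran or a newcomer interested in IT, you are welcome to join our circle (technical exchange, learning resources, workplace chat, big-tech referrals, interview coaching) and grow together!**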
