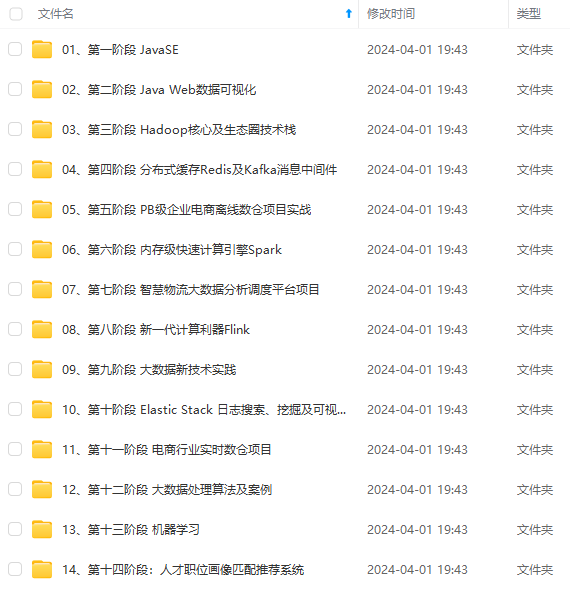

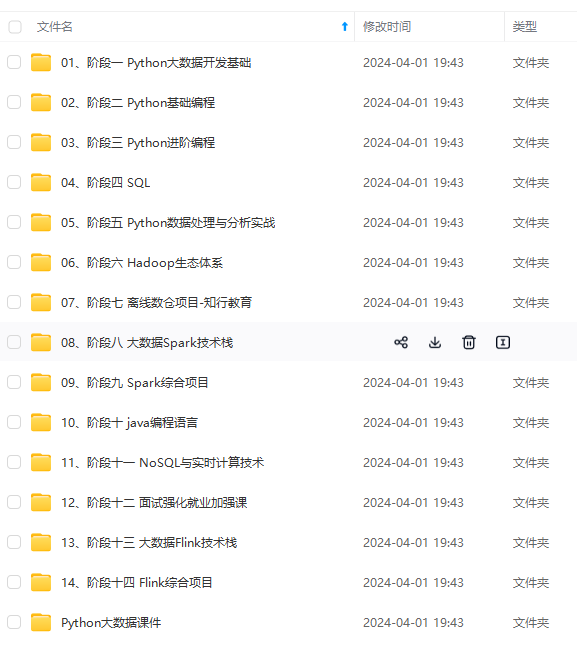

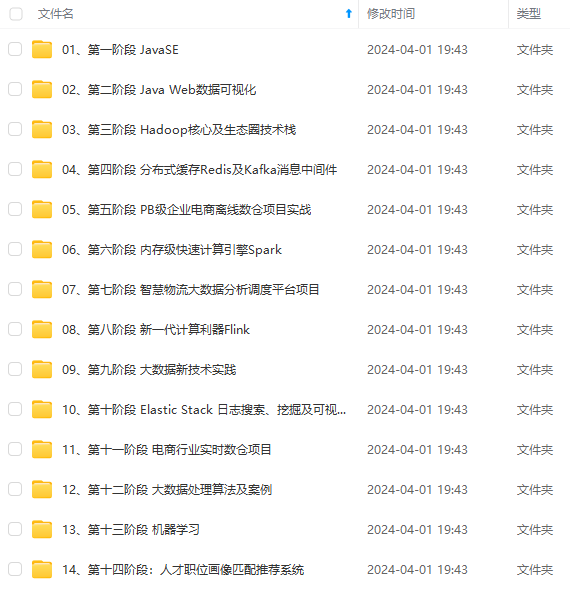

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

IT security

Healthcare

II. Deployment

Download hadoop-3.2.1.tar.gz and jdk-8u171-linux-x64.tar.gz into root's home directory on server1.

[root@server1 ~]# useradd -u 1001 hadoop

[root@server1 ~]# mv * /home/hadoop/

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ tar zxf hadoop-3.2.1.tar.gz

[hadoop@server1 ~]$ tar zxf jdk-8u171-linux-x64.tar.gz

[hadoop@server1 ~]$ ln -s jdk1.8.0_171/ java

[hadoop@server1 ~]$ ln -s hadoop-3.2.1 hadoop

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim hadoop-env.sh
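The post does not show what was changed in hadoop-env.sh. Given the `java` and `hadoop` symlinks created above, a minimal edit would plausibly look like the sketch below; both paths are assumptions derived from those symlinks, not values taken from the original file.

```shell
# Assumed additions to etc/hadoop/hadoop-env.sh (not shown in the original post).
# Both paths follow the symlinks created earlier under /home/hadoop.
export JAVA_HOME=/home/hadoop/java
export HADOOP_HOME=/home/hadoop/hadoop
```

Pointing at the symlinks rather than the versioned directories means a later JDK or Hadoop upgrade only needs the links to be recreated.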

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ mkdir input

[hadoop@server1 hadoop]$ cp etc/hadoop/*.xml input

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'

[hadoop@server1 hadoop]$ cat output/*

1 dfsadmin
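To make the result above easier to read: the bundled grep example counts how often each string matching the regular expression occurs in the input files. A rough local approximation with standard tools is sketched below; the sample file and its contents are made up for illustration and are not the real Hadoop configuration files.

```shell
# Local approximation of what the MapReduce grep example computes:
# count every match of the regex, most frequent first.
tmp=$(mktemp -d)
# Hypothetical sample input standing in for the copied *.xml files.
printf 'see dfsadmin for details\nno match on this line\n' > "$tmp/sample.xml"
# -o prints only the matched text, -h hides file names, -E enables '+'.
result=$(grep -ohE 'dfs[a-z.]+' "$tmp"/*.xml | sort | uniq -c | sort -rn)
echo "$result"
rm -rf "$tmp"
```

On the sample input this reports a single occurrence of `dfsadmin`, which has the same shape as the `1 dfsadmin` line produced by the Hadoop job.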

[hadoop@server1 ~]$ cd hadoop/etc/hadoop/

[hadoop@server1 hadoop]$ vim core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

vim ~/.bash_profile

source ~/.bash_profile
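The edit to ~/.bash_profile is not shown in the post. Since later commands call `hdfs` and `jps` without a path, it presumably extends `PATH`; the line below is a sketch under that assumption, using the symlinks in the hadoop user's home directory.

```shell
# Assumed addition to ~/.bash_profile (not shown in the original post):
# put the JDK tools (jps) and the Hadoop commands (hdfs, hadoop) on PATH.
export PATH="$PATH:$HOME/java/bin:$HOME/hadoop/bin"
```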

[hadoop@server1 ~]$ hdfs dfs -mkdir -p /user/hadoop

[hadoop@server1 hadoop]$ hdfs dfs -put input

Open 172.25.3.1:9870 in a browser to check the upload result.

[root@server1 ~]# echo westos | passwd --stdin hadoop

[hadoop@server1 hadoop]$ ssh-keygen

[hadoop@server1 hadoop]$ ssh-copy-id localhost

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ cd sbin/

[hadoop@server1 sbin]$ ./start-dfs.sh

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount input output

[hadoop@server1 hadoop]$ hdfs dfs -ls input

[hadoop@server1 hadoop]$ hdfs dfs -cat output/*

[hadoop@server1 sbin]$ ./stop-dfs.sh

[root@server1 ~]# yum install nfs-utils.x86_64 -y

[root@server1 ~]# vim /etc/exports
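The contents of /etc/exports are not shown. The `showmount` output further down lists `/home/hadoop *`, so the entry exports that directory to all hosts; the options in the sketch below are an assumption, chosen so that clients can write and squashed requests map to the hadoop user (UID 1001).

```text
/home/hadoop   *(rw,anonuid=1001,anongid=1001)
```

Because the hadoop user is created with the same UID on every node, files written over NFS keep consistent ownership across the cluster.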

[root@server1 ~]# systemctl start nfs

[root@server2 ~]# yum install -y nfs-utils # repeat the same steps on server3

[root@server2 ~]# useradd -u 1001 hadoop

[root@server2 ~]# showmount -e 172.25.3.1

Export list for 172.25.3.1:

/home/hadoop *

[root@server2 ~]# mount 172.25.3.1:/home/hadoop/ /home/hadoop/

[hadoop@server2 ~]$ jps

14426 Jps

14335 DataNode

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://server1:9000</value>

</property>

</configuration>

[hadoop@server1 hadoop]$ vim workers

server2

server3

[hadoop@server1 hadoop]$ vim hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ cd sbin/

[hadoop@server1 sbin]$ ./start-dfs.sh

[hadoop@server1 sbin]$ jps

19218 NameNode

19442 SecondaryNameNode

19562 Jps

[hadoop@server1 hadoop]$ hdfs dfs -mkdir -p /user/hadoop/

[hadoop@server1 hadoop]$ hdfs dfs -mkdir input

[hadoop@server1 hadoop]$ hdfs dfs -put * input

Hot-adding a node:

[root@server4 ~]# yum install nfs-utils -y

[root@server4 ~]# useradd -u 1001 hadoop

[root@server4 ~]# mount 172.25.3.1:/home/hadoop/ /home/hadoop/

[root@server4 ~]# su - hadoop

[hadoop@server1 hadoop]$ vim workers

server2

server3

server4

[hadoop@server4 hadoop]$ hdfs --daemon start datanode

Upload test:

[hadoop@server4 ~]$ hdfs dfs -put jdk-8u171-linux-x64.tar.gz
