既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

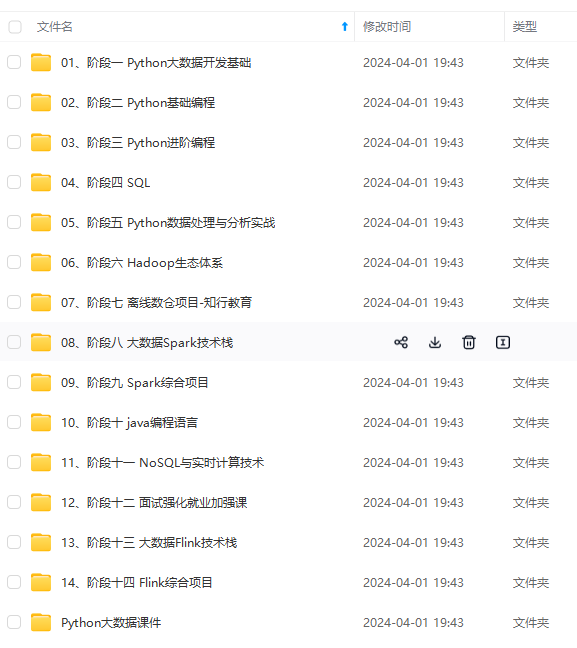

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

```conf
input {
  elasticsearch {
    # ... (hosts and index of the source cluster are omitted here)
    user => "elastic"
    password => "XXX"
    query => '{ "query": { "query_string": { "query": "*" } } }'
    size => 2000
    scroll => "1m"
    docinfo => true
    # Add routing to the input's docinfo fields
    docinfo_fields => ["_index", "_id", "_type", "_routing"]
  }
}

output {
  elasticsearch {
    hosts => "http://es2.es.com:80"
    index => "xxx"
    user => "elastic"
    password => "XXX"
    document_id => "%{[@metadata][_id]}"
    # Set routing
    routing => "%{[@metadata][_routing]}"
  }
}
```

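So here is the catch: if you use this config as a template for all of your indexes, then whenever the upstream document has no routing value, the routing written downstream becomes the literal string `%{[@metadata][_routing]}`. Honestly, I was stunned; Logstash is not smart about this at all. Can it be fixed? Don't worry, read to the end and you'll see.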
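To see why that literal text ends up in the index, here is a simplified Python model of how Logstash's `%{...}` field references behave: a reference that resolves to a value is substituted, while one that points at a missing field is left in the output unchanged. This is only an illustration (it handles bracketed references such as `%{[@metadata][_routing]}` only), not Logstash's actual implementation, and the event dicts are hypothetical.

```python
import re

# Matches a bracketed field reference such as %{[@metadata][_routing]}
FIELD_REF = re.compile(r"%\{((?:\[[^\]]+\])+)\}")
PATH_PART = re.compile(r"\[([^\]]+)\]")


def lookup(event: dict, path: str):
    """Follow a path like [@metadata][_routing] through nested dicts; None if absent."""
    value = event
    for key in PATH_PART.findall(path):
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


def sprintf(template: str, event: dict) -> str:
    """Substitute resolvable references; leave unresolvable ones as literal text."""
    def replace(match):
        value = lookup(event, match.group(1))
        return match.group(0) if value is None else str(value)
    return FIELD_REF.sub(replace, template)


# Hypothetical events: one source document had routing, the other did not
with_routing = {"@metadata": {"_id": "1", "_routing": "shop-42"}}
without_routing = {"@metadata": {"_id": "2"}}

print(sprintf("%{[@metadata][_routing]}", with_routing))     # shop-42
print(sprintf("%{[@metadata][_routing]}", without_routing))  # %{[@metadata][_routing]}
```

The second call shows the failure mode: without a guard, a document that had no routing receives the template text itself as its routing value.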

Strict index mapping: the @timestamp and @version fields cannot be written

With that problem out of the way, the job ran for a while and then ran into something else:

```text
[2024-03-04T11:43:48,372][WARN ][logstash.outputs.elasticsearch][[main]>worker0][main][23eda3c9518e4ba5a787adadf9714d5512c8ad9a9754020744b84ca81fe1bedc] Could not index event to Elasticsearch. {:status=>400, :action=>["index", {:_id=>"110109711637125402", :_index=>"xxx", :routing=>nil, :_type=>"_doc"}, #<LogStash::Event:0x5e156236>], :response=>{"index"=>{"_index"=>"xxx", "_type"=>"_doc", "_id"=>"110109711637125402", "status"=>400, "error"=>{"type"=>"strict_dynamic_mapping_exception", "reason"=>"mapping set to strict, dynamic introduction of [@timestamp] within [_doc] is not allowed"}}}}
[2024-03-04T11:43:48,372][WARN ][logstash.outputs.elasticsearch][[main]>worker0][main][23eda3c9518e4ba5a787adadf9714d5512c8ad9a9754020744b84ca81fe1bedc] Could not index event to Elasticsearch. {:status=>400, :action=>["index", {:_id=>"110109711960916147", :_index=>"xxx", :routing=>nil, :_type=>"_doc"}, #<LogStash::Event:0x75333e01>], :response=>{"index"=>{"_index"=>"xxx", "_type"=>"_doc", "_id"=>"110109711960916147", "status"=>400, "error"=>{"type"=>"strict_dynamic_mapping_exception", "reason"=>"mapping set to strict, dynamic introduction of [@timestamp] within [_doc] is not allowed"}}}}
[2024-03-04T11:43:48,372][WARN ][logstash.outputs.elasticsearch][[main]>worker0][main][23eda3c9518e4ba5a787adadf9714d5512c8ad9a9754020744b84ca81fe1bedc] Could not index event to Elasticsearch. {:status=>400, :action=>["index", {:_id=>"110109712328692950", :_index=>"xxx", :routing=>nil, :_type=>"_doc"}, #<LogStash::Event:0x7405cd45>], :response=>{"index"=>{"_index"=>"xxx", "_type"=>"_doc", "_id"=>"110109712328692950", "status"=>400, "error"=>{"type"=>"strict_dynamic_mapping_exception", "reason"=>"mapping set to strict, dynamic introduction of [@timestamp] within [_doc] is not allowed"}}}}
```

Let's look at the index structure:

```json
{
  "xxx" : {
    "aliases" : { },
    "mappings" : {
      "dynamic" : "strict",
      "properties" : {
      }
    },
    "settings" : {
      "index" : {
      }
    }
  }
}
```

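So the index mapping is set to strict mode, which does not allow new fields to be introduced. Logstash adds `@timestamp` and `@version` to every event, so those fields are rejected. What now?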
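The rejection follows from what `"dynamic": "strict"` means: any field not declared under `properties` causes the whole document to be rejected. Below is a simplified Python model of that check, assuming top-level fields only (Elasticsearch also checks nested objects, and its real error names a single field, as in the log above). The mapping and the `order_id` field are hypothetical.

```python
class StrictDynamicMappingError(Exception):
    """Raised when a document introduces a field a strict mapping does not declare."""


def check_strict(mapping: dict, doc: dict) -> None:
    """Simplified model of "dynamic": "strict" (top-level fields only)."""
    if mapping.get("dynamic") != "strict":
        return  # non-strict modes do not reject unknown fields
    declared = set(mapping.get("properties", {}))
    unknown = sorted(set(doc) - declared)
    if unknown:
        raise StrictDynamicMappingError(
            f"mapping set to strict, dynamic introduction of {unknown} is not allowed"
        )


# Hypothetical mapping with a single declared field
mapping = {"dynamic": "strict", "properties": {"order_id": {"type": "keyword"}}}

check_strict(mapping, {"order_id": "110109711637125402"})  # accepted

try:
    # Logstash adds @timestamp and @version to every event
    check_strict(mapping, {"order_id": "110109711637125402",
                           "@timestamp": "2024-03-04T03:43:48.372Z",
                           "@version": "1"})
except StrictDynamicMappingError as err:
    print(err)
```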

Fortunately, Logstash has filters that can remove fields, so let's add one:

```conf
input {
  elasticsearch {
    hosts => "http://es1.es.com:80"
    index => "merchant_order_rel_pro_v2"
    user => "elastic"
    password => "XXX"
    query => '{ "query": { "query_string": { "query": "*" } } }'
    size => 2000
    scroll => "1m"
    docinfo => true
  }
}

filter {
  mutate {
    # Remove the extra fields added by Logstash
    remove_field => ["@version", "@timestamp"]
  }
}

output {
  elasticsearch {
    hosts => "http://es2.es.com:80"
    index => "xxx"
    user => "elastic"
    password => "XXX"
    document_id => "%{[@metadata][_id]}"
  }
}
```
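A rough Python model of what the `elasticsearch` output ends up sending with and without that `mutate` filter. Two things are assumptions worth stating: the event shape below is hypothetical, and `@metadata` is dropped because Logstash does not serialize `@metadata` into outputs, which is why `document_id` can read `[@metadata][_id]` without the metadata itself reaching the index.

```python
# Fields Logstash adds to every event
LOGSTASH_ADDED = ["@version", "@timestamp"]


def indexed_document(event: dict, remove_field: list) -> dict:
    """Approximate the document body sent downstream.

    @metadata is never written by outputs; remove_field mimics mutate's option.
    """
    return {k: v for k, v in event.items()
            if k != "@metadata" and k not in remove_field}


# Hypothetical event as produced by the elasticsearch input with docinfo => true
event = {
    "order_id": "110109711637125402",
    "@metadata": {"_id": "110109711637125402", "_index": "merchant_order_rel_pro_v2"},
    "@version": "1",
    "@timestamp": "2024-03-04T03:43:48.372Z",
}

print(sorted(indexed_document(event, [])))              # ['@timestamp', '@version', 'order_id']
print(sorted(indexed_document(event, LOGSTASH_ADDED)))  # ['order_id']
```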

Logstash rate limiting

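Sometimes the writes were too fast for the downstream cluster to handle. At first I worked around it by tuning parameters, but every change meant re-running the whole job, which was exhausting.

I searched around online but found no rate-limiting plugin for Logstash.

I found that Logstash can call a local Ruby script. I don't know Ruby, so I had GPT generate a token-bucket script, but the results were underwhelming: it did throttle, but the actual rate was not the value you set.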
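For reference, this is what a token bucket does, as a minimal Python sketch (not the GPT-generated Ruby script, which isn't shown). Tokens refill at `rate` per second up to `capacity`, and each event consumes one. One property worth knowing: a full bucket lets a burst of up to `capacity` events through at once, so short-window counts can overshoot the configured rate even when the long-run average is right. The class and parameter names are hypothetical.

```python
import time


class TokenBucket:
    """Minimal token bucket: refills `rate` tokens/second, holds at most `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock          # injectable so the behaviour can be simulated
        self.tokens = capacity      # start full, which allows an initial burst
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self, n: float = 1) -> bool:
        """Take n tokens if available; never blocks."""
        self._refill()
        if self.tokens >= n:
            self.tokens -= n
            return True
        return False

    def acquire(self, n: float = 1) -> None:
        """Block until n tokens are available (n must not exceed capacity)."""
        while not self.try_acquire(n):
            time.sleep((n - self.tokens) / self.rate)


# Simulated clock: rate 10/s, burst capacity 5
now = [0.0]
bucket = TokenBucket(rate=10, capacity=5, clock=lambda: now[0])
print(sum(bucket.try_acquire() for _ in range(20)))  # 5: the initial burst
now[0] = 0.25
print(sum(bucket.try_acquire() for _ in range(20)))  # 2: only 2.5 tokens refilled
```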

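Out of options, I looked into writing a plugin, but my Gradle skills weren't up to it and I couldn't get the source to compile.

In the end, I wrote my own Java rate-limiting plugin based on Guava's `RateLimiter`, packaged it as a jar, dropped it into Logstash, and that solved the problem.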
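Guava's `RateLimiter` behaves differently from a plain token bucket: it spaces permits evenly, `1/rate` seconds apart, and `acquire()` waits until the caller's slot arrives. The Python sketch below models only that even spacing (Guava also keeps some unused permits for short bursts, which is left out here); it is an illustration, not the plugin's code or Guava's, and `SmoothLimiter` is a hypothetical name.

```python
class SmoothLimiter:
    """Simplified model of Guava-style smooth rate limiting (stored permits ignored).

    Each permit is scheduled 1/rate seconds after the previous one; the caller
    waits until its slot. Time is passed in explicitly to keep the model testable.
    """

    def __init__(self, rate: float):
        self.interval = 1.0 / rate
        self.next_free = 0.0  # earliest time the next permit may be handed out

    def reserve(self, now: float) -> float:
        """Reserve one permit at time `now` and return how long to wait for it."""
        wait = max(0.0, self.next_free - now)
        self.next_free = max(self.next_free, now) + self.interval
        return wait


limiter = SmoothLimiter(rate=4)  # hypothetical rate: 4 events per second
waits = [limiter.reserve(now=0.0) for _ in range(6)]
print(waits)  # [0.0, 0.25, 0.5, 0.75, 1.0, 1.25]
```

Requests arriving together are released at 0, 0.25, 0.5, 0.75 s and so on, so about `rate` events start in each second, which is consistent with the steady per-second counts in the plugin's log later in this article.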

https://github.com/valsong/logstash-java-rate-limiter

How to use logstash-java-rate-limiter

Usage is simple: put the plugin jar into the `logstash/logstash-core/lib/jars/` directory.

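- Parameters

| param | type | required | default | example | description |
|---|---|---|---|---|---|
| rate_path | string | no | none | /usr/share/logstash/rate.txt | Reads the first line of this file as the rate limit (events per second); you can change the value in this file at any time |
| count_path | string | no | none | /usr/share/logstash/count.txt | Records the number of synced events in this file |
| count_log_delay_sec | long | no | 30 | 30 | Prints the event count to the Logstash log at this fixed interval, in seconds |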

- Set the rate limit in the file

```shell
echo 5000 > /usr/share/logstash/rate.txt
```

- Add a filter named `java_rate_limit` to the job's config file

```conf
input {
  elasticsearch {
    hosts => "http://xxx-es.xxx.com:9200"
    index => "xxx"
    user => "elastic"
    password => "XXXX"
    query => '{ "query": { "query_string": { "query": "*" } } }'
    size => 2000
    scroll => "10m"
    docinfo => true
    # docinfo_fields => ["_index", "_id", "_type", "_routing"]
  }
}

filter {
  # plugin name
  java_rate_limit {
    # The rate limit is read from the first line of this file
    rate_path => "/usr/share/logstash/rate.txt"
    # File used to record the number of events
    count_path => "/usr/share/logstash/count.txt"
    # Print the event count to the log every N seconds
    count_log_delay_sec => 30
  }
}

output {
  elasticsearch {
    hosts => "yyy-es.yyy.com:9200"
    index => "xxx"
    user => "elastic"
    password => "YYYY"
    document_id => "%{[@metadata][_id]}"
    # document_type => "%{[@metadata][_type]}"
    # routing => "%{[@metadata][_routing]}"
  }
}
```

Rate limiting is now in place. To change the limit, just edit the number in the text file; the new value takes effect after a few seconds.
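The hot reload described above can be sketched as a small poller: read the first line of the rate file and, only when the number differs from the last one seen, hand the new rate to the limiter (Guava's `RateLimiter`, for example, has a `setRate` method). This Python sketch is an assumption about how such a reload could work, not the plugin's source; `read_rate` and `RateFileWatcher` are hypothetical names, and the printed message only imitates the plugin's log line.

```python
import tempfile
from pathlib import Path


def read_rate(path: str):
    """Return the first line of `path` as a positive float, or None if unusable."""
    try:
        rate = float(Path(path).read_text().splitlines()[0].strip())
    except (OSError, IndexError, ValueError):
        return None  # missing file, empty file, or non-numeric first line
    return rate if rate > 0 else None


class RateFileWatcher:
    """Re-reads the rate file on each poll and reports changes."""

    def __init__(self, path: str):
        self.path = path
        self.last_rate = 0.0

    def poll(self):
        """Return the new rate if it changed since the last poll, else None."""
        rate = read_rate(self.path)
        if rate is None or rate == self.last_rate:
            return None
        print(f"Rate changed! lastRate:[{self.last_rate}] rate:[{rate}]")
        self.last_rate = rate
        return rate


# Usage: a scheduler would call poll() every second or so
with tempfile.TemporaryDirectory() as tmp:
    rate_file = Path(tmp) / "rate.txt"
    rate_file.write_text("5000\n")        # same as: echo 5000 > rate.txt
    watcher = RateFileWatcher(str(rate_file))
    watcher.poll()                         # reports lastRate:[0.0] rate:[5000.0]
    watcher.poll()                         # unchanged, reports nothing
    rate_file.write_text("6000\n")
    watcher.poll()                         # reports lastRate:[5000.0] rate:[6000.0]
```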

The result looks like this:

```text
[2024-02-01T16:44:41,515][WARN ][org.logstash.plugins.filters.RateLimitFilter][Converge PipelineAction::Create<main>] ### Rate limiter enabled:[true]! ratePath:[/usr/share/logstash/rate.txt].
[2024-02-01T16:44:41,519][WARN ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-0] # Rate changed, set new RateLimiter! lastRate:[0.0] rate:[5000.0] ratePath:[/usr/share/logstash/rate.txt].
[2024-02-01T16:44:41,520][WARN ][org.logstash.plugins.filters.RateLimitFilter][Converge PipelineAction::Create<main>] ### Record event count to file enabled:[true]! countPath:[/usr/share/logstash/count.txt].
[2024-02-01T16:44:50,536][INFO ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-1] Event count:[36500] rate:[5000.0].
[2024-02-01T16:45:00,561][INFO ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-1] Event count:[87000] rate:[5000.0].
[2024-02-01T16:45:10,587][INFO ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-1] Event count:[137000] rate:[5000.0].
[2024-02-01T16:45:11,587][WARN ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-0] # Rate changed, set new RateLimiter! lastRate:[5000.0] rate:[6000.0] ratePath:[/usr/share/logstash/rate.txt].
[2024-02-01T16:45:20,591][INFO ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-0] Event count:[204000] rate:[6000.0].
[2024-02-01T16:45:30,595][INFO ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-0] Event count:[264000] rate:[6000.0].
[2024-02-01T16:45:40,638][INFO ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-0] Event count:[324000] rate:[6000.0].
[2024-02-01T16:45:50,647][INFO ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-0] Event count:[384000] rate:[6000.0].
[2024-02-01T16:46:00,649][WARN ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-1] # Rate changed, set new RateLimiter! lastRate:[6000.0] rate:[3000.0] ratePath:[/usr/share/logstash/rate.txt].
[2024-02-01T16:46:00,651][INFO ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-0] Event count:[444000] rate:[3000.0].
[2024-02-01T16:46:10,655][INFO ][org.logstash.plugins.filters.RateLimitFilter][rate-limit-0] Event count:[482000] rate:[3000.0].
```
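The log can be sanity-checked with a little arithmetic: the difference between consecutive `Event count` values, divided by the roughly 10-second logging interval, gives the observed throughput. The Python snippet below just does that for the numbers printed above (milliseconds dropped from the timestamps).

```python
# "Event count" values copied from the INFO lines above (milliseconds dropped)
samples = [
    ("16:44:50", 36500), ("16:45:00", 87000), ("16:45:10", 137000),
    ("16:45:20", 204000), ("16:45:30", 264000), ("16:45:40", 324000),
    ("16:45:50", 384000), ("16:46:00", 444000), ("16:46:10", 482000),
]


def to_seconds(hms: str) -> int:
    """Convert an HH:MM:SS timestamp to seconds since midnight."""
    h, m, s = map(int, hms.split(":"))
    return h * 3600 + m * 60 + s


intervals = []
for (t0, c0), (t1, c1) in zip(samples, samples[1:]):
    dt = to_seconds(t1) - to_seconds(t0)
    intervals.append((t0, t1, c1 - c0, (c1 - c0) / dt))

for t0, t1, delta, per_sec in intervals:
    print(f"{t0} -> {t1}: +{delta} events, {per_sec:.0f}/s")
```

Once a rate has been in effect for a full interval, throughput comes out at about 5000/s and then exactly 6000/s; the two intervals starting at 16:45:10 and 16:46:00, right around a rate change, come out higher than the configured value (6700/s and 3800/s).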

Final version of the config file

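If you use my plugin, don't want to worry about whether each document has a routing value, and don't want the @version and @timestamp fields written downstream, this is the config to use.

Note the `if` condition in `output`: it cannot be written inside the `elasticsearch` plugin block. It took me a whole afternoon to figure that out.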

```conf
input {
  elasticsearch {
    hosts => "http://es1.es:80"
    index => "xxx_pro_v1"
    user => "elastic"
    password => "XXXXXX"
    query => '{ "query": { "query_string": { "query": "*" } } }'
    size => 2000
    scroll => "1m"
    docinfo => true
    # Add routing to the input's docinfo fields
    docinfo_fields => ["_index", "_id", "_type", "_routing"]
  }
}

filter {
  # Rate-limit plugin; remove this block if you are not using it
  java_rate_limit {
    # File that holds the rate limit
    rate_path => "/usr/share/logstash/rate.txt"
  }
  mutate {
    # Remove the two fields added by Logstash
    remove_field => ["@version", "@timestamp"]
  }
}

output {
  # Check whether the event carries a routing value
  if [@metadata][_routing] {
    elasticsearch {
      hosts => "http://es2.es.com:80"
      index => "xxx_pro_v1"
      user => "elastic"
      password => "XXX"
      document_id => "%{[@metadata][_id]}"
      # ES6 requires the document type
      # document_type => "%{[@metadata][_type]}"
      # Set routing
      routing => "%{[@metadata][_routing]}"
    }
  } else {
    elasticsearch {
      hosts => "http://es2.es.com:80"
      index => "xxx_pro_v1"
      user => "elastic"
      password => "XXX"
      document_id => "%{[@metadata][_id]}"
      # ES6 requires the document type
      # document_type => "%{[@metadata][_type]}"
    }
  }
}
```
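The `if`/`else` in the output boils down to one decision per event: send a `routing` value only if the source document had one. The Python sketch below models that decision by building the action line of a Bulk API request; it is an illustration with a hypothetical `bulk_action` helper, not what the `elasticsearch` output literally executes, and the `is not None` check only approximates Logstash's conditional.

```python
import json


def bulk_action(event: dict, index: str) -> dict:
    """Build a Bulk API action line, adding routing only when the source had one."""
    meta = event.get("@metadata", {})
    action = {"_index": index, "_id": meta["_id"]}
    routing = meta.get("_routing")
    if routing is not None:  # roughly `if [@metadata][_routing]` in the config
        action["routing"] = routing
    return {"index": action}


# Hypothetical events read with docinfo_fields including _routing
routed = {"@metadata": {"_id": "110109711637125402", "_routing": "shop-42"}}
unrouted = {"@metadata": {"_id": "110109711960916147"}}

print(json.dumps(bulk_action(routed, "xxx_pro_v1")))
print(json.dumps(bulk_action(unrouted, "xxx_pro_v1")))
```

The unrouted event carries no `routing` key at all, rather than the unresolved `%{[@metadata][_routing]}` text.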
