```shell
# Configuration directory and container-executor files: owned by root
chmod -R 755 "$HADOOP_HOME"/etc/hadoop/*
chown root:hadoop "$HADOOP_HOME/etc"
chown root:hadoop "$HADOOP_HOME/etc/hadoop"
chown root:hadoop "$HADOOP_HOME/etc/hadoop/container-executor.cfg"
chown root:hadoop "$HADOOP_HOME/bin/container-executor"
chown root:hadoop "$HADOOP_HOME/bin/test-container-executor"
# setuid + setgid, executable only by the hadoop group (---Sr-s---)
chmod 6050 "$HADOOP_HOME/bin/container-executor"
chmod 6050 "$HADOOP_HOME/bin/test-container-executor"

# Log directories
mkdir -p "$HADOOP_HOME/logs/hdfs" "$HADOOP_HOME/logs/yarn"
chown root:hadoop "$HADOOP_HOME/logs"
chmod 775 "$HADOOP_HOME/logs"
chown hdfs:hadoop "$HADOOP_HOME/logs/hdfs"
chmod -R 755 "$HADOOP_HOME/logs/hdfs"
chown yarn:hadoop "$HADOOP_HOME/logs/yarn"
chmod -R 755 "$HADOOP_HOME/logs/yarn"

# HDFS data directories (700 matches dfs.datanode.data.dir.perm)
chown -R hdfs:hadoop "$DFS_DATANODE_DATA_DIR"
chown -R hdfs:hadoop "$DFS_NAMENODE_NAME_DIR"
chmod 700 "$DFS_DATANODE_DATA_DIR"
chmod 700 "$DFS_NAMENODE_NAME_DIR"

# NodeManager directories
chown -R yarn:hadoop "$NODEMANAGER_LOCAL_DIR"
chown -R yarn:hadoop "$NODEMANAGER_LOG_DIR"
chmod 770 "$NODEMANAGER_LOCAL_DIR"
chmod 770 "$NODEMANAGER_LOG_DIR"

# MapReduce JobHistory directory
chown -R mapred:hadoop "$MR_HISTORY"
chmod 770 "$MR_HISTORY"
```
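A single mistyped command here (say, `chown` where `chmod` was meant) leaves a file with the wrong owner or mode and raises no error, so it is worth spot-checking the result. The sketch below assumes GNU coreutils `stat`, as on CentOS. `check_perm` is a hypothetical helper name, not part of Hadoop.

```shell
# Hypothetical helper: compare a path's owner:group and octal mode with the
# expected values. Relies on GNU coreutils stat and its -c format option.
# Usage: check_perm PATH OWNER:GROUP MODE
check_perm() {
  local path=$1 want_owner=$2 want_mode=$3 got
  # %U:%G = owner and group names; %a = octal access rights, including
  # the setuid/setgid bits (e.g. 6050)
  got=$(stat -c '%U:%G %a' "$path") || return 2
  if [ "$got" = "$want_owner $want_mode" ]; then
    echo "OK   $path ($got)"
  else
    echo "FAIL $path: got '$got', want '$want_owner $want_mode'"
    return 1
  fi
}

# Example:
# check_perm "$HADOOP_HOME/bin/container-executor" root:hadoop 6050
# check_perm "$DFS_NAMENODE_NAME_DIR" hdfs:hadoop 700
```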

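##### 3.2 Hadoop configuration

The environment-variable settings are optional. If they are already set in /etc/profile, you can leave them out of the Hadoop configuration files.

###### hadoop-env.sh

Optional; can be omitted if already set in /etc/profile.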

```shell
export JAVA_HOME=/usr/local/jdk1.8.0_221
export HADOOP_HOME=/bigdata/hadoop-3.3.0
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
export HADOOP_LOG_DIR=$HADOOP_HOME/logs/hdfs
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib/native"
```

###### yarn-env.sh

```shell
export JAVA_HOME=/usr/local/jdk1.8.0_221
export HADOOP_HOME=/bigdata/hadoop-3.3.0
export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop
export YARN_LOG_DIR=$HADOOP_HOME/logs/yarn
```

###### mapred-env.sh

```shell
export JAVA_HOME=/usr/local/jdk1.8.0_221
```

###### core-site.xml

```xml
<property>
  <name>io.file.buffer.size</name>
  <value>131072</value>
  <description></description>
</property>

<!-- Kerberos-related settings -->
<property>
  <name>hadoop.security.authorization</name>
  <value>true</value>
  <description>Enable Hadoop service-level authorization</description>
</property>
<property>
  <name>hadoop.security.authentication</name>
  <value>kerberos</value>
  <description>Use Kerberos as Hadoop's authentication mechanism</description>
</property>
<property>
  <name>hadoop.security.auth_to_local</name>
  <value>
    RULE:[2:$1@$0](nn@.*EXAMPLE.COM)s/.*/hdfs/
    RULE:[2:$1@$0](sn@.*EXAMPLE.COM)s/.*/hdfs/
    RULE:[2:$1@$0](dn@.*EXAMPLE.COM)s/.*/hdfs/
    RULE:[2:$1@$0](nm@.*EXAMPLE.COM)s/.*/yarn/
    RULE:[2:$1@$0](rm@.*EXAMPLE.COM)s/.*/yarn/
    RULE:[2:$1@$0](tl@.*EXAMPLE.COM)s/.*/yarn/
    RULE:[2:$1@$0](jhs@.*EXAMPLE.COM)s/.*/mapred/
    RULE:[2:$1@$0](HTTP@.*EXAMPLE.COM)s/.*/hdfs/
    DEFAULT
  </value>
  <description>Rules that map Kerberos principals to local accounts. For example, the first rule maps nn/xx@EXAMPLE.COM to the hdfs account.</description>
</property>

<!-- HIVE KERBEROS -->
<property>
  <name>hadoop.proxyuser.hive.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hive.groups</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hdfs.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hdfs.groups</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.HTTP.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.HTTP.groups</name>
  <value>*</value>
</property>
```
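Here is how a rule is applied. Take a two-component principal such as `nn/bigdata1.example.com@EXAMPLE.COM`. First, `[2:$1@$0]` builds the string `nn@EXAMPLE.COM` (`$1` is the first component and `$0` the realm). Next, the regex in parentheses must match that string. Finally, `s/.*/hdfs/` rewrites it to `hdfs`.

The function below re-implements only the rules listed above, for illustration. It is not Hadoop's parser, and `map_principal` is a hypothetical name. On a real node, `hadoop kerbname <principal>` reports the mapping Hadoop itself computes.

```shell
# Illustration only: a simplified re-implementation of the RULE lines above,
# NOT Hadoop's auth_to_local parser. Handles principals of the form
# service/host@REALM; anything else falls through to DEFAULT in Hadoop.
map_principal() {
  local principal=$1
  local realm=${principal##*@}       # EXAMPLE.COM
  local name=${principal%@*}         # nn/bigdata1.example.com
  case $name in
    */*) ;;                          # two components: the [2:...] rules apply
    *) echo "no explicit rule for $principal (DEFAULT applies)" >&2; return 1 ;;
  esac
  local short="${name%%/*}@$realm"   # [2:$1@$0] -> nn@EXAMPLE.COM
  # Glob patterns standing in for the regexes (nn@.*EXAMPLE.COM) etc.
  case $short in
    nn@*EXAMPLE.COM|sn@*EXAMPLE.COM|dn@*EXAMPLE.COM|HTTP@*EXAMPLE.COM) echo hdfs ;;
    nm@*EXAMPLE.COM|rm@*EXAMPLE.COM|tl@*EXAMPLE.COM) echo yarn ;;
    jhs@*EXAMPLE.COM) echo mapred ;;
    *) echo "no explicit rule for $principal (DEFAULT applies)" >&2; return 1 ;;
  esac
}

# Example:
# map_principal nn/bigdata1.example.com@EXAMPLE.COM
```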

###### hdfs-site.xml

```xml
<property>
  <name>dfs.namenode.hosts</name>
  <value>bigdata1.example.com,bigdata2.example.com</value>
  <description>List of permitted DataNodes.</description>
</property>
<property>
  <name>dfs.blocksize</name>
  <value>268435456</value>
  <description></description>
</property>
<property>
  <name>dfs.namenode.handler.count</name>
  <value>100</value>
  <description></description>
</property>
<property>
  <name>dfs.datanode.data.dir</name>
  <value>/data/dn</value>
</property>
<property>
  <name>dfs.permissions.supergroup</name>
  <value>hdfs</value>
</property>
<property>
  <name>dfs.http.policy</name>
  <value>HTTPS_ONLY</value>
  <description>Serve every enabled web UI over HTTPS only; the details are configured in ssl-server.xml and ssl-client.xml</description>
</property>
<property>
  <name>dfs.data.transfer.protection</name>
  <value>integrity</value>
</property>
<property>
  <name>dfs.https.port</name>
  <value>50470</value>
</property>
<property>
  <name>dfs.datanode.data.dir.perm</name>
  <value>700</value>
</property>

<!-- Kerberos-related settings -->
<!-- Must be true once Kerberos authentication is enabled; otherwise the DataNode fails to start -->
<property>
  <name>dfs.block.access.token.enable</name>
  <value>true</value>
</property>

<!-- NameNode security config -->
<property>
  <name>dfs.namenode.kerberos.principal</name>
  <value>nn/_HOST@EXAMPLE.COM</value>
  <description>The NameNode's Kerberos principal is nn/HOSTNAME@EXAMPLE.COM; _HOST is replaced with the host name automatically</description>
</property>
<property>
  <name>dfs.namenode.keytab.file</name>
  <!-- path to the HDFS keytab -->
  <value>/etc/security/keytabs/nn.service.keytab</value>
  <description>Keytab file the NameNode uses to log in without a password</description>
</property>
<property>
  <name>dfs.namenode.kerberos.internal.spnego.principal</name>
  <value>HTTP/_HOST@EXAMPLE.COM</value>
  <description>Principal used for HTTPS (for example, the NameNode web UI)</description>
</property>

<!-- Secondary NameNode security config -->
<property>
  <name>dfs.secondary.namenode.kerberos.principal</name>
  <value>sn/_HOST@EXAMPLE.COM</value>
  <description>Principal used by the SecondaryNameNode</description>
</property>
<property>
  <name>dfs.secondary.namenode.keytab.file</name>
  <!-- path to the HDFS keytab -->
  <value>/etc/security/keytabs/sn.service.keytab</value>
  <description>Keytab file for the SecondaryNameNode</description>
</property>
<property>
  <name>dfs.secondary.namenode.kerberos.internal.spnego.principal</name>
  <value>HTTP/_HOST@EXAMPLE.COM</value>
  <description>Principal used by the SecondaryNameNode web UI</description>
</property>

<!-- DataNode security config -->
<property>
  <name>dfs.datanode.kerberos.principal</name>
  <value>dn/_HOST@EXAMPLE.COM</value>
  <description>Principal used by the DataNode</description>
</property>
<property>
  <name>dfs.datanode.keytab.file</name>
  <!-- path to the HDFS keytab -->
  <value>/etc/security/keytabs/dn.service.keytab</value>
  <description>Path of the DataNode keytab file</description>
</property>
<property>
  <name>dfs.web.authentication.kerberos.principal</name>
  <value>HTTP/_HOST@EXAMPLE.COM</value>
  <description>Principal used by WebHDFS</description>
</property>
<property>
  <name>dfs.web.authentication.kerberos.keytab</name>
  <value>/etc/security/keytabs/spnego.service.keytab</value>
  <description>Corresponding keytab file</description>
</property>
```
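Every `*/_HOST@EXAMPLE.COM` principal above must be present in the matching keytab, with `_HOST` replaced by the node's host name (Hadoop lower-cases it). Below is a sketch for checking that on one node with MIT Kerberos `klist -kt`.

- `expand_host` and `check_keytab` are hypothetical helpers.
- Defaulting to `hostname -f` assumes it returns the same FQDN that Hadoop resolves.

```shell
# Hypothetical helper: substitute _HOST with a lower-cased host name,
# as the principals above expect.
expand_host() {
  local principal=$1 host
  host=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
  printf '%s\n' "$principal" | sed "s/_HOST/$host/"
}

# Hypothetical helper: does KEYTAB contain PRINCIPAL after _HOST expansion?
# `klist -k -t` (MIT Kerberos) lists the entries stored in a keytab.
# Usage: check_keytab KEYTAB PRINCIPAL [HOSTNAME]
check_keytab() {
  local keytab=$1 principal
  principal=$(expand_host "$2" "${3:-$(hostname -f)}")
  if klist -kt "$keytab" 2>/dev/null | grep -qF "$principal"; then
    echo "OK   $principal in $keytab"
  else
    echo "FAIL $principal not found in $keytab"
    return 1
  fi
}

# Example, on each node:
# check_keytab /etc/security/keytabs/nn.service.keytab 'nn/_HOST@EXAMPLE.COM'
# check_keytab /etc/security/keytabs/spnego.service.keytab 'HTTP/_HOST@EXAMPLE.COM'
```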

###### yarn-site.xml

```xml
<property>
  <name>yarn.resourcemanager.scheduler.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
  <description></description>
</property>
<property>
  <name>yarn.nodemanager.local-dirs</name>
  <value>/data/nm-local</value>
  <description>Comma-separated list of paths on the local filesystem where intermediate data is written.</description>
</property>
<property>
  <name>yarn.nodemanager.log-dirs</name>
  <value>/data/nm-log</value>
  <description>Comma-separated list of paths on the local filesystem where logs are written.</description>
</property>
<property>
  <name>yarn.nodemanager.log.retain-seconds</name>
  <value>10800</value>
  <description>Default time (in seconds) to retain log files on the NodeManager. Only applicable if log-aggregation is disabled.</description>
</property>
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
  <description>Shuffle service that needs to be set for Map Reduce applications.</description>
</property>

<!-- To enable SSL -->
<property>
  <name>yarn.http.policy</name>
  <value>HTTPS_ONLY</value>
</property>
<property>
  <name>yarn.nodemanager.linux-container-executor.group</name>
  <value>hadoop</value>
</property>

<!-- Enabling the following may require compiling container-executor on this machine. -->
<!-- You can check the environment with these commands: -->
<!--   hadoop checknative -a -->
<!--   ldd $HADOOP_HOME/bin/container-executor -->
<!--
<property>
  <name>yarn.nodemanager.container-executor.class</name>
  <value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>
</property>
<property>
  <name>yarn.nodemanager.linux-container-executor.path</name>
  <value>/bigdata/hadoop-3.3.2/bin/container-executor</value>
</property>
-->

<!-- Kerberos-related settings -->
<!-- ResourceManager security configs -->
<property>
  <name>yarn.resourcemanager.principal</name>
  <value>rm/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>yarn.resourcemanager.keytab</name>
  <value>/etc/security/keytabs/rm.service.keytab</value>
</property>
<property>
  <name>yarn.resourcemanager.webapp.delegation-token-auth-filter.enabled</name>
  <value>true</value>
</property>

<!-- NodeManager security configs -->
<property>
  <name>yarn.nodemanager.principal</name>
  <value>nm/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>yarn.nodemanager.keytab</name>
  <value>/etc/security/keytabs/nm.service.keytab</value>
</property>

<!-- TimeLine security configs -->
<property>
  <name>yarn.timeline-service.principal</name>
  <value>tl/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>yarn.timeline-service.keytab</name>
  <value>/etc/security/keytabs/tl.service.keytab</value>
</property>
<property>
  <name>yarn.timeline-service.http-authentication.type</name>
  <value>kerberos</value>
</property>
<property>
  <name>yarn.timeline-service.http-authentication.kerberos.principal</name>
  <value>HTTP/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>yarn.timeline-service.http-authentication.kerberos.keytab</name>
  <value>/etc/security/keytabs/spnego.service.keytab</value>
</property>
```
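The commented-out LinuxContainerExecutor settings above suggest checking `container-executor` with `ldd`. Any library that `ldd` reports as `not found` has to be installed (or the binary rebuilt on this machine) first. Below is a small filter for that output. `missing_libs` is a hypothetical name, and it only parses text; it does not run `ldd` itself.

```shell
# Hypothetical helper: read `ldd` output on stdin, print the libraries the
# dynamic loader could not resolve, and exit non-zero if there are any.
missing_libs() {
  # ldd prints unresolved libraries as: "<TAB>libfoo.so.1 => not found"
  awk '/=> not found/ { print $1; n++ } END { exit (n > 0) }'
}

# Example:
# ldd "$HADOOP_HOME/bin/container-executor" | missing_libs \
#   && echo "all libraries resolved"
```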

###### mapred-site.xml

```xml
<property>
  <name>mapreduce.jobhistory.http.policy</name>
  <value>HTTPS_ONLY</value>
</property>

<!-- Kerberos-related settings -->
<property>
  <name>mapreduce.jobhistory.keytab</name>
  <value>/etc/security/keytabs/jhs.service.keytab</value>
</property>
<property>
  <name>mapreduce.jobhistory.principal</name>
  <value>jhs/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>mapreduce.jobhistory.webapp.spnego-principal</name>
  <value>HTTP/_HOST@EXAMPLE.COM</value>
</property>
<property>
  <name>mapreduce.jobhistory.webapp.spnego-keytab-file</name>
  <value>/etc/security/keytabs/spnego.service.keytab</value>
</property>
```

##### Create the HTTPS certificates

On bigdata0, create a CA certificate and key in `/etc/security/cdh.https`, then copy the directory to the other nodes:

```shell
openssl req -new -x509 -keyout bd_ca_key -out bd_ca_cert -days 9999 -subj '/C=CN/ST=beijing/L=beijing/O=test/OU=test/CN=test'
scp -r /etc/security/cdh.https bigdata1:/etc/security/
scp -r /etc/security/cdh.https bigdata2:/etc/security/
```

Example session:

```text
[root@bigdata0 cdh.https]# openssl req -new -x509 -keyout bd_ca_key -out bd_ca_cert -days 9999 -subj '/C=CN/ST=beijing/L=beijing/O=test/OU=test/CN=test'
Generating a 2048 bit RSA private key
…+++
…+++
writing new private key to 'bd_ca_key'
Enter PEM pass phrase:
Verifying - Enter PEM pass phrase:
[root@bigdata0 cdh.https]# ll
total 8
-rw-r--r--. 1 root root 1298 Oct  5 17:14 bd_ca_cert
-rw-r--r--. 1 root root 1834 Oct  5 17:14 bd_ca_key
[root@bigdata0 cdh.https]# scp -r /etc/security/cdh.https bigdata1:/etc/security/
bd_ca_key     100% 1834   913.9KB/s   00:00
bd_ca_cert    100% 1298     1.3MB/s   00:00
[root@bigdata0 cdh.https]# scp -r /etc/security/cdh.https bigdata2:/etc/security/
bd_ca_key     100% 1834     1.7MB/s   00:00
bd_ca_cert    100% 1298     1.3MB/s   00:00
```

Run the following on each of the three nodes:

```shell
cd /etc/security/cdh.https
```

Enter 123456 at every password prompt. This is for convenience only; change it if you have password requirements.

1. Enter and confirm the password 123456. On success this creates the `keystore` file:

```shell
keytool -keystore keystore -alias localhost -validity 9999 -genkey -keyalg RSA -keysize 2048 -dname "CN=test, OU=test, O=test, L=beijing, ST=beijing, C=CN"
```

2. Enter and confirm the password 123456, and answer `yes` when asked whether to trust the certificate. On success this creates the `truststore` file:

```shell
keytool -keystore truststore -alias CARoot -import -file bd_ca_cert
```

3. Enter the password 123456. On success this creates the `cert` file (the certificate signing request):

```shell
keytool -certreq -alias localhost -keystore keystore -file cert
```

4. Sign the request with the CA. On success this creates the `cert_signed` file:

```shell
openssl x509 -req -CA bd_ca_cert -CAkey bd_ca_key -in cert -out cert_signed -days 9999 -CAcreateserial -passin pass:123456
```

5. Enter the password 123456 and answer `yes` when asked whether to trust the certificate. On success this updates the `keystore` file:

```shell
keytool -keystore keystore -alias CARoot -import -file bd_ca_cert
```

6. Enter the password 123456:

```shell
keytool -keystore keystore -alias localhost -import -file cert_signed
```

The result:

```text
-rw-r--r-- 1 root root 1294 Sep 26 11:31 bd_ca_cert
-rw-r--r-- 1 root root   17 Sep 26 11:36 bd_ca_cert.srl
-rw-r--r-- 1 root root 1834 Sep 26 11:31 bd_ca_key
-rw-r--r-- 1 root root 1081 Sep 26 11:36 cert
-rw-r--r-- 1 root root 1176 Sep 26 11:36 cert_signed
-rw-r--r-- 1 root root 4055 Sep 26 11:37 keystore
-rw-r--r-- 1 root root  978 Sep 26 11:35 truststore
```
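Steps 1 to 6 can also be run without prompts, which is handy when repeating them on every node. The sketch below uses the standard `keytool` options `-storepass`, `-keypass` and `-noprompt`, and assumes the CA files from the previous step are already in the target directory.

It also adds `-storetype JKS`, so the stores match the `jks` type configured in ssl-server.xml and ssl-client.xml (newer JDKs default to PKCS12). `gen_node_stores` is a hypothetical name.

```shell
# Hypothetical helper: steps 1-6 above without interactive prompts.
# Usage: gen_node_stores DIR [PASSWORD]
# DIR must already hold bd_ca_cert and bd_ca_key and must not yet contain
# a keystore. The body runs in a subshell, so `cd` and `exit` stay local.
gen_node_stores() (
  dir=$1
  pass=${2:-123456}
  dname="CN=test, OU=test, O=test, L=beijing, ST=beijing, C=CN"
  cd "$dir" || exit 1

  # 1. generate this node's key pair -> keystore
  keytool -genkey -keystore keystore -storetype JKS -alias localhost \
    -validity 9999 -keyalg RSA -keysize 2048 -dname "$dname" \
    -storepass "$pass" -keypass "$pass" || exit 1
  # 2. trust the CA certificate -> truststore
  keytool -import -keystore truststore -storetype JKS -alias CARoot \
    -file bd_ca_cert -storepass "$pass" -noprompt || exit 1
  # 3. certificate signing request -> cert
  keytool -certreq -keystore keystore -storetype JKS -alias localhost \
    -file cert -storepass "$pass" -keypass "$pass" || exit 1
  # 4. sign the request with the CA key -> cert_signed
  openssl x509 -req -CA bd_ca_cert -CAkey bd_ca_key -in cert \
    -out cert_signed -days 9999 -CAcreateserial -passin "pass:$pass" || exit 1
  # 5. add the CA certificate to the keystore
  keytool -import -keystore keystore -storetype JKS -alias CARoot \
    -file bd_ca_cert -storepass "$pass" -noprompt || exit 1
  # 6. install the signed certificate as the reply for alias localhost
  keytool -import -keystore keystore -storetype JKS -alias localhost \
    -file cert_signed -storepass "$pass" -keypass "$pass" -noprompt
)

# Example, on each node:
# gen_node_stores /etc/security/cdh.https 123456
```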

Configure ssl-server.xml and ssl-client.xml.

ssl-server.xml:

```xml
<property>
  <name>ssl.server.truststore.password</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>
<property>
  <name>ssl.server.truststore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>
<property>
  <name>ssl.server.truststore.reload.interval</name>
  <value>10000</value>
  <description>Truststore reload check interval, in milliseconds.
  Default value is 10000 (10 seconds).</description>
</property>
<property>
  <name>ssl.server.keystore.location</name>
  <value>/etc/security/cdh.https/keystore</value>
  <description>Keystore to be used by NN and DN. Must be specified.</description>
</property>
<property>
  <name>ssl.server.keystore.password</name>
  <value>123456</value>
  <description>Must be specified.</description>
</property>
<property>
  <name>ssl.server.keystore.keypassword</name>
  <value>123456</value>
  <description>Must be specified.</description>
</property>
<property>
  <name>ssl.server.keystore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>
<property>
  <name>ssl.server.exclude.cipher.list</name>
  <value>TLS_ECDHE_RSA_WITH_RC4_128_SHA,SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA,
  SSL_RSA_WITH_DES_CBC_SHA,SSL_DHE_RSA_WITH_DES_CBC_SHA,
  SSL_RSA_EXPORT_WITH_RC4_40_MD5,SSL_RSA_EXPORT_WITH_DES40_CBC_SHA,
  SSL_RSA_WITH_RC4_128_MD5</value>
  <description>Optional. The weak security cipher suites that you want excluded
  from SSL communication.</description>
</property>
```

ssl-client.xml:

```xml
<property>
  <name>ssl.client.truststore.password</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>
<property>
  <name>ssl.client.truststore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>
<property>
  <name>ssl.client.truststore.reload.interval</name>
  <value>10000</value>
  <description>Truststore reload check interval, in milliseconds.
  Default value is 10000 (10 seconds).</description>
</property>
<property>
  <name>ssl.client.keystore.location</name>
  <value>/etc/security/cdh.https/keystore</value>
  <description>Keystore to be used by clients like distcp. Must be
  specified.</description>
</property>
<property>
  <name>ssl.client.keystore.password</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>
<property>
  <name>ssl.client.keystore.keypassword</name>
  <value>123456</value>
  <description>Optional. Default value is "".</description>
</property>
<property>
  <name>ssl.client.keystore.type</name>
  <value>jks</value>
  <description>Optional. The keystore file format, default value is "jks".</description>
</property>
```

Distribute the configuration:

```shell
scp $HADOOP_HOME/etc/hadoop/* bigdata1:/bigdata/hadoop-3.3.2/etc/hadoop/
scp $HADOOP_HOME/etc/hadoop/* bigdata2:/bigdata/hadoop-3.3.2/etc/hadoop/
```
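With more nodes, a loop saves typing. The sketch below assumes passwordless SSH and the same `$HADOOP_HOME` path on every node. The commands above copy to `/bigdata/hadoop-3.3.2`, so adjust if yours differs. `sync_conf` is a hypothetical helper, and setting `SCP=echo` prints the commands instead of running them.

```shell
# Hypothetical helper: copy the local Hadoop config directory to each host.
# Assumes passwordless SSH and an identical $HADOOP_HOME on every node.
# Usage: sync_conf HOST...
sync_conf() {
  local conf_dir="$HADOOP_HOME/etc/hadoop" host
  for host in "$@"; do
    # ${SCP:-scp} lets a caller substitute e.g. `echo` for a dry run
    ${SCP:-scp} "$conf_dir"/* "$host:$conf_dir/" || return 1
  done
}

# Example:
# sync_conf bigdata1 bigdata2
```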

### 4. Start the services

Note: after formatting the NameNode, you can start everything directly with `sbin/start-all.sh`.

### 5. Common errors

#### 5.1 Kerberos errors

##### Cannot contact the realm

`Cannot contact any KDC for realm`

This is usually a network problem.

1. The firewall may still be running:

```text
[root@bigdata0 bigdata]# kinit krbtest/admin@EXAMPLE.COM
kinit: Cannot contact any KDC for realm 'EXAMPLE.COM' while getting initial credentials
[root@bigdata0 bigdata]# systemctl stop firewalld.service
[root@bigdata0 bigdata]# systemctl disable firewalld.service
Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.
Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
[root@bigdata0 bigdata]# kinit krbtest/admin@EXAMPLE.COM
Password for krbtest/admin@EXAMPLE.COM:
[root@bigdata0 bigdata]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: krbtest/admin@EXAMPLE.COM

Valid starting       Expires              Service principal
2023-10-05T16:19:58  2023-10-06T16:19:58  krbtgt/EXAMPLE.COM@EXAMPLE.COM
```

2. The KDC may be missing from `/etc/hosts`. Edit `/etc/hosts` and map the KDC's IP address to its host name:

```text
{kdc ip} {kdc}
192.168.50.10 bigdata3.example.com
```

<https://serverfault.com/questions/612869/kinit-cannot-contact-any-kdc-for-realm-ubuntu-while-getting-initial-credentia>
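Both causes can be checked from the client before retrying `kinit`. The sketch below assumes the KDC accepts TCP on the standard Kerberos port 88; kinit may also use UDP, which this does not test. `check_kdc` is a hypothetical helper, and the TCP probe relies on bash's `/dev/tcp` redirection and coreutils `timeout`.

```shell
# Hypothetical helper: check the two usual causes of
# "Cannot contact any KDC for realm": name resolution and reachability.
# Usage: check_kdc KDC_HOST [PORT]
check_kdc() {
  local kdc=$1 port=${2:-88}
  # 1. Does the name resolve (via /etc/hosts or DNS)?
  if ! getent hosts "$kdc" >/dev/null; then
    echo "FAIL cannot resolve $kdc: add it to /etc/hosts"
    return 1
  fi
  # 2. Can we open a TCP connection to the KDC port? (firewalls block this)
  if ! timeout 3 bash -c "exec 3<>/dev/tcp/$kdc/$port" 2>/dev/null; then
    echo "FAIL $kdc:$port unreachable over TCP: check the firewall"
    return 1
  fi
  echo "OK   $kdc:$port reachable"
}

# Example:
# check_kdc bigdata3.example.com
```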

##### kinit picks up the wrong library

```text
kinit: relocation error: kinit: symbol krb5_get_init_creds_opt_set_pac_request, version krb5_3_MIT not defined in file libkrb5.so.3 with link time reference
```

Running `ldd $(which kinit)` shows that kinit resolves `libkrb5.so.3` from a directory other than `/lib64`. Running `export LD_LIBRARY_PATH=/lib64:${LD_LIBRARY_PATH}` fixes it.

<https://community.broadcom.com/communities/community-home/digestviewer/viewthread?MID=797304#:~:text=To%20solve%20this%20error%2C%20the%20only%20workaround%20found,to%20use%20system%20libraries%20%24%20export%20LD_LIBRARY_PATH%3D%2Flib64%3A%24%20%7BLD_LIBRARY_PATH%7D>
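To see which `libkrb5.so.3` kinit actually loads, before and after changing `LD_LIBRARY_PATH`, filter the `ldd` output. `krb5_lib` is a hypothetical helper name.

```shell
# Hypothetical helper: read `ldd` output on stdin and print the path the
# loader resolved for libkrb5.so.*; here, anything outside /lib64 is suspect.
krb5_lib() {
  # ldd line format: "<TAB>libkrb5.so.3 => /path/libkrb5.so.3 (0x...)"
  awk '$1 ~ /^libkrb5\.so/ && $2 == "=>" { print $3 }'
}

# Example:
# ldd "$(command -v kinit)" | krb5_lib
# export LD_LIBRARY_PATH=/lib64:${LD_LIBRARY_PATH}
# ldd "$(command -v kinit)" | krb5_lib   # should now point into /lib64
```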

---

##### Invalid ccache UID

```text
kinit: Invalid Uid in persistent keyring name while getting default ccache
```

If `unset KRB5CCNAME` does not resolve it, change the value of `default_ccache_name` in `/etc/krb5.conf` to a local file.

<https://unix.stackexchange.com/questions/712857/kinit-invalid-uid-in-persistent-keyring-name-while-getting-default-ccache-while#:~:text=If%20running%20unset%20KRB5CCNAME%20did%20not%20resolve%20it%2C,of%20%22default_ccache_name%22%20in%20%2Fetc%2Fkrb5.conf%20to%20a%20local%20file%3A>
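The linked answer suggests pointing `default_ccache_name` at a local file. The sketch below does this in place, assuming GNU `sed -i`.

- `use_file_ccache` is a hypothetical helper.
- `FILE:/tmp/krb5cc_%{uid}` is one common file-based value (MIT Kerberos expands `%{uid}`), not necessarily what your site uses.
- It keeps a `.bak` copy and changes nothing if the setting is absent.

```shell
# Hypothetical helper: point default_ccache_name at a file-based cache.
# Usage: use_file_ccache [KRB5_CONF]   (defaults to /etc/krb5.conf)
use_file_ccache() {
  local conf=${1:-/etc/krb5.conf}
  cp "$conf" "$conf.bak" || return 1   # keep a backup
  # Keep the indentation and "default_ccache_name =", replace only the value;
  # commented-out lines (starting with #) are left alone.
  sed -i 's|^\([[:space:]]*default_ccache_name[[:space:]]*=\).*|\1 FILE:/tmp/krb5cc_%{uid}|' "$conf"
}
```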

##### KrbException: Message stream modified (41)

This is reportedly related to the JRE version. Removing `renew_lifetime = xxx` from the krb5.conf file fixes it.
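The sketch below comments the line out instead of deleting it, so it is easy to restore. It assumes GNU `sed -i`, and `drop_renew_lifetime` is a hypothetical helper. Restart the affected services afterwards so they pick up the change.

```shell
# Hypothetical helper: comment out renew_lifetime in krb5.conf.
# Usage: drop_renew_lifetime [KRB5_CONF]   (defaults to /etc/krb5.conf)
drop_renew_lifetime() {
  local conf=${1:-/etc/krb5.conf}
  cp "$conf" "$conf.bak" || return 1   # keep a backup
  # Prefix "#" to any active "renew_lifetime = ..." line
  sed -i 's/^\([[:space:]]*renew_lifetime[[:space:]]*=\)/#\1/' "$conf"
}
```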

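#### 5.2 Hadoop errors

When a service on some node fails, look for exceptions in that service's log (or the manager's log). For example, if some `.so` files cannot be found, add them.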

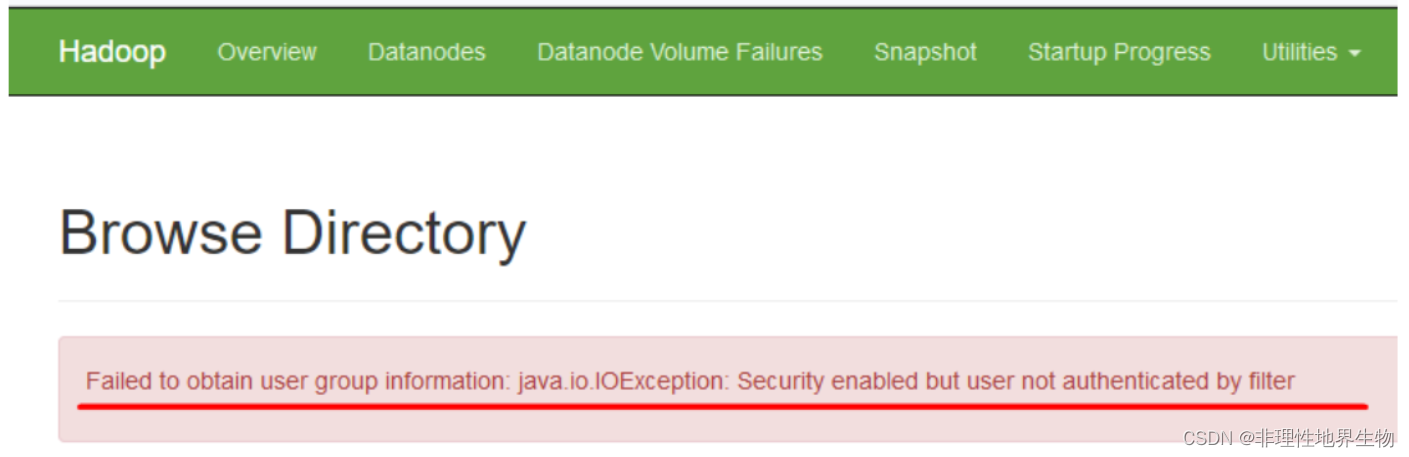

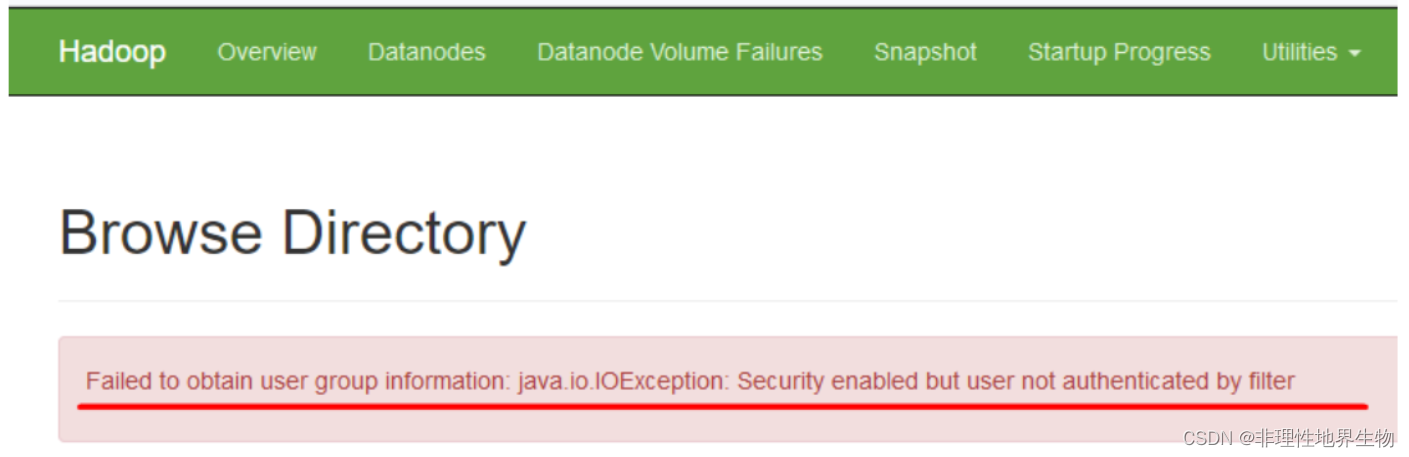

The HDFS web UI reports `Failed to obtain user group information: java.io.IOException: Security enabled but user not authenticated by filter`. See:

<https://issues.apache.org/jira/browse/HDFS-16441>

### 6. Submitting Spark on YARN
