先自我介绍一下,小编浙江大学毕业,去过华为、字节跳动等大厂,目前阿里P7

深知大多数程序员,想要提升技能,往往是自己摸索成长,但自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

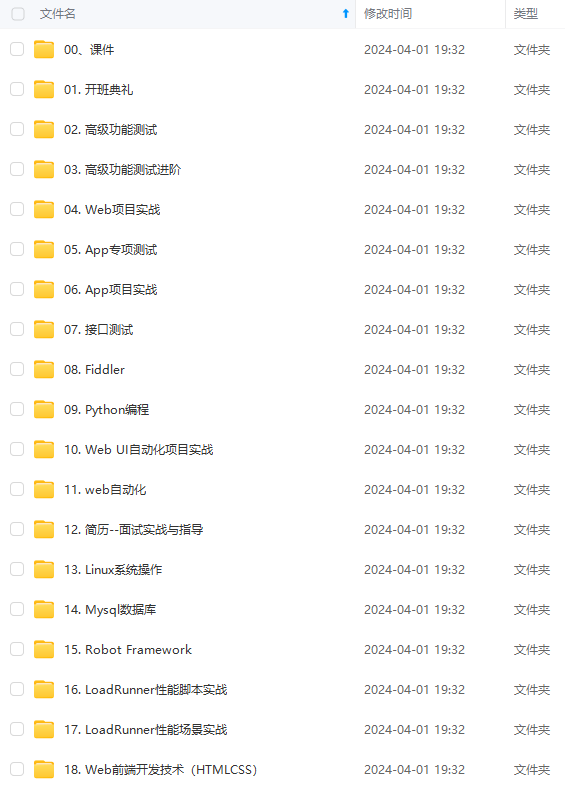

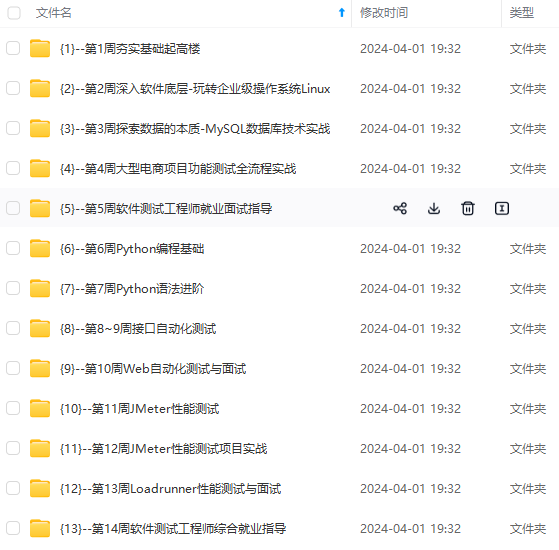

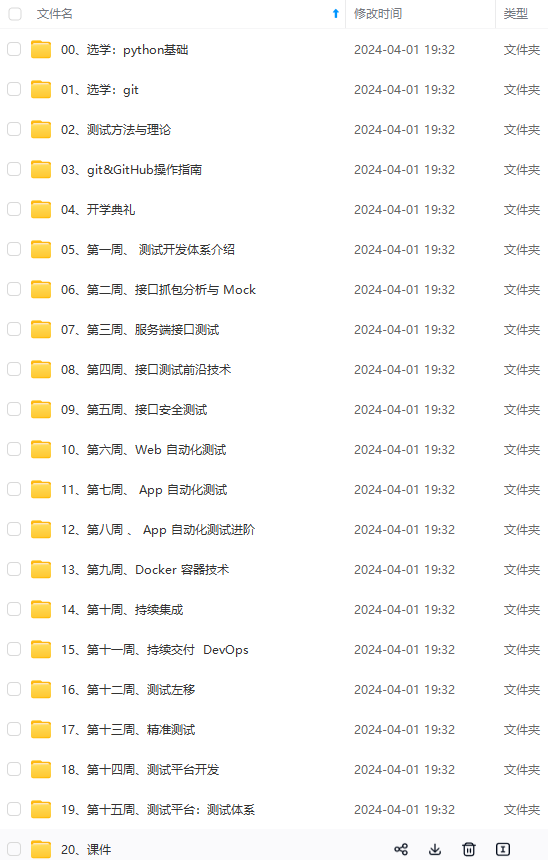

因此收集整理了一份《2024年最新软件测试全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上软件测试知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

如果你需要这些资料,可以添加V获取:vip1024b (备注软件测试)

正文

To enable SSL for the web consoles of the YARN daemons, set yarn.http.policy to HTTPS_ONLY in yarn-site.xml.

To enable SSL for the web console of the MapReduce JobHistory server, set mapreduce.jobhistory.http.policy to HTTPS_ONLY in mapred-site.xml.
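A Hadoop `*-site.xml` file is a `<configuration>` element containing `<property>` entries, each with a `<name>` and a `<value>`. The minimal Python sketch below renders the two HTTPS_ONLY settings in that format. `render_site_xml` is a hypothetical helper built on the standard-library `xml.etree.ElementTree`; in practice you would merge these `<property>` elements into your existing files rather than overwrite them.

```python
import xml.etree.ElementTree as ET


def render_site_xml(properties: dict[str, str]) -> str:
    """Render name/value pairs as a Hadoop-style *-site.xml <configuration> document."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print; needs Python 3.9+
    return ET.tostring(root, encoding="unicode")


# yarn-site.xml: serve the web consoles of the YARN daemons over HTTPS only.
yarn_site = render_site_xml({"yarn.http.policy": "HTTPS_ONLY"})

# mapred-site.xml: serve the JobHistory server web console over HTTPS only.
mapred_site = render_site_xml({"mapreduce.jobhistory.http.policy": "HTTPS_ONLY"})

if __name__ == "__main__":
    print(yarn_site)
    print(mapred_site)
```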

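4. Configuration

4.1. Permissions for HDFS and local filesystem paths

The following table lists various paths on HDFS and on the local filesystem (on all nodes), together with their recommended permissions: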

| Filesystem | Path | User:Group | Permissions |
|---|---|---|---|
| local | dfs.namenode.name.dir | hdfs:hadoop | drwx------ |
| local | dfs.datanode.data.dir | hdfs:hadoop | drwx------ |
| local | $HADOOP_LOG_DIR | hdfs:hadoop | drwxrwxr-x |
| local | $YARN_LOG_DIR | yarn:hadoop | drwxrwxr-x |
| local | yarn.nodemanager.local-dirs | yarn:hadoop | drwxr-xr-x |
| local | yarn.nodemanager.log-dirs | yarn:hadoop | drwxr-xr-x |
| local | container-executor | root:hadoop | --Sr-s--- |
| local | conf/container-executor.cfg | root:hadoop | r-------- |
| hdfs | / | hdfs:hadoop | drwxr-xr-x |
| hdfs | /tmp | hdfs:hadoop | drwxrwxrwt |
| hdfs | /user | hdfs:hadoop | drwxr-xr-x |
| hdfs | yarn.nodemanager.remote-app-log-dir | yarn:hadoop | drwxrwxrwt |
| hdfs | mapreduce.jobhistory.intermediate-done-dir | mapred:hadoop | drwxrwxrwt |
| hdfs | mapreduce.jobhistory.done-dir | mapred:hadoop | drwxr-x--- |
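The symbolic permissions in the table correspond to octal modes: `drwx------` is 700, `drwxrwxr-x` is 775, `drwxr-xr-x` is 755, `drwxrwxrwt` is 1777 (the trailing `t` is the sticky bit) and `drwxr-x---` is 750. As a rough aid, the Python sketch below checks the local-filesystem rows on one node with `os.stat`. The `Expected` record, the `RECOMMENDED_LOCAL` mapping and `check_path` are hypothetical names, and you must first resolve each configuration key (for example dfs.namenode.name.dir) to the directories it actually lists. HDFS paths cannot be checked this way; inspect them with `hdfs dfs -ls` instead.

```python
import grp
import os
import pwd
import stat
from dataclasses import dataclass


@dataclass(frozen=True)
class Expected:
    user: str
    group: str
    mode: int  # permission bits only, e.g. 0o700 for drwx------


# Octal equivalents of the local-filesystem directory rows in the table above.
# Keys are configuration names/variables; map them to real directories first.
RECOMMENDED_LOCAL = {
    "dfs.namenode.name.dir": Expected("hdfs", "hadoop", 0o700),        # drwx------
    "dfs.datanode.data.dir": Expected("hdfs", "hadoop", 0o700),        # drwx------
    "$HADOOP_LOG_DIR": Expected("hdfs", "hadoop", 0o775),              # drwxrwxr-x
    "$YARN_LOG_DIR": Expected("yarn", "hadoop", 0o775),                # drwxrwxr-x
    "yarn.nodemanager.local-dirs": Expected("yarn", "hadoop", 0o755),  # drwxr-xr-x
    "yarn.nodemanager.log-dirs": Expected("yarn", "hadoop", 0o755),    # drwxr-xr-x
}


def user_name(uid: int) -> str:
    try:
        return pwd.getpwuid(uid).pw_name
    except KeyError:  # uid without a passwd entry
        return str(uid)


def group_name(gid: int) -> str:
    try:
        return grp.getgrgid(gid).gr_name
    except KeyError:  # gid without a group entry
        return str(gid)


def check_path(path: str, expected: Expected) -> list[str]:
    """Compare owner, group and permission bits of `path`; return the mismatches."""
    st = os.stat(path)
    problems = []
    if user_name(st.st_uid) != expected.user:
        problems.append(f"{path}: owner {user_name(st.st_uid)}, expected {expected.user}")
    if group_name(st.st_gid) != expected.group:
        problems.append(f"{path}: group {group_name(st.st_gid)}, expected {expected.group}")
    if stat.S_IMODE(st.st_mode) != expected.mode:
        # Render both sides symbolically, keeping the file-type character.
        want = stat.filemode(stat.S_IFMT(st.st_mode) | expected.mode)
        problems.append(f"{path}: mode {stat.filemode(st.st_mode)}, expected {want}")
    return problems
```

For example, `check_path("/data/nn", RECOMMENDED_LOCAL["dfs.namenode.name.dir"])` would report any deviation for a NameNode directory at the (made-up) path /data/nn.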

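4.2. Common configuration

To turn on RPC authentication in Hadoop, set the value of the hadoop.security.authentication property to "kerberos", and set the security-related settings listed below appropriately.

The following properties should be in the core-site.xml of every node in the cluster.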

| Parameter | Value | Notes |
|---|---|---|
| hadoop.security.authentication | kerberos | simple: no authentication (default). kerberos: enable authentication by Kerberos. |
| hadoop.security.authorization | true | Enable RPC service-level authorization. |
| hadoop.rpc.protection | authentication | authentication: authentication only (default); integrity: integrity check in addition to authentication; privacy: data encryption in addition to integrity. |
| hadoop.security.auth_to_local | RULE:exp1 RULE:exp2 … DEFAULT | The value is a string containing newline characters. See the Kerberos documentation for the format of exp. |
| hadoop.proxyuser.superuser.hosts | | Comma-separated hosts from which superuser is allowed to perform impersonation. * means wildcard. superuser in the property name stands for the name of the impersonating user. |
| hadoop.proxyuser.superuser.groups | | Comma-separated groups to which users impersonated by superuser belong. * means wildcard. |
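To spot-check a node, the Python sketch below reads a core-site.xml document and flags the settings from the table above that are not in their secure form. It is a hypothetical helper, not a Hadoop tool. It treats a missing hadoop.security.authentication as the documented default "simple", assumes hadoop.security.authorization defaults to false, and assumes hadoop.rpc.protection may be a comma-separated list of the three documented values. It does not resolve `${...}` variable substitution or XInclude.

```python
import xml.etree.ElementTree as ET

VALID_QOP = {"authentication", "integrity", "privacy"}  # documented hadoop.rpc.protection values


def read_site_xml(text: str) -> dict[str, str]:
    """Collect <name>/<value> pairs from a Hadoop *-site.xml document."""
    root = ET.fromstring(text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def check_core_site(props: dict[str, str]) -> list[str]:
    """Return problems that would keep Kerberos RPC authentication/authorization off."""
    problems = []
    auth = props.get("hadoop.security.authentication", "simple")  # "simple" is the default
    if auth != "kerberos":
        problems.append(f"hadoop.security.authentication is {auth!r}, expected 'kerberos'")
    # Assumption: authorization is off unless explicitly set to true.
    if props.get("hadoop.security.authorization", "false").lower() != "true":
        problems.append("hadoop.security.authorization should be 'true'")
    # Assumption: several QOP values may be listed, separated by commas.
    qops = [q.strip() for q in props.get("hadoop.rpc.protection", "authentication").split(",")]
    bad = [q for q in qops if q not in VALID_QOP]
    if bad:
        problems.append(f"unknown hadoop.rpc.protection value(s): {bad}")
    return problems
```

Typical use is `check_core_site(read_site_xml(open("/etc/hadoop/core-site.xml").read()))`, where the path is only an example of where the file might live.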

4.3. NameNode

| Parameter | Value | Notes |
|---|---|---|
| dfs.block.access.token.enable | true | Enable HDFS block access tokens for secure operations. |
| dfs.namenode.kerberos.principal | nn/_HOST@REALM.TLD | Kerberos principal name for the NameNode. |
| dfs.namenode.keytab.file | /etc/security/keytab/nn.service.keytab | Kerberos keytab file for the NameNode. |
| dfs.namenode.kerberos.internal.spnego.principal | HTTP/_HOST@REALM.TLD | The server principal used by the NameNode for web UI SPNEGO authentication. The SPNEGO server principal begins with the prefix HTTP/ by convention. If the value is '*', the web server will attempt to log in with every principal specified in the keytab file dfs.web.authentication.kerberos.keytab. For most deployments this can be set to ${dfs.web.authentication.kerberos.principal}, i.e. use the value of dfs.web.authentication.kerberos.principal. |

The following settings allow configuring SSL access to the NameNode web UI (optional).

| Parameter | Value | Notes |
|---|---|---|
| dfs.http.policy | HTTP_ONLY or HTTPS_ONLY or HTTP_AND_HTTPS | HTTPS_ONLY turns off HTTP access. This option takes precedence over the deprecated configurations dfs.https.enable and hadoop.ssl.enabled. If SASL is used to authenticate the data transfer protocol instead of running the DataNode as root and using privileged ports, this property must be set to HTTPS_ONLY to guarantee authentication of HTTP servers. (See dfs.data.transfer.protection.) |
| dfs.namenode.https-address | 0.0.0.0:9871 | This parameter is used in non-HA mode and without federation. See HDFS High Availability and HDFS Federation for details. |
| dfs.https.enable | true | This value is deprecated. Use dfs.http.policy. |

4.4. Secondary NameNode

| Parameter | Value | Notes |
|---|---|---|
| dfs.namenode.secondary.http-address | 0.0.0.0:9868 | HTTP web UI address for the Secondary NameNode. |
| dfs.namenode.secondary.https-address | 0.0.0.0:9869 | HTTPS web UI address for the Secondary NameNode. |
| dfs.secondary.namenode.keytab.file | /etc/security/keytab/sn.service.keytab | Kerberos keytab file for the Secondary NameNode. |
| dfs.secondary.namenode.kerberos.principal | sn/_HOST@REALM.TLD | Kerberos principal name for the Secondary NameNode. |
| dfs.secondary.namenode.kerberos.internal.spnego.principal | HTTP/_HOST@REALM.TLD | The server principal used by the Secondary NameNode for web UI SPNEGO authentication. The SPNEGO server principal begins with the prefix HTTP/ by convention. If the value is '*', the web server will attempt to log in with every principal specified in the keytab file dfs.web.authentication.kerberos.keytab. For most deployments this can be set to ${dfs.web.authentication.kerberos.principal}, i.e. use the value of dfs.web.authentication.kerberos.principal. |

4.5. JournalNode

| Parameter | Value | Notes |
|---|---|---|
| dfs.journalnode.kerberos.principal | jn/_HOST@REALM.TLD | Kerberos principal name for the JournalNode. |
| dfs.journalnode.keytab.file | /etc/security/keytab/jn.service.keytab | Kerberos keytab file for the JournalNode. |
| dfs.journalnode.kerberos.internal.spnego.principal | HTTP/_HOST@REALM.TLD | The server principal used by the JournalNode for web UI SPNEGO authentication when Kerberos security is enabled. The SPNEGO server principal begins with the prefix HTTP/ by convention. If the value is '*', the web server will attempt to log in with every principal specified in the keytab file dfs.web.authentication.kerberos.keytab. For most deployments this can be set to ${dfs.web.authentication.kerberos.principal}, i.e. use the value of dfs.web.authentication.kerberos.principal. |
| dfs.web.authentication.kerberos.keytab | /etc/security/keytab/spnego.service.keytab | SPNEGO keytab file for the JournalNode. In HA clusters this setting is shared with the NameNodes. |
| dfs.journalnode.https-address | 0.0.0.0:8481 | |

4.6. DataNode

| Parameter | Value | Notes |
|---|---|---|
| dfs.datanode.data.dir.perm | 700 | |
| dfs.datanode.address | 0.0.0.0:1004 | A secure DataNode must use a privileged port to ensure that the server was started securely. This means the server must be started via jsvc. Alternatively, if SASL is used to authenticate the data transfer protocol, this must be set to a non-privileged port. (See dfs.data.transfer.protection.) |
| dfs.datanode.http.address | 0.0.0.0:1006 | A secure DataNode must use a privileged port to ensure that the server was started securely. This means the server must be started via jsvc. |
| dfs.datanode.https.address | 0.0.0.0:9865 | HTTPS web UI address for the DataNode. |
| dfs.datanode.kerberos.principal | dn/_HOST@REALM.TLD | Kerberos principal name for the DataNode. |
| dfs.datanode.keytab.file | /etc/security/keytab/dn.service.keytab | Kerberos keytab file for the DataNode. |
| dfs.encrypt.data.transfer | false | Set to true when using data encryption. |
| dfs.encrypt.data.transfer.algorithm | | Optionally set to 3des or rc4 when using data encryption, to control the encryption algorithm. |
| dfs.encrypt.data.transfer.cipher.suites | | Optionally set to AES/CTR/NoPadding to activate AES encryption when using data encryption. |
| dfs.encrypt.data.transfer.cipher.key.bitlength | | Optionally set to 128, 192 or 256 to control the key bit length when using AES with data encryption. |
| dfs.data.transfer.protection | | authentication: authentication only; integrity: integrity check in addition to authentication; privacy: data encryption in addition to integrity. This property is unspecified by default. Setting it enables SASL for authentication of the data transfer protocol. If it is enabled, dfs.datanode.address must use a non-privileged port, dfs.http.policy must be set to HTTPS_ONLY, and the HDFS_DATANODE_SECURE_USER environment variable must be undefined when starting the DataNode process. |
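The DataNode rows above describe two mutually exclusive ways to start a secure DataNode: privileged ports with jsvc, or SASL on the data transfer protocol. The Python sketch below encodes only the constraints stated in those rows. `check_datanode` and `port_of` are hypothetical helpers, the configuration is passed as a plain dictionary, and the port numbers used in the example are illustrative values.

```python
import os
from typing import Mapping, Optional

PRIVILEGED_PORT_LIMIT = 1024  # ports below 1024 can only be bound by root


def port_of(address: str) -> int:
    """Extract the port from a host:port value such as 0.0.0.0:1004."""
    return int(address.rsplit(":", 1)[1])


def check_datanode(conf: Mapping[str, str], env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Check the DataNode startup rules from the table for whichever mode is configured."""
    env = os.environ if env is None else env
    problems = []
    data_port = port_of(conf["dfs.datanode.address"])
    if conf.get("dfs.data.transfer.protection"):
        # SASL mode: non-privileged data port, HTTPS_ONLY, no HDFS_DATANODE_SECURE_USER.
        if data_port < PRIVILEGED_PORT_LIMIT:
            problems.append("SASL mode: dfs.datanode.address must use a non-privileged port")
        if conf.get("dfs.http.policy") != "HTTPS_ONLY":
            problems.append("SASL mode: dfs.http.policy must be HTTPS_ONLY")
        if "HDFS_DATANODE_SECURE_USER" in env:
            problems.append("SASL mode: HDFS_DATANODE_SECURE_USER must be undefined")
    else:
        # jsvc mode: both the data transfer port and the HTTP port must be privileged.
        http_port = port_of(conf["dfs.datanode.http.address"])
        if data_port >= PRIVILEGED_PORT_LIMIT or http_port >= PRIVILEGED_PORT_LIMIT:
            problems.append(
                "jsvc mode: dfs.datanode.address and dfs.datanode.http.address must use privileged ports"
            )
    return problems
```

For example, `check_datanode({"dfs.datanode.address": "0.0.0.0:1004", "dfs.datanode.http.address": "0.0.0.0:1006"}, env={})` returns an empty list, while a SASL configuration (with dfs.http.policy set to HTTPS_ONLY) that still uses port 1004 for dfs.datanode.address reports the privileged-port problem.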

4.7. WebHDFS

| Parameter | Value | Notes |
|---|---|---|
| dfs.web.authentication.kerberos.principal | HTTP/_HOST@REALM.TLD | Kerberos principal name for WebHDFS. In HA clusters this setting is commonly used by the JournalNodes for securing access to the JournalNode HTTP server with SPNEGO. |
| dfs.web.authentication.kerberos.keytab | /etc/security/keytab/http.service.keytab | Kerberos keytab file for WebHDFS. In HA clusters this setting is commonly used by the JournalNodes for securing access to the JournalNode HTTP server with SPNEGO. |

4.8. ResourceManager

| Parameter | Value | Notes |
|---|---|---|
| yarn.resourcemanager.principal | rm/_HOST@REALM.TLD | Kerberos principal name for the ResourceManager. |
| yarn.resourcemanager.keytab | /etc/security/keytab/rm.service.keytab | Kerberos keytab file for the ResourceManager. |
| yarn.resourcemanager.webapp.https.address | ${yarn.resourcemanager.hostname}:8090 | The HTTPS address of the RM web application for non-HA. In HA clusters, use yarn.resourcemanager.webapp.https.address.rm-id for each ResourceManager. See ResourceManager High Availability for details. |

4.9. NodeManager

| Parameter | Value | Notes |
|---|---|---|
| yarn.nodemanager.principal | nm/_HOST@REALM.TLD | Kerberos principal name for the NodeManager. |
| yarn.nodemanager.keytab | /etc/security/keytab/nm.service.keytab | Kerberos keytab file for the NodeManager. |
| yarn.nodemanager.container-executor.class | org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor | Use LinuxContainerExecutor. |
| yarn.nodemanager.linux-container-executor.group | hadoop | Unix group of the NodeManager. |
| yarn.nodemanager.linux-container-executor.path | /path/to/bin/container-executor | The path to the executable of the Linux container executor. |
| yarn.nodemanager.webapp.https.address | 0.0.0.0:8044 | The HTTPS address of the NM web application. |

4.10. Configuration for WebAppProxy

The WebAppProxy provides a proxy between the web applications exported by an application and an end user.

If security is enabled, it will warn users before they access a potentially unsafe web application. Authentication and authorization using the proxy are handled just like any other privileged web application.

| Parameter | Value | Notes |
|---|---|---|
| yarn.web-proxy.address | WebAppProxy host:port for the proxy to AM web apps. | If this is the same as yarn.resourcemanager.webapp.address, or it is not defined, the ResourceManager will run the proxy; otherwise a standalone proxy server will need to be launched. |
| yarn.web-proxy.keytab | /etc/security/keytab/web-app.service.keytab | Kerberos keytab file for the WebAppProxy. |
| yarn.web-proxy.principal | wap/_HOST@REALM.TLD | Kerberos principal name for the WebAppProxy. |
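The yarn.web-proxy.address rule can be read as a small decision function. This is a simplified Python sketch with a hypothetical name: it compares the raw strings, whereas a real deployment may spell the same address in different ways (host name versus IP address, for instance), which the sketch does not try to normalize.

```python
from typing import Optional


def proxy_runs_in_resourcemanager(web_proxy_address: Optional[str], rm_webapp_address: str) -> bool:
    """True if the ResourceManager hosts the WebAppProxy, False if a standalone proxy is needed."""
    if not web_proxy_address:
        # yarn.web-proxy.address not defined: the ResourceManager runs the proxy.
        return True
    # Same address as yarn.resourcemanager.webapp.address: the ResourceManager runs the proxy.
    return web_proxy_address.strip() == rm_webapp_address.strip()
```

With made-up host names, `proxy_runs_in_resourcemanager("proxy.example.com:9046", "rm.example.com:8088")` is `False`, meaning a standalone proxy server has to be launched.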

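4.11. LinuxContainerExecutor

The YARN framework uses a ContainerExecutor, which defines how any container is launched and controlled.

The following are available in Hadoop YARN:

| ContainerExecutor | Description |
|---|---|
| DefaultContainerExecutor | The default executor that YARN uses to manage container execution. The container process runs as the same Unix user as the NodeManager. |
| LinuxContainerExecutor | Supported only on GNU/Linux. This executor runs containers either as the user who submitted the application (when full security is enabled) or as a dedicated user (nobody by default) when full security is not enabled. When full security is enabled, this executor requires all user accounts to be created on the cluster nodes where the containers are launched. It uses a setuid executable that is included in the Hadoop distribution; the NodeManager uses this executable to launch and kill containers. The setuid executable switches to the user who submitted the application and launches or kills the containers. For maximum security, this executor sets restricted permissions and user/group ownership on local files and directories used by the containers, such as shared objects, jars, intermediate files and log files. Note in particular that, because of this, no user other than the application owner and the NodeManager can access any of these local files/directories, including those localized as part of the distributed cache. |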

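To build the LinuxContainerExecutor executable, run:

`mvn package -Dcontainer-executor.conf.dir=/etc/hadoop/`

The path passed in -Dcontainer-executor.conf.dir should be the path on the cluster nodes where the configuration file for the setuid executable is located.

The executable should be installed in $HADOOP_YARN_HOME/bin.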

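The executable must have specific permissions: 6050 (--Sr-s---), user-owned by root (the super-user) and group-owned by a special group (e.g. hadoop) of which the NodeManager Unix user is a member and no ordinary application user is.

If any application user belongs to this special group, security will be compromised.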
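A quick way to audit the container-executor binary against these rules is sketched below in Python. `check_container_executor` and `supplementary_members` are hypothetical helpers, and the binary's path depends on where you installed it. Note that `stat.filemode` also prints the file-type character, so mode 6050 appears as `---Sr-s---` rather than `--Sr-s---`.

```python
import grp
import os
import stat

REQUIRED_MODE = 0o6050  # --Sr-s---: setuid + setgid, group r-x, no owner/other bits


def check_container_executor(path: str, expected_group: str = "hadoop") -> list[str]:
    """Return problems with the container-executor binary's ownership and mode."""
    st = os.stat(path)
    problems = []
    if st.st_uid != 0:
        problems.append(f"owner uid is {st.st_uid}, expected 0 (root)")
    try:
        group = grp.getgrgid(st.st_gid).gr_name
    except KeyError:  # gid without a group entry
        group = str(st.st_gid)
    if group != expected_group:
        problems.append(f"group is {group!r}, expected {expected_group!r}")
    mode = stat.S_IMODE(st.st_mode)
    if mode != REQUIRED_MODE:
        problems.append(f"mode is {stat.filemode(st.st_mode)} ({mode:04o}), expected ---Sr-s--- (6050)")
    return problems


def supplementary_members(group: str) -> list[str]:
    """Users listed as supplementary members of `group`, for manual review.

    Users whose *primary* group is `group` do not appear in this list.
    """
    return list(grp.getgrnam(group).gr_mem)
```

`supplementary_members("hadoop")` only covers users who have hadoop as a supplementary group; users whose primary group is hadoop have to be found through the passwd database, so treat its output as a starting point for checking that no application user is in the group.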

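This special group name should be specified for the configuration property yarn.nodemanager.linux-container-executor.group in both conf/yarn-site.xml and conf/container-executor.cfg.

For example, suppose the NodeManager runs as user yarn, who belongs to the groups AAAAA and hadoop, either of which may be the primary group. Suppose also that AAAAA has both yarn and another user (an application submitter), alice, as members, and that alice does not belong to hadoop.

Following the description above, the setuid/setgid executable should be set to 6050 (--Sr-s---), user-owned by root and group-owned by hadoop, which has yarn as a member (and not by AAAAA, which also has alice as a member besides yarn). Application submitters must not belong to the hadoop group.

The LinuxTaskController requires that the paths including and leading up to the directories specified in yarn.nodemanager.local-dirs and yarn.nodemanager.log-dirs be set to 755 permissions, as described in the table of directory permissions above.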

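- conf/container-executor.cfg

The executable requires a configuration file named container-executor.cfg to be present in the configuration directory passed to the mvn target mentioned above.

The configuration file must be owned by root (root:hadoop, as listed in the permission tables) and should have permissions 0400 (r--------).

The executable requires the following configuration items to be present in the conf/container-executor.cfg file. The items should be given as simple key=value pairs, one per line: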

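| Parameter | Value | Notes |
|---|---|---|
| yarn.nodemanager.linux-container-executor.group | hadoop | Unix group of the NodeManager. The group owner of the container-executor binary should be this group, and the value should be the same as the one the NodeManager is configured with. This setting is required to validate secure access to the container-executor binary. |
| banned.users | hdfs,yarn,mapred,bin | Users who are not allowed to run containers. |
| allowed.system.users | foo,bar | System users who are allowed to run containers. |
| min.user.id | 1000 | Minimum user ID allowed to run containers; prevents other super-users from doing so. |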
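The per-user checks behind banned.users, allowed.system.users and min.user.id can be modelled roughly as follows. This is a simplified Python sketch, not the container-executor's actual code, which performs further checks. It assumes that a banned user is always refused, and that a user whose UID is below min.user.id is refused unless listed in allowed.system.users. `parse_cfg` and `may_launch_as` are hypothetical names, and `parse_cfg` handles only plain key=value lines (plus `#` comments).

```python
def parse_cfg(text: str) -> dict[str, str]:
    """Parse simple key=value lines; blank lines, '#' comments and other lines are skipped."""
    cfg = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            cfg[key.strip()] = value.strip()
    return cfg


def _csv(value: str) -> set[str]:
    """Split a comma-separated list such as 'hdfs,yarn,mapred,bin'."""
    return {item.strip() for item in value.split(",") if item.strip()}


def may_launch_as(cfg: dict[str, str], user: str, uid: int) -> bool:
    """Simplified model of the user checks implied by banned.users / min.user.id."""
    if user in _csv(cfg.get("banned.users", "")):
        return False
    below_min = uid < int(cfg["min.user.id"])
    if below_min and user not in _csv(cfg.get("allowed.system.users", "")):
        return False
    return True
```

With the example values from the table, `hdfs` is refused because it is banned, a system account such as `daemon` with a low UID is refused, `foo` is accepted despite a low UID because it is an allowed system user, and an ordinary user such as `alice` with UID 1001 is accepted.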

To recap, these are the local filesystem permissions required for the various paths related to the LinuxContainerExecutor:

| Filesystem | Path | User:Group | Permissions |
|---|---|---|---|
| local | container-executor | root:hadoop | --Sr-s--- |
| local | conf/container-executor.cfg | root:hadoop | r-------- |
| local | yarn.nodemanager.local-dirs | yarn:hadoop | drwxr-xr-x |
| local | yarn.nodemanager.log-dirs | yarn:hadoop | drwxr-xr-x |

4.12. MapReduce JobHistory Server

| Parameter | Value | Notes |
|---|---|---|
| mapreduce.jobhistory.address | MapReduce JobHistory Server host:port | Default port is 10020. |
| mapreduce.jobhistory.keytab | /etc/security/keytab/jhs.service.keytab | Kerberos keytab file for the MapReduce JobHistory Server. |
| mapreduce.jobhistory.principal | jhs/_HOST@REALM.TLD | Kerberos principal name for the MapReduce JobHistory Server. |

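5. Multihoming

Multihomed setups, where each host has multiple hostnames in DNS (for example, different hostnames corresponding to public and private network interfaces), may require additional configuration to get Kerberos authentication working. See HDFS Support for Multihomed Networks.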

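6. Troubleshooting

Kerberos is hard to set up, and harder to debug. Common problems are:

- Network and DNS configuration.
- Kerberos configuration on hosts (/etc/krb5.conf).
- Keytab creation and maintenance.
- Environment setup: JVM, user login, system clocks, etc.

The error messages from the JVM are essentially meaningless, which does not help in diagnosing and fixing such problems.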

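Extra debugging information can be enabled for the client and for any service:

- Set the environment variable HADOOP_JAAS_DEBUG to true.

  `export HADOOP_JAAS_DEBUG=true`

- Edit the log4j.properties file to log Hadoop's security package at DEBUG level.

  `log4j.logger.org.apache.hadoop.security=DEBUG`

- Enable JVM-level debugging by setting some system properties.

  `export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Dsun.security.krb5.debug=true -Dsun.security.spnego.debug=true"`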
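The environment-variable switches can also be applied programmatically when a script launches Hadoop commands. In this Python sketch the helper names are hypothetical; the flags are appended to any existing HADOOP_OPTS instead of replacing it, and -Dsun.security.spnego.debug is given an explicit `=true` value so that it is read as a boolean. The log4j.properties change still has to be made in the file itself.

```python
import os
import subprocess
from typing import Mapping, Optional

KERBEROS_DEBUG_OPTS = (
    "-Djava.net.preferIPv4Stack=true "
    "-Dsun.security.krb5.debug=true "
    "-Dsun.security.spnego.debug=true"
)


def kerberos_debug_env(base: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Return a copy of `base` (default: os.environ) with Kerberos debugging switched on."""
    env = dict(os.environ if base is None else base)
    env["HADOOP_JAAS_DEBUG"] = "true"
    # Keep whatever HADOOP_OPTS the caller already had and append the debug flags.
    existing = env.get("HADOOP_OPTS", "").strip()
    env["HADOOP_OPTS"] = f"{existing} {KERBEROS_DEBUG_OPTS}".strip()
    return env


def run_with_kerberos_debug(cmd: list[str]) -> int:
    """Run e.g. ["hdfs", "dfs", "-ls", "/"] with Kerberos debugging enabled; return the exit code."""
    return subprocess.run(cmd, env=kerberos_debug_env()).returncode
```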

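Troubleshooting with KDiag

Hadoop has a tool to help validate the setup: KDiag.

It contains a series of probes for the JVM's configuration and environment, dumps out some system files (/etc/krb5.conf, /etc/ntp.conf), prints out some system state, and then attempts to log in to Kerberos as the current user, or as a specific principal in a named keytab.

The output of the command can be used for local diagnostics, or forwarded to whoever supports the cluster.

The KDiag command has its own entry point; it is invoked by passing kdiag to the bin/hadoop command. Accordingly, it displays the Kerberos client state of the command used to invoke it.

`hadoop kdiag`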

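The command returns a status code of 0 for a successful diagnostics run.

This does not mean that Kerberos is working, merely that the KDiag command did not identify any problem from its limited set of probes.

In particular, as it does not attempt to connect to any remote service, it does not verify that the client is trusted by any service.

If it is unsuccessful, the exit codes are:

- -1: the command failed for an unknown reason.
- 41: Unauthorized (== HTTP's 401). KDiag detected a condition which causes Kerberos not to work. Examine the output to identify the issue.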
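When KDiag runs from a script, its exit status can be mapped back to these meanings. One detail worth knowing: on Unix an exit value of -1 is reported to the parent process as 255, so the sketch treats both as an unknown failure. The helper names are hypothetical, and `run_kdiag` assumes the `hadoop` launcher is on PATH.

```python
import subprocess

KDIAG_EXIT_CODES = {
    0: "no problems found by KDiag's limited probes (Kerberos may still not work)",
    41: "Unauthorized (HTTP 401 equivalent): KDiag found a condition that stops Kerberos working",
}


def describe_kdiag_exit(code: int) -> str:
    """Map a `hadoop kdiag` exit status to the meanings listed in the text."""
    if code in (-1, 255):  # exit(-1) is seen as 255 by a Unix parent process
        return "failed for an unknown reason"
    return KDIAG_EXIT_CODES.get(code, f"unexpected exit status {code}")


def run_kdiag(*args: str) -> tuple[int, str]:
    """Run `hadoop kdiag` with extra arguments and describe its exit status."""
    code = subprocess.run(["hadoop", "kdiag", *args]).returncode
    return code, describe_kdiag_exit(code)
```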

6.1. Usage

KDiag: Diagnose Kerberos Problems

- `[-D key=value]`: Define a configuration option.
- `[--jaas]`: Require a JAAS file to be defined in java.security.auth.login.config.
- `[--keylen <keylen>]`: Require a minimum size for encryption keys supported by the JVM. Default value: 256.
- `[--keytab <keytab> --principal <principal>]`: Log in from a keytab as a specific principal.
- `[--nofail]`: Do not fail on the first problem.
- `[--nologin]`: Do not attempt to log in.
- `[--out <file>]`: Write output to a file.
- `[--resource <resource>]`: Load an XML configuration resource.
- `[--secure]`: Require the Hadoop configuration to be secure.
- `[--verifyshortname <principal>]`: Verify that the short name of the specific principal does not contain '@' or '/'.
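If you drive KDiag from automation, assembling the argument list in one place avoids quoting mistakes. The Python sketch below covers a subset of the options listed above (it omits `--jaas`, `--nologin` and `--verifyshortname`). `kdiag_command` is a hypothetical helper; it requires `--keytab` and `--principal` to be given together, as the usage line shows them.

```python
from typing import Iterable, Optional


def kdiag_command(
    keytab: Optional[str] = None,
    principal: Optional[str] = None,
    resources: Iterable[str] = (),
    defines: Optional[dict[str, str]] = None,
    keylen: Optional[int] = None,
    nofail: bool = False,
    secure: bool = False,
    out: Optional[str] = None,
) -> list[str]:
    """Assemble a `hadoop kdiag` argument vector from a subset of its options."""
    if (keytab is None) != (principal is None):
        raise ValueError("--keytab and --principal must be given together")
    cmd = ["hadoop", "kdiag"]
    for key, value in (defines or {}).items():
        cmd += ["-D", f"{key}={value}"]
    if keylen is not None:
        cmd += ["--keylen", str(keylen)]
    if keytab is not None:
        cmd += ["--keytab", keytab, "--principal", principal]
    for resource in resources:
        cmd += ["--resource", resource]
    if nofail:
        cmd.append("--nofail")
    if secure:
        cmd.append("--secure")
    if out is not None:
        cmd += ["--out", out]
    return cmd
```

The returned list can be passed straight to `subprocess.run`, so no shell quoting is involved.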

**`--jaas`: Require a JAAS file to be defined in java.security.auth.login.config.**

If `--jaas` is set, the Java system property java.security.auth.login.config must be set to a JAAS file; this file must exist, be a simple file of non-zero bytes, and be readable by the current user. More detailed validation is not performed.

JAAS files are not needed by Hadoop itself, but some services (such as ZooKeeper) do require them for secure operation.
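The `--jaas` checks are simple enough to reproduce before calling KDiag, for example in a provisioning script. This Python sketch mirrors only the documented conditions (the file exists, is a regular file, is non-empty and is readable) for the path stored in java.security.auth.login.config. `check_jaas_file` is a hypothetical name, and, like KDiag, it does not validate the file's contents.

```python
import os
from typing import Optional


def check_jaas_file(path: Optional[str]) -> list[str]:
    """Mirror the documented --jaas checks for java.security.auth.login.config."""
    if not path:
        return ["java.security.auth.login.config is not set"]
    if not os.path.exists(path):
        return [f"{path} does not exist"]
    problems = []
    if not os.path.isfile(path):
        problems.append(f"{path} is not a regular file")
    elif os.path.getsize(path) == 0:
        problems.append(f"{path} is empty")
    if not os.access(path, os.R_OK):
        problems.append(f"{path} is not readable by the current user")
    return problems
```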

**`--keylen`: Require a minimum size for encryption keys supported by the JVM.**

If the JVM does not support this length, the command will fail.

The default value is 256, as needed for the AES256 encryption scheme. A JVM without the Java Cryptography Extensions installed does not support such a key length, and Kerberos will not work unless it is configured to use an encryption scheme with a shorter key length.

**`--keytab` and `--principal`: Log in from a keytab.**

Log in from a keytab as the specific principal.

1. The file must contain the specific principal, including any named host. That is, there is no mapping from _HOST to the current hostname.
2. KDiag will log out and attempt to log back in again. This catches JVM compatibility problems which have existed in the past. (Hadoop's Kerberos support requires the use of, and introspection into, JVM-specific classes.)
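Because KDiag does not map _HOST, you need to pass the principal in the form the services actually use on that host. The Python sketch below shows the substitution for a service principal such as nn/_HOST@REALM.TLD. `expand_host` is a hypothetical helper, and it assumes the host component becomes the lower-cased fully qualified domain name of the machine; verify the result against the entries that `klist -kt` lists for the keytab.

```python
import socket
from typing import Optional


def expand_host(principal: str, fqdn: Optional[str] = None) -> str:
    """Replace a _HOST host component with a lower-cased FQDN (default: this machine's)."""
    primary, sep, rest = principal.partition("/")
    if not sep:
        return principal  # no host component, e.g. alice@REALM.TLD
    host, at, realm = rest.partition("@")
    if host != "_HOST":
        return principal  # host already spelled out
    host = (fqdn or socket.getfqdn()).lower()
    return f"{primary}/{host}{at}{realm}"
```

For instance, `expand_host("nn/_HOST@REALM.TLD", "NameNode1.Example.com")` gives `nn/namenode1.example.com@REALM.TLD`, which is the form to hand to `--principal`.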

**`--nofail`: Do not fail on the first problem.**

KDiag will make a best-effort attempt to diagnose all Kerberos problems, rather than stop at the first one.

This is somewhat limited: checks are made in the order in which problems surface (e.g. the key length is checked first), so an early failure can trigger many more problems. But it does produce a more detailed report.

**`--nologin`: Do not attempt to log in.**

Skip trying to log in. This takes precedence over the `--keytab` option, and also disables trying to log in to Kerberos as the current kinited user.

This is useful when the KDiag command is invoked within an application, as it does not set up Hadoop's static security state; it merely checks for some basic Kerberos preconditions.

**`--out outfile`: Write output to a file.**

`hadoop kdiag --out out.txt`

Much of the diagnostic information comes from the JRE (to stderr) and from Log4j (to stdout). To get all the output, it is best to redirect both of these output streams to the same file and omit the `--out` option.

`hadoop kdiag --keytab zk.service.keytab --principal zookeeper/devix.example.org@REALM > out.txt 2>&1`

Even then, the output of the two streams, emitted across multiple threads, can be a bit confusing; it gets easier with practice. Looking at the thread name in the Log4j output to distinguish background threads from the main thread helps at the Hadoop level, but does not help with JVM-level logging.
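From Python, the equivalent of `> out.txt 2>&1` is to give the child process the same file for stdout and to point its stderr at stdout. `run_to_file` is a hypothetical helper; the commented usage reuses the example keytab and principal from the command above and needs a Hadoop client on PATH.

```python
import subprocess


def run_to_file(cmd: list[str], path: str) -> int:
    """Run `cmd`, sending stdout and stderr to one file (like `> path 2>&1`); return the exit code."""
    with open(path, "w") as out:
        # stderr=STDOUT merges the JRE's stderr output into the file that receives Log4j's stdout.
        return subprocess.run(cmd, stdout=out, stderr=subprocess.STDOUT).returncode


# Usage:
# run_to_file(["hadoop", "kdiag", "--keytab", "zk.service.keytab",
#              "--principal", "zookeeper/devix.example.org@REALM"], "out.txt")
```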

**`--resource`: XML configuration resource to load.**

Use this option to load additional XML configuration files. By default, only the core-default and core-site XML resources are loaded, so this helps when additional configuration files contain Kerberos-related settings.

`hadoop kdiag --resource hbase-default.xml --resource hbase-site.xml`

For extra logging during the operation, set the logging and the HADOOP_JAAS_DEBUG environment variable to the values listed in "Troubleshooting". The JVM options are set automatically by KDiag.

**`--secure`: Fail if the command is not executed on a secure cluster.**

That is: if the authentication mechanism of the cluster is explicitly or implicitly set to "simple":
