先自我介绍一下,小编浙江大学毕业,去过华为、字节跳动等大厂,目前阿里P7

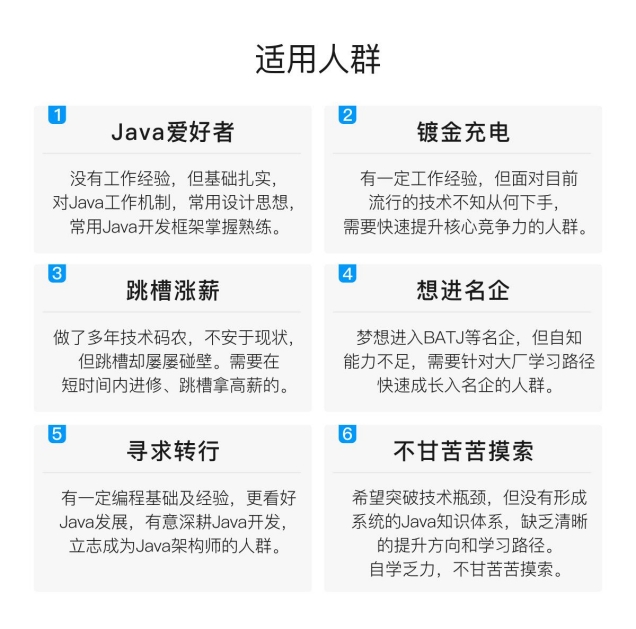

深知大多数程序员,想要提升技能,往往是自己摸索成长,但自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

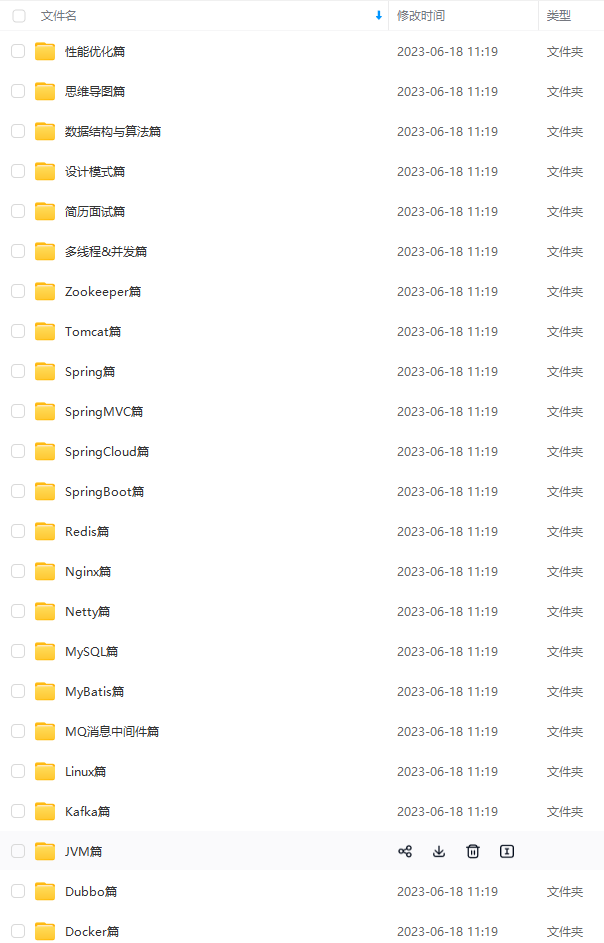

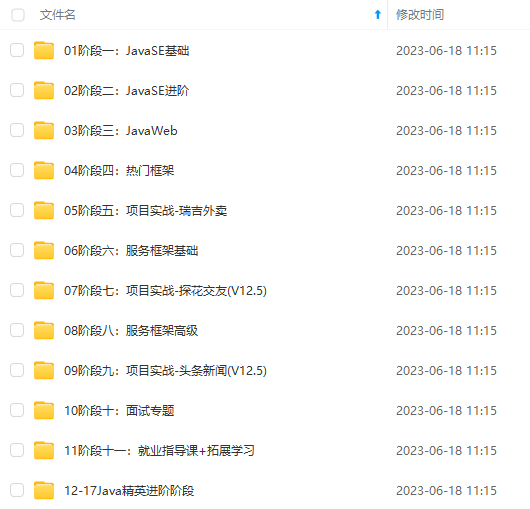

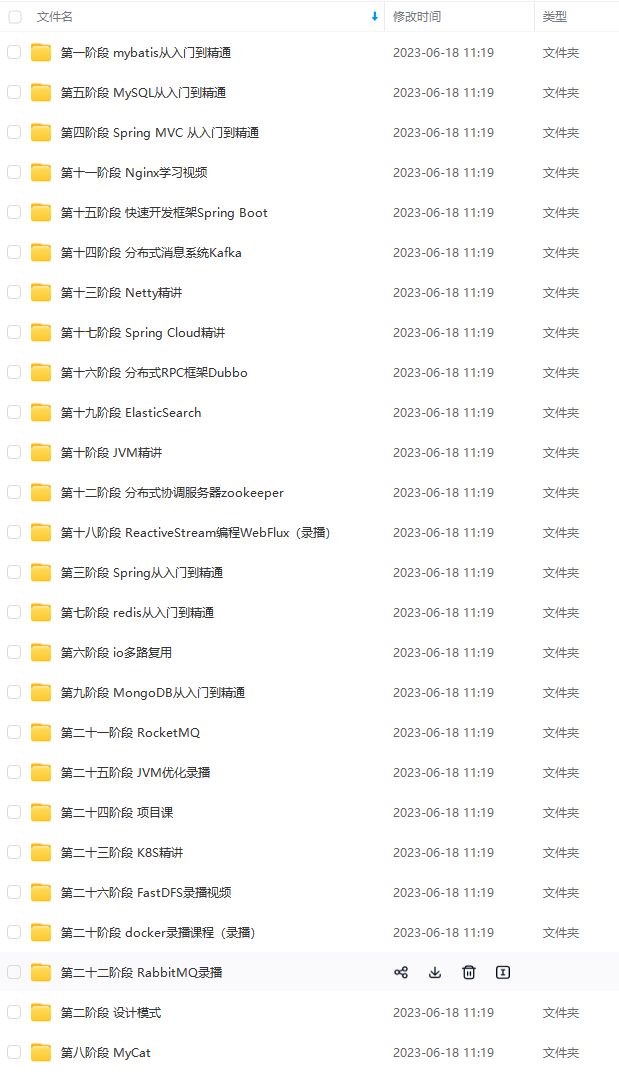

因此收集整理了一份《2024年最新Java开发全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上Java开发知识点,真正体系化!

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

如果你需要这些资料,可以添加V获取:vip1024b (备注Java)

正文

… 1 more

Caused by: java.io.FileNotFoundException: HADOOP_HOME and hadoop.home.dir are unset.

at org.apache.hadoop.util.Shell.checkHadoopHomeInner(Shell.java:468)

at org.apache.hadoop.util.Shell.checkHadoopHome(Shell.java:439)

at org.apache.hadoop.util.Shell.<clinit>(Shell.java:516)

… 11 more

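Approach 2: no dependency on a big-data environment

Many tutorials online for writing Parquet require a local Hadoop installation. The approach below writes Parquet files without installing Hadoop.

Maven dependencies:

<dependency>
    <groupId>org.apache.avro</groupId>
    <artifactId>avro</artifactId>
    <version>1.8.2</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-core</artifactId>
    <version>1.2.1</version>
</dependency>
<dependency>
    <groupId>org.apache.parquet</groupId>
    <artifactId>parquet-hadoop</artifactId>
    <version>1.8.1</version>
</dependency>
<dependency>
    <groupId>org.apache.parquet</groupId>
    <artifactId>parquet-avro</artifactId>
    <version>1.8.1</version>
</dependency>

public class User {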

private String id;

private String name;

private String password;

public User() {

}

public User(String id, String name, String password) {

this.id = id;

this.name = name;

this.password = password;

}

public String getId() {

return id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public String getPassword() {

return password;

}

public void setPassword(String password) {

this.password = password;

}

@Override

public String toString() {

return "User{" +

"id='" + id + '\'' +

", name='" + name + '\'' +

", password='" + password + '\'' +

'}';

}

}

Note: the User entity class used in this approach conflicts with "name": "User" in the schema.avsc file from the previous approach, and reading fails with:

Exception in thread "main" org.apache.parquet.io.ParquetDecodingException: Can not read value at 1 in block 0 in file file:/heheda/output.parquet

at org.apache.parquet.hadoop.InternalParquetRecordReader.nextKeyValue(InternalParquetRecordReader.java:254)

at org.apache.parquet.hadoop.ParquetReader.read(ParquetReader.java:132)

at org.apache.parquet.hadoop.ParquetReader.read(ParquetReader.java:136)

at WriteToParquet.main(WriteToParquet.java:55)

Caused by: java.lang.ClassCastException: User cannot be cast to org.apache.avro.generic.IndexedRecord

at org.apache.avro.generic.GenericData.setField(GenericData.java:818)

at org.apache.parquet.avro.AvroRecordConverter.set(AvroRecordConverter.java:396)

at org.apache.parquet.avro.AvroRecordConverter$2.add(AvroRecordConverter.java:132)

at org.apache.parquet.avro.AvroConverters$BinaryConverter.addBinary(AvroConverters.java:64)

at org.apache.parquet.column.impl.ColumnReaderBase$2$6.writeValue(ColumnReaderBase.java:390)

at org.apache.parquet.column.impl.ColumnReaderBase.writeCurrentValueToConverter(ColumnReaderBase.java:440)

at org.apache.parquet.column.impl.ColumnReaderImpl.writeCurrentValueToConverter(ColumnReaderImpl.java:30)

at org.apache.parquet.io.RecordReaderImplementation.read(RecordReaderImplementation.java:406)

at org.apache.parquet.hadoop.InternalParquetRecordReader.nextKeyValue(InternalParquetRecordReader.java:229)

… 3 more
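
The ClassCastException above is consistent with the reader resolving the schema's record name (User) to the User POJO on the classpath and instantiating it where an Avro record was expected. That mechanism is an assumption drawn from the stack trace, not something the trace proves. As a rough diagnostic, the sketch below checks whether a record name also resolves to a loadable class. `SchemaNameCollision` and `collidesWithClass` are hypothetical names for illustration; they are not part of Avro or Parquet.

```java
final class SchemaNameCollision {

    /** Returns true if a class with the given fully qualified name can be loaded. */
    static boolean collidesWithClass(String recordFullName) {
        try {
            // initialize=false: only look the class up, do not run its static initializers
            Class.forName(recordFullName, false, SchemaNameCollision.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // "User" is the record name from schema.avsc mentioned in the text
        System.out.println("User collides: " + collidesWithClass("User"));
    }
}
```

If the check reports a collision, renaming the record in the schema or giving it a namespace that differs from the POJO's package is one way to keep the two apart.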

Writing with AvroParquetWriter:

import org.apache.avro.reflect.ReflectData;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.parquet.avro.AvroParquetWriter;

import org.apache.parquet.hadoop.ParquetWriter;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import static org.apache.parquet.hadoop.ParquetFileWriter.Mode.OVERWRITE;

import static org.apache.parquet.hadoop.metadata.CompressionCodecName.SNAPPY;

public class WriteToParquet {

    public static void main(String[] args) {
        List<User> users = new ArrayList<>();
        users.add(new User("1", "huangchixin", "123123"));
        users.add(new User("2", "huangchixin2", "123445"));

        Path dataFile = new Path("output.parquet");

        // try-with-resources closes the writer even if a write fails
        try (ParquetWriter<User> writer = AvroParquetWriter.<User>builder(dataFile)
                // derive the Avro schema from the User class by reflection; AllowNull makes every field nullable
                .withSchema(ReflectData.AllowNull.get().getSchema(User.class))
                .withDataModel(ReflectData.get())
                .withConf(new Configuration())
                .withCompressionCodec(SNAPPY)
                .withWriteMode(OVERWRITE)
                .build()) {
            for (User user : users) {
                writer.write(user);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}

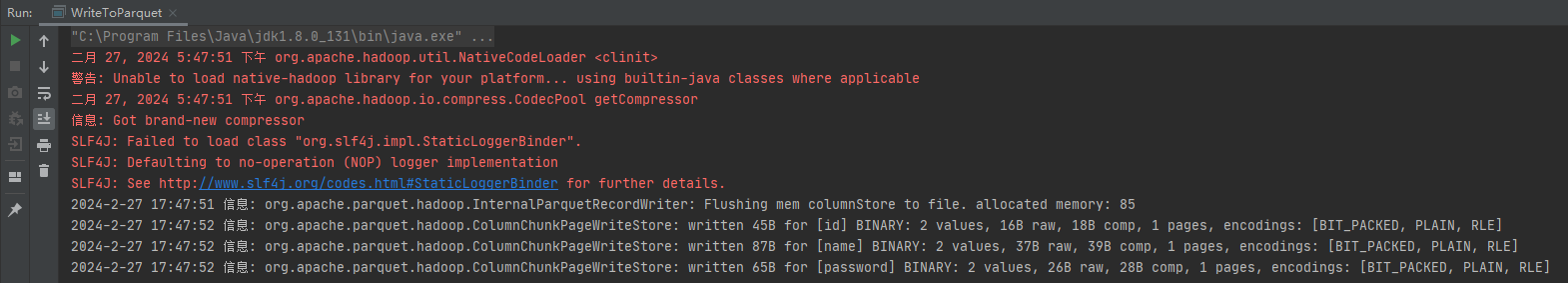

Running locally in IntelliJ IDEA:

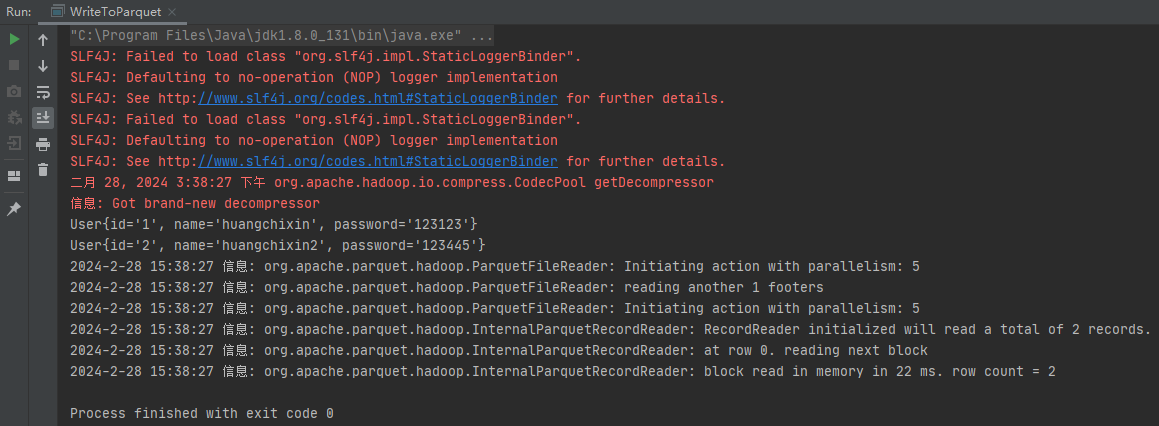

Reading with AvroParquetReader, which requires specifying the object class:

import org.apache.avro.reflect.ReflectData;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.parquet.avro.AvroParquetReader;

import org.apache.parquet.hadoop.ParquetReader;

import java.io.IOException;

public class WriteToParquet {

    public static void main(String[] args) {
        Path dataFile = new Path("output.parquet");

        // try-with-resources closes the reader when the loop ends or fails
        try (ParquetReader<User> reader = AvroParquetReader.<User>builder(dataFile)
                // ReflectData maps each record back onto the User class
                .withDataModel(new ReflectData(User.class.getClassLoader()))
                .disableCompatibility()
                .withConf(new Configuration())
                .build()) {
            User user;
            while ((user = reader.read()) != null) {
                System.out.println(user);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}

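Reading with ParquetFileReader, which only needs a stubbed Hadoop home:

Maven configuration:

<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-core</artifactId>
    <version>1.2.1</version>
</dependency>
<dependency>
    <groupId>org.apache.parquet</groupId>
    <artifactId>parquet-hadoop</artifactId>
    <version>1.8.1</version>
</dependency>
<dependency>
    <groupId>log4j</groupId>
    <artifactId>log4j</artifactId>
    <version>1.2.17</version>
</dependency>
<dependency>
    <groupId>com.google.guava</groupId>
    <artifactId>guava</artifactId>
    <version>11.0.2</version>
</dependency>
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-databind</artifactId>
    <version>2.8.3</version>
</dependency>

Approach 1:

Column entity: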

package com.kestrel;

public class TableHead {

/** Column name. */
private String name;

/** Data type stored in the column. */
private String type;

/** Index of the column. */
private Integer index;

public String getType() {

return type;

}

public void setType(String type) {

this.type = type;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public Integer getIndex() {

return index;

}

public void setIndex(Integer index) {

this.index = index;

}

}

Parquet result entity class:

package com.kestrel;

import java.util.List;

public class TableResult {

/** Header information of the parsed file; currently only valid for arrow and csv files. */
private List<TableHead> columns;

/** Data content. */
private List<?> data;

public List< TableHead> getColumns() {

return columns;

}

public void setColumns(List< TableHead> columns) {

this.columns = columns;

}

public List<?> getData() {

return data;

}

public void setData(List<?> data) {

this.data = data;

}

}

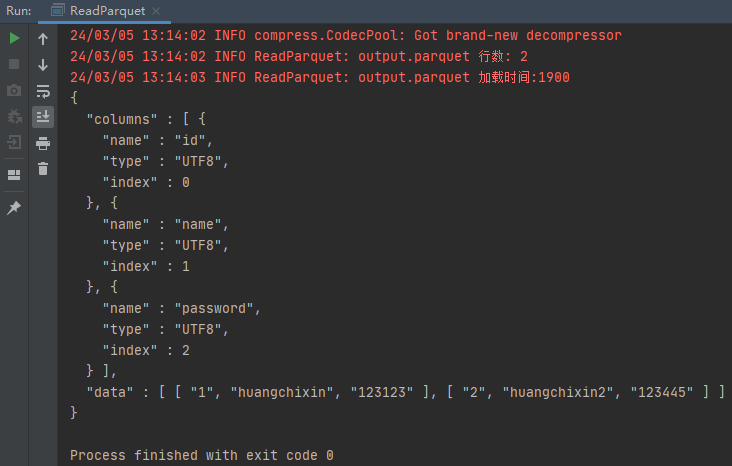

Reading the Parquet file:

import com.fasterxml.jackson.databind.ObjectMapper;

import com.google.common.collect.Lists;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.parquet.column.page.PageReadStore;

import org.apache.parquet.example.data.Group;

import org.apache.parquet.example.data.simple.convert.GroupRecordConverter;

import org.apache.parquet.format.converter.ParquetMetadataConverter;

import org.apache.parquet.hadoop.ParquetFileReader;

import org.apache.parquet.hadoop.metadata.ParquetMetadata;

import org.apache.parquet.io.ColumnIOFactory;

import org.apache.parquet.io.MessageColumnIO;

import org.apache.parquet.io.RecordReader;

import org.apache.parquet.schema.GroupType;

import org.apache.parquet.schema.MessageType;

import org.apache.parquet.schema.OriginalType;

import org.apache.parquet.schema.Type;

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

import java.io.File;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

public class ReadParquet {

    private static final Log logger = LogFactory.getLog(ReadParquet.class);

    public static void main(String[] args) throws Exception {
        TableResult tableResult = parquetReaderV2(new File("output.parquet"));
        ObjectMapper mapper = new ObjectMapper();
        String jsonString = mapper.writerWithDefaultPrettyPrinter()
                .writeValueAsString(tableResult);
        System.out.println(jsonString);
    }

    public static TableResult parquetReaderV2(File file) throws Exception {
        long start = System.currentTimeMillis();
        haddopEnv();
        Path path = new Path(file.getAbsolutePath());
        Configuration conf = new Configuration();
        TableResult table = new TableResult();
        // two-dimensional list: one inner list per row
        List<List<Object>> dataList = Lists.newArrayList();
        ParquetMetadata readFooter = ParquetFileReader.readFooter(conf, path, ParquetMetadataConverter.NO_FILTER);
        MessageType schema = readFooter.getFileMetaData().getSchema();
        ParquetFileReader r = new ParquetFileReader(conf, readFooter.getFileMetaData(), path, readFooter.getBlocks(), schema.getColumns());
        // With org.apache.parquet 1.9.0, create the reader like this instead:
        // ParquetFileReader r = new ParquetFileReader(conf, path, readFooter);
        PageReadStore pages = null;
        try {
            while (null != (pages = r.readNextRowGroup())) {
                final long rows = pages.getRowCount();
                logger.info(file.getName() + " row count: " + rows);
                final MessageColumnIO columnIO = new ColumnIOFactory().getColumnIO(schema);
                final RecordReader<Group> recordReader = columnIO.getRecordReader(pages,
                        new GroupRecordConverter(schema));
                // read exactly `rows` records from this row group
                for (long i = 0; i < rows; i++) {
                    final Group g = recordReader.read();
                    if (table.getColumns() == null) {
                        // set the header columns from the first record
                        table.setColumns(parquetColumn(g));
                    }
                    // extract the row data
                    dataList.add(getparquetData(table.getColumns(), g));
                }
            }
        } finally {
            r.close();
        }
        logger.info(file.getName() + " load time (ms): " + (System.currentTimeMillis() - start));
        table.setData(dataList);
        return table;
    }

    private static List<Object> getparquetData(List<TableHead> columns, Group line) {
        List<Object> row = new ArrayList<>();
        for (int i = 0; i < columns.size(); i++) {
            // reset per column so a failed read yields null, not the previous column's value
            Object cell = null;
            try {
                switch (columns.get(i).getType()) {
                    case "DOUBLE":
                        cell = line.getDouble(i, 0);
                        break;
                    case "FLOAT":
                        cell = line.getFloat(i, 0);
                        break;
                    case "BOOLEAN":
                        cell = line.getBoolean(i, 0);
                        break;
                    case "INT96":
                        cell = line.getInt96(i, 0);
                        break;
                    case "INT64": // PrimitiveTypeName of 64-bit integers
                        cell = line.getLong(i, 0);
                        break;
                    default:
                        cell = line.getValueToString(i, 0);
                }
            } catch (RuntimeException e) {
                // e.g. the field has no value in this record; keep null for the cell
                logger.debug("no value for column " + columns.get(i).getName(), e);
            }
            row.add(cell);
        }
        return row;
    }

    /**
     * Gets the header (column) information of the file.
     *
     * @param line a record whose group type describes the columns
     * @return the column descriptions in field order
     */
    private static List<TableHead> parquetColumn(Group line) {
        List<TableHead> columns = Lists.newArrayList();
        GroupType groupType = line.getType();
        int fieldCount = groupType.getFieldCount();
        for (int i = 0; i < fieldCount; i++) {
            TableHead dto = new TableHead();
            Type type = groupType.getType(i);
            OriginalType originalType = type.getOriginalType();
            // prefer the original (logical) type name; fall back to the primitive type name
            String typeName = originalType != null
                    ? originalType.name()
                    : type.asPrimitiveType().getPrimitiveTypeName().name();
            dto.setIndex(i);
            dto.setName(type.getName());
            dto.setType(typeName);
            columns.add(dto);
        }
        return columns;
    }

    public static void haddopEnv() throws IOException {
        // point hadoop.home.dir at the working directory and create an empty bin/winutils.exe there
        File workaround = new File(".");
        System.getProperties().put("hadoop.home.dir", workaround.getAbsolutePath());
        new File("./bin").mkdirs();
        new File("./bin/winutils.exe").createNewFile();
    }
}
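
haddopEnv() above drops bin/winutils.exe into the current working directory. A variant that keeps the project directory clean is to place the stub in a temporary directory. The sketch below uses only the JDK; `HadoopHomeStub` and `install` are hypothetical names. It assumes, based on the Shell.checkHadoopHome frames in the earlier stack trace, that Hadoop reads the `hadoop.home.dir` system property when its Shell class initializes, so the call would have to happen before any Hadoop class is touched.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

final class HadoopHomeStub {

    /** Creates <tmp>/bin/winutils.exe as an empty file and points hadoop.home.dir at <tmp>. */
    static Path install() {
        try {
            Path home = Files.createTempDirectory("hadoop-home-stub");
            Path bin = Files.createDirectories(home.resolve("bin"));
            Path winutils = bin.resolve("winutils.exe");
            if (Files.notExists(winutils)) {
                Files.createFile(winutils);
            }
            System.setProperty("hadoop.home.dir", home.toAbsolutePath().toString());
            return home;
        } catch (IOException e) {
            // surface the failure without forcing callers to declare IOException
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("hadoop.home.dir = " + install());
    }
}
```

The empty winutils.exe only satisfies the existence check; anything that actually executes winutils would still fail.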

Approach 2:

Row-oriented reading:

import org.apache.avro.Schema;

import org.apache.avro.generic.GenericRecord;

import org.apache.hadoop.fs.Path;

import org.apache.parquet.avro.AvroParquetReader;

import org.apache.parquet.avro.AvroReadSupport;

import org.apache.parquet.hadoop.ParquetReader;

import java.io.File;

import java.io.IOException;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

public class ParquetRecordReader {

    // Reads the Parquet file at the given path, one map per row
    public static List<Map<String, Object>> readParquetFileWithRecord(String filePath) throws IOException {
        // full path of the Parquet file
        Path parquetFilePath = new Path(filePath);
        List<Map<String, Object>> recordList = new ArrayList<>();
        try (ParquetReader<GenericRecord> reader =
                AvroParquetReader.builder(new AvroReadSupport<GenericRecord>(), parquetFilePath).build()) {
            GenericRecord record;
            // iterate over the rows
            while ((record = reader.read()) != null) {
                Map<String, Object> recordMap = new HashMap<>();
                // field definitions of this row
                Schema schema = record.getSchema();
                for (Schema.Field field : schema.getFields()) {
                    // look up the value by field name
                    String name = field.name();
                    recordMap.put(name, record.get(name));
                }
                recordList.add(recordMap);
            }
        }
        return recordList;
    }

    public static void main(String[] args) throws IOException {
        String filePath = "D:\\parquet\\file" + File.separator + "test1.parquet";
        readParquetFileWithRecord(filePath);
    }
}

Column-oriented reading:

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.parquet.Version;

import org.apache.parquet.column.ColumnDescriptor;

import org.apache.parquet.column.ColumnReadStore;

import org.apache.parquet.column.ColumnReader;

import org.apache.parquet.column.impl.ColumnReadStoreImpl;

import org.apache.parquet.column.page.PageReadStore;

import org.apache.parquet.example.data.simple.convert.GroupRecordConverter;

import org.apache.parquet.format.converter.ParquetMetadataConverter;

import org.apache.parquet.hadoop.ParquetFileReader;

import org.apache.parquet.hadoop.metadata.ParquetMetadata;

import org.apache.parquet.schema.MessageType;

import org.apache.parquet.schema.PrimitiveType;

import java.io.File;

import java.io.IOException;

import java.util.*;

public class ParquetColumnReader {

    public static Map<String, List<String>> readParquetFileWithColumn(String filePath) throws IOException {
        Map<String, List<String>> columnMap = new HashMap<>();
        Configuration conf = new Configuration();
        final Path path = new Path(filePath);
        ParquetMetadata readFooter = ParquetFileReader.readFooter(conf, path, ParquetMetadataConverter.NO_FILTER);
        MessageType schema = readFooter.getFileMetaData().getSchema();
        ParquetFileReader r = new ParquetFileReader(conf, readFooter.getFileMetaData(), path, readFooter.getBlocks(), schema.getColumns());
        // ParquetFileReader r = new ParquetFileReader(conf, path, readFooter);
        try {
            // iterate over the row groups
            PageReadStore rowGroup;
            while (null != (rowGroup = r.readNextRowGroup())) {
                // column read store for this row group
                ColumnReadStore colReadStore = new ColumnReadStoreImpl(rowGroup,
                        new GroupRecordConverter(schema).getRootConverter(), schema, Version.FULL_VERSION);
                List<ColumnDescriptor> descriptorList = schema.getColumns();
                // iterate over the columns
                for (ColumnDescriptor colDescriptor : descriptorList) {
                    // column name, rendered from its path components (for example "[id]")
                    String columnName = Arrays.toString(colDescriptor.getPath());
                    ColumnReader colReader = colReadStore.getColumnReader(colDescriptor);
                    // number of values of this column in the current row group
                    long totalValuesInColumnChunk = rowGroup.getPageReader(colDescriptor).getTotalValueCount();
                    // the primitive type decides which getter to call
                    PrimitiveType.PrimitiveTypeName type = colDescriptor.getType();
                    final String name = type.name();
                    // append to the values collected from earlier row groups instead of replacing them
                    List<String> columnList = columnMap.computeIfAbsent(columnName, k -> new ArrayList<>());
                    // iterate over every value in the column
                    for (long i = 0; i < totalValuesInColumnChunk; i++) {
                        String val;
                        if (colReader.getCurrentDefinitionLevel() < colDescriptor.getMaxDefinitionLevel()) {
                            // the value is null at this position
                            val = null;
                        } else if (name.equals("INT32")) {
                            val = String.valueOf(colReader.getInteger());
                        } else if (name.equals("INT64")) {
                            val = String.valueOf(colReader.getLong());
                        } else {
                            val = colReader.getBinary().toStringUsingUTF8();
                        }
                        columnList.add(val);
                        colReader.consume();
                    }
                }
            }
        } finally {
            r.close();
        }
        return columnMap;
    }

    public static void main(String[] args) throws IOException {
        String filePath = "D:\\parquet\\file" + File.separator + "test1.parquet";
        readParquetFileWithColumn(filePath);
    }
}

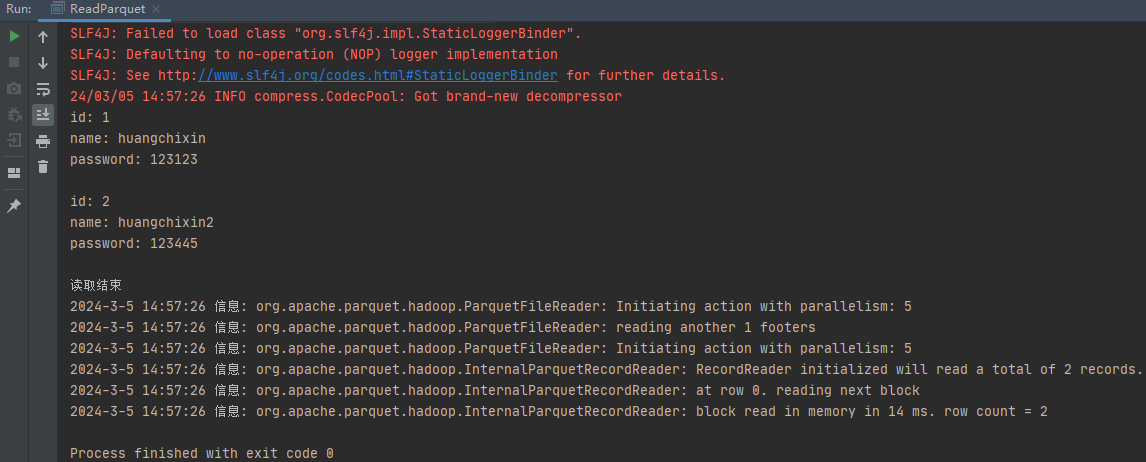
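
The row-oriented reader returns one map per row, while the column-oriented reader returns one list per column. When only the row form is at hand, it can be pivoted into the column form in plain Java. This is a minimal sketch with hypothetical names (`RowColumnPivot`, `toColumns`); it works on ordinary collections and does not touch the Parquet API.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class RowColumnPivot {

    /** Turns a list of rows (name -> value) into columns (name -> values in row order). */
    static Map<String, List<Object>> toColumns(List<Map<String, Object>> rows) {
        Map<String, List<Object>> columns = new LinkedHashMap<>();
        int rowsSeen = 0;
        for (Map<String, Object> row : rows) {
            // create any column first seen in this row, back-filled with nulls for earlier rows
            for (String name : row.keySet()) {
                if (!columns.containsKey(name)) {
                    List<Object> column = new ArrayList<>();
                    for (int i = 0; i < rowsSeen; i++) {
                        column.add(null);
                    }
                    columns.put(name, column);
                }
            }
            // append this row's value (null if absent) to every known column
            for (Map.Entry<String, List<Object>> entry : columns.entrySet()) {
                entry.getValue().add(row.get(entry.getKey()));
            }
            rowsSeen++;
        }
        return columns;
    }

    public static void main(String[] args) {
        List<Map<String, Object>> rows = new ArrayList<>();
        Map<String, Object> first = new LinkedHashMap<>();
        first.put("id", "1");
        first.put("name", "huangchixin");
        rows.add(first);
        Map<String, Object> second = new LinkedHashMap<>();
        second.put("id", "2");
        rows.add(second);
        System.out.println(toColumns(rows));
    }
}
```

Every column ends up with exactly one entry per row, so a value can be matched back to its row by position.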

Reading with ParquetReader:

import org.apache.hadoop.fs.Path;

import org.apache.parquet.example.data.Group;

import org.apache.parquet.hadoop.ParquetReader;

import org.apache.parquet.hadoop.example.GroupReadSupport;

public class ReadParquet {

    public static void main(String[] args) throws Exception {
        parquetReader("output.parquet");
    }

    static void parquetReader(String inPath) throws Exception {
        GroupReadSupport readSupport = new GroupReadSupport();
        ParquetReader<Group> reader = new ParquetReader<>(new Path(inPath), readSupport);
        // In newer versions all ParquetReader constructors appear to be deprecated; build the reader with the builder instead:
        // ParquetReader<Group> reader = ParquetReader.builder(readSupport, new Path(inPath)).build();
        try {
            Group line;
            while ((line = reader.read()) != null) {
                System.out.println(line.toString());
                // System.out.println(line.getString("id", 0));
                // System.out.println(line.getString("name", 0));
                // System.out.println(line.getString("password", 0));
                // For other data types, the following calls can serve as a reference:
                // System.out.println(line.getInteger("intValue", 0));
                // System.out.println(line.getLong("longValue", 0));
                // System.out.println(line.getDouble("doubleValue", 0));
                // System.out.println(line.getString("stringValue", 0));
                // System.out.println(new String(line.getBinary("byteValue", 0).getBytes()));
                // System.out.println(new String(line.getBinary("byteNone", 0).getBytes()));
            }
        } finally {
            reader.close();
        }
        System.out.println("Finished reading");
    }
}
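
The commented-out lines above turn binary fields into text with `new String(bytes)`, which uses the platform default charset. If the bytes were written as UTF-8, decoding with an explicit charset keeps the result the same on every machine. A minimal sketch, assuming the stored bytes are UTF-8 text; `BinaryText` and `decodeUtf8` are hypothetical helper names.

```java
import java.nio.charset.StandardCharsets;

final class BinaryText {

    /** Decodes raw bytes as UTF-8 regardless of the platform default charset. */
    static String decodeUtf8(byte[] raw) {
        return new String(raw, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // stands in for the bytes returned by line.getBinary(...).getBytes()
        byte[] stored = "caf\u00e9".getBytes(StandardCharsets.UTF_8);
        System.out.println(decodeUtf8(stored));
    }
}
```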

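Writing with ExampleParquetWriter: the Spring Boot way

Reference: "Parquet File Testing (Part 2): Generating and Parsing Parquet Files in Java"

First define a structure. The generated Parquet file will store content with the following structure: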

import lombok.Data;

/**
 * Test structure.
 * Each property is a table field.
 */

@Data

public class TestEntity {

private int intValue;

private long longValue;

private double doubleValue;

private String stringValue;

private byte[] byteValue;

private byte[] byteNone;

}

The test code that generates the Parquet file is as follows:

import lombok.RequiredArgsConstructor;

import org.apache.hadoop.fs.Path;

import org.apache.parquet.column.ParquetProperties;

import org.apache.parquet.example.data.Group;

import org.apache.parquet.example.data.simple.SimpleGroupFactory;

import org.apache.parquet.hadoop.ParquetFileWriter;

import org.apache.parquet.hadoop.ParquetWriter;

import org.apache.parquet.hadoop.example.ExampleParquetWriter;

import org.apache.parquet.hadoop.metadata.CompressionCodecName;

import org.apache.parquet.io.api.Binary;

import org.apache.parquet.schema.MessageType;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.stereotype.Component;

import java.io.IOException;

@Component
@RequiredArgsConstructor
public class TestComponent {

    @Autowired
    @Qualifier("testMessageType")
    private MessageType testMessageType;

    private static final String javaDirPath = "D:\\tmp\\";

    /**
     * Writes the entity to a Parquet file.
     */
    public void javaWriteToParquet(TestEntity testEntity) throws IOException {
        String filePath = javaDirPath + System.currentTimeMillis() + ".parquet";
        // try-with-resources closes the writer even if the write fails
        try (ParquetWriter<Group> parquetWriter = ExampleParquetWriter.builder(new Path(filePath))
                .withWriteMode(ParquetFileWriter.Mode.CREATE)
                .withWriterVersion(ParquetProperties.WriterVersion.PARQUET_1_0)
                .withCompressionCodec(CompressionCodecName.SNAPPY)
                .withType(testMessageType).build()) {
            // write the data
            SimpleGroupFactory simpleGroupFactory = new SimpleGroupFactory(testMessageType);
            Group group = simpleGroupFactory.newGroup();
            group.add("intValue", testEntity.getIntValue());
            group.add("longValue", testEntity.getLongValue());
            group.add("doubleValue", testEntity.getDoubleValue());
            group.add("stringValue", testEntity.getStringValue());
            group.add("byteValue", Binary.fromConstantByteArray(testEntity.getByteValue()));
            group.add("byteNone", Binary.EMPTY);
            parquetWriter.write(group);
        }
    }
}

Here too, the test case is created with SpringBootTest. Next, configure the Parquet structure:

import org.apache.parquet.schema.LogicalTypeAnnotation;

import org.apache.parquet.schema.MessageType;

import org.apache.parquet.schema.Types;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import static org.apache.parquet.schema.PrimitiveType.PrimitiveTypeName.*;

@Configuration
public class TestConfiguration {

    @Bean("testMessageType")
    public MessageType testMessageType() {
        Types.MessageTypeBuilder messageTypeBuilder = Types.buildMessage();
        messageTypeBuilder.required(INT32).named("intValue");
        messageTypeBuilder.required(INT64).named("longValue");
        messageTypeBuilder.required(DOUBLE).named("doubleValue");
        messageTypeBuilder.required(BINARY).as(LogicalTypeAnnotation.stringType()).named("stringValue");
        messageTypeBuilder.required(BINARY).as(LogicalTypeAnnotation.bsonType()).named("byteValue");
        messageTypeBuilder.required(BINARY).as(LogicalTypeAnnotation.bsonType()).named("byteNone");
        return messageTypeBuilder.named("test");
    }
}

Next, run the test method to generate the Parquet file:

import com.hui.ParquetConvertApplication;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

import javax.annotation.Resource;

import java.io.IOException;

import java.nio.charset.StandardCharsets;

@RunWith(SpringRunner.class)

@SpringBootTest(classes = ParquetConvertApplication.class)

public class TestConvertTest {

@Resource

private TestComponent testComponent;

@Test

public void startTest() throws IOException {

TestEntity testEntity = new TestEntity();

testEntity.setIntValue(100);
