```python
        print('showId ', show_id)
        print('type of result', type(position_result))
        total_count = position_result['totalCount']
        # No job matches the query: return immediately
        if total_count == 0:
            return
        # Compute the page count only from the first page's response; recomputing it
        # on every page would reset the countdown below and the loop would never end
        if first == 'true':
            remain_page_count = math.ceil(total_count / JOBS_COUNT_ONE_PAGE)
        result = position_result['result']
        for item in result:
            position_id = item['positionId']
            job_name = item['positionName']
            job_advantage = item['positionAdvantage']
            job_salary = item['salary']
            publish_time = item['createTime']
            company_id = item['companyId']
            company_name = item['companyFullName']
            company_labels = item['companyLabelList']
            company_size = item['companySize']
            jobs_id.append(position_id)
            jobs_name.append(job_name)
            jobs_advantage.append(job_advantage)
            jobs_salary.append(job_salary)
            jobs_publish_time.append(publish_time)
            companies_name.append(company_name)
            companies_id.append(company_id)
            companies_labels.append(company_labels)
            companies_size.append(company_size)
        remain_page_count = remain_page_count - 1
        page_number = page_number + 1
        first = 'false'

    # Save the basic job information and the company information to CSV files
    job_df = pd.DataFrame(
        {'job_id': jobs_id, 'job_name': jobs_name, 'job_advantage': jobs_advantage, 'salary': jobs_salary,
         'publish_time': jobs_publish_time, 'company_id': companies_id})
    company_df = pd.DataFrame({'company_id': companies_id, 'company_name': companies_name, 'labels': companies_labels,
                               'size': companies_size})
    job_df.to_csv(job_info_file, mode='w', header=True, index=False)
    company_df.to_csv(company_info_file, mode='w', header=True, index=False)
    return show_id
```
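The pagination above can be tried out as a standalone loop with a stubbed fetch function. This is a minimal sketch under three assumptions. The page size of 15 is assumed, because the real `JOBS_COUNT_ONE_PAGE` value is not shown here. `fetch_page(page_number, first)` is a hypothetical stand-in for the Lagou request. The sample jobs are placeholders. The loop computes the page count once, from the first response, and stops when the countdown reaches zero.

```python
import math

# Assumption: 15 jobs per page (the source's JOBS_COUNT_ONE_PAGE value is not shown)
JOBS_COUNT_ONE_PAGE = 15


def collect_all_pages(fetch_page, page_size=JOBS_COUNT_ONE_PAGE):
    """Fetch every result page, computing the page count only once.

    fetch_page(page_number, first) is a hypothetical callable that returns a
    dict shaped like positionResult: {'totalCount': int, 'result': [...]}.
    """
    items = []
    page_number = 1
    remain_page_count = 1  # one request is needed before totalCount is known
    first = True
    while remain_page_count > 0:
        position_result = fetch_page(page_number, first)
        total_count = position_result['totalCount']
        if total_count == 0:
            return items
        if first:
            # Computed once; recomputing on every page would reset the countdown
            remain_page_count = math.ceil(total_count / page_size)
        items.extend(position_result['result'])
        remain_page_count -= 1
        page_number += 1
        first = False
    return items


# Placeholder data: 32 fake jobs served 15 at a time
ALL_JOBS = [{'positionId': i} for i in range(32)]
requested = []


def fake_fetch(page_number, first):
    requested.append(page_number)
    start = (page_number - 1) * JOBS_COUNT_ONE_PAGE
    return {'totalCount': len(ALL_JOBS), 'result': ALL_JOBS[start:start + JOBS_COUNT_ONE_PAGE]}


jobs = collect_all_pages(fake_fetch)
print(len(jobs), requested)  # 32 [1, 2, 3]
```

Passing the fetch function in as a parameter keeps the page arithmetic testable without any network access.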

```python
def get_and_store_job_info(show_id: str):
    """Visit each job page using the saved job_id values and store the job information in CSV files."""
    jobs_detail = []
    PAGE_SIZE = 500
    comments_content = []
    comments_time = []
    users_id = []
    company_scores = []
    interviewer_scores = []
    describe_scores = []
    comprehensive_scores = []
    useful_counts = []
    tags = []
    # Read job_id values from the CSV file and combine each with show_id into a job page URL
    df = pd.read_csv(job_info_file)
    jobs_id = df['job_id']
    for job_id in jobs_id:
        user_agent = get_user_agent()
        session = requests.session()
        job_page_url = 'https://www.lagou.com/jobs/' + str(job_id) + '.html?show=' + show_id
        # Visit the job page to get the job description
        r = request_page_result(url=job_page_url, session=session, user_agent=user_agent)
        doc = pq(r.text)
        job_detail = doc('#job_detail > dd.job_bt > div').text()
        print('job_detail', job_detail)
        jobs_detail.append(job_detail)
        # Fetch the interview reviews for this job
        review_ajax_url = 'https://www.lagou.com/interview/experience/byPosition.json'
        data = {
            'positionId': job_id,
            'pageSize': PAGE_SIZE,
        }
        response = request_ajax_result(review_ajax_url, job_page_url, session, user_agent, data)
        response_json = response.json()
        print('response json', response_json)
        if response_json['content']['data']['data']['totalCount'] != 0:
            # Position in the accumulated lists where this job's reviews begin
            start = len(comments_content)
            result = response_json['content']['data']['data']['result']
            for item in result:
                comment_content = item['content']
                comment_time = item['createTime']
                user_id = item['userId']
                company_score = item['companyScore']
                interviewer_score = item['interviewerScore']
                describe_score = item['describeScore']
                comprehensive_score = item['comprehensiveScore']
                useful_count = item['usefulCount']
                tag = item['tags']
                print('content', comment_content)
                comments_content.append(comment_content)
                comments_time.append(comment_time)
                users_id.append(user_id)
                company_scores.append(company_score)
                interviewer_scores.append(interviewer_score)
                describe_scores.append(describe_score)
                comprehensive_scores.append(comprehensive_score)
                useful_counts.append(useful_count)
                tags.append(tag)
            # Append only this job's reviews; appending the whole accumulated list
            # would write earlier jobs' reviews again under the current job_id
            job_comments = comments_content[start:]
            j_ids = [job_id] * len(job_comments)
            comment_df = pd.DataFrame({'job_id': j_ids, 'content': job_comments})
            comment_df.to_csv(comment_info_file, mode='a', header=False, index=False)
    # Store the retrieved job descriptions in the job CSV file
    df['job_detail'] = jobs_detail
    df.to_csv(job_info_file, index=False)
```
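The review-handling step can be separated from the network and from pandas. This is a minimal sketch that assumes the `['content']['data']['data']` nesting the code above reads from `byPosition.json`. `extract_reviews`, `append_reviews` and the sample responses are hypothetical. The sketch uses the standard-library `csv` module instead of pandas, so each job's reviews are tagged and appended exactly once.

```python
import csv
import io


def extract_reviews(job_id, response_json):
    """Turn one byPosition.json response into rows tagged with job_id.

    The nesting and the field names follow the source code; everything else
    here is illustrative.
    """
    payload = response_json['content']['data']['data']
    if payload['totalCount'] == 0:
        return []
    return [
        {'job_id': job_id, 'content': item['content'], 'createTime': item['createTime']}
        for item in payload['result']
    ]


def append_reviews(fileobj, rows):
    """Append rows without a header, like to_csv(mode='a', header=False)."""
    writer = csv.DictWriter(fileobj, fieldnames=['job_id', 'content', 'createTime'])
    writer.writerows(rows)


# Hypothetical responses for two jobs, for illustration only
responses = {
    101: {'content': {'data': {'data': {'totalCount': 2, 'result': [
        {'content': 'good', 'createTime': '2020-01-01'},
        {'content': 'ok', 'createTime': '2020-01-02'}]}}}},
    102: {'content': {'data': {'data': {'totalCount': 0, 'result': []}}}},
}

out = io.StringIO()
for job_id, response_json in responses.items():
    # Only this job's rows are written, so no review repeats under another job_id
    append_reviews(out, extract_reviews(job_id, response_json))
print(out.getvalue())
```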

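```python
def request_page_result(url, session, user_agent):
    """GET a page; if the connection fails, retry once with a new User-Agent through a proxy."""
    try:
        r = session.get(url, headers={
            'User-Agent': user_agent
        })
    # requests wraps urllib3's MaxRetryError in requests.exceptions.ConnectionError,
    # so an `except MaxRetryError` clause would never run
    except requests.exceptions.ConnectionError as e:
        print(e)
        user_agent = get_user_agent()
        r = session.get(url, headers={
            'User-Agent': user_agent
        }, proxies=get_proxy())
    # Return only the response: the caller assigns it to r and reads r.text
    return r


def request_ajax_result(ajax_url, page_url, session, user_agent, data):
    """POST to an Ajax endpoint; if the connection fails, retry once with a new User-Agent through a proxy."""
    try:
        result = session.post(ajax_url, headers=get_ajax_header(page_url, user_agent), data=data,
                              allow_redirects=False)
    except requests.exceptions.ConnectionError as e:
        print(e)
        user_agent = get_user_agent()
        result = session.post(ajax_url, headers=get_ajax_header(page_url, user_agent),
                              proxies=get_proxy(),
                              data=data, allow_redirects=False)
    return result
```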
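Both request functions follow the same pattern: try directly, and on a connection error retry once with a fresh User-Agent through a proxy. A single helper could express that pattern once. This is a hedged sketch rather than the author's code. `send`, `pick_user_agent` and `pick_proxy` are hypothetical callables standing in for `session.get`/`session.post`, `get_user_agent` and `get_proxy`. The default `retry_on=(OSError,)` works with requests because its exceptions derive from `IOError`, which is `OSError` in Python 3.

```python
def request_with_fallback(send, pick_user_agent, pick_proxy, retry_on=(OSError,)):
    """Call send(user_agent, proxies); on failure, retry once with a new User-Agent via a proxy.

    send, pick_user_agent and pick_proxy are hypothetical callables; proxies=None
    means "no proxy" for the first attempt.
    """
    try:
        return send(pick_user_agent(), None)
    except retry_on as e:
        print('first attempt failed:', e)
        return send(pick_user_agent(), pick_proxy())


# Fake sender: the direct attempt fails, the proxied attempt succeeds
calls = []


def flaky_send(user_agent, proxies):
    calls.append(proxies)
    if proxies is None:
        raise ConnectionError('direct connection refused')
    return 'ok via ' + proxies['http']


result = request_with_fallback(
    flaky_send,
    pick_user_agent=lambda: 'test-agent',
    pick_proxy=lambda: {'http': 'http://127.0.0.1:8080'},
)
print(result)  # ok via http://127.0.0.1:8080
```

With requests, `send` could be `lambda ua, proxies: session.get(url, headers={'User-Agent': ua}, proxies=proxies)`. Passing `proxies=None` is the library default, so both attempts can share one call shape.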

```python
def get_page_header():
    """Headers used to request Lagou pages."""
    page_header = {
        'User-Agent': get_user_agent()
    }
    return page_header


def get_ajax_header(url, user_agent):
    """Headers used to request Lagou Ajax JSON endpoints; url becomes the Referer."""
    ajax_header = {
        'Accept': 'application/json, text/javascript, */*; q=0.01',
        # Only encodings that requests can always decode (Brotli needs an extra package)
        'Accept-Encoding': 'gzip, deflate',
        'Accept-Language': 'zh-CN,zh;q=0.9',
        'Connection': 'keep-alive',
        'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',
        'Host': 'www.lagou.com',
        'Origin': 'https://www.lagou.com',
        'Referer': url,
        'Sec-Fetch-Mode': 'cors',
        'Sec-Fetch-Site': 'same-origin',
        'User-Agent': user_agent,
        'X-Anit-Forge-Code': '0',
        'X-Anit-Forge-Token': 'None',
        'X-Requested-With': 'XMLHttpRequest'
    }
    return ajax_header
```
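A `Content-Length` header must equal the exact byte length of the request body, so a fixed value such as `'25'` only fits one particular form. When a dict is passed as `data=`, requests URL-encodes it and sets `Content-Length` from the encoded body itself. The sketch below uses the standard-library `urlencode` to show that the length changes with `positionId`. The `positionId` values are hypothetical, and `pageSize` 500 matches `PAGE_SIZE` above.

```python
from urllib.parse import urlencode

# Hypothetical positionId; pageSize 500 matches PAGE_SIZE in get_and_store_job_info
form = {'positionId': 1234567, 'pageSize': 500}
body = urlencode(form)
print(body)                        # positionId=1234567&pageSize=500
print(len(body.encode('utf-8')))   # 31

# A shorter id gives a shorter body, so no single fixed length fits every request
print(len(urlencode({'positionId': 123, 'pageSize': 500})))  # 27
```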

```python
def get_user_agent():
    """Return a randomly chosen User-Agent string."""
    user_agent = [
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1',
        # One User-Agent split over two adjacent literals, which Python joins into one string
        'Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 '
        'Safari/536.11',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6'
    ]
    return random.choice(user_agent)
```
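Python joins adjacent string literals that have no comma between them. That is handy for wrapping one long User-Agent across lines, but it silently merges two entries when a comma is forgotten. A hypothetical `check_user_agents` helper can flag merged entries by counting `Mozilla/` prefixes. The shortened agent strings below are placeholders.

```python
def check_user_agents(agents):
    """Return entries that look like two User-Agent strings glued together."""
    return [ua for ua in agents if ua.count('Mozilla/') != 1]


broken = [
    'Mozilla/5.0 (A) Chrome/22.0'   # no comma here, so this literal merges with the next
    'Mozilla/5.0 (B) Chrome/20.0 '
    'Safari/536.11',                # deliberate continuation of the (B) string
    'Mozilla/5.0 (C) Chrome/20.0',
]
print(len(broken))               # 2
print(check_user_agents(broken))
```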

```python
def get_proxy():
    """Request a proxy from the proxy pool service at PROXY_POOL_URL."""
    response = requests.get(PROXY_POOL_URL)
```
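The rest of `get_proxy` is not included here. The retry code passes its result as `proxies=`, so it is expected to return a requests-style mapping. The sketch below assumes the proxy pool answers with plain-text `host:port`. That format is an assumption, not something the source states. `to_requests_proxies` is a hypothetical helper that turns that text into the `{'http': ..., 'https': ...}` mapping requests accepts.

```python
def to_requests_proxies(address):
    """Build a requests-style proxies mapping from 'host:port' text (assumed format).

    Returns None for an empty answer, which requests treats as "no proxy".
    """
    address = address.strip()
    if not address:
        return None
    proxy_url = address if '://' in address else 'http://' + address
    return {'http': proxy_url, 'https': proxy_url}


print(to_requests_proxies('127.0.0.1:8080\n'))
# {'http': 'http://127.0.0.1:8080', 'https': 'http://127.0.0.1:8080'}
```

Under that assumption, `get_proxy` could end with `return to_requests_proxies(response.text)`. A pool that answers with JSON would need its own parsing instead.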

如果你也是看准了Python,想自学Python,在这里为大家准备了丰厚的免费学习大礼包,带大家一起学习,给大家剖析Python兼职、就业行情前景的这些事儿。

一、Python所有方向的学习路线

Python所有方向路线就是把Python常用的技术点做整理,形成各个领域的知识点汇总,它的用处就在于,你可以按照上面的知识点去找对应的学习资源,保证自己学得较为全面。

二、学习软件

工欲善其必先利其器。学习Python常用的开发软件都在这里了,给大家节省了很多时间。

三、全套PDF电子书

书籍的好处就在于权威和体系健全,刚开始学习的时候你可以只看视频或者听某个人讲课,但等你学完之后,你觉得你掌握了,这时候建议还是得去看一下书籍,看权威技术书籍也是每个程序员必经之路。

四、入门学习视频

我们在看视频学习的时候,不能光动眼动脑不动手,比较科学的学习方法是在理解之后运用它们,这时候练手项目就很适合了。

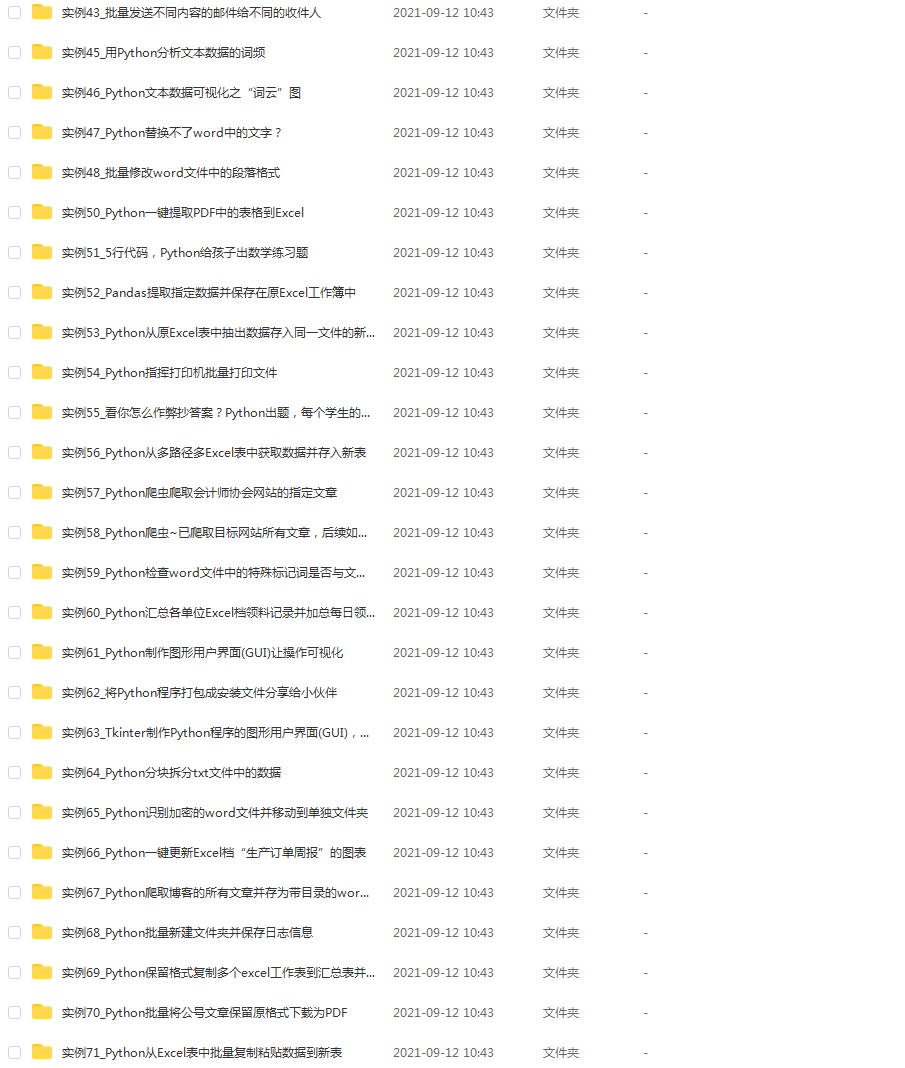

四、实战案例

光学理论是没用的,要学会跟着一起敲,要动手实操,才能将自己的所学运用到实际当中去,这时候可以搞点实战案例来学习。

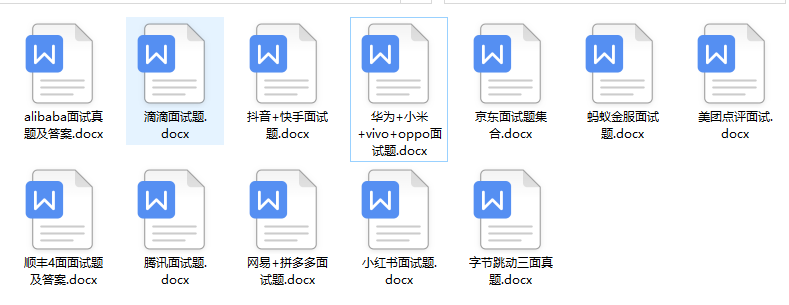

五、面试资料

我们学习Python必然是为了找到高薪的工作,下面这些面试题是来自阿里、腾讯、字节等一线互联网大厂最新的面试资料,并且有阿里大佬给出了权威的解答,刷完这一套面试资料相信大家都能找到满意的工作。

成为一个Python程序员专家或许需要花费数年时间,但是打下坚实的基础只要几周就可以,如果你按照我提供的学习路线以及资料有意识地去实践,你就有很大可能成功!

最后祝你好运!!!

网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。

一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

7529

7529

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?