Version : 1.2

Creation Time : Tue Apr 21 15:55:29 2020

Raid Level : raid0

Array Size : 41906176 (39.96 GiB 42.91 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Tue Apr 21 15:55:29 2020

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : localhost.localdomain:0 (local to host localhost.localdomain)

UUID : 4b439b50:63314c34:0fb14c51:c9930745

Events : 0

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

2. Format the array

[root@localhost ~]# mkfs.xfs /dev/md0

meta-data=/dev/md0 isize=512 agcount=16, agsize=654720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=10475520, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@localhost ~]# blkid /dev/md0

/dev/md0: UUID="13a0c896-5e79-451f-b6f1-b04b79c1bc40" TYPE="xfs"

3. Mount the formatted array

[root@localhost ~]# mkdir /raid0 // create the mount point

[root@localhost ~]# mount /dev/md0 /raid0/ // mount /dev/md0 on /raid0

[root@localhost ~]# df -h // check that the mount succeeded

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 17G 11G 6.7G 61% /

devtmpfs 1.1G 0 1.1G 0% /dev

tmpfs 1.1G 0 1.1G 0% /dev/shm

…

/dev/md0 40G 33M 40G 1% /raid0

## RAID 1 Experiment

1. Create RAID 1

[root@localhost ~]# mdadm -C -v /dev/md1 -l 1 -n 2 /dev/sdd1 /dev/sde1 -x 1 /dev/sdb1

// Create the RAID 1 array /dev/md1 from two active members, sdd1 and sde1 (-l 1 -n 2), with sdb1 as a hot spare (-x 1)

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: /dev/sdb1 appears to be part of a raid array:

level=raid0 devices=2 ctime=Tue Apr 21 15:55:29 2020

mdadm: size set to 20953088K

Continue creating array? y

mdadm: Fail create md1 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

2. Check the RAID 1 status

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdb1[2](S) sde1[1] sdd1[0]

20953088 blocks super 1.2 [2/2] [UU]

[==========>…] resync = 54.4% (11407360/20953088) finish=0.7min speed=200040K/sec

unused devices: <none>
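The resync percentage shown in /proc/mdstat can also be extracted by a script. The following is a minimal sketch, assuming the mdstat layout shown above (array name at the start of a line, indented detail lines below it); the function name `md_progress` is hypothetical, and only `resync` and `recovery` lines are handled.

```shell
# md_progress ARRAY
# Read /proc/mdstat-style text on stdin and print the resync/recovery
# percentage for ARRAY (e.g. md1), or "idle" if no such line is found.
md_progress() {
    local array="$1"
    awk -v name="$array" '
        # Entry for the requested array, e.g. "md1 : active raid1 ..."
        $1 == name && $2 == ":" { in_array = 1; next }
        # Any other non-indented line ends the entry
        in_array && /^[^ \t]/ { in_array = 0 }
        in_array && match($0, /(resync|recovery) *= *[0-9.]+%/) {
            s = substr($0, RSTART, RLENGTH)
            sub(/^.*= */, "", s)    # keep only the number and the % sign
            print s
            found = 1
            exit
        }
        END { if (!found) print "idle" }
    '
}
```

On a live system it would be called as `md_progress md1 < /proc/mdstat`.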

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Tue Apr 21 16:11:16 2020

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Tue Apr 21 16:12:34 2020

State : clean, resyncing

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1

Consistency Policy : resync

Resync Status : 77% complete

Name : localhost.localdomain:1 (local to host localhost.localdomain)

UUID : 98b76e6e:b6390011:26a822a8:3dcc4cc9

Events : 12

Number Major Minor RaidDevice State

0 8 49 0 active sync /dev/sdd1

1 8 65 1 active sync /dev/sde1

2 8 17 - spare /dev/sdb1

3. Format and mount

[root@localhost ~]# mkfs.xfs /dev/md1

[root@localhost ~]# blkid /dev/md1

/dev/md1: UUID="18a8f33b-1bb6-43c2-8dfc-2b21a871961a" TYPE="xfs"

[root@localhost ~]# mkdir /raid1

[root@localhost ~]# mount /dev/md1 /raid1/

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 17G 11G 6.7G 61% /

…

/dev/md1 20G 33M 20G 1% /raid1

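4. Simulate a disk failure

After /dev/sdd1 is marked as faulty, the detailed RAID 1 information shows that the spare /dev/sdb1 automatically takes over from the failed disk.

[root@localhost ~]# mdadm /dev/md1 -f /dev/sdd1

[root@localhost ~]# mdadm -D /dev/md1 // inspect the array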

/dev/md1:

Version : 1.2

Creation Time : Tue Apr 21 16:11:16 2020

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Tue Apr 21 16:29:38 2020

State : clean, degraded, recovering // rebuilding automatically

Active Devices : 1

Working Devices : 2

Failed Devices : 1 // the failed disk

Spare Devices : 1 // number of spare devices

Consistency Policy : resync

Rebuild Status : 46% complete

Name : localhost.localdomain:1 (local to host localhost.localdomain)

UUID : 98b76e6e:b6390011:26a822a8:3dcc4cc9

Events : 26

Number Major Minor RaidDevice State

2 8 17 0 spare rebuilding /dev/sdb1

1 8 65 1 active sync /dev/sde1

0 8 49 - faulty /dev/sdd1

The spare is automatically replacing the failed disk. Wait a few minutes, then check the RAID 1 details again:

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Tue Apr 21 16:11:16 2020

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Tue Apr 21 16:30:39 2020

State : clean // clean: the replacement has completed

Active Devices : 2

Working Devices : 2

Failed Devices : 1 // the failed disk

Spare Devices : 0 // no spare devices remain

Consistency Policy : resync

Name : localhost.localdomain:1 (local to host localhost.localdomain)

UUID : 98b76e6e:b6390011:26a822a8:3dcc4cc9

Events : 36

Number Major Minor RaidDevice State

2 8 17 0 active sync /dev/sdb1

1 8 65 1 active sync /dev/sde1

0 8 49 - faulty /dev/sdd1
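Rather than re-running `mdadm -D` by hand until the rebuild is over, the State line can be checked in a loop. The sketch below assumes the `mdadm -D` layout shown above; the helper names `md_state` and `md_is_rebuilding` are hypothetical, and the `//` stripping only exists to cope with the annotations used in this article.

```shell
# md_state
# Read `mdadm -D` output on stdin and print the value of the "State :"
# line, for example "clean, degraded, recovering".
md_state() {
    awk -F':' '$1 ~ /^[ \t]*State[ \t]*$/ {
        v = $0
        sub(/^[^:]*:[ \t]*/, "", v)    # drop the "State :" label
        sub(/[ \t]*\/\/.*$/, "", v)    # drop a trailing // annotation, if any
        sub(/[ \t]+$/, "", v)          # drop trailing whitespace
        print v
        exit
    }'
}

# md_is_rebuilding
# Succeed (exit status 0) while the state reports a resync or recovery.
md_is_rebuilding() {
    case "$(md_state)" in
        *recovering*|*resyncing*) return 0 ;;
        *) return 1 ;;
    esac
}
```

A polling loop could then look like `while mdadm -D /dev/md1 | md_is_rebuilding; do sleep 10; done`. mdadm also has a `--wait` (`-W`) option that blocks until resync or recovery finishes, which may be simpler where it fits.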

5. Remove the failed disk

[root@localhost ~]# mdadm -r /dev/md1 /dev/sdd1

mdadm: hot removed /dev/sdd1 from /dev/md1

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Tue Apr 21 16:11:16 2020

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Tue Apr 21 16:38:32 2020

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0 // the failed disk has been removed, so it is no longer counted

Spare Devices : 0

Consistency Policy : resync

Name : localhost.localdomain:1 (local to host localhost.localdomain)

UUID : 98b76e6e:b6390011:26a822a8:3dcc4cc9

Events : 37

Number Major Minor RaidDevice State

2 8 17 0 active sync /dev/sdb1

1 8 65 1 active sync /dev/sde1

6. Add a new disk to the RAID 1 array

[root@localhost ~]# mdadm -a /dev/md1 /dev/sdc1

// add /dev/sdc1 to the RAID 1 array as a spare device

mdadm: added /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Tue Apr 21 16:11:16 2020

Raid Level : raid1

Array Size : 20953088 (19.98 GiB 21.46 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Tue Apr 21 16:40:20 2020

State : clean

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1 // the newly added disk now appears as a spare

Consistency Policy : resync

Name : localhost.localdomain:1 (local to host localhost.localdomain)

UUID : 98b76e6e:b6390011:26a822a8:3dcc4cc9

Events : 38

Number Major Minor RaidDevice State

2 8 17 0 active sync /dev/sdb1

1 8 65 1 active sync /dev/sde1

3 8 33 - spare /dev/sdc1

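**Note:**

* A newly added disk must be at least as large as the existing members; ideally it is the same size.

* If the array is short of working members (for example, a RAID 1 with only one working disk, or a three-disk RAID 5 with only two), the new disk becomes an active member immediately. If the array is healthy, the new disk becomes a hot spare.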
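The second rule in the note can be written as a small decision function. This is only an illustrative sketch; `added_disk_role` is a hypothetical helper, and its two arguments correspond to the "Raid Devices" and "Active Devices" fields of `mdadm -D`.

```shell
# added_disk_role RAID_DEVICES ACTIVE_DEVICES
# Print "active" if the array is short of working members (the new disk
# is pulled in and rebuilt right away), otherwise print "spare".
added_disk_role() {
    local raid_devices="$1" active_devices="$2"
    if [ "$active_devices" -lt "$raid_devices" ]; then
        echo "active"
    else
        echo "spare"
    fi
}
```

For the array above (2 RAID devices, 2 active), `added_disk_role 2 2` prints `spare`, which matches the role /dev/sdc1 received.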

7. Stop the RAID array

Unmount the array before stopping it. Once the array is stopped, its device node (/dev/md1 here) is removed automatically.

[root@localhost ~]# umount /dev/md1

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 17G 11G 6.7G 61% /

devtmpfs 1.1G 0 1.1G 0% /dev

tmpfs 1.1G 0 1.1G 0% /dev/shm

tmpfs 1.1G 9.7M 1.1G 1% /run

tmpfs 1.1G 0 1.1G 0% /sys/fs/cgroup

/dev/sda1 1014M 130M 885M 13% /boot

overlay 17G 11G 6.7G 61% /var/lib/docker/overlay2/2131dc663296fd193837265e88fa5c9c62b9bfd924303381cea8b4c39c652c84/merged

shm 64M 0 64M 0% /var/lib/docker/containers/436f7e6619c1805553ea71d800fd49ab08843cef6ed162acb35b4c32064ea449/mounts/shm

tmpfs 211M 0 211M 0% /run/user/0

[root@localhost ~]# mdadm -S /dev/md1

mdadm: stopped /dev/md1

[root@localhost ~]# ls /dev/md1

ls: cannot access /dev/md1: No such file or directory
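The teardown order can be collected in one place. The sketch below only prints the commands instead of running them, so it is safe to try; `print_teardown` is a hypothetical helper. The final `mdadm --zero-superblock` step is an addition not used in this article: it erases the md metadata on a former member, which should avoid the "appears to be part of a raid array" prompt when the partition is reused, and it must only be applied to disks whose array is no longer needed.

```shell
# print_teardown ARRAY MOUNTPOINT MEMBER...
# Print (do not run) the commands that unmount and stop an array and
# then wipe the md metadata from its former member partitions.
print_teardown() {
    local array="$1" mountpoint="$2" member
    shift 2
    echo "umount $mountpoint"
    echo "mdadm -S $array"
    for member in "$@"; do
        echo "mdadm --zero-superblock $member"
    done
}
```

For example, `print_teardown /dev/md1 /raid1 /dev/sdb1 /dev/sde1 /dev/sdc1` lists the five commands in the order they would be run.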

## RAID 5 Experiment

1. Create RAID 5

[root@localhost ~]# mdadm -C -v /dev/md5 -l 5 -n 3 /dev/sdb1 /dev/sdc1 /dev/sdd1 -x 1 /dev/sde1

// Create the RAID 5 array /dev/md5 from three active members, sdb1, sdc1 and sdd1 (-l 5 -n 3), with sde1 as a hot spare (-x 1)

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: /dev/sdb1 appears to be part of a raid array:

level=raid1 devices=2 ctime=Tue Apr 21 16:11:16 2020

mdadm: /dev/sdc1 appears to be part of a raid array:

level=raid1 devices=2 ctime=Tue Apr 21 16:11:16 2020

mdadm: /dev/sdd1 appears to be part of a raid array:

level=raid1 devices=2 ctime=Tue Apr 21 16:11:16 2020

mdadm: /dev/sde1 appears to be part of a raid array:

level=raid1 devices=2 ctime=Tue Apr 21 16:11:16 2020

mdadm: size set to 20953088K

Continue creating array? y

mdadm: Fail create md5 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.

2. View the RAID 5 array information

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]

md5 : active raid5 sdd1[4] sde1[3](S) sdc1[1] sdb1[0]

41906176 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Tue Apr 21 16:56:09 2020

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Tue Apr 21 16:59:56 2020

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1 // one spare device

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 422363cb:e7fd4d3a:aaf61344:9bdd00b3

Events : 18

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

3 8 65 - spare /dev/sde1

3. Simulate a disk failure

[root@localhost ~]# mdadm -f /dev/md5 /dev/sdb1

mdadm: set /dev/sdb1 faulty in /dev/md5 // sdb1 is now marked as faulty

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Tue Apr 21 16:56:09 2020

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Tue Apr 21 17:04:36 2020

State : clean, degraded, recovering // the spare is taking over automatically

Active Devices : 2

Working Devices : 3

Failed Devices : 1

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 16% complete

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 422363cb:e7fd4d3a:aaf61344:9bdd00b3

Events : 22

Number Major Minor RaidDevice State

3 8 65 0 spare rebuilding /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

0 8 17 - faulty /dev/sdb1

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Tue Apr 21 16:56:09 2020

Raid Level : raid5

Array Size : 41906176 (39.96 GiB 42.91 GB)

Used Dev Size : 20953088 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Tue Apr 21 17:07:58 2020

State : clean // automatic replacement succeeded

Active Devices : 3

Working Devices : 3

Failed Devices : 1 // one failed disk

Spare Devices : 0 // no spares left, because the spare has replaced the failed disk

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 422363cb:e7fd4d3a:aaf61344:9bdd00b3

Events : 37

Number Major Minor RaidDevice State

3 8 65 0 active sync /dev/sde1

1 8 33 1 active sync /dev/sdc1

4 8 49 2 active sync /dev/sdd1

0 8 17 - faulty /dev/sdb1

4. Format and mount

[root@localhost ~]# mkdir /raid5

[root@localhost ~]# mkfs.xfs /dev/md5

[root@localhost ~]# mount /dev/md5 /raid5/

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 17G 11G 6.7G 61% /

…

/dev/md5 40G 33M 40G 1% /raid5

5. Stop the array (unmount /raid5 first, as in the RAID 1 experiment)

[root@localhost ~]# mdadm -S /dev/md5

mdadm: stopped /dev/md5

## RAID 6 Experiment

1. Create the RAID 6 array

[root@localhost ~]# mdadm -C -v /dev/md6 -l 6 -n 4 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 -x 2 /dev/sdf1 /dev/sdg1

// Create the RAID 6 array /dev/md6 from four active members, sdb1, sdc1, sdd1 and sde1 (-l 6 -n 4), with sdf1 and sdg1 as hot spares (-x 2)

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: /dev/sdb1 appears to be part of a raid array:

level=raid6 devices=4 ctime=Tue Apr 21 17:37:15 2020

mdadm: partition table exists on /dev/sdb1 but will be lost or

meaningless after creating array

mdadm: /dev/sdc1 appears to be part of a raid array:

level=raid6 devices=4 ctime=Tue Apr 21 17:37:15 2020

mdadm: /dev/sdd1 appears to be part of a raid array:

level=raid6 devices=4 ctime=Tue Apr 21 17:37:15 2020

mdadm: /dev/sde1 appears to be part of a raid array:

level=raid6 devices=4 ctime=Tue Apr 21 17:37:15 2020

mdadm: /dev/sdf1 appears to be part of a raid array:

level=raid6 devices=4 ctime=Tue Apr 21 17:37:15 2020

mdadm: size set to 10467328K

mdadm: largest drive (/dev/sdb1) exceeds size (10467328K) by more than 1%

Continue creating array? y

mdadm: Fail create md6 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md6 started.

2. View the RAID 6 array information

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md6 : active raid6 sdg1[5](S) sdf1[4](S) sde1[3] sdd1[2] sdc1[1] sdb1[0]

20934656 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Tue Apr 21 17:54:25 2020

Raid Level : raid6

Array Size : 20934656 (19.96 GiB 21.44 GB)

Used Dev Size : 10467328 (9.98 GiB 10.72 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Tue Apr 21 17:58:16 2020

State : clean

Active Devices : 4

Working Devices : 6

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost.localdomain:6 (local to host localhost.localdomain)

UUID : 9a5c470e:eb95d0b4:2a213dac:f0fd3315

Events : 17

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

4 8 81 - spare /dev/sdf1

5 8 97 - spare /dev/sdg1
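The "Array Size" values reported in these experiments are consistent with the usual capacity rules for each RAID level. The following sketch reproduces them, assuming equal-sized members and ignoring spares; `raid_array_kib` is a hypothetical helper, and sizes are in the 1 KiB units that mdadm prints.

```shell
# raid_array_kib LEVEL RAID_DEVICES DEV_KIB
# Print the usable array size in KiB for RAID_DEVICES equal-sized
# members of DEV_KIB each. Spares do not add capacity.
raid_array_kib() {
    local level="$1" n="$2" dev_kib="$3"
    case "$level" in
        0) echo $(( n * dev_kib )) ;;          # striping: all members hold data
        1) echo "$dev_kib" ;;                  # mirroring: one member's worth
        5) echo $(( (n - 1) * dev_kib )) ;;    # one member's worth of parity
        6) echo $(( (n - 2) * dev_kib )) ;;    # two members' worth of parity
        *) echo "unsupported level: $level" >&2; return 1 ;;
    esac
}
```

With the figures from this article, `raid_array_kib 6 4 10467328` gives 20934656, the Array Size shown for /dev/md6, and `raid_array_kib 5 3 20953088` gives 41906176, the Array Size shown for /dev/md5.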

3. Simulate disk failures (two disks at once)

[root@localhost ~]# mdadm -f /dev/md6 /dev/sdb1 // mark sdb1 as faulty

mdadm: set /dev/sdb1 faulty in /dev/md6

[root@localhost ~]# mdadm -f /dev/md6 /dev/sdc1 // mark sdc1 as faulty

mdadm: set /dev/sdc1 faulty in /dev/md6

[root@localhost ~]# mdadm -D /dev/md6 // check the RAID 6 array status

/dev/md6:

Version : 1.2

Creation Time : Tue Apr 21 17:54:25 2020

Raid Level : raid6

Array Size : 20934656 (19.96 GiB 21.44 GB)

Used Dev Size : 10467328 (9.98 GiB 10.72 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Tue Apr 21 18:01:46 2020

State : clean, degraded, recovering // the spares are taking over

Active Devices : 2

Working Devices : 4

Failed Devices : 2 // two failed disks

Spare Devices : 2 // two spare devices

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Rebuild Status : 19% complete

Name : localhost.localdomain:6 (local to host localhost.localdomain)

UUID : 9a5c470e:eb95d0b4:2a213dac:f0fd3315

Events : 29

Number Major Minor RaidDevice State

5 8 97 0 spare rebuilding /dev/sdg1

4 8 81 1 spare rebuilding /dev/sdf1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

0 8 17 - faulty /dev/sdb1

1 8 33 - faulty /dev/sdc1

[root@localhost ~]# mdadm -D /dev/md6

/dev/md6:

Version : 1.2

Creation Time : Tue Apr 21 17:54:25 2020

Raid Level : raid6

Array Size : 20934656 (19.96 GiB 21.44 GB)

Used Dev Size : 10467328 (9.98 GiB 10.72 GB)

Raid Devices : 4

Total Devices : 6

Persistence : Superblock is persistent

Update Time : Tue Apr 21 18:04:02 2020

State : clean // replaced automatically

Active Devices : 4

Working Devices : 4

Failed Devices : 2

Spare Devices : 0 // no spares left

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : localhost.localdomain:6 (local to host localhost.localdomain)

UUID : 9a5c470e:eb95d0b4:2a213dac:f0fd3315

Events : 43

Number Major Minor RaidDevice State

5 8 97 0 active sync /dev/sdg1

4 8 81 1 active sync /dev/sdf1

2 8 49 2 active sync /dev/sdd1

3 8 65 3 active sync /dev/sde1

0 8 17 - faulty /dev/sdb1

1 8 33 - faulty /dev/sdc1
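The RAID 6 array stayed online with two members failed at once, which a RAID 5 array would not. A minimal sketch of that rule follows; the helper names `raid_max_failures` and `raid_survives` are hypothetical, and the RAID 1 case assumes every member is a full mirror.

```shell
# raid_max_failures LEVEL RAID_DEVICES
# Print how many simultaneous member failures the level can tolerate.
raid_max_failures() {
    local level="$1" n="$2"
    case "$level" in
        0) echo 0 ;;               # no redundancy
        1) echo $(( n - 1 )) ;;    # one surviving mirror is enough
        5) echo 1 ;;               # single parity
        6) echo 2 ;;               # double parity
        *) echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

# raid_survives LEVEL RAID_DEVICES FAILED
# Succeed (exit status 0) if the array keeps running with FAILED
# members lost at the same time.
raid_survives() {
    local max
    max="$(raid_max_failures "$1" "$2")" || return 2
    [ "$3" -le "$max" ]
}
```

`raid_survives 6 4 2` succeeds, matching the experiment above, while `raid_survives 5 3 2` fails.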

4. Format and mount

Same procedure as above.

5. Stop the array

[root@localhost ~]# mdadm -S /dev/md6

mdadm: stopped /dev/md6

## RAID 10 Experiment

RAID 1+0 is built from two RAID 1 mirrors, which are then striped together as RAID 0.

1. Create two RAID 1 arrays

[root@localhost ~]# mdadm -C -v /dev/md1 -l 1 -n 2 /dev/sdb1 /dev/sdc1

mdadm: /dev/sdb1 appears to be part of a raid array:

level=raid1 devices=2 ctime=Wed Apr 22 00:47:05 2020

mdadm: partition table exists on /dev/sdb1 but will be lost or

meaningless after creating array

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: /dev/sdc1 appears to be part of a raid array:

level=raid1 devices=2 ctime=Wed Apr 22 00:47:05 2020

mdadm: size set to 20953088K

Continue creating array? y

mdadm: Fail create md1 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

[root@localhost ~]# mdadm -C -v /dev/md0 -l 1 -n 2 /dev/sdd1 /dev/sde1

mdadm: /dev/sdd1 appears to be part of a raid array:

level=raid6 devices=4 ctime=Tue Apr 21 17:54:25 2020

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: /dev/sde1 appears to be part of a raid array:

level=raid6 devices=4 ctime=Tue Apr 21 17:54:25 2020

mdadm: size set to 20953088K

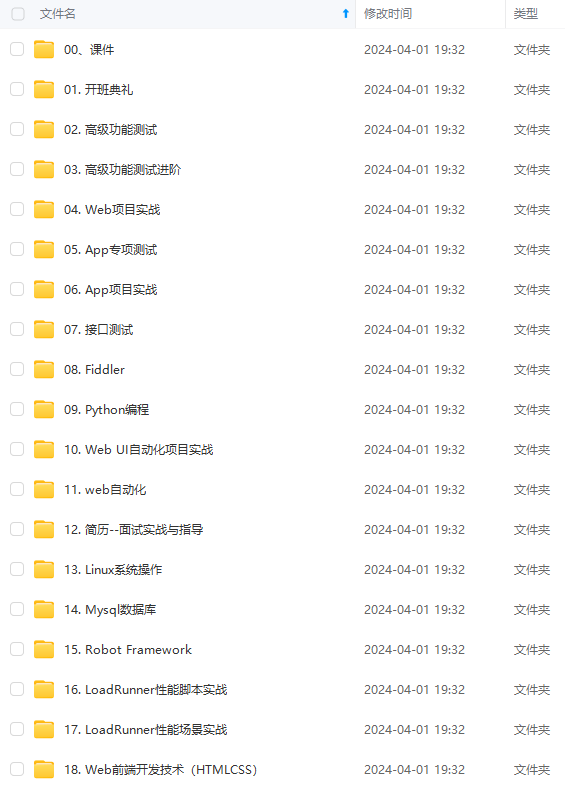

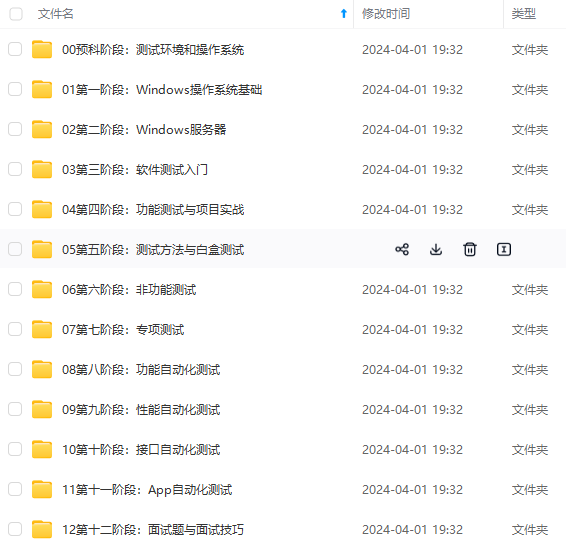

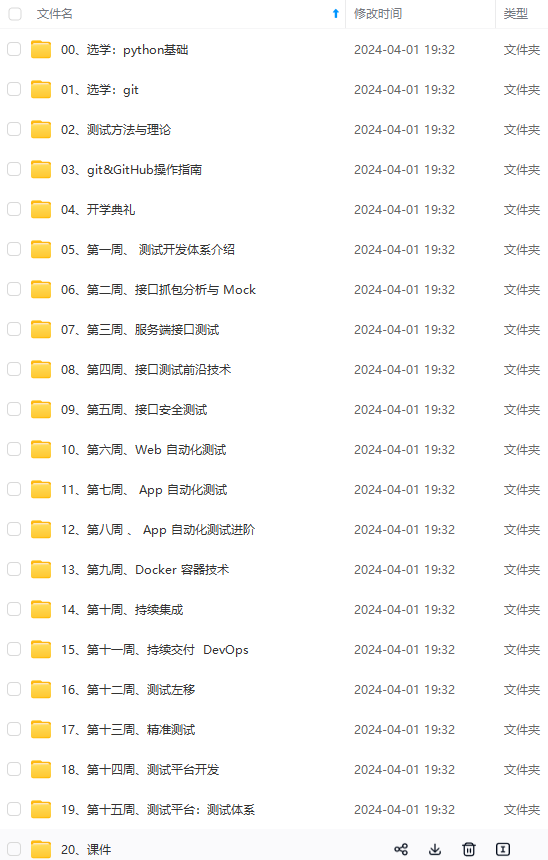

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上软件测试知识点,真正体系化!

[root@localhost ~]# mdadm -C -v /dev/md0 -l 1 -n 2 /dev/sdd1 /dev/sde1

mdadm: /dev/sdd1 appears to be part of a raid array:

level=raid6 devices=4 ctime=Tue Apr 21 17:54:25 2020

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store ‘/boot’ on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

–metadata=0.90

mdadm: /dev/sde1 appears to be part of a raid array:

level=raid6 devices=4 ctime=Tue Apr 21 17:54:25 2020

mdadm: size set to 20953088K

[外链图片转存中…(img-NUUVlMQL-1719255092629)]

[外链图片转存中…(img-k1eevErT-1719255092630)]

[外链图片转存中…(img-fCz5cMdp-1719255092630)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上软件测试知识点,真正体系化!

3597

3597

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?