# Tea Leaf Disease and Pest Detection with YOLOv5

All code in this article is for reference only.

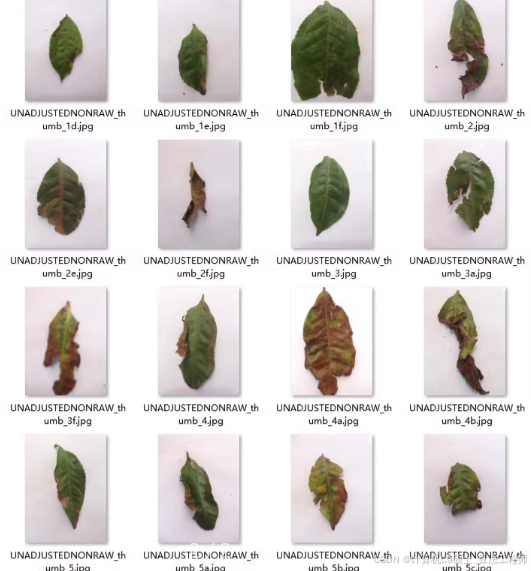

The tea leaf disease and pest dataset has 8 classes: (1) red leaf spot; (2) algal leaf spot; (3) bird's eye spot; (4) gray blight; (5) white spot; (6) anthracnose; (7) brown blight; (8) healthy. Each class has at least 100 images, and the dataset totals 741 MB.

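The task is object detection over these 8 classes. Although YOLOv5 is the intended detector, the baseline training script in this article is built on torchvision's Faster R-CNN; YOLOv5 itself is discussed in the optimization section near the end.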
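Before training, it can help to confirm the class distribution that the dataset description promises. The sketch below counts, for each class id, how many label files mention it. The function name `count_images_per_class` and its arguments are illustrative, and it assumes YOLO-format `.txt` labels (one `class cx cy w h` line per box), the format the dataset loader in this article reads.

```python
from collections import Counter
from pathlib import Path


def count_images_per_class(label_dir, num_classes=8):
    """Return a list where entry i is the number of label files containing class i."""
    counts = Counter()
    for label_file in Path(label_dir).glob("*.txt"):
        class_ids = set()
        for line in label_file.read_text().splitlines():
            parts = line.split()
            if parts:  # skip blank lines
                class_ids.add(int(float(parts[0])))
        counts.update(class_ids)  # count each class once per image
    return [counts.get(i, 0) for i in range(num_classes)]
```

Summing the results for the train and valid label folders should give at least 100 per class if the labels match the description above.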

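## Directory structure

First, make sure your project is laid out as follows. The training script reads `config.yaml` from the working directory, and the dataset paths in it are relative (`../datasets/...`), so this layout assumes the scripts are run from inside `scripts/`.

    /tea_leaf_disease_detection_project
        /datasets
            /train
                /images
                    *.jpg
                /labels
                    *.txt
            /valid
                /images
                    *.jpg
                /labels
                    *.txt
        /scripts
            train.py
            datasets.py
            config.yaml
        requirements.txt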
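A quick layout check before training can save a failed run. The following is a minimal sketch: the helper name `missing_dirs` and the `EXPECTED_DIRS` list are hypothetical and simply mirror the folders named in the directory structure above.

```python
from pathlib import Path

# Sub-directories the project layout in this article expects
EXPECTED_DIRS = [
    "datasets/train/images",
    "datasets/train/labels",
    "datasets/valid/images",
    "datasets/valid/labels",
    "scripts",
]


def missing_dirs(project_root):
    """Return the expected sub-directories that do not exist under project_root."""
    root = Path(project_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
```

An empty list means every expected folder is present; anything else names the folders that still need to be created.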

## config.yaml

The configuration file `config.yaml` holds the dataset paths, the number of classes (`nc`), and the class names.

    # config.yaml
    train: ../datasets/train/images/
    val: ../datasets/valid/images/
    nc: 8
    names: ['RedLeafSpot', 'AlgalSpot', 'BirdEyeSpot', 'GrayBlight', 'WhiteSpot', 'Anthracnose', 'BrownBlight', 'Healthy']
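A mismatch between `nc` and `names` is easy to introduce when classes are added or removed. As a small illustration, the hypothetical helper below checks a config that has already been parsed into a dictionary (for example with `yaml.safe_load`); the name `validate_config` and the exact checks are assumptions for this sketch.

```python
def validate_config(cfg):
    """Raise ValueError if the dataset config is inconsistent; return cfg otherwise."""
    for key in ("train", "val", "nc", "names"):
        if key not in cfg:
            raise ValueError(f"config is missing required key: {key!r}")
    if cfg["nc"] != len(cfg["names"]):
        raise ValueError(
            f"nc is {cfg['nc']} but {len(cfg['names'])} class names are listed"
        )
    if len(set(cfg["names"])) != len(cfg["names"]):
        raise ValueError("class names must be unique")
    return cfg
```

Calling it right after loading the file turns a silent class-index mix-up into an immediate, readable error.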

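## requirements.txt

This file lists the Python packages to install. `fasterrcnn_resnet50_fpn_v2`, which the training script uses, is only available from torchvision 0.13 onward, so the minimum versions are set accordingly. NumPy, Pillow, and PyYAML are listed because the scripts import them.

    torch>=1.12
    torchvision>=0.13
    numpy
    Pillow
    PyYAML
    pycocotools
    opencv-python
    matplotlib
    albumentations
    labelme2coco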

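## datasets.py

This file defines a dataset class that loads the tea leaf images with their YOLO-format labels and applies data augmentation.

    from pathlib import Path

    import albumentations as A
    import numpy as np
    import torch
    from albumentations.pytorch.transforms import ToTensorV2
    from PIL import Image
    from torch.utils.data import Dataset


    class TeaLeafDiseaseDataset(Dataset):
        """Tea leaf images with YOLO-format labels (class cx cy w h, normalized)."""

        def __init__(self, root_dir, transform=None):
            # root_dir is a split directory that contains images/ and labels/
            self.root_dir = Path(root_dir)
            self.transform = transform
            self.img_files = sorted((self.root_dir / 'images').glob('*.jpg'))
            # Build label paths from the file stem so that other path parts
            # containing "images" or ".jpg" are never rewritten by accident
            self.label_files = [self.root_dir / 'labels' / f'{p.stem}.txt' for p in self.img_files]

        def __len__(self):
            return len(self.img_files)

        def __getitem__(self, idx):
            image = np.array(Image.open(self.img_files[idx]).convert("RGB"))
            label_path = self.label_files[idx]
            boxes, labels = [], []
            if label_path.exists():  # an image without a label file has no objects
                for line in label_path.read_text().splitlines():
                    if not line.strip():
                        continue
                    class_id, x_center, y_center, width, height = map(float, line.split())
                    boxes.append([x_center, y_center, width, height])
                    # torchvision detection models reserve label 0 for background
                    labels.append(int(class_id) + 1)

            if self.transform:
                transformed = self.transform(image=image, bboxes=boxes, class_labels=labels)
                image = transformed['image']
                boxes = transformed['bboxes']
                labels = transformed['class_labels']
            else:
                image = torch.from_numpy(image).permute(2, 0, 1).float() / 255.0

            # Convert normalized YOLO boxes (cx, cy, w, h) to absolute pixel
            # corners (x1, y1, x2, y2), which torchvision detection models expect
            img_h, img_w = image.shape[-2:]
            boxes = torch.as_tensor(np.asarray(boxes, dtype=np.float32)).reshape(-1, 4)
            cx, cy, bw, bh = boxes.unbind(dim=1)
            boxes = torch.stack([(cx - bw / 2) * img_w, (cy - bh / 2) * img_h,
                                 (cx + bw / 2) * img_w, (cy + bh / 2) * img_h], dim=1)

            target = {
                'boxes': boxes,
                'labels': torch.as_tensor(np.asarray(labels, dtype=np.int64)),
            }
            return image, target


    # Data augmentation. torchvision's Faster R-CNN normalizes its inputs
    # internally, so images are only scaled to [0, 1] here (mean 0, std 1)
    # rather than normalized with ImageNet statistics a second time.
    data_transforms = {
        'train': A.Compose([
            A.Resize(width=640, height=640),
            A.HorizontalFlip(p=0.5),
            A.VerticalFlip(p=0.5),
            A.Rotate(limit=180, p=0.7),
            A.RandomBrightnessContrast(brightness_limit=0.2, contrast_limit=0.2, p=0.3),
            A.Normalize(mean=(0.0, 0.0, 0.0), std=(1.0, 1.0, 1.0)),
            ToTensorV2(),
        ], bbox_params=A.BboxParams(format='yolo', label_fields=['class_labels'])),
        'test': A.Compose([
            A.Resize(width=640, height=640),
            A.Normalize(mean=(0.0, 0.0, 0.0), std=(1.0, 1.0, 1.0)),
            ToTensorV2(),
        ], bbox_params=A.BboxParams(format='yolo', label_fields=['class_labels'])),
    }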
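The label files store boxes in YOLO format (normalized center, width, and height), while torchvision detection models such as Faster R-CNN expect absolute corner coordinates. The following standalone sketch shows the conversion in both directions on plain tuples; the function names are illustrative and not part of any library.

```python
def yolo_to_xyxy(box, img_w, img_h):
    """Convert a normalized (cx, cy, w, h) box to pixel (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return (
        (cx - w / 2) * img_w,
        (cy - h / 2) * img_h,
        (cx + w / 2) * img_w,
        (cy + h / 2) * img_h,
    )


def xyxy_to_yolo(box, img_w, img_h):
    """Convert a pixel (x1, y1, x2, y2) box to normalized (cx, cy, w, h)."""
    x1, y1, x2, y2 = box
    return (
        (x1 + x2) / 2 / img_w,
        (y1 + y2) / 2 / img_h,
        (x2 - x1) / img_w,
        (y2 - y1) / img_h,
    )


# A centered box covering half of each side of a 640x640 image
print(yolo_to_xyxy((0.5, 0.5, 0.5, 0.5), 640, 640))  # (160.0, 160.0, 480.0, 480.0)
```

Because the YOLO values are relative to the image size, the conversion has to use the size of the image after resizing, not the original file dimensions.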

## train.py

The training script below trains a Faster R-CNN detector from torchvision (`fasterrcnn_resnet50_fpn_v2`), not YOLOv5, on the dataset.

    import datetime
    import time
    from collections import defaultdict, deque
    from pathlib import Path

    import torch
    import torch.distributed as dist
    import torch.optim as optim
    import yaml
    from torch.utils.data import DataLoader
    from torchvision.models.detection import fasterrcnn_resnet50_fpn_v2
    from torchvision.models.detection.faster_rcnn import FastRCNNPredictor

    from datasets import TeaLeafDiseaseDataset, data_transforms

    with open('config.yaml', 'r') as f:
        config = yaml.safe_load(f)


    def collate_fn(batch):
        # Targets hold a different number of boxes per image, so images and
        # targets are returned as lists instead of stacked tensors
        images = [item[0] for item in batch]
        targets = [item[1] for item in batch]
        return images, targets


    def train_one_epoch(model, optimizer, data_loader, device, epoch, print_freq, class_weights):
        model.train()
        metric_logger = MetricLogger(delimiter=" ")
        header = f"Epoch: [{epoch}]"

        for images, targets in metric_logger.log_every(data_loader, print_freq, header):
            images = [image.to(device) for image in images]
            targets = [{k: v.to(device) for k, v in t.items()} for t in targets]

            loss_dict = model(images, targets)

            # Scale the classification loss by the mean weight of the classes
            # present in the batch. This is a coarse heuristic, because the model
            # only exposes an already-reduced scalar loss. Labels are 1-based
            # (0 is background); class_weights is indexed by 0-based class id.
            present = torch.cat([t['labels'] for t in targets]).unique()
            if present.numel() > 0:
                batch_weight = class_weights[(present - 1).clamp(min=0)].mean()
            else:
                batch_weight = 1.0
            weighted_losses = {
                k: v * batch_weight if k.startswith('loss_classifier') else v
                for k, v in loss_dict.items()
            }

            losses = sum(weighted_losses.values())

            optimizer.zero_grad()
            losses.backward()
            optimizer.step()

            metric_logger.update(loss=losses, **weighted_losses)


    class MetricLogger(object):
        def __init__(self, delimiter="\t"):
            self.meters = defaultdict(SmoothedValue)
            self.delimiter = delimiter

        def update(self, **kwargs):
            for k, v in kwargs.items():
                if isinstance(v, torch.Tensor):
                    v = v.item()
                assert isinstance(v, (float, int))
                self.meters[k].update(v)

        def __getattr__(self, attr):
            if attr in self.meters:
                return self.meters[attr]
            if attr in self.__dict__:
                return self.__dict__[attr]
            raise AttributeError(f"'MetricLogger' object has no attribute '{attr}'")

        def __str__(self):
            # Needed by log_every, which prints str(self) as the meter summary
            return self.delimiter.join(f"{name}: {meter}" for name, meter in self.meters.items())

        def log_every(self, iterable, print_freq, header=None):
            header = header or ""
            start_time = time.time()
            end = time.time()
            iter_time = SmoothedValue(fmt='{avg:.4f}')
            data_time = SmoothedValue(fmt='{avg:.4f}')
            space_fmt = ':' + str(len(str(len(iterable)))) + 'd'
            log_msg = [
                header,
                '[{0' + space_fmt + '}/{1}]',
                'eta: {eta}',
                '{meters}',
                'time: {time}',
                'data: {data}',
            ]
            if torch.cuda.is_available():
                log_msg.append('max mem: {memory:.0f}')
            log_msg = self.delimiter.join(log_msg)
            MB = 1024.0 * 1024.0
            for i, obj in enumerate(iterable):
                data_time.update(time.time() - end)
                yield obj
                iter_time.update(time.time() - end)
                if i % print_freq == 0 or i == len(iterable) - 1:
                    eta_seconds = iter_time.global_avg * (len(iterable) - i)
                    eta_string = str(datetime.timedelta(seconds=int(eta_seconds)))
                    if torch.cuda.is_available():
                        print(log_msg.format(
                            i, len(iterable), eta=eta_string, meters=str(self),
                            time=str(iter_time), data=str(data_time),
                            memory=torch.cuda.max_memory_allocated() / MB))
                    else:
                        print(log_msg.format(
                            i, len(iterable), eta=eta_string, meters=str(self),
                            time=str(iter_time), data=str(data_time)))
                end = time.time()
            total_time = time.time() - start_time
            total_time_str = str(datetime.timedelta(seconds=int(total_time)))
            print('{} Total time: {} ({:.4f} s / it)'.format(
                header, total_time_str, total_time / len(iterable)))


    class SmoothedValue(object):
        """Track a series of values and provide access to smoothed values over a
        window or the global series average.
        """

        def __init__(self, window_size=20, fmt=None):
            if fmt is None:
                fmt = "{median:.4f} ({global_avg:.4f})"
            self.deque = deque(maxlen=window_size)
            self.total = 0.0
            self.count = 0
            self.fmt = fmt

        def update(self, value, n=1):
            self.deque.append(value)
            self.count += n
            self.total += value * n

        def synchronize_between_processes(self):
            """
            Warning: does not synchronize the deque!
            """
            if not is_dist_avail_and_initialized():
                return
            t = torch.tensor([self.count, self.total], dtype=torch.float64, device='cuda')
            dist.barrier()
            dist.all_reduce(t)
            t = t.tolist()
            self.count = int(t[0])
            self.total = t[1]

        @property
        def median(self):
            d = torch.tensor(list(self.deque))
            return d.median().item()

        @property
        def avg(self):
            d = torch.tensor(list(self.deque), dtype=torch.float32)
            return d.mean().item()

        @property
        def global_avg(self):
            return self.total / self.count

        @property
        def max(self):
            return max(self.deque)

        @property
        def value(self):
            return self.deque[-1]

        def __str__(self):
            return self.fmt.format(
                median=self.median,
                avg=self.avg,
                global_avg=self.global_avg,
                max=self.max,
                value=self.value)


    def is_dist_avail_and_initialized():
        if not dist.is_available():
            return False
        if not dist.is_initialized():
            return False
        return True


    def main():
        device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

        # config.yaml points at the images/ folders, while the dataset class
        # expects the split directory that contains images/ and labels/
        train_root = Path(config['train']).parent
        val_root = Path(config['val']).parent

        dataset_train = TeaLeafDiseaseDataset(root_dir=train_root, transform=data_transforms['train'])
        dataset_val = TeaLeafDiseaseDataset(root_dir=val_root, transform=data_transforms['test'])

        data_loader_train = DataLoader(dataset_train, batch_size=4, shuffle=True, num_workers=4, collate_fn=collate_fn)
        # The validation loader is created for completeness; this script does not run evaluation
        data_loader_val = DataLoader(dataset_val, batch_size=4, shuffle=False, num_workers=4, collate_fn=collate_fn)

        # Start from COCO-pretrained weights and replace the box predictor so it
        # outputs nc + 1 classes (index 0 is background)
        model = fasterrcnn_resnet50_fpn_v2(weights="DEFAULT")
        num_classes = config['nc'] + 1
        in_features = model.roi_heads.box_predictor.cls_score.in_features
        model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)
        model.to(device)

        # Define class weights based on the frequency of each class
        class_counts = [100, 100, 100, 100, 100, 100, 100, 100]  # Assuming each class has at least 100 samples
        total_count = sum(class_counts)
        class_weights = [total_count / (len(class_counts) * count) for count in class_counts]
        class_weights = torch.tensor(class_weights).to(device)

        params = [p for p in model.parameters() if p.requires_grad]
        optimizer = optim.SGD(params, lr=0.005, momentum=0.9, weight_decay=0.0005)

        for epoch in range(10):  # number of epochs
            train_one_epoch(model, optimizer, data_loader_train, device=device, epoch=epoch,
                            print_freq=10, class_weights=class_weights)

            # save every epoch
            torch.save({
                'epoch': epoch,
                'model_state_dict': model.state_dict(),
                'optimizer_state_dict': optimizer.state_dict(),
            }, f'model_epoch_{epoch}.pth')


    if __name__ == "__main__":
        main()
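The training script hard-codes 100 samples for every class, which makes every class weight exactly 1.0, so the weighting has no effect until real counts are supplied. The sketch below isolates the same inverse-frequency formula the script uses; the function name `inverse_frequency_weights` and the example counts are illustrative assumptions.

```python
def inverse_frequency_weights(class_counts):
    """Weight each class by total / (num_classes * count), as in the training script."""
    if any(count <= 0 for count in class_counts):
        raise ValueError("every class needs at least one sample")
    total = sum(class_counts)
    num_classes = len(class_counts)
    return [total / (num_classes * count) for count in class_counts]


# Balanced counts give a weight of 1.0 for every class
print(inverse_frequency_weights([100] * 8))

# A rarer class gets a larger weight than a common one
print(inverse_frequency_weights([100, 300]))
```

With this formula the weights average out around 1.0, so the overall loss scale stays comparable to the unweighted case while rare classes count for more.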

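## Summary

The code above covers the steps from data preparation to model training. Adjust the parameters in the configuration file as needed, then run the training script to train the Faster R-CNN model. Make sure your dataset directory structure matches the expected layout and that all file paths are correct.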

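## Further optimization suggestions

Assuming the classes are roughly balanced (at least 100 samples each), the following techniques can improve model performance:

- Data augmentation: add more augmentation techniques to improve generalization.

- Learning rate scheduler: adjust the learning rate dynamically during training.

- Pretrained weights: start from a stronger pretrained detector, such as YOLOv5 or EfficientDet.

- Multi-scale training: train at different resolutions to improve robustness.

- Mixed-precision training: speed up training and reduce memory usage.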
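To make the multi-scale training suggestion concrete, here is a minimal sketch of how a training resolution could be sampled per batch. It assumes a base size of 640 (the resize used in this article), a scale range of 0.5 to 1.5, and a network stride of 32, which is the usual requirement for YOLOv5-style detectors. The function name and defaults are illustrative, not taken from any library.

```python
import random


def sample_train_size(base_size=640, scale_range=(0.5, 1.5), stride=32, rng=random):
    """Pick a random square input size that is a multiple of the network stride."""
    low = int(base_size * scale_range[0])
    high = int(base_size * scale_range[1])
    size = rng.randint(low, high)  # inclusive on both ends
    # Round to the nearest multiple of the stride, never below one stride
    return max(stride, round(size / stride) * stride)


# Draw a few candidate resolutions for successive batches
print([sample_train_size() for _ in range(5)])
```

Each batch would then be resized to the sampled size, with the bounding boxes scaled by the same factor, before it is passed to the model.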

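An improved version that combines these optimizations is outlined below.

## Using YOLOv5 with the optimizations

We use YOLOv5 as the base model and integrate the optimizations listed above.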

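### Explanation

1. **Model selection**: use a YOLOv5 model; several sizes are available (yolov5s, yolov5m, yolov5l, yolov5x).

2. **Class weights**: compute a weight for each class and apply it in the loss function to balance the classes.

3. **Data augmentation**: use the `Albumentations` library for augmentation, including flips, rotations, and brightness/contrast adjustments.

4. **Optimizer and learning rate scheduler**: use the AdamW optimizer with a StepLR scheduler to adjust the learning rate dynamically.

5. **Training process**: in each epoch, update the model parameters and save the model state.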
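To illustrate item 4, the sketch below computes the learning rate that a step-decay schedule produces at each epoch. The source gives no concrete values, so the base rate of 1e-3, the step size of 10 epochs, and the decay factor of 0.1 are placeholder assumptions. In PyTorch the same behavior comes from pairing `torch.optim.AdamW` with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=..., gamma=...)` and calling `scheduler.step()` once per epoch.

```python
def step_lr(base_lr, epoch, step_size=10, gamma=0.1):
    """Learning rate at a given epoch: multiplied by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


# Learning rates over 30 epochs: constant within each block of 10 epochs
schedule = [step_lr(1e-3, epoch) for epoch in range(30)]
print(schedule[0], schedule[10], schedule[20])
```

Printing the schedule before a long run is a cheap way to check that the rate does not decay to a negligible value too early.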

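### Next steps

Tune the hyperparameters (learning rate, batch size, number of epochs, and so on) for your data to get better performance. You can also try other model architectures, such as YOLOv5 or SSD, to further improve detection accuracy.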
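If you move to the official YOLOv5 repository, these hyperparameters are passed to its `train.py` on the command line. The sketch below only assembles such a command; it assumes the ultralytics/yolov5 repository has been cloned, that the command is run from its root, and that `config.yaml` from this article is used as the dataset file. The helper name and the default values are placeholders to tune, not recommendations.

```python
import shlex


def build_yolov5_train_command(data_yaml, weights="yolov5s.pt",
                               img_size=640, batch_size=16, epochs=100):
    """Assemble the argument list for YOLOv5's train.py (values are placeholders)."""
    return [
        "python", "train.py",
        "--img", str(img_size),
        "--batch-size", str(batch_size),
        "--epochs", str(epochs),
        "--data", str(data_yaml),
        "--weights", str(weights),
    ]


command = build_yolov5_train_command("config.yaml", epochs=50)
print(shlex.join(command))
```

The resulting list can be handed to `subprocess.run(command, check=True)`; swapping `weights` for `yolov5m.pt` or a larger variant changes the model size.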
