Installation and environment setup

Environment: Ubuntu 18.04

Install Celery

root@ubuntu:/home/toohoo/learncelery# pip install celery==3.1.25

Collecting celery==3.1.25

...

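Install the Redis client library

pip install redis

Install Celery's Redis extras

pip install -U 'celery[redis]'

Verify

root@ubuntu:/home/toohoo/learncelery# celery --version

4.3.0 (rhubarb)

The reported version is 4.3.0 rather than the 3.1.25 pinned above, most likely because the -U flag in the previous command upgraded Celery to the latest release while installing the Redis extras. Everything below was run on 4.3.0.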
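Since the installed version turned out to differ from the pinned one, it can help to check versions from Python as well. The sketch below is an illustration only: it assumes Python 3.8 or newer (the walkthrough itself uses Python 2.7), and the helper name installed_version is made up for this example.

```python
from importlib import metadata  # standard library, Python 3.8+


def installed_version(package):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Prints the version of each package, or None when it is not installed.
    for name in ("celery", "redis"):
        print(name, installed_version(name))
```

Running this right after the install steps shows whether the pinned or the upgraded Celery release is the one actually in use.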

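Basic usage

Creating a task

Save the following as task1.py:

# -*- coding: utf-8 -*-
from celery import Celery

# Create the Celery instance; Redis on localhost is the message broker
app = Celery('tasks',
             broker='redis://localhost',
             )

# Define a task
@app.task
def add(x, y):
    print("计算2个值的和:%s %s" % (x, y))
    return x + y

Starting the worker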

root@ubuntu:/home/toohoo/learncelery# celery -A task1 worker --loglevel=info

/usr/local/lib/python2.7/dist-packages/celery/platforms.py:801: RuntimeWarning: You're running the worker with superuser privileges: this is

absolutely not recommended!

Please specify a different user using the --uid option.

User information: uid=0 euid=0 gid=0 egid=0

uid=uid, euid=euid, gid=gid, egid=egid,

-------------- celery@ubuntu v4.3.0 (rhubarb)

---- **** -----

--- * *** * -- Linux-4.15.0-46-generic-x86_64-with-Ubuntu-18.04-bionic 2019-04-02 16:03:44

-- * - **** ---

- ** ---------- [config]

- ** ---------- .> app: tasks:0x7f5b97f9ce10

- ** ---------- .> transport: redis://localhost:6379//

- ** ---------- .> results: disabled://

- *** --- * --- .> concurrency: 1 (prefork)

-- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)

--- ***** -----

-------------- [queues]

.> celery exchange=celery(direct) key=celery

[tasks]

. task1.add

[2019-04-02 16:03:44,489: INFO/MainProcess] Connected to redis://localhost:6379//

[2019-04-02 16:03:44,496: INFO/MainProcess] mingle: searching for neighbors

[2019-04-02 16:03:45,596: INFO/MainProcess] mingle: all alone

[2019-04-02 16:03:45,636: INFO/MainProcess] celery@ubuntu ready.

Calling the task

Open a second terminal window:

root@ubuntu:/home/toohoo/learncelery# python

Python 2.7.15rc1 (default, Nov 12 2018, 14:31:15)

[GCC 7.3.0] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import sys

>>> dir = r"./"

>>> sys.path.append(dir)

>>> from task1 import add

>>> add.delay(1,2)

<AsyncResult: 5fe5149d-1ec2-4182-8243-4b10e6db0d04>

>>>

Back in the first window, the worker shows:

[2019-04-02 16:19:43,183: INFO/MainProcess] Received task: task1.add[5fe5149d-1ec2-4182-8243-4b10e6db0d04]

[2019-04-02 16:19:43,185: WARNING/ForkPoolWorker-1] 计算2个值的和:1 2

[2019-04-02 16:19:43,186: INFO/ForkPoolWorker-1] Task task1.add[5fe5149d-1ec2-4182-8243-4b10e6db0d04] succeeded in 0.00141431500379s: 3

The call above only sends the task. Its return value was never captured, so the result cannot be retrieved. This time, keep the returned object:

>>> t = add.delay(3,4)

>>> type(t) # check the type of the returned object

<class 'celery.result.AsyncResult'>

>>> t.get() # raises an error

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/local/lib/python2.7/dist-packages/celery/result.py", line 226, in get

on_message=on_message,

File "/usr/local/lib/python2.7/dist-packages/celery/backends/base.py", line 496, in wait_for_pending

no_ack=no_ack,

File "/usr/local/lib/python2.7/dist-packages/celery/backends/base.py", line 829, in _is_disabled

raise NotImplementedError(E_NO_BACKEND.strip())

NotImplementedError: No result backend is configured.

Please see the documentation for more information.

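The error occurs because the Celery instance was created without a backend, that is, without a place to store task results.

Retrieving the result

Modify the original task1.py:

# -*- coding: utf-8 -*-
from celery import Celery

# Create the Celery instance
app = Celery('tasks',
             broker='redis://localhost',
             backend='redis://localhost',  # added: result backend
             )

# Define a task
@app.task
def add(x, y):
    print("计算2个值的和:%s %s" % (x, y))
    return x + y

Restart the worker, then close and reopen the Python window used for calling tasks.

Calling window: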

root@ubuntu:/home/toohoo/learncelery# python

Python 2.7.15rc1 (default, Nov 12 2018, 14:31:15)

[GCC 7.3.0] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import sys

>>> dir = r"./"

>>> sys.path.append(dir)

>>> from task1 import add

>>> t = add.delay(3,4)

>>> t.get()

7

Worker window:

[2019-04-02 16:33:38,974: INFO/MainProcess] Received task: task1.add[9039571c-179f-4f88-ba06-3cb9cdcb85a0]

[2019-04-02 16:33:38,977: WARNING/ForkPoolWorker-1] 计算2个值的和:3 4

[2019-04-02 16:33:38,992: INFO/ForkPoolWorker-1] Task task1.add[9039571c-179f-4f88-ba06-3cb9cdcb85a0] succeeded in 0.0158289919927s: 7

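Other operations

get blocks

The task above finishes too quickly to observe, so add a task that takes a while to run (appended to task1.py):

import time

@app.task
def upper(v):
    for i in range(10):
        time.sleep(1)
        print(i)
    return v.upper()

Calling get blocks until the task returns its result, so the call is no longer asynchronous:

Calling window: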

root@ubuntu:/home/toohoo/learncelery# python

Python 2.7.15rc1 (default, Nov 12 2018, 14:31:15)

[GCC 7.3.0] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import sys

>>> dir = r"./"

>>> sys.path.append(dir)

>>> from task1 import upper

>>> t = upper.delay("abc")

>>> t.get()

u'ABC'

Worker window:

[2019-04-02 16:45:51,236: INFO/MainProcess] Received task: task1.upper[7f04c396-b125-4b56-adbb-bc113fa17d6e]

[2019-04-02 16:45:52,240: WARNING/ForkPoolWorker-1] 0

[2019-04-02 16:45:53,242: WARNING/ForkPoolWorker-1] 1

[2019-04-02 16:45:54,244: WARNING/ForkPoolWorker-1] 2

[2019-04-02 16:45:55,248: WARNING/ForkPoolWorker-1] 3

[2019-04-02 16:45:56,251: WARNING/ForkPoolWorker-1] 4

[2019-04-02 16:45:57,252: WARNING/ForkPoolWorker-1] 5

[2019-04-02 16:45:58,254: WARNING/ForkPoolWorker-1] 6

[2019-04-02 16:45:59,257: WARNING/ForkPoolWorker-1] 7

[2019-04-02 16:46:00,259: WARNING/ForkPoolWorker-1] 8

[2019-04-02 16:46:01,262: WARNING/ForkPoolWorker-1] 9

[2019-04-02 16:46:01,291: INFO/ForkPoolWorker-1] Task task1.upper[7f04c396-b125-4b56-adbb-bc113fa17d6e] succeeded in 10.052781638s: 'ABC'

ready checks for completion without blocking

The ready() method reports whether the task has finished. Once it returns True, get returns the result immediately:

>>> t = upper.delay("abcd")

>>> t.ready()

False

>>> t.ready()

False

>>> t.ready()

False

>>> t.ready()

True

>>> t.get()

u'ABCD'

>>>
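Checking ready() by hand, as in the session above, can be wrapped in a small polling loop. The following is a minimal sketch, not part of Celery: FakeResult is a hypothetical stand-in that only mimics the ready() and get() methods of an AsyncResult, and poll_result is an illustrative helper name.

```python
import time


class FakeResult:
    """Hypothetical stand-in for an AsyncResult: becomes ready after `delay` seconds."""

    def __init__(self, value, delay):
        self._value = value
        self._ready_at = time.monotonic() + delay

    def ready(self):
        return time.monotonic() >= self._ready_at

    def get(self):
        return self._value


def poll_result(result, timeout=5.0, interval=0.05):
    """Poll result.ready() until it is True or `timeout` seconds have passed.

    Returns (True, value) on completion and (False, None) on timeout.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if result.ready():
            return True, result.get()
        time.sleep(interval)  # other work could be done here instead of sleeping
    return False, None


if __name__ == "__main__":
    print(poll_result(FakeResult("ABCD", delay=0.2)))
    print(poll_result(FakeResult("ABCD", delay=10), timeout=0.2))
```

Because the helper only relies on ready() and get(), the object returned by upper.delay(...) could presumably be passed in place of FakeResult.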

Setting a timeout on get

get also accepts a timeout. If the result does not arrive within that time, an exception is raised:

>>> t = upper.delay("abcde")

>>> t.get(timeout=20)

u'ABCDE'

>>> t = upper.delay("abcde")

>>> t.get(timeout=1)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/local/lib/python2.7/dist-packages/celery/result.py", line 226, in get

on_message=on_message,

File "/usr/local/lib/python2.7/dist-packages/celery/backends/asynchronous.py", line 188, in wait_for_pending

for _ in self._wait_for_pending(result, **kwargs):

File "/usr/local/lib/python2.7/dist-packages/celery/backends/asynchronous.py", line 259, in _wait_for_pending

raise TimeoutError('The operation timed out.')

celery.exceptions.TimeoutError: The operation timed out.

Task errors

If a task raises an error during execution, for example:

>>> t = upper.delay(123)

the worker logs the error:

[2019-04-02 17:03:38,387: ERROR/ForkPoolWorker-1] Task task1.upper[e283c043-e5d6-4d85-8f1f-87da32ce9743] raised unexpected: AttributeError("'int' object has no attribute 'upper'",)

Traceback (most recent call last):

File "/usr/local/lib/python2.7/dist-packages/celery/app/trace.py", line 385, in trace_task

R = retval = fun(*args, **kwargs)

File "/usr/local/lib/python2.7/dist-packages/celery/app/trace.py", line 648, in __protected_call__

return self.run(*args, **kwargs)

File "/home/toohoo/learncelery/task1.py", line 24, in upper

return v.upper()

AttributeError: 'int' object has no attribute 'upper'

Calling get on the result then re-raises the same error as an exception in the caller, which is not always convenient:

>>> t = upper.delay(123)

>>> t.get()

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/local/lib/python2.7/dist-packages/celery/result.py", line 226, in get

on_message=on_message,

File "/usr/local/lib/python2.7/dist-packages/celery/backends/asynchronous.py", line 190, in wait_for_pending

return result.maybe_throw(callback=callback, propagate=propagate)

File "/usr/local/lib/python2.7/dist-packages/celery/result.py", line 331, in maybe_throw

self.throw(value, self._to_remote_traceback(tb))

File "/usr/local/lib/python2.7/dist-packages/celery/result.py", line 324, in throw

self.on_ready.throw(*args, **kwargs)

File "/usr/local/lib/python2.7/dist-packages/vine/promises.py", line 244, in throw

reraise(type(exc), exc, tb)

File "<string>", line 1, in reraise

AttributeError: 'int' object has no attribute 'upper'

>>>

Making get return the error instead of raising it

>>> t.get(propagate = False)

AttributeError(u"'int' object has no attribute 'upper'",)

>>>

The traceback attribute holds the error details

>>> t.traceback

u'Traceback (most recent call last):\n File "/usr/local/lib/python2.7/dist-packages/celery/app/trace.py", line 385, in trace_task\n R = retval = fun(*args, **kwargs)\n File "/usr/local/lib/python2.7/dist-packages/celery/app/trace.py", line 648, in __protected_call__\n return self.run(*args, **kwargs)\n File "/home/toohoo/learncelery/task1.py", line 24, in upper\n return v.upper()\nAttributeError: \'int\' object has no attribute \'upper\'\n'

>>>
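The propagate=False behaviour shown above lends itself to a small helper that never raises. This is only a sketch under stated assumptions: FakeFailedResult and FakeOkResult are hypothetical stand-ins that imitate get(propagate=...) on an AsyncResult, and describe_outcome is an illustrative name.

```python
class FakeFailedResult:
    """Hypothetical stand-in for an AsyncResult whose task raised an error."""

    def get(self, propagate=True):
        exc = AttributeError("'int' object has no attribute 'upper'")
        if propagate:
            raise exc
        return exc  # with propagate=False the exception object is returned


class FakeOkResult:
    """Hypothetical stand-in for an AsyncResult whose task succeeded."""

    def get(self, propagate=True):
        return "ABC"


def describe_outcome(result):
    """Return ('ok', value) or ('error', message) without raising."""
    value = result.get(propagate=False)
    if isinstance(value, Exception):
        return "error", "%s: %s" % (type(value).__name__, value)
    return "ok", value


if __name__ == "__main__":
    print(describe_outcome(FakeOkResult()))
    print(describe_outcome(FakeFailedResult()))
```

The isinstance check is the key design choice: with propagate=False the caller receives the exception object as an ordinary return value and has to tell it apart from a normal result.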

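Summary

Start a Celery worker to listen for and execute tasks

$ celery -A tasks worker --loglevel=info

Call a task

>>> from tasks import add

>>> t = add.delay(4, 4)

Fetch the result synchronously

>>> t.get()

>>> t.get(timeout=1)

Check whether the task has finished

>>> t.ready()

On failure, fetch the error without raising it

>>> t.get(propagate=False)

>>> t.traceback # the full traceback text

Using Celery in a project

Celery can be set up as a package inside a project. Assume the package is called CeleryPro, with this layout: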

root@ubuntu:/home/toohoo/learncelery/CeleryPro# tree

.

├── celery.py

├── __init__.py

└── tasks.py

0 directories, 3 files

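- Notes:

1. The file that creates the Celery app is named celery.py because celery -A CeleryPro looks for the app in the CeleryPro.celery submodule. The other files can be named freely.

2. The package initializer is named __init__.py on every platform, Windows included.

The celery file

By convention this file is named celery.py: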

root@ubuntu:/home/toohoo/learncelery/CeleryPro# cat celery.py

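# -*- coding: utf-8 -*-
from __future__ import absolute_import, unicode_literals
from celery import Celery

app = Celery('CeleryPro',
             broker='redis://localhost',
             backend='redis://localhost',
             include=['CeleryPro.tasks'],
             )

# Optional configuration, see the application user guide
app.conf.update(
    result_expires=3600,
)

if __name__ == '__main__':
    app.start()

The first line is from __future__ import absolute_import, unicode_literals. unicode_literals makes plain string literals unicode under Python 2. absolute_import turns on absolute imports: because this file is itself named celery, under Python 2 a plain from celery import ... would otherwise resolve to this file, whereas what we need is the installed celery library. To import this file from within the package, write from .celery with a leading dot, as tasks.py below does.

The tasks file

The import at the top of this file has a leading dot because it imports app from the celery.py file above. After that, just define the tasks and decorate them: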

# -*- coding: utf-8 -*-
from __future__ import absolute_import, unicode_literals
from .celery import app
import time

@app.task
def add(x, y):
    print("计算2个值的和:%s %s" % (x, y))
    return x + y

# A task that takes a while to run
@app.task
def upper(v):
    for i in range(10):
        time.sleep(1)
        print(i)
    return v.upper()

Starting the worker

Pass the package name CeleryPro to -A, and run the command from the parent directory of CeleryPro, otherwise the package cannot be found:

root@ubuntu:/home/toohoo/learncelery# celery -A CeleryPro worker -l info

/usr/local/lib/python2.7/dist-packages/celery/platforms.py:801: RuntimeWarning: You're running the worker with superuser privileges: this is

absolutely not recommended!

Please specify a different user using the --uid option.

User information: uid=0 euid=0 gid=0 egid=0

uid=uid, euid=euid, gid=gid, egid=egid,

-------------- celery@ubuntu v4.3.0 (rhubarb)

---- **** -----

--- * *** * -- Linux-4.15.0-46-generic-x86_64-with-Ubuntu-18.04-bionic 2019-04-02 19:58:17

-- * - **** ---

- ** ---------- [config]

- ** ---------- .> app: CeleryPro:0x7f83f6d77e50

- ** ---------- .> transport: redis://localhost:6379//

- ** ---------- .> results: redis://localhost/

- *** --- * --- .> concurrency: 1 (prefork)

-- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)

--- ***** -----

-------------- [queues]

.> celery exchange=celery(direct) key=celery

[tasks]

. CeleryPro.tasks.add

. CeleryPro.tasks.upper

[2019-04-02 19:58:17,200: INFO/MainProcess] Connected to redis://localhost:6379//

[2019-04-02 19:58:17,362: INFO/MainProcess] mingle: searching for neighbors

[2019-04-02 19:58:18,406: INFO/MainProcess] mingle: all alone

[2019-04-02 19:58:18,445: INFO/MainProcess] celery@ubuntu ready.

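Ways to start a worker

All of these use CeleryPro:

celery -A CeleryPro worker --loglevel=info # foreground (not recommended)

celery -A CeleryPro worker -l info # foreground, short form

celery multi start w1 -A CeleryPro -l info # background (recommended)

Starting in the background prints: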

root@ubuntu:/home/toohoo/learncelery# celery multi start w1 -A CeleryPro -l info

celery multi v4.3.0 (rhubarb)

> Starting nodes...

> w1@ubuntu: OK

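Calling tasks

Tasks are called from the parent directory of CeleryPro as well, and the usage is the same as before.

Everything has to run where the CeleryPro package is importable, which means the parent directory of CeleryPro must be on Python's module search path (sys.path). An interpreter started in that directory already satisfies this. Otherwise, add the parent directory with sys.path.append(). For example:

>>> import sys

>>> dir = "./"

>>> sys.path.append(dir)

>>> from CeleryPro.tasks import add

>>> t = add(3,4)

计算2个值的和: 3 4

>>> print(t)

7

Note that add(3,4) calls the function directly in the current process, which is why the print output appears in this window. To send the task to a worker, use add.delay(3,4) as before.

Think of this as packaging Celery into an application inside your project: everything lives in the CeleryPro folder, and CeleryPro is a package of the project. Since the project's root directory is normally on the module search path when the project starts, using the package from project code should be straightforward.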

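Running multiple workers in the background

Commands:

- celery -A PROJECT worker --loglevel=info: start in the foreground

- celery multi start w1 -A PROJECT -l info: start in the background

- celery multi restart w1 -A PROJECT -l info: restart a background worker

- celery multi stop w1 -A PROJECT -l info: stop a background worker

The difference between the two is that background workers are started through multi.

w1 is the worker's name. Several workers can run in the background, each with its own name.

Even if every worker is down when a task is submitted, the task is kept in the broker until a worker comes up to execute it and return the result.

The original notes also claim that the tasks of a foreground worker are lost when it disconnects, while those of a background worker survive. This was not verified here, and the intended meaning is unclear.

To see how many workers are currently running, ps is one option (celery -A CeleryPro status should also list the workers that respond). Below, three background workers are started and listed with ps, then one is stopped and ps is run again: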

root@ubuntu:/home/toohoo/learncelery# celery multi start w1 -A CeleryPro -l info

celery multi v4.3.0 (rhubarb)

> Starting nodes...

> w1@ubuntu: OK

ERROR: Pidfile (w1.pid) already exists. # w1 was already started during earlier testing

Seems we're already running? (pid: 26973)

root@ubuntu:/home/toohoo/learncelery# celery multi start w2 -A CeleryPro -l info

celery multi v4.3.0 (rhubarb)

> Starting nodes...

> w2@ubuntu: OK

root@ubuntu:/home/toohoo/learncelery# celery multi start w3 -A CeleryPro -l info

celery multi v4.3.0 (rhubarb)

> Starting nodes...

> w3@ubuntu: OK

root@ubuntu:/home/toohoo/learncelery# ps -ef|grep celery

root 26973 63295 0 19:59 ? 00:00:04 /usr/bin/python -m celery worker -l info -A CeleryPro --logfile=w1%I.log --pidfile=w1.pid --hostname=w1@ubuntu

root 26977 26973 0 19:59 ? 00:00:00 /usr/bin/python -m celery worker -l info -A CeleryPro --logfile=w1%I.log --pidfile=w1.pid --hostname=w1@ubuntu

root 45267 63295 1 20:29 ? 00:00:00 /usr/bin/python -m celery worker -l info -A CeleryPro --logfile=w2%I.log --pidfile=w2.pid --hostname=w2@ubuntu

root 45271 45267 0 20:29 ? 00:00:00 /usr/bin/python -m celery worker -l info -A CeleryPro --logfile=w2%I.log --pidfile=w2.pid --hostname=w2@ubuntu

root 45482 63295 1 20:30 ? 00:00:00 /usr/bin/python -m celery worker -l info -A CeleryPro --logfile=w3%I.log --pidfile=w3.pid --hostname=w3@ubuntu

root 45486 45482 0 20:30 ? 00:00:00 /usr/bin/python -m celery worker -l info -A CeleryPro --logfile=w3%I.log --pidfile=w3.pid --hostname=w3@ubuntu

root 45684 87412 0 20:30 pts/3 00:00:00 grep --color=auto celery

root@ubuntu:/home/toohoo/learncelery# celery multi stop w1

celery multi v4.3.0 (rhubarb)

> Stopping nodes...

> w1@ubuntu: TERM -> 26973

root@ubuntu:/home/toohoo/learncelery# ps -ef|grep celery

root 45267 63295 0 20:29 ? 00:00:00 /usr/bin/python -m celery worker -l info -A CeleryPro --logfile=w2%I.log --pidfile=w2.pid --hostname=w2@ubuntu

root 45271 45267 0 20:29 ? 00:00:00 /usr/bin/python -m celery worker -l info -A CeleryPro --logfile=w2%I.log --pidfile=w2.pid --hostname=w2@ubuntu

root 45482 63295 0 20:30 ? 00:00:00 /usr/bin/python -m celery worker -l info -A CeleryPro --logfile=w3%I.log --pidfile=w3.pid --hostname=w3@ubuntu

root 45486 45482 0 20:30 ? 00:00:00 /usr/bin/python -m celery worker -l info -A CeleryPro --logfile=w3%I.log --pidfile=w3.pid --hostname=w3@ubuntu

root 46546 87412 0 20:32 pts/3 00:00:00 grep --color=auto celery

root@ubuntu:/home/toohoo/learncelery#

Windows is not supported (reproduced from the original article, not verified here)

The error message is:

File "g:\steed\documents\pycharmprojects\venv\celery\lib\site-packages\celery\platforms.py", line 429, in detached

raise RuntimeError('This platform does not support detach.')

RuntimeError: This platform does not support detach.

> w1@IDX-xujf: * Child terminated with errorcode 1

FAILED

Looking at the code around line 429 named in the error:

    if not resource:
        raise RuntimeError('This platform does not support detach.')

The code checks resource and raises immediately if it is missing. To see what resource is, search platforms.py for the name:

# resource is assigned near the top of the file
resource = try_import('resource')

# try_import is defined in another file
def try_import(module, default=None):
    """Try to import and return module, or return
    None if the module does not exist."""
    try:
        return importlib.import_module(module)
    except ImportError:
        return default

# the comment in this function shows that resource being None means Windows
def get_fdmax(default=None):
    """Return the maximum number of open file descriptors
    on this system.

    :keyword default: Value returned if there's no file
                      descriptor limit.

    """
    try:
        return os.sysconf('SC_OPEN_MAX')
    except:
        pass
    if resource is None:  # Windows
        return default
    fdmax = resource.getrlimit(resource.RLIMIT_NOFILE)[1]
    if fdmax == resource.RLIM_INFINITY:
        return default
    return fdmax

In other words, the code tries to import the resource module, which exists only on Unix.

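Periodic tasks

Periodic tasks differ considerably between Celery 3 and Celery 4, and version 4 offers more ways to define them.

Celery3

Keep the two tasks from before. Only some configuration (conf) has to be added to celery.py to schedule them.

In Celery 3 the app.conf setting names are all uppercase and case-sensitive, so lowercase names do not work: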

# CeleryPro/tasks.py
# -*- coding: utf-8 -*-
from __future__ import absolute_import, unicode_literals
from .celery import app
import time

@app.task
def add(x, y):
    print("计算2个值的和: %s %s" % (x, y))
    return x+y

@app.task
def upper(v):
    for i in range(10):
        time.sleep(1)
        print(i)
    return v.upper()

# CeleryPro/celery.py
from __future__ import absolute_import, unicode_literals
from celery import Celery
from celery.schedules import crontab
from datetime import timedelta

app = Celery('CeleryPro',
             broker='redis://192.168.246.11',
             backend='redis://192.168.246.11',
             include=['CeleryPro.tasks'])

app.conf.CELERYBEAT_SCHEDULE = {
    'add every 10 seconds': {
        'task': 'CeleryPro.tasks.add',
        'schedule': timedelta(seconds=10),  # a timedelta object works
        # 'schedule': 10,  # a plain number of seconds also works
        'args': (1, 2)
    },
    'upper every 2 minutes': {
        'task': 'CeleryPro.tasks.upper',
        'schedule': crontab(minute='*/2'),
        'args': ('abc', ),
    },
}

# app.conf.CELERY_TIMEZONE = 'UTC'
app.conf.CELERY_TIMEZONE = 'Asia/Shanghai'

# Optional configuration, see the application user guide.
app.conf.update(
    CELERY_TASK_RESULT_EXPIRES=3600,
)

if __name__ == '__main__':
    app.start()

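The result expiry is set with CELERY_TASK_RESULT_EXPIRES=3600. The default is one day. According to the official documentation, a built-in periodic task removes results older than this limit:

A built-in periodic task will delete the results after this time (celery.task.backend_cleanup).

With the configuration in place, start the worker and then Beat. (I have Celery 4.x installed, where this configuration fails with an error, and I did not verify Celery 3.x on Ubuntu. The commands below are reproduced from the original article.)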

G:\Steed\Documents\PycharmProjects\Celery>G:\Steed\Documents\PycharmProjects\venv\Celery\Scripts\celery.exe -A CeleryPro worker -l info

G:\Steed\Documents\PycharmProjects\Celery>G:\Steed\Documents\PycharmProjects\venv\Celery\Scripts\celery.exe -A CeleryPro beat -l info

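Setting names are all uppercase in version 3. Version 4 switched to lowercase, and many names changed as well. The mapping between old and new names is documented here:

http://docs.celeryproject.org/en/latest/userguide/configuration.html#configuration

Under Celery 4.x, mixing old-style and new-style setting names fails with this error: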

...

celery.exceptions.ImproperlyConfigured:

Cannot mix new setting names with old setting names, please

rename the following settings to use the old format:

result_expires -> CELERY_TASK_RESULT_EXPIRES

Or change all of the settings to use the new format :)
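The renaming that the error message asks for can be illustrated with a small lookup table. This is a sketch rather than anything Celery ships: the table lists only the settings that appear in this walkthrough, and the helper name to_new_names is made up for the example.

```python
# Old (Celery 3) setting names mapped to their Celery 4 equivalents.
# Only the names used in this walkthrough are listed.
OLD_TO_NEW = {
    "BROKER_URL": "broker_url",
    "CELERY_RESULT_BACKEND": "result_backend",
    "CELERY_TASK_RESULT_EXPIRES": "result_expires",
    "CELERY_TIMEZONE": "timezone",
    "CELERYBEAT_SCHEDULE": "beat_schedule",
}


def to_new_names(conf):
    """Return a copy of `conf` with known old-style keys renamed to the new style."""
    return {OLD_TO_NEW.get(key, key): value for key, value in conf.items()}


if __name__ == "__main__":
    mixed = {"CELERY_TIMEZONE": "Asia/Shanghai", "result_expires": 3600}
    print(to_new_names(mixed))
```

Converting every key to one style before handing the configuration to the app is one way to avoid the "Cannot mix new setting names with old setting names" error.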

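Celery4

The advantage of the newer version is that periodic tasks can be defined separately, just like ordinary tasks, using the @app.on_after_configure.connect decorator, which version 3 does not have.

Writing the code

Create a separate .py file to hold the periodic tasks: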

# CeleryPro/periodic4.py
# -*- coding: utf-8 -*-
from __future__ import absolute_import, unicode_literals
from .celery import app
from celery.schedules import crontab

@app.on_after_configure.connect
def setup_periodic_tasks(sender, **kwargs):
    # Run every 10 seconds
    sender.add_periodic_task(10.0, hello.s(), name='hello every 10')  # name the schedule entry

    # Run every 30 seconds
    sender.add_periodic_task(30, upper.s('abcdefg'), expires=10)  # the task expires after 10 seconds

    # Same syntax as a Linux crontab entry
    sender.add_periodic_task(
        crontab(hour='*', minute='*/2', day_of_week='*'),
        add.s(11, 22),
    )

@app.task
def hello():
    print('Hello World')

@app.task
def upper(arg):
    return arg.upper()

@app.task
def add(x, y):
    print("计算2个值的和: %s %s" % (x, y))
    return x+y

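Three schedules are defined above.

The name argument names the schedule entry, and that name is used in the log, for example: hello every 10. Without it, the entry is shown as the task call, for example: CeleryPro.periodic4.upper('abcdefg').

The expires argument sets an expiry on the task: a task that has not started within that time is discarded rather than executed (not tested here).

Modify the earlier celery.py and add the new task file to the include list.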

# -*- coding: utf-8 -*-
from __future__ import absolute_import, unicode_literals
from celery import Celery

app = Celery('CeleryPro',
             broker='redis://localhost',
             backend='redis://localhost',
             include=['CeleryPro.tasks', 'CeleryPro.periodic4'])

app.conf.timezone = 'UTC'  # schedules default to UTC; in Celery 4 the setting is named timezone
# app.conf.timezone = 'Asia/Shanghai'

# Optional configuration, see the application user guide.
app.conf.update(
    result_expires=3600,
)

if __name__ == '__main__':
    app.start()

Starting the worker

Start it the same way as before:

root@ubuntu:/home/toohoo/learncelery# celery -A CeleryPro worker -l info

/usr/local/lib/python2.7/dist-packages/celery/platforms.py:801: RuntimeWarning: You're running the worker with superuser privileges: this is

absolutely not recommended!

Please specify a different user using the --uid option.

User information: uid=0 euid=0 gid=0 egid=0

uid=uid, euid=euid, gid=gid, egid=egid,

-------------- celery@ubuntu v4.3.0 (rhubarb)

---- **** -----

--- * *** * -- Linux-4.15.0-46-generic-x86_64-with-Ubuntu-18.04-bionic 2019-04-02 21:36:14

-- * - **** ---

- ** ---------- [config]

- ** ---------- .> app: CeleryPro:0x7f5e8d0fce50

- ** ---------- .> transport: redis://localhost:6379//

- ** ---------- .> results: redis://localhost/

- *** --- * --- .> concurrency: 1 (prefork)

-- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)

--- ***** -----

-------------- [queues]

.> celery exchange=celery(direct) key=celery

[tasks]

. CeleryPro.periodic4.add

. CeleryPro.periodic4.hello

. CeleryPro.periodic4.upper

. CeleryPro.tasks.add

. CeleryPro.tasks.upper

[2019-04-02 21:36:16,633: INFO/MainProcess] Connected to redis://localhost:6379//

[2019-04-02 21:36:16,729: INFO/MainProcess] mingle: searching for neighbors

[2019-04-02 21:36:17,787: INFO/MainProcess] mingle: sync with 2 nodes

[2019-04-02 21:36:17,856: INFO/MainProcess] mingle: sync complete

[2019-04-02 21:36:18,189: INFO/MainProcess] celery@ubuntu ready.

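In the [tasks] list, the new periodic tasks appear alongside the earlier tasks.

Starting Beat

Here -A is given the full module path CeleryPro.periodic4, which differs slightly from the worker's argument: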

root@ubuntu:/home/toohoo/learncelery# celery -A CeleryPro.periodic4 beat -l info

celery beat v4.3.0 (rhubarb) is starting.

__ - ... __ - _

LocalTime -> 2019-04-02 21:40:42

Configuration ->

. broker -> redis://localhost:6379//

. loader -> celery.loaders.app.AppLoader

. scheduler -> celery.beat.PersistentScheduler

. db -> celerybeat-schedule

. logfile -> [stderr]@%INFO

. maxinterval -> 5.00 minutes (300s)

[2019-04-02 21:40:42,255: INFO/MainProcess] beat: Starting...

[2019-04-02 21:40:52,603: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:41:02,585: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:41:12,582: INFO/MainProcess] Scheduler: Sending due task CeleryPro.periodic4.upper(u'abcdefg') (CeleryPro.periodic4.upper)

[2019-04-02 21:41:12,592: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:41:22,593: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:41:32,593: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:41:42,582: INFO/MainProcess] Scheduler: Sending due task CeleryPro.periodic4.upper(u'abcdefg') (CeleryPro.periodic4.upper)

[2019-04-02 21:41:42,593: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:41:52,593: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:42:00,000: INFO/MainProcess] Scheduler: Sending due task CeleryPro.periodic4.add(11, 22) (CeleryPro.periodic4.add)

[2019-04-02 21:42:02,593: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:42:12,582: INFO/MainProcess] Scheduler: Sending due task CeleryPro.periodic4.upper(u'abcdefg') (CeleryPro.periodic4.upper)

[2019-04-02 21:42:12,595: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:42:22,595: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:42:32,595: INFO/MainProcess] Scheduler: Sending due task hello every 10 (CeleryPro.periodic4.hello)

[2019-04-02 21:42:42,583: INFO/MainProcess] Scheduler: Sending due task CeleryPro.periodic4.upper(u'abcdefg') (CeleryPro.periodic4.upper)

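After startup, Beat sends each of the two interval-based tasks to the worker once its first interval has elapsed (10 and 30 seconds here), and keeps sending them at those intervals.

The task scheduled with crontab is sent only when the clock matches. Beat was started at 21:40:42, and the crontab task was first sent at 21:42:00.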
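The crontab timing in the Beat log can be reproduced with a short stand-alone calculation. The sketch below is a simplified model and not Celery's scheduler: it only handles a minute='*/step' rule with every other field left at '*', and next_minute_match is a name invented for this example.

```python
from datetime import datetime, timedelta


def next_minute_match(now, step):
    """Return the first whole minute after `now` whose minute value is divisible by `step`.

    Simplified model of crontab(minute='*/step') with all other fields set to '*'.
    """
    candidate = now.replace(second=0, microsecond=0) + timedelta(minutes=1)
    while candidate.minute % step != 0:
        candidate += timedelta(minutes=1)
    return candidate


if __name__ == "__main__":
    started = datetime(2019, 4, 2, 21, 40, 42)  # Beat start time from the log
    print(next_minute_match(started, 2))
```

For the start time taken from the log, the function returns 21:42:00, the moment at which the add(11, 22) task was sent.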

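The old approach still works

As noted above, the main addition in the newer version is a decorator. Without it, periodic tasks can still be declared in the configuration: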

# CeleryPro/celery.py
from __future__ import absolute_import, unicode_literals
from celery import Celery

app = Celery('CeleryPro',
             broker='redis://localhost',
             backend='redis://localhost',
             include=['CeleryPro.tasks'])

app.conf.beat_schedule = {
    'every 5 seconds': {
        'task': 'CeleryPro.tasks.upper',
        'schedule': 5,
        'args': ('xyz',)
    }
}

# Optional configuration, see the application user guide.
app.conf.update(
    result_expires=3600,
)

if __name__ == '__main__':
    app.start()

This schedules an ordinary task through the configuration. CeleryPro.periodic4 has been removed from include here, though leaving it in does no harm.

The task file tasks.py is unchanged:

# CeleryPro/tasks.py
# -*- coding: utf-8 -*-
from __future__ import absolute_import, unicode_literals
from .celery import app
import time

@app.task
def add(x, y):
    print("计算2个值的和: %s %s" % (x, y))
    return x+y

@app.task
def upper(v):
    for i in range(10):
        time.sleep(1)
        print(i)
    return v.upper()

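Finally, start the worker and then Beat to try it out:

celery -A CeleryPro beat -l info

Passing CeleryPro to Beat's -A is enough to start the schedule defined here, and CeleryPro.tasks has the same effect. If periodic4.py is added back to the include list and Beat is started with CeleryPro.periodic4, the schedule defined here runs as well.

crontab is supported here too and works as before: set the schedule value to a crontab(...) call.

Summary

Each of the two ways to define periodic tasks has its use.

Changing the function that a task executes always requires editing the code and restarting the worker.

The point here is changing the schedule (adding, removing, or changing intervals) while the task function stays the same. With the @app.on_after_configure.connect decorator the schedule is hard-coded in a function, and there seems to be no way to add entries dynamically. The benefit is a clearer structure.

With the second approach, it is enough to update the app.conf.beat_schedule dictionary and restart Beat for the change to take effect.

crontab examples

Some crontab examples: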

| Example | Meaning |
|---|---|
| crontab() | Execute every minute. |
| crontab(minute=0, hour=0) | Execute daily at midnight. |
| crontab(minute=0, hour='*/3') | Execute every three hours: midnight, 3am, 6am, 9am, noon, 3pm, 6pm, 9pm. |
| crontab(minute=0, hour='0,3,6,9,12,15,18,21') | Same as previous. |
| crontab(minute='*/15') | Execute every 15 minutes. |
| crontab(day_of_week='sunday') | Execute every minute (!) on Sundays. |
| crontab(minute='*', hour='*', day_of_week='sun') | Same as previous. |
| crontab(minute='*/10', hour='3,17,22', day_of_week='thu,fri') | Execute every ten minutes, but only between 3-4 am, 5-6 pm and 10-11 pm on Thursdays or Fridays. |
| crontab(minute=0, hour='*/2,*/3') | Execute every even hour, and every hour divisible by three. This means: at every hour except 1am, 5am, 7am, 11am, 1pm, 5pm, 7pm, 11pm. |
| crontab(minute=0, hour='*/5') | Execute at every hour divisible by 5. This means that it is triggered at 3pm, not 5pm (since 3pm equals the 24-hour clock value of "15", which is divisible by 5). |
| crontab(minute=0, hour='*/3,8-17') | Execute every hour divisible by 3, and every hour during office hours (8am-5pm). |
| crontab(0, 0, day_of_month='2') | Execute on the second day of every month. |
| crontab(0, 0, day_of_month='2-30/2') | Execute on every even numbered day. |
| crontab(0, 0, day_of_month='1-7,15-21') | Execute on the first and third weeks of the month. |
| crontab(0, 0, day_of_month='11', month_of_year='5') | Execute on the 11th of May every year. |
| crontab(0, 0, month_of_year='*/3') | Execute every day in the first month of every quarter. |
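To check rows of the table by hand, a field expression can be expanded into the concrete values it matches. The function below is a simplified re-implementation written for illustration and is not Celery's own parser: expand_field is a hypothetical name, and it supports only '*', single numbers, 'a-b' ranges, an optional '/step' suffix, and comma-separated parts.

```python
def expand_field(spec, low, high):
    """Expand a crontab-style field such as '*/3,8-17' into a sorted list of ints.

    `low` and `high` are the inclusive bounds of the field (0 and 23 for hours).
    A step applied to '*' counts from `low`.
    """
    values = set()
    for part in spec.split(","):
        base, _, step = part.partition("/")
        step = int(step) if step else 1
        if base == "*":
            start, end = low, high
        elif "-" in base:
            start, end = (int(x) for x in base.split("-"))
        else:
            start = end = int(base)
        values.update(range(start, end + 1, step))
    return sorted(values)


if __name__ == "__main__":
    print(expand_field("*/5", 0, 23))
    skipped = sorted(set(range(24)) - set(expand_field("*/2,*/3", 0, 23)))
    print(skipped)
```

Expanding '*/5' over the hours 0 to 23 gives 0, 5, 10, 15 and 20, which is why the rule fires at 3pm (hour 15) rather than at 5pm (hour 17).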

Solar schedules

Version 4 also offers this way to specify a schedule:

If you have a task that should be executed according to sunrise, sunset, dawn or dusk, you can use the solar schedule type:

All solar events are calculated in UTC, so the timezone setting does not affect them. The example from the official documentation:

from celery.schedules import solar

app.conf.beat_schedule = {
    # Executes at sunset in Melbourne
    'add-at-melbourne-sunset': {
        'task': 'tasks.add',
        'schedule': solar('sunset', -37.81753, 144.96715),
        'args': (16, 16),
    },
}

solar takes three arguments: the event, the latitude, and the longitude. The sign conventions for latitude and longitude are:

| Sign | Argument | Meaning |
|---|---|---|
| + | latitude | North |
| - | latitude | South |
| + | longitude | East |
| - | longitude | West |
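Coordinates are often written with a hemisphere letter instead of a sign, so a tiny conversion helper can apply the sign convention above. This is an illustrative sketch only, and signed_coordinate is a hypothetical name that is not part of Celery.

```python
def signed_coordinate(degrees, hemisphere):
    """Convert an unsigned coordinate plus hemisphere letter to a signed value.

    North and East are positive, South and West are negative.
    """
    hemisphere = hemisphere.upper()
    if hemisphere not in ("N", "S", "E", "W"):
        raise ValueError("hemisphere must be one of N, S, E, W")
    sign = -1.0 if hemisphere in ("S", "W") else 1.0
    return sign * abs(degrees)


if __name__ == "__main__":
    # Melbourne, as used in the solar example: 37.81753 S, 144.96715 E
    print(signed_coordinate(37.81753, "S"), signed_coordinate(144.96715, "E"))
```

The two values printed for Melbourne match the latitude and longitude arguments passed to solar('sunset', ...) in the official example.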

The supported event types are:

| Event | Meaning |
|---|---|
| dawn_astronomical | Execute at the moment after which the sky is no longer completely dark. This is when the sun is 18 degrees below the horizon. |
| dawn_nautical | Execute when there's enough sunlight for the horizon and some objects to be distinguishable; formally, when the sun is 12 degrees below the horizon. |
| dawn_civil | Execute when there's enough light for objects to be distinguishable so that outdoor activities can commence; formally, when the sun is 6 degrees below the horizon. |
| sunrise | Execute when the upper edge of the sun appears over the eastern horizon in the morning. |
| solar_noon | Execute when the sun is highest above the horizon on that day. |
| sunset | Execute when the trailing edge of the sun disappears over the western horizon in the evening. |
| dusk_civil | Execute at the end of civil twilight, when objects are still distinguishable and some stars and planets are visible. Formally, when the sun is 6 degrees below the horizon. |
| dusk_nautical | Execute when the sun is 12 degrees below the horizon. Objects are no longer distinguishable, and the horizon is no longer visible to the naked eye. |
| dusk_astronomical | Execute at the moment after which the sky becomes completely dark; formally, when the sun is 18 degrees below the horizon. |

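Best practice for using Celery with Django

In a Django project the Celery configuration can go directly into Django's settings.py. Task functions go into a tasks.py file inside each app's directory. Every app can have its own tasks.py, and all tasks are shared.

Creating the directory structure

Create a Django project named CeleryDjango with an app named app01. Then create a CeleryPro directory inside the project directory, containing a celery.py file. The layout is: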

toohoo@ubuntu:/home/toohoo/PycharmProjects/CeleryDjango$ tree -L 2

.

|-- CeleryDjango

| |-- __init__.py

| |-- __pycache__

| |-- settings.py

| |-- urls.py

| `-- wsgi.py

|-- CeleryPro

| |-- __init__.py

| `-- celery.py

|-- app01

| |-- __init__.py

| |-- admin.py

| |-- apps.py

| |-- migrations

| |-- models.py

| |-- tests.py

| `-- views.py

|-- manage.py

|-- templates

`-- venv

|-- bin

|-- include

|-- lib

|-- lib64 -> lib

|-- pip-selfcheck.json

`-- pyvenv.cfg

11 directories, 15 files

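Only the structure and location of CeleryPro matter above. Everything else is what Django generates by default.

CeleryPro/celery.py creates the Celery instance.

CeleryPro/__init__.py makes sure Celery is loaded when Django starts, so that the @shared_task decorator used in the apps binds to this Celery app.

CeleryPro example code

The reference example files are in the official GitHub repository. Versions 3 and 4 differ slightly.

Latest: https://github.com/celery/celery/tree/master/examples/django

Version 3.1: https://github.com/celery/celery/tree/3.1/examples/django

Other versions can be viewed by switching branches on GitHub.

The test environment below is Ubuntu 18.04.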

#CeleryDjango/CeleryPro/__init__.py

#!/usr/bin/env python3

#-*-encoding:UTF-8-*-

from __future__ import absolute_import,unicode_literals

__author__ = 'toohoo'

#This will make sure the app is always imported when Django starts

#so that shared_task will use this app

from .celery import app as celery_app

__all__ = ('celery_app',)

#CeleryDjango/CeleryPro/celery.py
#!/usr/bin/env python3
# -*-encoding:UTF-8-*-
from __future__ import absolute_import, unicode_literals

import os

from celery import Celery

# set the default Django settings module for the 'celery' program
os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'CeleryDjango.settings')

from django.conf import settings  # noqa

app = Celery('CeleryPro')

# Using a string here means the worker will not have to
# pickle the object when using Windows.
# namespace='CELERY' means Celery settings in settings.py need the CELERY_ prefix.
app.config_from_object('django.conf:settings', namespace='CELERY')

# Auto-discover the tasks in every app.
# app.autodiscover_tasks(lambda: settings.INSTALLED_APPS)  # form used by the official 3.1 example
app.autodiscover_tasks()  # form used by the 4.x example; the broker is chosen in settings.py, not here

# However, with the newer Django INSTALLED_APPS style the tasks may not be discovered;
# this problem may show up on Windows.
'''
# This is the INSTALLED_APPS part of settings.py
INSTALLED_APPS = [
    'django.contrib.admin',
    'django.contrib.auth',
    'django.contrib.contenttypes',
    'django.contrib.sessions',
    'django.contrib.messages',
    'django.contrib.staticfiles',
    'app01.apps.App01Config',  # with this form, auto-discovery may not find the tasks
    # 'app01',  # with this form, auto-discovery works
]
'''
# Or, if you do not want to change settings.INSTALLED_APPS, pass the app names yourself as a list
# app.autodiscover_tasks(['app01'])  # this is what I did


@app.task(bind=True)
def debug_task(self):
    print('Request:{0!r}'.format(self.request))

This pitfall appears to be specific to Windows; see the comments in the code above.
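If you would rather keep the AppConfig-style entries in INSTALLED_APPS, one option is to compute the package list that gets passed to app.autodiscover_tasks(...). The sketch below is only an illustration: the helper name app_packages is hypothetical, and it assumes the common convention that each AppConfig class lives in <app>/apps.py.

```python
def app_packages(installed_apps):
    """Map INSTALLED_APPS entries to plain package names.

    Assumption: AppConfig classes follow the '<package>.apps.<ClassName>'
    convention; any other entry is returned unchanged.
    """
    packages = []
    for entry in installed_apps:
        package, sep, _class_name = entry.partition('.apps.')
        packages.append(package if sep else entry)
    return packages


INSTALLED_APPS = [
    'django.contrib.admin',
    'app01.apps.App01Config',
]

print(app_packages(INSTALLED_APPS))  # ['django.contrib.admin', 'app01']
```

In celery.py the result could then be used as app.autodiscover_tasks(app_packages(settings.INSTALLED_APPS)); packages without a tasks module are simply skipped.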

Task file: tasks

Create a tasks.py file in the app (in the same directory as models.py) and define the tasks there.

- app01/

- app01/tasks.py

- app01/models.py

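The functions in tasks.py use the @shared_task decorator. It defines a task without importing a concrete Celery app instance, so these tasks can be shared by all apps.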

#CeleryDjango/app01/tasks.py
#!/usr/bin/env python3
# -*-encoding:UTF-8-*-
# Create your tasks here
from __future__ import absolute_import, unicode_literals

from celery import shared_task


@shared_task
def add(x, y):
    return x + y


@shared_task
def mul(x, y):
    return x * y


@shared_task
def xsum(numbers):
    return sum(numbers)

Configuring settings.py

This is Django's configuration file, but the Celery configuration can now be written here as well:

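#CeleryDjango/CeleryDjango/settings.py
# Everything else is Django configuration and is omitted here

# Celery settings
# celery.py loads these with namespace='CELERY', so each name needs the CELERY_ prefix;
# a bare BROKER_URL is ignored and the worker falls back to the default amqp:// broker.
CELERY_BROKER_URL = 'redis://localhost'
CELERY_RESULT_BACKEND = 'redis://localhost'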

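Only the most basic settings are made here: Redis receives the tasks and stores the task results, and everything else keeps its default value.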
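Since celery.py calls config_from_object(..., namespace='CELERY'), only settings whose names start with CELERY_ are picked up. The following is a simplified illustration of that filtering, not Celery's actual implementation; the function name namespaced_settings is hypothetical.

```python
def namespaced_settings(settings, namespace='CELERY'):
    """Keep only keys starting with '<namespace>_' and strip the prefix."""
    prefix = namespace + '_'
    return {
        key[len(prefix):].lower(): value
        for key, value in settings.items()
        if key.startswith(prefix)
    }


django_settings = {
    'DEBUG': True,
    'BROKER_URL': 'redis://localhost',             # no prefix: not picked up
    'CELERY_RESULT_BACKEND': 'redis://localhost',  # picked up as result_backend
}

print(namespaced_settings(django_settings))
# {'result_backend': 'redis://localhost'}
```

This is why the broker setting has to be spelled CELERY_BROKER_URL for the worker to use Redis; otherwise the worker falls back to the default amqp:// transport.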

Starting the Worker

The start command is the same as before; what matters is the argument after -A:

toohoo@ubuntu:~/PycharmProjects/CeleryDjango$ celery -A CeleryPro worker -l info

-------------- celery@ubuntu v4.3.0 (rhubarb)

---- **** -----

--- * *** * -- Linux-4.15.0-46-generic-x86_64-with-Ubuntu-18.04-bionic 2019-04-03 13:41:20

-- * - **** ---

- ** ---------- [config]

- ** ---------- .> app: CeleryPro:0x7f91be91f160

- ** ---------- .> transport: amqp://guest:**@localhost:5672//

- ** ---------- .> results: redis://localhost/

- *** --- * --- .> concurrency: 1 (prefork)

-- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)

--- ***** -----

-------------- [queues]

.> celery exchange=celery(direct) key=celery

[tasks]

. CeleryPro.celery.debug_task

. app01.tasks.add

. app01.tasks.mul

. app01.tasks.xsum

[2019-04-03 13:41:21,197: INFO/MainProcess] Connected to amqp://guest:**@127.0.0.1:5672//

[2019-04-03 13:41:21,251: INFO/MainProcess] mingle: searching for neighbors

[2019-04-03 13:41:22,328: INFO/MainProcess] mingle: all alone

[2019-04-03 13:41:22,412: WARNING/MainProcess] /home/toohoo/PycharmProjects/CeleryDjango/venv/lib/python3.6/site-packages/celery/fixups/django.py:202: UserWarning: Using settings.DEBUG leads to a memory leak, never use this setting in production environments!

warnings.warn('Using settings.DEBUG leads to a memory leak, never '

[2019-04-03 13:41:22,413: INFO/MainProcess] celery@ubuntu ready.

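If the output looks like the above, the Worker started successfully; just confirm that every task is listed under [tasks]. Note that this first run reports transport: amqp://, Celery's default broker, which means the Redis broker setting was not picked up. Because celery.py uses namespace='CELERY', the setting has to be named CELERY_BROKER_URL. The later run further down shows redis:// as the transport.

Next, call the task from a web page: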

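URL routing

The design calls for two URLs: one submits the task, and the other fetches the task result by its UUID. The code below registers only the first one, task_test. Its view has no HTML template and returns the result directly with HttpResponse: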

# CeleryDjango/CeleryDjango/urls.py
from django.contrib import admin
from django.urls import path

from app01 import views

urlpatterns = [
    path('admin/', admin.site.urls),
    path('task_test', views.task_test),
]

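View function

#CeleryDjango/app01/views.py
from django.shortcuts import render, redirect, HttpResponse

# Create your views here.
from app01 import tasks


def task_test(request):
    # Tested and working
    res = tasks.add.delay(228, 24)  # queue the task; returns an AsyncResult immediately
    print(tasks)
    print("start running task")
    # get() blocks until the worker finishes; fetch once and reuse the value
    result = res.get(timeout=10)
    print("async task res", result)
    return HttpResponse('res %s' % result)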
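The view above blocks until the worker has finished. A two-URL design, where the first request returns a task id at once and a second request fetches the result by that id, can be sketched without Celery. The example below is a stand-in that uses the standard library's concurrent.futures in place of a broker and worker; submit_task and fetch_result are hypothetical names, and in a real project the lookup would go through Celery's AsyncResult instead.

```python
import uuid
from concurrent.futures import ThreadPoolExecutor

executor = ThreadPoolExecutor(max_workers=1)
pending = {}  # task id -> Future; stands in for the result backend


def add(x, y):
    return x + y


def submit_task(func, *args):
    """Queue the call and return an id right away (role of the first URL)."""
    task_id = str(uuid.uuid4())
    pending[task_id] = executor.submit(func, *args)
    return task_id


def fetch_result(task_id, timeout=None):
    """Look the task up by id and return its result (role of the second URL)."""
    return pending[task_id].result(timeout=timeout)


task_id = submit_task(add, 228, 24)
print(fetch_result(task_id, timeout=5))  # 252
```

The point of the split is that the submitting request never waits; only the request that asks for the result does.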

For the next test, start the Worker in a console first so that it prints its log output:

toohoo@ubuntu:~/PycharmProjects/CeleryDjango$ celery -A CeleryPro worker -l info

-------------- celery@ubuntu v4.3.0 (rhubarb)

---- **** -----

--- * *** * -- Linux-4.15.0-47-generic-x86_64-with-Ubuntu-18.04-bionic 2019-04-06 00:39:17

-- * - **** ---

- ** ---------- [config]

- ** ---------- .> app: CeleryPro:0x7faa783040b8

- ** ---------- .> transport: redis://127.0.0.1:6379//

- ** ---------- .> results: redis://127.0.0.1:6379/

- *** --- * --- .> concurrency: 1 (prefork)

-- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)

--- ***** -----

-------------- [queues]

.> celery exchange=celery(direct) key=celery

[tasks]

. CeleryPro.celery.debug_task

. app01.tasks.add

. app01.tasks.mul

. app01.tasks.xsum

[2019-04-06 00:39:18,138: INFO/MainProcess] Connected to redis://127.0.0.1:6379//

[2019-04-06 00:39:18,201: INFO/MainProcess] mingle: searching for neighbors

[2019-04-06 00:39:19,260: INFO/MainProcess] mingle: all alone

[2019-04-06 00:39:19,307: WARNING/MainProcess] /home/toohoo/PycharmProjects/CeleryDjango/venv/lib/python3.6/site-packages/celery/fixups/django.py:202: UserWarning: Using settings.DEBUG leads to a memory leak, never use this setting in production environments!

warnings.warn('Using settings.DEBUG leads to a memory leak, never '

[2019-04-06 00:39:19,309: INFO/MainProcess] celery@ubuntu ready.

[2019-04-06 00:39:19,518: INFO/MainProcess] Received task: app01.tasks.add[e4229647-2202-4d57-88f6-9f417fcddb34]

[2019-04-06 00:39:19,523: INFO/MainProcess] Received task: app01.tasks.add[ffa9c240-c8a4-446b-b76e-ca9b78fb1265]

[2019-04-06 00:39:19,551: INFO/ForkPoolWorker-1] Task app01.tasks.add[e4229647-2202-4d57-88f6-9f417fcddb34] succeeded in 0.025759028000038597s: 252

Visiting http://127.0.0.1:8000/task_test in a browser also returns the result:

res 252

Using periodic tasks in Django

To use periodic tasks in Django, one more module has to be installed at this point:

pip install django_celery_beat

Using django_celery_beat

1. First register it in INSTALLED_APPS in settings:

INSTALLED_APPS = [

......

'django_celery_beat',

]

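Note that the module name contains underscores only, no hyphens.

2. Apply the django_celery_beat database migrations, which create several tables automatically. Running migrate is all that is needed: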

toohoo@ubuntu:~/PycharmProjects/CeleryDjango$ python3 manage.py migrate

Operations to perform:

Apply all migrations: admin, auth, contenttypes, django_celery_beat, sessions

Running migrations:

Applying django_celery_beat.0001_initial... OK

Applying django_celery_beat.0002_auto_20161118_0346... OK

Applying django_celery_beat.0003_auto_20161209_0049... OK

Applying django_celery_beat.0004_auto_20170221_0000... OK

Applying django_celery_beat.0005_add_solarschedule_events_choices_squashed_0009_merge_20181012_1416... OK

Applying django_celery_beat.0006_periodictask_priority... OK

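After logging in to the admin site as a superuser, you will see that 4 tables have been created:

Tasks are all configured in the Periodic tasks table. The other tables hold the different kinds of execution schedules:

Crontabs: schedules written the same way as crontab entries

Intervals: simple fixed-interval schedules, for example run once every few minutes

Periodic tasks: the tasks to execute are configured here.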
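To make the Intervals idea concrete: a fixed-interval schedule only needs the last run time and the period. The sketch below is a simplified model of that check, not django_celery_beat's code; is_due and its parameters are hypothetical names.

```python
from datetime import datetime, timedelta


def is_due(last_run, every, now):
    """Return (due, seconds_until_next_run) for a fixed-interval schedule."""
    next_run = last_run + every
    remaining = (next_run - now).total_seconds()
    if remaining <= 0:
        # Due now; the following run is one full period away.
        return True, every.total_seconds()
    return False, remaining


last_run = datetime(2019, 4, 6, 2, 2, 26)
every = timedelta(seconds=5)

print(is_due(last_run, every, datetime(2019, 4, 6, 2, 2, 28)))  # (False, 3.0)
print(is_due(last_run, every, datetime(2019, 4, 6, 2, 2, 31)))  # (True, 5.0)
```

A crontab schedule differs in that the next run is derived from calendar fields (minute, hour, day) rather than from the time elapsed since the last run.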

Configuring a task

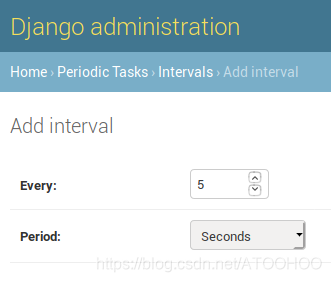

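First open the Intervals table and create a new schedule. Here a schedule of every 5 seconds is created.

Then open the Periodic tasks table, choose the task to execute, and link it to a schedule.

The tasks listed there are the ones registered through auto-discovery; defining tasks in each app's tasks.py is enough for them to show up.

After choosing the task, set exactly one of the schedule fields below it.

The arguments for your task function are entered here as well, and they must be in JSON format.

The JSON is deserialized and then passed to the task function as "*args, **kwargs".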
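As a rough picture of what happens to those fields: the positional arguments are a JSON list and the keyword arguments are a JSON object. The snippet is plain Python rather than django_celery_beat code, and the add function simply mirrors app01.tasks.add without the decorator.

```python
import json


def add(x, y):
    return x + y


# What would be typed into the admin form fields (both are JSON text)
positional_json = '[228, 24]'
keyword_json = '{}'

args = json.loads(positional_json)   # becomes a list
kwargs = json.loads(keyword_json)    # becomes a dict

print(add(*args, **kwargs))  # 252
```

Anything that is not valid JSON, such as a Python tuple literal or single-quoted strings, would fail at the json.loads step.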

That completes the task configuration. The other schedule types work the same way, so they are not tried here.

Starting Beat

A Beat process is still needed to send the tasks on schedule. Start the Worker first, then start Beat with one extra argument, "-S django":

toohoo@ubuntu:~/PycharmProjects/CeleryDjango$ celery -A CeleryPro beat -l info -S django

beat v4.3.0 (rhubarb) is starting.

__ - ... __ - _

LocalTime -> 2019-04-06 02:02:20

Configuration ->

. broker -> redis://127.0.0.1:6379//

. loader -> celery.loaders.app.AppLoader

. scheduler -> django_celery_beat.schedulers.DatabaseScheduler

. logfile -> [stderr]@%INFO

. maxinterval -> 5.00 seconds (5s)

[2019-04-06 02:02:20,464: INFO/MainProcess] beat: Starting...

[2019-04-06 02:02:20,464: INFO/MainProcess] Writing entries...

[2019-04-06 02:02:25,926: INFO/MainProcess] Writing entries...

[2019-04-06 02:02:26,162: INFO/MainProcess] Scheduler: Sending due task mytasks (mytasks)

[2019-04-06 02:02:31,117: INFO/MainProcess] Scheduler: Sending due task mytasks (mytasks)

[2019-04-06 02:02:36,171: INFO/MainProcess] Scheduler: Sending due task mytasks (mytasks)

[2019-04-06 02:02:41,317: INFO/MainProcess] Scheduler: Sending due task mytasks (mytasks)

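Note: every time a task is modified, Beat has to be restarted before the latest configuration takes effect. This matters most for Intervals tasks (those run every so often); Crontab tasks seem to be less affected.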
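As an alternative to passing -S django on every start, Celery's beat_scheduler setting can name the scheduler class. Assuming the configuration is loaded with namespace='CELERY' as in the celery.py shown earlier, the fragment for settings.py would look like this:

```python
# settings.py (fragment)
# With namespace='CELERY', Celery's beat_scheduler setting is spelled
# CELERY_BEAT_SCHEDULER in Django's settings module.
CELERY_BEAT_SCHEDULER = 'django_celery_beat.schedulers:DatabaseScheduler'
```

Beat can then be started with celery -A CeleryPro beat -l info.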

Reference 1: https://www.cnblogs.com/alex3714/articles/6351797.html

Reference 2: https://blog.51cto.com/steed/2292346?source=dra
