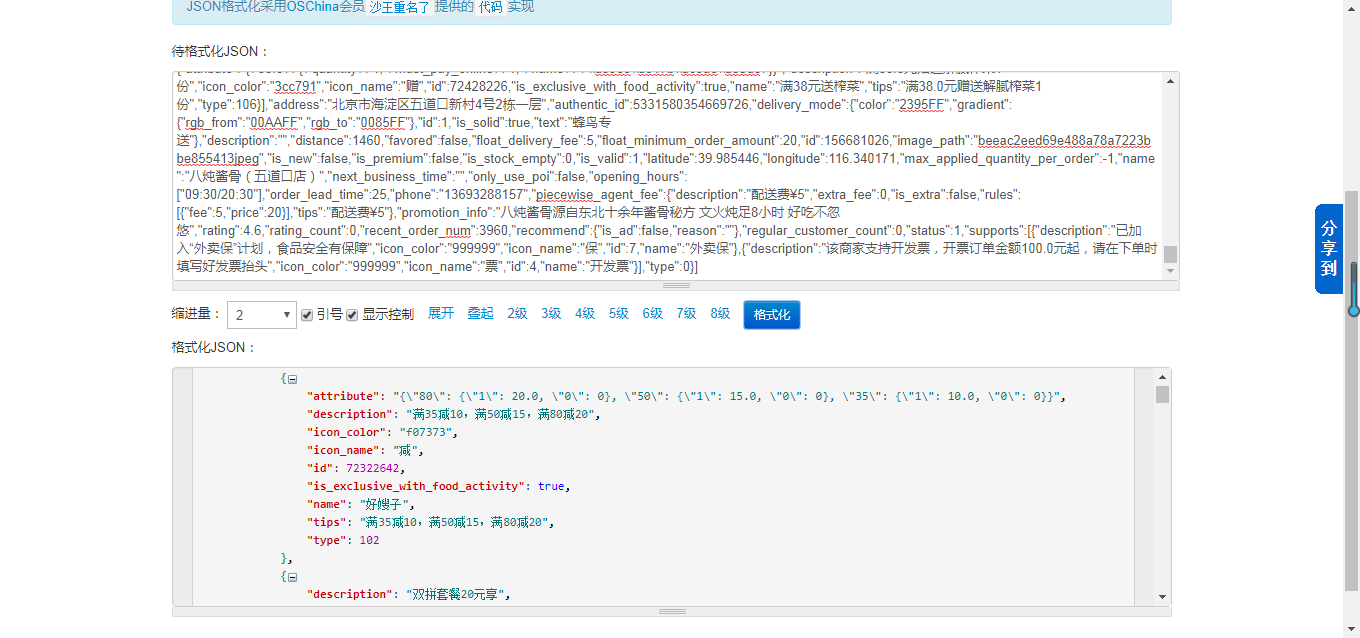

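An online formatter is recommended: http://tool.oschina.net/codeformat/json

Format the JSON data you retrieved.

Pick out the fields we need and write the corresponding entity classes in Java, paying attention to the type of each property.

Writing the entity classes

The shop class: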

    public class Shop {
        private Integer id;               // shop ID
        private String name;              // shop name
        private Float rating;             // shop rating
        private Integer recent_order_num; // total sales of the shop
        private Integer order_lead_time;  // latest delivery time
    }

The info class:

    public class Info {
        private String name;  // category name
        private Food[] foods; // dishes in this category
        private long id;      // category ID
    }

The food class:

    public class Food {
        private Float rating;         // dish rating
        private long restaurant_id;   // ID of the owning restaurant
        private String description;   // description
        private Integer month_sales;  // monthly sales
        private Integer rating_count;
        private String image_path;    // image path
        private String name;          // dish name
        private Integer satisfy_count; // number of satisfied customers
        private Integer satisfy_rate;  // satisfaction rate
        private Specfood[] specfoods;  // dish details
    }

The food detail class (this is the data I actually want):

    public class Specfood {
        private String name;             // dish name
        private Integer restaurant_id;   // ID of the owning restaurant
        private Integer food_id;         // dish ID
        private Float recent_rating;     // recent rating
        private Float price;             // price
        private Float recent_popularity; // recent sales volume
    }

The classes above omit the getters and setters, the constructors, and toString().
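For reference, here is a minimal sketch of what one of these classes might look like with the omitted members written out, using Specfood. The field names mirror the JSON keys because Gson, by default, maps a JSON key to the Java field with the same name. The no-argument constructor and the toString() format are my own assumptions for illustration, not the author's code, and only three of the six getter/setter pairs are shown to keep it short.

```java
// Sketch of the Specfood entity with some of the omitted members filled in.
// The constructor and the toString() format are illustrative assumptions.
class Specfood {
    private String name;             // dish name
    private Integer restaurant_id;   // ID of the owning restaurant
    private Integer food_id;         // dish ID
    private Float recent_rating;     // recent rating
    private Float price;             // price
    private Float recent_popularity; // recent sales volume

    // A no-argument constructor keeps the class easy to instantiate reflectively.
    public Specfood() {
    }

    public String getName() { return name; }
    public void setName(String name) { this.name = name; }

    public Integer getFood_id() { return food_id; }
    public void setFood_id(Integer food_id) { this.food_id = food_id; }

    public Float getPrice() { return price; }
    public void setPrice(Float price) { this.price = price; }

    // The remaining getters and setters follow the same pattern.

    @Override
    public String toString() {
        return "Specfood [name=" + name + ", restaurant_id=" + restaurant_id
                + ", food_id=" + food_id + ", recent_rating=" + recent_rating
                + ", price=" + price + ", recent_popularity=" + recent_popularity + "]";
    }
}
```

The accessor names (getFood_id and so on) match the calls made later in the crawler code.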

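Parsing with Gson

Maven coordinates for Gson:

    <dependency>
        <groupId>com.google.code.gson</groupId>
        <artifactId>gson</artifactId>
        <version>2.8.1</version>
    </dependency>

The following example crawls the shops near the Peking University Health Science Center in Haidian District, Beijing: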

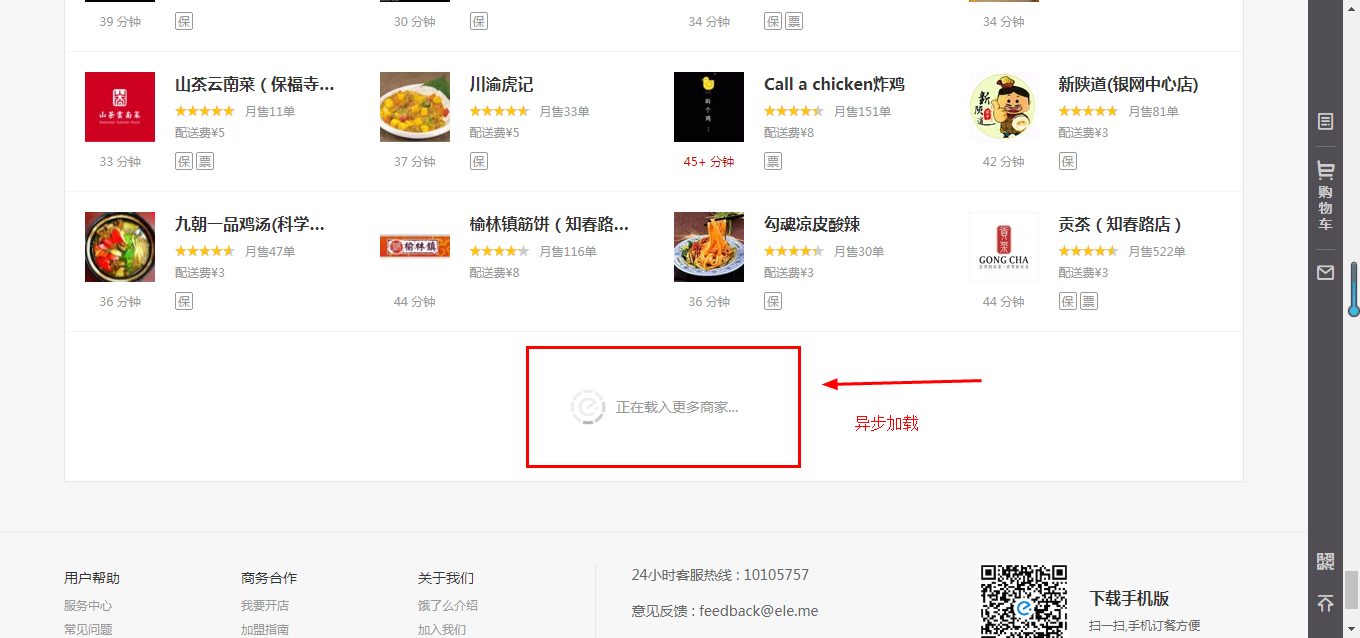

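Because there are so many shops, Ele.me loads them lazily: each time the scrollbar reaches the bottom of the page, one more batch of shops is loaded.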

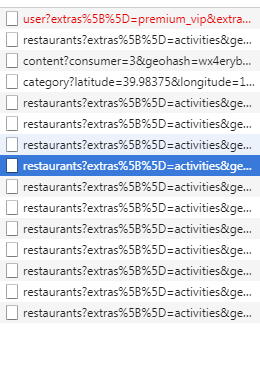

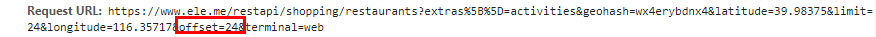

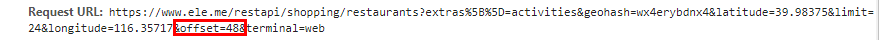

Monitoring the requests in Chrome

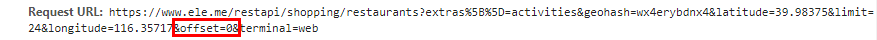

Only the offset parameter changes between requests: it runs from 0 to 624, increasing by 24 each time.
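The offset sequence can be checked with a few lines of Java. This is only a sketch; the class name OffsetPages and the method offsets are hypothetical helpers, not part of the crawler.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: enumerate the offset values used when requesting the shop list.
class OffsetPages {

    // Returns first, first + step, ... up to and including last.
    static List<Integer> offsets(int first, int last, int step) {
        List<Integer> result = new ArrayList<>();
        for (int offset = first; offset <= last; offset += step) {
            result.add(offset);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Integer> all = offsets(0, 624, 24);
        // prints "27 requests, last offset 624"
        System.out.println(all.size() + " requests, last offset " + all.get(all.size() - 1));
    }
}
```

Going from 0 to 624 in steps of 24 gives 27 requests, which is why the crawler's loop runs with i from 0 to 26 and uses i * 24 as the offset.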

    package com.yc.elm.utils;

    import java.io.BufferedWriter;
    import java.io.FileWriter;
    import java.util.List;

    import org.jsoup.Connection;
    import org.jsoup.Connection.Response;
    import org.jsoup.Jsoup;

    import com.google.gson.Gson;
    import com.google.gson.GsonBuilder;
    import com.google.gson.reflect.TypeToken;
    import com.yc.elm.entity.Food;
    import com.yc.elm.entity.Info;
    import com.yc.elm.entity.Shop;
    import com.yc.elm.entity.Specfood;

    public class DataUtil {

        public static void main(String[] args) throws Exception {
            // 27 requests in total: offset = 0, 24, ..., 624
            for (int i = 0; i < 27; i++) {
                String url = "https://www.ele.me/restapi/shopping/restaurants?extras%5B%5D=activities&geohash=wx4erybdnx4&latitude=39.98375&limit=24&longitude=116.35717"
                        + "&offset=" + i * 24 + "&terminal=web";
                // The response is JSON rather than HTML, so tell jsoup not to check the content type
                Connection con = Jsoup.connect(url).ignoreContentType(true);
                Response response = con.execute();
                String str = response.body();
                System.out.println(i);
                Gson gson = new Gson();
                // Parse the JSON array into a list of Shop objects
                List<Shop> shops = gson.fromJson(str, new TypeToken<List<Shop>>() {
                }.getType());
                parseFoods(shops);
            }
        }

        public static void parseFoods(List<Shop> shops) throws Exception {
            // Open data.txt in append mode so every batch of shops adds to the same file
            FileWriter fw = new FileWriter("data.txt", true);
            BufferedWriter bw = new BufferedWriter(fw);
            for (Shop shop : shops) {
                String url = "https://www.ele.me/restapi/shopping/v2/menu?restaurant_id=" + shop.getId();
                Connection con = Jsoup.connect(url).ignoreContentType(true);
                Response response = con.execute();
                String str = response.body();
                Gson gson = new GsonBuilder().create();
                // Shops whose data structure is unusual: stop processing this batch
                if (shop.getId() == 271908 || shop.getId() == 156329101 || shop.getId() == 156277299
                        || shop.getId() == 682323 || shop.getId() == 157034079 || shop.getId() == 154898695
                        || shop.getId() == 1001894 || shop.getId() == 2142009 || shop.getId() == 476592
                        || shop.getId() == 305155 || shop.getId() == 156447071) {
                    break;
                }
                List<Info> infos = null;
                try {
                    infos = gson.fromJson(str, new TypeToken<List<Info>>() {
                    }.getType());
                } catch (Exception e) {
                    throw new RuntimeException(shop.getId() + ": problem with the page!", e);
                }
                // Walk category -> dish -> dish detail and write one tab-separated line per detail
                for (Info info : infos) {
                    Food[] foods = info.getFoods();
                    for (Food food : foods) {
                        Specfood[] specfoods = food.getSpecfoods();
                        for (Specfood specfood : specfoods) {
                            Integer food_id = specfood.getFood_id();
                            String name = specfood.getName();
                            Integer restaurant_id = specfood.getRestaurant_id();
                            Float price = specfood.getPrice();
                            Float recent_rating = specfood.getRecent_rating();
                            Float recent_popularity = specfood.getRecent_popularity();
                            // Keep only records with a valid ID, a name, and a non-zero price
                            if (food_id > 0 && name != null && restaurant_id != 0 && price != 0.0) {
                                String data = food_id + "\t" + name + "\t" + restaurant_id + "\t" + shop.getName() + "\t"
                                        + price + "\t" + recent_rating + "\t" + recent_popularity + "\r\n";
                                bw.append(data);
                                bw.flush();
                            }
                        }
                    }
                }
            }
            bw.close();
        }
    }

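Some shops have an unusual data structure; whenever we hit one of them, we break out of the loop. Note that break also skips the remaining shops in the current batch.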
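If the intent is to skip only the special shops and keep processing the rest of the batch, one option is to keep their IDs in a set and use continue. The sketch below is an alternative under that assumption, not the author's code; the class name ShopFilter and the method crawlable are hypothetical, and it works on plain IDs rather than Shop objects so that it stands alone.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Sketch: skip only the shops with unusual menu data instead of
// stopping at the first one.
class ShopFilter {

    // The shop IDs listed in the crawler's condition.
    static final Set<Integer> SKIPPED_IDS = Set.of(
            271908, 156329101, 156277299, 682323, 157034079, 154898695,
            1001894, 2142009, 476592, 305155, 156447071);

    // Returns the IDs that should still be crawled, in their original order.
    static List<Integer> crawlable(List<Integer> shopIds) {
        List<Integer> result = new ArrayList<>();
        for (Integer id : shopIds) {
            if (SKIPPED_IDS.contains(id)) {
                continue; // skip this shop only; later shops are still processed
            }
            result.add(id);
        }
        return result;
    }

    public static void main(String[] args) {
        // prints "[1, 2]"
        System.out.println(crawlable(List.of(1, 271908, 2)));
    }
}
```

Doing this check before the menu request is sent would also avoid one wasted request per skipped shop.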

There is one more problem: if you request the page too many times, the site treats you as an attacker and refuses access, returning 429 or 430 errors.

    Exception in thread "main" org.jsoup.HttpStatusException: HTTP error fetching URL. Status=429, URL=https://www.ele.me/restapi/shopping/v2/menu?restaurant_id=157108780
        at org.jsoup.helper.HttpConnection$Response.execute(HttpConnection.java:679)
        at org.jsoup.helper.HttpConnection$Response.execute(HttpConnection.java:628)
        at org.jsoup.helper.HttpConnection.execute(HttpConnection.java:260)
        at com.yc.elm.utils.DataUtil.parseFoods(DataUtil.java:51)
        at com.yc.elm.utils.DataUtil.main(DataUtil.java:33)

    Exception in thread "main" org.jsoup.HttpStatusException: HTTP error fetching URL. Status=430, URL=https://www.ele.me/restapi/shopping/restaurants?extras%255B%255D=activities&geohash=wx4erybdnx4&latitude=39.98375&limit=24&longitude=116.35717&offset=0&terminal=web
        at org.jsoup.helper.HttpConnection$Response.execute(HttpConnection.java:679)
        at org.jsoup.helper.HttpConnection$Response.execute(HttpConnection.java:628)
        at org.jsoup.helper.HttpConnection.execute(HttpConnection.java:260)
        at com.yc.elm.utils.DataUtil.main(DataUtil.java:27)

429 Too Many Requests

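This status code is useful when you need to limit the number of requests a client makes to a service, that is, for rate limiting.

Before it existed, there were a few similar status codes, such as '509 Bandwidth Limit Exceeded'. Twitter used 420 (which is not a status code defined by HTTP).

If you want to limit the number of client requests to a service, you can return the 429 status code together with a Retry-After response header that tells the client how long to wait before requesting the service again.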
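On the client side, the Retry-After header can be turned into a waiting time. The following is a minimal sketch under several assumptions: the class name RetryDelay and the method waitMillis are hypothetical, only the delay-in-seconds form of the header is handled (the HTTP-date form falls back to the default), and I have not checked whether Ele.me actually sends this header.

```java
// Sketch: decide how long to wait after a 429 response.
class RetryDelay {

    // Returns the wait in milliseconds. Uses the Retry-After value when it is
    // a non-negative number of seconds; otherwise returns fallbackMillis.
    static long waitMillis(String retryAfterHeader, long fallbackMillis) {
        if (retryAfterHeader == null) {
            return fallbackMillis;
        }
        try {
            long seconds = Long.parseLong(retryAfterHeader.trim());
            return seconds >= 0 ? seconds * 1000L : fallbackMillis;
        } catch (NumberFormatException e) {
            // Not a plain number (for example an HTTP-date): use the fallback
            return fallbackMillis;
        }
    }

    public static void main(String[] args) {
        // prints "wait 2000 ms"
        System.out.println("wait " + waitMillis("2", 5000L) + " ms");
        // prints "wait 5000 ms"
        System.out.println("wait " + waitMillis(null, 5000L) + " ms");
    }
}
```

With jsoup, the header would presumably be read from the response via response.header("Retry-After") after calling ignoreHttpErrors(true) on the connection, so that a 429 is returned as a response instead of being thrown as an HttpStatusException; the crawler would then call Thread.sleep with the computed value before retrying.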

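After a 429, access works again once you wait a while. Once a 430 is returned, however, you have to switch to a different IP address. I did not look further into IP spoofing or proxy configuration, so this problem remains unsolved for now. In my own testing, the code can simulate scrolling down three pages.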

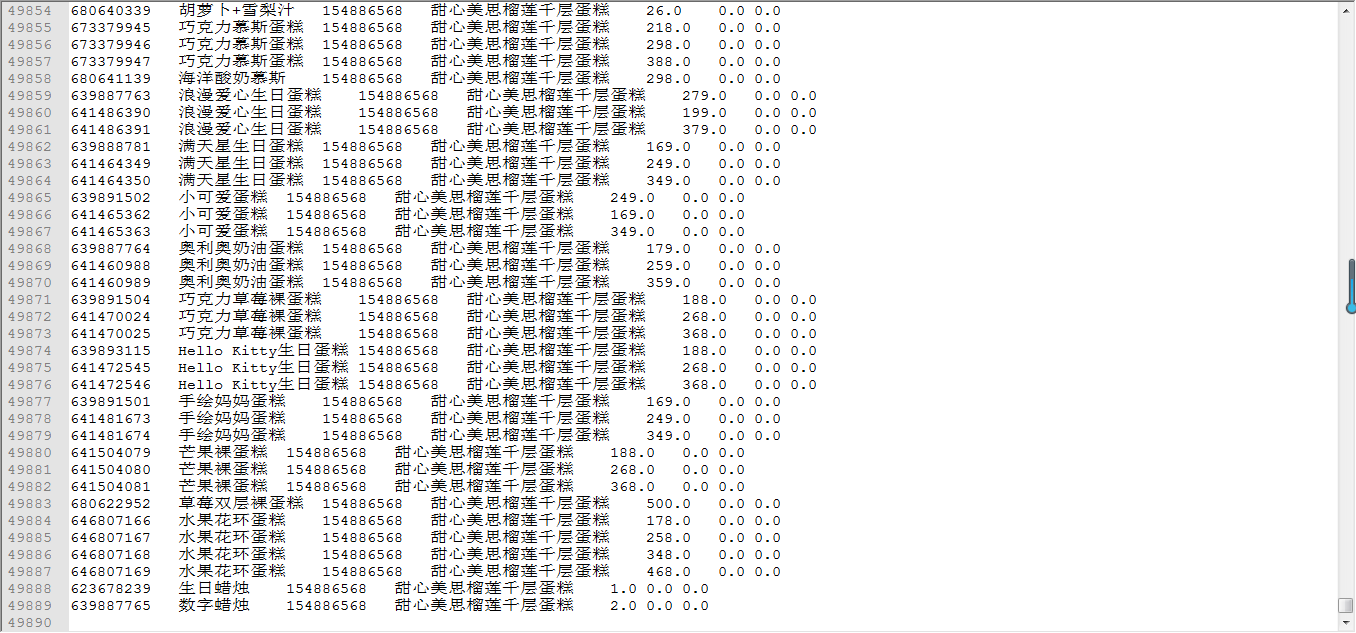

Result:

The amount of data is still too small, but it is a good start.
