# Kafka 3.0

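## Introduction

ZooKeeper stores the Kafka cluster's metadata: which brokers are currently online and which replica is the leader of each partition. Kafka relies on it for cluster metadata management and for electing the controller.

Starting with Kafka 2.8, ZooKeeper is no longer strictly required. Kafka can instead run in KRaft mode, where the brokers manage the metadata themselves through a built-in Raft-based quorum.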

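## Listeners

### listeners

`listeners` sets the listener name, host and port that the broker binds to when it starts. It is a server-side setting. With the default mapping, the listener name is also the security protocol: PLAINTEXT, SSL, SASL_PLAINTEXT or SASL_SSL. All of the examples below use PLAINTEXT and differ only in the host part:

```properties
# Empty host: bind to the default network interface
listeners=PLAINTEXT://:9092

# Bind to a specific IP address
listeners=PLAINTEXT://192.168.1.10:9092

# Bind to the address a hostname resolves to
listeners=PLAINTEXT://hostname:9092

# 0.0.0.0: bind to all network interfaces
listeners=PLAINTEXT://0.0.0.0:9092
```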

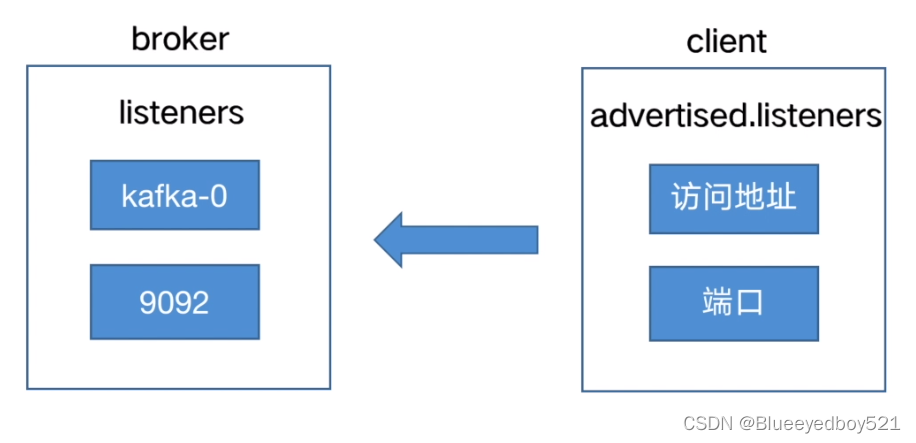
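The following sketch makes the host forms concrete. `parse_listener` is a hypothetical POSIX shell helper, not a Kafka tool. It splits one listener entry into name, host and port and reports which interfaces that host form binds to.

```bash
# Hypothetical helper (not a Kafka tool): split a listener entry such as
# "PLAINTEXT://192.168.1.10:9092" into name, host and port, and describe
# which interfaces the host part binds to.
parse_listener() {
  entry=$1
  name=${entry%%://*}   # part before "://", e.g. PLAINTEXT
  rest=${entry#*://}    # part after "://", e.g. 192.168.1.10:9092
  host=${rest%:*}       # everything before the last ":"
  port=${rest##*:}      # everything after the last ":"
  case $host in
    "")      scope="default interface" ;;
    0.0.0.0) scope="all interfaces" ;;
    *)       scope="interface for $host" ;;
  esac
  printf '%s %s %s (%s)\n' "$name" "${host:-<empty>}" "$port" "$scope"
}
```

For example, `parse_listener "PLAINTEXT://:9092"` prints `PLAINTEXT <empty> 9092 (default interface)`.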

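### advertised.listeners

`advertised.listeners` is the externally published address (IP or host and port). The broker registers it in ZooKeeper and hands it to clients, so it must be an address the clients can actually reach.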

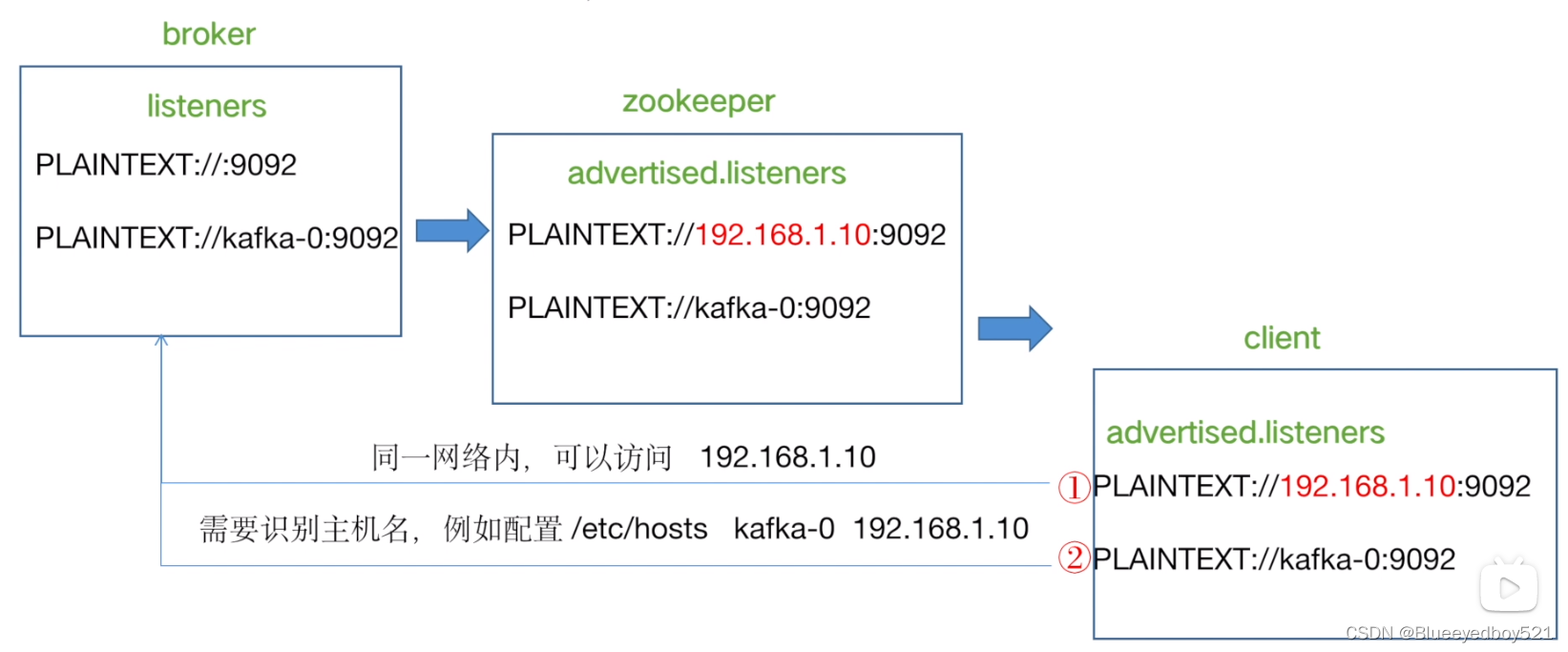

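### Configuration 1 (internal network)

If `advertised.listeners` is not configured, the value of `listeners` is used.

**There are two ways to register the address:**

Method 1: leave the host empty in the broker configuration (`PLAINTEXT://:9092`). When registering in ZooKeeper, the broker fills in the local machine's address automatically, e.g. `PLAINTEXT://192.168.1.10:9092`. Clients must therefore be able to reach that address on the LAN.

Method 2: configure a hostname in the broker (`PLAINTEXT://kafka-0:9092`). The hostname is then registered in ZooKeeper as-is, so clients must be able to resolve it, for example by adding it to their hosts file.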

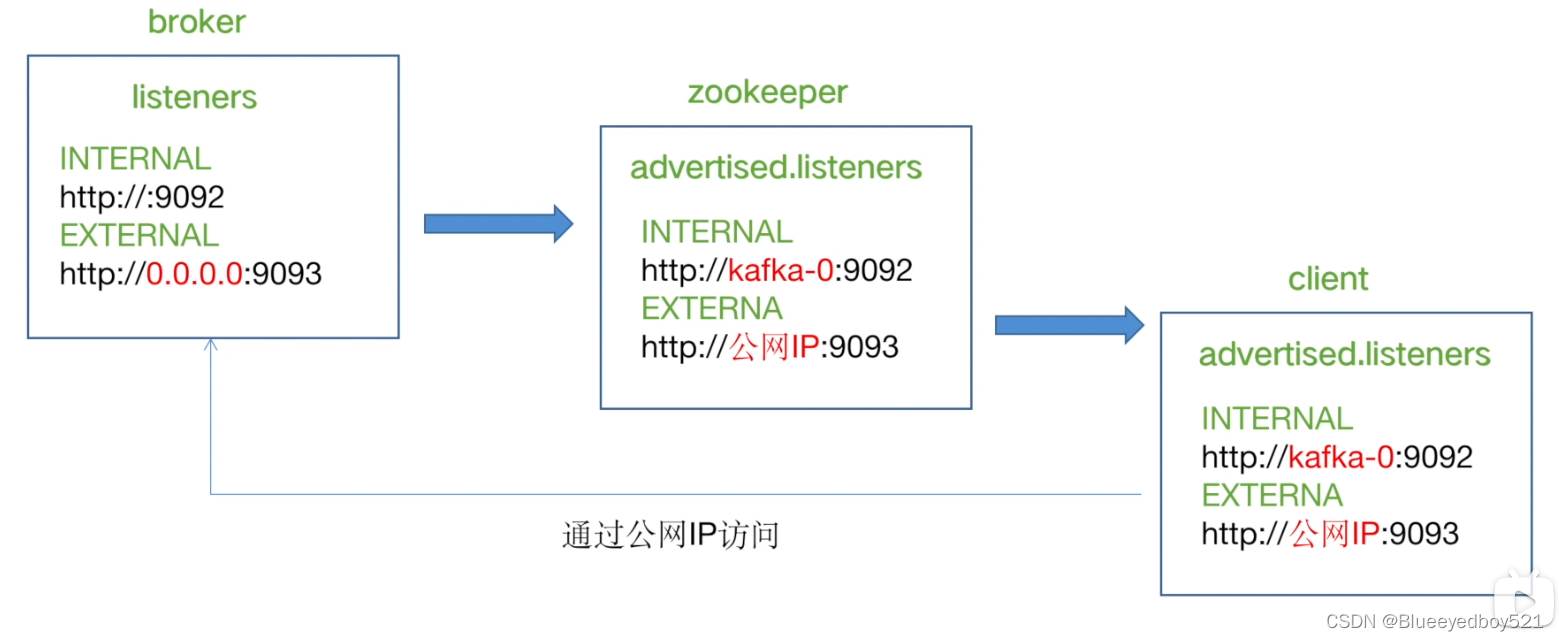
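The fallback rule (no `advertised.listeners` means `listeners` is used) can be checked against a real server.properties file. The sketch below uses two hypothetical shell helpers, `get_prop` and `effective_advertised`; neither is part of Kafka. It assumes simple `key=value` lines and ignores commented-out entries.

```bash
# Hypothetical helpers (not Kafka tools) for reading a server.properties file.
# get_prop FILE KEY prints the value of the last uncommented "KEY=value" line.
get_prop() {
  file=$1
  key_re=$(printf '%s' "$2" | sed 's/\./\\./g')   # escape dots for the regex
  sed -n "s/^[[:space:]]*$key_re[[:space:]]*=[[:space:]]*//p" "$file" | tail -n 1
}

# effective_advertised FILE prints what the broker registers for clients:
# advertised.listeners if it is set, otherwise listeners.
effective_advertised() {
  adv=$(get_prop "$1" advertised.listeners)
  if [ -n "$adv" ]; then
    printf '%s\n' "$adv"
  else
    get_prop "$1" listeners
  fi
}
```

Usage: `effective_advertised /opt/module/kafka/config/server.properties`. The path is only an example.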

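### Configuration 2 (internal and external networks)

Here the broker needs two listeners: one for the internal network on port 9092 and one for the public network on port 9093. The external entry in `advertised.listeners` must be the public IP that clients connect to.

Example configuration:

```properties
listeners=INTERNAL://:9092,EXTERNAL://0.0.0.0:9093
# <public-IP> is a placeholder; 0.0.0.0 is not allowed in advertised.listeners
advertised.listeners=INTERNAL://:9092,EXTERNAL://<public-IP>:9093
listener.security.protocol.map=INTERNAL:PLAINTEXT,EXTERNAL:PLAINTEXT
inter.broker.listener.name=INTERNAL
```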
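Kafka enforces three rules on a multi-listener setup like this one:

- `advertised.listeners` may not use the 0.0.0.0 meta-address.
- Every custom listener name must appear in `listener.security.protocol.map`.
- `inter.broker.listener.name` must be one of the advertised listeners.

`check_listeners` below is a hypothetical pre-flight checker for those rules, written as a POSIX shell sketch. It is not a Kafka tool, and it only covers these three rules. An empty map argument stands for Kafka's default map of the four standard protocols.

```bash
# Hypothetical checker (not a Kafka tool) for a multi-listener setup.
# Args: listeners, advertised.listeners, listener.security.protocol.map
#       (empty = Kafka's default map), inter.broker.listener.name.
# Prints each problem found; returns 1 if there is any.
check_listeners() {
  listeners=$1 advertised=$2 inter=$4
  protomap=${3:-PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL}
  rc=0
  # Rule 1: 0.0.0.0 is a bind address, not something clients can connect to.
  case ",$advertised," in
    *://0.0.0.0:*) echo "error: advertised.listeners uses 0.0.0.0"; rc=1 ;;
  esac
  # Rule 2: every listener name needs a security protocol in the map.
  for entry in $(printf '%s' "$listeners" | tr ',' ' '); do
    name=${entry%%://*}
    case ",$protomap," in
      *",$name:"*) ;;
      *) echo "error: $name missing from listener.security.protocol.map"; rc=1 ;;
    esac
  done
  # Rule 3: brokers talk to each other over one of the advertised listeners.
  case ",$advertised" in
    *",$inter://"*) ;;
    *) echo "error: inter.broker.listener.name=$inter is not advertised"; rc=1 ;;
  esac
  return $rc
}
```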

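## Topic operations

Run these from the Kafka installation directory:

```bash
# Connection option for a single node (combine with an action such as --list)
bin/kafka-topics.sh --bootstrap-server hadoop102:9092

# Several nodes: list more than one in production so the tool still works if one broker is down
bin/kafka-topics.sh --bootstrap-server hadoop102:9092,hadoop103:9092

# Create a topic
bin/kafka-topics.sh --bootstrap-server hadoop102:9092 --create --topic first --partitions 1 --replication-factor 3

# List topics
bin/kafka-topics.sh --bootstrap-server hadoop102:9092 --list

# Describe a topic in detail
bin/kafka-topics.sh --bootstrap-server hadoop102:9092 --topic first --describe

# Increase the number of partitions (it can only grow, never shrink)
bin/kafka-topics.sh --bootstrap-server hadoop102:9092 --topic first --alter --partitions 3
```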

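- `--bootstrap-server`: host name and port of the Kafka broker(s) to connect to
- `--create`: create a topic
- `--topic`: name of the topic to operate on
- `--list`: list all topics
- `--delete`: delete a topic
- `--alter`: modify a topic
- `--describe`: describe a topic
- `--partitions`: set the number of partitions
- `--replication-factor`: set the number of replicas per partition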
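Two of these options are commonly rejected by the broker. A replication factor larger than the number of available brokers is refused. `--alter --partitions` is refused unless the new count is strictly larger than the current one. A hypothetical pair of shell checks (not part of kafka-topics.sh) can catch both before the command is sent:

```bash
# Hypothetical pre-flight checks before calling kafka-topics.sh.
# check_replication RF BROKERS: the replication factor cannot exceed the broker count.
check_replication() {
  if [ "$1" -gt "$2" ]; then
    echo "replication factor $1 > $2 broker(s)"
    return 1
  fi
}

# check_partitions CURRENT REQUESTED: --alter --partitions may only increase the count.
check_partitions() {
  if [ "$2" -le "$1" ]; then
    echo "partitions can only grow: $1 -> $2 rejected"
    return 1
  fi
}
```

For example, `--replication-factor 3` passes `check_replication 3 3` on a three-broker cluster but fails `check_replication 3 1` on a single broker.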

## Producing messages

```bash
# Connects to the broker; once the > prompt appears, type a message and press Enter to send it
bin/kafka-console-producer.sh --bootstrap-server hadoop102:9092 --topic first
```

## Consuming messages

```bash
# Consume new messages in real time
bin/kafka-console-consumer.sh --bootstrap-server hadoop102:9092 --topic first

# Consume from the beginning, including historical data
bin/kafka-console-consumer.sh --bootstrap-server hadoop102:9092 --topic first --from-beginning
```

## Docker deployment

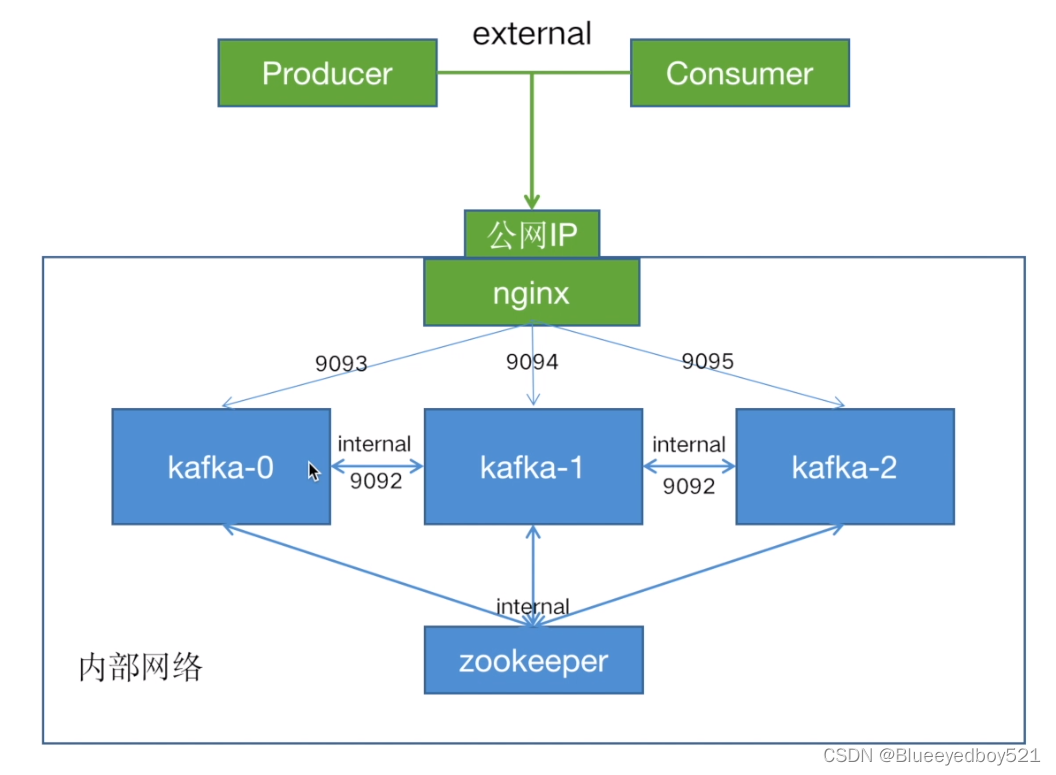

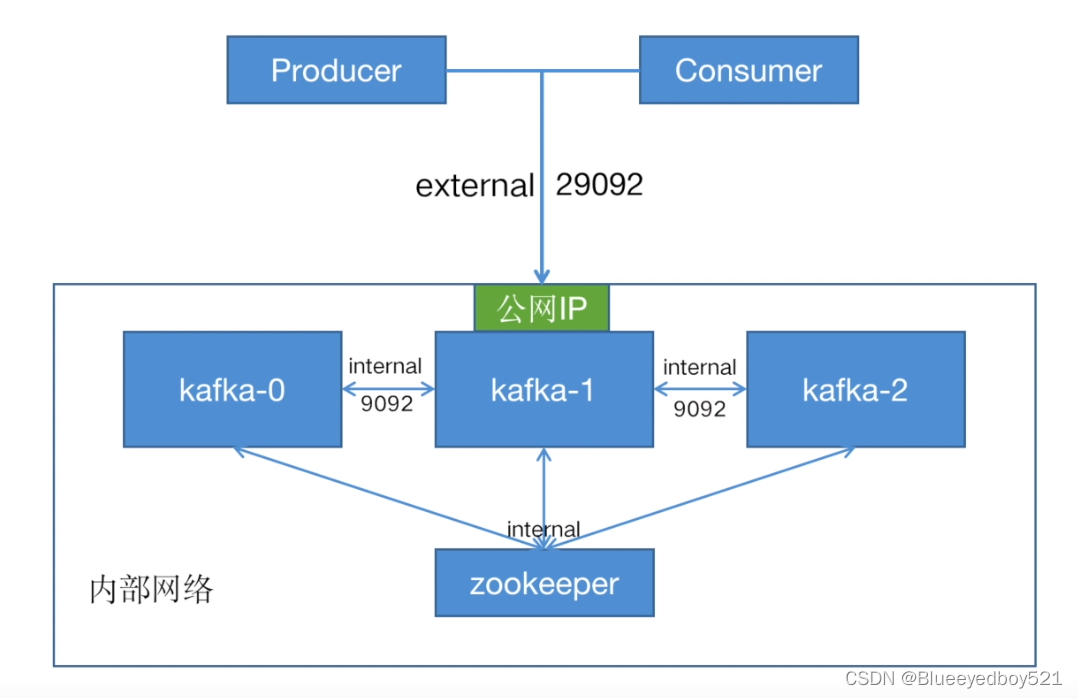

### Cluster deployment architecture

The internal/external network configuration is as follows:

### Create directories

```bash
mkdir /usr/local/zookeeper
mkdir /usr/local/zookeeper/data
mkdir /usr/local/kafka0
mkdir /usr/local/kafka0/data
mkdir /usr/local/kafka1
mkdir /usr/local/kafka1/data
mkdir /usr/local/kafka2
mkdir /usr/local/kafka2/data

# Grant permissions on the data directories, otherwise the containers cannot start
chmod -R 775 /usr/local/zookeeper/data
chmod -R 775 /usr/local/kafka0/data
chmod -R 775 /usr/local/kafka1/data
chmod -R 775 /usr/local/kafka2/data
```
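The same directories and permissions can be created in a loop. `make_dirs` is a hypothetical helper name. Its base directory defaults to `/usr/local` as above, and it is a parameter only so the function can be tried in a scratch directory first.

```bash
# Create the data directories for ZooKeeper and three brokers and make them
# group-writable, equivalent to the mkdir/chmod commands above.
make_dirs() {
  base=${1:-/usr/local}                      # root directory, /usr/local by default
  for d in zookeeper kafka0 kafka1 kafka2; do
    mkdir -p "$base/$d/data" || return 1     # -p also creates $base/$d
    chmod -R 775 "$base/$d/data" || return 1
  done
}
```

Run it as root with no argument to create the real directories.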

### Prepare docker-compose.yml

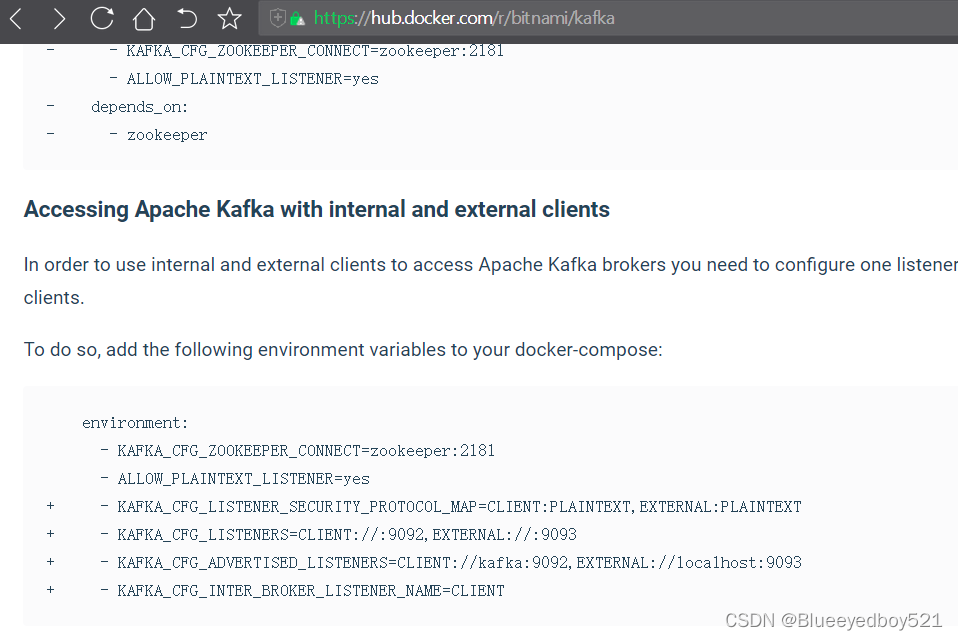

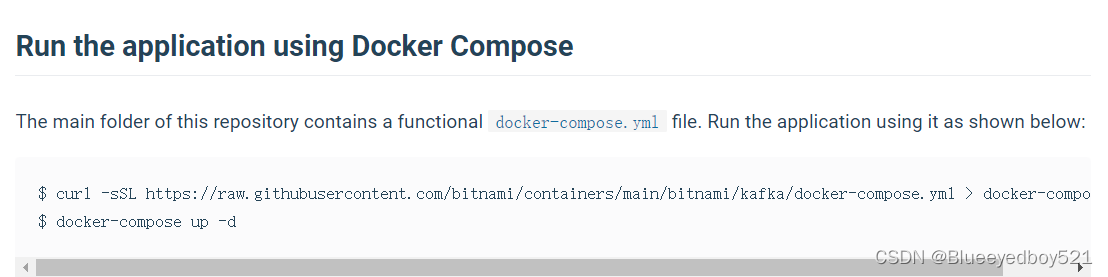

Search for the Kafka image: https://hub.docker.com/r/bitnami/kafka

Scroll down to the section shown in the screenshot.

Click docker-compose.yml to open the deployment file: https://github.com/bitnami/containers/blob/main/bitnami/kafka/docker-compose.yml

Cluster deployment: https://github.com/bitnami/containers/blob/main/bitnami/kafka/docker-compose-cluster.yml

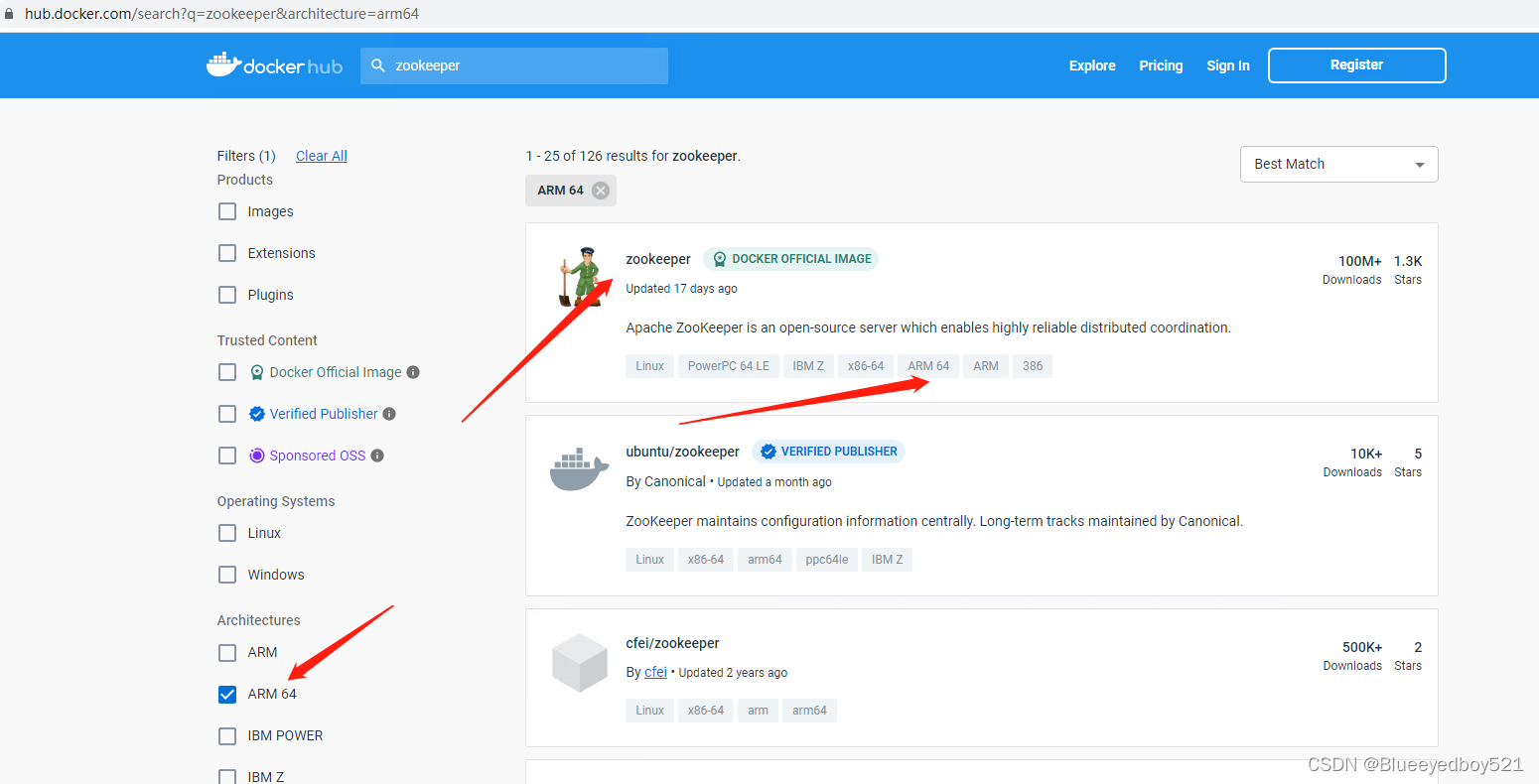

### ARM (aarch64) configuration

```yaml
version: "2"

services:
  zookeeper:
    container_name: zookeeper
    hostname: zookeeper
    image: zookeeper
    ports:
      - "2181:2181"
    volumes:
      - /usr/local/zookeeper/data:/data
      - /usr/local/zookeeper/config:/config
      - /usr/local/zookeeper/datalog:/datalog
      - /usr/local/zookeeper/logs:/logs
      #- /etc/timezone:/etc/timezone # time zone
      - /etc/localtime:/etc/localtime
    environment:
      - ALLOW_ANONYMOUS_LOGIN=yes
      - TZ=Asia/Shanghai
    networks:
      - gxu-net

  kafka:
    image: wurstmeister/kafka
    container_name: kafka
    hostname: kafka
    ports:
      - "9092:9092"
    environment:
      # wurstmeister/kafka reads KAFKA_* variables; the KAFKA_CFG_* form is
      # the bitnami convention and is not understood by this image
      - KAFKA_BROKER_ID=0
      - KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181
      #- KAFKA_LISTENER_SECURITY_PROTOCOL_MAP=INTERNAL:PLAINTEXT,EXTERNAL:PLAINTEXT
      #- KAFKA_LISTENERS=INTERNAL://:9092,EXTERNAL://0.0.0.0:19092
      #- KAFKA_ADVERTISED_LISTENERS=INTERNAL://kafka:9092,EXTERNAL://192.168.0.44:19092
      #- KAFKA_INTER_BROKER_LISTENER_NAME=INTERNAL
      - KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092
      - KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://10.80.1.215:9092
      - KAFKA_CREATE_TOPICS=hello_world   # no quotes: in list form they would become part of the value
      - KAFKA_HEAP_OPTS=-Xmx1G -Xms256M
      - TZ=Asia/Shanghai
    volumes:
      #- kafka_0_data:/bitnami/kafka # official (bitnami) form
      - /usr/local/kafka:/kafka
      - /usr/local/kafka/logs:/opt/kafka/logs
      - /usr/local/kafka/docker.sock:/var/run/docker.sock
      #- /etc/timezone:/etc/timezone # time zone
      - /etc/localtime:/etc/localtime
    depends_on:
      - zookeeper
    networks:
      - gxu-net

volumes:
  zookeeper_data:
    driver: local
  kafka_data:
    driver: local

networks:
  gxu-net:
    external: true
```

### Kafka 2.8 single node

```yaml
version: '3'

services:
  kafka:
    image: wurstmeister/kafka            # original image `wurstmeister/kafka`
    container_name: kafka                # container name 'kafka'
    volumes:                             # bind mounts: host directory -> container directory
      - /usr/local/kafka/logs:/opt/kafka/logs
      - /usr/local/kafka/docker.sock:/var/run/docker.sock
      - /etc/timezone:/etc/timezone      # time zone
      - /etc/localtime:/etc/localtime
    environment:                         # environment variables, same as -e in docker run
      KAFKA_BROKER_ID: 0                 # every broker in a Kafka cluster has its own BROKER_ID
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.0.44:19092  # TODO: address and port registered in ZooKeeper for clients
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:19092                  # port the broker listens on
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181  # this file has no zookeeper service: a "zookeeper" container must run on mynet
      KAFKA_CREATE_TOPICS: "hello_world"
      KAFKA_HEAP_OPTS: -Xmx1G -Xms256M
      TZ: Asia/Shanghai
    ports:                               # port mapping
      - "19092:19092"
    networks:
      - mynet

  kafka-manager:
    image: sheepkiller/kafka-manager     # original image `sheepkiller/kafka-manager`
    container_name: kafka-manager        # container name 'kafka-manager'
    environment:                         # environment variables, same as -e in docker run
      ZK_HOSTS: zookeeper:2181
      APPLICATION_SECRET: xxxxx
      KAFKA_MANAGER_AUTH_ENABLED: "true" # enable kafka-manager authentication
      KAFKA_MANAGER_USERNAME: admin      # login user
      KAFKA_MANAGER_PASSWORD: 123456     # login password
    ports:                               # port mapping
      - "19000:9000"
    depends_on:                          # start kafka before kafka-manager
      - kafka
    networks:
      - mynet

networks:
  mynet:
    external: true
```

### Kafka 3.2 single node

```yaml
version: "2"

services:
  #zookeeper:
  #  container_name: zookeeper
  #  hostname: zookeeper
  #  image: docker.io/bitnami/zookeeper:3.8
  #  ports:
  #    - "2181:2181"
  #  volumes:
  #    - "zookeeper_data:/bitnami"
  #  environment:
  #    - ALLOW_ANONYMOUS_LOGIN=yes
  #  networks:
  #    - mynet

  kafka:
    image: docker.io/bitnami/kafka:3.2
    container_name: kafka
    hostname: kafka
    ports:
      - "19092:19092"
    environment:
      - KAFKA_CFG_BROKER_ID=0
      # the zookeeper service above is commented out, so a container named
      # zookeeper must already be running on mynet
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper:2181
      - ALLOW_PLAINTEXT_LISTENER=yes
      #- KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=INTERNAL:PLAINTEXT,EXTERNAL:PLAINTEXT
      #- KAFKA_CFG_LISTENERS=INTERNAL://:9092,EXTERNAL://0.0.0.0:19092
      #- KAFKA_CFG_ADVERTISED_LISTENERS=INTERNAL://kafka:9092,EXTERNAL://192.168.0.44:19092
      #- KAFKA_CFG_INTER_BROKER_LISTENER_NAME=INTERNAL
      - KAFKA_CFG_LISTENERS=PLAINTEXT://0.0.0.0:19092
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://192.168.0.44:19092
      # KAFKA_CFG_* variables become server.properties keys and create.topics is not
      # a Kafka setting, so create topics with kafka-topics.sh instead
      #- KAFKA_CFG_CREATE_TOPICS=hello_world
      # the heap size is set with KAFKA_HEAP_OPTS, not a KAFKA_CFG_* variable
      - KAFKA_HEAP_OPTS=-Xmx1G -Xms256M
      - TZ=Asia/Shanghai
    volumes:
      #- kafka_0_data:/bitnami/kafka # official form
      - /usr/local/kafka/data:/bitnami/kafka/data
      - /usr/local/kafka/logs:/opt/kafka/logs
      - /usr/local/kafka/docker.sock:/var/run/docker.sock
      - /etc/timezone:/etc/timezone # time zone
      - /etc/localtime:/etc/localtime
    #depends_on:
    #  - zookeeper
    networks:
      - mynet

  kafka-manager:
    image: sheepkiller/kafka-manager     # original image `sheepkiller/kafka-manager`
    container_name: kafka-manager        # container name 'kafka-manager'
    environment:                         # environment variables, same as -e in docker run
      ZK_HOSTS: zookeeper:2181
      APPLICATION_SECRET: xxxxx
      KAFKA_MANAGER_AUTH_ENABLED: "true" # enable kafka-manager authentication
      KAFKA_MANAGER_USERNAME: admin      # login user
      KAFKA_MANAGER_PASSWORD: 123456     # login password
    ports:                               # port mapping
      - "19000:9000"
    depends_on:                          # start kafka before kafka-manager
      - kafka
    networks:                            # must join mynet to resolve zookeeper
      - mynet

volumes:
  zookeeper_data:
    driver: local
  kafka_data:
    driver: local

networks:
  mynet:
    external: true
```

### Kafka 3.2 cluster

```yaml
version: "2"

services:
  zookeeper:
    container_name: zookeeper
    hostname: zookeeper
    image: docker.io/bitnami/zookeeper:3.8
    # the brokers reach ZooKeeper over mynet; publishing 2181 is only needed for access from outside Docker
    ports:
      - "2181:2181"
    environment:
      - ALLOW_ANONYMOUS_LOGIN=yes
      - TZ=Asia/Shanghai
    volumes:
      #- zookeeper_data:/bitnami/zookeeper # official form
      - /usr/local/zookeeper/data:/bitnami/zookeeper/data
      - /usr/local/zookeeper/config:/config
      - /usr/local/zookeeper/datalog:/datalog
      - /usr/local/zookeeper/logs:/logs
      - /etc/timezone:/etc/timezone # time zone
      - /etc/localtime:/etc/localtime
    networks:
      - mynet

  kafka-0:
    container_name: kafka-0
    hostname: kafka-0
    image: docker.io/bitnami/kafka:3.2
    ports:
      - "19093:9093"
    environment:
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper:2181
      - KAFKA_CFG_BROKER_ID=0
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=INTERNAL:PLAINTEXT,EXTERNAL:PLAINTEXT
      - KAFKA_CFG_LISTENERS=INTERNAL://:9092,EXTERNAL://0.0.0.0:9093
      # advertise the host-published port (19093): that is what external clients reach
      - KAFKA_CFG_ADVERTISED_LISTENERS=INTERNAL://kafka-0:9092,EXTERNAL://192.168.0.44:19093
      - KAFKA_CFG_INTER_BROKER_LISTENER_NAME=INTERNAL
      - TZ=Asia/Shanghai
    volumes:
      #- kafka_0_data:/bitnami/kafka # official form
      - /usr/local/kafka0/data:/bitnami/kafka/data
      - /usr/local/kafka0/logs:/opt/kafka/logs
      - /usr/local/kafka0/docker.sock:/var/run/docker.sock
      - /etc/timezone:/etc/timezone # time zone
      - /etc/localtime:/etc/localtime
    depends_on:
      - zookeeper
    networks:
      - mynet

  kafka-1:
    container_name: kafka-1
    hostname: kafka-1
    image: docker.io/bitnami/kafka:3.2
    ports:
      - "19094:9094"
    environment:
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper:2181
      - KAFKA_CFG_BROKER_ID=1
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=INTERNAL:PLAINTEXT,EXTERNAL:PLAINTEXT
      - KAFKA_CFG_LISTENERS=INTERNAL://:9092,EXTERNAL://0.0.0.0:9094
      - KAFKA_CFG_ADVERTISED_LISTENERS=INTERNAL://kafka-1:9092,EXTERNAL://192.168.0.44:19094
      - KAFKA_CFG_INTER_BROKER_LISTENER_NAME=INTERNAL
      - TZ=Asia/Shanghai
    volumes:
      #- kafka_1_data:/bitnami/kafka # official form
      - /usr/local/kafka1/data:/bitnami/kafka/data
      - /usr/local/kafka1/logs:/opt/kafka/logs
      - /usr/local/kafka1/docker.sock:/var/run/docker.sock
      - /etc/timezone:/etc/timezone # time zone
      - /etc/localtime:/etc/localtime
    depends_on:
      - zookeeper
    networks:
      - mynet

  kafka-2:
    container_name: kafka-2
    hostname: kafka-2
    image: docker.io/bitnami/kafka:3.2
    ports:
      - "19095:9095"
    environment:
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper:2181
      - KAFKA_CFG_BROKER_ID=2
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=INTERNAL:PLAINTEXT,EXTERNAL:PLAINTEXT
      - KAFKA_CFG_LISTENERS=INTERNAL://:9092,EXTERNAL://0.0.0.0:9095
      - KAFKA_CFG_ADVERTISED_LISTENERS=INTERNAL://kafka-2:9092,EXTERNAL://192.168.0.44:19095
      - KAFKA_CFG_INTER_BROKER_LISTENER_NAME=INTERNAL
      - TZ=Asia/Shanghai
    volumes:
      #- kafka_2_data:/bitnami/kafka # official form
      - /usr/local/kafka2/data:/bitnami/kafka/data
      - /usr/local/kafka2/logs:/opt/kafka/logs
      - /usr/local/kafka2/docker.sock:/var/run/docker.sock
      - /etc/timezone:/etc/timezone # time zone
      - /etc/localtime:/etc/localtime
    depends_on:
      - zookeeper
    networks:
      - mynet

volumes:
  zookeeper_data:
    driver: local
  kafka_0_data:
    driver: local
  kafka_1_data:
    driver: local
  kafka_2_data:
    driver: local

networks:
  mynet:
    external: true
```
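The three broker services differ only in the broker id and the external port, so they can be generated instead of copied by hand. The sketch below assumes broker `i` listens internally as `kafka-i:9092` and externally on container port `9093+i`, published on host port `19093+i`. It advertises the host-published port (the left side of `ports:`), because that is the port external clients reach from outside Docker. `broker_service` is a hypothetical helper, not part of Docker or Kafka.

```bash
# Hypothetical generator for one broker's service entry in the cluster compose file.
# Args: broker index, external host IP, first host port (default 19093).
broker_service() {
  i=$1 host_ip=$2 host_port_base=${3:-19093}
  cport=$((9093 + i))            # container-side EXTERNAL port
  hport=$((host_port_base + i))  # host-side port that external clients reach
  cat <<EOF
  kafka-$i:
    container_name: kafka-$i
    hostname: kafka-$i
    image: docker.io/bitnami/kafka:3.2
    ports:
      - "$hport:$cport"
    environment:
      - KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper:2181
      - KAFKA_CFG_BROKER_ID=$i
      - ALLOW_PLAINTEXT_LISTENER=yes
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=INTERNAL:PLAINTEXT,EXTERNAL:PLAINTEXT
      - KAFKA_CFG_LISTENERS=INTERNAL://:9092,EXTERNAL://0.0.0.0:$cport
      - KAFKA_CFG_ADVERTISED_LISTENERS=INTERNAL://kafka-$i:9092,EXTERNAL://$host_ip:$hport
      - KAFKA_CFG_INTER_BROKER_LISTENER_NAME=INTERNAL
    volumes:
      - /usr/local/kafka$i/data:/bitnami/kafka/data
    depends_on:
      - zookeeper
    networks:
      - mynet
EOF
}
```

For example, `for i in 0 1 2; do broker_service "$i" 192.168.0.44; done` prints the three service entries to paste under `services:`.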

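### Deploy

```bash
# mynet is declared external in the compose files, so create it once beforehand
# (the ARM example uses gxu-net instead)
docker network create mynet
docker-compose up -d
```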

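### Common errors

Fixing the ZooKeeper error `java.io.IOException: No snapshot found, but there are log entries. Something is broken!`

The key part is the message "No snapshot found, but there are log entries. Something is broken!". ZooKeeper found transaction log entries but no snapshot, which means its data files are corrupted.

Delete the contents of /usr/local/zookeeper/datalog. Note that this discards the transaction log ZooKeeper had written.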

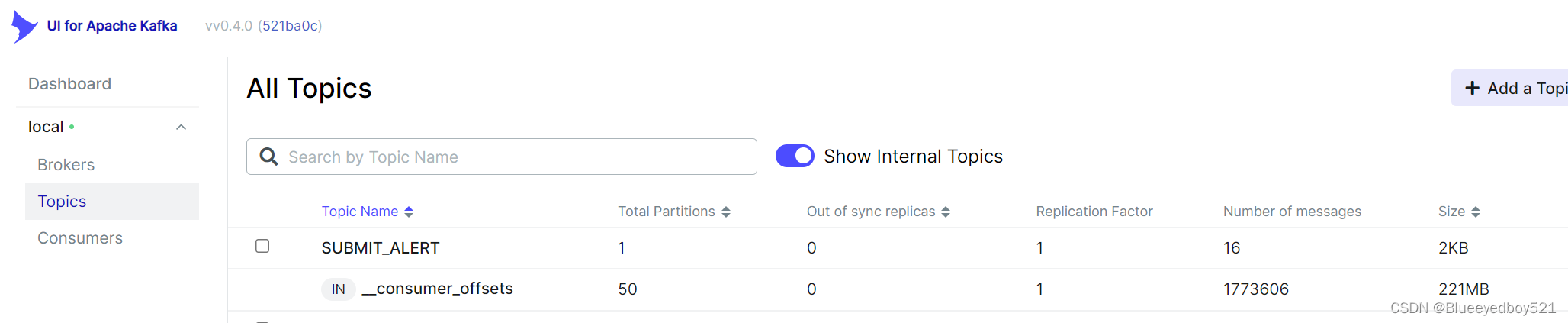
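A more cautious variant of that fix moves the datalog contents into a timestamped sibling directory instead of deleting them, so they can still be inspected later. `reset_zk_datalog` is a hypothetical helper. It assumes the zookeeper container has been stopped first, for example with `docker-compose stop zookeeper`.

```bash
# Hypothetical helper: move the contents of the ZooKeeper datalog directory
# into a timestamped sibling directory and print that directory's path.
reset_zk_datalog() {
  dir=${1:-/usr/local/zookeeper/datalog}
  dir=${dir%/}                               # drop a trailing slash
  backup="$dir.bak.$(date +%Y%m%d%H%M%S)"
  mkdir -p "$backup" || return 1
  for f in "$dir"/* "$dir"/.[!.]*; do
    [ -e "$f" ] || continue                  # skip globs that matched nothing
    mv "$f" "$backup"/ || return 1
  done
  printf '%s\n' "$backup"                    # report where the files went
}
```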

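## Deploying the kafka-ui visualization tool

Repository: https://github.com/provectus/kafka-ui

```bash
# KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS must point at a broker listener reachable on mynet,
# e.g. kafka:9092 for a single broker or kafka-0:9092 for the 3.2 cluster
docker run -p 8080:8080 \
  --name kafka-ui \
  --net=mynet \
  -v /etc/localtime:/etc/localtime \
  -e KAFKA_CLUSTERS_0_NAME=local \
  -e KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS=kafka:9092 \
  -d provectuslabs/kafka-ui:latest
```

Then open http://127.0.0.1:8080 in a browser.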
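For the 3.2 cluster, kafka-ui (like any client) can be given every broker's internal listener, so it still connects if one broker is down. `bootstrap_list` is a hypothetical helper that assumes the `kafka-0 … kafka-(N-1)` naming used in the cluster compose file.

```bash
# Hypothetical helper: build a comma-separated bootstrap list for brokers
# named kafka-0 .. kafka-(N-1) on the same internal port (9092 by default).
bootstrap_list() {
  n=$1 port=${2:-9092} i=0 out=
  while [ "$i" -lt "$n" ]; do
    out="${out:+$out,}kafka-$i:$port"   # add a comma only between entries
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}
```

Usage: pass `-e KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS="$(bootstrap_list 3)"` to `docker run`, which expands to `kafka-0:9092,kafka-1:9092,kafka-2:9092`.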

## Testing

### Create a topic

```bash
# 3.2 (bitnami image)
docker exec -it kafka-0 /opt/bitnami/kafka/bin/kafka-topics.sh \
  --create --bootstrap-server kafka-0:9092 \
  --topic my-topic \
  --partitions 1 --replication-factor 3

# Older version (wurstmeister image, single broker, so the replication factor must be 1)
docker exec -it kafka /opt/kafka/bin/kafka-topics.sh \
  --create --bootstrap-server kafka:9092 \
  --topic gxuMessage \
  --partitions 1 --replication-factor 1
```

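### List topics

```bash
# List topics
bin/kafka-topics.sh --bootstrap-server hadoop102:9092 --list

# 3.2
docker exec -it kafka-0 /opt/bitnami/kafka/bin/kafka-topics.sh \
  --bootstrap-server kafka-0:9092 \
  --list

# Older version
docker exec -it kafka /opt/kafka/bin/kafka-topics.sh \
  --bootstrap-server kafka:9092 \
  --list
```

Note that the Kafka installation path differs between the new image (bitnami, `/opt/bitnami/kafka`) and the old one (wurstmeister, `/opt/kafka`).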
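To avoid mixing up the two paths, the script directory can be derived from the container's image name. `kafka_bin` is a hypothetical helper that only knows the two images used in this article. `docker inspect --format '{{.Config.Image}}'` is the standard way to read a container's image.

```bash
# Hypothetical helper: map an image name to the directory holding the Kafka
# scripts, following the two paths used in the commands above.
kafka_bin() {
  case $1 in
    *bitnami/kafka*)      echo /opt/bitnami/kafka/bin ;;
    *wurstmeister/kafka*) echo /opt/kafka/bin ;;
    *) echo "unknown image: $1" >&2; return 1 ;;
  esac
}

# Usage (needs Docker):
#   img=$(docker inspect --format '{{.Config.Image}}' kafka-0)
#   docker exec -it kafka-0 "$(kafka_bin "$img")/kafka-topics.sh" --bootstrap-server kafka-0:9092 --list
```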

### Describe a topic

```bash
# Describe a topic in detail
bin/kafka-topics.sh --bootstrap-server hadoop102:9092 --topic first --describe

# 3.2
docker exec -it kafka-0 /opt/bitnami/kafka/bin/kafka-topics.sh \
  --bootstrap-server kafka-0:9092 \
  --topic my-topic --describe

# Older version
docker exec -it kafka /opt/kafka/bin/kafka-topics.sh \
  --bootstrap-server kafka:9092 \
  --topic my-topic --describe
```

### Create a producer

```bash
docker exec -it kafka-0 /opt/bitnami/kafka/bin/kafka-console-producer.sh \
  --bootstrap-server kafka-0:9092 \
  --topic my-topic
```

Send `hello`:

```text
root@sony-HP-Notebook:~# docker exec -it kafka-0 /opt/bitnami/kafka/bin/kafka-console-producer.sh \
> --bootstrap-server kafka-0:9092 \
> --topic my-topic
>hello
>
```

### Create a consumer

```bash
docker exec -it kafka-0 /opt/bitnami/kafka/bin/kafka-console-consumer.sh \
  --bootstrap-server kafka-0:9092 \
  --topic my-topic
```

The message is received:

```text
root@sony-HP-Notebook:~# docker exec -it kafka-0 /opt/bitnami/kafka/bin/kafka-console-consumer.sh \
> --bootstrap-server kafka-0:9092 \
> --topic my-topic
hello
```

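## Deleting topics

### 1. Delete a topic

Topic deletion is controlled by `delete.topic.enable` in server.properties. It has defaulted to `true` since Kafka 1.0, so only older versions need it set explicitly:

```properties
delete.topic.enable=true
```

Command to delete a topic (Kafka 3.0 removed the `--zookeeper` option from kafka-topics.sh, so connect through a broker):

```bash
bin/kafka-topics.sh --bootstrap-server localhost:9092 --delete --topic test
```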

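### 2. Delete topic data

To keep the topic and delete only its existing data (the log), temporarily shorten its retention time:

```bash
bin/kafka-configs.sh --bootstrap-server localhost:9092 --entity-type topics --entity-name test --alter --add-config retention.ms=3000
```

This sets the retention time to three seconds, but the data is not deleted exactly three seconds later. Kafka checks retention periodically, and when a check reaches this topic it deletes the log segments older than three seconds. The check interval is configured in server.properties (see below).

If you keep using the topic after the data is deleted, its retention time should not stay at three seconds. Remove the override again so the topic falls back to the global setting in server.properties:

```bash
bin/kafka-configs.sh --bootstrap-server localhost:9092 --entity-type topics --entity-name test --alter --delete-config retention.ms
```

Retention settings in server.properties:

```properties
# retention time, in hours
log.retention.hours=168
# interval between retention checks, in milliseconds
log.retention.check.interval.ms=300000
```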

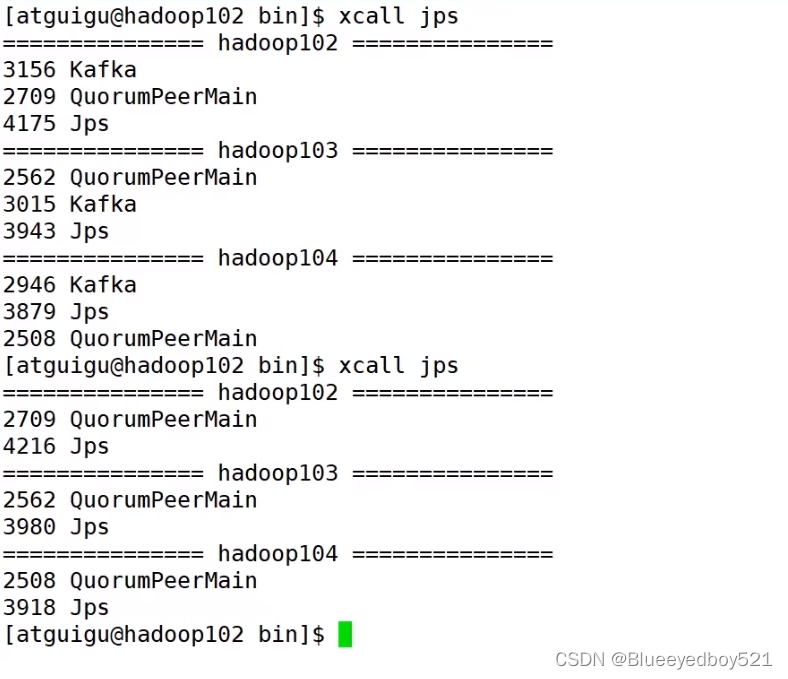
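As a worked example of the numbers above: 168 hours is 604,800,000 ms (7 days), and 300,000 ms is 5 minutes. So after setting `retention.ms=3000`, the data may survive for up to roughly 3,000 + 300,000 ms, about five minutes. This is only a rough bound, because deletion happens per log segment. The two hypothetical helpers below just do this arithmetic.

```bash
# Hypothetical helper: summarize the retention settings in friendlier units.
# Args: log.retention.hours, log.retention.check.interval.ms
retention_summary() {
  echo "retention=$(( $1 * 3600 * 1000 ))ms ($(( $1 / 24 )) days), check every $(( $2 / 60000 )) min"
}

# Rough upper bound (ms) on how long data can survive after shortening retention:
# it becomes eligible after retention.ms and is removed at the next check.
# Args: retention.ms, log.retention.check.interval.ms
max_wait_ms() {
  echo $(( $1 + $2 ))
}
```

`retention_summary 168 300000` prints `retention=604800000ms (7 days), check every 5 min`.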

## Batch startup script

Start Kafka on several machines at once:

```bash
vim kafka.sh
```

```bash
#!/bin/bash
# Start or stop Kafka on every host over ssh
case $1 in
"start")
    for i in hadoop101 hadoop102 hadoop103
    do
        echo "--- starting Kafka on $i ---"
        ssh "$i" "/opt/module/kafka/bin/kafka-server-start.sh -daemon /opt/module/kafka/config/server.properties"
    done
;;
"stop")
    for i in hadoop101 hadoop102 hadoop103
    do
        echo "--- stopping Kafka on $i ---"
        ssh "$i" "/opt/module/kafka/bin/kafka-server-stop.sh"
    done
;;
*)
    echo "usage: $0 {start|stop}"
;;
esac
```

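Run it:

```bash
# make the script executable once, and put it on the PATH (e.g. ~/bin) to call it as kafka.sh
chmod +x kafka.sh

# start
kafka.sh start

# stop
kafka.sh stop
```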

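### Check startup status

Check the startup status on each machine. `xcall` is a custom helper script that runs a command on every host; it is not part of Kafka.

```bash
xcall jps
```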
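`jps` lists running JVMs as `PID MainClass` lines, and a Kafka broker shows up as `Kafka`. The hypothetical helper `kafka_running` reads that output and reports whether a broker is up. It is a sketch that checks only the main-class column.

```bash
# Hypothetical check: read `jps` output ("PID MainClass" per line) on stdin
# and report whether a Kafka broker process is present.
kafka_running() {
  if awk '$2 == "Kafka" { found = 1 } END { exit !found }'; then
    echo "Kafka running"
  else
    echo "Kafka NOT running"
    return 1
  fi
}

# Usage on one host:   jps | kafka_running
# Usage over ssh:      for i in hadoop101 hadoop102 hadoop103; do printf '%s: ' "$i"; ssh "$i" jps | kafka_running; done
```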
