Streaming media refers to the capability of playing media data while the data is still being transferred from the server. The user doesn't need to wait until the full media content has been downloaded to start playing. In media streaming, the content is split into small chunks, which serve as the transport unit. Once the user's player has received enough chunks, it starts playing.
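To make the "start playing once enough chunks have arrived" idea concrete, here is a minimal sketch in plain Java. The class name `PrebufferQueue`, its methods, and the byte threshold are all hypothetical and exist only for illustration; a real player would also need to handle threading and underruns.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical prebuffer: holds incoming chunks until a start threshold is reached. */
class PrebufferQueue {
    private final Deque<byte[]> chunks = new ArrayDeque<>();
    private final int startThresholdBytes;
    private int bufferedBytes = 0;
    private boolean started = false;

    PrebufferQueue(int startThresholdBytes) {
        this.startThresholdBytes = startThresholdBytes;
    }

    /** Called by the transfer side whenever a chunk arrives. */
    void onChunkReceived(byte[] chunk) {
        chunks.addLast(chunk);
        bufferedBytes += chunk.length;
        // Playback starts the first time enough data has accumulated.
        if (!started && bufferedBytes >= startThresholdBytes) {
            started = true;
        }
    }

    boolean playbackStarted() {
        return started;
    }

    /** Returns the oldest chunk for the renderer, or null if playback has not started or the queue is empty. */
    byte[] nextChunkForRenderer() {
        if (!started || chunks.isEmpty()) {
            return null;
        }
        byte[] chunk = chunks.removeFirst();
        bufferedBytes -= chunk.length;
        return chunk;
    }
}
```

In this sketch, playback stays "started" even if the queue later runs dry; whether to pause and rebuffer at that point is a design choice left open here.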

From the developer's perspective, media streaming comprises two tasks: transferring data and rendering data. Application developers usually concentrate more on transferring than on rendering, because codecs and media renderers are often already available.

On Android, streaming audio is somewhat easier than streaming video, because Android provides a friendlier API for rendering audio data in small chunks. Whatever our transfer mechanism is (RTP, raw UDP, or plain file reading), we need to feed the chunks we receive to the renderer. The `AudioTrack.write` function enables us to do so.
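The feeding loop can be sketched in plain Java as follows. `PcmSink` and `ChunkFeeder` are hypothetical names introduced here so the example runs outside Android; on a device, the sink role would be played by `AudioTrack.write(byte[], int, int)`. The sketch assumes the sink may accept fewer bytes than it is offered, so it retries until each chunk is consumed.

```java
import java.io.IOException;
import java.io.InputStream;

/** Hypothetical stand-in for the renderer; on Android, AudioTrack.write(byte[], int, int) fills this role. */
interface PcmSink {
    /** Returns the number of bytes accepted, or a value <= 0 on failure. */
    int write(byte[] data, int offset, int length);
}

class ChunkFeeder {
    /** Reads the source in chunks of at most chunkSize bytes and hands each to the sink; returns the total bytes delivered. */
    static long feed(InputStream source, PcmSink sink, int chunkSize) throws IOException {
        byte[] chunk = new byte[chunkSize];
        long total = 0;
        int read;
        while ((read = source.read(chunk)) != -1) {
            int offset = 0;
            // The sink may take only part of the chunk, so keep writing the remainder.
            while (offset < read) {
                int written = sink.write(chunk, offset, read - offset);
                if (written <= 0) {
                    throw new IOException("sink stalled or failed, returned " + written);
                }
                offset += written;
            }
            total += read;
        }
        return total;
    }
}
```

The source can be any `InputStream`: a socket stream, a file, or an in-memory buffer. That is what makes the transfer mechanism interchangeable.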

An AudioTrack object runs in one of two modes, static or stream. In static mode, we write the whole audio file to the audio hardware. In stream mode, audio data is written in small chunks. Static mode is more efficient because it doesn't have the overhead of copying data from the Java layer to the native layer, but it isn't suitable if the audio file is too big to fit in memory. It's important to notice that we call `play` at different times in the two modes. In static mode, we must call `write` first and then call `play`; otherwise, AudioTrack raises an exception complaining that the AudioTrack object isn't properly initialized. In stream mode, they are called in the reverse order. Under the hood, the mode determines the memory model: in static mode, audio data is passed to the renderer via shared memory, which is why static mode is more efficient.
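The call-order rule can be illustrated with a toy model. `ToyTrack` and `CallOrder` below are hypothetical classes written for this post; they are not the Android `AudioTrack` class and only mimic the ordering behavior described above (static mode rejects `play` before any data has been written).

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of the ordering rule only; it is not the Android AudioTrack class. */
class ToyTrack {
    enum Mode { STATIC, STREAM }

    private final Mode mode;
    private boolean hasData = false;
    final List<String> log = new ArrayList<>();

    ToyTrack(Mode mode) {
        this.mode = mode;
    }

    void write(byte[] pcm) {
        hasData = true;
        log.add("write(" + pcm.length + ")");
    }

    void play() {
        // A static track has nothing to play until the whole clip has been written.
        if (mode == Mode.STATIC && !hasData) {
            throw new IllegalStateException("static track has no data yet");
        }
        log.add("play");
    }
}

class CallOrder {
    /** Static mode: write the whole clip first, then play. */
    static ToyTrack playStatic(byte[] wholeClip) {
        ToyTrack track = new ToyTrack(ToyTrack.Mode.STATIC);
        track.write(wholeClip);
        track.play();
        return track;
    }

    /** Stream mode: play first, then keep writing chunks as they arrive. */
    static ToyTrack playStream(byte[][] chunks) {
        ToyTrack track = new ToyTrack(ToyTrack.Mode.STREAM);
        track.play();
        for (byte[] chunk : chunks) {
            track.write(chunk);
        }
        return track;
    }
}
```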

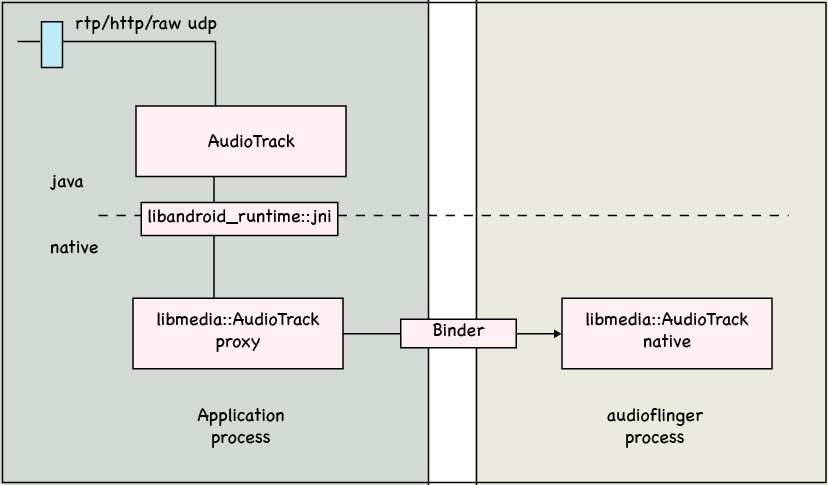

From a bird's-eye view, the architecture of a typical audio streaming application is:

Our application receives data from the network. The data is then passed to a Java-layer AudioTrack object, which internally calls through JNI into a native AudioTrack object. The native AudioTrack object in our application is a proxy that refers, through the binder IPC mechanism, to the implementation AudioTrack object residing in the audioflinger process. The audioflinger process interacts with the audio hardware.

Because our application and audioflinger are separate processes, playback of data that our application has already written to audioflinger will not stop even if our application exits.

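AudioTrack only supports raw, linear PCM audio. (G.711, which is sometimes equated with PCM, is a companded 8-bit variant and would itself need to be expanded to linear PCM first.) In other words, we can't stream MP3 audio directly. We have to handle decoding ourselves and feed the decoded data to AudioTrack.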
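Because the renderer takes raw PCM, the size of a chunk maps directly to playback time, which helps when choosing a chunk size. The following sketch shows the arithmetic; `PcmMath` and its method names are hypothetical helpers, and the figures in the usage note are ordinary CD-quality parameters chosen as an example.

```java
/** Hypothetical helper for raw PCM size arithmetic. */
class PcmMath {
    /** Bytes consumed per second of playback for linear PCM. */
    static int bytesPerSecond(int sampleRateHz, int channels, int bitsPerSample) {
        return sampleRateHz * channels * (bitsPerSample / 8);
    }

    /** How long a chunk of the given size plays, in milliseconds. */
    static double chunkDurationMillis(int chunkBytes, int sampleRateHz, int channels, int bitsPerSample) {
        return 1000.0 * chunkBytes / bytesPerSecond(sampleRateHz, channels, bitsPerSample);
    }
}
```

For 44100 Hz, stereo, 16-bit audio, `bytesPerSecond` gives 44100 × 2 × 2 = 176400, so a 4096-byte chunk holds roughly 23 ms of sound.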

Sample

http://code.google.com/p/rxwen-blog-stuff/source/browse/trunk/android/streaming_audio/

For demonstration purposes, this sample uses a very simple transfer mechanism: it reads data from a WAV file on disk in chunks. We can treat it as if the data were delivered from a media server on the network; the idea is the same.
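A WAV file starts with a header before the PCM bytes, so a reader usually consumes the header first and then streams the rest in chunks. The sketch below is not taken from the linked sample. It assumes the common canonical 44-byte header layout (a 16-byte `fmt ` chunk immediately followed by the `data` chunk), and `WavHeader` is a hypothetical class name. Files with extra chunks would need a more general parser.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

/** Hypothetical parser for the canonical 44-byte PCM WAV header. */
class WavHeader {
    final int channels;
    final int sampleRate;
    final int bitsPerSample;
    final int dataLength;

    private WavHeader(int channels, int sampleRate, int bitsPerSample, int dataLength) {
        this.channels = channels;
        this.sampleRate = sampleRate;
        this.bitsPerSample = bitsPerSample;
        this.dataLength = dataLength;
    }

    /** Consumes the first 44 bytes; afterwards the stream is positioned at the first PCM sample. */
    static WavHeader read(InputStream in) throws IOException {
        byte[] raw = new byte[44];
        new DataInputStream(in).readFully(raw);
        // WAV header fields are little-endian.
        ByteBuffer buf = ByteBuffer.wrap(raw).order(ByteOrder.LITTLE_ENDIAN);

        if (!tag(raw, 0).equals("RIFF") || !tag(raw, 8).equals("WAVE")) {
            throw new IOException("not a RIFF/WAVE stream");
        }
        if (!tag(raw, 12).equals("fmt ") || !tag(raw, 36).equals("data")) {
            throw new IOException("non-canonical header layout is not handled by this sketch");
        }
        if (buf.getShort(20) != 1) {
            throw new IOException("only linear PCM (format tag 1) is handled by this sketch");
        }
        return new WavHeader(buf.getShort(22), buf.getInt(24), buf.getShort(34), buf.getInt(40));
    }

    private static String tag(byte[] raw, int offset) {
        return new String(raw, offset, 4, StandardCharsets.US_ASCII);
    }
}
```

After `WavHeader.read` returns, the remaining bytes of the stream are the PCM data, which can be read in chunks and handed to the renderer.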

Update: check this post for a more concrete example.
