Multi-File Chunked Upload in a Mini Program

Backend code (Spring Boot + Redis + MySQL)

FileSparkController

@RestController
@RequestMapping()
@Slf4j
public class FileSparkController extends BaseController {

    @Value("${save.file-path}")
    private String filePath;

    @Resource
    private FileSparkService fileSparkService;

    @Resource
    private ChunkService chunkService;

    @Resource
    private SparkFileUtils sparkFileUtils;

    @GetMapping("/check")
    public Result checkFile(@RequestParam("md5") String md5) {
        // First check whether the complete file has already been uploaded
        LambdaQueryWrapper<FileSpark> wrapper = new LambdaQueryWrapper<>();
        wrapper.eq(FileSpark::getMd5, md5);
        List<FileSpark> tempFile = fileSparkService.list(wrapper);
        Map<String, Object> data = new HashMap<>();
        if (!tempFile.isEmpty()) {
            data.put("isUploaded", true);
            return data(201, "File already exists, instant upload", data);
        }
        // Otherwise, look up the chunk indexes recorded in Redis and return them to the front end
        List<Integer> chunkList = sparkFileUtils.get(md5);
        data.put("chunkList", chunkList);
        return data(201, "", data);
    }

    /**
     * Receives one chunk and writes it into the target file.
     *
     * @param chunk      chunk content
     * @param md5        MD5 of the whole file
     * @param index      chunk index (1-based)
     * @param chunkTotal total number of chunks
     * @param fileSize   file size
     * @param fileName   file name
     * @param chunkSize  chunk size
     * @return code 200 once the last chunk is stored, 201 for any other chunk, 500 if the write fails
     */
    @PostMapping("/upload/chunk")
    public Result uploadChunk(@RequestParam("chunk") MultipartFile chunk,
                              @RequestParam("md5") String md5,
                              @RequestParam("index") Integer index,
                              @RequestParam("chunkTotal") Integer chunkTotal,
                              @RequestParam("fileSize") Long fileSize,
                              @RequestParam("fileName") String fileName,
                              @RequestParam("chunkSize") Long chunkSize) {
        // Name the target file after its MD5 and keep the original extension
        String[] splits = fileName.split("\\.");
        String type = splits[splits.length - 1];
        String resultFileName = filePath + md5 + "." + type;
        // saveChunk returns 0 when the write fails; do not report that as a success
        if (chunkService.saveChunk(chunk, md5, index, chunkSize, resultFileName) != 1) {
            return data(500, "Chunk upload failed", index);
        }
        log.info("Uploaded chunk: {}, {}, {}, {}", index, chunkTotal, fileName, resultFileName);
        if (Objects.equals(index, chunkTotal)) {
            // Last chunk: save the file record and clear the chunk indexes kept in Redis
            FileSpark filePO = new FileSpark();
            filePO.setName(fileName).setMd5(md5).setSize(fileSize);
            fileSparkService.save(filePO);
            sparkFileUtils.remove(md5);
            return data(200, "File uploaded successfully", index);
        } else {
            return data(201, "Chunk uploaded successfully", index);
        }
    }

    @GetMapping("/fileList")
    public Result getFileList() {
        List<FileSpark> fileList = fileSparkService.list();
        return data(201, "File list retrieved successfully", fileList);
    }
}
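The controller treats the upload as finished when the chunk whose index equals chunkTotal arrives, and /check returns the recorded indexes so the client knows what the server already has. As a minimal sketch of how those indexes could be interpreted, the helper below computes the missing chunks and checks that every index from 1 to chunkTotal is present. ChunkProgress and its method names are hypothetical and not part of the original project.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical helper (not in the original project) for reasoning about recorded chunk indexes. */
final class ChunkProgress {

    private ChunkProgress() {}

    /** Returns the 1-based indexes in [1, chunkTotal] that have not been recorded yet. */
    static List<Integer> missingChunks(Collection<Integer> uploaded, int chunkTotal) {
        Set<Integer> seen = new HashSet<>(uploaded); // duplicates from retried chunks collapse here
        List<Integer> missing = new ArrayList<>();
        for (int i = 1; i <= chunkTotal; i++) {
            if (!seen.contains(i)) {
                missing.add(i);
            }
        }
        return missing;
    }

    /** True only when every chunk from 1 to chunkTotal has been recorded. */
    static boolean isComplete(Collection<Integer> uploaded, int chunkTotal) {
        return chunkTotal > 0 && missingChunks(uploaded, chunkTotal).isEmpty();
    }

    public static void main(String[] args) {
        List<Integer> uploaded = List.of(1, 2, 2, 5); // e.g. the chunkList returned by /check
        System.out.println(missingChunks(uploaded, 5)); // chunks still to send
        System.out.println(isComplete(uploaded, 5));
    }
}
```

A check like isComplete would only matter if chunks could arrive out of order or in parallel; with the strictly sequential client in this article, comparing index with chunkTotal gives the same answer.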

ChunkServiceImpl

@Override
public Integer saveChunk(MultipartFile chunk, String md5, Integer index, Long chunkSize, String resultFileName) {
    try (RandomAccessFile randomAccessFile = new RandomAccessFile(resultFileName, "rw")) {
        // Byte offset of this chunk in the target file (index is 1-based)
        long offset = chunkSize * (index - 1);
        // Move to the offset of this chunk
        randomAccessFile.seek(offset);
        // Write the chunk content
        randomAccessFile.write(chunk.getBytes());
        // Record the chunk index in Redis so that /check can report it
        sparkFileUtils.save(md5, index);
        return 1;
    } catch (IOException e) {
        e.printStackTrace();
        return 0;
    }
}
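Because every chunk is written at chunkSize * (index - 1), the chunks do not have to arrive in order for the file to come out right. The following self-contained sketch illustrates this with a temporary file and a tiny chunk size; OffsetWriteDemo, writeChunk and roundTrip are names made up for the illustration, and only the offset formula is taken from saveChunk.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;

/** Hypothetical demo class; only the offset formula comes from saveChunk. */
final class OffsetWriteDemo {

    private OffsetWriteDemo() {}

    /** Writes one chunk at chunkSize * (index - 1), where index is 1-based. */
    static void writeChunk(Path target, byte[] chunk, int index, long chunkSize) throws IOException {
        try (RandomAccessFile file = new RandomAccessFile(target.toFile(), "rw")) {
            file.seek(chunkSize * (index - 1));
            file.write(chunk);
        }
    }

    /** Splits the message into chunks, writes them last chunk first, and reads the file back. */
    static String roundTrip(String message, int chunkSize) {
        byte[] source = message.getBytes(StandardCharsets.UTF_8);
        int chunkTotal = (source.length + chunkSize - 1) / chunkSize;
        try {
            Path target = Files.createTempFile("chunk-demo", ".bin");
            try {
                for (int index = chunkTotal; index >= 1; index--) {
                    int start = (index - 1) * chunkSize;
                    int end = Math.min(start + chunkSize, source.length);
                    writeChunk(target, Arrays.copyOfRange(source, start, end), index, chunkSize);
                }
                return new String(Files.readAllBytes(target), StandardCharsets.UTF_8);
            } finally {
                Files.deleteIfExists(target);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Chunks are written in reverse order, yet the content is reassembled correctly
        System.out.println(roundTrip("chunked upload", 4));
    }
}
```

Note that the formula only works when every chunk except the last has exactly chunkSize bytes, which is why the client sends chunkSize with each request.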

SparkFileUtils

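// Registered as a Spring bean so that @Resource injection works here and in the classes that use it
@Component
public class SparkFileUtils {

    @Resource
    private RedisTemplate<String, Integer> redisTemplate;

    // Prefix of the Redis keys that hold the uploaded chunk indexes
    private static final String FILE_HEAD = "spark-file:";

    /**
     * Records an uploaded chunk index.
     *
     * @param md5   MD5 of the file
     * @param index chunk index
     * @return true if the index was appended to the list
     */
    public boolean save(String md5, Integer index) {
        Long length = redisTemplate.opsForList().rightPush(FILE_HEAD + md5, index);
        return length != null && length > 0;
    }

    /**
     * Returns the chunk indexes temporarily stored for the given MD5.
     *
     * @param md5 MD5 of the file
     * @return list of uploaded chunk indexes
     */
    public List<Integer> get(String md5) {
        return redisTemplate.opsForList().range(FILE_HEAD + md5, 0, -1);
    }

    /**
     * Deletes the chunk indexes stored for the given MD5.
     *
     * @param md5 MD5 of the file
     * @return true if the key was deleted
     */
    public boolean remove(String md5) {
        return Boolean.TRUE.equals(redisTemplate.delete(FILE_HEAD + md5));
    }
}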
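SparkFileUtils keeps one Redis list per file under the key "spark-file:" + md5. For experimenting with the same save/get/remove contract without a Redis instance, an in-memory stand-in along the following lines might be enough. InMemoryChunkTracker is a hypothetical class, not part of the original project, and it differs in one respect that is an assumption of this sketch: it stores the indexes in a sorted set, so a retried chunk is not recorded twice and save returns false for a repeat.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentSkipListSet;

/** Hypothetical in-memory stand-in for SparkFileUtils, intended for local experiments only. */
final class InMemoryChunkTracker {

    // Same key prefix as SparkFileUtils
    private static final String FILE_HEAD = "spark-file:";

    private final Map<String, Set<Integer>> store = new ConcurrentHashMap<>();

    /** Records a chunk index; returns false if that index was already recorded. */
    boolean save(String md5, Integer index) {
        return store.computeIfAbsent(FILE_HEAD + md5, key -> new ConcurrentSkipListSet<>()).add(index);
    }

    /** Returns the recorded indexes in ascending order, or an empty list if none exist. */
    List<Integer> get(String md5) {
        Set<Integer> indexes = store.get(FILE_HEAD + md5);
        return indexes == null ? new ArrayList<>() : new ArrayList<>(indexes);
    }

    /** Removes all indexes for the file; returns true if anything was stored. */
    boolean remove(String md5) {
        return store.remove(FILE_HEAD + md5) != null;
    }

    public static void main(String[] args) {
        InMemoryChunkTracker tracker = new InMemoryChunkTracker();
        tracker.save("abc", 2);
        tracker.save("abc", 1);
        tracker.save("abc", 2); // retried chunk, ignored
        System.out.println(tracker.get("abc"));
    }
}
```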

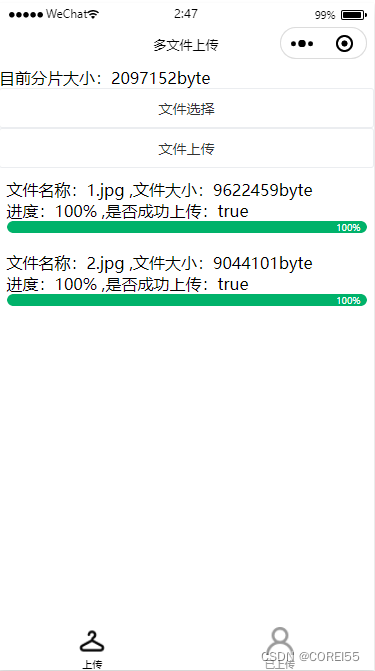

Mini program code (uni-app)

Note:

The chunked upload logic has already been encapsulated in the BigUpload class (sparkUpload.js).

index.vue

<template>
    <view>
        <view>Current chunk size: {{chunkSize}} bytes</view>
        <u-button @tap="selectFile">Select files</u-button>
        <u-button @tap="upload">Upload files</u-button>
        <view v-for="(item,index) in uploadFiles" :key="index" style="padding: 2vw;margin: 1vw 0;">
            <view>File name: {{item.fileName}}, file size: {{item.size}} bytes</view>
            <view>Progress: {{item.rate}}%, uploaded successfully: {{item.uploadStatus}}</view>
            <u-line-progress :percentage="item.rate" activeColor="#00b26a"></u-line-progress>
        </view>
    </view>
</template>

<script>
import {reactive,toRefs} from "vue"
import BigUpload from "@/tools/sparkUpload";

export default {
    setup(){
        const data = reactive({
            // Chunk size in bytes (1 MiB); shown on the page and passed to BigUpload
            chunkSize: 1024*1024,
            uploadFiles:[],
        })

        const selectFile = async () => {
            // count is a required option of wx.chooseMessageFile; 10 is an arbitrary limit
            const res = await wx.chooseMessageFile({ count: 10 })
            console.log(res)
            let tempArray = []
            for (let i = 0; i < res.tempFiles.length; i++) {
                tempArray.push({
                    path:res.tempFiles[i].path,
                    size:res.tempFiles[i].size,
                    fileName:res.tempFiles[i].name,
                    md5:'',
                    rate:0,
                    uploadStatus:false
                })
            }
            // Replace the previous selection; use the commented line instead to append to it
            // data.uploadFiles = [...data.uploadFiles,...tempArray]
            data.uploadFiles = [...tempArray]
            console.log(data.uploadFiles)
        }

        const upload = () => {
            // Start one BigUpload instance per selected file
            for (let i = 0; i < data.uploadFiles.length; i++) {
                const uploadFile = new BigUpload({
                    uploadUrl:'http://localhost:9001/upload/chunk',
                    checkUrl:'http://localhost:9001/check',
                    filePath: data.uploadFiles[i].path,
                    byteLength: data.uploadFiles[i].size,
                    size: data.chunkSize, // chunk size
                    fileName: data.uploadFiles[i].fileName,
                    // Progress callback, p is a percentage
                    drowSpeed: (p) => {
                        data.uploadFiles[i].rate = p
                    },
                    // Completion callback, state is true on success
                    callback: (state) => {
                        if (state) {
                            data.uploadFiles[i].uploadStatus = true
                        }
                    }
                })
                uploadFile.startUpload()
            }
        }

        return {...toRefs(data),selectFile,upload}
    }
}
</script>

sparkUpload.js

import SparkMD5 from 'spark-md5'

export default class BigUpload {
    constructor(Setting) {
        this.Setting = Setting
    }

    startUpload() {
        this.chunkSize = this.Setting.size
        if (!this.Setting.filePath) {
            return
        }
        this.pt_md5 = ''
        // Total number of chunks
        this.chunks = Math.ceil(this.Setting.byteLength / this.chunkSize)
        this.currentChunk = 0
        this.gowith = true
        this.chunkList = []
        // Read the whole file once to compute its MD5, then ask the server what it already has
        this.fileSlice(0, this.Setting.byteLength, file => {
            this.handshake(flag => {
                if (flag) {
                    this.loadNext()
                } else {
                    this.Setting.callback(false)
                }
            }, file)
        })
    }

    handshake = (cbk, e) => {
        // Compute the MD5 of the whole file and send it to the check endpoint
        const md5 = this.getDataMd5(e)
        this.pt_md5 = md5
        uni.request({
            url: this.Setting.checkUrl,
            method: 'GET',
            data: { md5: md5 },
            success: (res) => {
                console.log(res)
                if (res.statusCode === 200) {
                    const data = res.data
                    console.log(data)
                    if (data.data.isUploaded) {
                        // The complete file already exists on the server: instant upload
                        this.gowith = false
                        this.drowSpeed(100)
                        this.callback(true)
                        return uni.$showMsg('Upload complete')
                    } else {
                        this.gowith = true
                        this.chunkList = data.data.chunkList || []
                        cbk(true)
                    }
                } else {
                    // Report the failure to the caller instead of leaving the upload hanging
                    cbk(false)
                    return uni.$showMsg('network fail')
                }
            },
            fail: (err) => {
                cbk(false)
                return uni.$showMsg('check fail')
            }
        })
    }

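    loadNext() {
        // Resume: skip chunks the server already reported in chunkList, but always resend
        // the last chunk, because the backend finalizes the file when index equals chunkTotal
        const uploaded = this.chunkList || []
        while (this.currentChunk < this.chunks - 1 && uploaded.includes(this.currentChunk + 1)) {
            this.currentChunk++
        }
        const p = this.currentChunk * 100 / this.chunks
        this.drowSpeed(parseInt(p));
        // Byte range of the current chunk; the last chunk may be shorter than chunkSize
        let start = this.currentChunk * this.chunkSize
        let length = start + this.chunkSize >= this.Setting.byteLength ? this.Setting.byteLength - start : this.chunkSize
        if (this.gowith) {
            this.fileSlice(start, length, file => {
                this.uploadFileBinary(file)
            })
        }
    }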

    uploadFileBinary(data) {
        const fs = uni.getFileSystemManager()
        const md5 = this.getDataMd5(data)
        const tempDir = `${wx.env.USER_DATA_PATH}/up_temp`
        const tempPath = `${tempDir}/${md5}.temp`
        // Make sure the temp directory exists before writing. The synchronous calls avoid
        // a race between an asynchronous access check and the writeFile call below
        try {
            fs.accessSync(tempDir)
        } catch (e) {
            fs.mkdirSync(tempDir, false)
        }
        // uni.uploadFile needs a file path, so write the chunk to a temp file first
        fs.writeFile({
            filePath: tempPath,
            encoding: 'binary',
            data: data,
            success: res => {
                // Form fields expected by the /upload/chunk endpoint
                const formData = {
                    index: this.currentChunk + 1, // the server uses 1-based indexes
                    md5: this.pt_md5,
                    chunkTotal: this.chunks,
                    fileSize: this.Setting.byteLength,
                    fileName: this.Setting.fileName,
                    chunkSize: this.Setting.size
                }
                uni.uploadFile({
                    url: this.Setting.uploadUrl,
                    filePath: tempPath,
                    name: 'chunk',
                    formData: formData,
                    success: res2 => {
                        console.log('res2', res2)
                        fs.unlinkSync(tempPath)
                        if (res2.statusCode === 200) {
                            const result = JSON.parse(res2.data)
                            console.log('data', result)
                            if (result.code === 201) {
                                // Chunk stored; continue with the next one
                                console.log('Chunk uploaded successfully')
                                this.currentChunk++
                                if (this.currentChunk < this.chunks) {
                                    this.loadNext()
                                }
                            } else if (result.code === 200) {
                                // Last chunk stored; the whole file is uploaded
                                this.drowSpeed(100)
                                console.log('File uploaded successfully')
                                this.callback(true)
                                return uni.$showMsg('File uploaded successfully')
                            } else {
                                this.callback(false)
                            }
                        } else {
                            this.callback(false)
                        }
                    },
                    fail: err => {
                        console.log(err)
                        this.callback(false)
                    }
                })
            },
            fail: err => {
                console.log('write file', err)
                this.callback(false)
            }
        })
    }

    // Reports progress (a percentage) through the optional drowSpeed setting
    drowSpeed(p) {
        if (this.Setting.drowSpeed != null && typeof (this.Setting.drowSpeed) === 'function') {
            this.Setting.drowSpeed(p)
        }
    }

    // Returns the MD5 of a binary string, or undefined when no data is given
    getDataMd5(data) {
        if (data) {
            let trunkSpark = new SparkMD5()
            trunkSpark.appendBinary(data)
            let md5 = trunkSpark.end()
            return md5
        }
    }

    // Toggles between paused and running; cbk receives the new running state
    isPlay(cbk) {
        if (this.gowith) {
            this.gowith = false
            if (typeof (cbk) === 'function') cbk(false)
        } else {
            this.gowith = true
            this.loadNext()
            if (typeof (cbk) === 'function') cbk(true)
        }
    }

    // Reads length bytes of the file starting at start and passes them to cbk
    fileSlice(start, length, cbk) {
        uni.getFileSystemManager().readFile({
            filePath: this.Setting.filePath,
            encoding: 'binary',
            position: start,
            length: length,
            success: res => {
                cbk(res.data)
            },
            fail: err => {
                console.error(err)
                this.callback(false)
            }
        })
    }

    // Reports the final result through the optional callback setting
    callback(res) {
        if (typeof (this.Setting.callback) === 'function') {
            this.Setting.callback(res)
        }
    }
}
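The chunk arithmetic in startUpload and loadNext (the chunk count is the ceiling of byteLength / chunkSize, and the last chunk takes whatever bytes remain) can be checked in isolation. The sketch below restates it in Java, the backend language, purely as a worked example; ChunkRanges and its method names are hypothetical.

```java
/** Hypothetical helper restating the client-side chunk arithmetic for illustration. */
final class ChunkRanges {

    private ChunkRanges() {}

    /** Integer equivalent of Math.ceil(byteLength / chunkSize). */
    static int chunkCount(long byteLength, long chunkSize) {
        return (int) ((byteLength + chunkSize - 1) / chunkSize);
    }

    /** Returns {start, length} for a 0-based chunk number, as loadNext computes them. */
    static long[] range(int currentChunk, long byteLength, long chunkSize) {
        long start = currentChunk * chunkSize;
        long length = Math.min(chunkSize, byteLength - start);
        return new long[] {start, length};
    }

    public static void main(String[] args) {
        long chunkSize = 1024 * 1024;      // 1 MiB chunks
        long byteLength = 2_621_440L;      // a 2.5 MiB file
        int chunks = chunkCount(byteLength, chunkSize);
        for (int i = 0; i < chunks; i++) {
            long[] r = range(i, byteLength, chunkSize);
            // The server-side index is i + 1, and its write offset equals r[0]
            System.out.println("chunk " + (i + 1) + ": start=" + r[0] + ", length=" + r[1]);
        }
    }
}
```

For the 2.5 MiB example this yields three chunks, the last one 524288 bytes long, and each start value equals the offset chunkSize * (index - 1) that saveChunk seeks to.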

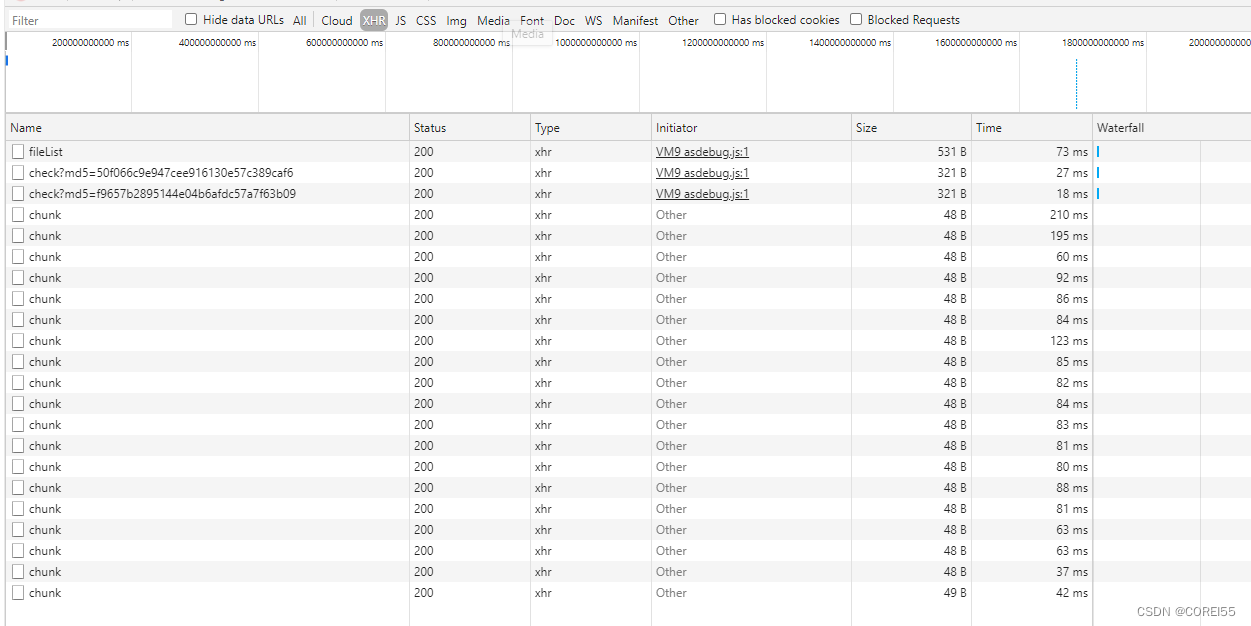

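Result

Try it by scanning the QR code

You may need to be added as a trial member to access it. Send me your WeChat ID and I will add you as a trial member.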
