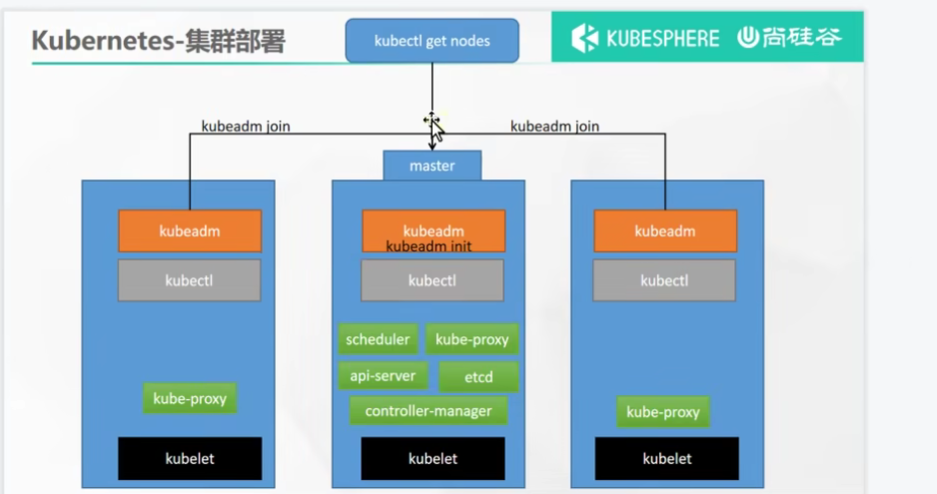

[K8s Study Notes 001] Deploying a K8s Cluster and the Dashboard

K8s Cluster Deployment

Video course: https://www.bilibili.com/video/BV13Q4y1C7hS?p=26&spm_id_from=pageDriver&vd_source=0bf662c33adfc181186b04ba57e11dff

Companion notes: https://www.yuque.com/leifengyang/oncloud/kgheaf

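Kubernetes components:

- kubectl: the command-line client. It turns the commands it receives into requests to kube-apiserver and is the operator's entry point to the whole system.
- kube-apiserver: the control entry point of the whole system. It exposes the REST API and provides authentication, authorization, access control, and API registration and discovery.
- kube-controller-manager: runs the system's background control loops, such as tracking node status, keeping the number of Pods at the declared count, and associating Pods with Services.
- kube-scheduler: assigns Pods to nodes. It watches kube-apiserver for newly created Pods that have no node yet and picks a node for each one based on available resources.
- etcd: a consistent key-value store that holds the state of the entire cluster. Only kube-apiserver talks to it directly.
- kube-proxy: runs on every node and acts as the network proxy for Pods. It watches Service information through kube-apiserver, not etcd, and programs forwarding rules accordingly.
- kubelet: runs on every node as its agent. It receives the Pods assigned to that node, manages their containers, and periodically reports container status to kube-apiserver.
- DNS: a cluster add-on (CoreDNS in this setup) that creates a DNS record for every Service, so all Pods can reach Services by name.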

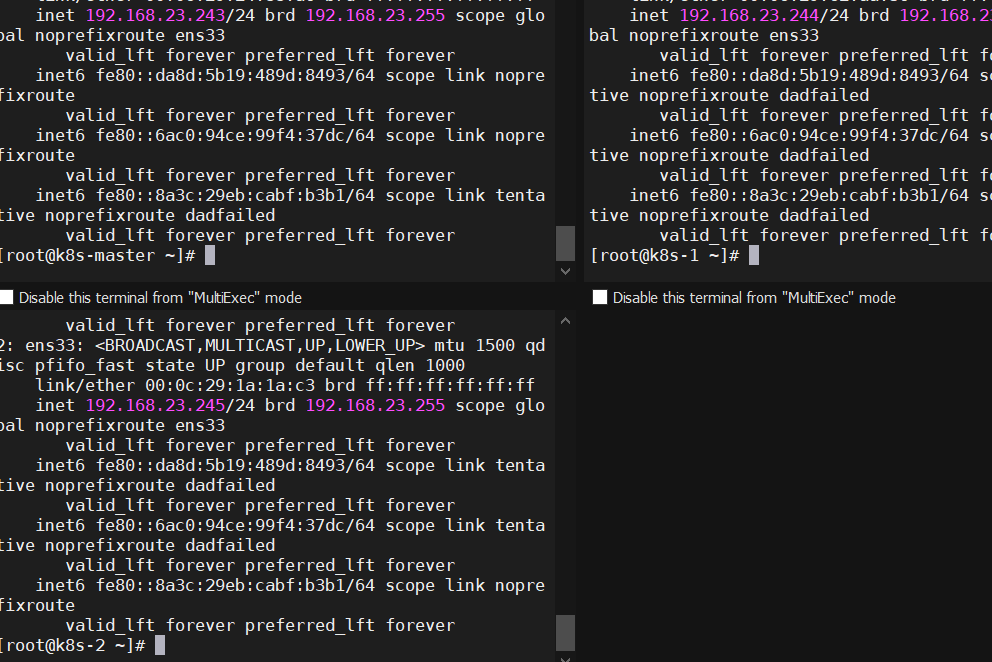

Prepare three machines:

K8s-Master 192.168.23.243

K8s-01 192.168.23.244

K8s-02 192.168.23.245

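Base setup, run on all three machines:

```bash
# Set SELinux to permissive mode (effectively disabling it)
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

# Turn off swap now, and comment out swap entries in /etc/fstab so it stays off after a reboot
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab

# Let iptables see bridged traffic: load br_netfilter now and on every boot
sudo modprobe br_netfilter
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sudo sysctl --system
```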
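You can quickly confirm that the base setup took effect. The following is a minimal sketch with hypothetical function names. It assumes the sysctl file name used above and reads `/proc/swaps`. Both paths are parameters, so the checks can also be pointed at test files.

```bash
#!/usr/bin/env bash
# Hypothetical helpers to verify the base setup above.

# Succeeds if both bridge keys are set to 1 in the given sysctl file.
# (The dots in the key names act as regex wildcards here, which is harmless.)
sysctl_conf_ok() {
  local conf=${1:-/etc/sysctl.d/k8s.conf} key
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$conf" || return 1
  done
}

# Succeeds if no swap is active: /proc/swaps then holds only its header line.
swap_off() {
  local swaps=${1:-/proc/swaps}
  [ "$(wc -l < "$swaps")" -le 1 ]
}
```

Usage: `sysctl_conf_ok && swap_off && lsmod | grep -q br_netfilter && echo "base setup OK"`.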

Install Docker on all three machines:

```bash
sudo yum install -y yum-utils
sudo yum-config-manager \
  --add-repo \
  http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce-20.10.7 docker-ce-cli-20.10.7 containerd.io-1.4.6
systemctl enable docker --now
```

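Configure Docker. This sets a registry mirror (accelerator) to speed up image pulls, the systemd cgroup driver, a 100 MB size cap for container log files, and the overlay2 storage driver:

```bash
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
```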
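The kubelet and the container runtime are expected to use the same cgroup driver, and `exec-opts` above switches Docker to `systemd`. The following is a minimal sketch for confirming that the setting took effect after the restart. The function name is hypothetical. It relies on `docker info --format` exposing the `CgroupDriver` field.

```bash
#!/usr/bin/env bash
# Hypothetical check: succeeds if Docker runs with the systemd cgroup driver,
# as requested by "exec-opts" in /etc/docker/daemon.json above.
docker_uses_systemd_cgroups() {
  local driver
  driver=$(docker info --format '{{.CgroupDriver}}' 2>/dev/null) || return 1
  [ "$driver" = "systemd" ]
}

# Usage after "systemctl restart docker":
#   docker_uses_systemd_cgroups && echo "cgroup driver: systemd"
```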

Install kubelet, kubeadm, and kubectl on all three machines:

```bash
cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF

# "exclude=" keeps a routine "yum update" from upgrading these packages;
# --disableexcludes lifts that restriction for this one install.
yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes
sudo systemctl enable --now kubelet
```
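Right after installation, the kubelet restarts every few seconds until `kubeadm init` or `kubeadm join` gives it a configuration. That is expected. Before moving on, check that all three tools report the pinned version. The sketch below assumes the 1.20.9 version from the install command above, and its function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical check that kubeadm, kubelet and kubectl all report the pinned version.
versions_match() {
  local want=${1:-v1.20.9} v
  v=$(kubeadm version -o short) && [ "$v" = "$want" ] || return 1
  # "kubelet --version" prints e.g. "Kubernetes v1.20.9".
  v=$(kubelet --version | awk '{print $2}') && [ "$v" = "$want" ] || return 1
  # kubectl 1.20 with --short prints e.g. "Client Version: v1.20.9".
  v=$(kubectl version --client --short | awk '{print $3}') && [ "$v" = "$want" ] || return 1
}

# Usage:
#   versions_match && echo "all tools at v1.20.9"
```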

Download the required images with a script:

```bash
sudo tee ./images.sh <<-'EOF'
#!/bin/bash
images=(
  kube-apiserver:v1.20.9
  kube-proxy:v1.20.9
  kube-controller-manager:v1.20.9
  kube-scheduler:v1.20.9
  coredns:1.7.0
  etcd:3.4.13-0
  pause:3.2
)
for imageName in "${images[@]}"; do
  docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName
done
EOF

chmod +x ./images.sh && ./images.sh
```
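kubeadm can print the image list it needs itself. Run `kubeadm config images list` with the same `--kubernetes-version` and `--image-repository` flags that are passed to `kubeadm init` below. `kubeadm config images pull` with the same flags is an alternative to the script above. The following sketch compares that list with the local Docker cache and prints anything still missing. The function name is hypothetical, and kubeadm and docker are assumed to be on PATH.

```bash
#!/usr/bin/env bash
# Print every image kubeadm expects that is not present in the local Docker cache.
missing_images() {
  local repo=${1:-registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images}
  local version=${2:-v1.20.9} img
  # Unquoted on purpose: split kubeadm's output into one image per word.
  for img in $(kubeadm config images list --kubernetes-version "$version" --image-repository "$repo"); do
    docker image inspect "$img" >/dev/null 2>&1 || echo "$img"
  done
}

# Usage: empty output means every image is already pulled.
#   missing_images
```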

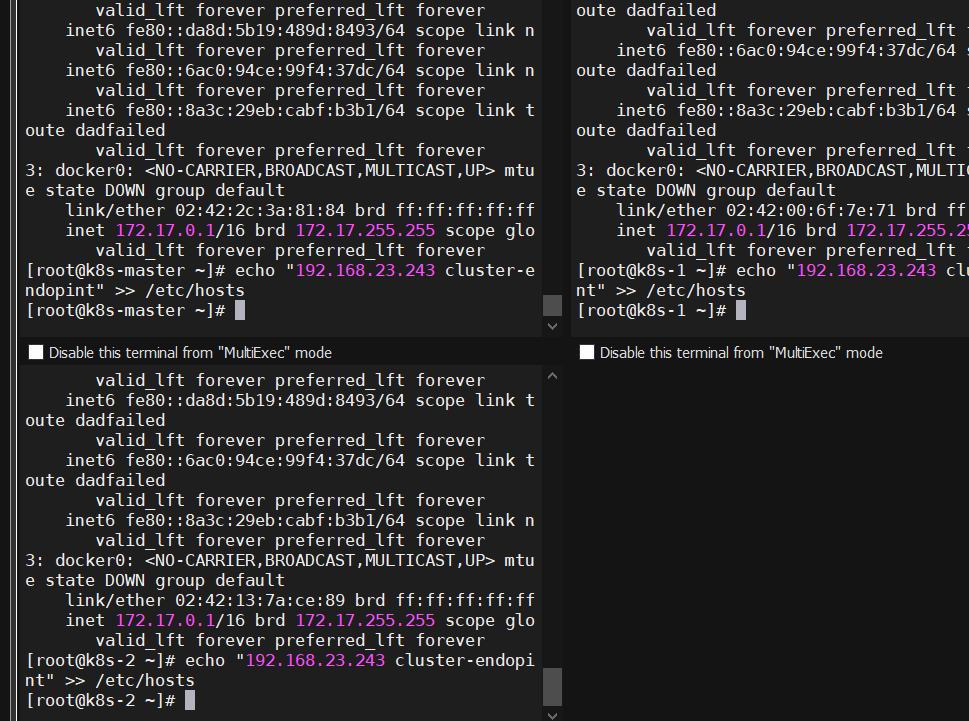

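On every machine, make the host names resolvable through /etc/hosts. At minimum, `cluster-endpoint` (used as `--control-plane-endpoint` below) must resolve to the master, 192.168.23.243.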
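The following is a minimal sketch of this step. It assumes `cluster-endpoint` should map to the master's address, 192.168.23.243, which matches the `--apiserver-advertise-address` used for `kubeadm init`. The helper name is hypothetical. It skips names that are already mapped, so it is safe to re-run.

```bash
#!/usr/bin/env bash
# Append "IP NAME" to a hosts file unless NAME is already mapped on an uncommented line.
add_host_entry() {
  local ip=$1 name=$2 hosts=${3:-/etc/hosts}
  if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|$)" "$hosts"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts"
}

# Usage (as root, on all three machines):
#   add_host_entry 192.168.23.243 cluster-endpoint
```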

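Initialize the master node (on K8s-Master only):

```bash
kubeadm init \
  --apiserver-advertise-address=192.168.23.243 \
  --control-plane-endpoint=cluster-endpoint \
  --image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \
  --kubernetes-version v1.20.9 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=193.168.0.0/16   # changed to 193.168: 192.168.0.0/16 would overlap the hosts' 192.168.23.x addresses

# The service, pod, and host network ranges must not overlap each other.
```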
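The no-overlap rule can be checked mechanically. Two IPv4 CIDR blocks overlap exactly when their addresses agree under the shorter of the two prefix masks. The following is a minimal Bash sketch with hypothetical function names. It handles IPv4 only and does no input validation. Note that 193.168.0.0/16 avoids the host network but is public address space rather than an RFC 1918 private range.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit 0) if two IPv4 CIDR blocks overlap.
cidr_overlap() {
  local len1=${1#*/} len2=${2#*/}
  local len=$(( len1 < len2 ? len1 : len2 ))
  # Mask for the shorter prefix; bash arithmetic is 64-bit, so trim to 32 bits.
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local n1 n2
  n1=$(ip_to_int "${1%/*}")
  n2=$(ip_to_int "${2%/*}")
  (( (n1 & mask) == (n2 & mask) ))
}

# Usage: check the service and pod ranges against the host network.
#   for net in 10.96.0.0/16 193.168.0.0/16; do
#     cidr_overlap "$net" 192.168.23.0/24 && echo "$net overlaps the host network"
#   done
```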

On success, kubeadm prints:

```text
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join cluster-endpoint:6443 --token 5v4gxm.pf7qndt1xq7q9wbo \
    --discovery-token-ca-cert-hash sha256:f33d1ad509cfd7b8a8a0ab430ce15f5e97da6835e783239103a39704cdccfea5 \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token 5v4gxm.pf7qndt1xq7q9wbo \
    --discovery-token-ca-cert-hash sha256:f33d1ad509cfd7b8a8a0ab430ce15f5e97da6835e783239103a39704cdccfea5
```

Following the output above, set up kubectl for the current user:

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

```text
[root@k8s-master ~]# kubectl get nodes
NAME         STATUS     ROLES                  AGE     VERSION
k8s-master   NotReady   control-plane,master   9m19s   v1.20.9
```

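The master stays NotReady until a Pod network add-on is installed. As the init output says, deploy one (Calico here). The list of add-ons is at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

```bash
[root@k8s-master ~]# curl https://docs.projectcalico.org/v3.20/manifests/calico.yaml -O
[root@k8s-master ~]# kubectl apply -f calico.yaml
```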

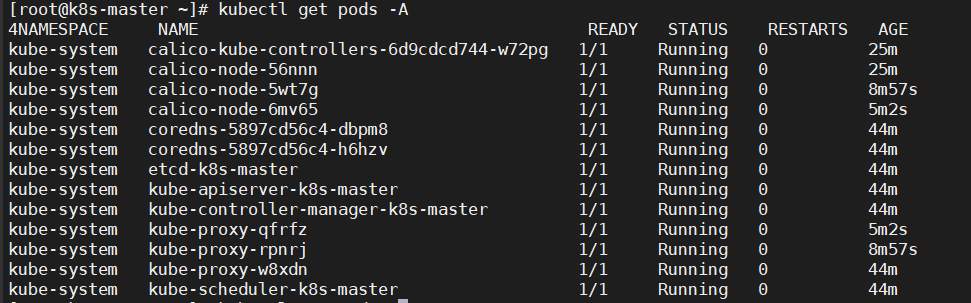

```text
[root@k8s-master ~]# kubectl get pods -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-6d9cdcd744-w72pg   1/1     Running   0          4m35s
kube-system   calico-node-56nnn                          1/1     Running   0          4m44s
kube-system   coredns-5897cd56c4-dbpm8                   1/1     Running   0          24m
kube-system   coredns-5897cd56c4-h6hzv                   1/1     Running   0          24m
kube-system   etcd-k8s-master                            1/1     Running   0          24m
kube-system   kube-apiserver-k8s-master                  1/1     Running   0          24m
kube-system   kube-controller-manager-k8s-master         1/1     Running   0          24m
kube-system   kube-proxy-w8xdn                           1/1     Running   0          24m
kube-system   kube-scheduler-k8s-master                  1/1     Running   0          24m
```
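The Calico and CoreDNS Pods take a few minutes to reach the state shown above. Instead of scanning the listing by eye, you can filter it for Pods that are not fully ready yet. The sketch below assumes the default `kubectl get pods -A` column layout (NAMESPACE, NAME, READY, STATUS, ...). The function name is hypothetical. Pods in a `Completed` state would also be reported.

```bash
#!/usr/bin/env bash
# Read "kubectl get pods -A" output on stdin and print namespace/name of every pod
# that is not fully ready (READY x/y with x != y) or whose STATUS is not Running.
not_ready_pods() {
  awk 'NR > 1 {
    split($3, r, "/")
    if (r[1] != r[2] || $4 != "Running") print $1 "/" $2
  }'
}

# Usage: re-run until it prints nothing.
#   kubectl get pods -A | not_ready_pods
```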

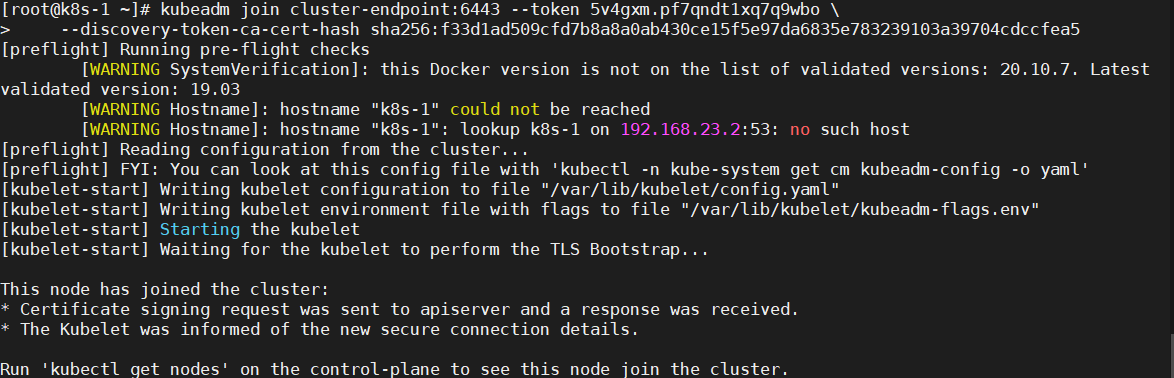

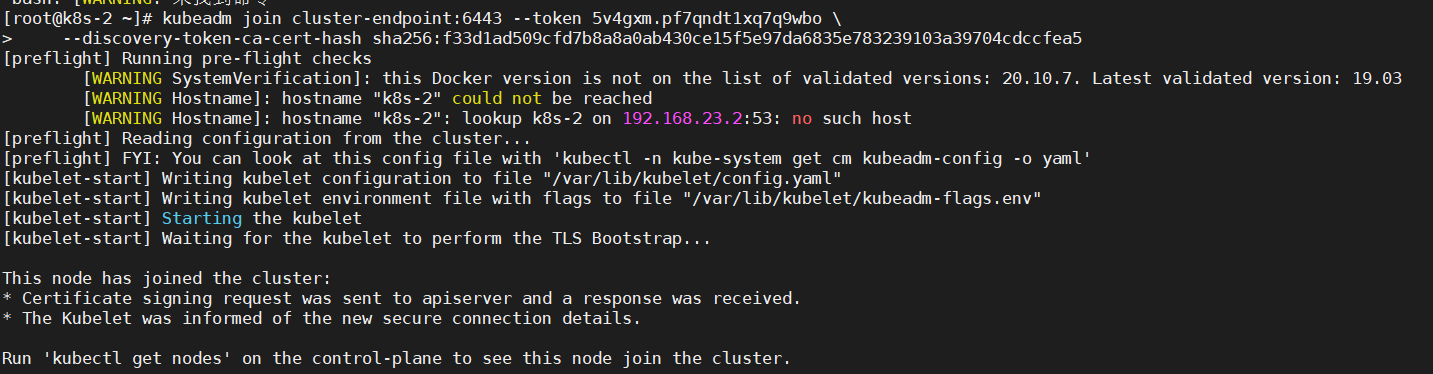

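Join the worker nodes

On the worker nodes K8s-01 and K8s-02, run the worker join command printed by kubeadm init, as root:

```bash
kubeadm join cluster-endpoint:6443 --token 5v4gxm.pf7qndt1xq7q9wbo \
  --discovery-token-ca-cert-hash sha256:f33d1ad509cfd7b8a8a0ab430ce15f5e97da6835e783239103a39704cdccfea5
```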

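The token printed by kubeadm init is valid for only 24 hours. To join a node after it expires, generate a new token and a complete join command on the master:

```bash
[root@k8s-master ~]# kubeadm token create --print-join-command
kubeadm join cluster-endpoint:6443 --token 5y0582.2q536s12onayxsrf --discovery-token-ca-cert-hash sha256:f33d1ad509cfd7b8a8a0ab430ce15f5e97da6835e783239103a39704cdccfea5
```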
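The two join commands above differ only in the token. A bootstrap token has the form `[a-z0-9]{6}.[a-z0-9]{16}`. The `--discovery-token-ca-cert-hash` is the SHA-256 of the cluster CA's public key, so it stays the same when the token is renewed. The following is a hypothetical sketch with two helpers. One checks the token format. The other recomputes the hash with the openssl pipeline from the kubeadm documentation, assuming the default CA path `/etc/kubernetes/pki/ca.crt`. `kubeadm token list` shows the existing tokens and their remaining TTL.

```bash
#!/usr/bin/env bash
# Succeed if $1 looks like a kubeadm bootstrap token: 6 chars, ".", 16 chars, all [a-z0-9].
valid_bootstrap_token() {
  local re='^[a-z0-9]{6}\.[a-z0-9]{16}$'
  [[ $1 =~ $re ]]
}

# Print "sha256:<hex>" for the public key of the cluster CA (run on the master).
ca_cert_hash() {
  local crt=${1:-/etc/kubernetes/pki/ca.crt} hex
  hex=$(openssl x509 -pubkey -in "$crt" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex | sed 's/^.* //')
  echo "sha256:$hex"
}

# Usage on the master:
#   valid_bootstrap_token 5y0582.2q536s12onayxsrf && ca_cert_hash
```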

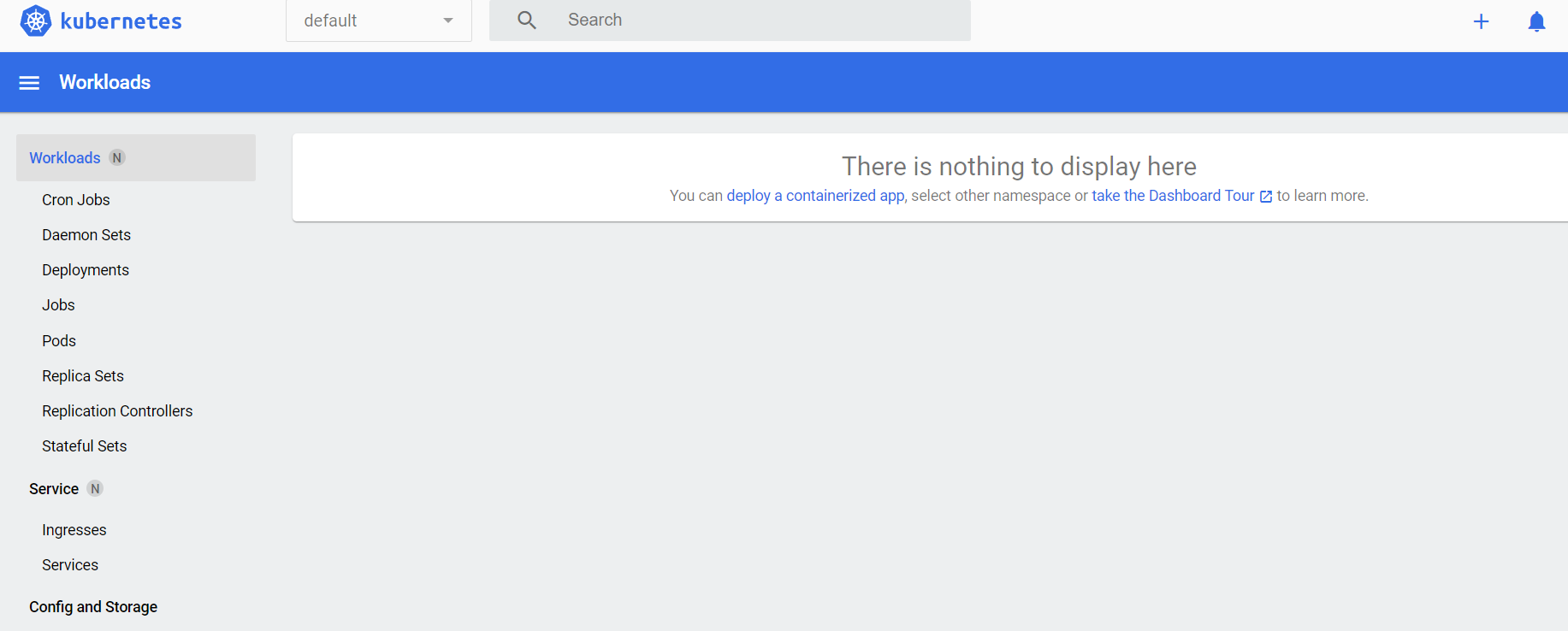

Deploy the Dashboard web UI

```bash
kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml
```

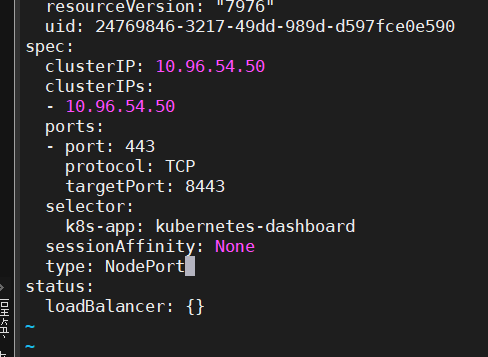

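Set up the access port and the login account

Edit the Dashboard Service and change `type: ClusterIP` to `type: NodePort`:

```bash
kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard
```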
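You can make the same change without an interactive editor, which is handy in scripts. The following sketch uses `kubectl patch` and a JSONPath query, and its function name is hypothetical. Kubernetes picks the port from its NodePort range (30000-32767 by default), so it will differ from 31192 on another cluster.

```bash
#!/usr/bin/env bash
# Switch the Dashboard Service to NodePort without opening an editor,
# then print the node port Kubernetes allocated.
expose_dashboard() {
  local ns=kubernetes-dashboard svc=kubernetes-dashboard
  kubectl -n "$ns" patch svc "$svc" -p '{"spec":{"type":"NodePort"}}' >/dev/null || return 1
  kubectl -n "$ns" get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Usage on the master:
#   port=$(expose_dashboard) && echo "https://<node-ip>:$port/"
```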

Check which port was assigned:

```text
[root@k8s-master ~]# kubectl get svc -A | grep kubernetes-dashboard
kubernetes-dashboard   dashboard-metrics-scraper   ClusterIP   10.96.62.206   <none>   8000/TCP        7m51s
kubernetes-dashboard   kubernetes-dashboard        NodePort    10.96.54.50    <none>   443:31192/TCP   7m51s
```

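Find the port and allow it through the security group (or host firewall). Here 31192 is the port used to reach the K8s console from now on.

Access it at https://<any cluster node IP>:<port>, for example:

https://192.168.23.244:31192/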

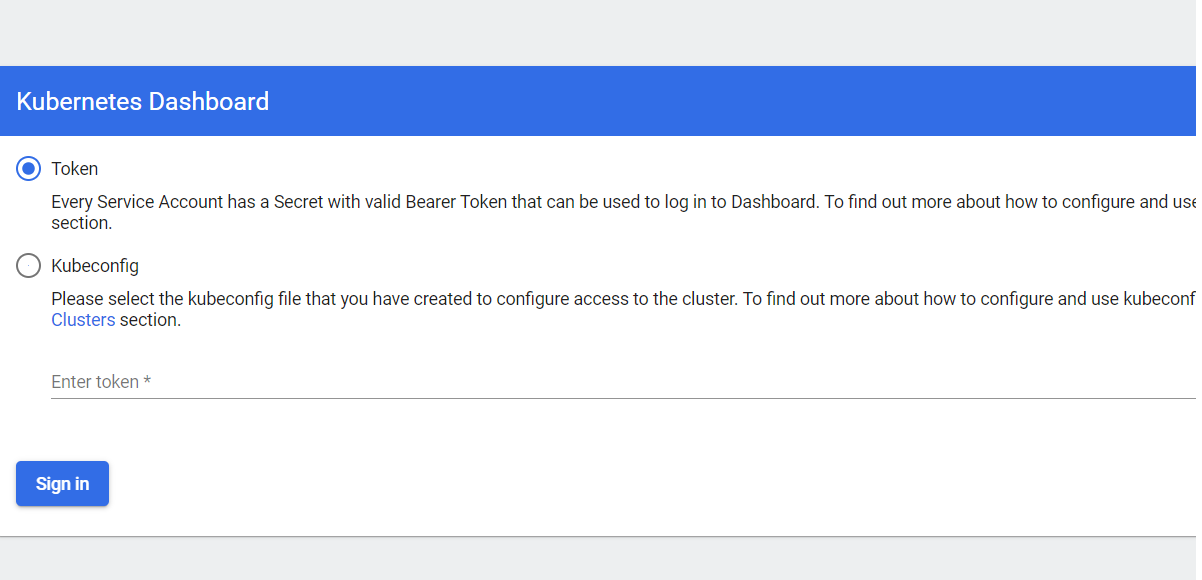

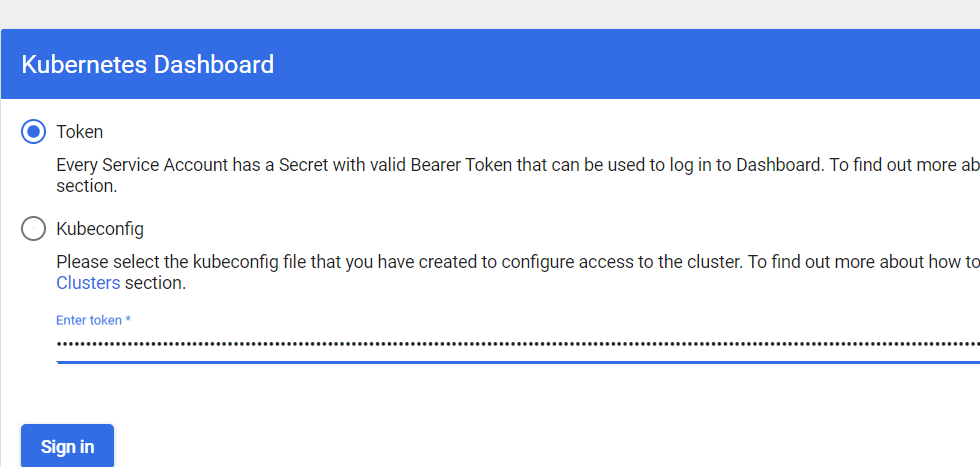

The login page appears and asks for a Token or a Kubeconfig. To get a token, create an admin ServiceAccount bound to the cluster-admin role:

```bash
[root@k8s-master ~]# vi dash-usr.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
```

```text
[root@k8s-master ~]# kubectl apply -f dash-usr.yaml
serviceaccount/admin-user created
clusterrolebinding.rbac.authorization.k8s.io/admin-user created
```

Get the access token that the login page asks for:

```bash
kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"
```
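Before 1.24, Kubernetes automatically created a token Secret for each ServiceAccount. That is why `.secrets[0].name` works on this 1.20 cluster. The token is a JWT. Decoding its middle (payload) segment shows which ServiceAccount it belongs to, which is a quick sanity check before pasting it into the login page. The helper below is a hypothetical sketch and assumes GNU coreutils `base64`.

```bash
#!/usr/bin/env bash
# Decode the payload (second dot-separated segment) of a JWT.
# JWTs use unpadded base64url, so map "_" and "-" back to "/" and "+" and restore "=" padding.
jwt_payload() {
  local p
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  case $(( ${#p} % 4 )) in
    2) p="$p==" ;;
    3) p="$p=" ;;
  esac
  printf '%s' "$p" | base64 -d
}

# Usage (TOKEN holds the output of the command above); look for
# "system:serviceaccount:kubernetes-dashboard:admin-user" in the "sub" field:
#   jwt_payload "$TOKEN"; echo
```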

Paste the token into the login page (Token option) to sign in. Login succeeded.
