1. Background

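OpenCV ships several built-in template-matching approaches, including TemplateMatch, ShapeMatch and CompareHist. In practice they have many shortcomings: differences in size, rotation angle and gray-level distribution cause many problems during matching. To improve the accuracy and applicability of template matching, I searched online and found a different algorithm that matches templates based on edge gradients.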

2. Principle

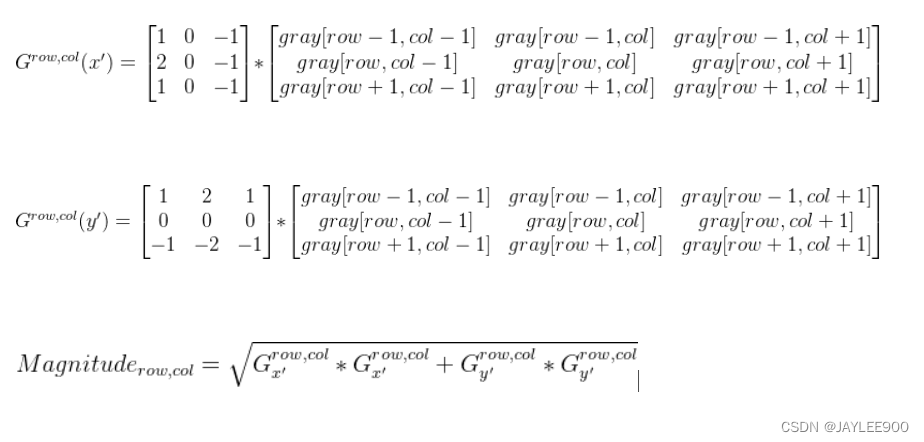

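The method collects the X- and Y-direction gray-level gradients at every edge position of the template and matches them against the X/Y gradients of all pixels in the valid region of the search image (gradient NCC). Positions whose score passes the acceptance threshold are recorded. Enough talk, let's get to it!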
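To see why comparing gradient directions tolerates gray-level changes, here is a minimal C# sketch of the per-point term of a gradient NCC score: the cosine of the angle between a template gradient and a source gradient. `GradientSimilarity` and `Cosine` are hypothetical names for illustration, not code from the post. Scaling the source gradient (a contrast change) leaves the result unchanged, and reversed polarity (dark and light swapped) gives -1.

```csharp
using System;

// Hypothetical helper: the per-point similarity used by gradient NCC.
static class GradientSimilarity
{
    // Cosine of the angle between the template gradient (tx, ty) and the source
    // gradient (sx, sy). Returns 0 when either gradient is zero (no edge information).
    public static double Cosine(double tx, double ty, double sx, double sy)
    {
        double tMag = Math.Sqrt(tx * tx + ty * ty);
        double sMag = Math.Sqrt(sx * sx + sy * sy);
        if (tMag == 0 || sMag == 0) return 0;
        return (tx * sx + ty * sy) / (tMag * sMag);
    }
}
```

For example, `Cosine(3, 4, 6, 8)` and `Cosine(3, 4, 60, 80)` both give 1, while `Cosine(1, 0, -2, 0)` gives -1.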

3. Algorithm

3.1 Data collection

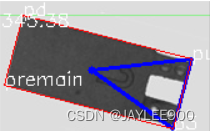

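Use the Sobel operator to compute the first-order gray-level derivatives in X and Y at every pixel of both the template and the search image, and from them the combined gradient magnitude at each point. Combining this with contour extraction or Canny edge detection, plus an image pyramid, makes it considerably faster. At the same time, record a base point Pb among the template's valid (edge) points, and the coordinates of every valid template point relative to that base point, P(col - Pb.x, row - Pb.y).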
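As a self-contained illustration of this step, the C# sketch below computes 3×3 Sobel derivatives on a plain `double[,]` image (no OpenCV), keeps pixels whose gradient magnitude exceeds a threshold as a simple stand-in for the contour/Canny selection, and stores each point relative to the base point Pb, taken here as the top-left corner of the points' bounding box (the same choice the 4.1 code makes). `EdgePoint`, `EdgeCollector` and the `magThreshold` parameter are hypothetical names for this sketch, and border pixels are simply left at 0 rather than extrapolated as OpenCV does.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the post's ImageEdgePtInform: one template edge point.
sealed class EdgePoint
{
    public int RelX, RelY;        // offset from the base point Pb (top-left of the edge points)
    public double Dx, Dy;         // Sobel derivatives at this point
    public double InvMagnitude;   // 1 / sqrt(Dx^2 + Dy^2), precomputed for the NCC term
}

static class EdgeCollector
{
    // 3x3 Sobel derivatives of a grayscale image indexed [row, col].
    // Border pixels are left at 0 (OpenCV would extrapolate the border instead).
    public static (double[,] gx, double[,] gy) Sobel(double[,] img)
    {
        int h = img.GetLength(0), w = img.GetLength(1);
        var gx = new double[h, w];
        var gy = new double[h, w];
        for (int r = 1; r < h - 1; r++)
            for (int c = 1; c < w - 1; c++)
            {
                gx[r, c] = (img[r - 1, c + 1] + 2 * img[r, c + 1] + img[r + 1, c + 1])
                         - (img[r - 1, c - 1] + 2 * img[r, c - 1] + img[r + 1, c - 1]);
                gy[r, c] = (img[r + 1, c - 1] + 2 * img[r + 1, c] + img[r + 1, c + 1])
                         - (img[r - 1, c - 1] + 2 * img[r - 1, c] + img[r - 1, c + 1]);
            }
        return (gx, gy);
    }

    // Keeps pixels whose gradient magnitude exceeds magThreshold (assumed non-negative)
    // and stores them relative to the top-left base point Pb.
    public static List<EdgePoint> Collect(double[,] img, double magThreshold)
    {
        var (gx, gy) = Sobel(img);
        var found = new List<(int r, int c, double mag)>();
        int minX = int.MaxValue, minY = int.MaxValue;
        for (int r = 0; r < img.GetLength(0); r++)
            for (int c = 0; c < img.GetLength(1); c++)
            {
                double mag = Math.Sqrt(gx[r, c] * gx[r, c] + gy[r, c] * gy[r, c]);
                if (mag <= magThreshold) continue;
                found.Add((r, c, mag));
                minX = Math.Min(minX, c);
                minY = Math.Min(minY, r);
            }
        var result = new List<EdgePoint>();
        foreach (var (r, c, mag) in found)
            result.Add(new EdgePoint
            {
                RelX = c - minX, RelY = r - minY,
                Dx = gx[r, c], Dy = gy[r, c],
                InvMagnitude = 1.0 / mag   // mag > magThreshold >= 0, so no division by zero
            });
        return result;
    }
}
```

On a 6×6 image whose right half is 10 and left half 0, this keeps the 8 interior pixels on either side of the step, each with `Dx = 40`, `Dy = 0`.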

3.2 Matching

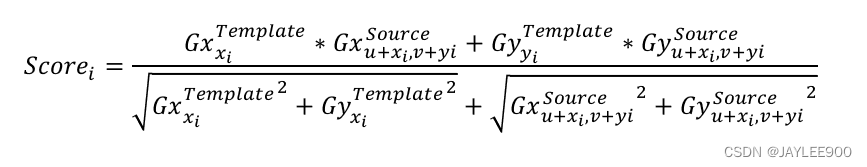

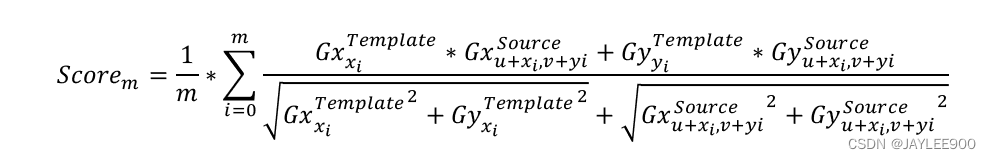

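Using the template data collected above, for every candidate position in the search image each valid template point is placed at its relative offset, its gradient is compared with the search-image gradient at that location, and the resulting match score is recorded. The matching is based on NCC template matching; the formula can be found everywhere, so I'll just insert one here.

Here, template refers to the data collected from the template, and source to the data collected from the image being searched.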
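Written out, the gradient-NCC score that the matching code in 4.2 computes at a candidate position (u, v) = (col, row) is the average cosine between template and source gradients. Here n is the number of template edge points, (x_i, y_i) are their coordinates relative to Pb, G^T are the template gradients and G^S the source gradients:

$$
s(u,v)=\frac{1}{n}\sum_{i=1}^{n}
\frac{G^{T}_{x,i}\,G^{S}_{x}(u+x_i,\,v+y_i)+G^{T}_{y,i}\,G^{S}_{y}(u+x_i,\,v+y_i)}
{\sqrt{\left(G^{T}_{x,i}\right)^{2}+\left(G^{T}_{y,i}\right)^{2}}\;\sqrt{G^{S}_{x}(u+x_i,\,v+y_i)^{2}+G^{S}_{y}(u+x_i,\,v+y_i)^{2}}}
$$

Each term lies in [-1, 1], so s ≤ 1, with s = 1 only when every gradient direction agrees; points where either gradient is zero contribute 0. Because each gradient is divided by its own magnitude, a uniform contrast change in the search image does not change s.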

3.3 Match optimization

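Because the search image may contain a very large number of pixels, efficiency can be improved by giving the matching a minimum score threshold Smin and abandoning a position as soon as its partial score shows that Smin is out of reach. Applying only a plain threshold, however, can discard positions where the first points do not match but later points do. A greediness parameter is therefore applied when evaluating the threshold: a low value only abandons positions that can no longer reach Smin, while a high value abandons them sooner, which is faster but may miss matches whose first points fit poorly.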
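A sketch of this early-termination rule in C#, under stated assumptions. It normalizes the partial sum after j of n points by n, s_j = (1/n)·Σ_{i≤j} t_i with t_i the per-point cosine. Since each remaining point can add at most 1/n, a position with s_j < Smin - 1 + j/n can never reach Smin. The greedy variant stops when s_j < min(Smin - 1 + f·j/n, Smin·j/n) with f = (1 - g·Smin)/(1 - g) for greediness g in [0, 1): g = 0 gives the safe bound, and g → 1 gives the strict bound Smin·j/n. Note that the 4.2 code divides the partial sum by the number of points visited so far rather than by n. `GreedyScore`, `Evaluate` and the `term` callback are hypothetical names.

```csharp
using System;

// Hypothetical helper: gradient-NCC score at one candidate position with early termination.
static class GreedyScore
{
    // n:          number of template edge points
    // term(i):    per-point cosine t_i in [-1, 1] for template point i at this position
    // minScore:   Smin; greediness: g in [0, 1] (g >= 1 is treated as the strict limit)
    // visited:    how many points were evaluated before returning
    // Returns the final score s_n, or double.NaN if the position was abandoned.
    public static double Evaluate(int n, Func<int, double> term, double minScore,
                                  double greediness, out int visited)
    {
        double normMin = minScore / n;
        double normGreedy = greediness >= 1
            ? double.PositiveInfinity                       // limit g -> 1: strict bound only
            : (1 - greediness * minScore) / (1 - greediness) / n;
        double sum = 0;
        for (int j = 1; j <= n; j++)
        {
            sum += term(j - 1);
            double sj = sum / n;                            // partial score, normalized by n
            double bound = Math.Min(minScore - 1 + normGreedy * j, normMin * j);
            if (sj < bound)
            {
                visited = j;                                // Smin judged unreachable: stop here
                return double.NaN;
            }
        }
        visited = n;
        return sum / n;
    }
}
```

With ten points, Smin = 0.8 and a first point scoring 0 followed by nine perfect points, the final score is 0.9. With g = 0 all ten points are evaluated and 0.9 is returned, whereas g = 0.9 abandons the position after the first point. This is the speed-versus-recall trade-off the greediness parameter controls.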

4. Code

4.1 Collecting valid template points

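The code below extracts the points of the image's exact contours. Finding valid points through the contours is much more efficient than computing gradients for every template pixel and then filtering them, and in comparison the gradients at these contour points are also relatively pronounced.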

```csharp
// Requires: using System; using System.Collections.Generic; using System.Linq; using OpenCvSharp;
// ImageEdgePtInform (must be a class so RelativePos can be reassigned in the foreach below),
// ContourBaryCenterOrientationGet, ImageBasicLineDrawing, ImageShow and ENUMS are the author's
// own helpers and are not shown in this post. The margin parameter is currently unused.
static public List<ImageEdgePtInform> ImageContourEdgeInfomGet(Mat img, int margin, ref Size validSize, bool show = false)
{
    Mat _uImg = img.Clone();
    if (_uImg.Type() != MatType.CV_8UC1)
    {
        Cv2.CvtColor(_uImg, _uImg, ColorConversionCodes.BGR2GRAY);
    }

    Point relaOrgPt = new Point(int.MaxValue, int.MaxValue);
    List<ImageEdgePtInform> resultEdgeInforms = new List<ImageEdgePtInform>();

    // Preprocessing: blur, then inverse binary threshold (assumes a dark part on a light background)
    Mat _blur = _uImg.GaussianBlur(new Size(3, 3), 0);
    Mat _threImg = _blur.Threshold(120, 255, ThresholdTypes.BinaryInv);

    // Find all contours, drop tiny ones and sort by area so that cnts[0] is the largest contour
    _threImg.FindContours(out Point[][] cnts, out HierarchyIndex[] hids, RetrievalModes.List, ContourApproximationModes.ApproxNone);
    cnts = cnts.Where(cnt => Cv2.ContourArea(cnt) > 3 && Cv2.ArcLength(cnt, false) > 3 && cnt.Length > 3)
               .OrderByDescending(cnt => Cv2.ContourArea(cnt))
               .ToArray();
    if (cnts.Length == 0) return resultEdgeInforms;

    // X- and Y-direction gradients of the template image
    Mat xDerivative = _uImg.Sobel(MatType.CV_64FC1, 1, 0, 3);
    Mat yDerivative = _uImg.Sobel(MatType.CV_64FC1, 0, 1, 3);

    // Orientation of the largest contour; it is the same for every point, so compute it once
    double barycentOrient = ContourBaryCenterOrientationGet(cnts[0]);

    unsafe
    {
        foreach (var cnt in cnts)
        {
            foreach (var pt in cnt)
            {
                int row = pt.Y, col = pt.X;

                // Base point: top-left corner of the contours' bounding box
                relaOrgPt.X = Math.Min(relaOrgPt.X, col);
                relaOrgPt.Y = Math.Min(relaOrgPt.Y, row);
                // Bottom-right corner of the bounding box (turned into a size below)
                validSize.Width = Math.Max(validSize.Width, col);
                validSize.Height = Math.Max(validSize.Height, row);

                // X/Y gradient at this edge point
                double dx = ((double*)xDerivative.Ptr(row))[col];
                double dy = ((double*)yDerivative.Ptr(row))[col];
                double mag = Math.Sqrt(dx * dx + dy * dy);

                ImageEdgePtInform ptInform = new ImageEdgePtInform();
                ptInform.DerivativeX = dx;
                ptInform.DerivativeY = dy;
                // Store 1/|G| so the matcher can multiply; 0 marks a point with no gradient
                ptInform.Magnitude = mag > 0 ? 1.0 / mag : 0;
                ptInform.RelativePos = pt;
                ptInform.BarycentOrient = barycentOrient;
                resultEdgeInforms.Add(ptInform);
            }
        }
    }

    // Convert absolute positions to offsets from the base point Pb
    foreach (var inf in resultEdgeInforms)
    {
        inf.RelativePos = new Point(inf.RelativePos.X - relaOrgPt.X, inf.RelativePos.Y - relaOrgPt.Y);
    }
    // +1 because both the minimum and maximum coordinates are inclusive
    validSize = new Size(validSize.Width - relaOrgPt.X + 1, validSize.Height - relaOrgPt.Y + 1);

    if (show)
    {
        // Barycenter of the largest contour, used for the cross-hair in the preview
        Moments m = Cv2.Moments(cnts[0]);
        Point bcenter = new Point((int)(m.M10 / m.M00), (int)(m.M01 / m.M00));
        Mat _sImg = img.Clone();
        _sImg.DrawContours(cnts, -1, Scalar.Green, 1);
        _sImg.Circle(relaOrgPt, 2, Scalar.Red);
        _sImg.Rectangle(new Rect(relaOrgPt, validSize), Scalar.Blue, 1);
        _sImg = ImageBasicLineDrawing(_sImg, bcenter, orientation: ENUMS.IMAGE_PERMUTATION_TYPE.HORIZONTAL);
        _sImg = ImageBasicLineDrawing(_sImg, bcenter, orientation: ENUMS.IMAGE_PERMUTATION_TYPE.VERTICAL);
        _sImg.Circle(bcenter, 5, Scalar.Blue, -1);
        ImageShow("asdsad", _sImg);
    }
    return resultEdgeInforms;
}
```

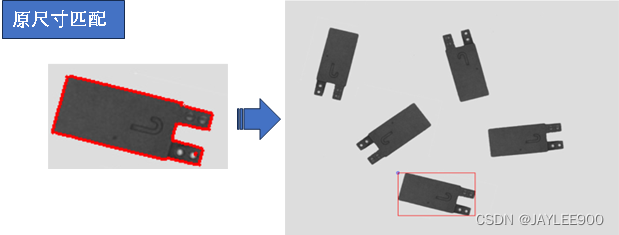
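The post does not show `ContourBaryCenterOrientationGet`. One common way to get a contour's orientation about its barycenter is from second-order central moments, θ = ½·atan2(2μ11, μ20 - μ02). The C# sketch below applies this to the contour points treated as a point set (OpenCV's `Cv2.Moments` on a contour instead integrates over the enclosed area, so the values can differ). `ContourOrientation` is a hypothetical name, and this is an assumption about what such a helper might do, not the author's implementation.

```csharp
using System;

// Hypothetical helper: principal-axis orientation of a set of contour points.
static class ContourOrientation
{
    // Returns the orientation in degrees, in (-90, 90], measured from the +x axis toward +y.
    public static double OrientationDegrees((int X, int Y)[] pts)
    {
        if (pts.Length == 0) throw new ArgumentException("need at least one point", nameof(pts));

        // Barycenter of the points
        double cx = 0, cy = 0;
        foreach (var p in pts) { cx += p.X; cy += p.Y; }
        cx /= pts.Length; cy /= pts.Length;

        // Second-order central moments
        double mu20 = 0, mu02 = 0, mu11 = 0;
        foreach (var p in pts)
        {
            double dx = p.X - cx, dy = p.Y - cy;
            mu20 += dx * dx; mu02 += dy * dy; mu11 += dx * dy;
        }
        return 0.5 * Math.Atan2(2 * mu11, mu20 - mu02) * 180.0 / Math.PI;
    }
}
```

Points along y = x give 45° and a vertical line gives 90°. Because image y points down, a positive angle corresponds to a clockwise rotation on screen. The principal axis is also only defined up to 180°: a part rotated by 180° has the same θ, so aligning by this angle alone can leave it upside down.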

4.2 Matching template data against the search image

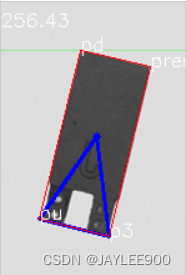

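Because the search image covers a large area, matching is relatively slow, but the results are still good. As the result shows, however, it only matches samples whose pose is exactly the same as the template's; rotated copies are not found.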

```csharp
// Requires: using System; using System.Collections.Generic; using System.Linq; using OpenCvSharp;
// Scores every placement of the template edge points on img and returns the best score;
// conformPoints receives the position of the template's base point for that score.
static public double ImageEdgeMatch(Mat img, List<ImageEdgePtInform> queryEdgeInforms,
    double minScore, double greediness, Size validSize, out Point conformPoints)
{
    conformPoints = new Point();
    int queryCount = queryEdgeInforms.Count;
    if (queryCount == 0) return 0;

    // Preprocess the search image: grayscale + 3x3 Gaussian blur
    Mat _uImg = img.Clone();
    if (_uImg.Type() == MatType.CV_8UC3)
    {
        Cv2.CvtColor(_uImg, _uImg, ColorConversionCodes.BGR2GRAY);
    }
    Cv2.GaussianBlur(_uImg, _uImg, new Size(3, 3), 0);
    int Width = _uImg.Width;
    int Height = _uImg.Height;

    // Template extent taken from the relative offsets. Only base-point positions that keep every
    // edge point inside the image are scanned, so no per-point bounds check or later screening
    // against validSize is needed (validSize stays in the signature for existing callers).
    int maxRelX = queryEdgeInforms.Max(p => p.RelativePos.X);
    int maxRelY = queryEdgeInforms.Max(p => p.RelativePos.Y);
    double resultScore = 0;

    unsafe
    {
        // X/Y gradients of the search image and their magnitude
        Mat txMagnitude = _uImg.Sobel(MatType.CV_64FC1, 1, 0, 3);
        Mat tyMagnitude = _uImg.Sobel(MatType.CV_64FC1, 0, 1, 3);
        Mat torgMagnitude = new Mat();
        Cv2.Magnitude(txMagnitude, tyMagnitude, torgMagnitude);

        // Early-termination thresholds; greediness is expected in [0, 1)
        // (at exactly 1 the division gives +Infinity and only the strict bound applies)
        double normMinScore = minScore / queryCount;
        double normGreediness = ((1 - greediness * minScore) / (1 - greediness)) / queryCount;

        // Slide the template's base point over every valid position
        for (int row = 0; row + maxRelY < Height; row++)
        {
            for (int col = 0; col + maxRelX < Width; col++)
            {
                double sum = 0;
                double partialScore = 0;   // reset for every candidate position
                bool aborted = false;
                for (int cn = 0; cn < queryCount; cn++)
                {
                    // Search-image position of this template point
                    int relaX = queryEdgeInforms[cn].RelativePos.X + col;
                    int relaY = queryEdgeInforms[cn].RelativePos.Y + row;

                    double txD = ((double*)txMagnitude.Ptr(relaY))[relaX];
                    double tyD = ((double*)tyMagnitude.Ptr(relaY))[relaX];
                    double tMag = ((double*)torgMagnitude.Ptr(relaY))[relaX];
                    double qxD = queryEdgeInforms[cn].DerivativeX;
                    double qyD = queryEdgeInforms[cn].DerivativeY;
                    double qMag = queryEdgeInforms[cn].Magnitude;   // stored as 1 / |G_template|

                    // Cosine between the two gradients; points with a zero gradient add nothing
                    if ((txD != 0 || tyD != 0) && (qxD != 0 || qyD != 0))
                    {
                        sum += (txD * qxD + tyD * qyD) * qMag / tMag;
                    }

                    int corSum = cn + 1;
                    partialScore = sum / corSum;
                    // Both bounds grow with the number of points visited so far (corSum)
                    double curJudge = Math.Min((minScore - 1) + normGreediness * corSum, normMinScore * corSum);
                    // Stop scoring this position once minScore is judged unreachable
                    if (partialScore < curJudge)
                    {
                        aborted = true;
                        break;
                    }
                }

                // Only fully scored positions may become the best match
                if (!aborted && partialScore > resultScore)
                {
                    resultScore = partialScore;
                    conformPoints = new Point(col, row);
                }
            }
        }
    }
    return resultScore;
}
```

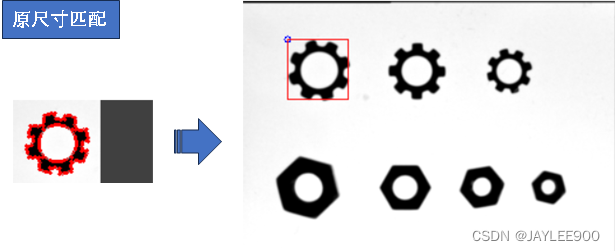
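The scan above can be pictured with the self-contained C# sketch below: it slides the template's base point only over positions where every edge point stays inside the search image, scores each position with the average gradient cosine, and keeps the best one. It works on precomputed gradient arrays (`double[,]`, indexed [row, col]) instead of `Mat` and omits early termination to stay short; `EdgeSearch`, `TemplatePoint` and `FindBest` are hypothetical names. The cost is roughly (number of positions) × (number of edge points), which is why the full-image scan is slow.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical template edge point: offset from the base point plus its gradient.
sealed class TemplatePoint
{
    public int RelX, RelY;
    public double Dx, Dy;
    public TemplatePoint(int relX, int relY, double dx, double dy)
    { RelX = relX; RelY = relY; Dx = dx; Dy = dy; }
}

static class EdgeSearch
{
    // Exhaustive gradient-NCC search. gx/gy are the search image's X/Y derivatives,
    // indexed [row, col]. Returns the best score and its base-point position (col, row).
    public static (double score, int col, int row) FindBest(
        double[,] gx, double[,] gy, IReadOnlyList<TemplatePoint> tpl)
    {
        if (tpl.Count == 0) throw new ArgumentException("empty template", nameof(tpl));
        int h = gx.GetLength(0), w = gx.GetLength(1);
        int maxRelX = tpl.Max(p => p.RelX), maxRelY = tpl.Max(p => p.RelY);
        var best = (score: double.NegativeInfinity, col: -1, row: -1);

        // Only positions where the whole template fits inside the image
        for (int row = 0; row + maxRelY < h; row++)
            for (int col = 0; col + maxRelX < w; col++)
            {
                double sum = 0;
                foreach (var p in tpl)
                {
                    double sx = gx[row + p.RelY, col + p.RelX];
                    double sy = gy[row + p.RelY, col + p.RelX];
                    double tMag = Math.Sqrt(p.Dx * p.Dx + p.Dy * p.Dy);
                    double sMag = Math.Sqrt(sx * sx + sy * sy);
                    if (tMag > 0 && sMag > 0)                 // flat points contribute 0
                        sum += (p.Dx * sx + p.Dy * sy) / (tMag * sMag);
                }
                double score = sum / tpl.Count;
                if (score > best.score) best = (score, col, row);
            }
        return best;
    }
}
```

Restricting the loop bounds this way also makes a separate check that the match lies fully inside the image unnecessary.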

4.3 Process optimization

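Section 4.2 shows that edge-gradient matching works reasonably well, but two shortcomings remain: it only finds targets in the same pose as the template, and the search is slow. To address both, first segment the search image into parts, rotate each part to the template's angle, and only then collect its data and match it against the template data. This improves the results noticeably.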

```csharp
{ // Contour / edge-gradient matching of every segmented part against every template part
    // detectImg (search image) and patternImg (template image) come from the enclosing code.
    // ImageGrayDetect, BbProcesser, GeoProcesser/GeometricProcessor, ImageRotate, ImagesMerge and
    // ImageShow are the author's own helpers and are not shown in this post.
    Mat _sImg = detectImg.Clone();
    Mat queryGray = ImageGrayDetect(patternImg.Clone());
    Mat trainGray = ImageGrayDetect(detectImg.Clone());
    Mat queBlur = queryGray.GaussianBlur(new Size(3, 3), 0);
    Mat trainBlur = trainGray.GaussianBlur(new Size(3, 3), 0);

    List<Size> templateValidSize = new List<Size>();
    List<List<ImageEdgePtInform>> templatesEdgeInforms = new List<List<ImageEdgePtInform>>();
    List<Rect> matchedConformRegions = new List<Rect>();

    // Segment both images into connected regions (threshold 120) and take their bounding boxes
    Rect[] querySubBoundRects = BbProcesser.ImageConnectedFieldSegment(queBlur, 120, true, show: false).ConnectedFieldDatas.FieldRects.ToArray();
    Rect[] trainSubBoundRects = BbProcesser.ImageConnectedFieldSegment(trainBlur, 120, true, show: false).ConnectedFieldDatas.FieldRects.ToArray();
    Mat[] querySubImgs = new Mat[querySubBoundRects.Length];
    Mat[] trainSubImgs = new Mat[trainSubBoundRects.Length];

    // Grow a box by `margin` on every side if the grown box still lies inside the image
    int margin = 5;
    Rect Expand(Rect r, Size bounds) =>
        (r.X - margin >= 0 && r.Y - margin >= 0
         && r.X + r.Width + margin <= bounds.Width
         && r.Y + r.Height + margin <= bounds.Height)
            ? new Rect(r.X - margin, r.Y - margin, r.Width + 2 * margin, r.Height + 2 * margin)
            : r;

    // Crop each (expanded) region; Clone() copies only the ROI, not the whole image
    for (int i = 0; i < querySubImgs.Length; i++)
    {
        querySubImgs[i] = new Mat(patternImg, Expand(querySubBoundRects[i], queBlur.Size())).Clone();
    }
    for (int i = 0; i < trainSubImgs.Length; i++)
    {
        trainSubImgs[i] = new Mat(detectImg, Expand(trainSubBoundRects[i], trainBlur.Size())).Clone();
    }

    // Collect edge-point data for every template part
    for (int i = 0; i < querySubImgs.Length; i++)
    {
        Size validSize = new Size();
        List<ImageEdgePtInform> subEdgeInforms = GeoProcesser.ImageContourEdgeInfomGet(querySubImgs[i].Clone(), 3, ref validSize, show: true);
        templateValidSize.Add(validSize);
        templatesEdgeInforms.Add(subEdgeInforms);
    }

    for (int i = 0; i < trainSubImgs.Length; i++)
    {
        for (int j = 0; j < templateValidSize.Count; j++)
        {
            if (templatesEdgeInforms[j].Count == 0) continue;

            // Rotate the search part when its orientation differs from the template's.
            // A separate binary image is used to find the contour, so _tImg stays a normal image.
            Mat _tImg = trainSubImgs[i].Clone();
            Mat _bin = ImageGrayDetect(trainSubImgs[i].Clone());
            Cv2.GaussianBlur(_bin, _bin, new Size(3, 3), 0);
            _bin = _bin.Threshold(120, 255, ThresholdTypes.BinaryInv);
            Cv2.FindContours(_bin, out Point[][] tCnts, out HierarchyIndex[] hidxs, RetrievalModes.External, ContourApproximationModes.ApproxSimple);
            if (tCnts.Length > 0)
            {
                Point[] largest = tCnts.OrderByDescending(cnt => Cv2.ContourArea(cnt)).First();
                double tOrient = GeometricProcessor.ContourBaryCenterOrientationGet(largest);
                double rOrient = templatesEdgeInforms[j][0].BarycentOrient - tOrient;
                if (Math.Abs(rOrient) > 1e-6)   // compare with a tolerance; exact equality on doubles is unreliable
                {
                    _tImg = ImageRotate(trainSubImgs[i].Clone(), new Point2f(_tImg.Width / 2f, _tImg.Height / 2f), rOrient, show: false);
                }
            }

            // Score the original and the rotated part (minScore 0.9, greediness 1)
            double score1 = GeometricProcessor.ImageEdgeMatch(trainSubImgs[i].Clone(), templatesEdgeInforms[j], 0.9, 1, templateValidSize[j], out Point outPt);
            double score2 = GeometricProcessor.ImageEdgeMatch(_tImg, templatesEdgeInforms[j], 0.9, 1, templateValidSize[j], out outPt);
            if (Math.Max(score1, score2) > 0.9)
            {
                matchedConformRegions.Add(trainSubBoundRects[i]);
                _sImg.Rectangle(trainSubBoundRects[i], Scalar.Red, 1);
            }
        }
    }

    // Show the template parts next to the search image, with matches boxed in red
    if (matchedConformRegions.Count != 0)
    {
        Mat _attachedImg = ImagesMerge(querySubImgs, new Mat());
        _sImg = ImagesMerge(new Mat[] { _attachedImg }, _sImg);
        ImageShow("GradientDetectResult", _sImg);
    }
}
```
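Rotating the search part, as the code above does, resamples its pixels for every template. An alternative, shown here only as a hedged sketch and not as the author's method, is to rotate the template's edge points instead: each relative offset and each gradient vector is rotated by the same angle, which yields the template data for that angle without touching the image. `RotatedTemplate.Rotate` is a hypothetical helper; rotated positions are rounded to whole pixels and can become negative, so they are re-based on the new top-left corner.

```csharp
using System;
using System.Linq;

// Hypothetical helper: template edge points (offset + gradient) rotated by a given angle.
static class RotatedTemplate
{
    // Rotates the points by angleDeg about the base point in image coordinates
    // (y down, so positive angles turn clockwise on screen), then shifts them so
    // that the smallest X and Y are 0 again.
    public static (int X, int Y, double Dx, double Dy)[] Rotate(
        (int X, int Y, double Dx, double Dy)[] pts, double angleDeg)
    {
        double a = angleDeg * Math.PI / 180.0, c = Math.Cos(a), s = Math.Sin(a);
        var rotated = pts.Select(p => (
            X: (int)Math.Round(c * p.X - s * p.Y),
            Y: (int)Math.Round(s * p.X + c * p.Y),
            Dx: c * p.Dx - s * p.Dy,          // gradients rotate like direction vectors
            Dy: s * p.Dx + c * p.Dy)).ToArray();
        int minX = rotated.Min(p => p.X), minY = rotated.Min(p => p.Y);
        return rotated.Select(p => (p.X - minX, p.Y - minY, p.Dx, p.Dy)).ToArray();
    }
}
```

Building one rotated copy per candidate angle up front moves the rotation cost out of the per-part loop; the trade-off is that rounding the positions introduces up to half a pixel of error per point.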

5. Summary

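Quite a few problems came up during matching. For example, how should a template and a target of different sizes be handled? How can a target be matched when a large part of it is occluded? And so on... One step at a time.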

6. References

The algorithm and images are based on the following:

