First, make sure the nodes being added hold no data. Otherwise `add-node` fails with an error.
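The add-node step refuses nodes that are not empty. Judging from redis-cli's behavior, "empty" appears to mean two things: database 0 holds no keys, and the node knows no other cluster nodes. The Python sketch below re-creates that check on the raw text of an `INFO` reply and a `CLUSTER INFO` reply. `parse_info` and `node_is_empty` are hypothetical helpers, and the sample replies are written by hand rather than captured from a server.

```python
def parse_info(text):
    """Parse the 'field:value' lines of an INFO or CLUSTER INFO reply."""
    fields = {}
    # splitlines() also handles the \r\n line endings Redis uses.
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and '# Section' headers.
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, _, value = line.partition(":")
        fields[key] = value
    return fields


def node_is_empty(info_text, cluster_info_text):
    """Hypothetical helper mimicking the pre-add-node emptiness check.

    Empty means: INFO shows no 'db0' keyspace line (database 0 has no keys)
    and CLUSTER INFO reports cluster_known_nodes:1 (the node knows only itself).
    """
    if "db0" in parse_info(info_text):
        return False
    known = int(parse_info(cluster_info_text)["cluster_known_nodes"])
    return known == 1


# Hand-written sample replies, for illustration only.
print(node_is_empty("# Keyspace\r\n", "cluster_known_nodes:1\r\n"))  # True
print(node_is_empty("# Keyspace\r\ndb0:keys=3,expires=0,avg_ttl=0\r\n",
                    "cluster_known_nodes:1\r\n"))                  # False
```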

Current cluster state:

```
127.0.0.1:8000> cluster slots
1) 1) (integer) 0
   2) (integer) 5461
   3) 1) "127.0.0.1"
      2) (integer) 8000
      3) "25d25af226ac55e9c03723288c20f53520420767"
   4) 1) "127.0.0.1"
      2) (integer) 8100
      3) "e4ec27a4f61c66131faebab76d0c33c38fb5695c"
2) 1) (integer) 5462
   2) (integer) 10922
   3) 1) "127.0.0.1"
      2) (integer) 8001
      3) "68507c82e45915e6a257afbfc2626c2424684879"
   4) 1) "127.0.0.1"
      2) (integer) 8101
      3) "30bb3d720a0c7dad6aed79f17ab33313246a0629"
3) 1) (integer) 10923
   2) (integer) 16383
   3) 1) "127.0.0.1"
      2) (integer) 8002
      3) "3db06c21c6dea8701fadbebfebf1aa92e5b13037"
   4) 1) "127.0.0.1"
      2) (integer) 8102
      3) "e4df1b413eb5731f4de442e3e38a14612dc65700"
```

The cluster has three masters (8000, 8001, 8002), each with one replica (8100, 8101, 8102). We now want to add two new nodes: 127.0.0.1:8003 and 127.0.0.1:8103.
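Each entry of the `cluster slots` reply above is a nested array containing the following, in order: the start slot, the end slot (both inclusive), the master as `[ip, port, node-id]`, and then one such array per replica. The sketch below assumes the reply has already been decoded into plain Python lists; the `reply` literal is transcribed by hand from the output above. It totals the slots per master, and `slots_per_master` is our own name. The result confirms the 3-master layout: 5462 + 5461 + 5461 = 16384.

```python
def slots_per_master(reply):
    """Sum the slots owned by each master in a decoded CLUSTER SLOTS reply.

    Each entry looks like [start, end, [ip, port, id], [ip, port, id], ...];
    the first node array is the master, the rest are replicas.
    """
    counts = {}
    for start, end, master, *_replicas in reply:
        addr = f"{master[0]}:{master[1]}"
        # Ranges are inclusive on both ends, hence the +1.
        counts[addr] = counts.get(addr, 0) + (end - start + 1)
    return counts


reply = [
    [0, 5461, ["127.0.0.1", 8000, "25d25af226ac55e9c03723288c20f53520420767"],
              ["127.0.0.1", 8100, "e4ec27a4f61c66131faebab76d0c33c38fb5695c"]],
    [5462, 10922, ["127.0.0.1", 8001, "68507c82e45915e6a257afbfc2626c2424684879"],
                  ["127.0.0.1", 8101, "30bb3d720a0c7dad6aed79f17ab33313246a0629"]],
    [10923, 16383, ["127.0.0.1", 8002, "3db06c21c6dea8701fadbebfebf1aa92e5b13037"],
                   ["127.0.0.1", 8102, "e4df1b413eb5731f4de442e3e38a14612dc65700"]],
]
print(slots_per_master(reply))
# {'127.0.0.1:8000': 5462, '127.0.0.1:8001': 5461, '127.0.0.1:8002': 5461}
```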

From Redis 5.0 onward, a node is added with:

```
redis-cli --cluster add-node {new node IP:PORT} {IP:PORT of any node already in the cluster}
```

```
[root@XXX ~]# redis-cli --cluster add-node 127.0.0.1:8003 127.0.0.1:8002
>>> Adding node 127.0.0.1:8003 to cluster 127.0.0.1:8002
>>> Performing Cluster Check (using node 127.0.0.1:8002)
M: 3db06c21c6dea8701fadbebfebf1aa92e5b13037 127.0.0.1:8002
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: 30bb3d720a0c7dad6aed79f17ab33313246a0629 127.0.0.1:8101
   slots: (0 slots) slave
   replicates 68507c82e45915e6a257afbfc2626c2424684879
M: 68507c82e45915e6a257afbfc2626c2424684879 127.0.0.1:8001
   slots:[5462-10922] (5461 slots) master
   1 additional replica(s)
S: e4df1b413eb5731f4de442e3e38a14612dc65700 127.0.0.1:8102
   slots: (0 slots) slave
   replicates 3db06c21c6dea8701fadbebfebf1aa92e5b13037
S: e4ec27a4f61c66131faebab76d0c33c38fb5695c 127.0.0.1:8100
   slots: (0 slots) slave
   replicates 25d25af226ac55e9c03723288c20f53520420767
M: 25d25af226ac55e9c03723288c20f53520420767 127.0.0.1:8000
   slots:[0-5461] (5462 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Send CLUSTER MEET to node 127.0.0.1:8003 to make it join the cluster.
[OK] New node added correctly.
```

```
[root@XXX ~]# redis-cli --cluster add-node 127.0.0.1:8103 127.0.0.1:8002
>>> Adding node 127.0.0.1:8103 to cluster 127.0.0.1:8002
>>> Performing Cluster Check (using node 127.0.0.1:8002)
M: 3db06c21c6dea8701fadbebfebf1aa92e5b13037 127.0.0.1:8002
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: 30bb3d720a0c7dad6aed79f17ab33313246a0629 127.0.0.1:8101
   slots: (0 slots) slave
   replicates 68507c82e45915e6a257afbfc2626c2424684879
M: 68507c82e45915e6a257afbfc2626c2424684879 127.0.0.1:8001
   slots:[5462-10922] (5461 slots) master
   1 additional replica(s)
M: e9aac3ea026f8b5b14267861021a282103671a9c 127.0.0.1:8003
   slots: (0 slots) master
S: e4df1b413eb5731f4de442e3e38a14612dc65700 127.0.0.1:8102
   slots: (0 slots) slave
   replicates 3db06c21c6dea8701fadbebfebf1aa92e5b13037
S: e4ec27a4f61c66131faebab76d0c33c38fb5695c 127.0.0.1:8100
   slots: (0 slots) slave
   replicates 25d25af226ac55e9c03723288c20f53520420767
M: 25d25af226ac55e9c03723288c20f53520420767 127.0.0.1:8000
   slots:[0-5461] (5462 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Send CLUSTER MEET to node 127.0.0.1:8103 to make it join the cluster.
[OK] New node added correctly.
```

Once this completes, every node in the cluster has the new node in its "address book", that is, in its view of the cluster membership.
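Adding both nodes means running the same command twice, so the step is easy to script. The following is a minimal sketch that assumes `redis-cli` 5.0 or later is on the `PATH`. `add_node_command` and `add_nodes` are hypothetical helpers. With the default `dry_run=True`, the script only prints the commands it would run.

```python
import shlex
import subprocess


def add_node_command(new_node, existing_node):
    """Build: redis-cli --cluster add-node <new IP:PORT> <existing IP:PORT>."""
    return ["redis-cli", "--cluster", "add-node", new_node, existing_node]


def add_nodes(new_nodes, existing_node, dry_run=True):
    """Add each new node in turn; with dry_run=True, only print the commands."""
    for node in new_nodes:
        cmd = add_node_command(node, existing_node)
        print(shlex.join(cmd))
        if not dry_run:
            # Raises CalledProcessError if redis-cli exits with a non-zero status.
            subprocess.run(cmd, check=True)


add_nodes(["127.0.0.1:8003", "127.0.0.1:8103"], "127.0.0.1:8002")
# redis-cli --cluster add-node 127.0.0.1:8003 127.0.0.1:8002
# redis-cli --cluster add-node 127.0.0.1:8103 127.0.0.1:8002
```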

By default, a newly added node joins as a master and owns no hash slots:

```
127.0.0.1:8000> cluster nodes
e9aac3ea026f8b5b14267861021a282103671a9c 127.0.0.1:8003@18003 master - 0 1626230308792 0 connected
e4ec27a4f61c66131faebab76d0c33c38fb5695c 127.0.0.1:8100@18100 slave 25d25af226ac55e9c03723288c20f53520420767 0 1626230307788 16 connected
68507c82e45915e6a257afbfc2626c2424684879 127.0.0.1:8001@18001 master - 0 1626230306000 14 connected 5462-10922
89609f9d318bbca243c622195dcffb0c4c739c21 127.0.0.1:8103@18103 master - 0 1626230309795 17 connected
25d25af226ac55e9c03723288c20f53520420767 127.0.0.1:8000@18000 myself,master - 0 1626230306000 16 connected 0-5461
e4df1b413eb5731f4de442e3e38a14612dc65700 127.0.0.1:8102@18102 slave 3db06c21c6dea8701fadbebfebf1aa92e5b13037 0 1626230306785 12 connected
30bb3d720a0c7dad6aed79f17ab33313246a0629 127.0.0.1:8101@18101 slave 68507c82e45915e6a257afbfc2626c2424684879 0 1626230307000 14 connected
3db06c21c6dea8701fadbebfebf1aa92e5b13037 127.0.0.1:8002@18002 master - 0 1626230307000 12 connected 10923-16383
```

Next, assign slots to the new master.

The command is:

```
redis-cli --cluster reshard 127.0.0.1:8000
```

Here, 127.0.0.1:8000 can be any node in the cluster.
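In the transcript below, reshard's prompts are answered interactively. For scripting the same operation, redis-cli 5.0 and later document the `--cluster-from`, `--cluster-to`, `--cluster-slots` and `--cluster-yes` options for `reshard`. We assume that `--cluster-from all` behaves the same as typing `all` at the prompt, so check `redis-cli --cluster help` on your version before relying on it. The sketch only builds the argument list. `reshard_command` is a hypothetical helper.

```python
import shlex


def reshard_command(entry_node, to_id, num_slots, from_ids="all", yes=True):
    """Build a non-interactive 'redis-cli --cluster reshard' invocation.

    from_ids: "all" or a comma-separated string of source node IDs
    (assumed to match what the interactive prompt accepts).
    """
    cmd = [
        "redis-cli", "--cluster", "reshard", entry_node,
        "--cluster-from", from_ids,
        "--cluster-to", to_id,
        "--cluster-slots", str(num_slots),
    ]
    if yes:
        # Skip the "proceed with the proposed reshard plan (yes/no)?" prompt.
        cmd.append("--cluster-yes")
    return cmd


print(shlex.join(reshard_command(
    "127.0.0.1:8000", "e9aac3ea026f8b5b14267861021a282103671a9c", 4096)))
```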

```
[root@xxx ~]# redis-cli --cluster reshard 127.0.0.1:8000
>>> Performing Cluster Check (using node 127.0.0.1:8000)
M: 25d25af226ac55e9c03723288c20f53520420767 127.0.0.1:8000
   slots:[0-5461] (5462 slots) master  # 0-5461
   1 additional replica(s)
M: e9aac3ea026f8b5b14267861021a282103671a9c 127.0.0.1:8003
   slots: (0 slots) master  # new node, no slots yet
S: e4ec27a4f61c66131faebab76d0c33c38fb5695c 127.0.0.1:8100
   slots: (0 slots) slave
   replicates 25d25af226ac55e9c03723288c20f53520420767
M: 68507c82e45915e6a257afbfc2626c2424684879 127.0.0.1:8001
   slots:[5462-10922] (5461 slots) master  # 5462-10922
   1 additional replica(s)
M: 89609f9d318bbca243c622195dcffb0c4c739c21 127.0.0.1:8103
   slots: (0 slots) master  # new node, no slots yet
S: e4df1b413eb5731f4de442e3e38a14612dc65700 127.0.0.1:8102
   slots: (0 slots) slave
   replicates 3db06c21c6dea8701fadbebfebf1aa92e5b13037
S: 30bb3d720a0c7dad6aed79f17ab33313246a0629 127.0.0.1:8101
   slots: (0 slots) slave
   replicates 68507c82e45915e6a257afbfc2626c2424684879
M: 3db06c21c6dea8701fadbebfebf1aa92e5b13037 127.0.0.1:8002
   slots:[10923-16383] (5461 slots) master  # 10923-16383
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
How many slots do you want to move (from 1 to 16384)?
```

Redis has 16384 slots in total. The cluster had 3 masters and now has 4, so for an even split the new master should receive 16384 / 4 = 4096 slots.
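The same arithmetic gives each existing master's surplus, which is its current slot count minus the per-master target. The small sketch below uses the slot counts from the initial layout. `rebalance_targets` is our own name. When 16384 does not divide evenly, the sketch arbitrarily gives the extra slots to the first nodes listed. With 3 masters, this rule reproduces the 5462/5461/5461 layout the cluster started with.

```python
TOTAL_SLOTS = 16384  # fixed number of hash slots in Redis Cluster


def rebalance_targets(current):
    """Return {node: surplus}; positive = slots to give away, negative = to receive.

    Each target is TOTAL_SLOTS // len(current); when the division is not exact,
    the first (TOTAL_SLOTS % len(current)) nodes get one extra slot.
    """
    base, extra = divmod(TOTAL_SLOTS, len(current))
    surplus = {}
    for i, (node, count) in enumerate(current.items()):
        target = base + (1 if i < extra else 0)
        surplus[node] = count - target
    return surplus


current = {"127.0.0.1:8000": 5462, "127.0.0.1:8001": 5461,
           "127.0.0.1:8002": 5461, "127.0.0.1:8003": 0}
print(rebalance_targets(current))
# {'127.0.0.1:8000': 1366, '127.0.0.1:8001': 1365, '127.0.0.1:8002': 1365, '127.0.0.1:8003': -4096}
```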

```
How many slots do you want to move (from 1 to 16384)? 4096
What is the receiving node ID? e9aac3ea026f8b5b14267861021a282103671a9c
Please enter all the source node IDs.
Type 'all' to use all the nodes as source nodes for the hash slots.
Type 'done' once you entered all the source nodes IDs.
Source node #1: all
```

What is the receiving node ID? Enter the node ID of the node that will receive the slots. Here, that is the node ID of 127.0.0.1:8003.

Source node #1: which masters should the 4096 slots be taken from? Enter `all` to take them evenly from the other 3 masters, about 1365 slots from each (1366 + 1365 + 1365 = 4096).

```
Ready to move 4096 slots.
Source nodes:
M: 25d25af226ac55e9c03723288c20f53520420767 127.0.0.1:8000
   slots:[0-5461] (5462 slots) master
   1 additional replica(s)
M: 68507c82e45915e6a257afbfc2626c2424684879 127.0.0.1:8001
   slots:[5462-10922] (5461 slots) master
   1 additional replica(s)
M: 89609f9d318bbca243c622195dcffb0c4c739c21 127.0.0.1:8103
   slots: (0 slots) master
M: 3db06c21c6dea8701fadbebfebf1aa92e5b13037 127.0.0.1:8002
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
Destination node:
M: e9aac3ea026f8b5b14267861021a282103671a9c 127.0.0.1:8003
   slots: (0 slots) master
```

This output lists the slots that each source node currently holds, followed by the destination node, 127.0.0.1:8003. With `all`, 127.0.0.1:8103 is also listed as a source, but it holds no slots and therefore contributes none.

```
Resharding plan:
Moving slot 0 from 25d25af226ac55e9c03723288c20f53520420767
Moving slot 1 from 25d25af226ac55e9c03723288c20f53520420767
Moving slot 2 from 25d25af226ac55e9c03723288c20f53520420767
......
Moving slot 1363 from 25d25af226ac55e9c03723288c20f53520420767
Moving slot 1364 from 25d25af226ac55e9c03723288c20f53520420767
Moving slot 1365 from 25d25af226ac55e9c03723288c20f53520420767
Moving slot 5462 from 68507c82e45915e6a257afbfc2626c2424684879
Moving slot 5463 from 68507c82e45915e6a257afbfc2626c2424684879
Moving slot 5464 from 68507c82e45915e6a257afbfc2626c2424684879
......
Moving slot 6823 from 68507c82e45915e6a257afbfc2626c2424684879
Moving slot 6824 from 68507c82e45915e6a257afbfc2626c2424684879
Moving slot 6825 from 68507c82e45915e6a257afbfc2626c2424684879
Moving slot 6826 from 68507c82e45915e6a257afbfc2626c2424684879
Moving slot 10923 from 3db06c21c6dea8701fadbebfebf1aa92e5b13037
Moving slot 10924 from 3db06c21c6dea8701fadbebfebf1aa92e5b13037
Moving slot 10925 from 3db06c21c6dea8701fadbebfebf1aa92e5b13037
......
Moving slot 12285 from 3db06c21c6dea8701fadbebfebf1aa92e5b13037
Moving slot 12286 from 3db06c21c6dea8701fadbebfebf1aa92e5b13037
Moving slot 12287 from 3db06c21c6dea8701fadbebfebf1aa92e5b13037
Do you want to proceed with the proposed reshard plan (yes/no)? yes
Moving slot 0 from 127.0.0.1:8000 to 127.0.0.1:8003:
Moving slot 1 from 127.0.0.1:8000 to 127.0.0.1:8003:
Moving slot 2 from 127.0.0.1:8000 to 127.0.0.1:8003:
......
Moving slot 12285 from 127.0.0.1:8002 to 127.0.0.1:8003:
Moving slot 12286 from 127.0.0.1:8002 to 127.0.0.1:8003:
Moving slot 12287 from 127.0.0.1:8002 to 127.0.0.1:8003:
```

As the output shows, about 1365 slots are taken from each existing shard and moved to the new master: slots 0-1365 from 8000, 5462-6826 from 8001, and 10923-12287 from 8002.
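Why 1366 + 1365 + 1365 rather than three equal parts? The sketch below re-creates the split as we understand redis-cli's reshard planner to compute it. Treat the details as an assumption, not a specification:

1. Sources are sorted by slot count, largest first.
2. Each source contributes `num_slots / total_source_slots * own_slots`, rounded up for the first source and down for the rest.
3. Each source gives away its lowest-numbered slots first.

`reshard_plan` is a hypothetical name. When fed this cluster's layout, the sketch reproduces the plan shown above, and the empty 127.0.0.1:8103 contributes nothing.

```python
import math


def reshard_plan(sources, num_slots):
    """Return a list of (slot, source) pairs to move to the destination.

    sources: {node: sorted list of slots that node currently owns}.
    Assumed to mirror redis-cli's proportional split; not an official algorithm.
    """
    total = sum(len(slots) for slots in sources.values())
    if total == 0:
        return []
    # Largest sources first; sorted() is stable, so ties keep input order.
    ordered = sorted(sources.items(), key=lambda kv: len(kv[1]), reverse=True)
    plan = []
    for i, (node, slots) in enumerate(ordered):
        share = num_slots / total * len(slots)
        # Round up for the first (largest) source, down for the others.
        quota = math.ceil(share) if i == 0 else math.floor(share)
        for slot in slots[:quota]:
            if len(plan) >= num_slots:
                break
            plan.append((slot, node))
    return plan


sources = {
    "127.0.0.1:8000": list(range(0, 5462)),
    "127.0.0.1:8001": list(range(5462, 10923)),
    "127.0.0.1:8103": [],  # new node, no slots yet
    "127.0.0.1:8002": list(range(10923, 16384)),
}
plan = reshard_plan(sources, 4096)
for node in sources:
    moved = [slot for slot, src in plan if src == node]
    if moved:
        print(node, f"{moved[0]}-{moved[-1]}", len(moved))
# 127.0.0.1:8000 0-1365 1366
# 127.0.0.1:8001 5462-6826 1365
# 127.0.0.1:8002 10923-12287 1365
```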

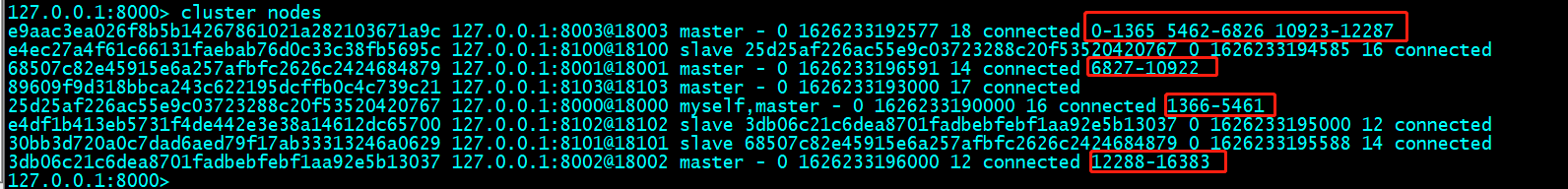

The per-node slot allocation now shows an even split: every master holds 4096 slots.
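The even split can also be checked from the text output. In a `cluster nodes` line, each field after the link state is either a single slot or an inclusive `start-end` range. The sketch below counts these per master, using the four master lines from the final `cluster nodes` output of this walkthrough. The helper names are our own. Bracketed entries, which mark slots being imported or migrated, are skipped on the assumption that they are not owned slots.

```python
def slots_from_fields(fields):
    """Count the slots listed in the slot fields of a CLUSTER NODES line."""
    total = 0
    for field in fields:
        if field.startswith("["):
            # Importing/migrating markers such as [slot->-id]; not owned slots.
            continue
        start, _, end = field.partition("-")
        total += int(end or start) - int(start) + 1
    return total


def master_slot_counts(cluster_nodes_text):
    """Map each master's ip:port to the number of slots it owns."""
    counts = {}
    for line in cluster_nodes_text.strip().splitlines():
        parts = line.split()
        # Fields: id, ip:port@cport, flags, master-id, ping-sent, pong-recv,
        # config-epoch, link-state, then zero or more slot entries.
        addr, flags = parts[1].split("@")[0], parts[2].split(",")
        if "master" in flags:
            counts[addr] = slots_from_fields(parts[8:])
    return counts


nodes = """\
e9aac3ea026f8b5b14267861021a282103671a9c 127.0.0.1:8003@18003 master - 0 1626233452000 18 connected 0-1365 5462-6826 10923-12287
25d25af226ac55e9c03723288c20f53520420767 127.0.0.1:8000@18000 master - 0 1626233451000 16 connected 1366-5461
3db06c21c6dea8701fadbebfebf1aa92e5b13037 127.0.0.1:8002@18002 master - 0 1626233451999 12 connected 12288-16383
68507c82e45915e6a257afbfc2626c2424684879 127.0.0.1:8001@18001 master - 0 1626233453002 14 connected 6827-10922
"""
print(master_slot_counts(nodes))
# {'127.0.0.1:8003': 4096, '127.0.0.1:8000': 4096, '127.0.0.1:8002': 4096, '127.0.0.1:8001': 4096}
```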

Finally, make 127.0.0.1:8103 a replica of 127.0.0.1:8003:

```
127.0.0.1:8103> cluster replicate e9aac3ea026f8b5b14267861021a282103671a9c
OK
```

Checking the node status shows that the cluster now has 4 masters and 4 replicas:

```
127.0.0.1:8103> cluster nodes
e9aac3ea026f8b5b14267861021a282103671a9c 127.0.0.1:8003@18003 master - 0 1626233452000 18 connected 0-1365 5462-6826 10923-12287
25d25af226ac55e9c03723288c20f53520420767 127.0.0.1:8000@18000 master - 0 1626233451000 16 connected 1366-5461
3db06c21c6dea8701fadbebfebf1aa92e5b13037 127.0.0.1:8002@18002 master - 0 1626233451999 12 connected 12288-16383
68507c82e45915e6a257afbfc2626c2424684879 127.0.0.1:8001@18001 master - 0 1626233453002 14 connected 6827-10922
e4df1b413eb5731f4de442e3e38a14612dc65700 127.0.0.1:8102@18102 slave 3db06c21c6dea8701fadbebfebf1aa92e5b13037 0 1626233452000 12 connected
e4ec27a4f61c66131faebab76d0c33c38fb5695c 127.0.0.1:8100@18100 slave 25d25af226ac55e9c03723288c20f53520420767 0 1626233450000 16 connected
30bb3d720a0c7dad6aed79f17ab33313246a0629 127.0.0.1:8101@18101 slave 68507c82e45915e6a257afbfc2626c2424684879 0 1626233451000 14 connected
89609f9d318bbca243c622195dcffb0c4c739c21 127.0.0.1:8103@18103 myself,slave e9aac3ea026f8b5b14267861021a282103671a9c 0 1626233451000 18 connected
```

Check the slot distribution:

```
127.0.0.1:8103> cluster slots
1) 1) (integer) 0
   2) (integer) 1365
   3) 1) "127.0.0.1"
      2) (integer) 8003
      3) "e9aac3ea026f8b5b14267861021a282103671a9c"
   4) 1) "127.0.0.1"
      2) (integer) 8103
      3) "89609f9d318bbca243c622195dcffb0c4c739c21"
2) 1) (integer) 1366
   2) (integer) 5461
   3) 1) "127.0.0.1"
      2) (integer) 8000
      3) "25d25af226ac55e9c03723288c20f53520420767"
   4) 1) "127.0.0.1"
      2) (integer) 8100
      3) "e4ec27a4f61c66131faebab76d0c33c38fb5695c"
3) 1) (integer) 5462
   2) (integer) 6826
   3) 1) "127.0.0.1"
      2) (integer) 8003
      3) "e9aac3ea026f8b5b14267861021a282103671a9c"
   4) 1) "127.0.0.1"
      2) (integer) 8103
      3) "89609f9d318bbca243c622195dcffb0c4c739c21"
4) 1) (integer) 6827
   2) (integer) 10922
   3) 1) "127.0.0.1"
      2) (integer) 8001
      3) "68507c82e45915e6a257afbfc2626c2424684879"
   4) 1) "127.0.0.1"
      2) (integer) 8101
      3) "30bb3d720a0c7dad6aed79f17ab33313246a0629"
5) 1) (integer) 10923
   2) (integer) 12287
   3) 1) "127.0.0.1"
      2) (integer) 8003
      3) "e9aac3ea026f8b5b14267861021a282103671a9c"
   4) 1) "127.0.0.1"
      2) (integer) 8103
      3) "89609f9d318bbca243c622195dcffb0c4c739c21"
6) 1) (integer) 12288
   2) (integer) 16383
   3) 1) "127.0.0.1"
      2) (integer) 8002
      3) "3db06c21c6dea8701fadbebfebf1aa92e5b13037"
   4) 1) "127.0.0.1"
      2) (integer) 8102
      3) "e4df1b413eb5731f4de442e3e38a14612dc65700"
```
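The "4 masters, 4 replicas" result can be checked in a similar way, because a replica's `cluster nodes` line carries its master's node ID in the fourth field. The sketch below maps each master address to its replicas, using the `cluster nodes` output shown above as input. `replicas_by_master` is a hypothetical helper.

```python
def replicas_by_master(cluster_nodes_text):
    """Map each master's ip:port to the ip:port of its replicas."""
    rows = [line.split() for line in cluster_nodes_text.strip().splitlines()]
    # node id -> ip:port (the cluster-bus port after '@' is dropped)
    addr_of = {r[0]: r[1].split("@")[0] for r in rows}
    result = {addr_of[r[0]]: [] for r in rows if "master" in r[2].split(",")}
    for r in rows:
        # Field 3 holds the master's node id for replicas, '-' for masters.
        if "slave" in r[2].split(",") and r[3] in addr_of:
            result.setdefault(addr_of[r[3]], []).append(addr_of[r[0]])
    return result


final_nodes = """\
e9aac3ea026f8b5b14267861021a282103671a9c 127.0.0.1:8003@18003 master - 0 1626233452000 18 connected 0-1365 5462-6826 10923-12287
25d25af226ac55e9c03723288c20f53520420767 127.0.0.1:8000@18000 master - 0 1626233451000 16 connected 1366-5461
3db06c21c6dea8701fadbebfebf1aa92e5b13037 127.0.0.1:8002@18002 master - 0 1626233451999 12 connected 12288-16383
68507c82e45915e6a257afbfc2626c2424684879 127.0.0.1:8001@18001 master - 0 1626233453002 14 connected 6827-10922
e4df1b413eb5731f4de442e3e38a14612dc65700 127.0.0.1:8102@18102 slave 3db06c21c6dea8701fadbebfebf1aa92e5b13037 0 1626233452000 12 connected
e4ec27a4f61c66131faebab76d0c33c38fb5695c 127.0.0.1:8100@18100 slave 25d25af226ac55e9c03723288c20f53520420767 0 1626233450000 16 connected
30bb3d720a0c7dad6aed79f17ab33313246a0629 127.0.0.1:8101@18101 slave 68507c82e45915e6a257afbfc2626c2424684879 0 1626233451000 14 connected
89609f9d318bbca243c622195dcffb0c4c739c21 127.0.0.1:8103@18103 myself,slave e9aac3ea026f8b5b14267861021a282103671a9c 0 1626233451000 18 connected
"""
print(replicas_by_master(final_nodes))
# {'127.0.0.1:8003': ['127.0.0.1:8103'], '127.0.0.1:8000': ['127.0.0.1:8100'], '127.0.0.1:8002': ['127.0.0.1:8102'], '127.0.0.1:8001': ['127.0.0.1:8101']}
```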
